From Narrow to Superintelligent: Discover the Types of AI

Artificial intelligence is an expansive field, and its capabilities are evolving at breakneck speed. From the narrow AI we use in our daily apps to the theoretical concept of superintelligence, AI spans multiple categories.

Each type reflects a different level of cognitive ability and problem-solving potential.

In this comprehensive guide, we’ll break down the types of AI into clearly defined categories, offering insights into what they are, how they work, and their potential.

Types of AI: Based on Intelligence

Narrow AI (Weak AI): Specialized and Task-Oriented

Narrow AI (also known as Weak AI) is the most common form of artificial intelligence that we encounter today. It’s designed to perform specific tasks and cannot generalize beyond the domain it was built or trained for. Narrow AI excels in singular domains, such as recognizing speech or identifying images, but lacks broader understanding or consciousness.

- Definition: Narrow AI refers to AI systems designed to perform a specific task or a narrow range of tasks. These systems operate within a limited scope and cannot perform beyond their predefined functions.

- Capabilities: They can perform their target task very well, sometimes better than humans, but they do not possess general intelligence or awareness.

- Examples:

- Voice Assistants (e.g., Siri, Alexa): Designed to respond to voice commands.

- Recommendation Algorithms: Such as those used by Netflix or Amazon to suggest content based on past behavior.

- Chatbots: Automate customer service tasks using pre-programmed responses or learned patterns.

- Spam filters in email services

- Key Characteristics: Highly specialized, no self-awareness, cannot perform tasks outside their programming.

Narrow AI is impressive but constrained. It cannot perform tasks beyond what it was explicitly programmed or trained for, which differentiates it from more advanced forms of AI.

General AI (AGI or Strong AI): The Future of Human-Like Cognition

Artificial General Intelligence (AGI), or Strong AI, represents the type of AI that can perform any intellectual task a human can. Unlike Narrow AI, AGI doesn’t require task-specific programming. It’s capable of learning, reasoning, and adapting across a wide range of challenges, much like a human mind.

AGI is still theoretical. Despite numerous advancements, no machine today possesses the flexible, broad-based intelligence required to be classified as AGI.

- Definition: Artificial General Intelligence (AGI) is AI that can understand, learn, and apply intelligence across a wide range of tasks, much like a human. AGI aims to mimic the breadth of cognitive functions humans possess.

- Capabilities: General AI systems would have the ability to think, reason, learn, and make decisions autonomously across different domains without being programmed for specific tasks.

- Examples: AGI remains theoretical at this point. No such system exists, but it is the ultimate goal for many AI researchers.

- Key Characteristics: Capable of general learning, adaptability, and reasoning on par with humans; some definitions also include self-awareness.

Though AGI remains an aspiration, proponents argue it could transform industries like healthcare, law, and education by providing nuanced insights that even the most advanced narrow AI can’t offer today.

Superintelligence (ASI): Beyond Human Capability

Artificial Superintelligence (ASI) is a hypothetical form of AI that surpasses human intelligence in all respects—whether it’s creativity, problem-solving, emotional intelligence, or even social skills. This type of AI would outperform humans in every intellectual and functional domain.

While ASI still belongs to the realm of science fiction, it raises important ethical questions and concerns about control, safety, and the consequences of machines developing superior intelligence.

- Definition: Artificial Superintelligence (ASI) refers to a hypothetical AI that surpasses human intelligence in all aspects, including creativity, problem-solving, emotional intelligence, and even social intelligence.

- Capabilities: ASI would be vastly more capable than humans in decision-making, innovation, and solving complex global challenges. It could evolve to redefine concepts of intelligence.

- Examples: Currently, ASI is a theoretical concept, but it’s widely discussed in debates about the future of AI and its ethical implications.

- Key Characteristics: Far beyond human cognitive abilities, potentially able to improve and evolve its own intelligence autonomously.

The conversation around ASI often centers on whether we can manage the risks and harness its immense potential for global good.

Types of AI: Based on Functionalities

Reactive Machines: Basic Functional AI

Reactive Machines are the simplest forms of AI. These systems are designed to respond to specific stimuli based on pre-programmed rules. They cannot form memories or learn from previous experiences, meaning their understanding of the world is entirely based on the present moment.

- Capabilities: They can only perform present tasks based on immediate input. They do not form memories or learn from history.

- Examples:

- Deep Blue: IBM’s chess-playing computer, which defeated world chess champion Garry Kasparov in 1997. It evaluated positions and move combinations through large-scale search but did not learn from experience.

- AI in Games: AI in video games that reacts to player moves without any form of adaptation or learning.

- Key Characteristics: No memory, no learning ability, static decision-making based on current input only.

While limited in scope, reactive machines are highly reliable for specific, repetitive tasks. However, they are far from understanding the broader context or thinking creatively.
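
As a rough illustration of the idea, a reactive system can be sketched as a pure function from the current input to an action. The thermostat scenario, the function name `reactive_thermostat`, and the temperature thresholds below are all invented for this example.

```python
def reactive_thermostat(temperature_c: float) -> str:
    """Map the current reading to an action using fixed rules; nothing is stored."""
    if temperature_c < 19.0:   # hypothetical lower threshold
        return "heat"
    if temperature_c > 24.0:   # hypothetical upper threshold
        return "cool"
    return "idle"


readings = [17.5, 21.0, 26.2, 17.5]
actions = [reactive_thermostat(t) for t in readings]
print(actions)  # ['heat', 'idle', 'cool', 'heat']
```

The same reading always produces the same action, no matter what came before it, which is the defining trait of a reactive machine.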

Limited Memory AI: Learning from Data

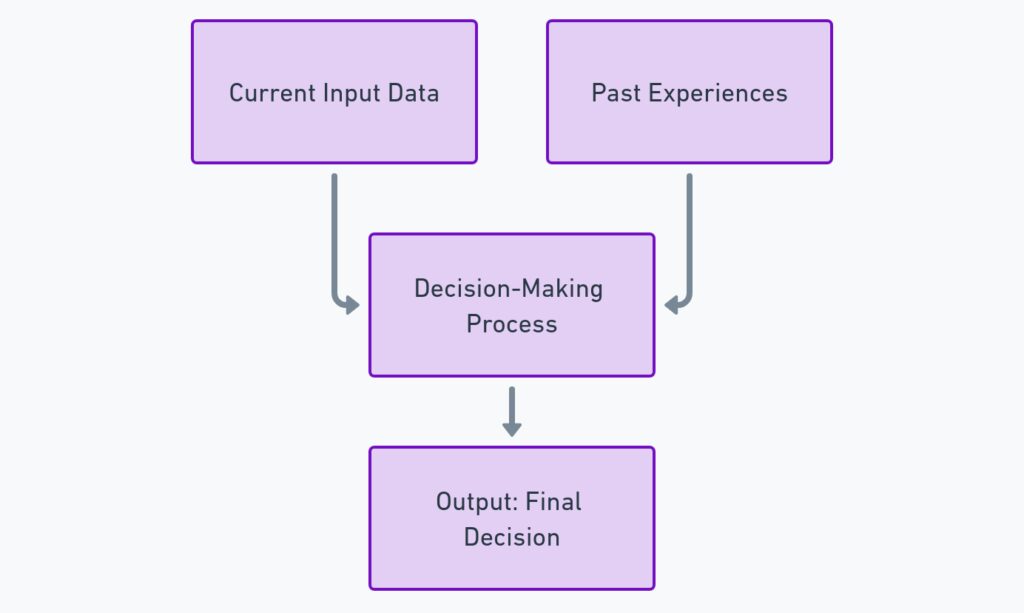

Unlike reactive machines, Limited Memory AI systems can retain data for a short period, allowing them to learn and make informed decisions. This is a common characteristic of many machine learning models that analyze large datasets to generate insights, predictions, and solutions.

- Capabilities: Limited Memory AI can retain information for a short time or analyze large datasets to improve future decisions.

- Examples:

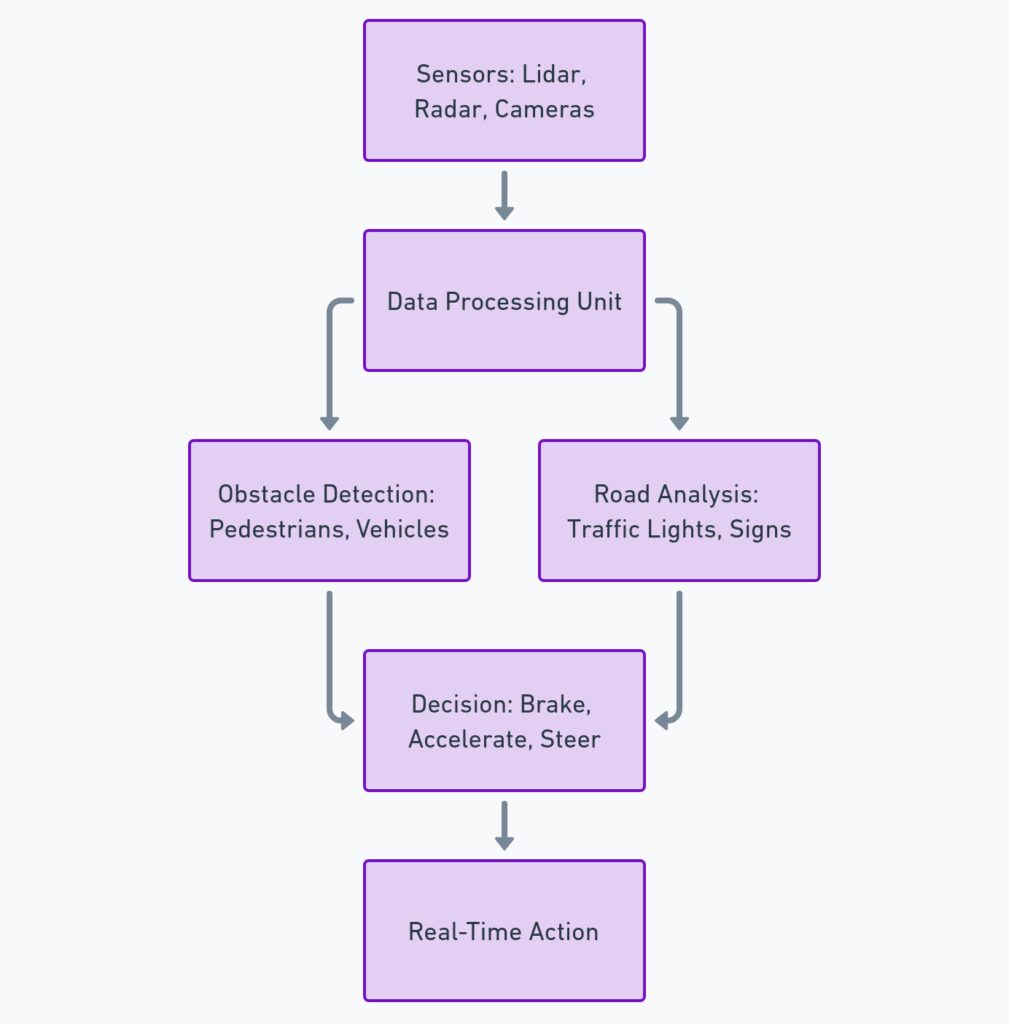

- Self-Driving Cars: Combine recent observations, such as the speed and position of nearby cars, with stored information like speed limits and route data to navigate roads.

- Chatbots and Customer Support Systems: Can learn from previous interactions to improve responses in future conversations.

- Key Characteristics: Short-term memory, able to learn from past data but not continuously improve without human intervention.

Limited Memory AI is more advanced than reactive machines but still limited to specific tasks, often requiring vast amounts of data to be effective.

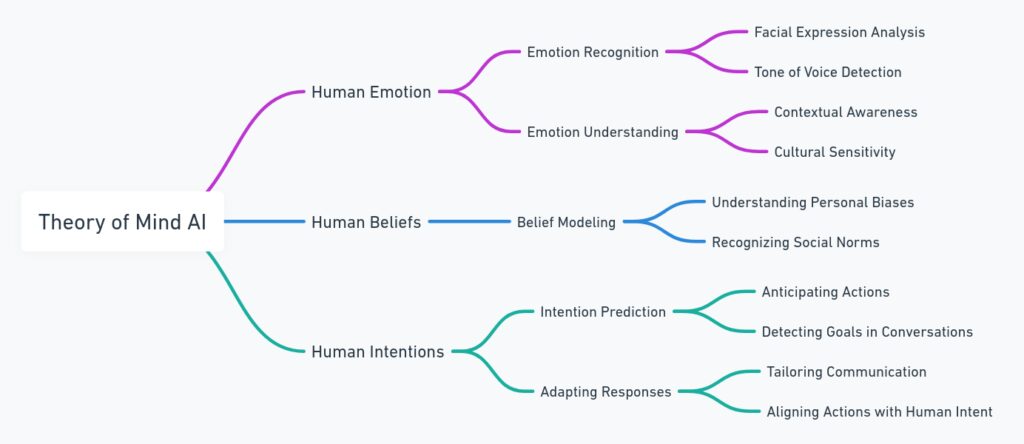

Theory of Mind AI: Emotional and Social Intelligence

Theory of Mind AI is another theoretical category that refers to AI systems capable of understanding and processing human emotions, intentions, and thoughts. This AI type would interact with humans in a much more natural, socially aware manner, adjusting responses based on how a person feels or what they may be thinking.

While researchers are exploring this concept, it remains at the experimental stage. Fully developing AI with theory of mind capabilities would likely revolutionize industries like customer service, healthcare, and social robotics.

- Potential Applications:

- Therapeutic AI capable of understanding mental health conditions

- Emotional companions for elderly or socially isolated individuals

- Advanced social robotics

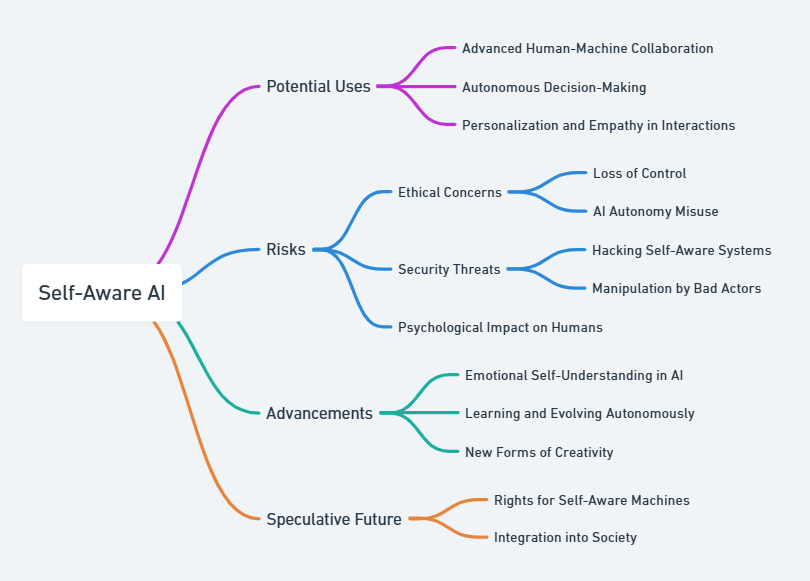

Self-Aware AI: Conscious Machines

Self-aware AI refers to machines that possess consciousness and self-awareness similar to human beings. This form of AI could understand not only emotions and intentions but also have a sense of self—the ability to reflect on its own existence.

Currently, no AI system has come close to this level of self-awareness. It’s more of a philosophical discussion than a technological reality at this point.

- Future Implications: If achieved, self-aware AI could lead to machines that make autonomous decisions and self-regulate. This opens up deep ethical debates regarding their rights, responsibilities, and our control over them.

Types of AI: Based on Learning Approaches

Artificial intelligence is also categorized by the different ways it learns and adapts. AI systems rely on various learning techniques to become more intelligent and improve their performance over time. Understanding these learning approaches is key to grasping how AI works and evolves.

Supervised Learning: Training with Labeled Data

Supervised learning is one of the most common types of AI learning approaches. In this method, the system is trained on a labeled dataset, where both the input data and the correct output (target) are provided. The AI model learns from these examples, making predictions or decisions, and refining its performance as it encounters more data.

- How It Works: During training, the AI model compares its predictions to the actual outcomes. It then uses an optimization algorithm, such as gradient descent, to adjust its parameters and reduce the error over time.

- Common Use Cases:

- Image classification (e.g., identifying objects in photos)

- Spam detection in emails

- Speech recognition systems

Supervised learning is highly effective when there’s a large amount of labeled data, but this also means it can struggle with tasks where such data isn’t readily available.
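
To make the training loop concrete, here is a minimal sketch that fits a straight line to labeled examples with gradient descent, one common way to reduce prediction error. The data, the learning rate, and the number of iterations are assumptions chosen for the example.

```python
# Labeled examples: each input x is paired with the correct output y (here y = 2x + 1).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = 0.0, 0.0        # model parameters, starting with no knowledge
learning_rate = 0.05   # assumed step size

for _ in range(2000):
    # Compare predictions with the known labels.
    errors = [(w * x + b) - y for x, y in zip(xs, ys)]
    # Gradients of the mean squared error with respect to w and b.
    grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / len(xs)
    grad_b = 2 * sum(errors) / len(xs)
    # Nudge the parameters in the direction that reduces the error.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

Real systems use far more parameters and data, but the loop of predicting, measuring the error, and adjusting is the same.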

Unsupervised Learning: Finding Patterns in Unlabeled Data

In unsupervised learning, the AI model works with unlabeled data. Instead of being trained on specific inputs and outputs, the system must discover patterns and relationships within the data by itself. The primary goal is to cluster or group similar data points together or reduce the complexity of data for further analysis.

- How It Works: The AI model looks for underlying structures and hidden patterns in the data, without any explicit instruction. Algorithms like K-means clustering and principal component analysis are widely used.

- Common Use Cases:

- Customer segmentation in marketing (grouping customers based on purchasing behavior)

- Anomaly detection (finding outliers in datasets, useful for fraud detection)

- Data compression

Unsupervised learning is powerful for discovering previously unknown insights in large datasets, but its accuracy is often harder to measure since there’s no labeled “correct” output.
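
The clustering idea can be sketched with a small one-dimensional K-means. The spending figures and the starting centroids below are made up, and the helper name `kmeans_1d` is hypothetical.

```python
def kmeans_1d(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and re-centering."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: abs(p - centroids[i]))
            clusters[nearest].append(p)
        # Move each centroid to the mean of its cluster (keep it if the cluster is empty).
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters


# Hypothetical monthly spend per customer; no labels are provided.
spend = [1.0, 1.5, 2.0, 10.0, 10.5, 11.0]
centers, groups = kmeans_1d(spend, centroids=[1.0, 11.0])
print(centers)  # [1.5, 10.5]
```

The algorithm is never told which customers are "low" or "high" spenders; the two groups emerge from the data alone.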

Semi-Supervised Learning: Bridging the Gap

Semi-supervised learning falls between supervised and unsupervised learning. In this approach, the AI system is given a small amount of labeled data and a larger pool of unlabeled data. The labeled data helps guide the AI model, but it also learns from the larger, unlabeled set.

- How It Works: The model first learns from the labeled data, then uses this knowledge to explore and label the unlabeled data automatically, improving its predictions.

- Common Use Cases:

- Web content classification (tagging articles or pages based on their topic)

- Medical image analysis, where labeling data is time-consuming but essential

Semi-supervised learning is a useful strategy when labeling large datasets is expensive or labor-intensive. It’s often employed in fields like healthcare, where labeled data is scarce but highly valuable.
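
One simple way to realize this is self-training (also called pseudo-labeling). The sketch below assumes a toy nearest-centroid classifier on one-dimensional data; the data, the `min_margin` confidence threshold, and the function names are invented for illustration.

```python
def class_centroids(labeled):
    """Average the inputs seen for each label."""
    sums = {}
    for x, label in labeled:
        total, count = sums.get(label, (0.0, 0))
        sums[label] = (total + x, count + 1)
    return {label: total / count for label, (total, count) in sums.items()}


def self_train(labeled, unlabeled, min_margin=2.0, rounds=5):
    """Pseudo-label only the points the current model is confident about."""
    labeled, unlabeled = list(labeled), list(unlabeled)
    for _ in range(rounds):
        cents = class_centroids(labeled)
        remaining = []
        for x in unlabeled:
            ranked = sorted(cents, key=lambda label: abs(x - cents[label]))
            # Confidence: how much closer the best class is than the runner-up.
            margin = abs(x - cents[ranked[1]]) - abs(x - cents[ranked[0]])
            if margin >= min_margin:
                labeled.append((x, ranked[0]))
            else:
                remaining.append(x)
        if len(remaining) == len(unlabeled):
            break  # nothing new was labeled, so stop
        unlabeled = remaining
    return labeled, unlabeled


seed_labels = [(1.0, "low"), (9.0, "high")]   # small labeled set
pool = [1.2, 2.0, 8.5, 7.9, 5.2]              # larger unlabeled set
labeled, leftover = self_train(seed_labels, pool)
print(len(labeled), leftover)  # 6 [5.2]
```

The ambiguous point 5.2 stays unlabeled because the model is not confident about it, which is the usual safeguard against reinforcing its own mistakes.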

Reinforcement Learning: Learning Through Rewards and Punishments

Reinforcement learning is a unique approach where AI agents learn by interacting with their environment and receiving feedback in the form of rewards or punishments based on their actions. This type of learning is inspired by the way humans and animals learn through trial and error.

- How It Works: An AI agent is placed in a specific environment, and it performs actions that bring either positive or negative outcomes. Over time, it optimizes its actions to maximize rewards.

- Common Use Cases:

- Game-playing AI, like AlphaGo and OpenAI’s Dota 2 agents

- Robotics, where robots learn how to navigate complex environments

- Autonomous vehicles, improving their driving behavior based on real-world conditions

Reinforcement learning excels in dynamic, complex environments where the AI needs to adapt based on long-term outcomes, but it requires significant computational resources and is often difficult to scale.
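
The reward-driven loop can be sketched with tabular Q-learning, one classic reinforcement learning algorithm. The five-cell corridor environment and the hyperparameters below are assumptions made for this example.

```python
import random

random.seed(0)
N_STATES, GOAL = 5, 4                   # corridor cells 0..4, reward at cell 4
ACTIONS = [-1, +1]                      # index 0 = move left, index 1 = move right
alpha, gamma, epsilon = 0.5, 0.9, 0.2   # assumed learning rate, discount, exploration rate
q = [[0.0, 0.0] for _ in range(N_STATES)]

for _ in range(200):                    # episodes
    state = 0
    while state != GOAL:
        if random.random() < epsilon:
            a = random.randrange(2)                        # explore
        else:
            a = max(range(2), key=lambda i: q[state][i])   # exploit
        next_state = min(max(state + ACTIONS[a], 0), N_STATES - 1)
        reward = 1.0 if next_state == GOAL else 0.0
        # Q-learning update: move the estimate toward reward + discounted best future value.
        q[state][a] += alpha * (reward + gamma * max(q[next_state]) - q[state][a])
        state = next_state

policy = ["left" if q[s][0] > q[s][1] else "right" for s in range(GOAL)]
print(policy)  # ['right', 'right', 'right', 'right']
```

The agent is never told that moving right is correct; it discovers this because only that direction eventually leads to a reward.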

Self-Supervised Learning: Unlocking More Autonomous Learning

Self-supervised learning is a cutting-edge approach that has gained traction in recent years, especially in natural language processing and computer vision. In this method, the AI model is designed to generate part of its own labeled data from unlabeled datasets. It uses internal structures within the data to create training examples, thus making it more autonomous in its learning process.

- How It Works: For example, in text-based applications, the model might predict missing words in a sentence, using the surrounding words as context. Over time, it develops a nuanced understanding of language without relying on large labeled datasets.

- Common Use Cases:

- Language models like GPT and BERT, which are pretrained by predicting the next word (GPT) or masked words (BERT) in large amounts of unlabeled text

- Image generation models that can complete a partially hidden image based on the visible portion

This method is widely seen as a promising direction for AI learning, as it offers a way to overcome the limitations of manual data labeling and opens doors to more scalable, robust AI models.
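
The key step, turning unlabeled text into training examples, can be sketched in a few lines. The function name `masked_examples` and the `[MASK]` placeholder are choices made for this illustration; no model is trained here.

```python
def masked_examples(sentence, mask_token="[MASK]"):
    """Build (input, target) pairs by hiding one word at a time."""
    words = sentence.split()
    examples = []
    for i, target in enumerate(words):
        context = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(context), target))
    return examples


pairs = masked_examples("the cat sat")
for masked, target in pairs:
    print(masked, "->", target)
# [MASK] cat sat -> the
# the [MASK] sat -> cat
# the cat [MASK] -> sat
```

Every label comes from the text itself, so no human annotation is needed to produce the training set.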

Transfer Learning: Adapting Knowledge Across Tasks

Transfer learning allows an AI model trained on one task to transfer its knowledge to another, related task. This approach can drastically reduce the time and resources required to train new models, as the system has already learned key features and patterns in one domain that can be applied elsewhere.

- How It Works: For instance, a model trained to recognize objects in images can be fine-tuned to identify new categories with minimal additional training.

- Common Use Cases:

- Image recognition models that are adapted for different industries (e.g., healthcare, retail)

- Language translation models that adapt to new languages with less data

Transfer learning is especially useful when there’s limited data available for a new task, and it’s widely used in natural language processing and computer vision.

Federated Learning: Distributed Learning Across Devices

Federated learning is an emerging approach where AI models learn across multiple decentralized devices (such as smartphones or IoT devices) without transferring the data back to a central server. This approach allows for collaborative learning while maintaining data privacy.

- How It Works: Each device trains a local model on its own data, and only the model updates (not the raw data) are sent back to a central server. The server aggregates these updates to improve the global model.

- Common Use Cases:

- Personalized AI applications, such as improving keyboard suggestions on smartphones

- Healthcare, where patient data remains private but models are collaboratively trained

Federated learning addresses privacy concerns and reduces the need for centralized data collection, making it a valuable tool for industries like healthcare and finance.
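
A simplified version of this train-locally, average-centrally loop is sketched below, in the spirit of federated averaging. The one-parameter model `y = w * x`, the per-device data, and the learning rate are assumptions made for the example.

```python
def local_update(w, data, lr=0.01, epochs=20):
    """Gradient descent on one device's private data for the model y = w * x."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(global_w, devices):
    """Devices train locally; only their weights and sample counts reach the server."""
    updates = [(local_update(global_w, data), len(data)) for data in devices]
    total = sum(n for _, n in updates)
    # The server averages the local weights, weighted by how much data each device has.
    return sum(w * n for w, n in updates) / total


# Hypothetical private datasets; every point follows y = 3x.
devices = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(4.0, 12.0), (5.0, 15.0), (6.0, 18.0)],
]

w = 0.0
for _ in range(10):
    w = federated_round(w, devices)
print(round(w, 4))  # 3.0
```

The raw `(x, y)` pairs never leave the `devices` list entries; the server only ever sees numbers derived from them.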

Evolutionary Learning: AI Inspired by Natural Selection

Evolutionary learning draws inspiration from biological evolution to improve AI models. In this method, algorithms evolve over time through processes similar to natural selection, including mutation, crossover, and selection. Models that perform better are retained and improved upon, while weaker models are discarded.

- How It Works: The AI starts with a population of potential solutions to a problem. Through repeated cycles of selection and refinement, it evolves toward better solutions, although it is not guaranteed to find the optimal one.

- Common Use Cases:

- Optimization problems in engineering and logistics

- Game AI development, where agents evolve to find the best strategies

Though it’s less common than other learning approaches, evolutionary learning is useful for solving complex problems where traditional methods may fall short.
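
The selection, crossover, and mutation cycle can be sketched with a small genetic algorithm. The toy objective (maximize the number of 1s in a bit string) and all the constants below are assumptions for illustration.

```python
import random

random.seed(1)
GENES, POP, GENERATIONS = 20, 30, 40


def fitness(bits):
    return sum(bits)  # toy objective: count of 1s


def mutate(bits, rate=0.05):
    """Flip each bit with a small probability."""
    return [1 - b if random.random() < rate else b for b in bits]


def crossover(a, b):
    """Take the start of one parent and the rest of the other."""
    cut = random.randrange(1, GENES)
    return a[:cut] + b[cut:]


population = [[random.randint(0, 1) for _ in range(GENES)] for _ in range(POP)]
initial_best = max(map(fitness, population))

for _ in range(GENERATIONS):
    population.sort(key=fitness, reverse=True)
    parents = population[:POP // 2]   # selection: keep the fitter half
    children = [mutate(crossover(random.choice(parents), random.choice(parents)))
                for _ in range(POP - len(parents))]
    population = parents + children   # parents survive unchanged, so the best never gets worse

best = max(population, key=fitness)
print(initial_best, "->", fitness(best))
```

Because the fitter half is carried over unchanged each generation, the best score can only stay the same or improve.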

Hybrid Learning: Combining Multiple Approaches

Hybrid learning systems combine different AI learning approaches to create more robust models. These systems leverage the strengths of one learning method while compensating for its weaknesses with another, allowing for greater adaptability and versatility.

- How It Works: A hybrid system might use supervised learning for initial training and reinforcement learning to refine its performance in real-world environments.

- Common Use Cases:

- Autonomous systems that need to operate in dynamic environments (e.g., self-driving cars)

- AI-powered recommendation engines that combine collaborative filtering with unsupervised learning for better personalization

Hybrid learning is increasingly popular as AI applications become more complex and require more versatile, multi-faceted approaches to problem-solving.

Types of AI: Based on Architecture

Artificial Intelligence systems are not just distinguished by how they learn but also by how they are architecturally designed. The architecture of an AI system defines its structure, including how different components interact and how the system processes data. AI architectures can range from simple models designed for specific tasks to complex networks capable of simulating cognitive functions.

Symbolic AI: Rule-Based Systems

Symbolic AI, also known as good old-fashioned AI (GOFAI), is one of the earliest forms of AI architecture. It relies on explicit rules and symbolic representations to solve problems. The system works by manipulating symbols (like words or numbers) according to predefined rules, often using if-then statements to mimic reasoning.

- How It Works: These systems use logic and knowledge representation to process information. Knowledge is encoded in the form of symbols and rules, which are processed by an inference engine using techniques such as forward or backward chaining.

- Common Use Cases:

- Expert systems used in medical diagnosis (e.g., MYCIN)

- Classical chess engines like Deep Blue, which relied on search and hand-crafted evaluation rules rather than learning

- Simple chatbots based on fixed rules

Symbolic AI is rigid and struggles with tasks that require learning or adapting to new situations. However, it’s excellent for tasks with clearly defined rules.
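
A rule-based system of this kind can be sketched with forward chaining, where if-then rules are applied repeatedly until no new facts appear. The rules and fact names below are invented for illustration and are not taken from MYCIN or any real expert system.

```python
# Each rule: if all conditions are known facts, then add the conclusion.
RULES = [
    ({"fever", "cough"}, "flu_suspected"),
    ({"flu_suspected", "short_of_breath"}, "refer_to_doctor"),
]


def forward_chain(facts, rules):
    """Keep applying rules until no rule can add a new fact."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


derived = forward_chain({"fever", "cough", "short_of_breath"}, RULES)
print("refer_to_doctor" in derived)  # True
```

Every conclusion can be traced back to the exact rules that produced it, but the system knows nothing beyond the rules it was given.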

Neural Networks: Mimicking the Human Brain

Artificial Neural Networks (ANNs) are inspired by the structure of the human brain, consisting of layers of interconnected nodes (neurons). These architectures are designed to recognize patterns, make predictions, and adapt based on input data. Each node receives information, processes it, and passes it to the next layer, allowing for complex decision-making.

- How It Works: Neural networks use layers of neurons—input layers, hidden layers, and output layers. Each neuron applies weights and biases to the input and passes the result through an activation function. Learning occurs through backpropagation, where the model adjusts the weights based on errors in the output.

- Common Use Cases:

- Image recognition (e.g., facial recognition systems)

- Speech recognition systems (like Siri and Google Assistant)

- Predictive modeling in finance and healthcare

Neural networks are highly flexible and can learn from data, but they require large datasets and significant computational power to train effectively.
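
To show how weights, biases, and activation functions combine across layers, here is a tiny network that computes XOR. The weights are set by hand for clarity rather than learned through backpropagation, and a simple step function stands in for the activation.

```python
def step(z):
    """Activation function: fire (1) if the weighted input is positive."""
    return 1 if z > 0 else 0


def neuron(inputs, weights, bias):
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1, x2):
    # Hidden layer: one neuron behaves like OR, the other like AND.
    h_or = neuron([x1, x2], [1.0, 1.0], -0.5)
    h_and = neuron([x1, x2], [1.0, 1.0], -1.5)
    # Output layer: fires when OR is on and AND is off.
    return neuron([h_or, h_and], [1.0, -1.0], -0.5)


results = [xor_network(a, b) for a in (0, 1) for b in (0, 1)]
print(results)  # [0, 1, 1, 0]
```

No single neuron can compute XOR on its own; the hidden layer is what makes it possible. In practice, training finds suitable weights automatically instead of having them written by hand.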

Convolutional Neural Networks (CNNs): Mastering Visual Data

Convolutional Neural Networks (CNNs) are a specific type of neural network designed primarily for processing visual data like images and videos. CNNs use convolutional layers to automatically extract features from input images, such as edges, textures, and patterns, making them highly effective for tasks like image classification and object detection.

- How It Works: CNNs consist of multiple layers, including convolutional layers, pooling layers, and fully connected layers. The convolutional layers apply filters to detect patterns in the input data. Pooling layers reduce the dimensionality, and fully connected layers map the extracted features to output labels.

- Common Use Cases:

- Facial recognition software

- Medical image analysis (e.g., detecting tumors in MRI scans)

- Autonomous vehicles (object detection and navigation)

CNNs are exceptionally powerful in handling visual tasks, but they can be computationally intensive and require a lot of labeled data for effective training.
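
The two core operations, applying a filter and pooling, can be sketched without any libraries. The 5x5 image and the hand-written edge filter are assumptions for the example; in a real CNN the filter values are learned. As in most deep learning frameworks, the "convolution" here slides the filter without flipping it.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image and sum the element-wise products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw))
             for c in range(out_w)]
            for r in range(out_h)]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [[max(feature_map[r + i][c + j]
                 for i in range(size) for j in range(size))
             for c in range(0, len(feature_map[0]) - size + 1, size)]
            for r in range(0, len(feature_map) - size + 1, size)]


# Dark left half, bright right half.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]   # responds where brightness increases left to right

features = conv2d(image, vertical_edge)
pooled = max_pool(features)
print(features[0])  # [0, 2, 0, 0]
print(pooled)       # [[2, 0], [2, 0]]
```

The filter responds only at the column where dark meets bright, and pooling shrinks the feature map while keeping that signal.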

Recurrent Neural Networks (RNNs): Handling Sequential Data

Recurrent Neural Networks (RNNs) are designed for sequential data where context matters. Unlike traditional neural networks, RNNs have the ability to retain information from previous inputs by using internal memory loops. This makes them ideal for tasks where the order of data is crucial, such as time series analysis and natural language processing.

- How It Works: RNNs have connections that form loops, allowing information to persist across time steps. This enables the model to maintain a “memory” of past inputs. Backpropagation through time (BPTT) is used to train these networks.

- Common Use Cases:

- Text generation and language modeling (e.g., LSTM-based language models)

- Speech-to-text systems

- Financial forecasting (analyzing stock market trends over time)

While RNNs are effective at handling sequential data, they struggle with long-term dependencies due to vanishing gradient problems, which can limit their ability to remember distant information.
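
The recurrent loop can be sketched with a single-unit RNN. The weights below are arbitrary hand-picked values, not trained ones, and the function name `rnn_forward` is hypothetical.

```python
import math


def rnn_forward(inputs, w_x=0.8, w_h=0.5, bias=0.0):
    """Update one hidden state step by step, feeding it back in each time."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + bias)
        states.append(h)
    return states


early_signal = rnn_forward([1.0, 0.0, 0.0])
late_signal = rnn_forward([0.0, 0.0, 1.0])
print([round(h, 3) for h in early_signal])
print([round(h, 3) for h in late_signal])
```

The same three inputs in a different order lead to different final states, so order matters. The trace of the early input also shrinks at every step, a small-scale view of why simple RNNs struggle to remember distant information.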

Long Short-Term Memory (LSTM): Overcoming RNN Limitations

To address the limitations of traditional RNNs, Long Short-Term Memory (LSTM) networks were developed. LSTMs are a special type of RNN capable of learning long-term dependencies, allowing them to retain information over extended sequences. This makes them ideal for tasks like speech recognition and machine translation, where maintaining context over longer sequences is critical.

- How It Works: LSTMs use gates (input, forget, and output gates) to control the flow of information. These gates decide which information to keep, update, or discard, allowing the network to maintain relevant long-term information.

- Common Use Cases:

- Text prediction (e.g., autocomplete suggestions)

- Speech recognition (handling complex, sequential data like spoken language)

- Video processing (analyzing frame sequences for patterns)

LSTMs are more powerful than traditional RNNs for handling longer sequences, but they can still be computationally expensive to train.
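
The gating mechanism can be sketched with a single scalar LSTM cell. The parameters are hand-picked so that the cell stores a signal and then holds it; they are not trained values, and the names `gate`, `lstm_step`, and `params` are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def gate(x, h_prev, weights, squash):
    w, u, b = weights
    return squash(w * x + u * h_prev + b)


def lstm_step(x, h_prev, c_prev, p):
    f = gate(x, h_prev, p["forget"], sigmoid)        # how much old memory to keep
    i = gate(x, h_prev, p["input"], sigmoid)         # how much new information to write
    o = gate(x, h_prev, p["output"], sigmoid)        # how much memory to expose
    g = gate(x, h_prev, p["candidate"], math.tanh)   # the new information itself
    c = f * c_prev + i * g
    h = o * math.tanh(c)
    return h, c


# (input weight, recurrent weight, bias) per gate; hand-picked for illustration.
params = {
    "forget": (0.0, 0.0, 5.0),     # almost always keep the memory
    "input": (10.0, 0.0, -5.0),    # write only when the input is large
    "output": (0.0, 0.0, 5.0),
    "candidate": (5.0, 0.0, 0.0),
}

h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
print(round(c, 2))  # about 0.96
```

Five steps after the signal arrived, the cell state is still close to 1, because the forget gate keeps the memory and the input gate stays shut on the later inputs.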

Generative Adversarial Networks (GANs): Creating Data from Scratch

Generative Adversarial Networks (GANs) are an innovative AI architecture that uses two neural networks—a generator and a discriminator—to create new, realistic data from scratch. The generator creates synthetic data (e.g., images), while the discriminator tries to distinguish between real and fake data. Over time, both networks improve, resulting in highly realistic outputs.

- How It Works: The generator network creates fake data, and the discriminator network evaluates whether the data is real or generated. The generator tries to fool the discriminator, and the discriminator learns to become more accurate, leading to an iterative improvement process.

- Common Use Cases:

- Deepfakes (generating highly realistic but artificial images or videos)

- Image generation (e.g., creating photorealistic artwork)

- Data augmentation (generating new training data to improve AI models)

GANs are highly versatile but can be difficult to train, often requiring careful balancing between the generator and discriminator to achieve optimal results.

Transformer Models: Revolutionizing Natural Language Processing

Transformer models represent a breakthrough in natural language processing (NLP). Unlike RNNs or LSTMs, transformers do not process data one step at a time. Instead, they use a mechanism called self-attention to relate every word in a sentence to every other word, regardless of how far apart they are, while positional encoding preserves information about word order. This architecture allows transformers to process data in parallel, leading to faster training and, on many tasks, more accurate results.

- How It Works: The transformer model uses self-attention mechanisms to weigh the importance of different words in a sentence relative to each other. It also employs multi-head attention and positional encoding to capture word relationships and context efficiently.

- Common Use Cases:

- Language translation (e.g., Google Translate)

- Text generation (e.g., OpenAI’s GPT models)

- Summarization and sentiment analysis

Transformers have become the gold standard for many NLP tasks, due to their ability to handle large datasets and capture complex dependencies in language.
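
The core of self-attention, scaled dot-product attention, can be sketched in plain Python. As a simplification, the token vectors below are used directly as queries, keys, and values; a real transformer applies learned projections first and adds multi-head attention and positional encoding. The toy vectors are invented.

```python
import math


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Each token's output is a weighted mix of all tokens, weighted by similarity."""
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # Similarity of this token to every token, scaled by sqrt(dimension).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, vectors))
                        for j in range(d)])
        all_weights.append(weights)
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]   # toy 2-dimensional embeddings
outputs, weights = self_attention(tokens)
print([round(w, 3) for w in weights[0]])
```

Each row of `weights` sums to 1, and the first token attends more to the third token than to the second because their vectors are more similar. Every row is computed independently of the others, which is what makes parallel processing possible.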

Modular AI: Combining Different Architectures

Modular AI refers to systems that integrate multiple AI architectures to solve complex problems. Instead of relying on a single architecture, modular AI systems use different components or models, each specialized for a specific task. The results from these modules are combined to deliver more versatile and powerful solutions.

- How It Works: A modular AI system might use a neural network for image recognition, a symbolic AI system for reasoning, and a rule-based engine for decision-making. The AI system integrates these results to perform more comprehensive tasks.

- Common Use Cases:

- Robotics, where different modules handle perception, reasoning, and motion control

- Autonomous vehicles, combining sensor data analysis, path planning, and decision-making

Modular AI is useful for solving complex, multifaceted problems but requires careful orchestration to ensure all components work together seamlessly.

Hybrid AI: Combining Symbolic and Connectionist Approaches

Hybrid AI, often called neuro-symbolic AI, combines the strengths of both symbolic AI and neural networks (connectionist approaches). While neural networks excel at pattern recognition, they struggle with logic-based tasks. On the other hand, symbolic AI handles logic and reasoning well but does not readily learn from data. Hybrid AI merges these two approaches to create systems that can both learn from data and apply logical reasoning.

- How It Works: In a hybrid AI system, the neural network might handle data-driven tasks like image recognition, while the symbolic AI system processes the recognized patterns using logical rules.

- Common Use Cases:

- Cognitive computing systems that mimic human-like reasoning (e.g., IBM Watson)

- Natural language understanding systems that require both learning and logic

Hybrid AI is increasingly seen as a promising direction for AI, as it combines the best of both worlds: learning from data and reasoning with symbols.

Types of AI: Based on Tasks

Artificial Intelligence (AI) can also be classified by the tasks it is designed to perform. Task-based AI categorization helps us understand how AI systems are used in the real world, depending on their capabilities and specific objectives. Some AI systems are designed for simple, routine tasks, while others can perform highly complex and adaptive functions.

Reactive Machines: Handling Simple, Predefined Tasks

Reactive machines are the most basic type of AI, designed to handle specific tasks with predefined inputs and outputs. These systems do not have the ability to learn from past experiences or adapt to new situations. Instead, they follow rules or algorithms to respond to certain inputs with a fixed action.

- How It Works: Reactive AI does not store past experiences. It processes real-time inputs and provides immediate, task-specific outputs. These machines follow preset algorithms for decision-making.

- Common Use Cases:

- Chess-playing computers like IBM’s Deep Blue that defeated Garry Kasparov

- Basic customer service chatbots with pre-programmed responses

- Rule-based spam filters that react to fixed patterns of spammy keywords in emails

Reactive machines are excellent for single-task environments where decision-making follows clear rules, but they lack flexibility or adaptability.

Limited Memory AI: Performing Tasks with Learning

Limited memory AI systems can perform tasks based on past data to make better predictions and decisions. These systems can remember and learn from historical information for a short time. Most of the AI applications in use today fall under this category.

- How It Works: Limited memory AI processes data in real time while using past data to influence current decisions. It requires training with large datasets, and the model can be retrained or updated with new data to improve over time.

- Common Use Cases:

- Self-driving cars, which rely on real-time traffic data and previously learned patterns for decision-making

- Image recognition systems that improve accuracy over time

- Voice assistants like Siri and Alexa, which refine their responses based on past user interactions

Limited memory AI is powerful because it combines real-time decision-making with the ability to learn from experience, but its knowledge is still restricted to specific tasks.

Theory of Mind AI: Understanding Human Emotions and Intentions

Theory of mind AI represents a more advanced category, which aims to understand and respond to human emotions, beliefs, and intentions. This AI type is still largely theoretical but holds the potential to interact with humans in a more intuitive, socially aware manner.

- How It Works: Theory of mind AI would use models that not only process external data but also consider the emotional or psychological state of the human user. It could adapt responses based on empathy or social cues.

- Potential Use Cases:

- Social robots that can interact naturally with humans in settings like eldercare or education

- Therapeutic AI designed for mental health treatment, responding empathetically to emotional states

- Customer service agents that can detect frustration or confusion in a user’s tone and adjust their assistance accordingly

While the technology for theory of mind AI is still in its infancy, it is expected to become a game-changer in industries that require emotional intelligence and social interaction.

Self-Aware AI: Understanding Its Own Existence

Self-aware AI is a hypothetical category of AI that would have the ability to recognize its own existence and understand its environment at a deeper level. This type of AI could potentially think and reason in a way that parallels human consciousness.

- How It Works: Self-aware AI would be able to form self-representations and understand not only its tasks but also its role and impact on the world around it. It would make decisions based on self-perception, like humans do.

- Potential Use Cases:

- Fully autonomous robots capable of making complex decisions in unpredictable environments

- Creative AI that can generate art, music, or innovations based on a deeper sense of purpose and self-awareness

- Ethical decision-making systems, which can assess moral dilemmas in ways that account for broader societal impacts

Self-aware AI remains theoretical, raising ethical concerns about autonomy and control, but its development would represent the highest level of cognitive AI.

Task-Specific AI: Narrow but Highly Specialized

Task-specific AI, also known as Narrow AI, is designed to perform one task exceptionally well. These systems are built to handle highly specialized tasks and can outperform humans in their domain. However, they are incapable of performing any other task outside their specific function.

- How It Works: Task-specific AI is trained on one dataset and optimized for one problem. It uses deep learning, machine learning, or rule-based algorithms to excel at the given task.

- Common Use Cases:

- Language translation tools like Google Translate

- Recommendation systems (e.g., Netflix or Spotify’s algorithms)

- Medical diagnostics (AI systems trained to identify specific diseases in medical images)

Task-specific AI is extremely powerful in focused environments, but it cannot generalize its knowledge to handle multiple or unrelated tasks.

General AI: Mastering Any Task Like a Human

Artificial General Intelligence (AGI), or Strong AI, refers to systems that can perform any intellectual task a human can. Unlike narrow AI, AGI would be able to learn, reason, and adapt across different domains without task-specific programming.

- How It Works: AGI would integrate multiple forms of learning (supervised, unsupervised, reinforcement learning) to master a variety of tasks. It would continuously evolve and improve its understanding of general knowledge across domains.

- Potential Use Cases:

- Robots that can perform a wide range of tasks across different industries, from manufacturing to customer service

- AI assistants that can not only answer questions but also think critically and handle complex, cross-functional problems

- Healthcare AI that can diagnose and treat a wide variety of conditions based on real-time and historical data

AGI remains speculative, but achieving it would revolutionize every industry by offering human-level intelligence that could adapt to any task.

Automating Repetitive Tasks: Robotic Process Automation (RPA)

Robotic Process Automation (RPA) focuses on automating repetitive, rule-based tasks that require little human judgment. Classic RPA does not learn from experience; it follows predefined workflows to complete tasks faster and more consistently than a human worker could.

- How It Works: RPA tools interact with digital systems by mimicking human actions, such as clicking, typing, or navigating software. They follow strict instructions to perform routine tasks.

- Common Use Cases:

- Data entry and management tasks

- Invoice processing in accounting

- Customer service automation (responding to FAQs or routing queries)

RPA is invaluable for businesses looking to cut costs and improve efficiency on routine, mundane tasks, but it’s limited to rigid, rule-based processes.
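The "strict instructions" behind an RPA workflow can be sketched as a fixed chain of rules. The example below models only the decision logic for invoice processing; real RPA tools also drive the user interface (clicking, typing), which is not shown here. The `Invoice` fields, the `route_invoice` function, and the 1000.0 approval limit are hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass

@dataclass
class Invoice:
    vendor: str
    amount: float
    has_purchase_order: bool

# Hypothetical approval threshold, chosen only for this example.
AUTO_APPROVE_LIMIT = 1000.0

def route_invoice(invoice: Invoice) -> str:
    """Apply fixed rules in order; nothing is learned from past invoices."""
    if not invoice.has_purchase_order:
        return "reject: missing purchase order"
    if invoice.amount <= AUTO_APPROVE_LIMIT:
        return "auto-approve"
    return "send to manager"

invoices = [
    Invoice("Acme Supplies", 250.0, True),
    Invoice("Globex", 4800.0, True),
    Invoice("Initech", 120.0, False),
]
for inv in invoices:
    print(inv.vendor, "->", route_invoice(inv))
```

Because every outcome is spelled out in advance, the same invoice always produces the same result, and any case the rules do not anticipate has to be handled by editing the rules by hand.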

Autonomous AI: Acting Independently in Complex Environments

Autonomous AI systems are capable of making decisions and acting independently in dynamic or unpredictable environments. These AI systems must process real-time data, learn from their surroundings, and adjust their actions accordingly. They are often used in systems where human oversight is minimal or impractical.

- How It Works: Autonomous AI relies on sensors, real-time data analysis, and reinforcement learning to interact with and navigate complex environments. It learns from feedback and improves its performance with experience.

- Common Use Cases:

- Self-driving cars that can navigate urban environments

- Drones used for delivery, surveillance, or search-and-rescue operations

- Robots in manufacturing plants, adapting to different production needs

Autonomous AI is ideal for tasks that require decision-making in real-world environments, and its capabilities are growing as machine learning algorithms become more sophisticated.
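Learning from feedback can be illustrated with tabular Q-learning, one common reinforcement learning method. The sketch below is a toy, not how a self-driving car or drone is built: the five-cell corridor, the reward of 1.0 at the goal, and the hyperparameters `ALPHA`, `GAMMA`, and `EPSILON` are all assumptions made for this example.

```python
import random

N_STATES = 5                 # corridor cells 0..4; cell 4 is the goal
ACTIONS = ["left", "right"]
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # hypothetical hyperparameters

def step(state, action):
    """Environment: move one cell; reward 1.0 only when the goal is reached."""
    next_state = max(0, state - 1) if action == "left" else state + 1
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward

def choose_action(q, state, rng):
    """Epsilon-greedy: mostly exploit, sometimes explore; ties broken randomly."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    best = max(q[state].values())
    return rng.choice([a for a in ACTIONS if q[state][a] == best])

def train(episodes=200, seed=0):
    rng = random.Random(seed)
    q = [{a: 0.0 for a in ACTIONS} for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = choose_action(q, state, rng)
            next_state, reward = step(state, action)
            # Q-learning update: move the estimate toward
            # reward + discounted best value of the next state.
            target = reward + GAMMA * max(q[next_state].values())
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q

q_table = train()
policy = [max(q_table[s], key=q_table[s].get) for s in range(N_STATES - 1)]
print(policy)
```

The agent is never told that moving right is correct. It starts with all estimates at zero, wanders, receives a reward when it happens to reach the goal, and the update rule gradually propagates that reward back along the corridor until the greedy policy is to move right in every cell.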

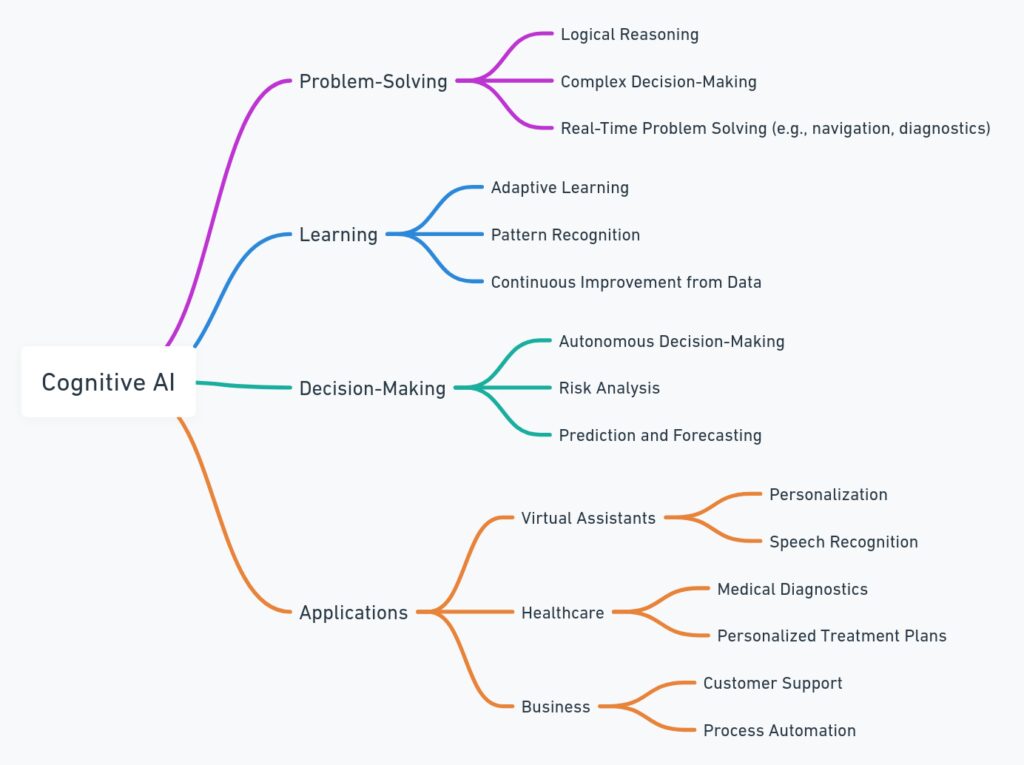

Cognitive AI: Mimicking Human Thinking

Cognitive AI aims to replicate human-like thinking and reasoning. These systems simulate cognitive processes such as problem-solving, decision-making, and learning. Cognitive AI goes beyond simple automation, using techniques like natural language processing and knowledge representation to interact with humans in a more natural, intuitive manner.

- How It Works: Cognitive AI systems use algorithms to process language, understand context, and make decisions based on real-world knowledge. They simulate how humans process information to reason, learn, and solve problems.

- Common Use Cases:

- Virtual assistants like IBM’s Watson, capable of answering complex queries and providing expert advice

- Customer service AI that can resolve queries based on understanding context and emotions

- Healthcare diagnostics, where cognitive AI helps doctors by analyzing patient histories and recommending treatments

Cognitive AI is at the cutting edge of task-based AI, bringing machines closer to human-like reasoning.
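One small piece of this, reasoning over explicitly represented knowledge, can be sketched with forward chaining: apply if-then rules to known facts until nothing new can be derived. This is a deliberately simple symbolic toy and not how systems such as IBM Watson work internally. The rules, the fact names, and the `infer` function are hypothetical, invented for a customer service scenario.

```python
# Hypothetical knowledge base: each rule maps a set of required facts to a conclusion.
RULES = [
    ({"mentions_refund", "order_delivered"}, "refund_request"),
    ({"refund_request", "within_return_window"}, "approve_refund"),
    ({"negative_tone", "repeat_contact"}, "escalate_to_human"),
]

def infer(facts):
    """Forward chaining: keep applying rules until no new facts are derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

observed = {"mentions_refund", "order_delivered", "within_return_window"}
derived = infer(observed) - observed
print(sorted(derived))
```

Here the second rule can only fire after the first has added `refund_request`, so the conclusion `approve_refund` is reached through a chain of two steps rather than a single lookup. In a real system the observed facts would themselves come from language processing, which this sketch takes as given.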

These task-based categories of AI highlight the range of capabilities that modern artificial intelligence systems possess. From reactive machines handling simple tasks to the potential of AGI mastering any intellectual challenge, the future of AI is rich with possibilities. Each type plays a crucial role in transforming

Summary of AI Types

| Type of AI | Description | Key Characteristics | Common Use Cases |
|---|---|---|---|
| Reactive Machines | Basic AI that reacts to specific inputs with predefined outputs. Lacks memory and learning ability. | Fixed, task-specific, no memory or learning | Chess engines (IBM’s Deep Blue), simple chatbots |
| Limited Memory AI | Can learn from past data for short periods to make better decisions. | Short-term memory, real-time decision-making | Self-driving cars, image recognition, voice assistants |
| Theory of Mind AI | Aims to understand human emotions, intentions, and social cues. Still largely theoretical. | Emotionally and socially aware, empathy | Social robots, therapeutic AI, customer service AI |
| Self-Aware AI | Hypothetical AI with consciousness and self-awareness, capable of understanding its own existence. | Autonomous reasoning, self-perception | Autonomous robots, creative AI, ethical decision-making |
| Task-Specific AI | Narrow AI designed to perform a single task exceptionally well. | Specialized, non-generalizable | Language translation (Google Translate), recommendation systems |
| Artificial General Intelligence (AGI) | Hypothetical AI that can perform any intellectual task a human can, capable of cross-domain learning. | Human-like learning, reasoning, and adaptability | Fully autonomous robots, advanced virtual assistants |
| Robotic Process Automation (RPA) | Automates repetitive, rule-based tasks without learning capabilities. | Rigid, rule-based automation | Data entry, invoice processing, customer service bots |
| Autonomous AI | AI capable of making independent decisions in complex, dynamic environments. | Real-time decision-making, self-learning | Self-driving cars, drones, robots in manufacturing |
| Cognitive AI | Mimics human-like cognitive processes such as problem-solving, reasoning, and learning. | Understanding context, language processing | IBM Watson, healthcare diagnostics, customer service AI |

Summary

AI can be classified in many different ways, and understanding these classifications helps you appreciate the diversity and complexity of AI development. Here’s a breakdown:

- By Intelligence: Narrow AI, General AI, Superintelligence.

- By Functionality: Reactive Machines, Limited Memory AI, Theory of Mind AI, Self-Aware AI.

- By Learning Approaches: Supervised, Unsupervised, Reinforcement, Semi-Supervised, Self-Supervised, Transfer Learning.

- By Architecture: Symbolic AI, Connectionist AI (Neural Networks), Hybrid AI, Evolutionary AI.

- By Tasks: Robotic Process Automation (RPA), Cognitive AI, Generative AI.

Each of these classifications provides different lenses through which we can understand the broad and evolving field of AI.

Evolution of AI: Where Are We Heading?

From narrow AI applications that power our digital world today to the far-off concept of superintelligent AI, the evolution of artificial intelligence is on a fast track. Researchers and developers are constantly pushing boundaries, moving from task-specific machines to systems that aim to understand the world in more general and sophisticated ways.

Yet, with every new advancement, there are significant challenges and ethical concerns that arise. How will we manage the risks associated with AGI and ASI? And can we ensure AI remains a force for good in a world increasingly reliant on it?

If you’re interested in staying on top of these developments, follow credible sources such as OpenAI and MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) for updates on this rapidly evolving field.