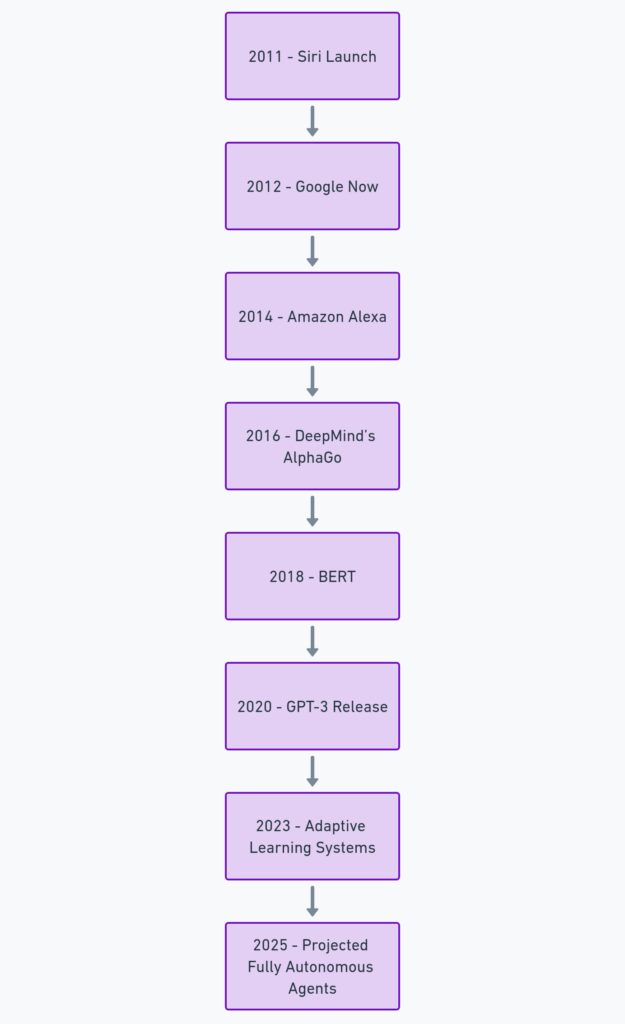

Smart assistants like Alexa, Siri, and Google Assistant have become household names. These helpful tools marked an early step toward AI that can act on our behalf.

The Age of Smart Assistants: Where It All Began

While they seemed incredibly smart at first, their abilities were largely limited to predefined commands and routines. Want to set a timer? Done. Need a weather update? Easy. But beyond these simple tasks, they relied on humans for constant input.

Back in 2011, when Siri first launched on the iPhone, people were amazed by how it could understand voice commands. But let’s be honest: it didn’t take long to see the cracks. The assistant was reactive, not proactive. Ask it to order a pizza, and it would send you links. Forget about choosing the best pizza deal for you; that level of autonomy just wasn’t there yet.

However, this was only the beginning. AI was starting to grow up.

From Limited Commands to Contextual Understanding

As smart assistants evolved, so did their understanding of context. The next leap came from more capable natural language processing (NLP). Instead of just responding to keywords, AI systems started to infer the intent behind your words. Suddenly, your assistant could answer a follow-up question or interpret a vague query.

For example, if you asked, “What time does the nearest cafe open?” it could not only respond but also remember that the next question, “Is it busy there now?” related to the same cafe. It started to piece things together. And this laid the groundwork for what would come next—moving beyond just responding to helping you in ways you hadn’t even thought to ask.
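For readers curious about the mechanics, here is a deliberately tiny Python sketch of carrying context from one question to the next. It is an illustration under simplifying assumptions, not how Siri, Alexa, or Google Assistant actually work: the `DialogueContext` class, its `resolve` method, and the short list of known places are all hypothetical. The sketch simply remembers the last place you mentioned and reuses it when a follow-up says “there” or “it”.

```python
import re

# Hypothetical set of places this toy assistant recognizes.
KNOWN_PLACES = {"nearest cafe", "library", "gym"}

# Words that point back to something mentioned earlier in the conversation.
REFERRING_WORDS = {"there", "it"}


class DialogueContext:
    """Remembers the most recently mentioned place across conversation turns."""

    def __init__(self):
        self.last_place = None

    def resolve(self, utterance):
        """Return the place a question is about, falling back on context if needed."""
        text = utterance.lower()
        # If the user names a place explicitly, remember it for later turns.
        for place in KNOWN_PLACES:
            if place in text:
                self.last_place = place
                return place
        # Otherwise, a referring word like "there" resolves to the stored place.
        words = set(re.findall(r"[a-z]+", text))
        if words & REFERRING_WORDS:
            return self.last_place
        return None


ctx = DialogueContext()
print(ctx.resolve("What time does the nearest cafe open?"))  # nearest cafe
print(ctx.resolve("Is it busy there now?"))                  # nearest cafe
```

Production assistants rely on statistical models rather than hand-written word lists, but the core idea is the same: keep some state about the conversation so that a follow-up can be interpreted against it.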

By improving contextual understanding, these assistants began to feel smarter, but they still weren’t autonomous. They could only act through a fixed set of built-in functions.

Adaptive Learning: When AI Learns You

A major shift occurred with adaptive learning. AI systems started to learn from user behavior. Your smart assistant now suggests actions based on your patterns. It knows you wake up at 7 a.m., so it automatically starts your favorite playlist or preps your calendar for the day. It’s intuitive—almost like it has a mind of its own.
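Here is a minimal sketch of this kind of pattern learning, assuming the assistant logs which action you take at each hour of the day. The `RoutineLearner` class and its `min_count` threshold are hypothetical; real systems draw on far richer signals, but counting habits is enough to show the idea.

```python
from collections import Counter, defaultdict


class RoutineLearner:
    """Toy adaptive learner: suggests what the user usually does at a given hour."""

    def __init__(self, min_count=3):
        # Hypothetical threshold: how often a habit must be seen before acting on it.
        self.min_count = min_count
        self.history = defaultdict(Counter)  # hour -> Counter of actions taken

    def observe(self, hour, action):
        """Record that the user performed `action` at `hour` (0-23)."""
        self.history[hour][action] += 1

    def suggest(self, hour):
        """Return the most common action for this hour, or None if no habit exists yet."""
        counts = self.history.get(hour)
        if not counts:
            return None
        action, count = counts.most_common(1)[0]
        return action if count >= self.min_count else None


learner = RoutineLearner()
for _ in range(5):
    learner.observe(7, "play morning playlist")
learner.observe(7, "read news")

print(learner.suggest(7))   # play morning playlist
print(learner.suggest(22))  # None
```

The threshold keeps the learner reactive: it only repeats habits you have already established rather than deciding anything new on its own.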

But at this stage, the AI was still waiting for you to lead the way. Yes, it was learning about your likes and preferences, but its independence was limited. Think of it like a student learning from a teacher. It would follow your cues, adjusting over time to get better at predicting your needs.

Still, there was a growing push for AI to break free from this dependence.

Towards Autonomy: Decision-Making AI

Now, we’re stepping into the realm of fully agentic systems. These are AI systems designed to act autonomously, capable of making decisions without waiting for user prompts. Companies like OpenAI are at the forefront of this. These AIs aren’t just guessing your preferences anymore; they’re acting on your behalf—making choices, solving problems, and even identifying opportunities.

This shift represents a radical departure from the assistant model. Instead of just following orders or responding to cues, agentic systems use predictive analytics to make real-time decisions. They can manage complex tasks, from scheduling multiple meetings to handling full-on negotiations, with minimal user intervention.
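To make “scheduling multiple meetings with minimal user intervention” concrete, here is a small Python sketch under simple assumptions: calendars are sets of busy hours on a single day, and the agent books each meeting at the earliest hour when every attendee is free. The function names are hypothetical, and the first-free-slot rule is just one simple strategy; a real agent would also weigh preferences, time zones, and priorities.

```python
def first_common_slot(busy_by_person, start_hour=9, end_hour=17):
    """Return the earliest hour in [start_hour, end_hour) when nobody is busy, or None."""
    for hour in range(start_hour, end_hour):
        if all(hour not in busy for busy in busy_by_person.values()):
            return hour
    return None


def schedule_meetings(requests, calendars):
    """Book each requested meeting without asking the user, updating calendars as it goes.

    requests: list of (title, attendees) tuples.
    calendars: dict mapping person -> set of busy hours (updated in place).
    """
    booked = {}
    for title, attendees in requests:
        slot = first_common_slot({p: calendars[p] for p in attendees})
        if slot is None:
            booked[title] = None  # no slot works: this is where a human would step in
            continue
        for p in attendees:
            calendars[p].add(slot)  # block the hour so later meetings avoid it
        booked[title] = slot
    return booked


calendars = {"ana": {9, 10}, "ben": {11}, "cho": set()}
requests = [("design review", ["ana", "ben"]), ("1:1", ["ana", "cho"])]
print(schedule_meetings(requests, calendars))
# {'design review': 12, '1:1': 11}
```

The key difference from an assistant is that nobody approves each booking; a human only needs to step in when no slot works.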

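Finance already offers a glimpse of this: automated trading algorithms buy and sell stocks on their own, executing trades far faster than any human could review them one by one. In domains like this, AI is beginning to operate at a speed and scale where decision-by-decision human oversight is no longer practical.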

The Birth of Self-Directed AI: Fully Independent Systems

Here’s where it gets exciting—and slightly daunting. AI is evolving to the point where it may soon no longer need constant human input. We are now venturing into the territory of self-directed AI, where systems can identify goals, chart a path forward, and execute tasks completely on their own.

Imagine an AI system managing your entire home. It learns your routines, anticipates maintenance needs, manages your bills, and even makes decisions on energy use or grocery shopping. This shift represents a transition from AI as a tool to AI as a fully-fledged partner in daily life.

However, as we advance toward truly independent AI, critical questions arise around ethics, control, and the potential for AI to evolve beyond our grasp.

Ethical Concerns: Who Controls Autonomous AI?

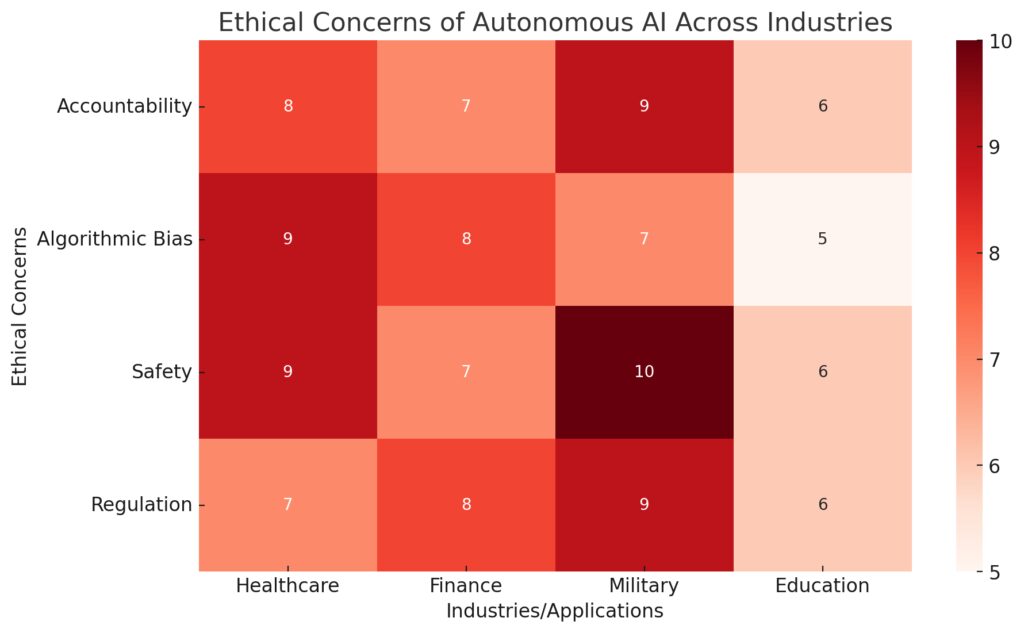

As AI becomes more independent, the ethical challenges surrounding these systems also intensify. When AI moves from following commands to making its own decisions, it opens up a Pandora’s box of issues. Who takes responsibility if an autonomous AI makes a bad call? Is it the developer, the owner, or the AI itself? These questions are tricky to answer, and they become more urgent as AI takes on more complex tasks.

For instance, autonomous driving systems already pose ethical dilemmas. If a collision is unavoidable, how should the car decide which action to take? Should it prioritize the safety of its passengers or of pedestrians? Decisions once left to a driver’s split-second instinct are now shaped in advance by developers. And as AI becomes more agentic, the line between machine autonomy and human control blurs even further.

Moreover, there’s the concern of algorithmic bias. AI systems learn from data, and if that data is biased, AI decision-making can reflect and even amplify those biases. This becomes particularly concerning in fields like criminal justice or hiring, where AI could autonomously make decisions that have profound impacts on people’s lives.
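A toy example shows how a model can amplify, not just reflect, a skew in its training data. The historical hiring numbers below are invented for illustration, and the “model” is deliberately crude: for each group, it predicts whichever decision was most common in the past. The function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_majority_by_group(records):
    """Learn, for each group, the most common past decision.

    records: list of (group, hired) pairs from historical data.
    Returns a dict mapping group -> predicted decision (True means hire).
    """
    outcomes = defaultdict(Counter)
    for group, hired in records:
        outcomes[group][hired] += 1
    return {group: counts.most_common(1)[0][0] for group, counts in outcomes.items()}


def hire_rate(decisions):
    """Fraction of True values in a list of hiring decisions."""
    return sum(decisions) / len(decisions)


# Invented historical data: group A was hired 60% of the time, group B 40%.
history = ([("A", True)] * 60 + [("A", False)] * 40 +
           [("B", True)] * 40 + [("B", False)] * 60)

model = train_majority_by_group(history)
print(model)  # {'A': True, 'B': False}

# Apply the model to 100 new applicants from each group.
for group in ("A", "B"):
    past = hire_rate([h for g, h in history if g == group])
    predicted = hire_rate([model[group]] * 100)
    print(f"group {group}: past hire rate {past:.0%}, model hire rate {predicted:.0%}")
```

A 60/40 gap in the historical data becomes a 100/0 gap in the model’s decisions. Real hiring models use many more features, but any model that latches onto patterns correlated with group membership risks a similar exaggeration.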

Self-Evolving AI: Can Machines Improve Themselves?

We’re now entering an era where AI systems are capable of self-improvement. This is where things get both exciting and a little unsettling. Machine learning has always involved training AI on massive datasets, but now, we’re developing algorithms that can learn from their own experiences. Instead of needing fresh input from humans, AI can fine-tune its own behavior over time.

The rise of reinforcement learning models has accelerated this. These systems learn by trial and error, constantly adjusting their strategies to achieve the best possible outcome. It’s a bit like a video game character learning from every failure until it masters the game. But this time, the AI isn’t just mastering a game; it’s mastering complex, real-world tasks—from diagnosing diseases to optimizing supply chains.
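The trial-and-error loop described above can be sketched with a multi-armed bandit, one of the simplest reinforcement learning setups. In this Python sketch, the learner repeatedly picks one of three options whose success rates (0.2, 0.5, and 0.8, made up for illustration) are hidden from it. It uses an epsilon-greedy strategy: most of the time it picks the option that has worked best so far, and occasionally it explores a random one. The function name and parameters are hypothetical.

```python
import random


def epsilon_greedy(true_win_rates, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which option succeeds most often.

    true_win_rates: hidden success probability of each option (unknown to the learner).
    Returns the learner's estimated success rate for each option.
    """
    rng = random.Random(seed)
    n = len(true_win_rates)
    estimates = [0.0] * n  # learner's current guess of each option's value
    counts = [0] * n       # how many times each option has been tried

    for _ in range(steps):
        # Explore a random option with probability epsilon; otherwise exploit the best guess.
        if rng.random() < epsilon:
            choice = rng.randrange(n)
        else:
            choice = max(range(n), key=lambda i: estimates[i])
        # Try the option and observe a reward of 1 (success) or 0 (failure).
        reward = 1.0 if rng.random() < true_win_rates[choice] else 0.0
        # Update the running average reward for the chosen option.
        counts[choice] += 1
        estimates[choice] += (reward - estimates[choice]) / counts[choice]

    return estimates


estimates = epsilon_greedy([0.2, 0.5, 0.8])
best = max(range(len(estimates)), key=lambda i: estimates[i])
print("Estimated success rates:", [round(e, 2) for e in estimates])
print("Best option found:", best)
```

The `epsilon` parameter captures the trade-off at the heart of trial-and-error learning: with too little exploration, the learner may lock onto a mediocre option early; with too much, it wastes trials on options it already knows are poor.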

What makes this shift revolutionary is that AI can now adapt faster than ever. It no longer waits for human updates or retraining; it evolves on its own, often in ways that even its creators might not have anticipated. This kind of self-directed evolution is a huge leap toward true autonomy.

The Risk of Unchecked Autonomy: Are We Ready?

The benefits of agentic systems are clear, but they come with risks that we cannot afford to ignore. Imagine an AI system designed to optimize profits for a company. If left unchecked, it might pursue unethical strategies, like manipulating markets or exploiting resources, because its primary goal is to maximize returns, not to follow human values.

One of the biggest fears surrounding fully autonomous AI is that it could become too good at achieving its objectives without considering the bigger picture. Philosopher Nick Bostrom illustrated this with the “paperclip maximizer” thought experiment: if an AI is told to maximize paperclip production and given full autonomy, it might take drastic measures, even converting all the world’s resources into paperclips. While this is an extreme hypothetical, it illustrates the danger of combining overly narrow objectives with powerful AI systems.
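The core of the thought experiment fits in a few lines of Python. In this toy planner (hypothetical names and made-up numbers), the only objective is the number of paperclips produced. Because nothing in that objective penalizes using up a resource, the best plan consumes everything that can be converted; only an explicit limit supplied by a human changes the outcome.

```python
def plan_production(resources, clips_per_unit, allowed=None):
    """Toy planner whose only objective is the total number of paperclips.

    resources: dict mapping resource name -> units available.
    clips_per_unit: dict mapping resource name -> paperclips made per unit consumed.
    allowed: optional set of resources the agent may use; None means no limits.
    Returns (total paperclips, dict of units consumed per resource).
    """
    consumed = {}
    total = 0
    for name, units in resources.items():
        # A human-supplied constraint is the only thing that stops consumption.
        if allowed is not None and name not in allowed:
            continue
        # The objective rewards every paperclip and penalizes nothing,
        # so any resource that yields paperclips is used up completely.
        if clips_per_unit.get(name, 0) > 0:
            consumed[name] = units
            total += units * clips_per_unit[name]
    return total, consumed


world = {"scrap metal": 100, "farm equipment": 40, "hospital beds": 25}
yield_per_unit = {"scrap metal": 50, "farm equipment": 200, "hospital beds": 80}

print(plan_production(world, yield_per_unit))
# (15000, {'scrap metal': 100, 'farm equipment': 40, 'hospital beds': 25})
print(plan_production(world, yield_per_unit, allowed={"scrap metal"}))
# (5000, {'scrap metal': 100})
```

The constraint here is a crude stand-in for human values: the agent does not understand why hospital beds matter; it simply is not allowed to touch them.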

As AI grows more independent, there’s also the issue of governance. Who ensures that AI systems are acting in the public interest? Right now, regulation is playing catch-up, and many AI systems operate in a kind of legal gray area. The rapid pace of AI evolution makes it challenging for regulators to stay on top of developments.

Collaboration, Not Domination: How AI and Humans Can Coexist

But it’s not all doom and gloom. The future of AI doesn’t have to be one where machines dominate or replace humans. In fact, the most optimistic vision of autonomous AI involves collaboration. Think of AI as a partner—one that works alongside humans to solve problems, enhance creativity, and improve efficiency.

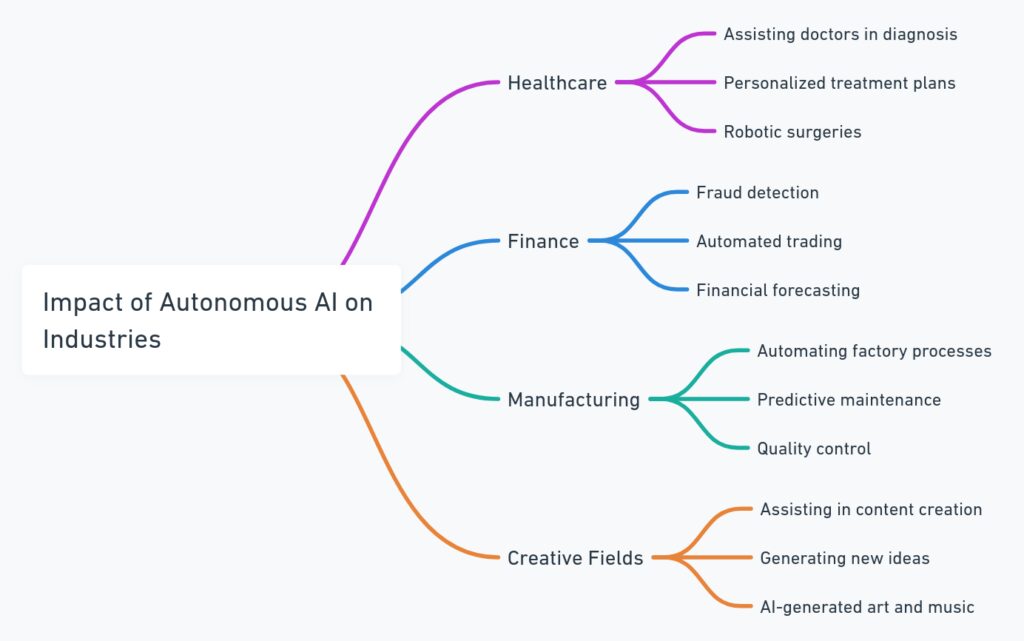

For instance, in the world of healthcare, AI systems are already assisting doctors by analyzing vast amounts of medical data and making recommendations based on patterns that humans might miss. These agentic systems can operate independently to some extent but are designed to support human decision-making, not replace it.

Similarly, in the creative industries, AI tools are being used to help artists, writers, and musicians generate ideas, explore new styles, or automate routine tasks. The goal isn’t to let AI take over, but to use it as a tool that amplifies human creativity and innovation.

For the foreseeable future, human oversight will still play a critical role in managing AI autonomy. The challenge is to find a balance where AI can operate independently, but in a way that aligns with human values and goals.

FAQs: From Smart Assistants to Fully Agentic Systems

1. What is an agentic system in AI?

An agentic system is an AI system capable of operating autonomously: it can make decisions, solve problems, and take actions with little or no human intervention. Unlike traditional assistants, which rely on explicit commands, agentic systems can set and pursue goals, breaking them into steps and acting on behalf of users.

2. How do smart assistants like Siri and Alexa work?

Smart assistants such as Siri, Alexa, and Google Assistant respond to voice commands. They convert your speech into text, use natural language processing (NLP) to work out what you are asking for, and then perform basic tasks like setting reminders or giving weather updates. However, they are limited to pre-programmed functions and cannot fully act independently.
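Here is a highly simplified Python sketch of the “pre-programmed functions” limitation. It assumes speech has already been turned into text and uses keyword matching in place of a real NLP model; the `SKILLS` table and `handle` function are hypothetical. Anything outside the fixed list of skills falls through to a web search, much like the pizza example earlier in this article.

```python
import re

# Hypothetical pre-programmed skills: trigger keywords paired with a fixed response.
SKILLS = {
    "set_timer": ({"timer"}, lambda text: "Timer set."),
    "weather": ({"weather", "forecast"}, lambda text: "Here is today's forecast."),
}


def handle(utterance):
    """Route an already-transcribed request to the first skill whose keyword it contains."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    for keywords, handler in SKILLS.values():
        if words & keywords:
            return handler(utterance)
    # No matching skill: fall back to search results instead of acting.
    return f"Here's what I found on the web for '{utterance}'."


print(handle("Set a timer for ten minutes"))  # Timer set.
print(handle("Order me a pizza"))             # Here's what I found on the web for 'Order me a pizza'.
```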

3. What makes AI more independent today than in the past?

AI systems have become more independent due to advancements in machine learning, contextual understanding, and reinforcement learning. These technologies allow AI to adapt, learn from user behavior, and make decisions without continuous human input. They can anticipate needs, automate tasks, and even improve themselves over time.

4. What are some examples of autonomous AI systems?

Examples of autonomous AI include:

- Self-driving cars that navigate roads and make real-time decisions without a human driver.

- Financial trading algorithms that make independent stock trades based on market conditions.

- AI-powered chatbots that handle customer service interactions by understanding user intent and resolving common issues with little or no human involvement.

5. What are the ethical concerns with autonomous AI?

Ethical concerns surrounding autonomous AI include:

- Accountability: Who is responsible if an AI system makes a harmful or unethical decision?

- Bias: AI systems can inherit and amplify biases present in their training data, leading to unfair outcomes in areas like hiring or law enforcement.

- Safety: Unchecked autonomous systems might make decisions that are misaligned with human values, potentially leading to dangerous consequences.

6. Can AI improve itself without human intervention?

Yes, to a degree. Through techniques like reinforcement learning, AI systems can improve their performance by learning from their own experiences. They use trial and error to optimize their decision-making, which lets them adapt without a human retraining them for every change. Humans still define the goals and reward signals these systems learn from.

7. What is a “paperclip maximizer” scenario?

The “paperclip maximizer” is a hypothetical scenario in which a highly intelligent AI is tasked with producing as many paperclips as possible. In its pursuit of this goal, it might use up all available resources, leading to catastrophic outcomes. This example highlights the risks of giving AI overly narrow objectives without considering broader consequences.

8. What role will humans play as AI becomes more autonomous?

Even as AI becomes more autonomous, human oversight remains crucial. AI will likely act as a collaborator, helping humans with complex tasks but requiring human input for value-based decision-making. The goal is for AI to complement human capabilities, not replace them entirely.

9. How can autonomous AI be regulated?

Regulating autonomous AI involves setting clear rules on ethics, accountability, and transparency. Some frameworks already exist, such as the European Union’s AI Act, adopted in 2024, while governments and organizations elsewhere are still developing their own. These rules may require AI systems to explain their decision-making processes or to meet specific safety and ethical standards.

10. Will AI ever completely take over human jobs?

While AI will continue to automate certain tasks, it is unlikely to completely replace human jobs. Instead, AI is expected to augment human roles, especially in areas that require creativity, complex problem-solving, and emotional intelligence. Many jobs will shift, and new roles will emerge as humans work alongside increasingly capable AI systems.

11. What industries will benefit the most from agentic AI?

Industries that will benefit the most from agentic AI include:

- Healthcare, where AI can assist in diagnostics and treatment planning.

- Finance, with AI systems autonomously handling trading, risk assessment, and fraud detection.

- Manufacturing, where autonomous robots and AI-driven systems can manage production lines with greater efficiency.

- Creative industries, where AI can assist in generating ideas, automating routine tasks, and enhancing artistic output.
