Generative models are reshaping the landscape of AI by enabling machines to create data rather than simply analyzing it. At the heart of these models lie latent variables, an essential concept that powers Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs).

These models have made waves in fields like image synthesis, video generation, and even drug discovery.

In this article, we’ll look at what latent variables are and how GANs and VAEs use them to generate new data.

What Are Generative Models?

Generative models are a class of machine learning algorithms designed to model the distribution of data. Their primary goal is to learn the underlying structure of data in order to generate new, realistic data points. These models don’t just classify or make predictions; they create.

The Role of Latent Variables

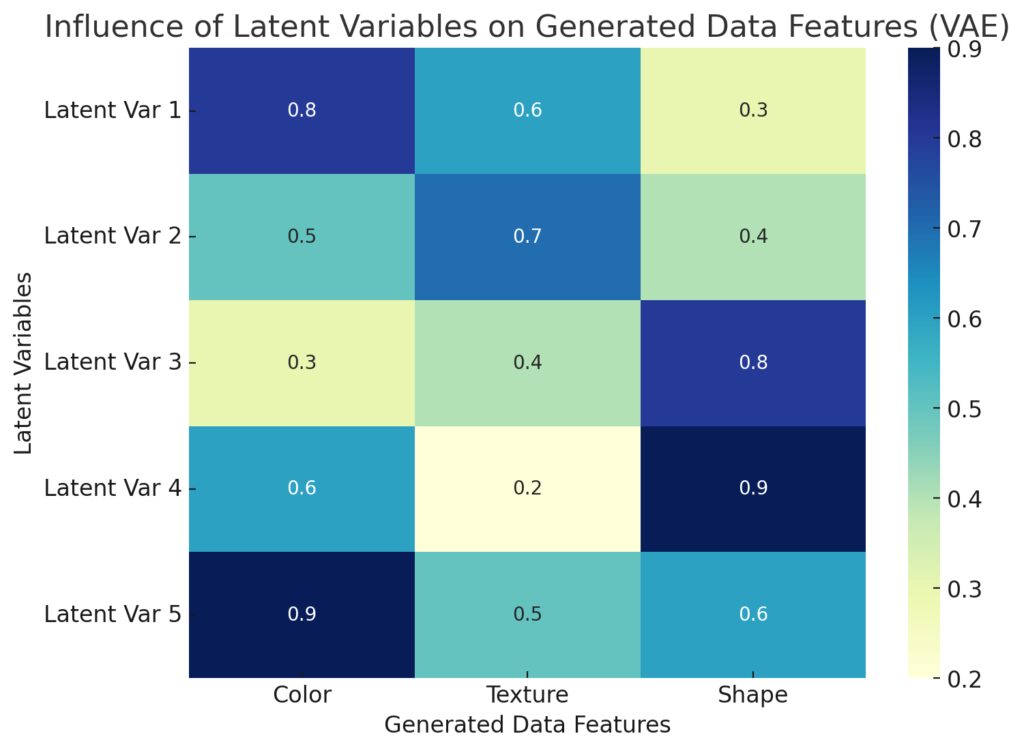

To understand how generative models work, we need to talk about latent variables. Latent variables are hidden, unobserved variables that influence the observed data. Think of them as the unseen forces that shape reality. For a photo of a face, for example, the latent variables might correspond to factors such as pose, lighting, or expression. In generative models, these variables help to represent complex data in a simpler, lower-dimensional space.

By learning this hidden structure, generative models can sample new data points from the latent space to produce entirely new content.
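As a minimal sketch of this idea, the snippet below draws a two-dimensional latent vector from a standard normal distribution and maps it to a four-dimensional "data" point through a fixed linear decoder. The weights in `DECODER_WEIGHTS` and the function names are hypothetical stand-ins; in a real model the decoder would be a trained neural network.

```python
import random

# Hypothetical decoder weights: each row maps a 2-D latent vector to one
# of 4 output features. A trained model would learn these values.
DECODER_WEIGHTS = [
    [1.0, 0.0],
    [0.0, 1.0],
    [0.5, 0.5],
    [1.0, -1.0],
]

def sample_latent(dim, rng):
    """Draw a latent vector z from a standard normal prior."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def decode(z):
    """Map a latent vector to data space with a toy linear decoder."""
    return [sum(w * zi for w, zi in zip(row, z)) for row in DECODER_WEIGHTS]

rng = random.Random(0)
z = sample_latent(2, rng)
x_new = decode(z)
print(len(z), len(x_new))  # 2 4
```

Every new draw of `z` yields a different output, which is what "sampling from the latent space" amounts to in practice.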

GANs: A Battle Between Two Networks

One of the most exciting and successful generative models is the Generative Adversarial Network (GAN). GANs are unique because they consist of two competing neural networks: the generator and the discriminator.

How GANs Work

The generator’s job is to create fake data, while the discriminator’s task is to distinguish between real and fake data. They are locked in a constant game of one-upmanship. Over time, the generator becomes better at producing realistic data, and the discriminator gets better at spotting fakes.

In this adversarial setting, both networks improve, eventually resulting in a generator that produces data almost indistinguishable from the real thing. The magic of GANs lies in their ability to create high-quality images, videos, and more by passing randomly sampled latent vectors through the trained generator.
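The competition between the two networks can be made concrete with their loss functions. The sketch below assumes the widely used binary cross-entropy formulation with a non-saturating generator loss, which is one common choice rather than something this article specifies. The discriminator outputs in `d_real` and `d_fake` are hypothetical probabilities, not the result of a trained model.

```python
import math

def bce(prediction, target):
    """Binary cross-entropy for one predicted probability."""
    eps = 1e-12  # guards against log(0)
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and fake samples score near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating loss: low when the discriminator scores fakes near 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that each sample is real).
d_real = [0.9, 0.8]
d_fake = [0.2, 0.1]
print(discriminator_loss(d_real, d_fake))
print(generator_loss(d_fake))
```

The two losses pull in opposite directions on `d_fake`: the discriminator wants those scores low, the generator wants them high. In training, the two networks are typically updated in alternation.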

Applications of GANs

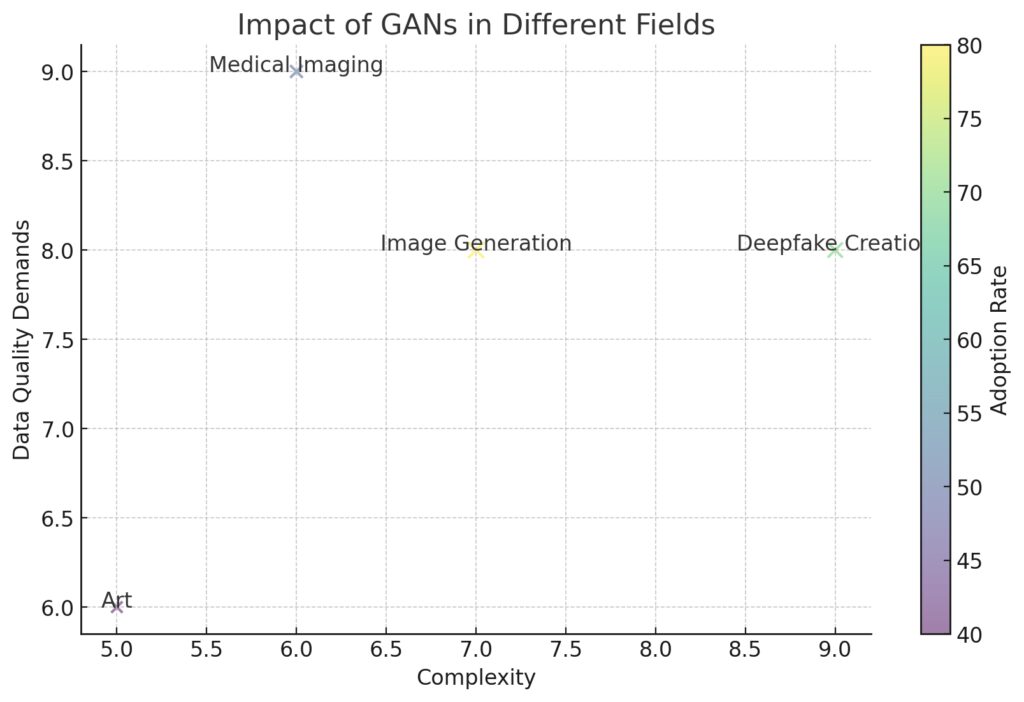

GANs have found uses in a wide range of industries. Here are some popular applications:

- Image Generation: GANs can generate high-resolution images that look like they were captured by a camera.

- Deepfake Technology: GANs power the creation of highly realistic fake videos and images.

- Art Creation: Artists and designers use GANs to create unique, AI-generated artworks.

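Despite their impressive capabilities, GANs can be challenging to train due to the adversarial nature of the generator and discriminator: training can become unstable, and the generator can suffer from mode collapse, where it produces only a narrow range of outputs. But when trained correctly, they can produce stunning results.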

VAEs: Learning Smooth Latent Spaces

Another powerful type of generative model is the Variational Autoencoder (VAE). Unlike GANs, VAEs approach the problem of data generation from a probabilistic perspective.

How VAEs Work

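VAEs consist of two key components: an encoder and a decoder. The encoder maps each input to a distribution over the latent space, typically by outputting the mean and variance of a Gaussian, while the decoder reconstructs the original data from a sample drawn from that distribution. What sets VAEs apart from plain autoencoders is this probabilistic treatment: training also pulls the encoded distributions toward a simple prior, which keeps the latent space continuous.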
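To illustrate the probabilistic latent space, the sketch below assumes the encoder outputs a mean and a log-variance for each latent dimension (a common convention, not something this article specifies). It shows the usual reparameterized sampling step and the closed-form KL divergence to a standard normal prior. The values of `mu` and `log_var` are hypothetical encoder outputs.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, diag(exp(log_var))) to N(0, I)."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))

# Hypothetical encoder outputs for a single input.
mu = [0.5, -1.0]
log_var = [0.0, -0.5]

rng = random.Random(0)
z = reparameterize(mu, log_var, rng)
kl = kl_to_standard_normal(mu, log_var)
print(z, kl)
```

Writing the sample as `mu + sigma * eps` keeps the randomness in `eps`, so in a framework with automatic differentiation gradients can flow back to `mu` and `log_var`. The KL term is zero only when the encoded distribution already matches the prior.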

This allows VAEs to generate smooth transitions between data points. For instance, you could morph one image into another by navigating through the latent space. VAEs are incredibly useful when you need interpretable and smooth latent variables.
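The morphing idea can be sketched as a walk along a straight line between two latent codes. The vectors `z_a` and `z_b` below are hypothetical; in a real pipeline they would come from encoding two images, and each intermediate vector would be passed to the decoder to render one frame of the transition.

```python
def interpolate(z_start, z_end, steps):
    """Return `steps` latent vectors evenly spaced from z_start to z_end."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    path = []
    for i in range(steps):
        t = i / (steps - 1)  # goes from 0.0 to 1.0
        path.append([(1.0 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return path

# Hypothetical latent codes for two images.
z_a = [0.0, 2.0]
z_b = [4.0, -2.0]

for z in interpolate(z_a, z_b, steps=5):
    print(z)  # each z would be decoded into one frame of the morph
```

Linear interpolation is the simplest choice. Some practitioners prefer spherical interpolation when the prior is Gaussian, but the straight-line version is enough to show the principle.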

Applications of VAEs

- Image Reconstruction: VAEs can reconstruct and generate images based on learned latent representations.

- Data Compression: VAEs provide a compact representation of data, making them useful for compression tasks.

- Anomaly Detection: VAEs can detect outliers by measuring how well the model explains a data point, typically by flagging inputs that have a high reconstruction error or a low likelihood under the model.
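The anomaly detection use case above can be sketched with a reconstruction-error threshold. Everything here is a toy assumption: `reconstruct` stands in for a trained encode-then-decode pass by projecting points onto the line y = x, as if the model had learned that normal data lies near that line, and `THRESHOLD` is a hypothetical cutoff.

```python
def reconstruct(x):
    """Toy stand-in for encode-then-decode: project a 2-D point onto y = x."""
    mean = (x[0] + x[1]) / 2.0
    return [mean, mean]

def reconstruction_error(x):
    """Squared error between an input and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, reconstruct(x)))

def is_anomaly(x, threshold):
    """Flag inputs that the model reconstructs poorly."""
    return reconstruction_error(x) > threshold

# Hypothetical cutoff; in practice it would be chosen from the errors
# observed on held-out normal data.
THRESHOLD = 0.5

print(is_anomaly([1.0, 1.1], THRESHOLD))  # False
print(is_anomaly([1.0, 4.0], THRESHOLD))  # True
```

A point close to the learned structure is reconstructed almost perfectly, while a point far from it loses a lot in the round trip, and that gap is the anomaly signal.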

Although VAEs typically produce less sharp images than GANs, they offer more control and structure over the generated data.

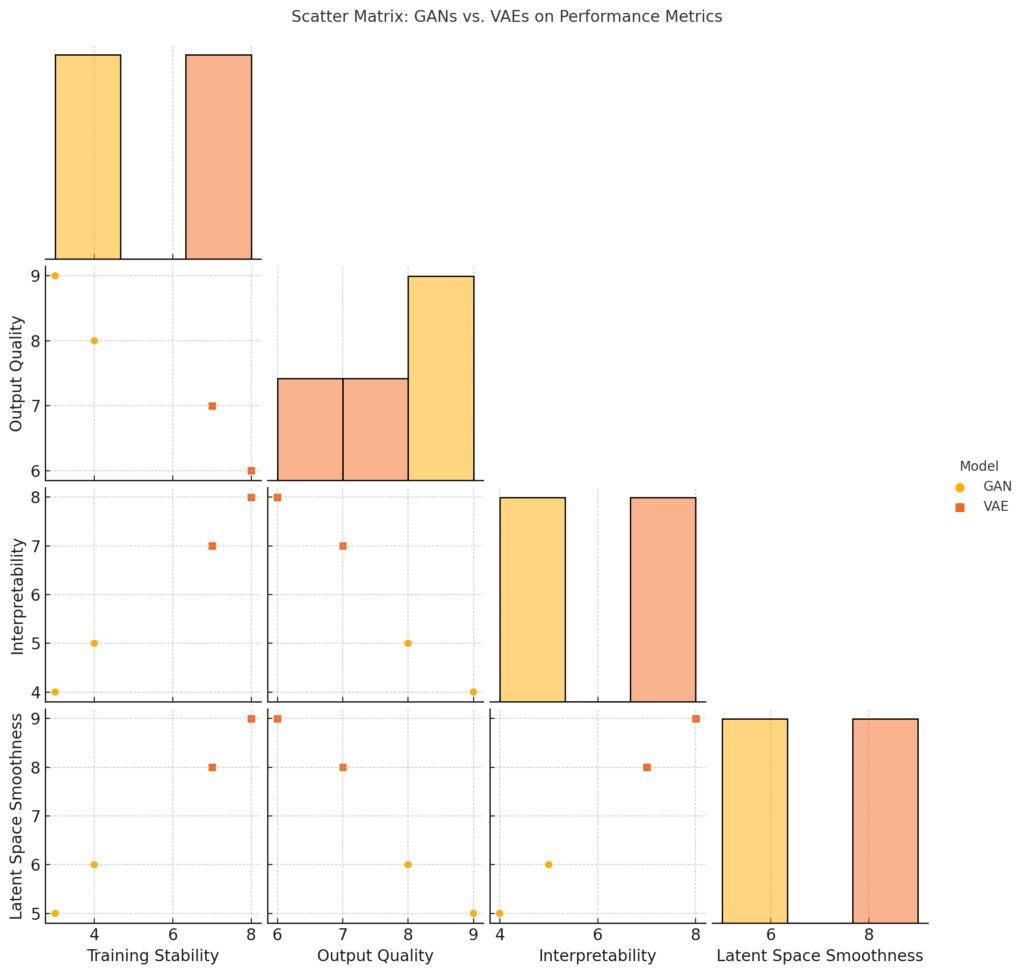

Comparing GANs and VAEs

Both GANs and VAEs are generative models, but they have distinct differences:

- Training Complexity: GANs are notoriously hard to train because the adversarial process must keep two networks in balance, whereas VAEs are generally easier to optimize because they minimize a single, well-defined objective.

- Output Quality: GANs typically produce sharper and more realistic images, while VAEs often generate blurrier but more structured outputs.

- Latent Space: VAEs provide a more interpretable and smooth latent space, whereas GANs’ latent spaces can be harder to navigate.

In many cases, researchers combine the best of both worlds by integrating aspects of GANs with VAEs to create models that balance realism with interpretability.

Why Latent Variables Matter

Latent variables are the backbone of both GANs and VAEs. They allow these models to capture the essence of complex data and represent it in a simplified form. By exploring this hidden structure, we can generate new data points that are both novel and meaningful.

Latent variables open up a world of possibilities for creative applications, from generating new images to creating entirely new forms of media.

The Future of Generative Models

As GANs and VAEs continue to evolve, we can expect even more exciting developments in the world of AI-generated content. Researchers are constantly finding ways to improve these models, making them more stable, efficient, and capable of generating higher-quality data.

From realistic virtual environments to AI-assisted creativity, the potential applications for generative models are endless. The magic behind these models, and the latent variables that power them, will continue to unlock new possibilities across industries.

Further Reading

- NVIDIA Research: Generative Models. NVIDIA’s research hub offers insights into cutting-edge generative model advancements, including applications in graphics, deep learning, and gaming.

- A Friendly Introduction to VAEs and GANs. This paper provides an approachable introduction to both VAEs and GANs, detailing their architecture, differences, and potential real-world applications.

- Machine Learning Mastery: How to Develop GAN Models. A step-by-step guide by Machine Learning Mastery that explains how to build GAN models from scratch, with clear Python code examples.

Latent variables and generative models are indeed magical, offering a glimpse into a future where machines can create, imagine, and innovate right alongside humans. The only limit is our imagination.

FAQs

How do GANs and VAEs differ?

While both GANs and VAEs are generative models, they differ in how they generate data. GANs use an adversarial process with two competing networks, while VAEs rely on a probabilistic framework to encode data into a continuous latent space. GANs usually generate sharper images, while VAEs provide smoother transitions between generated data.

What are some practical applications of GANs?

GANs are widely used in image and video generation, deepfakes, art creation, and even in fields like fashion and product design. They’re also making headway in medical imaging, helping to generate synthetic data for research and training purposes.

Why are VAEs useful for data generation?

VAEs are particularly useful because they create smooth, interpretable latent spaces that allow for meaningful transitions between data points. This makes them great for tasks like image reconstruction, data compression, and generating structured, varied data.

What makes training GANs challenging?

Training GANs can be difficult because the generator and discriminator networks are in constant competition. This adversarial relationship can lead to instability in the training process, making it hard to achieve equilibrium where both networks perform optimally.

How do latent variables help in data generation?

Latent variables capture the essential, hidden features of complex data in a simplified form. By learning these latent representations, generative models can create new, realistic data points by sampling from this latent space. This process allows for efficient data generation, even in high-dimensional datasets like images or audio.

What are some common challenges with VAEs?

One common challenge with VAEs is that they tend to produce blurry images compared to GANs. This is commonly attributed to the pixel-wise reconstruction loss, which rewards an average over plausible outputs, combined with the regularization that keeps the latent space smooth and continuous. Additionally, balancing the trade-off between reconstruction accuracy and latent space regularization can be tricky during training.
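This trade-off is usually written as a two-term training objective. The form below is a sketch in conventional notation that does not appear elsewhere in this article: q(z|x) is the encoder, p(x|z) is the decoder, p(z) is the prior, and beta is a weight on the regularization term. Setting beta to 1 gives the standard negative evidence lower bound (ELBO).

```latex
\mathcal{L}(x) =
  \underbrace{\mathbb{E}_{q_\phi(z \mid x)}\left[-\log p_\theta(x \mid z)\right]}_{\text{reconstruction}}
  + \beta \,
  \underbrace{D_{\mathrm{KL}}\left(q_\phi(z \mid x) \,\|\, p(z)\right)}_{\text{latent regularization}}
```

Raising beta favors a smoother, more regular latent space at the cost of reconstruction detail, and lowering it does the opposite.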

Can GANs and VAEs be combined?

Yes, researchers sometimes combine elements of both GANs and VAEs to leverage their strengths. This hybrid approach, often called a VAE-GAN, uses the VAE’s structured latent space with the GAN’s ability to generate sharp, high-quality images. The result is a model that balances realism with interpretability.

Are there alternatives to GANs and VAEs for generative tasks?

Yes, there are other generative models such as Normalizing Flows, Autoregressive Models (like PixelCNN), and Energy-Based Models. Each of these models has its own strengths and trade-offs, depending on the specific application or type of data you want to generate.

What industries benefit most from generative models?

Generative models are impacting numerous industries, including entertainment (creating digital content), healthcare (medical imaging and drug discovery), fashion (design automation), and even marketing (generating personalized ads). The ability to generate new data is opening doors for innovation across various fields.