Class imbalance is a common issue in many machine learning problems, and it often leads to biased models. With K-Nearest Neighbors (KNN), this problem can result in underrepresented classes being overlooked, as KNN assigns class labels based on the majority class within its neighborhood.

But don’t worry—there are effective ways to handle this! Here, we’ll cover the core techniques to achieve fairer, more balanced classification with KNN.

Understanding the Challenges of Imbalanced Classes in KNN

Why Class Imbalance Affects KNN

In an ideal dataset, each class has roughly the same number of instances. However, class imbalance arises when one class significantly outnumbers others, which can skew predictions. In KNN, this problem becomes evident when a model consistently predicts the majority class over the minority class simply due to proximity to a larger number of majority-class neighbors.

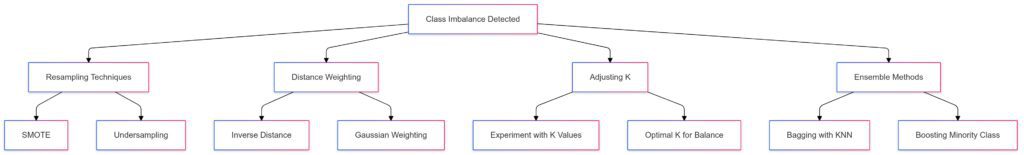

Techniques covered in this article: resampling (SMOTE, undersampling), distance weighting (inverse distance, Gaussian weighting), adjusting K (experimenting with K values), and ensemble methods (bagging and boosting).

For instance, in a binary classification scenario, if a rare class only makes up 10% of the data, KNN might fail to correctly identify it because the algorithm is swayed by the majority class in most neighborhoods.
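To make this concrete, here is a minimal sketch in plain Python. The one-dimensional data is made up (27 majority points and 3 minority points, so the rare class is 10% of the data), and the helper `knn_predict` is a hypothetical name used only for illustration.

```python
from collections import Counter

def knn_predict(train, query, k):
    """Plain KNN on 1-D points: unweighted majority vote among the k nearest."""
    nearest = sorted(train, key=lambda item: abs(item[0] - query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy data (assumed for illustration): majority points every 0.5 units,
# plus a small minority cluster around 4.8.
majority = [(i * 0.5, "majority") for i in range(27)]
minority = [(4.7, "minority"), (4.8, "minority"), (4.9, "minority")]
train = majority + minority

query = 4.78  # sits inside the minority cluster
small_k = knn_predict(train, query, k=3)  # only the three minority points vote
large_k = knn_predict(train, query, k=7)  # four majority points join the vote
print(small_k, large_k)
```

With `k=3` the three nearest points are all minority, so the query is labeled correctly. With `k=7` the neighborhood has to reach out to four majority points, which outvote the three minority points even though the minority points are closer.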

Real-Life Implications of Imbalanced Classification

The consequences of unbalanced KNN classification are especially serious in fields like medical diagnostics or fraud detection, where minority classes often represent critical outcomes. Misclassifying minority instances could lead to serious repercussions, such as missed diagnoses or security threats. Addressing this imbalance ensures the model performs well across all classes, enhancing fairness and reliability.

Resampling Techniques for KNN with Imbalanced Classes

1. Oversampling the Minority Class

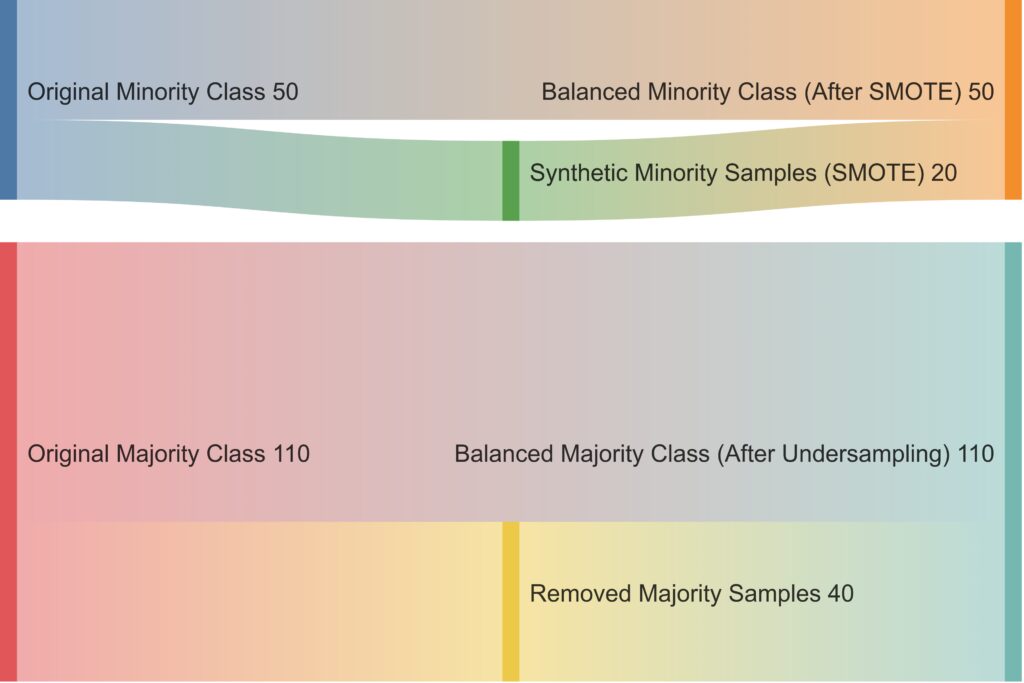

Oversampling involves creating synthetic instances of the minority class to balance the dataset. One of the most common methods is Synthetic Minority Over-sampling Technique (SMOTE), which generates synthetic samples by interpolating between existing minority class instances.

- How it Works: SMOTE takes pairs of neighboring instances within the minority class and creates a new instance somewhere between them. This artificially increases the presence of the minority class, making it easier for KNN to find minority class neighbors.

- Considerations: While effective, oversampling can lead to overfitting. To mitigate this, limit the number of synthetic samples or use SMOTE combined with undersampling of the majority class.
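The interpolation step described above can be sketched in a few lines of plain Python. This is a simplified illustration rather than a reference implementation: the function name `smote_like`, the neighbor count `k=2`, and the sample points are all assumptions. In practice, libraries such as imbalanced-learn provide a tested SMOTE implementation.

```python
import random

def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points, each placed on the segment between a
    minority point and one of its k nearest minority neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority points by squared distance to the base point.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(p, base)))
        neighbor = rng.choice(others[:k])
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, neighbor)))
    return synthetic

# Hypothetical minority samples with two features each.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_like(minority, n_new=6)
print(new_points)
```

Because every synthetic point lies between two real minority points, the new samples stay inside the region the minority class already occupies. This is also why generating too many of them can make the model overconfident about that region.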

SMOTE: Generates synthetic samples for the minority class to reach balance.

Undersampling: Removes samples from the majority class to achieve similar balance.

2. Undersampling the Majority Class

As the opposite of oversampling, undersampling reduces the majority class instances to balance the dataset. By removing samples from the majority class, this approach prevents KNN from being overwhelmed by the majority, enabling fairer minority class representation.

- How it Works: The algorithm randomly removes majority class instances, aiming to reduce its influence in KNN’s neighborhood.

- Pros and Cons: Undersampling avoids the overfitting risk that comes with duplicated or synthetic samples, but it risks discarding potentially valuable data. It’s best suited for cases where the dataset is large enough that removing samples won’t cause significant information loss.
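Random undersampling is simple enough to sketch directly. The function name `random_undersample` and the 90/10 toy labels below are assumptions made for illustration; the sketch keeps as many samples per class as the smallest class has.

```python
import random

def random_undersample(samples, labels, seed=0):
    """Randomly drop samples so every class keeps as many as the smallest class."""
    rng = random.Random(seed)
    by_class = {}
    for x, y in zip(samples, labels):
        by_class.setdefault(y, []).append(x)
    target = min(len(xs) for xs in by_class.values())
    kept_samples, kept_labels = [], []
    for y, xs in by_class.items():
        for x in rng.sample(xs, target):  # sampling without replacement
            kept_samples.append(x)
            kept_labels.append(y)
    return kept_samples, kept_labels

# Toy data: 90 majority samples and 10 minority samples.
samples = list(range(100))
labels = ["majority"] * 90 + ["minority"] * 10
X_bal, y_bal = random_undersample(samples, labels)
print(len(X_bal), y_bal.count("majority"), y_bal.count("minority"))
```

In this toy case 80 of the 90 majority samples are thrown away, which illustrates the information-loss trade-off mentioned above.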

Distance Weighting Techniques for Fairer Classification

1. Weighted Voting with Inverse Distance

In KNN, each neighbor is traditionally given an equal vote regardless of its distance. By assigning weights to neighbors based on their distance to the query point, distance weighting helps mitigate the effect of class imbalance.

- How it Works: Closer neighbors receive higher weights in the vote, meaning their class label has a stronger influence on the prediction. This approach lessens the effect of distant majority class neighbors swaying the classification.

- Practical Tip: Use an inverse distance weighting formula, such as 1/distance, so that closer neighbors contribute more to the classification.
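The sketch below compares an unweighted vote with a 1/distance vote on made-up one-dimensional data. The helper `knn_vote`, its `weighting` argument, and the small `eps` term (which avoids division by zero) are illustrative assumptions. In scikit-learn, `KNeighborsClassifier(weights="distance")` offers the same kind of weighting.

```python
from collections import defaultdict

def knn_vote(train, query, k, weighting="uniform", eps=1e-9):
    """KNN on 1-D points with either equal votes or 1/distance votes."""
    nearest = sorted(train, key=lambda item: abs(item[0] - query))[:k]
    scores = defaultdict(float)
    for x, label in nearest:
        distance = abs(x - query)
        # eps keeps the weight finite if the query coincides with a training point.
        scores[label] += 1.0 / (distance + eps) if weighting == "distance" else 1.0
    return max(scores, key=scores.get)

# Toy data (assumed): majority points every 0.5 units, minority cluster near 4.8.
train = [(i * 0.5, "majority") for i in range(27)]
train += [(4.7, "minority"), (4.8, "minority"), (4.9, "minority")]

query = 4.78
uniform = knn_vote(train, query, k=7, weighting="uniform")
weighted = knn_vote(train, query, k=7, weighting="distance")
print(uniform, weighted)
```

With `k=7` the unweighted vote is four majority points against three minority points, so the majority wins. With 1/distance weights the three minority points, all within 0.12 of the query, outweigh the four majority points, which are at least 0.22 away.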

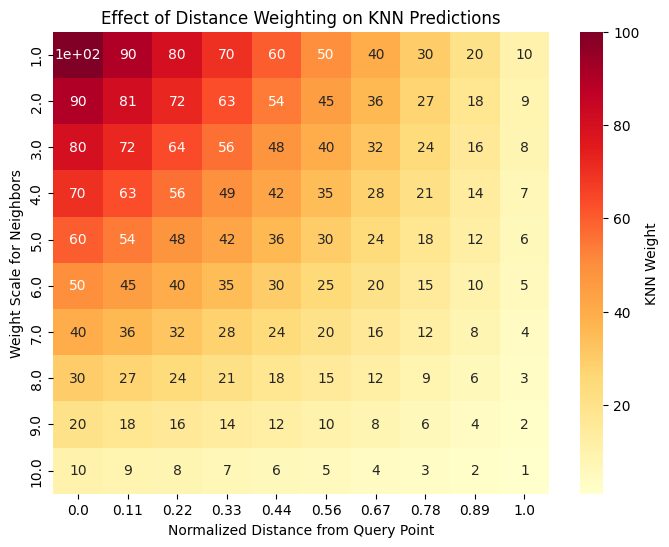

X-axis: Represents the normalized distance from the query point (closer to 0 means closer to the query).

Y-axis: Displays weight scale for neighbors in KNN, with higher values indicating stronger weighting.

Color Intensity: Warmer colors indicate stronger weights for closer neighbors.

2. Adjusting K for Optimal Performance

The choice of K (the number of neighbors considered) can impact how well KNN handles imbalanced classes. Smaller values of K tend to reflect the local distribution, which can be beneficial for detecting minority classes. Conversely, larger K values dilute the minority influence.

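- Selecting K: Experiment with different values of K using cross-validation. Smaller K values often help, as they allow minority classes to influence classification more effectively in imbalanced datasets. A very small K also makes predictions more sensitive to noisy points, so compare candidates on a minority-focused metric such as recall or F1 rather than assuming the smallest K is best.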

- Cross-Validation for Consistency: To avoid overfitting or under-representation, use stratified cross-validation when tuning K. This ensures each fold reflects the original class distribution, giving a fair measure of K’s effectiveness.
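As a rough sketch of this tuning loop, the code below builds stratified folds by hand and reports minority recall for several values of K. The synthetic Gaussian data, the function names, and the choice of minority recall as the score are all assumptions made for illustration. With scikit-learn, `StratifiedKFold` does the fold assignment for you.

```python
import random
from collections import Counter

def stratified_folds(labels, n_folds, seed=0):
    """Assign indices to folds so each fold keeps roughly the original class ratio."""
    rng = random.Random(seed)
    by_class = {}
    for i, y in enumerate(labels):
        by_class.setdefault(y, []).append(i)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, i in enumerate(indices):
            folds[pos % n_folds].append(i)  # deal each class out round-robin
    return folds

def knn_predict(X, y, query, k):
    """Unweighted KNN vote on 1-D data."""
    nearest = sorted(range(len(X)), key=lambda i: abs(X[i] - query))[:k]
    return Counter(y[i] for i in nearest).most_common(1)[0][0]

def minority_recall_cv(X, y, k, folds, minority="minority"):
    """Fraction of held-out minority samples that are predicted as minority."""
    hits = total = 0
    for fold in folds:
        held_out = set(fold)
        train_X = [X[i] for i in range(len(X)) if i not in held_out]
        train_y = [y[i] for i in range(len(X)) if i not in held_out]
        for i in fold:
            if y[i] == minority:
                total += 1
                hits += knn_predict(train_X, train_y, X[i], k) == minority
    return hits / total

# Synthetic 1-D data (assumed): 90 majority and 10 minority samples.
rng = random.Random(1)
X = [rng.gauss(0.0, 1.0) for _ in range(90)] + [rng.gauss(2.5, 0.5) for _ in range(10)]
y = ["majority"] * 90 + ["minority"] * 10

folds = stratified_folds(y, n_folds=5)
for k in (1, 3, 5, 9, 15):
    print(k, round(minority_recall_cv(X, y, k, folds), 2))
```

Each of the five folds contains 18 majority and 2 minority samples, matching the 90/10 ratio of the full dataset. Minority recall is only one possible score; F1 or another metric that also penalizes false alarms may be a better selection criterion.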

Combining KNN with Ensemble Methods

1. Bagging with Randomized KNN

Bagging (Bootstrap Aggregating) involves training multiple KNN models on random bootstrap samples of the data, then combining their predictions by voting or averaging. This technique, referred to here as Randomized KNN, can be useful for imbalanced datasets, as each model may capture a different subset of minority class instances.

- How it Works: Each KNN model is trained on a different subset. A plain bootstrap sample keeps roughly the original class ratio, so a balanced representation is most likely when each subset is drawn with equal numbers from each class. The ensemble then combines the models' predictions, improving the likelihood of minority class recognition.

- Advantages: The models are independent, so they can be trained and queried in parallel, and combining them reduces the risk of overfitting. Keep in mind that prediction cost grows with the number of models. Bagging also improves model stability, which helps with noisy or complex datasets.
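Here is a minimal sketch of bagged KNN. It uses a balanced bootstrap, meaning each model draws the same number of samples from every class. That is an assumption that goes beyond plain bagging, chosen here to show how a subset can end up class-balanced. The function names, `per_class=5`, and the toy data are illustrative.

```python
import random
from collections import Counter

def knn_predict(train, query, k):
    """Unweighted KNN vote on 1-D points."""
    nearest = sorted(train, key=lambda item: abs(item[0] - query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def balanced_bootstrap(train, per_class, rng):
    """Draw per_class samples with replacement from each class."""
    by_class = {}
    for item in train:
        by_class.setdefault(item[1], []).append(item)
    sample = []
    for items in by_class.values():
        sample.extend(rng.choices(items, k=per_class))
    return sample

def bagged_knn_predict(train, query, k, n_models=25, per_class=5, seed=0):
    """Majority vote over KNN models, each fit on its own balanced bootstrap."""
    rng = random.Random(seed)
    votes = Counter(
        knn_predict(balanced_bootstrap(train, per_class, rng), query, k)
        for _ in range(n_models)
    )
    return votes.most_common(1)[0][0]

# Toy data (assumed): majority points every 0.5 units, minority cluster near 4.8.
train = [(i * 0.5, "majority") for i in range(27)]
train += [(4.7, "minority"), (4.8, "minority"), (4.9, "minority")]

single = knn_predict(train, 4.78, k=7)
bagged = bagged_knn_predict(train, 4.78, k=7)
print(single, bagged)
```

On this toy data a single KNN with `k=7` predicts the majority class for the query, while the balanced ensemble predicts the minority class, because every bootstrap contains five minority draws that all lie closer to the query than any majority point.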

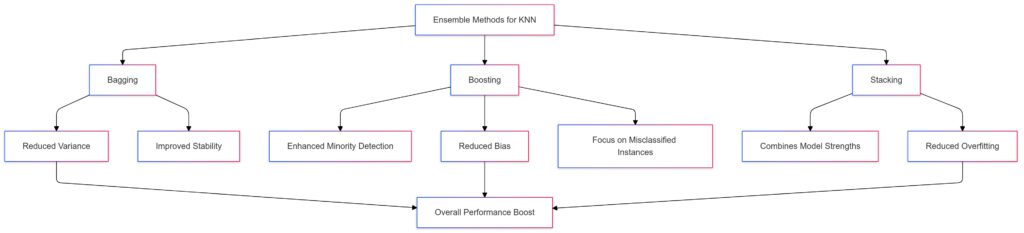

Bagging: Leads to reduced variance and improved stability.

Boosting: Enhances minority detection, reduces bias, and focuses on misclassified instances.

Stacking: Combines model strengths and reduces overfitting.

2. Boosting with KNN for Improved Minority Class Detection

Boosting is an ensemble method where models are trained sequentially, each one focusing more on instances misclassified by previous models. Boosted KNN applies this by training new KNN models to correct errors, which often come from minority class misclassification.

- How it Works: In each iteration, the boosting algorithm emphasizes samples that were previously misclassified, which often belong to the minority class. The resulting model gives more balanced predictions.

- Use Cases: Boosted KNN is effective in high-stakes scenarios, such as detecting fraudulent transactions or predicting rare diseases, as it improves accuracy for critical minority instances.
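The "emphasize previously misclassified samples" step can be illustrated with an AdaBoost-style weight update. The article does not name a specific boosting algorithm, so AdaBoost is an assumption here, as is the function name `adaboost_reweight`. Since KNN has no training loss to weight, the updated weights would typically drive resampling of the training set or weighting of neighbor votes in the next round.

```python
import math

def adaboost_reweight(weights, misclassified):
    """One AdaBoost-style round: raise the weight of samples the current
    model got wrong, lower the rest, then renormalize to sum to 1."""
    error = sum(w for w, wrong in zip(weights, misclassified) if wrong)
    error = min(max(error, 1e-10), 1 - 1e-10)  # keep the logarithm finite
    alpha = 0.5 * math.log((1 - error) / error)  # this model's say in the final vote
    updated = [
        w * math.exp(alpha if wrong else -alpha)
        for w, wrong in zip(weights, misclassified)
    ]
    total = sum(updated)
    return [w / total for w in updated], alpha

# Ten samples with equal starting weights; the model missed only the last one,
# which we assume is the single minority sample.
weights = [0.1] * 10
misclassified = [False] * 9 + [True]
new_weights, alpha = adaboost_reweight(weights, misclassified)
print([round(w, 3) for w in new_weights], round(alpha, 3))
```

After one round the missed sample carries half of the total weight, so the next model in the sequence is pushed to get it right.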

Data Preprocessing Strategies for Balanced KNN Classification

1. Feature Scaling for Balanced Neighborhoods

In KNN, distance metrics are sensitive to feature scaling, which can affect classification accuracy, especially in imbalanced datasets. Scaling features to a similar range ensures that no feature unduly influences distance calculations, resulting in fairer classifications.

- Scaling Options: Min-max scaling and z-score normalization are commonly used methods. Min-max scaling maps feature values to a [0,1] range, while z-score normalization rescales each feature to a mean of 0 and a standard deviation of 1.

- Why It Matters: Proper scaling ensures that minority class neighbors aren’t marginalized in the distance calculation, making it easier for KNN to recognize minority instances.
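The sketch below shows how scaling can change which point counts as the nearest neighbor. The income and age values are hypothetical, and the helper names are illustrative.

```python
import math
import statistics

def min_max_scale(column):
    """Map values linearly onto [0, 1]."""
    lo, hi = min(column), max(column)
    return [(v - lo) / (hi - lo) for v in column]

def z_score_scale(column):
    """Rescale to mean 0 and (population) standard deviation 1."""
    mean = statistics.fmean(column)
    std = statistics.pstdev(column)
    return [(v - mean) / std for v in column]

def nearest_to_first(f1, f2):
    """Return 1 or 2: which of those points is closer to point 0."""
    def dist(i):
        return math.hypot(f1[i] - f1[0], f2[i] - f2[0])
    return min((1, 2), key=dist)

# Hypothetical features: income in dollars and age in years.
income = [30_000.0, 32_000.0, 40_000.0, 100_000.0]
age = [25.0, 60.0, 27.0, 45.0]

raw = nearest_to_first(income, age)
scaled = nearest_to_first(min_max_scale(income), min_max_scale(age))
zscored = nearest_to_first(z_score_scale(income), z_score_scale(age))
print(raw, scaled, zscored)
```

On the raw values, income dominates the distance, so point 1 (similar income, very different age) looks closest to point 0. After either kind of scaling, point 2 (similar age, moderately different income) becomes the nearest neighbor.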

2. Dimensionality Reduction to Enhance Minority Recognition

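In high-dimensional datasets, dimensionality reduction methods like Principal Component Analysis (PCA) can help by projecting the data onto a smaller number of components that retain most of its variance. t-SNE is mainly useful for visualizing class structure, since it does not readily map new points into the reduced space. Reducing dimensionality can improve KNN performance, particularly with imbalanced data, by making distances more meaningful and class boundaries clearer.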

- How it Works: By reducing irrelevant or redundant features, KNN can better distinguish between classes, giving minority classes a more defined presence.

- Considerations: Apply dimensionality reduction carefully, as excessive reduction can lead to loss of important information. Ideally, balance dimensionality reduction with cross-validation to achieve the best results.

Conclusion: Crafting a Balanced KNN Model for Fairer Predictions

Balancing classes for KNN classification isn’t just a technical improvement; it’s essential for creating fair, reliable models. By combining resampling techniques, distance weighting, ensemble methods, and proper data preprocessing, you can ensure that each class receives fair consideration in KNN predictions.

FAQs

| Challenge | Solution Technique | Expected Outcome |
|---|---|---|
| Class Imbalance | Resampling Methods (e.g., SMOTE, Undersampling) | Balances class distribution, enhancing minority class detection and fairness. |
| Impact of Distance on Bias | Distance Weighting (e.g., inverse distance) | Gives more weight to closer neighbors, reducing bias in predictions for minority classes. |
| Feature Scale Differences | Feature Scaling (e.g., Min-Max, Standard Scaling) | Ensures fair comparison by normalizing features, leading to more balanced neighbor influence. |
| Model Evaluation for Imbalance | Custom Evaluation Metrics (e.g., F1 Score, ROC-AUC) | Provides a balanced assessment by focusing on both precision and recall, improving fairness in performance measures. |

Explanation of Each Technique:

- Resampling Methods: Balances classes, improving model fairness by ensuring the minority class is better represented.

- Distance Weighting: Adjusts influence of neighbors based on proximity, helping reduce bias.

- Feature Scaling: Normalizes features, avoiding issues from scale disparities, leading to balanced neighbor impact.

- Custom Evaluation Metrics: Focuses on balanced metrics for precision and recall, providing a fair evaluation of model performance.

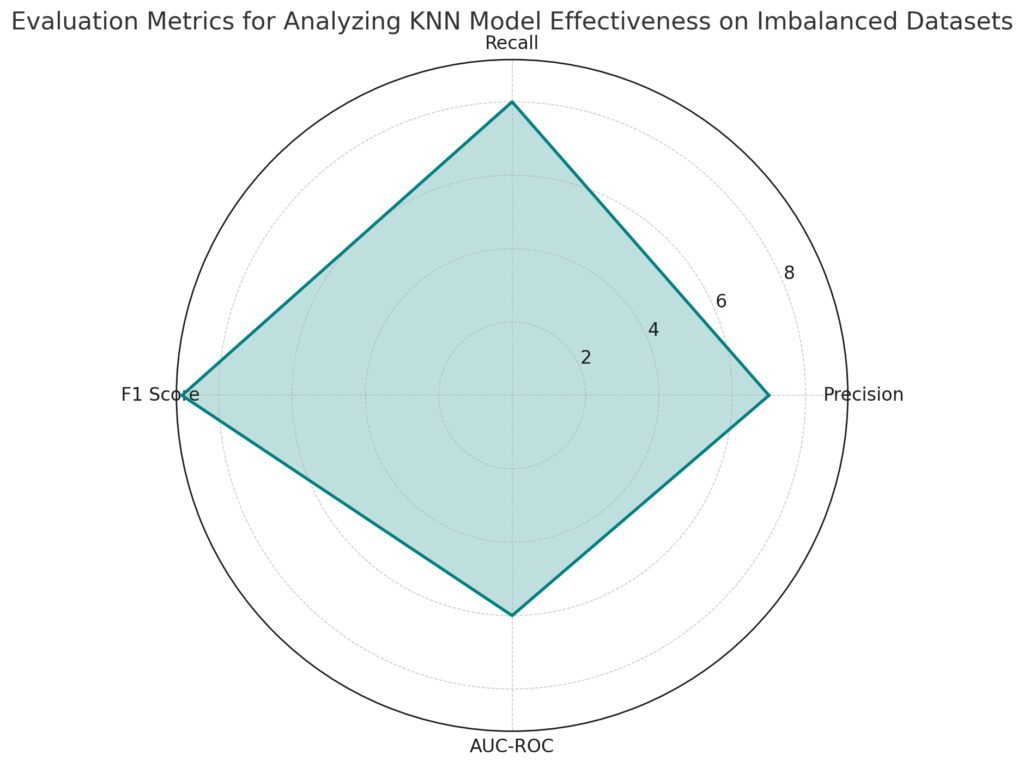

Axes: Each axis represents a distinct evaluation metric—Precision, Recall, F1 Score, and AUC-ROC.

Importance Scores: Simulated scores to highlight each metric’s role in evaluating model effectiveness, with higher values indicating greater importance.

What is SMOTE, and how does it work for KNN?

SMOTE (Synthetic Minority Over-sampling Technique) creates synthetic samples of the minority class by interpolating between instances. This method helps KNN find more balanced neighbors, which can improve classification fairness for minority classes.

Is undersampling a safe technique for KNN with imbalanced data?

Undersampling can help, but it risks losing important majority class information. It works best when the dataset is large enough to support the removal of some majority class instances without impacting model accuracy.

Can adjusting K improve fairness in KNN classification?

Yes! Selecting a smaller K (fewer neighbors) can often improve minority class recognition, as it reflects the local distribution more closely. Cross-validation is essential when tuning K to avoid overfitting.

How does distance weighting help with imbalanced data in KNN?

Distance weighting assigns greater importance to closer neighbors, making predictions more influenced by local instances rather than distant, majority-class neighbors. This can lead to more accurate classifications for minority classes in imbalanced datasets.

Are ensemble methods effective for KNN with imbalanced classes?

Yes, ensemble methods like bagging and boosting improve performance with imbalanced classes. Bagging with KNN can capture a diverse set of minority instances, while boosting emphasizes hard-to-classify samples, often those in minority classes, resulting in more balanced predictions.

Why is feature scaling important when handling imbalance in KNN?

In KNN, distance calculations rely on feature scales, so scaling features ensures that no single feature disproportionately influences the neighbors’ distances. Min-max scaling or z-score normalization puts all features on a comparable footing, so proximity to minority instances is not masked by one large-valued feature.

Can dimensionality reduction help improve KNN performance on imbalanced datasets?

Dimensionality reduction (e.g., PCA or t-SNE) can improve KNN’s accuracy by reducing noise and focusing on features most relevant to classification. This can make class boundaries more distinct, helping KNN detect minority classes more accurately in imbalanced datasets.

How does imbalanced data affect KNN’s accuracy in real-world applications?

In real-world applications like medical diagnostics or fraud detection, where minority classes represent critical outcomes, class imbalance can lead to misclassification. KNN may overlook rare but crucial cases, which could result in missed diagnoses or undetected fraud.

Is oversampling better than undersampling for KNN?

Oversampling generally retains all the original data and generates synthetic examples of the minority class, which can improve KNN performance without losing valuable information. However, it can lead to overfitting, especially in small datasets. Undersampling is effective for large datasets but risks data loss and is generally used with caution.

How do I decide between SMOTE and other oversampling techniques for KNN?

SMOTE is one of the most popular oversampling techniques due to its ability to create diverse, synthetic minority samples by interpolating between nearby instances. Other techniques, like random oversampling, simply duplicate samples, which can lead to overfitting. Choose SMOTE when you need a balanced dataset with minimal data redundancy.

How do I know if KNN is the right algorithm for imbalanced data?

KNN works well with smaller datasets and those with clear class boundaries. For severely imbalanced data, you may need to apply distance weighting, resampling, or ensemble methods. If these adjustments still don’t improve performance, you may want to try algorithms that support class weighting, such as Random Forest or SVM.

What cross-validation techniques work best for imbalanced KNN models?

Stratified cross-validation is ideal for imbalanced datasets, as it ensures each fold has the same class distribution as the original dataset. This helps evaluate KNN performance accurately by maintaining the imbalance ratio across training and validation sets.

Can combining KNN with other algorithms improve fairness in imbalanced datasets?

Yes, combining KNN with other algorithms, such as in hybrid ensemble methods, can improve fairness. For example, KNN can be used with Random Forest or Logistic Regression in a stacked model to balance predictions across classes, especially for datasets with complex patterns or severe imbalance.

What are the best practices for setting distance metrics in imbalanced KNN?

For imbalanced datasets, Euclidean or Manhattan distances (both special cases of the Minkowski distance) are common but might not be optimal. Experiment with other Minkowski orders, or with Mahalanobis distance, which accounts for feature correlations. Selecting a metric that reflects the dataset’s underlying distribution can enhance minority class detection in KNN.

How does boosting KNN handle class imbalance better than standard KNN?

In boosting, each subsequent KNN model places more focus on misclassified instances, which are often minority class instances in imbalanced datasets. This sequential emphasis allows boosted KNN to iteratively improve minority class recognition, offering more balanced predictions than a single KNN model would.

Can KNN handle multi-class imbalanced data effectively?

Yes, but it requires some adjustments. With multi-class imbalanced data, KNN may struggle more, because several small classes can each be outvoted by a dominant class. Oversampling each minority class with SMOTE, or using distance-weighted KNN, can improve accuracy across all classes, but you may need to tune the resampling ratio for each class.

Does KNN work well for high-dimensional imbalanced datasets?

In high-dimensional data, KNN may face the curse of dimensionality, where distances between points become less meaningful, especially in imbalanced settings. Dimensionality reduction techniques like PCA can help focus on the most relevant features, enhancing the model’s performance and its ability to detect minority instances.

How can I prevent overfitting when using SMOTE with KNN?

Overfitting can be an issue if too many synthetic samples are generated, especially in small datasets. To avoid this, try SMOTE combined with undersampling of the majority class, or limit the number of synthetic samples to balance the data without oversaturating the minority class. Cross-validation can help monitor and prevent overfitting.

Can feature selection improve KNN’s performance on imbalanced datasets?

Feature selection is beneficial because it removes irrelevant or redundant features, which can help KNN focus on the features that are most predictive of each class. By reducing noise, feature selection can improve KNN’s accuracy on minority classes, especially if the minority instances are highly distinct in specific features.

How does KNN compare to decision tree-based methods for imbalanced data?

Decision trees and their ensembles (e.g., Random Forests) often handle class imbalance more effectively than standard KNN, as they can split based on class distribution at each node and are commonly combined with class weighting. However, KNN with proper distance weighting, resampling, and ensemble methods can be competitive for smaller datasets where neighborhood-based classification is advantageous.

Is it necessary to standardize or normalize data before using KNN on imbalanced datasets?

Yes, standardizing or normalizing data is essential for KNN, as it relies on distance metrics. Standardizing ensures all features contribute equally, preventing certain features from disproportionately influencing KNN’s classification decisions. This helps improve the model’s balance and fairness across classes.

When should I use ensemble methods like bagging with KNN on imbalanced data?

Bagging works well for large, imbalanced datasets where random sampling can help capture more minority class instances without fully replicating the dataset. Ensemble methods, like Bagged KNN or Randomized KNN, create multiple models, each trained on different data subsets. This can increase the chance of minority class representation and improve the overall model’s robustness.

What evaluation metrics should I use to assess KNN on imbalanced datasets?

Accuracy alone is not suitable for imbalanced datasets. Use metrics like F1 score, precision, recall, and AUC-ROC to evaluate KNN performance on imbalanced data, as these provide better insights into how well the model handles minority and majority classes.
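A short worked example shows why accuracy misleads here. The sketch assumes a 90/10 dataset and a model that always predicts the majority class. The function name `precision_recall_f1` is illustrative.

```python
def precision_recall_f1(y_true, y_pred, positive):
    """Precision, recall, and F1 for one class treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# A model that always predicts the majority class on a 90/10 dataset.
y_true = ["majority"] * 90 + ["minority"] * 10
y_pred = ["majority"] * 100

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = precision_recall_f1(y_true, y_pred, positive="minority")
print(accuracy, precision, recall, f1)
```

The model scores 0.9 accuracy while finding none of the minority cases: its minority precision, recall, and F1 are all 0.0.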
