The rise of machine learning operations (MLOps) alongside the trusted principles of DevOps has redefined software and model delivery pipelines. Integrating these two domains allows businesses to harness the full power of automation, scalability, and innovation. Let’s dive into how these disciplines harmonize and what it takes to achieve a seamless integration.

Understanding the Core: MLOps vs. DevOps

What is MLOps?

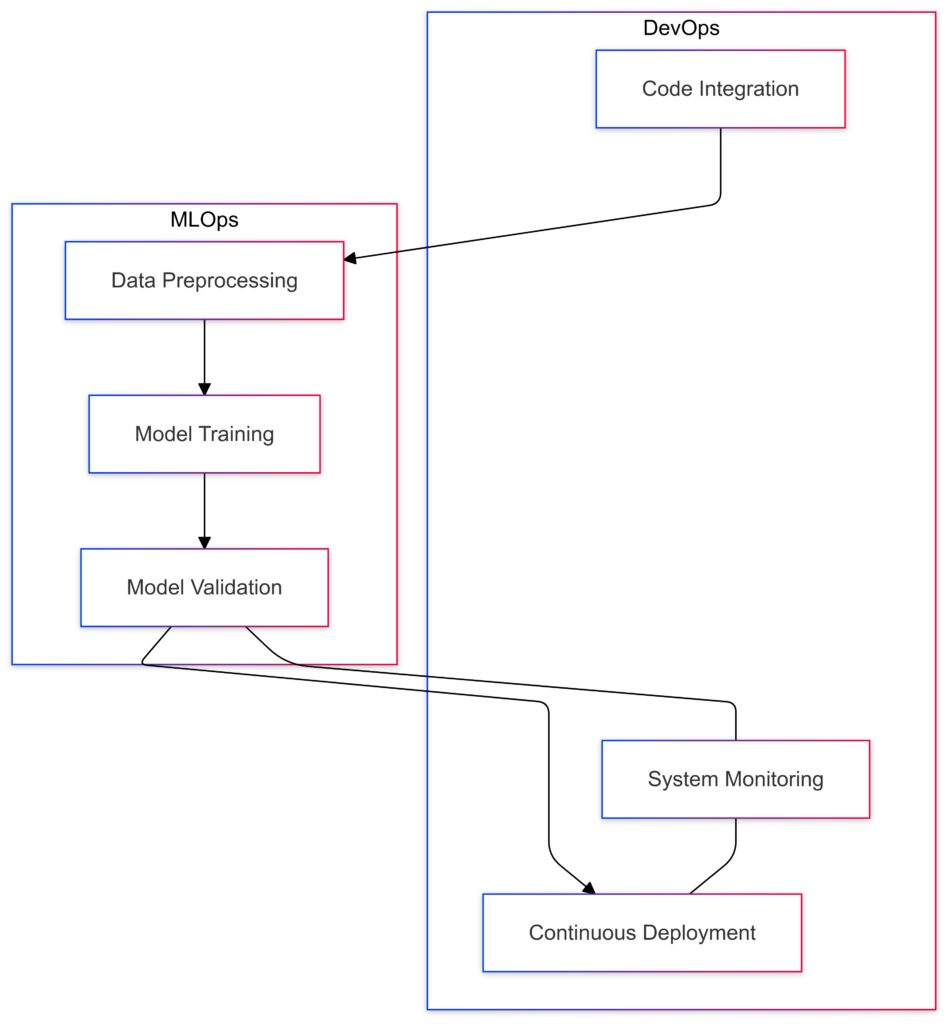

MLOps is the practice of operationalizing machine learning models. It ensures that data, models, and infrastructure work cohesively.

- It emphasizes automating the lifecycle of machine learning models, from training to deployment.

- Key components include data pipelines, version control for models, and monitoring for predictions.

Think of it as the glue that binds your ML experiments into real-world applications.

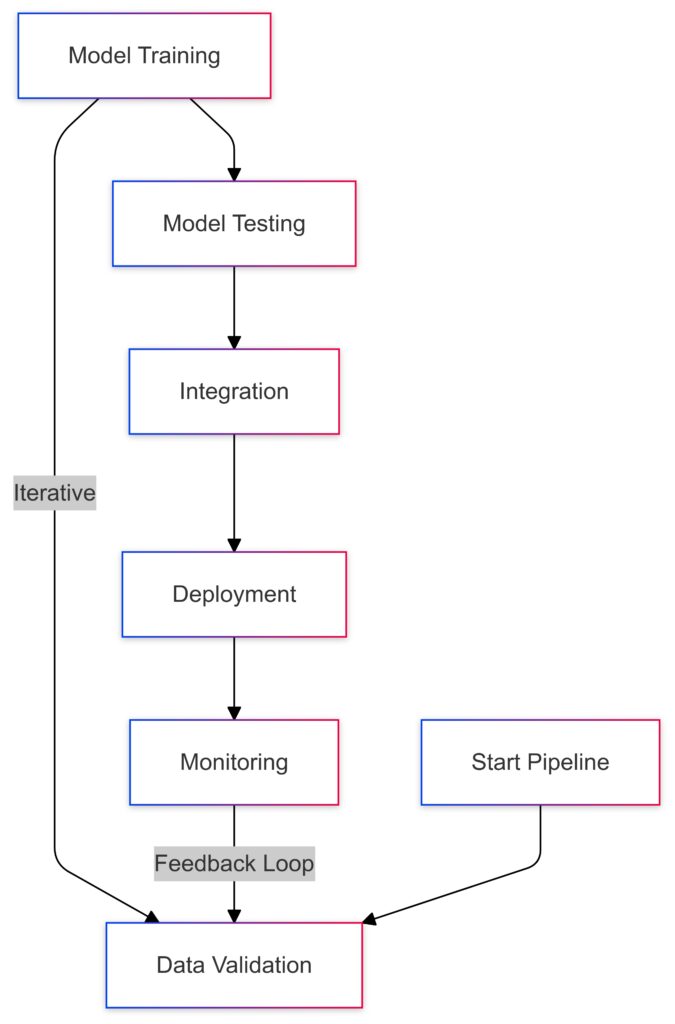

This diagram highlights the workflows in DevOps and MLOps, with their interconnections and shared practices, such as system monitoring and deployment pipelines.

The Philosophy of DevOps

DevOps revolutionized software development with its focus on collaboration, continuous integration (CI), and continuous delivery (CD).

- Developers and IT operations teams share tools, practices, and responsibility.

- Speed and reliability are the hallmarks of DevOps practices.

Together, these practices ensure that software changes are delivered rapidly and safely.

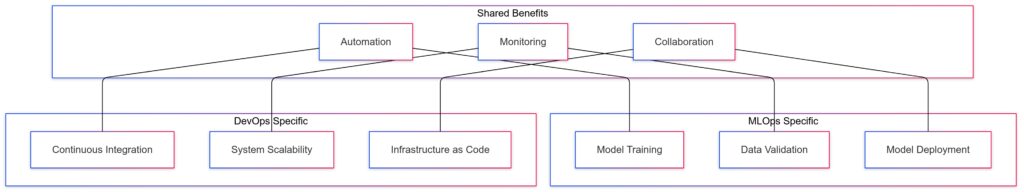

Overlapping Goals and Benefits

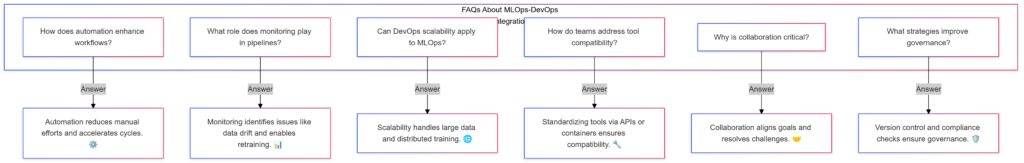

Shared Focus on Automation

Both DevOps and MLOps thrive on automation. While DevOps automates code deployments, MLOps extends this to include data preprocessing, model training, and even hyperparameter tuning.

- Automation reduces human error.

- It accelerates the time from development to production.

Unified Monitoring and Feedback Loops

In MLOps, monitoring covers model accuracy as well as infrastructure. Integrating DevOps’ monitoring tools with MLOps pipelines enables:

- Real-time insights into system performance.

- Faster remediation of model drift or prediction errors.

Collaborative Culture and Efficiency

Both practices foster collaboration across traditionally siloed teams. For example, MLOps bridges data scientists and engineers, while DevOps links developers and IT professionals. This shared ethos drives innovation.

Key Challenges in Harmonizing MLOps with DevOps

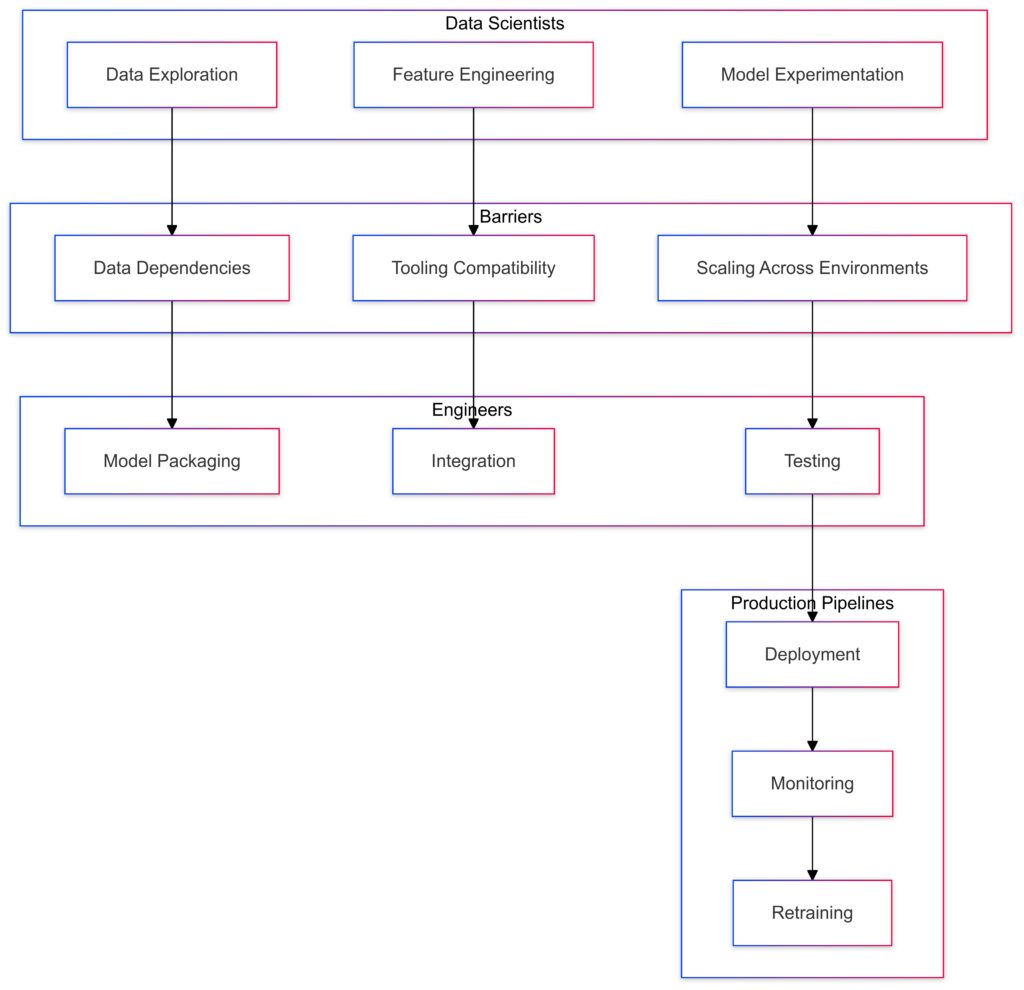

Complex Dependencies

Unlike traditional software, machine learning involves data dependencies and dynamic models that evolve over time.

- Handling these requires pipelines that track changes to data and models, not just to code.

- Versioning data and models adds complexity compared to simple source code control.
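To make the versioning point concrete, here is a minimal Python sketch of the idea behind tools like DVC: identify a dataset by a hash of its contents, then derive a model version from that data hash, the code commit, and the hyperparameters. The function names and the 12-character ID are illustrative assumptions, not DVC’s actual API or file format.

```python
import hashlib
import json
from pathlib import Path


def fingerprint_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks to bound memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def model_version_id(data_hash: str, code_commit: str, hyperparams: dict) -> str:
    """Derive an ID that changes whenever the data, code, or hyperparameters change."""
    # sort_keys=True makes the encoding independent of dict insertion order.
    payload = json.dumps(
        {"data": data_hash, "code": code_commit, "params": hyperparams},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]
```

Storing this ID alongside the model artifact means any deployed model can be traced back to the exact dataset and commit that produced it.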

Tooling Compatibility

The DevOps ecosystem relies heavily on tools like Jenkins, Docker, and Kubernetes. MLOps introduces additional tools, such as Kubeflow, MLflow, and TensorFlow Serving.

- Seamless integration requires careful planning.

- Teams must avoid tool sprawl to prevent inefficiencies.

Scaling Across Environments

Machine learning models often require computationally expensive environments for training. Balancing scalability and cost efficiency during deployment is critical.

Figure: The path from data scientists (data exploration, feature engineering, model experimentation) to engineers (model packaging, integration, testing) to production pipelines (deployment, monitoring, retraining), with data dependencies, tooling compatibility, and scaling across environments shown as barriers at each transition between roles.

Aligning Pipelines: Best Practices

Establish End-to-End Workflows

Creating an integrated CI/CD/CT (Continuous Training) pipeline ensures that:

- Data pipelines, model training, and deployments are synchronized.

- Models remain updated with fresh data and retraining cycles.
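A minimal, tool-agnostic sketch of such a pipeline in Python: stages run in order, pass artifacts forward, and an evaluation gate stops deployment if the retrained model underperforms. The stage names, the toy majority-class "model", and the `min_accuracy` threshold are hypothetical placeholders; in practice each stage would be a job in Jenkins, Airflow, Kubeflow Pipelines, or a similar orchestrator.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class PipelineRun:
    """Carries artifacts between stages and records the outcome of each stage."""
    artifacts: dict = field(default_factory=dict)
    log: list = field(default_factory=list)


Stage = Callable[[PipelineRun], bool]  # a stage returns False to halt the pipeline


def run_pipeline(stages: list, run: PipelineRun) -> bool:
    """Run (name, stage) pairs in order; stop at the first stage that fails."""
    for name, stage in stages:
        ok = stage(run)
        run.log.append((name, "ok" if ok else "failed"))
        if not ok:
            return False
    return True


def validate_data(run: PipelineRun) -> bool:
    rows = run.artifacts["rows"]
    return len(rows) > 0 and all(r["label"] in (0, 1) for r in rows)


def train(run: PipelineRun) -> bool:
    # Toy "model": always predict the majority label from the training rows.
    labels = [r["label"] for r in run.artifacts["rows"]]
    run.artifacts["model"] = int(sum(labels) * 2 >= len(labels))
    return True


def evaluate(run: PipelineRun) -> bool:
    # Gate: only continue to deployment if holdout accuracy meets the threshold.
    model, holdout = run.artifacts["model"], run.artifacts["holdout"]
    accuracy = sum(model == r["label"] for r in holdout) / len(holdout)
    run.artifacts["accuracy"] = accuracy
    return accuracy >= run.artifacts["min_accuracy"]


def deploy(run: PipelineRun) -> bool:
    run.artifacts["deployed"] = run.artifacts["model"]
    return True


STAGES = [("validate", validate_data), ("train", train), ("evaluate", evaluate), ("deploy", deploy)]
```

A continuous-training trigger (new data landing, a schedule, or a drift alert) would simply start a fresh `PipelineRun` with the latest rows.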

Leverage Containerization and Orchestration

Containers and orchestration frameworks simplify deploying machine learning models alongside traditional applications.

- Docker can package models with dependencies.

- Kubernetes can manage these containers at scale.
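Inside the container, the model mostly needs a process that loads it and answers HTTP requests. The sketch below uses only Python’s standard library (`wsgiref`) to expose a hypothetical `/predict` endpoint plus a `/healthz` endpoint that Kubernetes liveness and readiness probes could target. The linear "model", the paths, and port 8080 are assumptions; a production image would typically use a dedicated application server or a serving framework such as TensorFlow Serving.

```python
import json
from wsgiref.simple_server import make_server

# Hypothetical stand-in for a trained model baked into the image.
WEIGHTS = {"amount": 0.002, "num_items": 0.1}
BIAS = -1.0


def predict(features: dict) -> float:
    """Score one request; missing features default to 0 and extra keys are ignored."""
    return BIAS + sum(w * float(features.get(k, 0.0)) for k, w in WEIGHTS.items())


def app(environ, start_response):
    """WSGI app: GET /healthz for container probes, POST /predict for scoring."""
    path = environ.get("PATH_INFO", "/")
    method = environ.get("REQUEST_METHOD", "GET")
    if path == "/healthz" and method == "GET":
        status, body = "200 OK", {"status": "ok"}
    elif path == "/predict" and method == "POST":
        try:
            length = int(environ.get("CONTENT_LENGTH") or 0)
            features = json.loads(environ["wsgi.input"].read(length) or b"{}")
            status, body = "200 OK", {"score": predict(features)}
        except (ValueError, TypeError, AttributeError):
            # Malformed JSON, non-numeric values, or a non-object payload.
            status, body = "400 Bad Request", {"error": "invalid features"}
    else:
        status, body = "404 Not Found", {"error": "not found"}
    payload = json.dumps(body).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(payload)))])
    return [payload]


def serve(host: str = "0.0.0.0", port: int = 8080) -> None:
    """Run the development server; 0.0.0.0 makes it reachable from outside the container."""
    with make_server(host, port, app) as server:
        server.serve_forever()
```

Using `serve()` as the container’s entrypoint starts the server; a probe configured against `/healthz` on port 8080 then lets Kubernetes restart unhealthy replicas or hold traffic back from them.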

Unified CI/CD/CT pipeline: Connecting data, model training, and deployment for continuous improvement.

Adopt Unified Monitoring Frameworks

Using one monitoring stack, such as Prometheus for metrics collection with Grafana for dashboards, allows teams to track:

- System performance metrics.

- Model accuracy and latency.

Next Steps: Building the Bridge

The Role of Cross-Functional Teams

An essential step is forming cross-functional teams. MLOps requires data scientists, ML engineers, and operations teams to collaborate closely, and shared goals and a unified roadmap keep these groups aligned.

Establish Governance and Compliance

Compliance becomes crucial as more ML-driven decisions affect business outcomes. Integrating DevOps’ robust security practices with MLOps workflows ensures:

- Secure, compliant pipelines.

- Better accountability through audits and versioning.
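One way to support audits is to record every promotion, rollback, and approval in an append-only log. The sketch below, an illustrative technique rather than a feature of any particular MLOps tool, chains each entry to the hash of the previous one so that silently rewriting history becomes detectable. The event fields are hypothetical, and a real system would also persist entries to durable, access-controlled storage.

```python
import hashlib
import json
import time
from typing import Optional

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log in which each entry stores the hash of the previous one,
    so editing or deleting an earlier entry makes verify() fail."""

    def __init__(self) -> None:
        self.entries: list = []

    @staticmethod
    def _digest(event: dict, timestamp: float, prev_hash: str) -> str:
        body = json.dumps(
            {"event": event, "timestamp": timestamp, "prev_hash": prev_hash},
            sort_keys=True,
        )
        return hashlib.sha256(body.encode("utf-8")).hexdigest()

    def append(self, event: dict, timestamp: Optional[float] = None) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        ts = time.time() if timestamp is None else timestamp
        record = {
            "event": event,
            "timestamp": ts,
            "prev_hash": prev_hash,
            "hash": self._digest(event, ts, prev_hash),
        }
        self.entries.append(record)
        return record

    def verify(self) -> bool:
        """Recompute every hash and check each link back to the previous entry."""
        prev_hash = GENESIS
        for r in self.entries:
            if r["prev_hash"] != prev_hash:
                return False
            if r["hash"] != self._digest(r["event"], r["timestamp"], prev_hash):
                return False
            prev_hash = r["hash"]
        return True
```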

Strategies for Seamless MLOps-DevOps Integration

Achieving harmony between MLOps and DevOps requires practical strategies tailored to bridge their differences while enhancing their shared strengths. Here’s how organizations can effectively integrate these practices for maximum impact.

Create Unified CI/CD Pipelines for Code and Models

Continuous integration (CI) in MLOps includes not only code but also datasets and models. Developing unified pipelines ensures:

- Consistent code testing and model validation across the workflow.

- Smooth transitions from experimentation to production.

Key steps for unification:

- Extend CI/CD to handle data changes, and automatically retrain models when data pipelines update.

- Use tools like MLflow to track model performance and deployment history.
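Model validation can run as an ordinary CI step that fails the build when a candidate model regresses. A minimal sketch, assuming earlier pipeline steps have written the candidate’s and the production model’s metrics to JSON files; the metric names and tolerances are hypothetical, and with MLflow the baseline would more likely come from the tracking server than from a file.

```python
import json
import sys


def validation_gate(candidate: dict, baseline: dict, max_drop: dict) -> list:
    """Compare higher-is-better metrics against the production baseline.

    max_drop maps metric name -> largest tolerated decrease. Returns a list of
    human-readable failures; an empty list means the candidate may be promoted.
    """
    failures = []
    for metric, allowed in max_drop.items():
        if metric not in candidate:
            failures.append(f"{metric}: missing from candidate metrics")
            continue
        if metric not in baseline:
            continue  # no production value yet, so nothing to regress against
        drop = baseline[metric] - candidate[metric]
        if drop > allowed:
            failures.append(
                f"{metric}: {candidate[metric]:.4f} vs baseline {baseline[metric]:.4f} "
                f"(drop {drop:.4f} > allowed {allowed:.4f})"
            )
    return failures


def main(argv: list) -> int:
    """CI entry point: main(["candidate.json", "baseline.json"]) -> exit code."""
    with open(argv[0]) as f:
        candidate = json.load(f)
    with open(argv[1]) as f:
        baseline = json.load(f)
    problems = validation_gate(candidate, baseline, {"accuracy": 0.01, "recall": 0.02})
    for problem in problems:
        print("FAIL", problem, file=sys.stderr)
    # A non-zero exit code fails the CI step in Jenkins, GitHub Actions, and similar tools.
    return 1 if problems else 0
```

A CI job would call it through a small wrapper such as `sys.exit(main(sys.argv[1:]))`.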

Use Infrastructure as Code (IaC)

DevOps thrives on IaC principles, and MLOps benefits from adopting them as well. Define infrastructure configurations as code to ensure consistent and repeatable deployments.

- Provision environments for model training, testing, and production.

- Tools like Terraform and Ansible enable scalability and automated management.

Example use cases:

- Automating cloud resource provisioning for ML experiments.

- Deploying model-serving platforms with pre-configured environments.

Streamline Data Engineering Practices

Data pipelines are the lifeblood of machine learning models. A robust MLOps strategy requires streamlining data ingestion, preprocessing, and storage.

- Align DevOps’ CI/CD principles with MLOps by automating data pipeline checks and validations.

- Implement data versioning to ensure reproducibility and audit trails.
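As an illustration of an automated data check, the sketch below validates a batch of records against a small hand-written schema (types, nullability, value ranges) and returns every violation, so a CI job or an Airflow task could fail before bad data reaches training. The schema format and column names are hypothetical; dedicated data-validation libraries offer much richer checks.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class ColumnRule:
    """Expectations for one column; None for a bound means 'unbounded'."""
    dtype: Union[type, tuple]  # anything accepted by isinstance()
    nullable: bool = False
    min_value: Optional[float] = None
    max_value: Optional[float] = None


def validate_rows(rows: list, schema: dict) -> list:
    """Check each row (a dict) against the schema and return all violations."""
    errors = []
    for i, row in enumerate(rows):
        for col in sorted(schema.keys() - row.keys()):
            errors.append(f"row {i}: missing column {col!r}")
        for col, rule in schema.items():
            if col not in row:
                continue  # already reported as missing
            value = row[col]
            if value is None:
                if not rule.nullable:
                    errors.append(f"row {i}: {col!r} is null")
                continue
            if not isinstance(value, rule.dtype):
                errors.append(f"row {i}: {col!r} has wrong type {type(value).__name__}")
                continue
            if rule.min_value is not None and value < rule.min_value:
                errors.append(f"row {i}: {col!r}={value} below {rule.min_value}")
            if rule.max_value is not None and value > rule.max_value:
                errors.append(f"row {i}: {col!r}={value} above {rule.max_value}")
    return errors
```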

Best tools for the job:

- Apache Airflow for workflow orchestration.

- DVC (Data Version Control) for tracking datasets like code.

Embrace Feature Stores

Feature stores act as a central repository for storing and sharing machine learning features. They bridge gaps between data engineers, data scientists, and production teams.

- Promote feature reusability across projects.

- Enhance feature governance, ensuring alignment with compliance and quality standards.

Popular feature store platforms include Feast and Tecton, both designed to integrate with existing data pipelines.
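The core idea behind a feature store can be shown in a few lines: feature values are stored with the time they became known, so training jobs can ask for the value as of a past timestamp (avoiding leakage of future information) while online serving asks for the latest value from the same store. This toy in-memory class only illustrates point-in-time lookup; it is not the Feast or Tecton API.

```python
import bisect
from collections import defaultdict


class ToyFeatureStore:
    """In-memory illustration of point-in-time feature lookup (not a real feature store API)."""

    def __init__(self) -> None:
        # (entity_id, feature) -> timestamps kept sorted, with values in the same order
        self._times = defaultdict(list)
        self._values = defaultdict(list)

    def write(self, entity_id: str, feature: str, timestamp: float, value) -> None:
        """Record a value that became known at `timestamp` (writes may arrive out of order)."""
        key = (entity_id, feature)
        idx = bisect.bisect_right(self._times[key], timestamp)
        self._times[key].insert(idx, timestamp)
        self._values[key].insert(idx, value)

    def get_as_of(self, entity_id: str, feature: str, timestamp: float):
        """Training-time lookup: latest value known at or before `timestamp`, else None."""
        key = (entity_id, feature)
        idx = bisect.bisect_right(self._times.get(key, []), timestamp)
        return self._values[key][idx - 1] if idx > 0 else None

    def get_latest(self, entity_id: str, feature: str):
        """Serving-time lookup: most recent value, else None."""
        values = self._values.get((entity_id, feature), [])
        return values[-1] if values else None
```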

Automate Model Monitoring and Retraining

Continuous monitoring is critical for tracking model drift, prediction accuracy, and production performance. Automating these tasks reduces manual intervention while ensuring reliability.

- Use tools like Prometheus or Seldon Core for monitoring both infrastructure and model metrics.

- Trigger automated retraining workflows when significant model degradation is detected.
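A minimal sketch of such a trigger, assuming ground-truth labels eventually arrive for served predictions: accuracy is tracked over a sliding window, and a retraining signal fires when it drops below a threshold, with a cooldown so one incident does not launch a burst of retraining jobs. The window size, threshold, and cooldown are hypothetical and would need tuning; what a `True` result starts (an Airflow DAG run, a Kubeflow pipeline run, or similar) is left to the surrounding system.

```python
from collections import deque


class DegradationMonitor:
    """Sliding-window accuracy tracker that signals when retraining should start."""

    def __init__(self, window: int, threshold: float, cooldown: int) -> None:
        self.outcomes = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong
        self.threshold = threshold
        self.cooldown = cooldown                # observations to wait between triggers
        self._since_trigger = cooldown          # allow the very first trigger immediately

    def accuracy(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 1.0

    def record(self, prediction, actual) -> bool:
        """Record one labelled outcome; return True if retraining should start now."""
        self.outcomes.append(int(prediction == actual))
        self._since_trigger += 1
        window_full = len(self.outcomes) == self.outcomes.maxlen
        if window_full and self.accuracy() < self.threshold and self._since_trigger >= self.cooldown:
            self._since_trigger = 0
            return True
        return False
```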

Pro tip: Integrate alerts with collaboration platforms like Slack or Microsoft Teams for instant team notifications.
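Slack incoming webhooks accept an HTTP POST with a JSON body containing a `text` field, so an alert can be sent with the standard library alone. In this sketch the webhook URL is a placeholder (real webhook URLs are credentials and belong in a secrets manager), and the message format is an assumption.

```python
import json
import urllib.request


def build_slack_alert(webhook_url: str, model: str, metric: str,
                      value: float, threshold: float) -> urllib.request.Request:
    """Build (but do not send) a POST request for a Slack incoming webhook."""
    payload = {
        "text": f":warning: {model}: {metric} = {value:.3f} fell below threshold {threshold:.3f}"
    }
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 5.0) -> int:
    """Send the request and return the HTTP status code (non-2xx raises HTTPError)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status
```

Keeping request construction separate from sending makes the message easy to test without network access.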

Scaling Integration Across the Enterprise

Foster a Culture of Collaboration

Integrating MLOps with DevOps is not just about tools; it’s a cultural shift. Encouraging team collaboration minimizes silos and enhances decision-making.

- Host regular cross-functional reviews to align priorities.

- Use shared metrics dashboards to maintain transparency.

Invest in Training and Upskilling

Upskilling both DevOps and MLOps teams ensures everyone understands the nuances of the integrated workflows.

- Offer training on ML lifecycle management tools.

- Encourage certifications covering cloud ML platforms such as Amazon SageMaker or Google Cloud Vertex AI to build deployment expertise.

Future-Proofing Your MLOps-DevOps Ecosystem

Finally, ensure your integrated pipeline is flexible enough to adopt new technologies and workflows. Both fields are rapidly evolving, and adaptability will be key to staying ahead.

When done right, harmonizing MLOps with DevOps unleashes a powerful synergy that enhances efficiency, scalability, and innovation. Now, let’s explore examples and success stories!

Real-World Applications of MLOps-DevOps Integration

Understanding how organizations have successfully blended MLOps and DevOps offers valuable lessons. Let’s explore some practical examples and their outcomes.

Revolutionizing Retail with Predictive Analytics

Retailers face constant pressure to anticipate customer demand. By harmonizing MLOps with DevOps, companies:

- Develop real-time demand forecasting models.

- Automate data ingestion from point-of-sale systems into machine learning pipelines.

Case Study:

A global retail chain adopted Kubernetes to orchestrate both application services and ML model deployments.

- Result: Reduced prediction latency by 40%, enhancing inventory accuracy.

Enhancing Fraud Detection in Financial Services

Financial institutions often deal with evolving fraud patterns. Integrating DevOps CI/CD with MLOps allows for:

- Rapid updates to fraud detection models.

- Continuous monitoring of transaction trends.

Example Success:

A fintech company used MLflow for model tracking and Jenkins for deployment automation.

- Result: Models were updated weekly, reducing false positives by 25%.

Accelerating Autonomous Driving

The development of self-driving cars depends heavily on machine learning models. By merging DevOps with MLOps, manufacturers:

- Streamline data pipelines from vehicle sensors.

- Automatically retrain models on new driving scenarios.

Case Study:

An autonomous vehicle firm implemented Airflow for data orchestration and Docker for model packaging.

- Result: Faster deployment cycles enabled safer road testing.

Lessons Learned from Success Stories

Prioritize Data-Driven Decision-Making

Integrating MLOps and DevOps facilitates an end-to-end feedback loop, enabling organizations to quickly adapt models based on real-world data.

Start Small, Scale Incrementally

Rather than attempting a complete overhaul, most organizations began by aligning specific workflows—like model versioning—with DevOps pipelines.

Invest in Tool Compatibility

The best outcomes occurred when organizations chose tools that integrated seamlessly across both domains. Examples include using Kubernetes for orchestration and Grafana for unified monitoring.

The Future of MLOps and DevOps

Trend: Edge Computing Integration

As edge devices become more capable, integrating edge ML deployments into DevOps pipelines will drive new efficiencies.

Evolution of Unified Platforms

Expect growth in platforms that combine DevOps and MLOps capabilities, offering end-to-end solutions for modern AI-driven applications.

By leveraging the strengths of MLOps and DevOps, organizations are poised to achieve unprecedented scalability, reliability, and innovation. The time to embrace this integration is now!

FAQs

Can DevOps tools like Jenkins and Kubernetes be used in MLOps?

Yes, many DevOps tools are widely adopted in MLOps. For instance:

- Jenkins can automate testing and deployment of machine learning models.

- Kubernetes manages scalable deployments for both traditional applications and ML model serving.

Example use case: A data team might use Kubernetes to deploy a fraud detection model that scales during peak transaction periods.
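Kubernetes’ HorizontalPodAutoscaler picks a replica count with the formula desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue). The Python sketch below reproduces that core calculation, including the default 10% tolerance band and the min/max replica bounds, to show how a fraud model’s serving pods would scale up during a transaction peak. It deliberately omits parts of the real controller such as stabilization windows and the handling of not-yet-ready pods.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA scaling rule: scale by the metric ratio, skip changes within the
    tolerance band around 1.0, and clamp to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave the deployment alone
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 4 pods averaging 90% CPU against a 60% target give ceil(4 * 1.5) = 6 replicas.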

What challenges arise when integrating MLOps with DevOps?

The key challenges include:

- Data dependencies: Unlike DevOps, MLOps pipelines need to manage dynamic datasets.

- Tool compatibility: MLOps tools like MLflow must integrate smoothly with DevOps platforms like Docker.

Example: A team might struggle to version control both the model code and the datasets, but tools like DVC (Data Version Control) simplify this.

Why is monitoring critical in MLOps-DevOps workflows?

Monitoring ensures that both system performance and model accuracy stay within acceptable bounds. Without it, model drift, where predictions degrade over time because the input data changes, can go unnoticed.

For example:

- A customer segmentation model might misclassify new customer types. Continuous monitoring flags these errors for retraining.

How can teams start harmonizing MLOps with DevOps?

Begin with small, manageable integrations:

- Use DevOps pipelines to deploy early-stage ML models.

- Gradually adopt MLOps tools for data preprocessing and versioning.

For instance, teams might initially package a model using Docker and deploy it via Jenkins, then later add MLflow for tracking model experiments.

Is it possible to automate retraining ML models within DevOps pipelines?

Yes, you can automate retraining by integrating continuous training (CT) into your CI/CD workflows.

- For example, when a dataset is updated or new data becomes available, pipelines can trigger automatic retraining and deployment of updated models.

- Tools like Kubeflow Pipelines or Apache Airflow are great for managing such automation.

What role does version control play in MLOps and DevOps integration?

Version control ensures reproducibility and traceability across code, data, and models. While DevOps traditionally focuses on code versioning (e.g., with Git), MLOps extends this to:

- Dataset versions for training.

- Model versions to track performance improvements or regressions.

For instance, a financial institution can audit older models and datasets to comply with regulatory requirements.

How do organizations avoid siloed teams in an MLOps-DevOps workflow?

Breaking down silos requires cross-functional collaboration between data scientists, ML engineers, and DevOps teams.

- Shared dashboards and communication tools (e.g., Slack, Jira) foster transparency.

- Encouraging co-ownership of pipelines aligns goals across teams.

Example: A data science team might collaborate with DevOps to deploy an NLP model and rely on DevOps monitoring to fine-tune the deployment environment.

What are some common tools that bridge MLOps and DevOps workflows?

Several tools support seamless integration:

- GitHub Actions for CI/CD workflows.

- MLflow for tracking experiments and managing models.

- Kubernetes for orchestrating deployments at scale.

Example: A startup uses GitHub Actions to validate data pipelines and retrain models before deploying them with Docker on Kubernetes clusters.

How does security factor into MLOps and DevOps integration?

Security is critical for both workflows, but MLOps adds unique risks like data privacy and biased models.

- Incorporate DevOps best practices like secrets management (e.g., with HashiCorp Vault).

- Apply explainable AI techniques to help check that ML models make fair and defensible decisions.

For example, a healthcare company may use encrypted data pipelines to train models while adhering to HIPAA regulations.

Are there best practices for handling data drift in MLOps-DevOps integration?

Yes, managing data drift involves:

- Continuous monitoring of incoming data for changes in distribution.

- Retraining models on updated datasets when drift is detected.

Example: A weather prediction system automatically identifies shifts in climate data and retrains the model to maintain forecast accuracy.
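One common way to quantify a change in input distribution is the Population Stability Index (PSI), which compares the binned proportions of a feature in a reference sample (for example, the training data) with a recent production sample: PSI = sum over bins of (actual_i - expected_i) * ln(actual_i / expected_i). A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, though thresholds are best set per feature. The sketch below is a plain-Python illustration with equal-width bins; the bin count and the small epsilon for empty bins are assumptions.

```python
import math


def population_stability_index(reference: list, current: list,
                               bins: int = 10, eps: float = 1e-4) -> float:
    """PSI between a reference sample and a current sample of one numeric feature.

    Bins are equal-width over the reference range; values outside that range fall
    into the first or last bin. `eps` keeps empty bins from producing log(0).
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def proportions(sample: list) -> list:
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(sample), eps) for c in counts]

    expected, actual = proportions(reference), proportions(current)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))
```

A monitoring job could compute this per feature on a schedule and start retraining when any value crosses the chosen threshold.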

How can DevOps practices enhance MLOps workflows?

DevOps introduces automation, scalability, and reliability to MLOps processes, such as:

- Automating model testing during development.

- Scaling model serving in production environments with load balancers.

For example, CI/CD pipelines can validate machine learning models just like code, preventing untested models from entering production.

What industries benefit the most from integrating MLOps with DevOps?

While most industries can benefit, those with dynamic data environments gain the most, including:

- Finance: Fraud detection and stock predictions.

- Retail: Personalized recommendations and demand forecasting.

- Healthcare: Predictive diagnostics and treatment optimization.

Example: A retail company uses integrated pipelines to adjust recommendation models in real time during sales events.

How do MLOps-DevOps integrations evolve over time?

Initially, organizations may focus on basic integrations, such as deploying simple models. Over time, workflows expand to include:

- Real-time monitoring of production models.

- Automation of the full data-model lifecycle, including retraining.

Example: A gaming company might start by deploying a recommendation model for in-game purchases, later enhancing it with dynamic updates based on player behavior.

Resources for Learning and Implementing MLOps-DevOps Integration

Online Courses and Tutorials

- Coursera: MLOps Specialization: A comprehensive course covering MLOps fundamentals, pipelines, and scaling strategies.

- Udemy: MLOps with AWS and Kubernetes: Learn how to deploy ML models at scale using Kubernetes and AWS.

- edX: DevOps for AI Workloads: Focused on harmonizing DevOps practices with AI and ML workflows.

Blogs and Articles

- Google Cloud MLOps Guide: Guidance on building scalable, production-ready ML pipelines.

- AWS Machine Learning Blog: Offers practical examples of MLOps pipelines in AWS environments.

- Towards Data Science: Articles covering MLOps, DevOps, and tools like Kubeflow, Airflow, and MLflow.

Tools and Documentation

- Kubeflow: Open-source platform for machine learning workflows on Kubernetes.

- MLflow: Tool for tracking, packaging, and deploying ML models.

- DVC (Data Version Control): Tracks data and model versions, enabling reproducible experiments.

- Apache Airflow: Workflow orchestration for complex pipelines.

Community Forums and Support

- Stack Overflow: Join discussions on MLOps and DevOps challenges or ask specific questions.

- GitHub Discussions: Many MLOps-related repositories, such as MLflow and DVC, have active discussion boards.

- Reddit: Subreddits like r/MachineLearning and r/devops host ongoing conversations on workflows and tools.

Books

- “Building Machine Learning Pipelines” by Hannes Hapke & Catherine Nelson: A practical guide to MLOps practices.

- “Accelerate: The Science of Lean Software and DevOps” by Nicole Forsgren et al.: Foundational DevOps principles that can extend to MLOps.

- “Practical MLOps” by Noah Gift and Alfredo Deza: Real-world insights into MLOps workflows, tools, and best practices.

Tool-Specific Tutorials and Communities

- Kubernetes Academy: Tutorials for deploying ML models in Kubernetes clusters.

- TensorFlow Extended (TFX): Guides on implementing production-ready ML workflows.

- Prometheus Documentation: Learn to monitor both DevOps and MLOps metrics.

Leverage these resources to refine your skills and achieve seamless integration of MLOps and DevOps workflows!