Secure Multi-Party Computation: Revolutionizing Privacy in Machine Learning

What is Secure Multi-Party Computation (SMPC)?

At its core, Secure Multi-Party Computation (SMPC) is a family of cryptographic protocols that allow multiple parties to jointly compute a function without revealing their individual inputs to one another.

Think of a group of friends trying to find out their average salary without any one person disclosing their exact number. In SMPC, data stays private throughout the process, with no single party able to access another’s data directly.

The magic behind this protocol is that it prevents leakage of sensitive information, even in scenarios where competing organizations or entities need to collaborate. It’s like achieving the best of both worlds: working together on a problem while keeping your secrets safe. SMPC is gaining traction, especially in fields where privacy is paramount—like finance, healthcare, and, of course, machine learning (ML).

The Privacy Challenge in Machine Learning

We live in a world where machine learning thrives on data. The more data we feed into models, the smarter they get. But here’s the kicker: much of the valuable data is private or sensitive. Think patient medical records, financial transactions, or proprietary business metrics. Sharing this data for collaborative learning can lead to privacy breaches. It’s a conundrum—how do we enable powerful machine learning while safeguarding individual or organizational privacy?

Without proper privacy safeguards, machine learning models can inadvertently expose sensitive information, even if the data was anonymized. SMPC offers a game-changing solution to this problem, allowing multiple parties to contribute data securely without directly sharing it. This way, organizations can pool their data to train models without ever exposing the raw information.

How SMPC Works: A Brief Overview

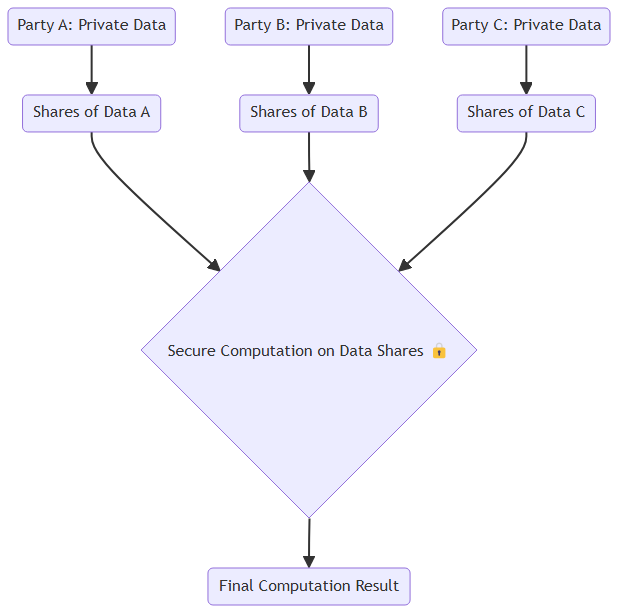

So, how does SMPC actually work? It’s all about splitting the data. When a computation is performed under SMPC, each party breaks their data into pieces (often called “shares”). These pieces are then distributed among the participating parties. The important part: no individual share means anything on its own. The parties compute directly on the shares, and only when the resulting output shares are combined is the final result revealed.

For example, let’s say three companies want to train a machine learning model but can’t share their data. Each company splits its data into multiple shares, sending a piece to every other company. The computation happens using these shares—nobody gets to see the full picture. The final result is a well-trained model, but no single party ever accessed the raw data from another.

This approach keeps the inputs private, as any intercepted share is useless by itself. SMPC rests on mathematical guarantees that hold even if some of the parties try to snoop or, in protocols designed for malicious adversaries, cheat, as long as the number of colluding parties stays below the threshold the protocol tolerates.
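To make the share-splitting idea concrete, here is a minimal sketch of the salary example using additive secret sharing. The modulus, the function names, and the salary figures are assumptions chosen for illustration, and everything runs in one process, whereas real parties would exchange shares over a network.

```python
import secrets

# Public modulus (an assumed parameter); it only needs to exceed the true sum.
MODULUS = 2**61 - 1

def make_shares(value, n_parties):
    """Split value into n_parties random-looking shares that sum to value mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_inputs):
    """Simulate every party sharing its input and publishing only a partial sum."""
    n = len(private_inputs)
    # Row i holds the shares created by party i; column j is what party j receives.
    distributed = [make_shares(v, n) for v in private_inputs]
    # Each party adds the shares it received and reveals only that partial sum.
    partial_sums = [sum(distributed[i][j] for i in range(n)) % MODULUS
                    for j in range(n)]
    return sum(partial_sums) % MODULUS

salaries = [52_000, 61_000, 73_000]  # hypothetical private inputs
total = secure_sum(salaries)
average = total / len(salaries)
print(average)  # 62000.0
```

Each partial sum looks random on its own, so publishing it says nothing about any single salary. Only the total, and therefore the average, becomes known.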

Benefits of SMPC in Machine Learning

The integration of SMPC into machine learning presents some serious advantages. First and foremost, data privacy is maintained. This is crucial when you’re dealing with sensitive information, such as patient records or confidential financial data. SMPC also removes the need for a trusted third party to hold everyone’s data, meaning that even if the parties involved don’t fully trust each other, they can still collaborate securely.

Another major perk is compliance. SMPC can help organizations stay compliant with stringent privacy regulations like GDPR and HIPAA. These regulations often require strict data protection standards, and SMPC offers a way to meet those requirements while still enabling robust machine learning.

Lastly, SMPC can enhance collaboration. Businesses, research institutions, or governments that previously hesitated to pool their data due to privacy concerns can now work together. This opens up doors to improved model accuracy and better insights, as data from various sources can be leveraged securely.

Real-World Applications of SMPC in ML

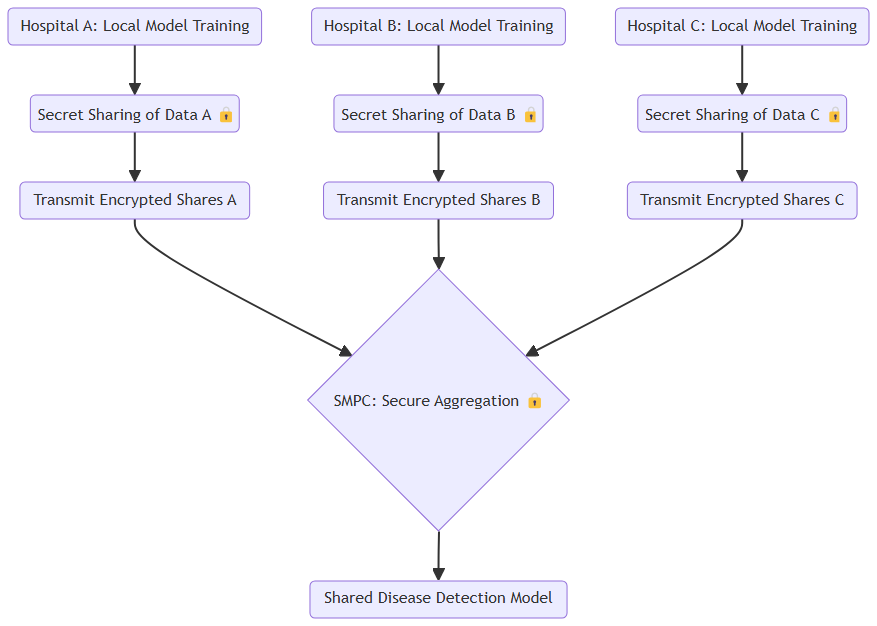

So, where is SMPC actually being used in machine learning today? Well, it’s already making a significant impact in industries like healthcare and finance. In healthcare, for example, hospitals and research centers often possess valuable patient data. However, privacy laws make it difficult for them to share this data with each other or with tech companies that develop medical algorithms.

By employing SMPC, these institutions can collaborate without breaching any privacy rules. Hospitals in different regions can pool their data to train a machine learning model on, say, cancer detection, without exposing patient records. The resulting model is better for everyone, and the patient records themselves never leave a hospital in readable form.

In the financial sector, SMPC allows institutions to share information about fraud patterns or suspicious transactions without revealing their clients’ details. By combining their knowledge, banks can better detect fraudulent activities, but they do it without breaching confidentiality.

SMPC vs. Other Privacy-Preserving Techniques

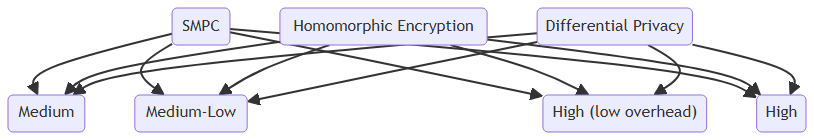

When we talk about privacy-preserving techniques in machine learning, SMPC is just one part of a larger toolkit. It’s essential to understand how it stacks up against other methods like differential privacy and homomorphic encryption.

Differential privacy limits how much a model or statistic can reveal about any single individual by adding carefully calibrated statistical “noise.” While it’s highly effective in certain use cases, the drawback is that the noise can degrade the accuracy of the machine learning model. In contrast, SMPC computes the exact result, since no noise is added, so accuracy is preserved while the inputs stay private during the computation. The trade-off is that SMPC by itself does not limit what the final output reveals.
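For contrast, here is a rough sketch of what “adding noise” looks like in practice, using the Laplace mechanism on a mean. The function names, the clipping bounds, and the epsilon values are assumptions for illustration, not a production-grade differential privacy implementation.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponential samples is Laplace-distributed.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def dp_mean(values, lower, upper, epsilon, rng):
    """Return a noisy mean of values, clipped to the range [lower, upper]."""
    clipped = [min(max(v, lower), upper) for v in values]
    # Changing one record moves the mean by at most this much.
    sensitivity = (upper - lower) / len(clipped)
    true_mean = sum(clipped) / len(clipped)
    return true_mean + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random()
ages = [34, 45, 29, 61, 50]  # hypothetical records
print(dp_mean(ages, lower=0, upper=100, epsilon=0.5, rng=rng))  # noisy value near 43.8
print(dp_mean(ages, lower=0, upper=100, epsilon=5.0, rng=rng))  # usually closer to 43.8
```

A smaller epsilon means stronger privacy and a larger noise scale, which is exactly the accuracy trade-off described above.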

Then there’s homomorphic encryption, which allows computations to be performed on encrypted data. Sounds ideal, right? The problem is, homomorphic encryption is computationally expensive, and processing times can be slow, making it less practical for large-scale ML applications. SMPC, on the other hand, typically needs far less computation per operation, but it pays for that with more communication between the parties. For many real-world applications, that trade-off makes it the more feasible option.
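To give a feel for what “computing on encrypted data” means, here is a toy version of the Paillier cryptosystem, a well-known additively homomorphic scheme. The tiny primes and the helper names are assumptions chosen so the numbers stay readable. This is insecure by design and only illustrates the idea.

```python
import math
import secrets

# Toy key with tiny primes (an assumption for readability); completely insecure.
P, Q = 10007, 10009
N = P * Q                                             # public key
N_SQUARED = N * N
LAMBDA = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # private: lcm(P-1, Q-1)
MU = pow(LAMBDA, -1, N)                               # private; needs Python 3.8+

def encrypt(message):
    """Encrypt an integer 0 <= message < N using only the public key N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    # With generator g = N + 1, g**message mod N^2 equals 1 + message * N.
    return (1 + message * N) * pow(r, N, N_SQUARED) % N_SQUARED

def decrypt(ciphertext):
    u = pow(ciphertext, LAMBDA, N_SQUARED)
    return (u - 1) // N * MU % N

def add_encrypted(c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return c1 * c2 % N_SQUARED

encrypted_total = add_encrypted(encrypt(20), encrypt(22))
print(decrypt(encrypted_total))  # 42
```

Even in this toy, every addition costs a multiplication of large numbers, and encryption needs a modular exponentiation. With realistic key sizes that cost adds up quickly.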

In essence, SMPC isn’t necessarily better than these techniques; it’s about choosing the right tool for the job. In many cases, combining SMPC with other methods can offer enhanced privacy protection.

Why Privacy Matters in the Age of Big Data

Let’s face it, we’re living in the age of Big Data. The sheer volume of personal, financial, and medical data collected daily is staggering. While this data holds the potential for groundbreaking insights, it also poses significant privacy risks. One misstep, and sensitive data can be exposed, leading to massive breaches of trust and costly fines.

Think about it: would you feel comfortable knowing your medical history could be accessed by unauthorized parties? Or that your shopping habits are being analyzed without your consent? That’s why privacy isn’t just a “nice-to-have” feature anymore—it’s a necessity.

With data privacy laws like GDPR and CCPA gaining momentum, the pressure on organizations to safeguard personal data has never been higher. Fines for non-compliance are hefty, and the damage to a brand’s reputation can be irreparable. This is where SMPC comes in. It’s a tool that allows organizations to continue using data without risking privacy violations. More than that, it builds trust with users, letting them know their data is secure, even in collaborative environments.

Key Algorithms Enabling SMPC

Behind the scenes, several key algorithms enable the magic of SMPC. One of the most commonly used methods is secret sharing, where data is split into “shares” and distributed among parties. Only when these shares are combined does the full picture emerge. Popular secret sharing schemes include Shamir’s Secret Sharing and the Additive Secret Sharing method.
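Here is a compact sketch of Shamir’s Secret Sharing, where any `threshold` shares are enough to recover the secret and fewer reveal nothing. The prime, the function names, and the example secret are assumptions for illustration.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime; all arithmetic happens modulo this value

def split(secret, n_shares, threshold):
    """Hide secret as the constant term of a random polynomial of degree threshold - 1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]

    def evaluate(x):
        acc = 0
        for c in reversed(coeffs):  # Horner's rule
            acc = (acc * x + c) % PRIME
        return acc

    # Share i is the point (i, polynomial(i)); x = 0 is never handed out.
    return [(x, evaluate(x)) for x in range(1, n_shares + 1)]

def reconstruct(shares):
    """Recover the secret by Lagrange interpolation at x = 0."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = num * -xj % PRIME
                den = den * (xi - xj) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret

shares = split(123456789, n_shares=5, threshold=3)
print(reconstruct(shares[:3]))  # 123456789
print(reconstruct(shares[2:]))  # 123456789
```

Additive sharing needs every share to reconstruct. Shamir’s scheme tolerates missing parties, which is useful when some participants drop out.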

Then, there’s garbled circuits, another technique central to SMPC. In this approach, the computation itself is encoded as an encrypted Boolean circuit, a kind of encrypted puzzle. One party “garbles” the circuit, the other evaluates it using random-looking wire labels instead of real bits, and only at the end can the correct result be “decoded.” It’s complex but highly effective, especially in scenarios where privacy is paramount.
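A single garbled AND gate shows the core trick. This is a simplified sketch: the label size, the zero-byte tag used to recognise the right table row, and all names are assumptions, and real implementations use more efficient constructions.

```python
import hashlib
import random
import secrets

LABEL_BYTES = 16
TAG = b"\x00" * 16  # redundancy that lets the evaluator spot the correct row

def pad(label_a, label_b):
    """Derive a 32-byte one-time pad from the two input labels."""
    return hashlib.sha256(label_a + label_b).digest()

def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))

def garble_and_gate():
    """Garbler: pick two random labels per wire and encrypt the truth table."""
    wires = {w: [secrets.token_bytes(LABEL_BYTES) for _ in (0, 1)]
             for w in ("a", "b", "out")}
    table = []
    for bit_a in (0, 1):
        for bit_b in (0, 1):
            out_label = wires["out"][bit_a & bit_b]
            table.append(xor(pad(wires["a"][bit_a], wires["b"][bit_b]),
                             out_label + TAG))
    random.SystemRandom().shuffle(table)  # hide which row belongs to which inputs
    return wires, table

def evaluate(table, label_a, label_b):
    """Evaluator: holds one label per input wire and can open exactly one row."""
    key = pad(label_a, label_b)
    for row in table:
        plain = xor(key, row)
        if plain.endswith(TAG):
            return plain[:LABEL_BYTES]
    raise ValueError("no row could be decrypted")

wires, table = garble_and_gate()
for a in (0, 1):
    for b in (0, 1):
        out_label = evaluate(table, wires["a"][a], wires["b"][b])
        assert out_label == wires["out"][a & b]
```

The evaluator only ever sees labels, never the bits they stand for. In a full protocol it would obtain the labels for its own input through oblivious transfer, so the garbler does not learn that input either.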

Lastly, we have oblivious transfer, a cryptographic technique in which a sender offers several pieces of data and the receiver picks one. The sender never learns which piece was picked, and the receiver learns nothing about the others. This guarantees that sensitive data stays concealed, even when computations require exchanging some information.
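The classic RSA-based 1-out-of-2 oblivious transfer can be sketched in a few lines. The tiny RSA key, the function names, and the messages are assumptions for illustration. Both roles run in one process here, and the key is far too small to be secure.

```python
import secrets

# Toy RSA key owned by the sender (tiny primes, illustration only).
P, Q, E = 10007, 10009, 65537
N = P * Q
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent; needs Python 3.8+

def sender_offer():
    """Sender publishes two random values, one per message slot."""
    return secrets.randbelow(N), secrets.randbelow(N)

def receiver_request(choice, offers):
    """Receiver blinds the offer it wants with a secret random k."""
    k = secrets.randbelow(N)
    v = (offers[choice] + pow(k, E, N)) % N
    return v, k

def sender_respond(v, offers, messages):
    """Sender unblinds v against both offers; only one result equals k."""
    keys = [pow((v - x) % N, D, N) for x in offers]
    return [(m + key) % N for m, key in zip(messages, keys)]

def receiver_open(choice, k, masked):
    return (masked[choice] - k) % N

messages = (1111, 2222)  # the sender's two secrets, each smaller than N
choice = 1               # the receiver's private choice
offers = sender_offer()
v, k = receiver_request(choice, offers)
masked = sender_respond(v, offers, messages)
print(receiver_open(choice, k, masked))  # 2222
```

The sender sees only `v`, which looks random whichever slot was chosen. The receiver cannot strip the mask from the other message without the sender’s private exponent.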

These algorithms, when combined, form the backbone of SMPC. While the math might be complicated, the end result is a protocol that keeps privacy intact, even in highly collaborative environments.

Challenges of Implementing SMPC in ML

As powerful as SMPC is, it’s not without its challenges. One major hurdle is the computational complexity involved. While not as computationally intense as homomorphic encryption, SMPC still requires a significant amount of resources, especially when working with large datasets. The complexity of the cryptographic operations can slow down the training process, making it less efficient compared to traditional machine learning.

Another challenge is communication overhead. Since the protocol involves splitting data into shares and distributing them among different parties, there’s a lot of data being passed back and forth. This can lead to slower computation times and higher bandwidth costs.
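Multiplication is a good example of where this back-and-forth comes from. Adding secret-shared values is purely local, but multiplying them requires the parties to exchange messages. One common technique is the Beaver triple, sketched below. The trusted dealer that hands out the triple is an assumption made for brevity, and so are the modulus and the names.

```python
import secrets

Q = 2**61 - 1  # public modulus (assumed)

def share(x, n=2):
    """Additively share x among n parties."""
    parts = [secrets.randbelow(Q) for _ in range(n - 1)]
    return parts + [(x - sum(parts)) % Q]

def reveal(shares):
    """Opening a value means every party broadcasts its share."""
    return sum(shares) % Q

def beaver_multiply(x_shares, y_shares):
    n = len(x_shares)
    # Offline phase: a dealer (assumed trusted here) shares a random triple c = a * b.
    a, b = secrets.randbelow(Q), secrets.randbelow(Q)
    a_sh, b_sh, c_sh = share(a, n), share(b, n), share(a * b % Q, n)
    # Online phase: one round of communication opens d = x - a and e = y - b.
    d = reveal([(x - ai) % Q for x, ai in zip(x_shares, a_sh)])
    e = reveal([(y - bi) % Q for y, bi in zip(y_shares, b_sh)])
    # Local step: x * y = c + d*b + e*a + d*e, computed share by share.
    z = [(ci + d * bi + e * ai) % Q for ai, bi, ci in zip(a_sh, b_sh, c_sh)]
    z[0] = (z[0] + d * e) % Q  # the public term d*e is added by one party only
    return z

product_shares = beaver_multiply(share(6), share(7))
print(reveal(product_shares))  # 42
```

Every multiplication costs a round of messages, and training a model involves a very large number of multiplications, which is why bandwidth and latency matter so much.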

There’s also the issue of usability. Implementing SMPC requires specialized knowledge of cryptographic techniques, and not every data scientist or ML engineer is familiar with these concepts. Bridging the gap between cryptography and machine learning expertise is essential, but not always straightforward.

Despite these challenges, ongoing research is making SMPC more practical for everyday use in machine learning. As the technology evolves, these barriers will continue to fall, making it easier for organizations to adopt SMPC in their workflows.

Combining SMPC with Federated Learning

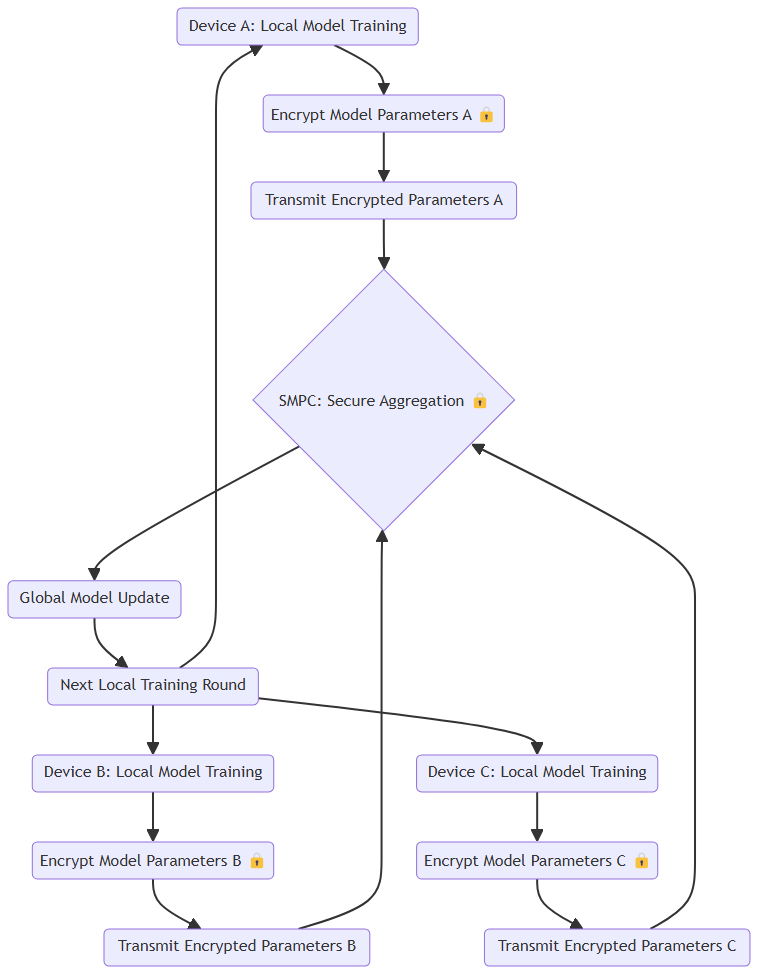

One particularly promising trend is combining SMPC with federated learning. Federated learning allows multiple entities to train a machine learning model together without sharing their raw data. Each party trains a model locally on their own data, and only the model parameters are shared and aggregated to create a global model.

But there’s still some risk—what if the parameters themselves reveal sensitive information? This is where SMPC comes into play. By applying SMPC to the parameter aggregation process, it’s possible to further enhance privacy, ensuring that no sensitive data is leaked, even in the parameter-sharing phase.

This combination creates a privacy powerhouse. Federated learning allows organizations to collaborate without sharing data, while SMPC ensures that even the intermediate results (like model parameters) remain private. It’s a double layer of security that’s particularly useful in healthcare or finance, where privacy is non-negotiable.
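One simplified way to picture private parameter aggregation is pairwise masking, where each pair of clients agrees on a random mask that cancels out in the sum. The sketch below simulates that in a single process. The fixed-point scale, the modulus, and the function names are assumptions, and this is not how any particular framework necessarily implements it.

```python
import secrets

MODULUS = 2**32
SCALE = 10**4  # fixed-point precision (assumed); parameters are floats

def encode(x):
    return round(x * SCALE) % MODULUS

def decode(v):
    if v >= MODULUS // 2:  # map the upper half back to negative numbers
        v -= MODULUS
    return v / SCALE

def mask_updates(updates):
    """Return what each client would upload: its update plus cancelling masks."""
    n, dim = len(updates), len(updates[0])
    masked = [[encode(w) for w in u] for u in updates]
    for i in range(n):
        for j in range(i + 1, n):
            # Clients i and j share this mask (simulated; in practice it would
            # come from a key agreement between the two clients).
            mask = [secrets.randbelow(MODULUS) for _ in range(dim)]
            for k in range(dim):
                masked[i][k] = (masked[i][k] + mask[k]) % MODULUS
                masked[j][k] = (masked[j][k] - mask[k]) % MODULUS
    return masked

def aggregate(masked):
    """Server side: sum the uploads; the masks cancel, leaving the true average."""
    n = len(masked)
    sums = [sum(column) % MODULUS for column in zip(*masked)]
    return [decode(s) / n for s in sums]

local_updates = [[0.10, -0.20], [0.30, 0.40], [-0.10, 0.10]]  # hypothetical
uploads = mask_updates(local_updates)
print(aggregate(uploads))  # approximately [0.1, 0.1]
```

The server learns the averaged parameters but each individual upload looks random. Handling clients that drop out mid-round takes extra machinery that this sketch leaves out.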

The Role of Cryptography in SMPC

At the heart of Secure Multi-Party Computation lies cryptography—the science of securing communication and information. SMPC wouldn’t exist without cryptographic techniques like encryption and secret sharing, which ensure that data remains private during computation. By leveraging mathematical algorithms, SMPC enables parties to perform joint computations while keeping their individual data hidden.

The beauty of cryptography in SMPC is that it provides mathematical guarantees of privacy. Even if a party tries to snoop or cheat, the cryptographic protocols prevent them from learning anything they’re not supposed to know. Whether it’s homomorphic encryption allowing computations on encrypted data or oblivious transfer ensuring one party doesn’t know which data the other accessed, cryptography forms the backbone of SMPC’s security model.

This reliance on cryptography means SMPC can be applied to a wide range of use cases, from financial transactions to healthcare analytics, without worrying about sensitive data being exposed. As cryptographic research advances, SMPC will continue to become more efficient, scalable, and easier to integrate with machine learning.

Scaling SMPC for Large Datasets

One of the most pressing challenges in using Secure Multi-Party Computation for machine learning is scaling it to handle large datasets. Machine learning often requires massive amounts of data, and SMPC introduces computational and communication overheads that can slow down these processes. So, how can SMPC be scaled to work efficiently with big data?

The key lies in optimizing both the cryptographic algorithms and the infrastructure supporting them. Advances in parallel processing and distributed computing help divide the workload, allowing large datasets to be processed faster, even when using cryptographic techniques. Additionally, researchers are developing more efficient protocols that reduce the amount of data exchanged between parties, cutting down on communication costs.

Another approach is combining SMPC with federated learning (as discussed earlier), which minimizes the need to process large datasets centrally. Each party can work on their own local data, and only minimal, private information is shared. With these optimizations, SMPC is becoming more practical for real-world applications that involve massive amounts of data.

Future Trends: SMPC and Artificial Intelligence

The future of Secure Multi-Party Computation (SMPC) in Artificial Intelligence (AI) is full of promise. As AI systems become more complex and data-driven, the need for privacy-preserving techniques like SMPC will only grow. Imagine a world where multiple hospitals across the globe can train a powerful AI model to detect diseases without ever sharing patient data. Or where competing companies can collaborate on fraud detection without giving up their proprietary information.

One exciting development is the integration of SMPC with decentralized AI models, such as those used in edge computing. As IoT devices generate vast amounts of data, SMPC can ensure that AI models trained on this data preserve privacy. We’ll likely see more innovations in combining SMPC with blockchain and other decentralized technologies, allowing for more secure, distributed AI systems.

Moreover, as quantum computing advances, traditional cryptography might face new challenges. However, SMPC is adaptable, and researchers are already exploring post-quantum cryptography to ensure that SMPC remains secure even in a future where quantum computers are prevalent.

Ethical Considerations in Privacy-Preserving ML

Privacy-preserving techniques like SMPC come with significant ethical implications. On the one hand, they offer the promise of maintaining individual privacy in a world where data is king. On the other, there’s a risk of creating a false sense of security. Just because data is hidden during computation doesn’t mean that privacy concerns are fully eliminated.

For example, if the final machine learning model is accessible to all parties, inferences can still be drawn about the data used to train it. This opens up questions about how much privacy is truly being preserved and how we define ethical AI. Transparency in how SMPC is implemented and used is critical to avoid potential misuse.
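A tiny worked example shows why the output itself can leak. The numbers and the function name are hypothetical. The point is that the protocol can run perfectly and the result can still be enough to pin down one party’s input.

```python
def recover_remaining_input(revealed_sum, known_inputs):
    """Parties who know every input but one can subtract their way to the last one."""
    return revealed_sum - sum(known_inputs)

# Three parties securely compute a sum; two of them compare notes afterwards.
revealed_sum = 186_000
colluders_inputs = [52_000, 61_000]
print(recover_remaining_input(revealed_sum, colluders_inputs))  # 73000
```

Nothing in the cryptography was broken here, which is why techniques that limit what the output reveals, such as differential privacy, are often paired with SMPC.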

Additionally, there’s the issue of consent. When multiple organizations collaborate using SMPC, individuals whose data is involved may not always be fully informed about how their information is being used. Ensuring that privacy-preserving techniques respect the rights of data owners and adhere to ethical standards is crucial as the technology evolves.

Adoption Barriers for SMPC in Machine Learning

Despite its potential, Secure Multi-Party Computation faces several barriers to adoption in machine learning. The biggest challenge is the complexity of the cryptographic protocols involved. Most machine learning practitioners are not cryptography experts, and implementing SMPC requires a deep understanding of both fields. This creates a skills gap that needs to be addressed through better education and tools.

Another significant barrier is performance. As mentioned earlier, SMPC introduces additional computational and communication overhead, making it slower and more resource-intensive than traditional machine learning methods. Organizations may be reluctant to adopt SMPC if it significantly slows down their workflows.

Finally, there’s the issue of cost. The infrastructure required to run SMPC efficiently can be expensive, particularly for smaller organizations. Cloud providers and specialized services that offer privacy-preserving machine learning could help lower these costs, but for now, it remains a barrier for widespread adoption.

How SMPC Enhances Collaboration Without Compromising Data

One of the most exciting aspects of Secure Multi-Party Computation (SMPC) is its ability to foster collaboration while maintaining data privacy. In today’s data-driven world, collaboration is key to innovation. However, when sensitive information is involved—such as patient health records, financial transactions, or proprietary business insights—collaborating across organizations becomes risky.

SMPC changes the game by allowing parties to work together on computations or model training without exposing their raw data. For example, two competing companies can now collaborate on creating a fraud detection model by pooling their transaction data. The beauty here is that neither company sees the other’s data, but both benefit from the enhanced model. It’s like having your cake and eating it too—accessing insights without risking data breaches or losing your competitive edge.

The impact of this on industries like healthcare and finance is already being felt. Hospitals that previously couldn’t share data due to privacy regulations can now work together to improve diagnostics. Banks and financial institutions can fight fraud as a united front without exposing sensitive customer information. This makes SMPC a cornerstone for privacy-preserving machine learning, enabling secure collaboration in ways we couldn’t have imagined before.

FAQs

What is Secure Multi-Party Computation (SMPC)?

SMPC is a cryptographic technique that enables multiple parties to collaboratively compute a function on their collective data while keeping their individual inputs private. It ensures that the participating parties learn nothing about each other’s private data beyond what can be inferred from the final output.

Why is SMPC important for privacy-preserving machine learning (ML)?

SMPC is crucial for privacy-preserving ML because it allows multiple organizations or entities to jointly train machine learning models without sharing raw data. This addresses key concerns around data privacy, especially in sensitive industries like healthcare and finance, where privacy regulations are stringent.

How does SMPC work?

In SMPC, each party splits its private data into shares and distributes them among all participants. No single party can reconstruct the full data set from these shares. The parties then perform computations on the distributed shares, and the final result is revealed without any party accessing the others’ raw data.

What are the real-world use cases of SMPC in machine learning?

- Healthcare: Hospitals can collaborate on disease prediction models using patient data without violating privacy regulations like HIPAA.

- Finance: Financial institutions can detect fraud patterns by combining transaction data without sharing sensitive customer information.

- Government: Different departments or organizations can analyze large datasets for public health or policy development without breaching confidentiality.

How does SMPC compare to other privacy-preserving techniques?

SMPC preserves data privacy without adding noise, unlike differential privacy, which can reduce the accuracy of models. Compared to homomorphic encryption, SMPC usually requires less computation, since it doesn’t operate directly on encrypted data, but it requires more communication between the parties.

What is the role of cryptography in SMPC?

Cryptography is the backbone of SMPC. Algorithms like secret sharing, oblivious transfer, and garbled circuits enable secure collaboration. These cryptographic techniques ensure that even if some parties are untrusted or malicious, the privacy of the data remains intact.

What are the main challenges in using SMPC for machine learning?

- Computational Complexity: SMPC introduces overhead, making computations slower than traditional ML.

- Communication Overhead: Parties need to exchange data shares frequently, which can be bandwidth-intensive.

- Usability: Implementing SMPC requires expertise in cryptography, and not all ML practitioners are familiar with these protocols.

Can SMPC be used with other privacy-preserving techniques like federated learning?

Yes, SMPC can complement federated learning. In federated learning, model parameters (not raw data) are shared. SMPC can add an extra layer of security by ensuring that even these model parameters are aggregated in a way that preserves privacy. This makes the combined approach even more robust in scenarios where privacy is critical.

What industries benefit most from SMPC in machine learning?

- Healthcare: For securely training AI models on medical data.

- Finance: For fraud detection and anti-money laundering systems.

- Public Sector: For government data analysis and policy formulation.

- Retail and E-commerce: For secure customer data analysis and personalized marketing without breaching privacy.

How does SMPC help with compliance to data privacy regulations?

SMPC allows organizations to collaborate without directly sharing sensitive data, which helps them stay compliant with privacy regulations like the General Data Protection Regulation (GDPR), Health Insurance Portability and Accountability Act (HIPAA), and California Consumer Privacy Act (CCPA). By maintaining privacy during computations, organizations can reduce the risk of data breaches and penalties.

What is the future of SMPC in AI and machine learning?

As concerns around data privacy grow, SMPC will become increasingly important in AI and machine learning. Innovations in post-quantum cryptography and scalable SMPC protocols will make it faster and more efficient, allowing broader adoption across industries. SMPC’s role in decentralized AI models and blockchain applications is also expected to expand.

Are there any limitations to using SMPC?

Yes, while SMPC is powerful, it comes with certain limitations:

- Performance Overhead: Computations using SMPC are slower compared to standard methods.

- Complexity: The protocols are complex and require specialized knowledge in cryptography.

- Resource-Intensive: Requires substantial computational resources and bandwidth to handle large datasets efficiently.

What are the key algorithms used in SMPC?

- Secret Sharing: Data is divided into multiple shares and distributed among parties.

- Garbled Circuits: A cryptographic method where a computation is turned into an encrypted puzzle, which is solved collaboratively.

- Oblivious Transfer: Lets a receiver obtain one of several items from a sender without the sender learning which item was chosen and without the receiver learning the other items.

Can small organizations use SMPC for machine learning?

While SMPC can be resource-intensive, small organizations can benefit from cloud-based platforms that offer privacy-preserving machine learning services, as well as from open-source tooling. Communities like OpenMined and libraries like PySyft make SMPC more accessible, lowering the barrier for small businesses or research institutions to implement these protocols.

Resources

Here are some valuable resources to deepen your understanding of Secure Multi-Party Computation (SMPC) and its role in privacy-preserving machine learning:

Books:

- “Introduction to Modern Cryptography” by Jonathan Katz & Yehuda Lindell
  - A great resource to build a strong foundation in cryptography, including protocols like SMPC.

- “Secure Multiparty Computation and Secret Sharing” by Ronald Cramer, Ivan Damgård & Jesper Buus Nielsen
  - Focuses specifically on SMPC, secret sharing, and the mathematical principles behind these cryptographic techniques.

- “Algorithms for Privacy-Preserving Data Mining” by Michael J. Freedman & Benny Pinkas
  - Offers a deep dive into algorithms and methods like SMPC, used in privacy-preserving machine learning.

Research Papers:

- “Protocols for Secure Computations” (1982) by Andrew C. Yao
  - A pioneering paper on the theoretical foundations of SMPC.

- “Privacy-Preserving Machine Learning via Secure Multi-Party Computation” (2018) by Riahi, Ghodsi, et al.
  - Discusses practical implementations of SMPC in machine learning.

- “Federated Learning: Challenges, Methods, and Future Directions” by Tian Li et al.
  - Explores how SMPC can be combined with federated learning for privacy-preserving ML.

Online Courses & Tutorials:

- Coursera: “Cryptography I” by Stanford University
  - A beginner-friendly course that covers cryptographic techniques like those used in SMPC.

- edX: “Privacy in Machine Learning” by the University of Edinburgh
  - Focuses on privacy-preserving techniques in ML, including SMPC, differential privacy, and homomorphic encryption.

Websites & Communities:

- OpenMined: (https://www.openmined.org/)
  - A community of researchers and engineers focused on privacy-preserving AI, offering resources and open-source tools for SMPC and federated learning.

- Microsoft SEAL: (https://github.com/microsoft/SEAL)
  - An open-source homomorphic encryption library that complements SMPC in secure computing environments.

- Partisia Blockchain: (https://partisiablockchain.com)
  - A project dedicated to developing secure multi-party computation infrastructure, offering resources on applying SMPC in blockchain and machine learning.

Tools & Libraries:

- PySyft: (https://github.com/OpenMined/PySyft)
  - A Python library that enables privacy-preserving machine learning through SMPC and other techniques.

- Sharemind: (https://sharemind.cyber.ee/)
  - A platform for secure multi-party computations used to develop privacy-preserving applications.

- MP-SPDZ: (https://github.com/data61/MP-SPDZ)
  - A framework supporting various secure multi-party computation protocols for privacy-focused applications.