Train AI Models: A Step-by-Step Guide

Training an AI model may seem intimidating, but it’s an exciting way to build tech skills and work with machine learning tools. This beginner’s guide will walk you through the essentials, from understanding the basics to launching your model.

Understanding AI Model Basics

What Is an AI Model?

At its core, an AI model is a set of algorithms trained to make predictions or decisions based on data. By processing a vast amount of information, the model “learns” patterns and correlations. This enables it to, for example, classify images, predict text, or even power chatbots.

Different Types of AI Models

AI models vary depending on their function. Here are a few common types:

- Classification Models: Used for tasks like image recognition or text categorization.

- Regression Models: Ideal for predicting continuous outcomes like prices or temperatures.

- Generative Models: These create new data points, like generating images or writing text.

- Reinforcement Learning Models: Often used in gaming and robotics, they “learn” through trial and error.

Understanding these categories can help you choose the right model for your specific goal.

Choosing the Right Tools and Frameworks

When starting out, selecting beginner-friendly tools is crucial. Here are some popular choices:

- TensorFlow: Google’s open-source library, versatile for deep learning projects.

- PyTorch: Favored by researchers, PyTorch offers easy debugging and a flexible approach.

- Scikit-Learn: Excellent for traditional machine learning, Scikit-Learn is lightweight and beginner-friendly.

Choosing a tool depends on your project type and comfort level. For beginners, Scikit-Learn is ideal for simple projects, while TensorFlow and PyTorch are excellent for diving deeper into deep learning.

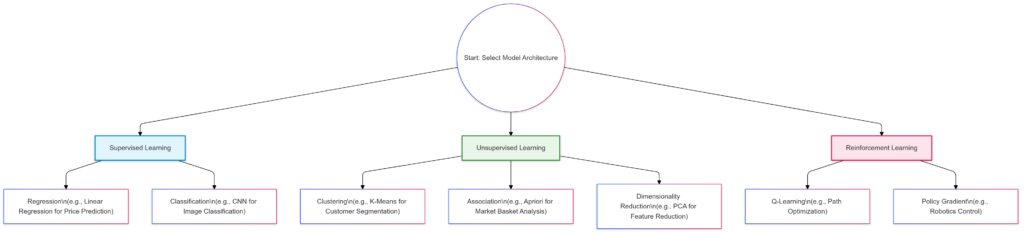

Supervised Learning:

- Regression: e.g., Linear Regression for price prediction.

- Classification: e.g., CNN for image classification.

Unsupervised Learning:

- Clustering: e.g., K-Means for customer segmentation.

- Association: e.g., Apriori for market basket analysis.

- Dimensionality Reduction: e.g., PCA for feature reduction.

Reinforcement Learning:

- Q-Learning: e.g., path optimization.

- Policy Gradient: e.g., robotics control.

This structure helps streamline model selection based on project requirements, enhancing efficiency in choosing the correct AI solution.

Gathering and Preparing Data

Understanding Data Types

The type of data you use depends on the model’s goal. For example, image data is common in computer vision, while text data is crucial for natural language processing (NLP). Ensure you understand the type of data your model needs to learn effectively.

Finding Quality Data Sources

High-quality data is the backbone of any successful model. Here are some sources to explore:

- Kaggle Datasets: An extensive repository of free datasets covering a wide array of fields.

- UCI Machine Learning Repository: Offers datasets that are ideal for beginners.

- Government Portals: Public data from government sources can be incredibly reliable.

Using real-world data can make your model more robust, and it provides a more realistic learning experience.

Cleaning and Organizing Data

Data rarely comes in a ready-to-use format. You’ll likely need to clean it, which includes:

- Removing Duplicates: Get rid of repeated entries.

- Handling Missing Values: Fill in, interpolate, or remove empty data fields.

- Normalizing Data: Make formats and scales consistent (e.g., converting all text to lowercase or scaling numeric features to a common range).

A well-prepared dataset helps your model learn more efficiently and improves accuracy.
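The three cleaning steps above can be sketched in plain Python. This is a minimal illustration, not a full pipeline: the record fields (`city`, `age`) and the choice to fill missing ages with the mean are assumptions made for the example, and in practice a library such as Pandas would usually handle this.

```python
def clean_records(records):
    """Normalize text, drop duplicates, and fill missing ages with the mean."""
    # Normalize text first so "Paris" and "paris " count as the same value.
    normalized = [
        {"city": r["city"].strip().lower(), "age": r["age"]} for r in records
    ]

    # Remove duplicates while keeping the first occurrence of each record.
    seen = set()
    unique = []
    for r in normalized:
        key = (r["city"], r["age"])
        if key not in seen:
            seen.add(key)
            unique.append(r)

    # Fill missing ages (None) with the mean of the known ages.
    known = [r["age"] for r in unique if r["age"] is not None]
    mean_age = sum(known) / len(known)
    for r in unique:
        if r["age"] is None:
            r["age"] = mean_age
    return unique


raw = [
    {"city": "Paris", "age": 30},
    {"city": "paris ", "age": 30},    # duplicate once normalized
    {"city": "Berlin", "age": None},  # missing value
    {"city": "Rome", "age": 40},
]
cleaned = clean_records(raw)
print(cleaned)
```

Filling with the mean is only one option; dropping the row or interpolating may suit other datasets better.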

Building and Training the Model

Setting Up the Environment

Before building, ensure your environment is ready. Use platforms like Google Colab or Jupyter Notebook, which allow you to code and experiment easily. Colab even offers free GPU access, which can significantly speed up training.

Choosing a Model Architecture

Selecting the right architecture is crucial. Here are a few common ones:

- Convolutional Neural Networks (CNNs): Great for image-related tasks.

- Recurrent Neural Networks (RNNs): Used in NLP and time-series forecasting.

- Transformers: A newer architecture that’s revolutionizing NLP.

The architecture you choose depends on your task. CNNs, for example, are a go-to for any image classification problem, while Transformers shine in text-related tasks.

Training the Model

Training involves feeding your model data and adjusting its internal parameters until it performs well. Here’s a high-level approach:

- Split Data: Use 80% for training and 20% for testing.

- Define Hyperparameters: Set values like learning rate and batch size.

- Train and Validate: Run the model on training data and adjust as needed.

During training, your model learns from the training data; evaluating it on data it has not seen shows whether it generalizes well.
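To make the split, hyperparameter, and train-and-validate steps concrete, here is a small sketch that fits a straight line with gradient descent using only the standard library. The toy dataset, the helper names (`split_data`, `train`, `mse`), and the chosen learning rate and epoch count are all assumptions for illustration; a real project would typically rely on Scikit-Learn, TensorFlow, or PyTorch.

```python
import random

def split_data(data, test_fraction=0.2, seed=0):
    """Shuffle a copy of the data and return (train, test)."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def mse(data, w, b):
    """Mean squared error of the line y = w*x + b on (x, y) pairs."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def train(data, learning_rate=0.1, epochs=1000):
    """Fit y = w*x + b with full-batch gradient descent on the MSE."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Toy dataset generated from y = 3x + 1, with x between 0 and 2.
data = [(i / 50, 3 * (i / 50) + 1) for i in range(100)]
train_set, test_set = split_data(data)          # 80% train, 20% test
w, b = train(train_set)                          # hyperparameters use the defaults
test_error = mse(test_set, w, b)                 # evaluate on held-out data
print(f"w={w:.3f}, b={b:.3f}, test MSE={test_error:.6f}")
```

The learned parameters are `w` and `b`; the learning rate and number of epochs are hyperparameters chosen before training starts.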

Key stages in the AI model training pipeline, from data collection to deployment.

| Stage | Purpose | Key Activities | Common Tools |
|---|---|---|---|
| Data Collection | Gather raw data for training | Identify data sources; extract and store data | Python, SQL, Scrapy |
| Data Preprocessing | Prepare data for model input | Data cleaning (handling missing values); data transformation | Pandas, Scikit-Learn, NumPy |
| Model Selection | Choose a model suitable for the problem type | Evaluate model types (e.g., regression, classification); consider performance metrics | TensorFlow, Scikit-Learn, PyTorch |
| Training | Fit the model to the training data | Feed data into the model; optimize model parameters through iterations | TensorFlow, Keras, PyTorch |
| Validation | Evaluate the model on unseen data | Split data into training and validation sets; measure accuracy, precision, recall | Scikit-Learn, Keras, Matplotlib |
| Hyperparameter Tuning | Optimize model performance by adjusting parameters | Experiment with different parameter settings; use grid search or random search | GridSearchCV (Scikit-Learn), Optuna |
| Deployment | Deploy the trained model to production | Integrate with application; monitor model performance and retrain if needed | TensorFlow Serving, Docker, Flask |

Summary:

- Data Collection and Preprocessing lay the foundation by ensuring data quality and readiness.

- Model Selection and Training focus on choosing and fitting the model.

- Validation and Tuning refine the model’s performance.

- Deployment completes the pipeline, enabling real-world use and monitoring.

This table highlights the purpose, activities, and tools for each stage, providing a comprehensive view of the model training process.

Evaluating and Fine-Tuning Your Model

Measuring Model Performance

Once trained, evaluating your model’s performance is essential. Here are some common metrics:

- Accuracy: The percentage of correct predictions.

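- Precision and Recall: Precision is the share of predicted positives that are correct; recall is the share of actual positives that are found. Both are useful for tasks with imbalanced classes.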

- Mean Squared Error (MSE): Common in regression tasks.

Evaluating your model on test data reveals how well it may perform in the real world.
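As a rough sketch of how these metrics are computed, the functions below work on plain lists of labels and predictions. The example labels and values are made up for illustration; libraries such as Scikit-Learn provide tested versions of the same metrics.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def precision_recall(y_true, y_pred, positive=1):
    """Precision and recall for the class treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

def mean_squared_error(y_true, y_pred):
    """Average squared difference between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

# Classification example (1 = positive class).
labels      = [1, 0, 1, 1, 0, 0, 1, 0]
predictions = [1, 0, 0, 1, 0, 1, 1, 0]
acc = accuracy(labels, predictions)
prec, rec = precision_recall(labels, predictions)

# Regression example.
mse_value = mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0])
print(acc, prec, rec, mse_value)
```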

Hyperparameter Tuning

Hyperparameters control how a model learns. By adjusting these values, you can improve performance. Techniques like Grid Search or Random Search are common for this purpose. Some frameworks even offer automated tuning options to simplify the process.
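Grid search simply tries every combination from a fixed set of candidate values and keeps the best one. The sketch below assumes a hypothetical `train_and_score` function that stands in for "train a model with these settings and return its validation loss"; here it just runs gradient descent on a one-variable toy problem so the example stays self-contained.

```python
from itertools import product

def train_and_score(learning_rate, steps):
    """Hypothetical stand-in for training: minimize (w - 3)^2, return final loss."""
    w = 0.0
    for _ in range(steps):
        w -= learning_rate * 2 * (w - 3)   # gradient of (w - 3)^2 is 2(w - 3)
    return (w - 3) ** 2

# Candidate values for each hyperparameter (chosen arbitrarily for the demo).
grid = {"learning_rate": [0.01, 0.1, 0.5], "steps": [10, 50]}

results = []
for lr, steps in product(grid["learning_rate"], grid["steps"]):
    results.append(((lr, steps), train_and_score(lr, steps)))

# Lower loss is better; ties go to the combination tried first.
best_params, best_loss = min(results, key=lambda r: r[1])
print(best_params, best_loss)
```

Random search follows the same pattern but samples combinations instead of enumerating all of them, which tends to scale better when there are many hyperparameters.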

Avoiding Overfitting

Overfitting happens when your model performs well on training data but poorly on new data. To combat this:

- Use Dropout Layers: Randomly deactivate neurons during training.

- Regularize the Model: Techniques like L2 regularization can prevent overfitting.

- Expand Data: If possible, get more diverse data to improve generalization.

Preventing overfitting ensures your model works effectively with new, unseen data.
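To show what L2 regularization does, the sketch below fits a single weight with and without a penalty on its size. The model (one weight, no bias), the data, and the penalty strength are assumptions chosen to keep the arithmetic visible; the closed-form solution comes from setting the derivative of mean((w*x - y)^2) + l2 * w^2 to zero.

```python
def fit_ridge_1d(xs, ys, l2=0.0):
    """Minimize mean((w*x - y)^2) + l2 * w^2 for a single weight w."""
    n = len(xs)
    sum_xy = sum(x * y for x, y in zip(xs, ys))
    sum_xx = sum(x * x for x in xs)
    # Setting the derivative to zero gives w * (sum_xx + n * l2) = sum_xy.
    return sum_xy / (sum_xx + n * l2)

xs = [1, 2, 3, 4]
ys = [2.1, 3.9, 6.2, 7.8]

w_plain = fit_ridge_1d(xs, ys)            # no regularization
w_reg = fit_ridge_1d(xs, ys, l2=1.0)      # penalty pulls the weight toward zero
print(w_plain, w_reg)
```

The regularized weight is smaller in magnitude; in larger models the same pressure discourages weights from growing just to fit noise in the training set.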

Deploying and Maintaining Your Model

Choosing a Deployment Platform

Once trained, your model is ready for deployment. Here are popular options:

- Flask or FastAPI: Lightweight web frameworks for simple APIs.

- AWS SageMaker: Amazon’s platform that makes model deployment easy.

- Google Cloud Vertex AI (the successor to Google AI Platform): Integrates with TensorFlow and offers scalability.

Choose a platform based on project scope, scalability needs, and budget.

Monitoring and Updating the Model

Once deployed, ongoing monitoring is essential. Regularly check:

- Performance Metrics: Accuracy, speed, and response times.

- Real-World Effectiveness: Make sure it’s achieving the intended goal.

- Data Drift: Over time, your data may evolve, which can impact performance.

Updating and retraining the model ensures it stays relevant and effective in real-world applications.

Securing Your Model

Security is crucial, especially if your model handles sensitive data. Here are a few tips:

- Use Secure APIs: Protect access to your model with secure APIs.

- Regular Updates: Update dependencies and address vulnerabilities.

- Data Encryption: Ensure sensitive data is encrypted both in transit and at rest.

Building a secure model protects both your project and your users.

Troubleshooting and Debugging Common Issues

Identifying Model Errors

When training an AI model, you may encounter errors, especially as you try different architectures and data configurations. Here’s how to tackle some common issues:

- High Error Rates: If the model’s error rate is consistently high, check your data quality and ensure you’re using a suitable model architecture for the task.

- Diverging Loss: If the loss function grows instead of decreasing, the learning rate might be too high. Lower it in increments and re-test.

- Vanishing/Exploding Gradients: If training seems to plateau or fluctuate wildly, especially in deep networks, consider using techniques like batch normalization or gradient clipping.

Recognizing these issues early can save time and improve your model’s accuracy and stability.
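The effect of a learning rate that is too high can be reproduced on a one-variable toy problem. The sketch below minimizes loss(w) = w^2 and is only an illustration: the function name, step count, and clip value are assumptions, and real networks behave less predictably.

```python
def gradient_descent(learning_rate, steps=20, clip=None):
    """Minimize loss(w) = w**2 from w = 1.0 and return the loss after each step."""
    w = 1.0
    losses = []
    for _ in range(steps):
        grad = 2 * w
        if clip is not None:
            # Gradient clipping: cap the gradient to the range [-clip, clip].
            grad = max(-clip, min(clip, grad))
        w -= learning_rate * grad
        losses.append(w ** 2)
    return losses

diverging = gradient_descent(learning_rate=1.1)          # loss grows every step
stable = gradient_descent(learning_rate=0.1)             # loss shrinks toward 0
clipped = gradient_descent(learning_rate=1.1, clip=0.5)  # loss stays bounded

print(diverging[-1], stable[-1], max(clipped))
```

In this toy case clipping keeps the loss bounded but does not make it converge, so lowering the learning rate is still the actual fix.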

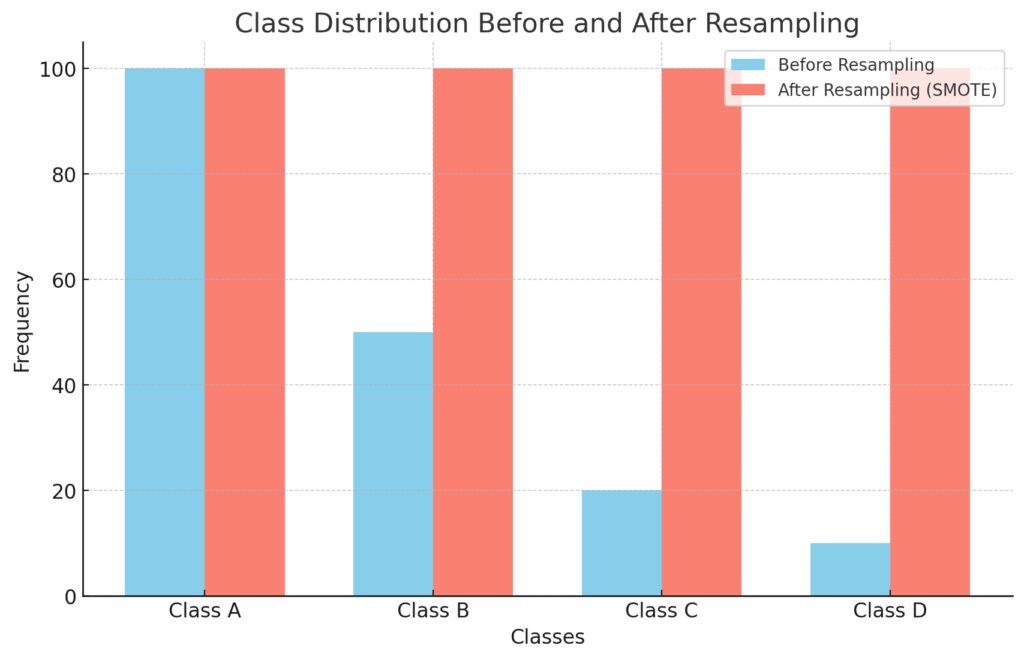

Addressing Data Imbalance

Imbalanced data occurs when one class significantly outweighs others (e.g., 90% “no” and 10% “yes” in a classification task). Here’s how to handle it:

- Resampling: You can oversample the minority class or undersample the majority class.

- Synthetic Data Generation: Using methods like SMOTE (Synthetic Minority Over-sampling Technique), you can generate new samples for the minority class.

- Use Weighted Loss: Apply a weight to each class, which tells the model to pay more attention to the underrepresented class.

Figure: Class distribution before and after resampling with SMOTE. Before resampling, the distribution is imbalanced, with Class A the most frequent and Class D the least; after resampling, all classes have equal frequency.

Balancing data improves model robustness and ensures it doesn’t favor one class too heavily.
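Two of the options above, resampling and weighted loss, can be sketched with the standard library. Note that this shows plain random oversampling (duplicating existing minority samples), not SMOTE, which synthesizes new points. The weighting formula n_samples / (n_classes * class_count) is one common heuristic, and the function names are made up for this example.

```python
import random
from collections import Counter

def oversample_minority(samples, labels, seed=0):
    """Randomly duplicate samples until every class matches the largest class."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_samples, out_labels = list(samples), list(labels)
    for cls, count in counts.items():
        pool = [s for s, l in zip(samples, labels) if l == cls]
        for _ in range(target - count):
            out_samples.append(rng.choice(pool))
            out_labels.append(cls)
    return out_samples, out_labels

def balanced_class_weights(labels):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

# 90% "no" and 10% "yes", as in the example above.
labels = ["no"] * 90 + ["yes"] * 10
samples = list(range(100))

new_samples, new_labels = oversample_minority(samples, labels)
weights = balanced_class_weights(labels)
print(Counter(new_labels), weights)
```

Resampling should be applied to the training set only, so that the test set still reflects the real class distribution.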

Managing Computational Constraints

Training large AI models requires significant computing power, which can be challenging for beginners. Here’s how to optimize without needing a supercomputer:

- Batch Processing: Train your model in small batches instead of feeding in all data at once. This reduces memory load.

- Use Pre-trained Models: Pre-trained models are a shortcut for many tasks and are available in frameworks like TensorFlow and PyTorch.

- Cloud Platforms: Services like Google Colab and AWS offer access to high-power GPUs and TPUs, often free or pay-as-you-go.

Optimizing resources is key for practical AI work, especially with limited hardware.
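Batch processing can be as simple as a generator that hands out one slice of the data at a time. The sketch below is a minimal version with an assumed helper name (`iter_batches`); deep learning frameworks ship their own data loaders that also handle shuffling and parallel loading.

```python
def iter_batches(data, batch_size):
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(data), batch_size):
        yield data[start:start + batch_size]

data = list(range(10))
batches = list(iter_batches(data, batch_size=4))
print(batches)

# Example use: compute a mean while only looking at one batch at a time.
total, count = 0, 0
for batch in iter_batches(data, batch_size=4):
    total += sum(batch)
    count += len(batch)
running_mean = total / count
print(running_mean)
```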

Expanding Your Model’s Capabilities

Exploring Transfer Learning

Transfer learning lets you build on a pre-trained model and fine-tune it for your task, saving time and resources. For instance, BERT (for NLP) and ResNet (for computer vision) models can be adapted to new datasets with minimal modifications. This approach is especially useful when:

- You have limited data.

- The new task is similar to the original model’s task.

- You want to reduce training time.

By leveraging pre-trained models, you can get up and running much faster with impressive results.

Experimenting with Ensemble Learning

In ensemble learning, you combine multiple models to improve performance. Common techniques include:

- Bagging: Combines models trained on different data samples, like in Random Forest.

- Boosting: Sequentially trains models, where each new model corrects previous errors, as in XGBoost.

- Stacking: Involves combining outputs from several models, using another model to make the final prediction.

Ensembles can often outperform individual models, especially in complex tasks.
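A small sketch of why combining models can help: below, three hypothetical classifiers each make one mistake on a different sample, and a majority vote corrects all of them. This shows only the voting step that bagging-style ensembles use to merge predictions; the predictions are hard-coded for illustration rather than produced by trained models.

```python
from collections import Counter

def majority_vote(predictions):
    """Combine per-model prediction lists by taking the most common label per sample."""
    combined = []
    for votes in zip(*predictions):
        combined.append(Counter(votes).most_common(1)[0][0])
    return combined

y_true  = [1, 0, 1, 1, 0, 1]
model_a = [1, 0, 0, 1, 0, 1]  # wrong on the third sample
model_b = [1, 1, 1, 1, 0, 1]  # wrong on the second sample
model_c = [1, 0, 1, 0, 0, 1]  # wrong on the fourth sample

ensemble = majority_vote([model_a, model_b, model_c])
print(ensemble)
```

The benefit depends on the models making different errors; three models that fail on the same samples would gain nothing from voting.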

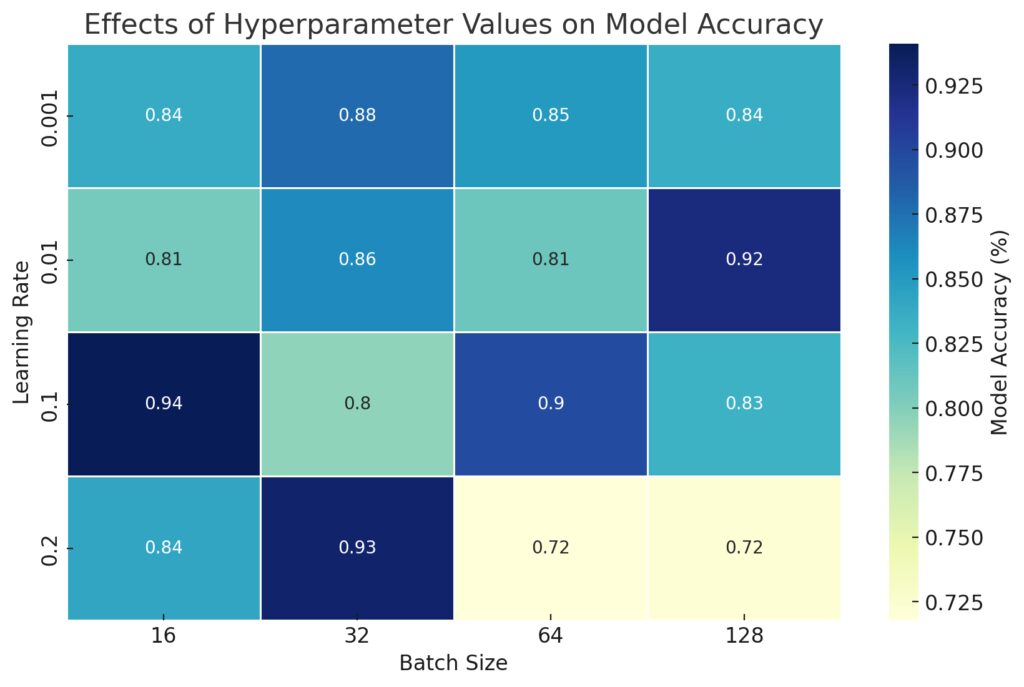

Fine-Tuning with Advanced Hyperparameter Tuning

Beyond basic tuning, advanced techniques help you refine your model’s performance. Bayesian Optimization and Genetic Algorithms can help you zero in on optimal settings without manual adjustments. AutoML tools, such as Google AutoML or H2O.ai, offer automated hyperparameter tuning, which is especially helpful for beginners.

Figure: Heatmap of model accuracy for combinations of learning rate (rows) and batch size (columns). Darker shades of blue indicate higher accuracy, and each cell is annotated with its accuracy value, showing how specific combinations influence model performance.

Fine-tuning helps squeeze the most performance out of your model and improves consistency across different datasets.

Getting Started with Real-World Applications

Building a Simple Chatbot

Chatbots are a great first project for beginners interested in natural language processing (NLP). Here’s how to start:

- Select an NLP Framework: Tools like NLTK or spaCy can handle text preprocessing.

- Define Responses: Use sequence-to-sequence models to create response patterns, or start with simple rule-based responses.

- Train and Deploy: Use your chatbot in a sandbox environment, testing with real-world phrases.

Chatbots introduce basic NLP, classification, and conversational AI skills, providing a solid base to grow from.
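The rule-based starting point mentioned above might look like the sketch below. The keywords, responses, and fallback text are invented for the example, and keyword matching is a deliberately simple stand-in for the preprocessing that NLTK or spaCy would provide.

```python
import re

# Each rule pairs trigger keywords with a canned response (all hypothetical).
RULES = [
    (("hello", "hi"), "Hello! How can I help you?"),
    (("price", "cost"), "Our plans start with a free tier."),
    (("bye", "goodbye"), "Goodbye!"),
]
FALLBACK = "Sorry, I didn't understand that."

def reply(message):
    """Return the response of the first rule whose keyword appears in the message."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    for keywords, response in RULES:
        if words & set(keywords):
            return response
    return FALLBACK

print(reply("Hi there!"))
print(reply("What does it cost?"))
print(reply("Tell me a joke"))
```

Testing with real-world phrases quickly reveals the limits of fixed rules, which is a natural point to move on to a trained model.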

Image Classification

If computer vision interests you, image classification is a great starting project:

- Gather a Dataset: Start with publicly available datasets like CIFAR-10 or MNIST.

- Use CNNs: Convolutional Neural Networks excel at processing images and learning visual patterns.

- Evaluate and Improve: Use data augmentation (e.g., rotating images, flipping horizontally) to improve performance.

With image classification, you’ll learn critical concepts in feature extraction and convolutional architectures.
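Data augmentation creates extra training examples by transforming existing images. As a minimal sketch, the functions below flip and rotate an image stored as a nested list of pixel values; the tiny 2x3 "image" is an assumption for readability, and image libraries offer the same operations for real data.

```python
def flip_horizontal(image):
    """Mirror each row of the image left to right."""
    return [row[::-1] for row in image]

def rotate_90_clockwise(image):
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]

image = [
    [1, 2, 3],
    [4, 5, 6],
]
flipped = flip_horizontal(image)
rotated = rotate_90_clockwise(image)
print(flipped)
print(rotated)
```

Only use transformations that keep the label valid: a flipped cat is still a cat, but a flipped digit 2 may no longer be a readable 2.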

Creating a Predictive Model

Predictive models are versatile and valuable in real-world applications, like forecasting sales or predicting health outcomes. To build one:

- Choose a Dataset: Gather historical data relevant to the outcome you want to predict.

- Pick an Algorithm: Regression models or decision trees work well for many predictive tasks.

- Test and Adjust: Fine-tune your model based on performance metrics like mean squared error (MSE) or root mean squared error (RMSE).

Predictive models give you a broad understanding of regression techniques and prepare you for more complex real-world problems.

Next Steps: Advancing Your AI Skills

Learning from Open-Source Projects

Studying open-source projects can be incredibly helpful. Platforms like GitHub host thousands of machine learning projects, often complete with code, datasets, and documentation. Reviewing these can help you:

- Understand real-world problem-solving approaches.

- Improve coding skills and understand best practices.

- Gain exposure to diverse projects and data types.

Browsing open-source projects accelerates learning and exposes you to practical applications.

Joining AI Communities

Becoming part of an AI community can help you stay updated and find support. Here are some popular options:

- Kaggle: Engage in competitions and join discussions with AI enthusiasts.

- Reddit Communities: Subreddits like r/MachineLearning offer valuable insights, discussions, and resources.

- AI Meetups: Many cities have AI meetups where you can connect with experts and attend workshops.

Networking and community engagement can provide motivation and guidance on your AI journey.

Building a Portfolio

As you complete projects, building a portfolio can help showcase your skills. Include:

- Detailed Documentation: Describe the problem, approach, and outcome of each project.

- Visualizations: Use charts or sample outputs to illustrate your model’s capabilities.

- GitHub or Personal Website: Keep everything accessible to potential employers or collaborators.

A strong portfolio demonstrates your growth, skills, and dedication to learning.

With this guide, you now have the foundational knowledge to start training your own AI models. Each step you take deepens your understanding and gets you closer to mastering machine learning. Whether you’re working on your first project or diving into advanced techniques, remember that every experience builds your expertise in this fascinating field.

FAQs

What is the minimum amount of data needed to train an AI model?

The amount of data required depends on the model type and the complexity of the problem. For simpler models (like linear regression), a few hundred examples can be enough. However, complex models, like deep learning algorithms, often require thousands or even millions of data points. When data is limited, techniques like data augmentation or transfer learning can help improve model performance.

Do I need a powerful computer to train AI models?

For basic models, you don’t need a high-end computer. Google Colab and Kaggle Notebooks offer free access to GPUs, which are powerful enough for most beginner projects. If you’re working with very large datasets or complex models, such as deep neural networks, using a cloud computing service or investing in a dedicated GPU setup may be beneficial.

How long does it take to train an AI model?

Training time varies widely based on factors like data size, model complexity, and available hardware. Simple models can train in seconds or minutes, while deep learning models on large datasets can take hours or even days. Using efficient batch sizes, adjusting the learning rate, and choosing appropriate architectures can help reduce training time.

Can I use pre-trained models, and how do they work?

Yes, pre-trained models are available and highly recommended for beginners. These models have already been trained on extensive datasets and can be fine-tuned for your specific task. Commonly used pre-trained models include VGG and ResNet for image recognition, or BERT and GPT for text-related tasks. Using pre-trained models allows you to get started quickly and saves on both time and computational resources.

How can I prevent my model from overfitting?

Overfitting happens when a model performs well on training data but poorly on new data. Techniques to prevent overfitting include using regularization (such as L2 regularization), dropout layers, and data augmentation. It’s also helpful to monitor model performance on a validation set and stop training once the validation accuracy starts to plateau or decrease, a technique known as early stopping.

What is the difference between supervised, unsupervised, and reinforcement learning?

- Supervised Learning: Uses labeled data, where the outcome is already known, to train models. It’s commonly used for classification and regression tasks.

- Unsupervised Learning: Works with unlabeled data to discover patterns or groupings within the data. Clustering and dimensionality reduction are examples.

- Reinforcement Learning: Involves an agent learning through rewards and penalties in an environment, often used in gaming and robotics.

Each type of learning has unique applications and is chosen based on the nature of the problem.

Are there any beginner-friendly datasets I can use?

Yes, many public datasets are perfect for beginners. Some examples include Iris (for classification), MNIST (for digit recognition), CIFAR-10 (for image classification), and Titanic (for survival prediction). You can find these and many more on Kaggle, UCI Machine Learning Repository, and Google Dataset Search. Working with these datasets helps you build a solid foundation in handling and understanding data.

What skills do I need to start training AI models?

Basic programming knowledge in Python and familiarity with libraries like NumPy and Pandas are essential for data handling. For model building, skills in machine learning frameworks like TensorFlow, PyTorch, or Scikit-Learn are helpful. As you progress, knowledge of data preprocessing, model evaluation, and hyperparameter tuning will make you more proficient.

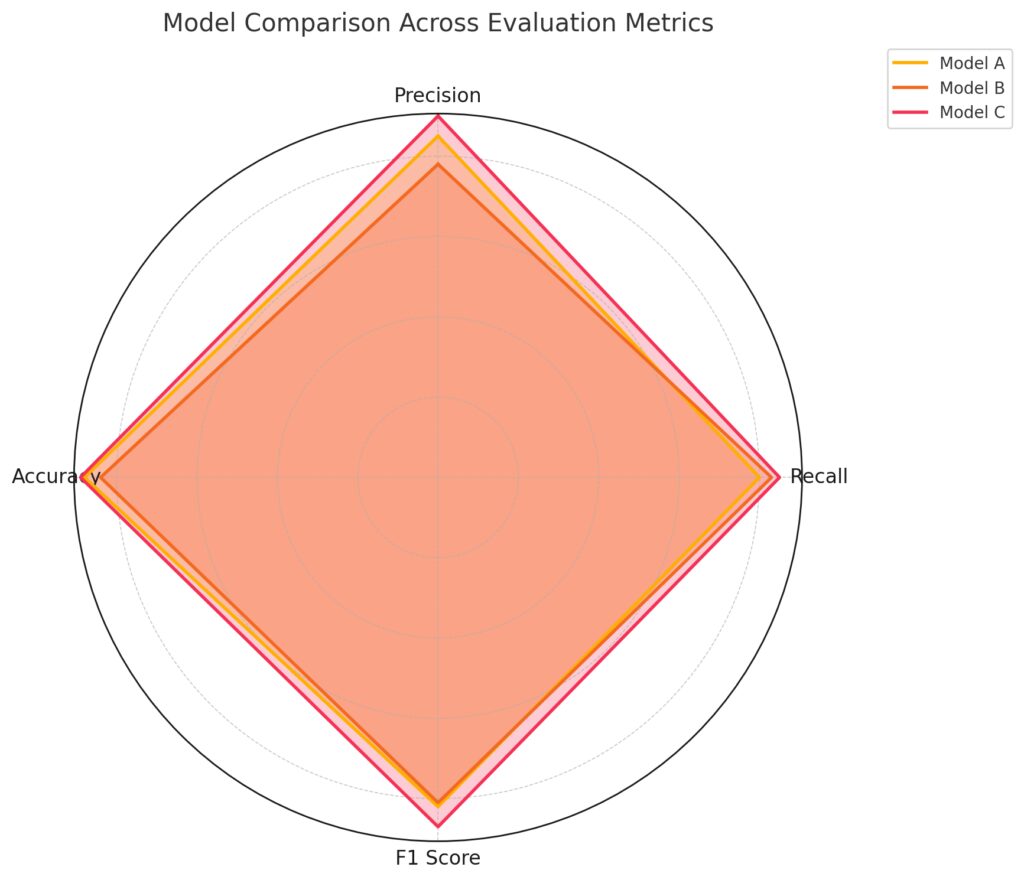

Figure: Model evaluation metrics. Comparison of precision, recall, F1-score, and accuracy across multiple models.

How do I decide which machine learning algorithm to use?

Choosing the right algorithm depends on your task, data, and goals. For example:

- Linear Regression works well for predicting continuous values (like price or temperature).

- Decision Trees or Random Forests are good for classification tasks with structured data.

- Convolutional Neural Networks (CNNs) are ideal for image data.

- Recurrent Neural Networks (RNNs) or Transformers are popular for text and sequence data.

A good rule of thumb is to start with simpler models to establish a baseline and then experiment with more complex models as needed.

What is the purpose of splitting data into training, validation, and test sets?

Splitting data helps you detect overfitting and check that the model can generalize to new data. The training set teaches the model, the validation set helps fine-tune hyperparameters and track performance during training, and the test set provides an unbiased evaluation of the model’s accuracy on new data. A common split is 70% training, 15% validation, and 15% test, though this can vary based on the dataset size.

What are hyperparameters, and why are they important?

Hyperparameters are settings that control how a model learns. Examples include the learning rate, batch size, and number of layers in a neural network. Unlike the parameters a model learns from data (such as its weights), hyperparameters are set before training and aren’t adjusted by the training process itself. Optimizing hyperparameters can significantly enhance the accuracy and efficiency of your model.

What is the difference between machine learning and deep learning?

Machine learning refers to algorithms that learn patterns from data without being explicitly programmed for every rule. It includes various methods like linear regression, decision trees, and clustering. Deep learning is a subset of machine learning that uses neural networks with multiple layers (deep neural networks) to model complex patterns, particularly effective for image, audio, and text data. While all deep learning models are machine learning models, not all machine learning models are deep learning.

How do I measure the performance of my model?

Performance metrics depend on the task:

- Accuracy is common for classification tasks but may be misleading if the data is imbalanced.

- Precision and Recall are more informative in cases with imbalanced classes.

- Mean Squared Error (MSE) is used in regression to show how close predictions are to actual values.

- F1 Score combines precision and recall, providing a single metric that balances both.

Selecting appropriate metrics gives you a better understanding of model effectiveness for the specific problem.
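The F1 score is the harmonic mean of precision and recall, so it stays low unless both are reasonably high. A short sketch, with example precision and recall values chosen only for illustration:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

balanced = f1_score(0.75, 0.75)   # equal precision and recall
lopsided = f1_score(1.0, 0.1)     # perfect precision, but most positives missed
nothing_found = f1_score(0.0, 0.0)
print(balanced, lopsided, nothing_found)
```

This is why F1 is a useful companion to accuracy on imbalanced data: a model that always predicts the majority class in a 90/10 dataset reaches 90% accuracy, yet its recall for the minority class, and therefore its F1 score, is zero.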

Can I use AI models without coding?

Yes, some platforms offer no-code or low-code solutions for building models. Tools like Teachable Machine by Google, Lobe, and Microsoft Azure ML allow you to create, train, and deploy models with minimal coding experience. These platforms are particularly useful for beginners and non-programmers who want to experiment with AI.

How can I make my model faster without losing accuracy?

To improve model speed, consider these options:

- Reduce Model Complexity: Use fewer layers or parameters, especially if you’re working with limited data.

- Optimize Hyperparameters: Fine-tuning hyperparameters like batch size and learning rate can help.

- Use Transfer Learning: Starting with a pre-trained model and fine-tuning it saves training time and can improve accuracy.

- Hardware Acceleration: Utilize GPUs or cloud platforms with hardware accelerators to speed up computations.

Finding the right balance between speed and accuracy is often a process of trial and error.

Are there risks involved in training and deploying AI models?

Yes, AI models come with potential risks, including data privacy concerns, bias in training data, and security vulnerabilities. For instance, if a model is trained on biased data, it may produce unfair outcomes. It’s also important to secure your model’s API and manage access to prevent unauthorized use. Monitoring model performance post-deployment and conducting regular audits can mitigate some of these risks.

What is data preprocessing, and why is it necessary?

Data preprocessing transforms raw data into a clean format suitable for model training. Common steps include:

- Cleaning: Removing duplicates, handling missing values, and fixing errors in the dataset.

- Normalization: Scaling features so they have similar ranges (like rescaling pixel values between 0 and 1).

- Encoding Categorical Data: Converting text categories (e.g., “red,” “blue,” “green”) into numerical values the model can process.

- Feature Engineering: Creating new features or modifying existing ones to help the model learn patterns better.

Proper preprocessing makes the data easier for the model to understand, leading to more accurate predictions.
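Two of these steps, normalization and categorical encoding, are easy to sketch by hand. The helper names below are made up for the example, min-max scaling is only one of several normalization schemes, and Scikit-Learn provides equivalent preprocessing utilities for real projects.

```python
def min_max_scale(values):
    """Rescale values linearly so the smallest becomes 0.0 and the largest 1.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]   # avoid dividing by zero on constant input
    return [(v - lo) / (hi - lo) for v in values]

def one_hot_encode(categories):
    """Return the sorted vocabulary and one 0/1 vector per input category."""
    vocab = sorted(set(categories))
    index = {c: i for i, c in enumerate(vocab)}
    vectors = [
        [1 if index[c] == i else 0 for i in range(len(vocab))] for c in categories
    ]
    return vocab, vectors

pixels = [0, 64, 128, 255]
scaled = min_max_scale(pixels)

colors = ["red", "blue", "green", "red"]
vocab, encoded = one_hot_encode(colors)
print(scaled)
print(vocab, encoded)
```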

What’s the difference between feature selection and feature engineering?

Feature selection involves choosing the most relevant variables (features) from your dataset to simplify the model and improve performance. It removes irrelevant or redundant data that could harm accuracy.

Feature engineering, on the other hand, is about creating new features or transforming existing ones to help the model find patterns more easily. For example, in a time-based dataset, you could create a “day of the week” feature to help a model learn weekly patterns.

Both techniques are essential for improving model efficiency and performance.
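The two ideas can be shown side by side on a tiny table of records. In this sketch the field names and values are hypothetical: the first function engineers the "day of the week" feature described above, and the second performs a very basic form of feature selection by dropping columns that never vary.

```python
from datetime import date

def add_day_of_week(rows):
    """Feature engineering: add 'day_of_week' (0 = Monday ... 6 = Sunday)."""
    return [
        {**r, "day_of_week": date.fromisoformat(r["date"]).weekday()} for r in rows
    ]

def drop_constant_features(rows):
    """Feature selection: keep only columns that take more than one value."""
    keep = [k for k in rows[0] if len({r[k] for r in rows}) > 1]
    return [{k: r[k] for k in keep} for r in rows]

rows = [
    {"date": "2024-01-01", "store": "A", "sales": 120},
    {"date": "2024-01-06", "store": "A", "sales": 310},
]
engineered = add_day_of_week(rows)
selected = drop_constant_features(engineered)
print(selected)
```

Real feature selection usually relies on statistical tests or model-based importance scores; removing constant columns is just the simplest case.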

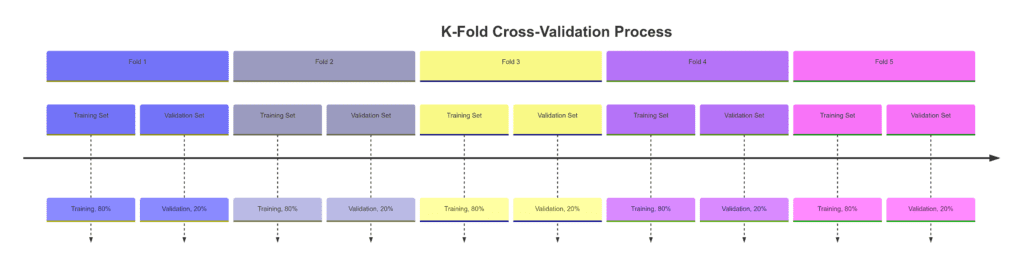

Why is cross-validation used, and how does it work?

Cross-validation is a technique for assessing model performance and detecting overfitting. In k-fold cross-validation, the dataset is divided into k subsets or “folds.” The model trains on k-1 folds and is validated on the remaining fold. This process repeats k times, with each fold used as a validation set once.

Cross-validation provides a more reliable estimate of model performance, because the model is evaluated on several different subsets of data and the result depends less on one lucky or unlucky split.

Figure: Timeline of data rotation across cross-validation folds. In each fold, 80% of the dataset is used for training and 20% is set aside for validation; the validation portion rotates so that each part of the dataset is validated once.
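The fold rotation can be sketched as a generator of index lists. This minimal version assumes contiguous, unshuffled folds and uses a made-up function name; Scikit-Learn offers a fuller implementation with shuffling and stratification.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, validation_indices) for each of k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size

folds = list(k_fold_indices(10, k=5))
for train_idx, val_idx in folds:
    print("train:", train_idx, "validate:", val_idx)
```

With 10 samples and k = 5, each fold trains on 8 samples and validates on 2, matching the 80/20 proportion described above. The final score is usually the average of the k validation scores.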

What is model interpretability, and why is it important?

Model interpretability refers to the ability to understand and explain how a model makes decisions. It’s particularly important in industries like healthcare and finance, where transparency and accountability are essential. Interpretable models allow:

- Understanding feature importance: Knowing which features impact the model’s predictions most.

- Building trust: Especially in high-stakes applications, stakeholders need to trust the model’s outcomes.

- Improving models: By seeing how the model uses different features, you can refine and improve it.

Tools like SHAP and LIME help interpret complex models, providing insights into model decision-making.

What are some best practices for managing large datasets?

Handling large datasets can be challenging. Here are a few tips:

- Sampling: Use a representative sample of the dataset for faster experimentation. Once the model is optimized, apply it to the full dataset.

- Data Compression: Compress data formats to save memory without sacrificing too much quality.

- Batch Processing: Instead of processing all data at once, work with smaller batches to reduce memory usage.

- Use Distributed Computing: Frameworks like Apache Spark and Dask can handle large datasets across multiple machines.

Efficiently managing large datasets improves processing speed and model training efficiency.

How can I monitor a deployed AI model?

Monitoring a deployed model is essential for ensuring ongoing performance. Key areas to track include:

- Accuracy and Error Rates: Regularly check performance metrics on new data to catch any declines in accuracy.

- Data Drift: Data may evolve over time, potentially affecting the model’s performance. Monitor for changes in data patterns.

- Latency: Keep an eye on the time it takes for the model to process new inputs and return results.

- Model Retraining: Based on performance, you may need to periodically retrain the model with updated data.

Using tools like MLflow or Amazon SageMaker can help automate monitoring and retraining workflows.
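A very rough data drift check compares recent inputs with a reference sample from training time. The sketch below measures how far the mean of one feature has moved, in units of the reference standard deviation. The threshold of 2.0, the sample values, and the function name are assumptions for illustration; this is a crude heuristic rather than a proper statistical test.

```python
from statistics import mean, stdev

def mean_shift_in_std(reference, recent):
    """Distance between the two means, measured in reference standard deviations."""
    return abs(mean(recent) - mean(reference)) / stdev(reference)

DRIFT_THRESHOLD = 2.0  # hypothetical alert level; tune for your own data

reference = [10, 12, 11, 9, 10, 11, 10, 12]   # feature values seen in training
recent_ok = [11, 10, 10, 12]                  # similar to the reference
recent_drifted = [15, 16, 14, 17]             # clearly shifted upward

ok_shift = mean_shift_in_std(reference, recent_ok)
drifted_shift = mean_shift_in_std(reference, recent_drifted)
print(ok_shift > DRIFT_THRESHOLD, drifted_shift > DRIFT_THRESHOLD)
```

A check like this could run on a schedule and trigger a closer look or a retraining job when the threshold is exceeded.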

How does reinforcement learning differ from other machine learning types?

In reinforcement learning (RL), an agent learns by interacting with an environment and receiving feedback through rewards or penalties. Unlike supervised learning, where the model learns from labeled data, RL focuses on learning by trial and error. This approach is ideal for tasks where sequential decisions are necessary, such as playing a game, robotics, or optimizing a process.

Reinforcement learning is a powerful approach but requires careful design and large amounts of training to find optimal strategies.

What should I do if my model’s performance suddenly drops?

A sudden performance drop could be due to several factors:

- Data Drift: Changes in the underlying data or features can affect model predictions.

- Model Staleness: If the model hasn’t been retrained in a while, new data patterns may not be reflected.

- Software Updates: Updates in libraries or dependencies can sometimes alter model behavior.

- Input Errors: Ensure the data preprocessing pipeline is functioning correctly, as an error here can affect model performance.

To address the drop, check the data pipeline, test the model on recent data, and consider retraining with updated data.

Resources

Tutorials and Documentation

- TensorFlow Documentation

- PyTorch Documentation

- Kaggle Learn

- Fast.ai Courses

Datasets

- Kaggle Datasets

- UCI Machine Learning Repository

- Google Dataset Search

Model and Code Repositories

- GitHub – Machine Learning Projects

- Hugging Face Model Hub

- Papers with Code

Model Deployment and Cloud Platforms

- Google Colab

- AWS SageMaker

- Microsoft Azure Machine Learning