What is Backpropagation?

Backpropagation is the backbone of most modern deep learning algorithms. It computes how much each weight contributed to the prediction error by propagating that error backward through the layers, so that an optimizer can adjust the weights to minimize the loss.

Interactive backpropagation elevates this process by enabling real-time visualization of these adjustments, providing insights into how models learn.

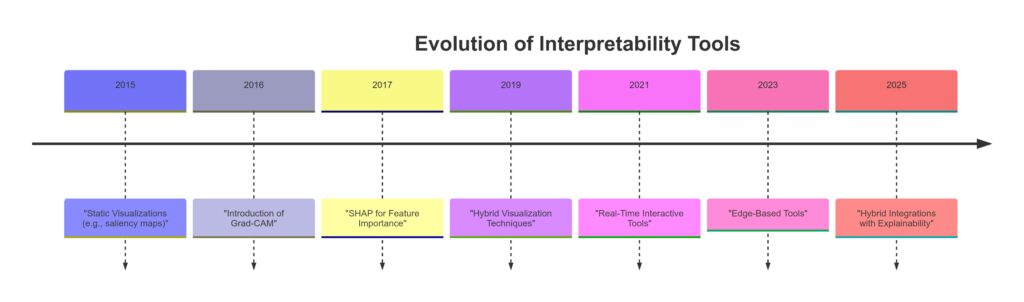

Evolution into Interactive Tools

The move from static to interactive tools has revolutionized how researchers and developers interpret neural networks. Real-time visualization allows users to see inside the “black box,” making AI more accessible and interpretable.

Why Real-Time Visualization Is Critical

Deep learning models are growing in complexity. Without interactive backpropagation, understanding model behavior becomes daunting. Real-time tools:

- Enhance transparency.

- Enable rapid debugging.

- Boost confidence in AI systems.

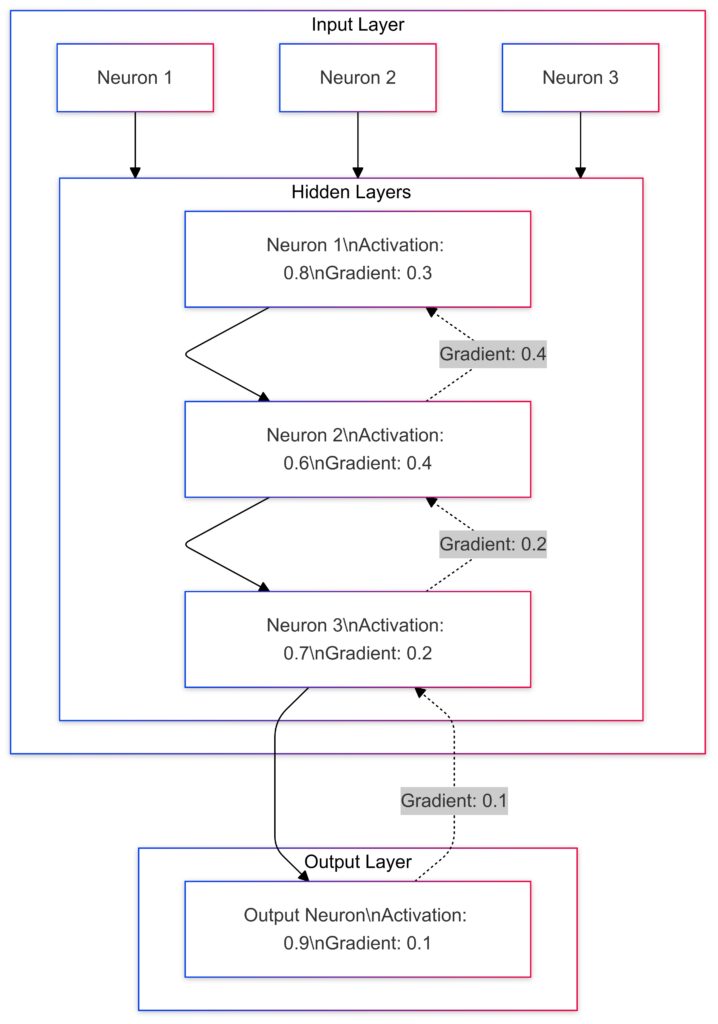

Core Concepts Behind Interactive Backpropagation

A typical interactive view of a network annotates:

- Hidden layers: Neurons shown with their activations and gradients.

- Output layer: The final neuron, shown with its activation and gradient.

- Gradient flow: Arrows point backward to show how gradients flow during backpropagation. Gradient magnitudes appear next to the arrows, with contrasting colors indicating their size.

Gradient Descent and Backpropagation Basics

At its core, backpropagation applies the chain rule to compute the gradient of the loss with respect to every weight. Gradients flow backward from the loss function to earlier layers, and gradient descent uses them to update the weights. Repeating this process lets the network learn features step by step.

Key terms:

- Learning rate: The step size that scales each weight update.

- Loss function: Measures prediction error.
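
To make these mechanics concrete, here is a minimal sketch of backpropagation and gradient descent on a tiny network with one input, one hidden tanh unit, and one linear output. The network shape, starting weights, training example, and learning rate are all assumptions chosen for illustration; a real model would use a framework's automatic differentiation rather than hand-written derivatives.

```python
import math

def forward(w1, w2, x):
    """Forward pass of a tiny 1-1-1 network: x -> tanh(w1 * x) -> w2 * h."""
    h = math.tanh(w1 * x)   # hidden activation
    y_hat = w2 * h          # linear output
    return h, y_hat

def loss_fn(y_hat, y):
    """Squared-error loss for a single example."""
    return 0.5 * (y_hat - y) ** 2

def backward(w1, w2, x, y):
    """Backpropagation: apply the chain rule from the loss back to each weight."""
    h, y_hat = forward(w1, w2, x)
    d_yhat = y_hat - y                # dL/dy_hat
    d_w2 = d_yhat * h                 # dL/dw2
    d_h = d_yhat * w2                 # gradient flowing back into the hidden unit
    d_w1 = d_h * (1.0 - h * h) * x    # tanh'(z) = 1 - tanh(z)^2
    return d_w1, d_w2

def train(w1=0.5, w2=-0.3, x=1.5, y=0.8, learning_rate=0.1, steps=200):
    """Plain gradient descent; returns final weights and the loss at every step."""
    history = []
    for _ in range(steps):
        _, y_hat = forward(w1, w2, x)
        history.append(loss_fn(y_hat, y))
        d_w1, d_w2 = backward(w1, w2, x, y)
        w1 -= learning_rate * d_w1    # the learning rate scales each update
        w2 -= learning_rate * d_w2
    return w1, w2, history

w1, w2, history = train()
print(f"first loss: {history[0]:.4f}, last loss: {history[-1]:.6f}")
```

The `history` list is the kind of signal an interactive tool would plot live: one loss value per update step.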

Interactive tools overlay these mechanics with visual feedback, illustrating which parts of the data drive the network’s decisions.

Layer-Wise Relevance Propagation (LRP)

LRP takes interpretability a step further by highlighting relevant features in input data. Instead of reporting raw gradients, LRP redistributes the model’s output score backward through the layers as relevance scores, helping identify:

- Which pixels in an image contributed most to predictions.

- How critical specific inputs are in classification tasks.
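
As a rough illustration of how relevance is redistributed, the sketch below applies the LRP epsilon rule to a small bias-free ReLU network. The weights and input are made up for the example, and the helper names (`lrp_linear`, `linear`, `relu`) are hypothetical rather than taken from any library. The point to notice is conservation: the input relevances add up (almost exactly) to the output score.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def linear(activations, weights):
    """weights[j][k] connects input j to output k (no bias in this sketch)."""
    n_out = len(weights[0])
    return [sum(a * weights[j][k] for j, a in enumerate(activations))
            for k in range(n_out)]

def lrp_linear(activations, weights, relevance_out, eps=1e-9):
    """Redistribute relevance from a layer's outputs to its inputs (LRP epsilon rule)."""
    z = linear(activations, weights)
    relevance_in = [0.0] * len(activations)
    for k, r_k in enumerate(relevance_out):
        # The small stabiliser keeps the division well-defined when z[k] is near zero
        denom = z[k] + (eps if z[k] >= 0 else -eps)
        for j, a_j in enumerate(activations):
            relevance_in[j] += a_j * weights[j][k] / denom * r_k
    return relevance_in

# Hypothetical 3-2-1 network
x = [1.0, 2.0, 0.5]
W1 = [[0.5, -1.0], [0.25, 0.5], [-1.0, 1.0]]
W2 = [[1.0], [2.0]]

hidden = relu(linear(x, W1))
output = linear(hidden, W2)

# Start with the output score as the total relevance and walk backward
r_hidden = lrp_linear(hidden, W2, output)
r_input = lrp_linear(x, W1, r_hidden)

print("output score:", output[0])
print("input relevances:", [round(r, 6) for r in r_input])
print("sum of relevances:", round(sum(r_input), 6))
```

Negative relevance marks inputs that argued against the prediction, which is something a plain gradient magnitude would not show.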

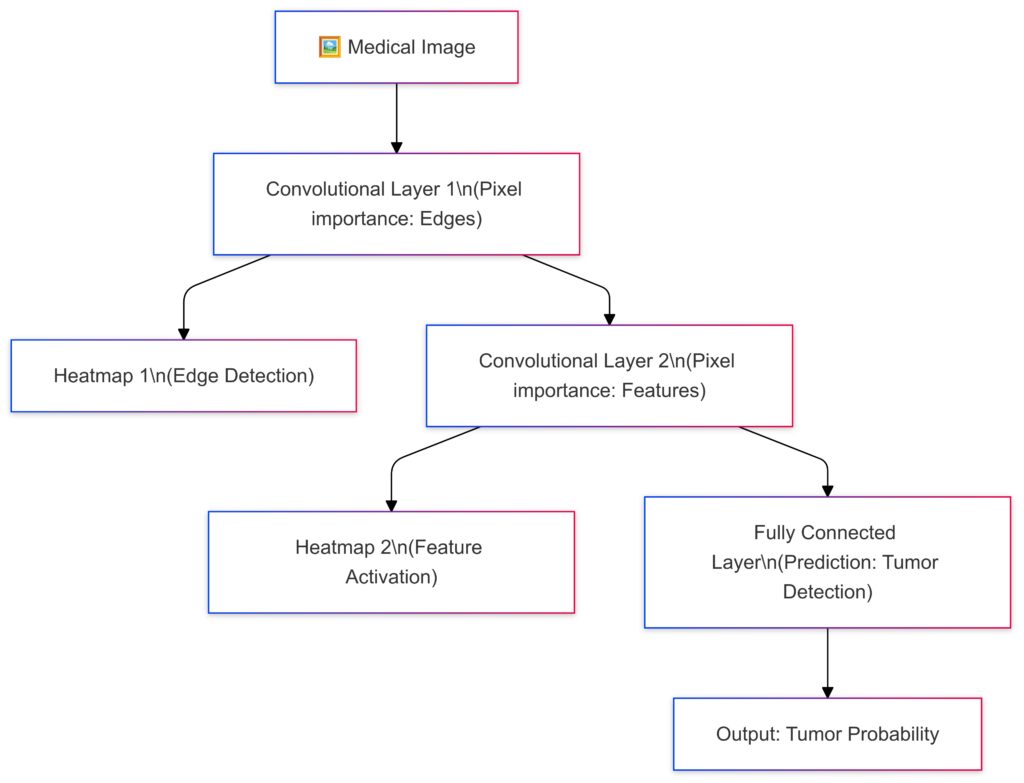

Activation Visualization and Its Significance

Activations represent how layers process information. Real-time visualization of activations helps users:

- Spot dead neurons or ineffective layers.

- Understand feature extraction at different layers.

- Optimize architecture for specific tasks.

For example, convolutional layers might show which edges or textures the network focuses on, offering insight into model comprehension.
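
Spotting dead neurons can be sketched in a few lines: record a layer's ReLU activations over a batch and flag units that never fire. The layer weights, biases, and random batch below are assumptions for illustration, and the function names are hypothetical.

```python
import random

def relu_layer(batch, weights, biases):
    """Forward pass of one ReLU layer; returns activations[sample][neuron]."""
    return [
        [max(0.0, sum(x_i * w for x_i, w in zip(x, weights[j])) + biases[j])
         for j in range(len(biases))]
        for x in batch
    ]

def activation_stats(activations):
    """Fraction of samples on which each neuron fires (output > 0)."""
    n_samples = len(activations)
    n_neurons = len(activations[0])
    return [sum(1 for row in activations if row[j] > 0.0) / n_samples
            for j in range(n_neurons)]

def dead_neurons(activations):
    """Indices of neurons that never fire on this batch."""
    return [j for j, frac in enumerate(activation_stats(activations)) if frac == 0.0]

rng = random.Random(0)
batch = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(64)]
weights = [[0.5, -0.2, 0.1, 0.3], [1.0, 1.0, -1.0, 0.5], [0.2, 0.2, 0.2, 0.2]]
biases = [0.1, 0.0, -5.0]  # neuron 2's bias is so negative it cannot fire on these inputs

acts = relu_layer(batch, weights, biases)
print("fraction of samples each neuron fires on:", activation_stats(acts))
print("dead neurons:", dead_neurons(acts))
```

A neuron that is silent on one batch is only a candidate; checking several batches before pruning or re-initialising it is the safer choice.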

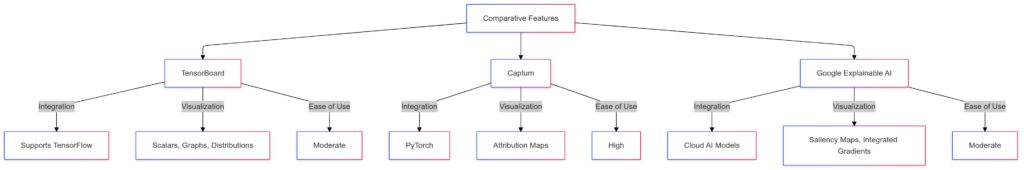

Popular Tools for Real-Time Visualization

Open-Source Frameworks

Several open-source tools simplify interactive backpropagation:

- TensorBoard: Part of TensorFlow, it provides detailed graphs and activation maps.

- Netron: A cross-platform tool for inspecting neural network architectures.

- Captum: PyTorch’s library for model interpretability.

Each framework allows integration with different models, offering varying degrees of customization.

Proprietary Solutions

Beyond open-source tools, proprietary options like Google’s Explainable AI Platform offer advanced visualization features. (DeepDream, by contrast, is an open-source Google project.) Proprietary tools often cater to enterprise-level projects, providing:

- Scalable processing.

- Intuitive dashboards.

Features to Look For in Visualization Tools

When choosing a tool, prioritize:

- Ease of integration: Works seamlessly with your frameworks.

- Real-time capabilities: Supports live updates during training.

- Customizable views: Tailors outputs to specific layers or inputs.

Applications of Interactive Backpropagation

A typical example is a tumor-detection CNN inspected layer by layer:

- Convolutional layer 1: Focuses on edge detection; a heatmap highlights edges contributing to tumor detection.

- Convolutional layer 2: Identifies higher-level features; the heatmap shows activated regions linked to those features.

- Fully connected layer: Processes feature maps to make the tumor prediction.

- Output: Final prediction with tumor probability.

Neural Network Debugging

Interactive backpropagation transforms debugging by:

- Exposing ineffective layers.

- Highlighting vanishing/exploding gradients.

- Identifying overfitting in real time.

This is especially useful for complex architectures like transformers or GANs.
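
To see why vanishing and exploding gradients are easy to flag once gradients are tracked per layer, consider this simplified sketch: a chain of scalar layers, each with one weight. The thresholds in `flag_gradients` and the 20-layer setup are arbitrary assumptions, and real networks would use per-layer gradient norms instead of single scalars.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_gradient_magnitudes(weights, x, activation="sigmoid"):
    """Backpropagate through a chain of scalar layers.

    Returns |d output / d a_l| for the input a_l of every layer,
    ordered from the first layer to the last.
    """
    # Forward pass, caching the input of every layer
    inputs, a = [], x
    for w in weights:
        inputs.append(a)
        z = w * a
        a = sigmoid(z) if activation == "sigmoid" else z
    # Backward pass, starting from d output / d output = 1
    grad, magnitudes = 1.0, []
    for w, a_in in zip(reversed(weights), reversed(inputs)):
        if activation == "sigmoid":
            s = sigmoid(w * a_in)
            local = s * (1.0 - s) * w   # chain rule through sigmoid(w * a_in)
        else:
            local = w                   # identity activation
        grad *= local
        magnitudes.append(abs(grad))
    return list(reversed(magnitudes))

def flag_gradients(magnitudes, low=1e-6, high=1e3):
    """Label each layer so a dashboard could colour it."""
    return ["vanishing" if m < low else "exploding" if m > high else "ok"
            for m in magnitudes]

sigmoid_chain = layer_gradient_magnitudes([1.0] * 20, x=0.5)
linear_chain = layer_gradient_magnitudes([2.0] * 20, x=0.5, activation="linear")

print("sigmoid chain, first layer:", sigmoid_chain[0], flag_gradients(sigmoid_chain)[0])
print("linear chain, first layer:", linear_chain[0], flag_gradients(linear_chain)[0])
```

Because the sigmoid derivative never exceeds 0.25, the gradient reaching the first layer shrinks geometrically with depth; with weights of 2 and no squashing, it grows geometrically instead.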

Enhancing Interpretability in AI

For ethical AI, understanding decisions is critical. Interactive visualization tools:

- Make neural networks less opaque.

- Build trust by explaining how models weigh inputs.

- Help regulatory bodies assess AI fairness.

Accelerating Research and Innovation

Faster, clearer insights streamline the experimentation process. Researchers can:

- Test hypotheses in real time.

- Improve architectures without trial-and-error guesswork.

- Collaborate more effectively by visualizing key features and anomalies.

Challenges and Future Trends

Computational Demands

Interactive backpropagation requires significant computational resources. Real-time visualization involves:

- Storing and processing intermediate activations.

- Calculating gradients on the fly for large models.

For high-resolution images or massive datasets, this can lead to bottlenecks. Developers often need to:

- Use optimized hardware, such as GPUs or TPUs.

- Implement layer-wise or batch-wise visualization to manage costs.

Scalability Concerns

As neural networks scale, their complexity poses challenges:

- Deep architectures (e.g., large transformers such as GPT-style models) make visualizing every layer impractical.

- Dynamic models require flexible tools to handle varying inputs or architectures.

Potential solutions include:

- Aggregating data into summary visualizations.

- Selecting key layers or operations for detailed analysis.
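
Both ideas can be sketched together: collapse each layer's gradients into a couple of summary numbers, then pick only the most active layers for a detailed view. The layer names and gradient values here are invented for the example, and ranking by mean absolute gradient is just one possible selection criterion.

```python
from statistics import mean

def summarize_layers(layer_gradients):
    """Collapse each layer's gradient list into a few summary numbers."""
    return {
        name: {
            "mean_abs": mean(abs(g) for g in grads),
            "max_abs": max(abs(g) for g in grads),
        }
        for name, grads in layer_gradients.items()
    }

def pick_layers_for_detail(summary, k=2):
    """Choose the k layers with the largest mean |gradient| for a detailed view."""
    return sorted(summary, key=lambda name: summary[name]["mean_abs"], reverse=True)[:k]

# Hypothetical gradients captured from three layers during one training step
layer_gradients = {
    "conv1": [0.001, -0.002, 0.0015],
    "conv2": [0.2, -0.4, 0.3],
    "fc": [1.5, -0.5, 1.0],
}

summary = summarize_layers(layer_gradients)
selected = pick_layers_for_detail(summary, k=2)
print(summary)
print("layers to inspect in detail:", selected)
```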

The Future of Interactive AI Explainability Tools

The demand for interpretable AI is driving innovation in backpropagation tools. Emerging trends include:

- Integration with AutoML: Automating interpretability for non-expert users.

- Hybrid visualization methods: Combining LRP, Grad-CAM, and SHAP for richer insights.

- Cloud-based visualization services: Offloading computations to scalable cloud environments.

Bridging Accessibility Gaps

For broader adoption, tools must balance power with simplicity. Intuitive GUIs, pre-configured settings, and low-code APIs will help democratize advanced visualization capabilities.

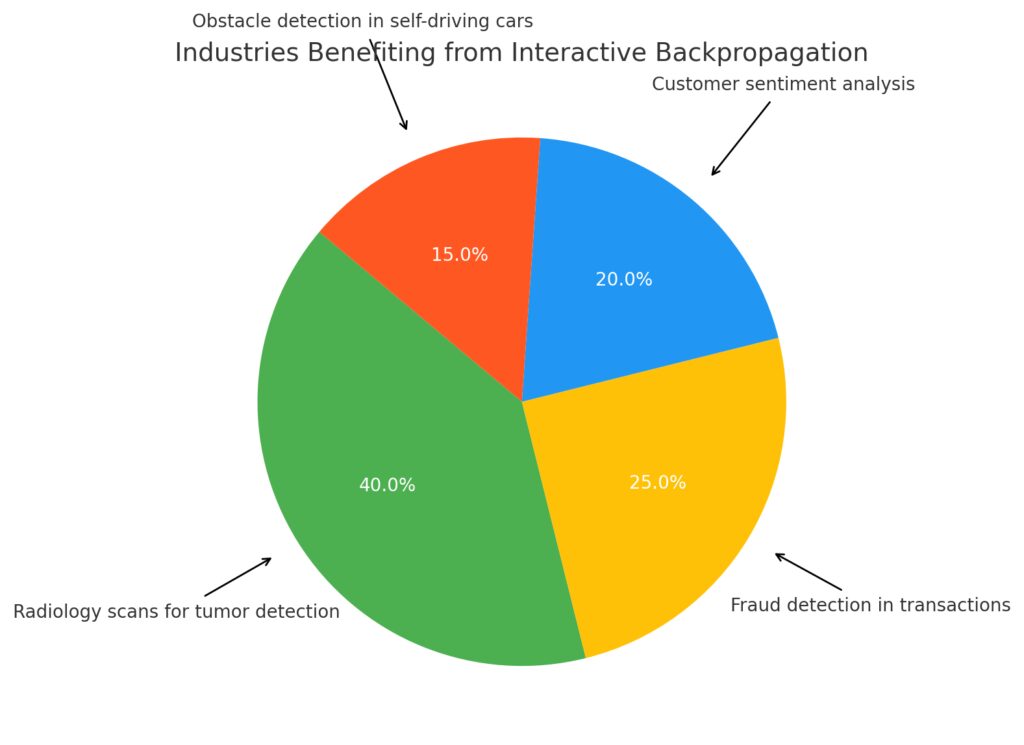

Interdisciplinary Integration

In fields like medicine and finance, interactive backpropagation is moving beyond debugging. It’s being tailored to specific applications, such as:

- Highlighting tumors in radiology scans.

- Tracing anomalies in financial fraud detection.

This cross-domain application promises a future where AI models not only perform but explain their reasoning in domain-specific contexts.

Innovations Driving Interactive Backpropagation Forward

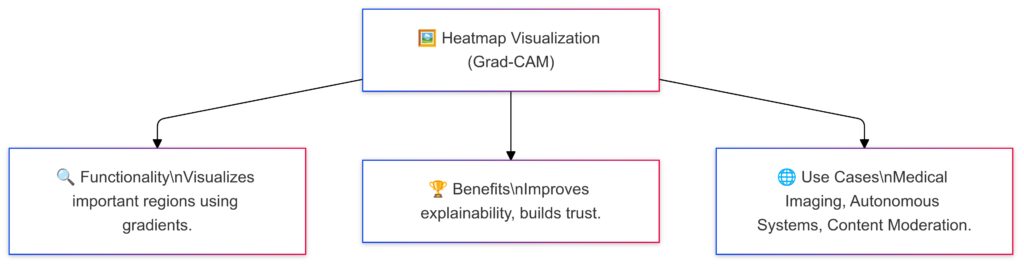

Hybrid Visualization Techniques

The fusion of multiple visualization strategies is reshaping interactive backpropagation. Methods like Grad-CAM, SHAP, and Integrated Gradients are being combined to:

- Provide multi-layered insights.

- Highlight both low-level features and high-level decision paths.

For instance, Grad-CAM can pinpoint regions in an image that influence a decision, while SHAP quantifies the importance of individual features.
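
The idea behind SHAP's feature importances is the Shapley value, which can be computed exactly for a handful of features. The sketch below brute-forces it by averaging each feature's marginal contribution over every feature ordering. The scoring function, input, and all-zero baseline are assumptions for illustration; this is not the SHAP library's API, which relies on faster approximations for realistic models.

```python
from itertools import permutations

def shapley_values(model, x, baseline):
    """Exact Shapley values: average marginal contribution over all feature orderings.

    Features that have not been 'revealed' yet keep their baseline value.
    """
    n = len(x)
    phi = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_score = model(current)
        for i in order:
            current[i] = x[i]                  # reveal feature i
            score = model(current)
            phi[i] += score - previous_score   # marginal contribution of feature i
            previous_score = score
    return [p / len(orderings) for p in phi]

def model(features):
    """Hypothetical scoring function with an interaction between features 0 and 1."""
    return 2.0 * features[0] + features[0] * features[1] + 0.5 * features[2]

x = [1.0, 2.0, 4.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)

print("Shapley values:", phi)
print("sum of values:", sum(phi), "model(x) - model(baseline):", model(x) - model(baseline))
```

The values always sum to the difference between the prediction and the baseline prediction, and the interaction term is split evenly between the two features involved. The cost grows factorially with the number of features, which is why practical tools approximate.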

Neuro-symbolic Integration

Emerging tools incorporate symbolic reasoning into neural networks, making their logic more interpretable. By integrating symbolic logic:

- Models can justify decisions in human-readable formats.

- Complex decisions become transparent and explainable.

Edge Computing and Lightweight Tools

With the rise of edge AI, lightweight versions of interactive visualization tools are being developed. These tools prioritize efficiency:

- Optimized for deployment on IoT devices.

- Capable of running real-time analysis without heavy computational loads.

This innovation is critical for applications like autonomous vehicles and wearable devices.

Real-World Impact of Interactive Backpropagation

Figure: Proportion of industries adopting interactive backpropagation, with applications such as healthcare diagnostics and financial modeling.

Transforming Healthcare AI

Interactive backpropagation is improving trust and usability in healthcare applications:

- Highlighting critical areas in diagnostic imaging.

- Enabling real-time feedback for clinicians during model use.

For example, visual tools can explain why a model flags certain patterns in X-rays or CT scans, aiding diagnoses.

AI in Education

In education, interactive tools are being used to teach students about neural networks. They:

- Make abstract concepts more tangible.

- Help students visualize training dynamics, making learning engaging.

Platforms with intuitive dashboards allow learners to experiment with networks, fostering deeper understanding.

Boosting AI Adoption in Enterprises

Businesses using AI face skepticism about trustworthiness. By offering explainable insights, interactive backpropagation tools:

- Enhance transparency with stakeholders.

- Allow employees to understand and validate AI-driven decisions.

This has been especially impactful in finance, retail, and legal tech.

Comparing Popular Tools and Frameworks

Open-Source Versus Proprietary Solutions

Open-source tools like TensorBoard and Captum excel in flexibility. They allow:

- Seamless customization.

- Free access for small-scale projects.

However, proprietary tools often lead in:

- Scalability for enterprise use.

- Intuitive user interfaces for non-experts.

Which Tool Suits Your Needs?

Consider:

- TensorBoard: Ideal for TensorFlow users seeking detailed logs and graphs.

- Captum: Best for PyTorch models requiring interpretability.

- Explainable AI (Google): Suitable for enterprises needing end-to-end solutions with prebuilt integrations.

Matching tools to your framework and goals ensures maximum efficiency.

Conclusion

Interactive backpropagation has redefined how we understand neural networks. Its evolution into real-time, visual tools empowers users to explore the inner workings of AI models, enhancing transparency, trust, and performance.

With innovations like hybrid techniques, edge computing, and domain-specific applications, the future of this field promises unprecedented accessibility and precision. As these tools grow in sophistication, they will play a central role in demystifying AI across industries, bridging the gap between complex models and human understanding.

FAQs

Is interactive backpropagation suitable for beginners in AI?

Yes, interactive backpropagation tools are often designed with user-friendly interfaces, making them suitable for beginners. They simplify complex concepts by providing visual aids and actionable insights. Many frameworks, like TensorBoard or Netron, include intuitive dashboards, allowing learners to explore neural networks without diving deep into code.

Example: A beginner experimenting with a simple image classifier in TensorFlow can use TensorBoard to visualize training progress and spot underperforming layers.

What are the main limitations of interactive backpropagation?

Despite its advantages, interactive backpropagation has some limitations:

- Computational cost: Real-time visualization can be resource-intensive, especially for large datasets or deep architectures.

- Scalability: Visualizing every layer or neuron is impractical in highly complex models.

- Bias risks: Highlighted features might oversimplify decision processes, leading to misinterpretation.

Example: In a deep neural network with millions of parameters, focusing on a single layer might not provide the full picture, potentially missing nuanced interactions between layers.

How is interactive backpropagation different from traditional backpropagation?

Traditional backpropagation calculates gradients to adjust weights during training but offers no insights into how decisions are made. Interactive backpropagation adds a visualization layer, making these processes observable and interpretable. It’s not just about training but understanding the why behind predictions.

Example: While traditional backpropagation adjusts a model to better classify dogs vs. cats, interactive backpropagation shows whether the network is focusing on fur texture or other features to make its decision.

Can interactive backpropagation be applied to non-image data?

Absolutely! While often associated with images, interactive backpropagation works for text, tabular, and even audio data. Tools like Captum or Explainable AI can visualize important words in text classification tasks, key features in spreadsheets, or specific frequencies in audio processing.

Example: In sentiment analysis, interactive tools can highlight influential words like “excellent” or “disappointing,” making it clear why a review was classified as positive or negative.
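
A minimal sketch of word-level attribution uses occlusion: remove one word at a time and measure how much the score changes. The word weights below stand in for a trained sentiment model and are entirely made up; libraries such as Captum offer gradient-based alternatives for real networks.

```python
# Hypothetical word weights standing in for a trained sentiment model
WEIGHTS = {"excellent": 2.0, "great": 1.5, "disappointing": -2.5, "slow": -1.0}

def score(tokens):
    """Sentiment score: positive means a positive review."""
    return sum(WEIGHTS.get(token, 0.0) for token in tokens)

def word_attributions(tokens):
    """Occlusion: a word's importance is the score change when it is removed."""
    full_score = score(tokens)
    return [(token, full_score - score(tokens[:i] + tokens[i + 1:]))
            for i, token in enumerate(tokens)]

review = "the food was excellent but service was slow".split()
attributions = word_attributions(review)

print("score:", score(review))
for token, value in attributions:
    marker = "+" if value > 0 else "-" if value < 0 else " "
    print(f"{marker} {token:<10} {value:+.1f}")
```

An interactive tool would render these values as colored highlights over the text instead of a printed table.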

What’s the role of heatmaps in interactive backpropagation?

Heatmaps are a common visualization technique used to display relevance scores or activations. They show which regions or inputs contributed most to a model’s prediction, providing an intuitive way to interpret results.

Example: In a medical imaging model, a heatmap might highlight suspicious regions of a lung X-ray that the AI associates with potential abnormalities, assisting radiologists in making accurate diagnoses.

Are there any challenges in interpreting visual outputs?

Yes, interpreting visual outputs can be challenging due to:

- Ambiguity: Not all visualizations clearly explain why a model made a decision.

- Over-reliance on visualization: Heatmaps or activations may oversimplify complex interactions in deep networks.

Example: A Grad-CAM heatmap might highlight irrelevant regions in an image if the network has learned spurious correlations, and a plausible-looking heatmap can still mislead users into thinking the model focuses on meaningful features.

How does interactive backpropagation ensure ethical AI practices?

By enhancing transparency, interactive backpropagation supports ethical AI development. It helps:

- Detect biases in training data.

- Justify decisions to stakeholders.

- Improve fairness and accountability.

Example: In hiring models, interactive backpropagation can reveal if demographic factors, like gender or age, disproportionately influence predictions, helping developers adjust and mitigate biases.

What role does interactive backpropagation play in AI research?

Interactive backpropagation accelerates AI research by enabling:

- Faster debugging of experimental models.

- Clear insights into how novel architectures behave.

- Identification of optimization bottlenecks.

Example: A researcher developing a custom convolutional architecture for object detection can use tools like Captum to refine feature extraction in early layers, optimizing performance for specific tasks.

Can interactive backpropagation integrate with cloud platforms?

Yes, many interactive visualization tools now offer cloud integration. Platforms like Google’s Explainable AI and AWS SageMaker offer hosted explainability features, making it easier to process large datasets and train models remotely.

Example: A data scientist using Google Cloud AI can visualize training dynamics in real-time, even for large-scale NLP models, without worrying about local hardware limitations.

Is interactive backpropagation important for unsupervised learning?

While more commonly used in supervised tasks, interactive backpropagation can still aid in unsupervised learning. It can help analyze cluster formations, visualize latent space representations, and identify influential features during model training.

Example: In a clustering task for customer segmentation, tools can reveal which customer behaviors or demographics are driving certain clusters, providing actionable business insights.

How does interactive backpropagation handle multimodal data?

Interactive backpropagation can work with multimodal data by visualizing the contribution of each data type (e.g., text, image, and audio) within a unified framework. Tools adapted for multimodal networks allow researchers to see how each modality contributes to the final prediction.

Example: In a model combining audio and video for emotion recognition, interactive backpropagation can show how facial expressions (video) and tone of voice (audio) interact to determine emotional states like happiness or anger.

What makes Grad-CAM a popular choice for interactive backpropagation?

Grad-CAM (Gradient-weighted Class Activation Mapping) is widely used because of its simplicity and effectiveness. It uses the gradients of a class score with respect to a convolutional layer’s feature maps to build a heatmap over the input image, highlighting the areas most responsible for a decision, and it requires no architectural changes or retraining.

Example: In a dog-vs-cat classifier, Grad-CAM might highlight the tail or ears of an animal to show why it classified the image as a “dog,” offering intuitive visual explanations.
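
The core Grad-CAM computation is short enough to sketch by hand: average each channel's gradients to get a channel weight, take the weighted sum of the feature maps, and keep only the positive part. The 2x2 feature maps and gradients below are invented values; in practice they would come from a forward and backward pass through a real CNN, and the heatmap would be upsampled to the image size.

```python
def grad_cam(feature_maps, gradients):
    """Grad-CAM heatmap from one conv layer.

    feature_maps[k][i][j]: activation of channel k at position (i, j)
    gradients[k][i][j]:    d(class score) / d(feature_maps[k][i][j])
    Returns a heatmap scaled to [0, 1].
    """
    n_channels = len(feature_maps)
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weights: global average of each channel's gradients
    alphas = [sum(sum(row) for row in gradients[k]) / (height * width)
              for k in range(n_channels)]
    # Weighted sum of feature maps, then ReLU to keep positive evidence only
    cam = [[max(0.0, sum(alphas[k] * feature_maps[k][i][j] for k in range(n_channels)))
            for j in range(width)]
           for i in range(height)]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam

# Hypothetical activations and gradients for a layer with two 2x2 channels
feature_maps = [
    [[1.0, 0.0], [0.0, 2.0]],
    [[0.0, 3.0], [1.0, 0.0]],
]
gradients = [
    [[0.4, 0.4], [0.4, 0.4]],
    [[-0.2, -0.2], [-0.2, -0.2]],
]

heatmap = grad_cam(feature_maps, gradients)
for row in heatmap:
    print([round(value, 3) for value in row])
```

Positions dominated by the second channel drop to zero because that channel's gradients are negative, meaning it argues against the class.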

Can interactive backpropagation detect biases in datasets?

Yes, interactive backpropagation is a powerful tool for uncovering biases. By visualizing which features or inputs a model focuses on, developers can identify patterns that reflect dataset imbalances or discriminatory factors.

Example: In a facial recognition system, if interactive tools reveal that the model performs better on lighter skin tones, developers can address the imbalance by improving dataset diversity.

How is interactive backpropagation used in natural language processing (NLP)?

In NLP tasks, interactive backpropagation highlights specific words, phrases, or sentence structures that influence predictions. Tools like Captum and SHAP are often used to explain predictions for tasks such as sentiment analysis, machine translation, or question answering.

Example: In a sentiment analysis task, an interactive tool might emphasize words like “amazing” or “disappointed,” revealing the linguistic features driving the positive or negative classification.

What challenges arise when using interactive backpropagation in large-scale systems?

For large-scale systems, challenges include:

- Data volume: Managing high-dimensional data from massive inputs like images or text sequences.

- Computation time: Real-time visualization for large models (e.g., transformers) can be resource-intensive.

- Model complexity: It’s harder to interpret intertwined feature representations in deeply layered networks.

Example: Training a transformer for document summarization might generate extensive attention maps, making it difficult to determine which layers or tokens are most critical for the model’s outputs.

How does interactive backpropagation improve model debugging?

Interactive backpropagation enables faster and more targeted debugging by visualizing activations, gradients, and relevance scores. This helps identify:

- Layers or neurons contributing little to predictions.

- Features causing misclassifications.

- Bottlenecks in training efficiency.

Example: A developer noticing poor performance in an image classifier might use visualization to discover that the network focuses on irrelevant background features, like shadows, rather than the objects of interest.

Are there ethical concerns with interactive backpropagation?

While interactive backpropagation enhances transparency, there are ethical considerations:

- Oversimplification: Visualizations might not capture all nuances, leading to incorrect conclusions.

- Potential misuse: Insights from visualizations could be exploited, such as optimizing models to bypass security systems.

Example: A model designed to detect deepfake videos could inadvertently reveal vulnerabilities if interactive tools expose specific features it relies on, such as pixel inconsistencies.

Can interactive backpropagation help with adversarial attack defense?

Yes, interactive backpropagation can aid in detecting and defending against adversarial attacks by highlighting unusual patterns in feature relevance. These tools can show when models are overly sensitive to minor input changes, which is often exploited in adversarial attacks.

Example: In an adversarial image attack, a visualization might reveal that the model’s focus shifts drastically to irrelevant pixels after a slight noise injection, signaling a potential vulnerability.
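
Sensitivity to small input changes can be demonstrated with the fast gradient sign method (FGSM) on a logistic-regression model, where the input gradient has a closed form. The weights, input, and perturbation size are assumptions chosen so that the effect is visible; the same input gradient is what a saliency view would display.

```python
import math

def predict(weights, bias, x):
    """Probability of the positive class under logistic regression."""
    z = sum(w * x_i for w, x_i in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(weights, bias, x, y):
    """Gradient of the cross-entropy loss with respect to the input: (p - y) * w."""
    p = predict(weights, bias, x)
    return [(p - y) * w for w in weights]

def sign(value):
    return (value > 0) - (value < 0)

def fgsm(weights, bias, x, y, epsilon):
    """Nudge every input feature by epsilon in the direction that increases the loss."""
    grad = input_gradient(weights, bias, x, y)
    return [x_i + epsilon * sign(g) for x_i, g in zip(x, grad)]

# Hypothetical model and input
weights, bias = [4.0, -3.0, 2.0], 0.0
x, y = [0.2, 0.1, 0.1], 1

x_adv = fgsm(weights, bias, x, y, epsilon=0.1)
print("clean prediction:      ", round(predict(weights, bias, x), 3))
print("adversarial prediction:", round(predict(weights, bias, x_adv), 3))
print("input gradient (saliency):", [round(g, 3) for g in input_gradient(weights, bias, x, y)])
```

A change of at most 0.1 per feature is enough to push this toy model across its decision boundary, which is the kind of fragility a relevance or gradient view can surface.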

What are some domain-specific adaptations of interactive backpropagation?

Many industries tailor interactive backpropagation for their specific needs:

- Healthcare: Tools are designed to integrate with diagnostic workflows, such as highlighting abnormalities in medical scans.

- Autonomous vehicles: Focus on real-time decision-making, visualizing which environmental features (e.g., road signs, pedestrians) affect driving decisions.

- Marketing and e-commerce: Reveal customer preferences by showing which behaviors drive product recommendations.

Example: In healthcare, interactive backpropagation might help radiologists understand why a model flagged a specific region in an MRI as potentially cancerous, ensuring alignment with medical expertise.

How is interactive backpropagation evolving with generative AI models?

Generative models like GANs and diffusion models present unique challenges for interpretability, but interactive backpropagation is adapting by:

- Visualizing feature contributions to generated outputs.

- Highlighting which training samples influence specific generative elements.

- Showing how latent spaces are structured for better manipulation.

Example: In a GAN generating realistic faces, interactive backpropagation might reveal which parts of the latent vector control attributes like hair color or facial expression, aiding model refinement.

Resources

Online Tutorials and Courses

- Coursera, “Explainable AI (XAI) for Computer Vision” by IBM: Offers hands-on experience with visualization techniques like Grad-CAM and SHAP.

- Fast.ai Course on Deep Learning: Includes lessons on understanding and debugging neural networks using interpretability tools.

- Khan Academy, Backpropagation Intuition: A beginner-friendly tutorial for grasping the mathematical foundations of backpropagation.

Tools and Frameworks

- TensorBoard: A tool for visualizing model architecture, training progress, and activations in TensorFlow.

- Captum: PyTorch’s library for interpretability, supporting GradientSHAP, Integrated Gradients, and more.

- Netron: A lightweight, open-source model visualization tool for inspecting architectures.

- SHAP (SHapley Additive exPlanations): A framework for explaining predictions, useful for both tabular and deep learning models.

- Google’s Explainable AI Platform: A cloud-based solution for deploying and interpreting AI models at scale.

Communities and Forums

- Stack Overflow: A great place to ask questions about integrating and troubleshooting visualization tools.

- Reddit, r/MachineLearning: Discussions on the latest advancements in AI interpretability and visualization.

- Kaggle: Explore kernels demonstrating interactive backpropagation with real datasets and competitions.

Open Datasets for Experimentation

- ImageNet: The go-to dataset for training and visualizing neural networks in image classification tasks.

- MNIST: A beginner-friendly dataset for experimenting with digit classification and interactive backpropagation.

- IMDB Dataset: Popular for NLP tasks like sentiment analysis, ideal for visualizing text data relevance.

GitHub Repositories

- DeepDream Visualization: Google’s repository for generating dream-like visuals from neural networks.

- TorchRay: A library for visualizing and interpreting PyTorch models, including saliency maps and Grad-CAM.

- Lucid: A flexible library by Google for creating interpretability visualizations in TensorFlow.