Do Different Heads Focus on Unique Features?

Understanding how multi-head attention works is key to grasping the inner mechanics of transformer models like BERT or GPT. These attention heads seem like independent entities, but do they really focus on unique aspects of the data?

Let’s dive deep into the topic to uncover the role of these heads and whether they offer distinct perspectives.

What Is Multi-Head Attention?

Core Concept of Attention Mechanisms

The attention mechanism allows models to focus on specific parts of input data while processing it. This was a breakthrough in enabling models to understand context better, particularly in sequential data like text.

Why Multiple Heads?

Multi-head attention uses multiple parallel attention mechanisms (heads) within a single layer. Each head processes the input data differently due to unique parameterizations. These outputs are then combined, providing a richer representation of the input.
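To make the idea of parallel heads concrete, here is a minimal NumPy sketch of multi-head self-attention. It is an illustration under stated assumptions rather than any library's implementation: the weights are random instead of trained, there are no biases or masking, and names such as `multi_head_self_attention` and `split_heads` are made up for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model); every weight matrix: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)

    # Each head computes its own (seq_len, seq_len) attention map.
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)
    weights = softmax(scores, axis=-1)
    per_head = weights @ v  # (num_heads, seq_len, d_head)

    # Concatenate the heads and mix them with the output projection.
    concat = per_head.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights

# Random stand-ins for trained parameters (assumption for illustration).
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 5, 8, 2
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

output, weights = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(output.shape)   # (5, 8)
print(weights.shape)  # (2, 5, 5): one attention map per head
```

The per-head attention maps in `weights` are what the visualizations discussed later in this article plot.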

Key Advantages

- Captures different relationships within the data.

- May reduce the risk of overfitting by diversifying attention patterns.

- Improves generalization by learning complementary features.

How Do Attention Heads Differ?

Initialization and Learning Dynamics

Each head starts from its own randomly initialized projection weights, so the heads begin with different views of the input. During training, heads often come to specialize in particular aspects of the data, such as positional relationships, syntactic dependencies, or semantic roles.

Heatmaps and Visualization

Researchers often use attention heatmaps to analyze head behavior. These visualizations reveal:

- Some heads focus on local connections (e.g., nearby words in a sentence).

- Others capture long-range dependencies, connecting distant but contextually important words.
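One simple way to put a number on the local versus long-range distinction is the attention-weighted average distance between a token and the tokens it attends to. The sketch below uses two hand-built toy heads, not attention from a real model, and the function name `mean_attention_distance` is an assumption for this example.

```python
import numpy as np

def mean_attention_distance(attn):
    """attn: (num_heads, seq_len, seq_len) with rows summing to 1.

    Returns, per head, the attention-weighted average of |i - j|.
    """
    seq_len = attn.shape[-1]
    positions = np.arange(seq_len)
    distance = np.abs(positions[:, None] - positions[None, :])
    return (attn * distance).sum(axis=-1).mean(axis=-1)

seq_len = 6
# Toy "local" head: each token attends to the previous token
# (the first token attends to itself).
local_head = np.eye(seq_len)[np.maximum(np.arange(seq_len) - 1, 0)]
# Toy "global" head: attention spread uniformly over all tokens.
global_head = np.full((seq_len, seq_len), 1.0 / seq_len)

distances = mean_attention_distance(np.stack([local_head, global_head]))
print(distances)  # the local head has the smaller mean distance
```

Applied to real attention maps, a low value suggests a head that mostly looks at neighbors, and a high value suggests one that reaches across the sequence.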

Empirical Findings

Analyses of attention structure, such as “Analyzing the Structure of Attention in a Transformer Language Model” (Vig and Belinkov, 2019), suggest broad trends like these:

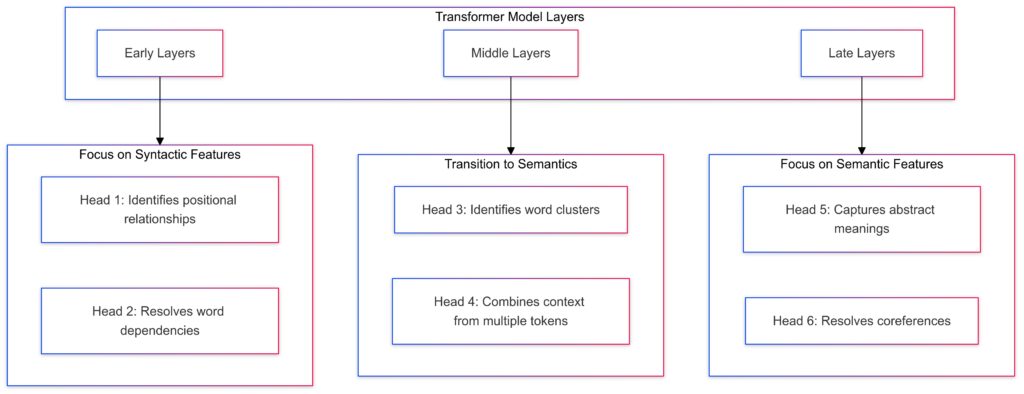

- Heads in early layers often attend to positional and syntactic features.

- Later layers’ heads focus on semantic meanings and abstract patterns.

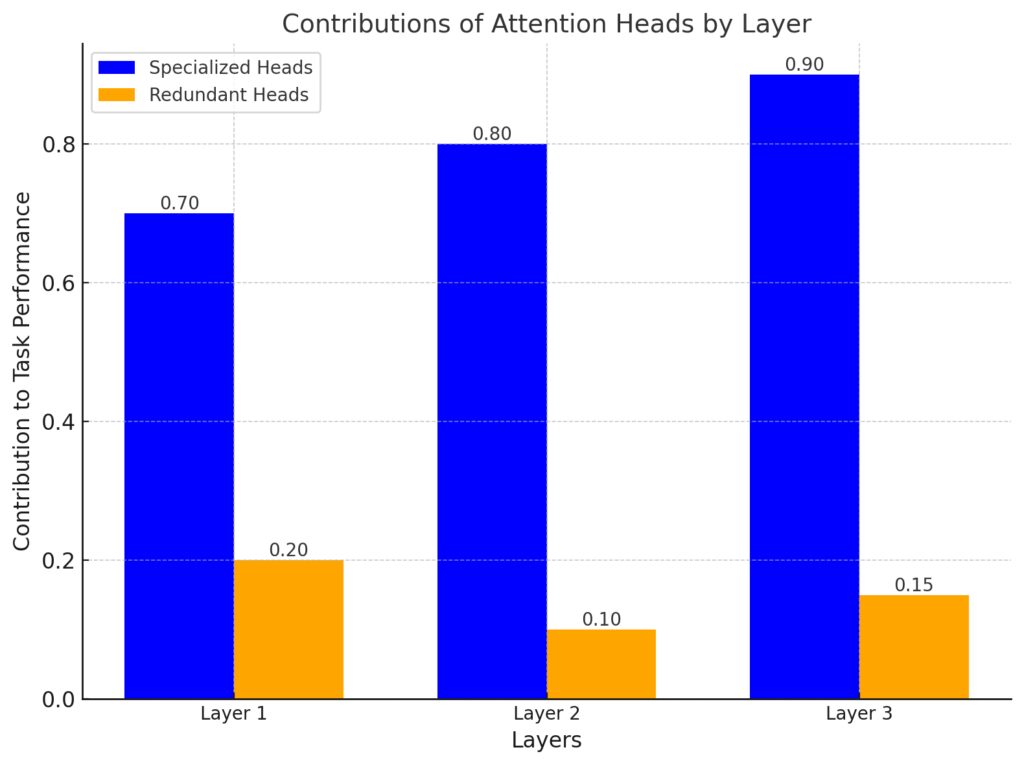

Do All Heads Contribute Equally?

Figure: Contribution of attention heads to task performance across transformer layers (x-axis: Layer 1 to Layer 3; y-axis: contribution to task performance). Specialized heads (blue) contribute strongly, and their contribution grows in later layers, while redundant heads (orange) have minimal impact at every layer.

Head Redundancy

Not all heads are equally useful. Research has shown that some heads may be redundant, contributing little to overall model performance. For instance, pruning experiments where a subset of heads is removed often result in minimal performance degradation.
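A head-ablation experiment can be sketched in a few lines: zero out one head's output before the output projection and see how much the layer output moves. The toy below uses random weights and measures the change in the layer output, whereas real pruning studies measure the change in a task metric on trained models. The function `attention_with_head_mask` is a hypothetical helper written for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_with_head_mask(x, w_q, w_k, w_v, w_o, head_mask):
    """Multi-head self-attention where head_mask[h] = 0 disables head h."""
    head_mask = np.asarray(head_mask, dtype=float)
    num_heads = head_mask.shape[0]
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split_heads(t):
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split_heads(x @ w_q), split_heads(x @ w_k), split_heads(x @ w_v)
    weights = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(d_head))
    per_head = (weights @ v) * head_mask[:, None, None]  # zero ablated heads
    concat = per_head.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o

# Random stand-ins for trained parameters (assumption for illustration).
rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 8, 4
x = rng.normal(size=(seq_len, d_model))
w_q, w_k, w_v, w_o = (rng.normal(size=(d_model, d_model)) for _ in range(4))

full = attention_with_head_mask(x, w_q, w_k, w_v, w_o, np.ones(num_heads))
relative_change = []
for h in range(num_heads):
    mask = np.ones(num_heads)
    mask[h] = 0.0
    ablated = attention_with_head_mask(x, w_q, w_k, w_v, w_o, mask)
    relative_change.append(np.linalg.norm(full - ablated) / np.linalg.norm(full))

print(relative_change)  # a small value would hint that the head is dispensable
```

In a trained model, heads whose removal barely changes the evaluation metric are the natural pruning candidates.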

Specialized vs. General Heads

- Some heads are highly specialized, focusing on tasks like identifying named entities or question-answer relations.

- Others distribute their focus more broadly, acting as general-purpose heads.
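The difference between a sharply focused head and a broadly distributed one can be summarized with the entropy of its attention rows: low entropy means the head concentrates on a few tokens, high entropy means it spreads attention widely. This is a sketch on two hand-built toy heads, and `attention_entropy` is a name chosen for this example.

```python
import numpy as np

def attention_entropy(attn, eps=1e-12):
    """Mean Shannon entropy (in nats) of each head's attention rows.

    attn: (num_heads, seq_len, seq_len) with rows summing to 1.
    """
    row_entropy = -(attn * np.log(attn + eps)).sum(axis=-1)
    return row_entropy.mean(axis=-1)

seq_len = 4
focused_head = np.eye(seq_len)                           # all weight on one token
broad_head = np.full((seq_len, seq_len), 1.0 / seq_len)  # uniform attention

entropies = attention_entropy(np.stack([focused_head, broad_head]))
print(entropies)  # close to 0 for the focused head, close to ln(4) for the broad one
```

Entropy says nothing about what a head attends to, only how concentrated it is, so it works best alongside a direct look at the attention maps.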

Layer-Specific Functions

The layer position also influences the role of the heads:

- Lower layers focus on foundational features like grammar.

- Middle layers handle interactions between entities.

- Higher layers emphasize task-specific representations.

Real-World Implications of Unique Head Behavior

Model Interpretability

Understanding which head focuses on what feature can help explain why a model makes specific predictions. For instance:

- In machine translation, specific heads often align words between languages.

- In sentiment analysis, some heads might attend to polarizing words like “love” or “hate.”

Optimizing Model Efficiency

By identifying redundant heads, researchers can design pruned models that achieve similar accuracy with fewer parameters. This is especially important in deploying transformers for edge devices or real-time applications.
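For a rough sense of scale, the arithmetic below counts the attention parameters tied to each head in a BERT-base-sized model (hidden size 768, 12 layers, 12 heads per layer). It is back-of-the-envelope accounting, not a measurement: it assumes a pruned head's query, key, and value projections and its slice of the output projection are physically removed, and it ignores the shared output bias.

```python
hidden_size = 768
num_layers = 12
heads_per_layer = 12
head_dim = hidden_size // heads_per_layer  # 64

# Per head: query, key and value projections (weights plus biases) ...
qkv_params = 3 * (hidden_size * head_dim + head_dim)
# ... and the head's slice of the output projection.
output_slice_params = head_dim * hidden_size
params_per_head = qkv_params + output_slice_params

def attention_params_removed(num_pruned_heads):
    """Parameters saved if the given number of heads is structurally removed."""
    return num_pruned_heads * params_per_head

total_heads = num_layers * heads_per_layer
print(params_per_head)                             # 196800
print(attention_params_removed(total_heads // 2))  # 14169600
```

Against BERT-base's roughly 110 million parameters, removing half the heads would save about 14 million under these assumptions. Masking a head without removing its weights saves no memory at all.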

Domain Adaptation

Knowing the focus of attention heads can aid in fine-tuning models for specific domains, like medical data or legal texts, by emphasizing certain heads over others.

Tools and Techniques for Analyzing Attention

Attention Rollout

Attention rollout estimates how attention propagates through the layers by multiplying the attention matrices of successive layers, giving a cumulative view of which input tokens each position ultimately draws on.
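A minimal sketch of attention rollout, following the commonly described formulation from Abnar and Zuidema (2020): average the heads in each layer, mix in the identity matrix to account for residual connections, and multiply the resulting matrices from the first layer upward. The attention maps here are random placeholders, not output from a real model.

```python
import numpy as np

def attention_rollout(attentions):
    """attentions: list of (num_heads, seq_len, seq_len) arrays, first layer first.

    Returns a (seq_len, seq_len) matrix whose row i estimates how much
    each input token contributes to position i after the last layer.
    """
    seq_len = attentions[0].shape[-1]
    identity = np.eye(seq_len)
    rollout = identity
    for layer_attn in attentions:
        avg = layer_attn.mean(axis=0)            # average over heads
        mixed = 0.5 * avg + 0.5 * identity       # account for the residual path
        mixed = mixed / mixed.sum(axis=-1, keepdims=True)
        rollout = mixed @ rollout                # compose with earlier layers
    return rollout

# Random attention maps standing in for a real model (assumption).
rng = np.random.default_rng(0)
num_layers, num_heads, seq_len = 3, 2, 4
logits = rng.normal(size=(num_layers, num_heads, seq_len, seq_len))
exp = np.exp(logits - logits.max(axis=-1, keepdims=True))
attentions = list(exp / exp.sum(axis=-1, keepdims=True))

rollout = attention_rollout(attentions)
print(rollout.round(3))
```

Averaging over heads is one design choice among several, and it deliberately discards head-level detail in exchange for a single cumulative map.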

Attention Maps

By visualizing where each head “looks,” we can infer patterns of redundancy or specialization.

Probing Tasks

Probing tasks involve testing whether attention heads encode specific features like part-of-speech tags or dependency relations.
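One lightweight probe for dependency relations checks how often a head's most-attended token is the word's syntactic head. The sketch below uses a five-token toy sentence with hand-assigned dependency heads and two hand-built attention maps. Everything here is illustrative, including the function name `head_dependency_accuracy`.

```python
import numpy as np

def head_dependency_accuracy(attn, gold_heads):
    """Fraction of tokens whose most-attended token is their syntactic head.

    attn: (num_heads, seq_len, seq_len); gold_heads[i] is the index of
    token i's dependency head, or -1 for the root (skipped).
    """
    gold = np.asarray(gold_heads)
    keep = gold >= 0
    predicted = attn.argmax(axis=-1)  # (num_heads, seq_len)
    return (predicted[:, keep] == gold[keep]).mean(axis=-1)

tokens = ["The", "cat", "chased", "the", "mouse"]
# Toy parse: The -> cat, cat -> chased, chased = root, the -> mouse, mouse -> chased
gold_heads = [1, 2, -1, 4, 2]

# Hand-built attention maps (one-hot rows), not from a trained model.
syntactic_head = np.eye(5)[[1, 2, 2, 4, 2]]       # attends to the dependency head
previous_token_head = np.eye(5)[[0, 0, 1, 2, 3]]  # attends to the previous token

accuracy = head_dependency_accuracy(
    np.stack([syntactic_head, previous_token_head]), gold_heads
)
print(accuracy)  # [1. 0.]
```

On real models the scores are rarely this clean. A head that beats a simple positional baseline on a relation is usually taken as weak evidence that it tracks that relation.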

Myths and Challenges of Interpreting Attention Heads

As fascinating as multi-head attention is, understanding it isn’t always straightforward. Misconceptions abound, and interpreting the roles of individual heads poses significant challenges. Let’s tackle some common myths and hurdles to uncover the truth.

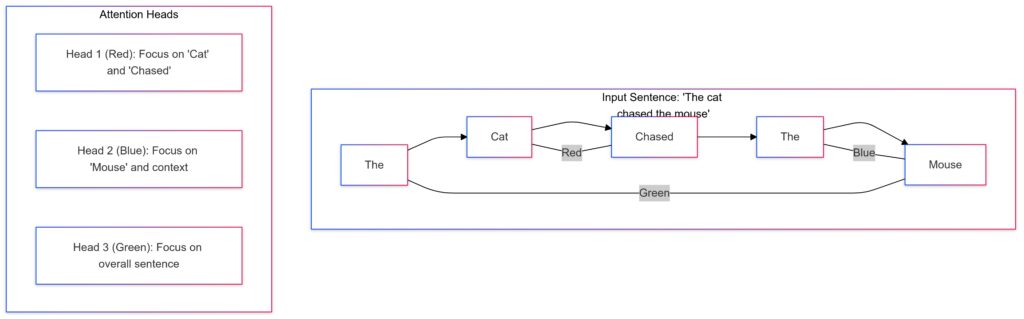

Myth 1: Attention Is Explanation

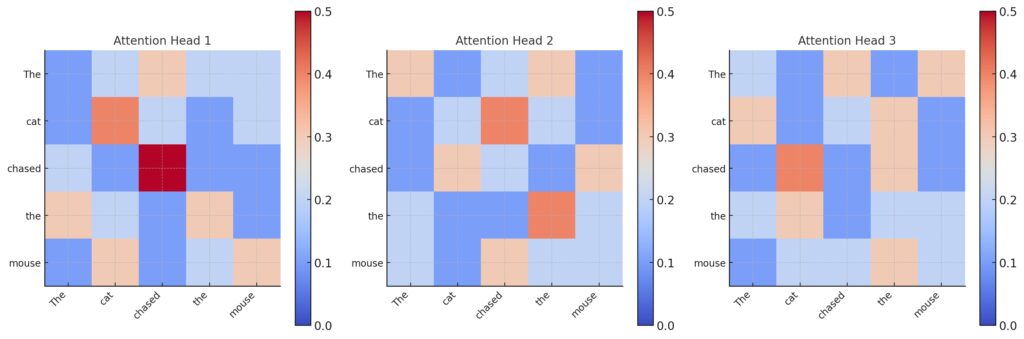

The Fallacy of Attention Visualizations

Figure: Attention heatmaps from three heads over the tokens "The", "cat", "chased", "the", "mouse". Each cell shows the attention weight between two tokens, and brighter colors indicate stronger focus. Head 1 focuses on syntactic relationships (e.g., “cat” and “chased”), Head 2 balances positional and contextual focus, and Head 3 highlights semantic relationships, such as abstract meanings.

It’s tempting to believe that attention maps offer a complete explanation of model behavior. However, attention only reflects where the model “looks,” not why it makes decisions.

- A high attention score doesn’t always correlate with feature importance.

- The model may rely on hidden states or other transformations instead.
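A tiny numeric example shows why a high attention score need not mean high importance: what reaches the output is the attention weight multiplied by the value vector, so a heavily attended token with a near-zero value vector contributes almost nothing. All numbers below are hypothetical and chosen to make the point visible.

```python
import numpy as np

def weighted_value_norms(attn_weights, values):
    """Norm of each token's contribution (weight * value vector) to the output."""
    return np.linalg.norm(attn_weights[:, None] * values, axis=-1)

tokens = ["[SEP]", "not", "good"]
# Hypothetical attention of one query position over three tokens.
attn_weights = np.array([0.80, 0.05, 0.15])
# Hypothetical value vectors; the first is close to zero.
values = np.array([[0.01, -0.02],
                   [2.00, -1.50],
                   [1.00,  0.50]])

norms = weighted_value_norms(attn_weights, values)
most_attended = tokens[int(attn_weights.argmax())]
largest_contribution = tokens[int(norms.argmax())]

print(most_attended)         # [SEP]
print(largest_contribution)  # good
```

The attention map alone would single out the first token, while the contribution norms point elsewhere. Even this check ignores everything that happens in later layers.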

Supporting Evidence

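“Attention is not Explanation” (Jain and Wallace, 2019) found that attention weights often correlate only weakly with gradient-based measures of feature importance, and that quite different attention distributions can lead to the same prediction. For that reason, attribution methods such as gradient-based attributions or SHAP values are often used alongside attention maps rather than being replaced by them.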

Myth 2: All Heads Serve Unique Functions

Overestimating Head Specialization

While it’s true that heads focus on different features, there’s considerable overlap in what they learn. This redundancy is not designed in explicitly; it emerges during training, and it can make models more robust to noise and parameter changes.

Pruning Experiments

Pruning studies report that a substantial fraction of heads, in some settings around half, can be removed with little effect on accuracy. This challenges the assumption that every head contributes a distinct, indispensable feature.

Challenge 1: Quantifying Redundancy

Measuring Head Utility

Quantifying how much each head contributes to model performance is tricky. Tools like mutual information or importance scores attempt this but require extensive computational resources.
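One inexpensive proxy for redundancy is to compare the attention distributions of two heads directly, for example with the Jensen-Shannon divergence: a value near zero means the two heads attend almost identically. This is a sketch on hand-built toy heads and a stand-in for, not a reproduction of, the mutual-information or importance-score methods mentioned above.

```python
import numpy as np

def js_divergence(p, q, eps=1e-12):
    """Row-wise Jensen-Shannon divergence (in nats) between two attention maps."""
    m = 0.5 * (p + q)
    kl_pm = (p * np.log((p + eps) / (m + eps))).sum(axis=-1)
    kl_qm = (q * np.log((q + eps) / (m + eps))).sum(axis=-1)
    return 0.5 * (kl_pm + kl_qm)

def head_distance_matrix(attn):
    """attn: (num_heads, seq_len, seq_len) -> (num_heads, num_heads) mean JS divergence."""
    num_heads = attn.shape[0]
    dist = np.zeros((num_heads, num_heads))
    for a in range(num_heads):
        for b in range(num_heads):
            dist[a, b] = js_divergence(attn[a], attn[b]).mean()
    return dist

seq_len = 4
uniform = np.full((seq_len, seq_len), 1.0 / seq_len)
# Toy heads: A and B both attend mostly to the token itself, C to a shifted position.
head_a = 0.90 * np.eye(seq_len) + 0.10 * uniform
head_b = 0.85 * np.eye(seq_len) + 0.15 * uniform
head_c = 0.90 * np.roll(np.eye(seq_len), 1, axis=1) + 0.10 * uniform

dist = head_distance_matrix(np.stack([head_a, head_b, head_c]))
print(dist.round(4))  # A and B are close to each other; C is far from both
```

Two heads with similar attention maps can still write different information through their value projections, so a low divergence is a hint of redundancy, not proof.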

Head Merging

Some approaches aggregate attention scores across heads, reducing redundancy but potentially losing nuanced patterns.

Challenge 2: Visualizing Attention in Depth

Complex Interactions

Attention mechanisms are layered, and visualizing individual heads doesn’t always capture cross-layer dependencies. Each head’s output feeds into the next layer, so later heads operate on representations already shaped by earlier ones, creating intricate relationships.

Temporal Dynamics

In tasks like machine translation, attention patterns shift significantly depending on input length and contextual changes. Tracking these changes adds another layer of complexity.

Challenge 3: Disentangling Attention from Output

Are Heads Just “Middlemen”?

Attention heads don’t directly produce outputs—they provide weighted values for subsequent computations. Interpreting heads in isolation risks ignoring the transformations applied later.

Head Ablation Studies

Ablation studies, where heads are disabled during inference, help identify their impact but don’t reveal how heads interact collectively to achieve outputs.

Complexity of Interpreting Attention Heads

Understanding the contribution of a single head requires analyzing the entire network.

Figure: Network diagram of attention heads. Circle size indicates importance: Head 1 and Head 5 (red) are high, Head 2 and Head 4 (blue) are moderate, and Head 3 (gray) is low. Lines mark dependencies or interactions between heads, and the dense web of connections illustrates why isolating the behavior of an individual head is difficult.

Misconceptions About Layer-Wise Roles

Early Layers and Syntax

It’s often said that early layers capture syntax, but recent findings show syntactic patterns are distributed across multiple layers. This challenges assumptions about layer specialization.

Later Layers and Semantics

Higher layers aren’t purely semantic; they can also revisit and refine lower-level syntactic information. The interaction between layers is more iterative than hierarchical.

Bridging the Gap: Making Attention More Interpretable

Modular Interpretability Tools

Tools like BERTViz or Transformers Interpret allow researchers to inspect individual attention heads more effectively.

Unified Interpretability Frameworks

Efforts are underway to integrate attention heads, gradients, and outputs into a single framework for holistic analysis.

Future Directions

As transformers continue evolving, the focus is shifting to dynamic architectures where heads adapt their focus based on input needs. This could reduce redundancy while preserving specialization.

Future Trends and Applications of Multi-Head Attention

Multi-head attention is not just a theoretical construct; it has real-world implications and exciting research directions. In this section, we’ll discuss cutting-edge advancements, practical use cases, and future trends shaping the evolution of attention mechanisms.

Dynamic Multi-Head Attention: The Next Frontier

Adapting Attention On-the-Fly

Traditional multi-head attention uses a fixed number of heads. Recent innovations, such as dynamic head selection, allow models to activate specific heads based on input requirements.

- This reduces computational costs while maintaining high performance.

- Models dynamically scale the number of heads for simpler or more complex tasks.
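As a purely hypothetical illustration of dynamic head selection, the sketch below scores every head from a pooled summary of the input and keeps only the top `k`. It does not reproduce any specific published method: the gate matrix `gate_weights`, the mean pooling, and the hard top-k rule are all assumptions made for this example.

```python
import numpy as np

def select_heads(x, gate_weights, k):
    """Pick the k heads with the highest input-dependent gate score.

    x: (seq_len, d_model); gate_weights: (d_model, num_heads).
    Returns a 0/1 mask over heads and the raw scores.
    """
    pooled = x.mean(axis=0)          # summarize the input sequence
    scores = pooled @ gate_weights   # one score per head
    keep = np.argsort(scores)[-k:]   # indices of the k largest scores
    mask = np.zeros(scores.shape[0])
    mask[keep] = 1.0
    return mask, scores

rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 6, 8, 4
x = rng.normal(size=(seq_len, d_model))
gate_weights = rng.normal(size=(d_model, num_heads))  # hypothetical learned gate

mask, scores = select_heads(x, gate_weights, k=2)
print(mask)    # exactly two heads are switched on for this input
print(scores)
```

The computational saving only materializes if the masked heads are actually skipped. Multiplying their outputs by zero after computing them costs as much as running every head.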

Context-Driven Head Specialization

Future designs might enable heads to adapt their focus based on input properties, such as text length or content complexity, enhancing efficiency.

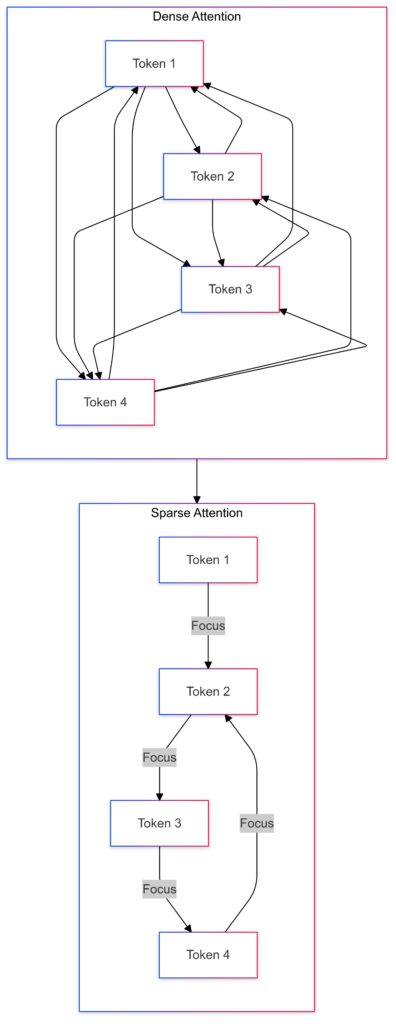

Sparse Attention Mechanisms

Figure: Dense attention vs. sparse attention. In dense attention, every token attends to every other token (arrows connect all tokens), which gives comprehensive connections but is computationally expensive. In sparse attention, each token attends only to specific local or critical tokens (selective arrows), which saves resources by limiting focus to essential relationships.

The Challenge of Scalability

While multi-head attention is powerful, its quadratic complexity makes it costly for long sequences. Sparse attention introduces selective focus, where only relevant portions of input are attended to.

- Models like Longformer and Big Bird implement sparse attention, making transformers feasible for much longer input sequences.

- This approach reduces redundancy, forcing heads to specialize in key features.
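The local-window part of sparse attention can be sketched as a mask that lets each token attend only to neighbors within a fixed distance. This is a simplified illustration in the spirit of sliding-window attention, not Longformer's or Big Bird's actual implementation. Here `window` means the number of neighbors on each side, and the toy still computes the full score matrix before masking it.

```python
import numpy as np

def sliding_window_mask(seq_len, window):
    """True where token i may attend to token j, i.e. |i - j| <= window."""
    idx = np.arange(seq_len)
    return np.abs(idx[:, None] - idx[None, :]) <= window

def masked_softmax(scores, mask):
    """Softmax over the last axis that assigns zero weight to masked-out pairs."""
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=-1, keepdims=True)
    e = np.exp(scores)
    return e / e.sum(axis=-1, keepdims=True)

seq_len, window = 8, 1
mask = sliding_window_mask(seq_len, window)

rng = np.random.default_rng(0)
scores = rng.normal(size=(seq_len, seq_len))  # stand-in for q @ k.T / sqrt(d)
weights = masked_softmax(scores, mask)

print(int(mask.sum()), "of", seq_len * seq_len, "pairs are attended")  # 22 of 64
```

Efficient implementations avoid computing the masked entries in the first place, which is where the memory and speed benefits come from.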

Balancing Sparsity and Representation

Ensuring heads maintain diverse perspectives while optimizing resources is a major challenge in sparse attention research.

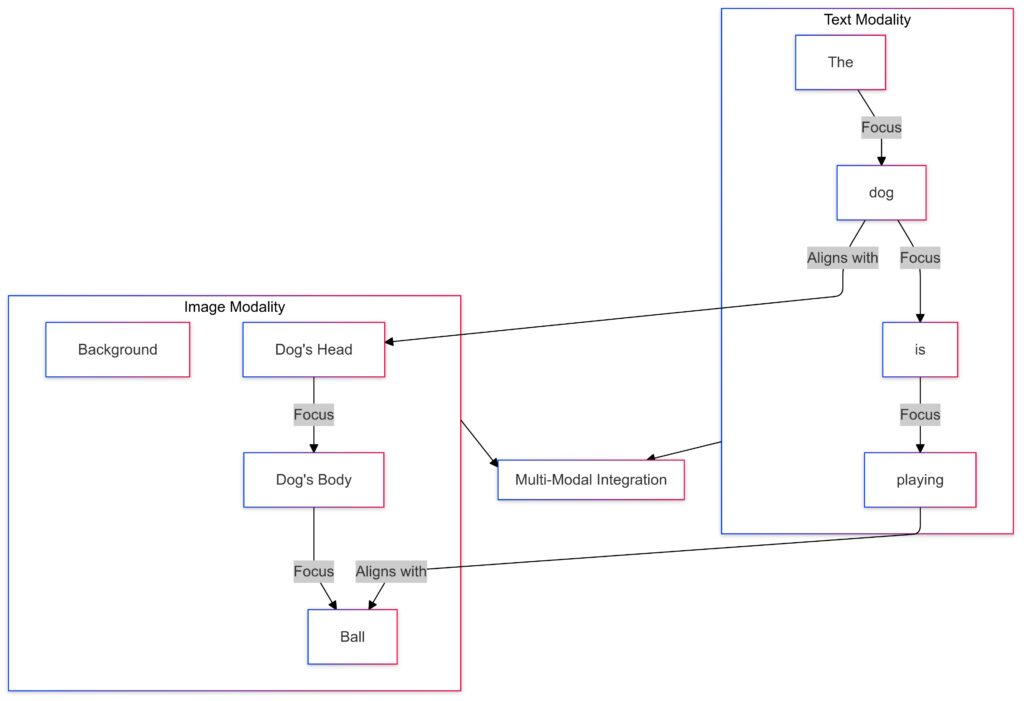

Multi-Modal Attention: Expanding Beyond Text

Unified Attention Across Modalities

Multi-head attention is now being applied to combine different data types—like text, images, and audio—in multi-modal models such as CLIP or DALL·E.

- Separate heads can specialize in different modalities, learning unique representations.

- Cross-modal attention integrates these perspectives, enabling tasks like image captioning or video understanding.

Applications in Industry

- Healthcare: Analyzing patient records and medical images simultaneously.

- Retail: Integrating product descriptions with visual imagery for personalized recommendations.

Enhanced Interpretability for Trustworthy AI

Explaining Decisions in Real-Time

For models deployed in critical domains, interpreting attention patterns is crucial. Future research focuses on integrating real-time explanation mechanisms into transformer-based systems.

Attention as a Debugging Tool

Attention maps can highlight data biases or model failures, helping engineers refine training processes. For instance:

- Detecting gender or racial bias in language models.

- Identifying misaligned focus in translation models.

Practical Applications of Multi-Head Attention

Figure: Text and image attention for the sentence "The dog is playing". In the text modality, attention heads focus on relationships between words in the sentence (e.g., "dog" aligns with "playing"). In the image modality, attention heads highlight different regions of the image, such as the dog’s head, body, and the ball. Multi-modal integration combines the two to align features: "dog" aligns with the dog’s head region, and "playing" aligns with the ball region.

Natural Language Processing (NLP)

Multi-head attention continues to revolutionize translation, summarization, and question answering systems.

- Advanced chatbots use specialized heads for understanding intent, context, and emotion.

- Multi-turn dialogue systems benefit from heads tracking conversational history.

Computer Vision

Vision transformers (ViTs) leverage attention heads to identify spatial relationships and object features in images.

- Heads in early layers focus on edges and textures, while later layers capture high-level patterns.

Reinforcement Learning

In tasks like game playing or robotics, multi-head attention is applied to model environmental interactions, enabling better decision-making under uncertainty.

The Road Ahead: Challenges and Opportunities

Balancing Efficiency and Effectiveness

Scaling transformers while preserving their accuracy is a major goal. Innovations like low-rank approximations and efficient token representations are promising.

Towards Universal Transformers

Efforts are underway to design universal attention mechanisms that generalize across domains without retraining, reducing costs for adapting models to new tasks.

Ethical Considerations

As attention becomes more interpretable, ethical questions arise about user privacy, data biases, and the potential misuse of explainable AI. Ensuring ethical deployment will be as critical as technical progress.

Final Summary: Interpreting Multi-Head Attention

Multi-head attention is a cornerstone of modern AI models, enabling them to process data with remarkable depth and versatility. Across this exploration, we’ve uncovered how attention heads contribute to these models’ power, analyzed their unique and overlapping roles, and discussed challenges and future trends.

Key Takeaways

- How Multi-Head Attention Works

Multi-head attention diversifies focus by processing input through multiple parallel heads, each able to capture different relationships in the data. The resulting mix of diversity and redundancy improves robustness and performance.

- Unique and Overlapping Functions

- Heads in early layers tend to capture syntactic features, while heads in later layers lean toward semantic meaning, though the split is not strict.

- Some heads are specialized, while others provide general-purpose coverage.

- Redundancy ensures reliability but can also lead to inefficiencies.

- Challenges in Interpretation

- Attention is not always an explanation of model decisions.

- Disentangling the contributions of individual heads and their interactions with the entire model remains complex.

- Visualization tools and ablation studies help but are not exhaustive.

- Emerging Trends

- Dynamic attention adapts head usage based on input complexity.

- Sparse attention improves scalability for long-sequence tasks.

- Multi-modal attention integrates text, images, and other data types, pushing transformers into new domains.

- Real-World Applications

- In NLP, multi-head attention enhances translation, summarization, and conversational AI.

- Vision Transformers revolutionize image recognition and spatial understanding.

- Reinforcement learning benefits from attention-driven modeling of environmental dynamics.

Looking Forward

The evolution of multi-head attention is reshaping AI in profound ways. From making models more interpretable to enabling dynamic and multi-modal capabilities, the field is set to tackle challenges like efficiency, scalability, and ethical deployment.

Understanding attention mechanisms not only deepens our grasp of AI’s inner workings but also empowers researchers and practitioners to push the boundaries of what’s possible.

With this exploration, you now have a comprehensive understanding of multi-head attention’s role, its intricacies, and its potential for the future.

FAQs

Can attention heads be optimized for specific tasks?

Yes, fine-tuning allows attention heads to specialize for particular domains or tasks.

For instance:

- In sentiment analysis, certain heads might be trained to focus on words with strong emotional valence, like “amazing” or “terrible.”

- In legal document summarization, specific heads could learn to focus on critical clauses or dates.

By tailoring heads to the nuances of a task, models achieve better precision and relevance.

Are there tools to visualize attention heads?

Several tools help researchers and practitioners analyze and interpret multi-head attention:

- BERTViz: Offers interactive visualizations of attention maps for transformer-based models.

- Transformers Interpret: Provides insights into individual heads and their contributions to predictions.

For example, in question answering, these tools can highlight which heads focus on the question and which heads track relevant parts of the context.

How does multi-head attention work with long sequences?

For long sequences, attention heads can struggle due to quadratic complexity, as each token attends to all others. Sparse attention mechanisms and long-sequence models like Longformer address this by limiting each head’s focus to local windows or critical tokens.

Example: In a long document about climate change, heads might focus on:

- The introduction for key terms like “global warming.”

- Specific sections that mention mitigation strategies or policy changes.

This approach ensures efficiency without sacrificing important context.
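The quadratic cost is easy to see with plain arithmetic. The sketch below counts how many attention scores a single head computes for full attention versus a local window. The window radius of 256 tokens on each side is an arbitrary value chosen for illustration, not a setting taken from any particular model.

```python
def dense_score_count(seq_len):
    """Every token attends to every token: seq_len squared scores per head."""
    return seq_len * seq_len

def windowed_score_count(seq_len, window):
    """Number of (i, j) pairs with |i - j| <= window."""
    return sum(
        min(seq_len - 1, i + window) - max(0, i - window) + 1
        for i in range(seq_len)
    )

for n in (512, 4096, 16384):
    dense = dense_score_count(n)
    local = windowed_score_count(n, window=256)
    print(f"{n:>6} tokens: dense={dense:>11,} windowed={local:>10,}")
```

The dense count grows with the square of the sequence length, while the windowed count grows roughly linearly once the sequence is much longer than the window.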

What happens when heads from different layers interact?

Attention heads in one layer often pass information to heads in the next layer. This iterative process builds increasingly abstract representations.

For instance:

- In image captioning, early-layer heads in a Vision Transformer might identify shapes or edges, passing this information to mid-layer heads that detect objects.

- Higher-layer heads combine this data with contextual text information to generate a coherent description, such as “A brown dog playing in the park.”

Are there cases where attention heads fail?

Yes, attention heads can fail when the model encounters:

- Ambiguous data: Heads might scatter focus across irrelevant tokens, diluting meaningful relationships.

- Data biases: If training data is biased, heads might amplify this, such as overemphasizing gendered words in certain contexts.

For example, in a sentence like “The doctor treated his patients,” biased training might lead heads to incorrectly associate “doctor” exclusively with male pronouns.

How does pruning attention heads impact performance?

Pruning removes heads that contribute little to model accuracy, making transformers more efficient. Studies show models can perform nearly as well with significantly fewer heads.

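Example: In BERT, pruning heads in layers where redundancy is high (often cited as the middle layers) tends to have minimal impact, since the remaining heads cover much of the same information and can adapt further if the model is fine-tuned after pruning.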

What are the ethical implications of attention mechanisms?

Interpretability in attention mechanisms raises ethical concerns, such as:

- Bias detection: Attention visualizations can expose biases in model predictions, which is vital for creating fair AI systems.

- Transparency in decisions: In high-stakes domains like healthcare, examining attention patterns can help practitioners check whether a prediction rests on plausible evidence, although attention alone is not a complete explanation.

For example, in predicting loan approvals, attention heads focusing disproportionately on irrelevant features (like ZIP codes) may indicate potential bias.

What’s the difference between self-attention and multi-head attention?

Self-attention computes a relevance score between every pair of tokens in a sequence and uses those scores to mix the token representations. Multi-head attention extends this by projecting the input into several lower-dimensional subspaces with learned weights, applying attention separately in each head, and then combining the results.

Think of it like analyzing a painting:

- Self-attention is one person examining the entire painting.

- Multi-head attention is a group of people focusing on different aspects—some study the brushstrokes, others the color palette, and others the overall theme.

How does multi-head attention handle context in sequential data?

Multi-head attention excels at understanding context by comparing every token in a sequence with every other token, considering their relationships holistically. Each head contributes by focusing on different aspects of context:

- One head might track the subject-object relationship.

- Another could focus on time indicators or negations.

Example: In the sentence “She didn’t like the movie because it was too slow,”

- One head might attend to “movie” and “slow” for semantic understanding.

- Another head might capture “didn’t” to understand the sentiment.

How does multi-head attention perform in multilingual models?

In multilingual models like mBERT or XLM-R, attention heads must bridge cross-lingual understanding. Some heads specialize in aligning similar structures across languages, while others adapt to language-specific nuances.

Example: In translating “Je t’aime” (French) to “I love you” (English):

- One head may focus on word alignment between “Je” and “I.”

- Another might capture semantic equivalence between “t’aime” and “love you.”

Heads that specialize in shared linguistic patterns help models generalize across diverse languages.

How do attention heads contribute to pretraining and fine-tuning?

During pretraining, attention heads learn general patterns like syntax and semantic relationships. During fine-tuning, these heads adapt to task-specific needs, like classification or summarization.

Example: In pretraining on generic text, heads may focus on identifying part-of-speech relationships. When fine-tuning for sentiment analysis, some heads adjust to track sentiment-heavy words like “great” or “awful.”

How does multi-head attention compare to convolutional layers in vision?

Both multi-head attention and convolutional layers extract features from input, but their approaches differ:

- Convolutional layers use fixed-size kernels to capture local patterns (e.g., edges in an image).

- Attention heads dynamically learn which parts of the input are important, regardless of position.

Example: In an image of a dog running on grass:

- Convolutional layers might detect the dog’s edges and textures.

- Attention heads could focus on contextual relationships, like the dog’s movement and its environment.

This makes attention mechanisms more flexible for tasks like object recognition or scene understanding.

Why is multi-head attention considered robust?

Multi-head attention is robust because of its diversity and redundancy. Even if some heads fail to learn effectively, others compensate by focusing on different or overlapping features.

Example: In a document summarization task, one head might fail to detect a key sentence due to noise. Another head focusing on broader context might still capture the sentence’s relevance. This built-in fault tolerance enhances reliability.

What are some advanced variations of multi-head attention?

Researchers have proposed several enhancements to improve multi-head attention:

- Cross-attention: Allows one sequence (e.g., a query) to focus on another (e.g., a document). Used in encoder-decoder models like T5.

- Relative Positional Encoding: Helps heads understand relationships between tokens, regardless of their absolute positions. Critical for long-sequence tasks.

- Dynamic Weight Sharing: Reduces computational costs by sharing parameters across heads without sacrificing performance.

Example: Cross-attention in machine translation helps the decoder focus on relevant parts of the source sentence when generating each target word.
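The difference from self-attention is only where the queries, keys, and values come from. In the minimal single-head sketch below, queries are computed from the decoder (target) states, while keys and values are computed from the encoder (source) states. The weights are random placeholders and the function name `cross_attention` is an assumption for this example.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(decoder_states, encoder_states, w_q, w_k, w_v):
    """Single-head cross-attention.

    decoder_states: (target_len, d_model); encoder_states: (source_len, d_model).
    """
    q = decoder_states @ w_q  # queries come from the target side
    k = encoder_states @ w_k  # keys and values come from the source side
    v = encoder_states @ w_v
    weights = softmax(q @ k.T / np.sqrt(q.shape[-1]))
    return weights @ v, weights

# Random stand-ins for trained parameters and hidden states (assumption).
rng = np.random.default_rng(0)
target_len, source_len, d_model = 3, 5, 8
decoder_states = rng.normal(size=(target_len, d_model))
encoder_states = rng.normal(size=(source_len, d_model))
w_q, w_k, w_v = (rng.normal(size=(d_model, d_model)) for _ in range(3))

context, weights = cross_attention(decoder_states, encoder_states, w_q, w_k, w_v)
print(context.shape)  # (3, 8): one context vector per target position
print(weights.shape)  # (3, 5): each target position's weights over source tokens
```

Each row of `weights` is the kind of source-to-target alignment that translation attention maps visualize.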

What challenges remain in interpreting attention heads?

Despite advancements, understanding attention heads fully is still a work in progress. Challenges include:

- Aggregating attention maps: Individual maps can be noisy or unclear, making it hard to extract meaningful insights.

- Head interaction: Heads don’t operate in isolation; understanding how they collectively produce outputs is complex.

- Bias and fairness: Attention mechanisms can reflect biases in training data, leading to unfair predictions.

Example: In a gender classification task, a head might overemphasize irrelevant tokens (e.g., “nurse” or “engineer”) if the training data contains stereotypes.

How does multi-head attention compare to RNNs?

While recurrent neural networks (RNNs) process input sequentially, multi-head attention processes all input in parallel. This provides several advantages:

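- Speed: Multi-head attention processes all positions of a sequence in parallel, whereas RNNs must process tokens one step at a time. The trade-off is that attention cost grows quadratically with sequence length.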

- Contextual understanding: Attention captures global context better, while RNNs struggle with long-range dependencies.

Example: In story summarization, RNNs may lose track of events from the beginning of the story. Attention heads, however, can focus on key details from both the start and end simultaneously.

Resources

Foundational Papers and Research

- “Attention Is All You Need” (Vaswani et al., 2017):

This seminal paper introduced the transformer architecture and the concept of multi-head attention. Available on arXiv.

- “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding” (Devlin et al., 2018):

Explains how transformers and multi-head attention are leveraged for NLP tasks. Available on arXiv.

- “Longformer: The Long-Document Transformer” (Beltagy et al., 2020):

Introduces sparse attention for handling long sequences efficiently. Available on arXiv.

- “Visualizing and Understanding Self-Attention in Transformers” (Vig, 2019):

Explores techniques for interpreting attention heads using visual tools. Available on arXiv.

Tools for Visualization and Analysis

- BERTViz:

A Python library for visualizing attention in transformer models, allowing exploration of head-specific focus. Available on GitHub.

- Transformers Interpret:

Provides interpretability tools for models built using the Hugging Face Transformers library. Available on GitHub.

- TensorFlow Playground for Attention:

An interactive tool to experiment with attention mechanisms and visualize their impact.

Advanced Topics and Blogs

- “Explaining Multi-Head Attention in Transformers” by Analytics Vidhya:

A comprehensive blog post with examples and visualizations.

- “Understanding Sparse Attention in NLP” by Hugging Face:

Explains the mechanics of sparse attention and its benefits.

- “Attention Rollout: Cumulative Attention Maps for Transformers” by Clarity AI:

A blog discussing how attention rollouts offer a holistic view of multi-head attention.

Open-Source Libraries and Implementations

- Hugging Face Transformers:

A widely used library for implementing and fine-tuning transformers with multi-head attention. Available on GitHub.

- PyTorch Examples for Attention:

Examples of attention-based models implemented in PyTorch. Available on GitHub.

- Transformers from Scratch:

A hands-on guide to building transformers, including multi-head attention, from the ground up.