Monitoring real-time data is essential for identifying unusual patterns, spotting errors, and mitigating risks, especially in fields like finance, cybersecurity, and IoT systems. Kullback-Leibler (KL) divergence is a powerful statistical tool for identifying anomalies in these high-stakes environments.

This article explores how KL divergence can be applied in real-time anomaly detection, the advantages it brings, and practical examples to deepen your understanding.

Understanding KL Divergence in the Context of Anomaly Detection

What is KL Divergence?

KL divergence is a measure of how one probability distribution differs from another, often referred to as the reference and target distributions. It is not a true distance metric, because it is asymmetric and does not satisfy the triangle inequality. Mathematically, it quantifies how much information is “lost” when one distribution is used to approximate another.

In practical terms, KL divergence provides a way to detect when current data deviates from expected patterns. Because it is sensitive to changes in distribution, it is well suited to spotting anomalies in real-time data streams, where deviations can indicate anything from operational errors to cyber threats.

Why KL Divergence is Ideal for Real-Time Applications

KL divergence is particularly useful in real-time applications for several reasons:

- Immediate Sensitivity: KL divergence quickly captures changes in data distribution, allowing for prompt anomaly detection.

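- Non-Symmetrical Advantage: KL divergence is asymmetric, so the divergence of observed data from the baseline generally differs from the divergence of the baseline from the observed data. Measuring from the observed data toward the baseline heavily penalizes activity that shows up where the baseline expects almost none, which is often exactly what an anomaly looks like.

- Scalability: For discrete or binned distributions, computing KL divergence takes a single pass over the bins, which keeps it feasible for large, continuous data streams like those found in finance or IoT.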

These characteristics make KL divergence a go-to choice for anomaly detection in mission-critical applications, where immediate action is essential.

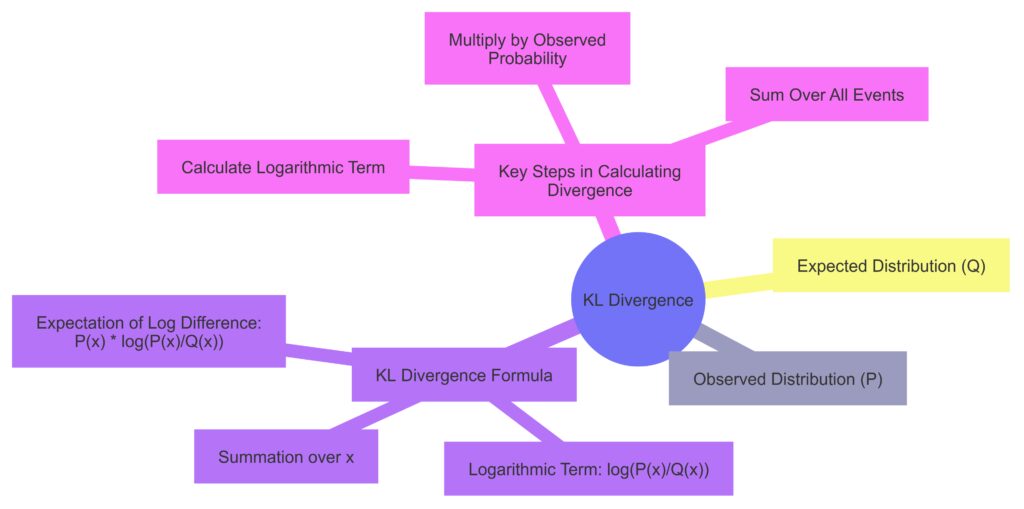

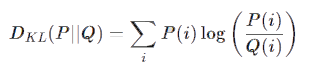

Mathematical Formula of KL Divergence

The formula for KL divergence, in its discrete form, is as follows:

$$D_{KL}(P \parallel Q) = \sum_{x} P(x) \log \frac{P(x)}{Q(x)}$$

Here, P represents the distribution of observed data, and Q represents the baseline or expected distribution. The resulting value tells us the degree of deviation between these distributions. A higher value suggests a greater divergence from the expected pattern, indicating a potential anomaly.
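To make the calculation concrete, here is a minimal sketch of the discrete form of KL divergence using only the Python standard library. The function name `kl_divergence` and the example probabilities are illustrative assumptions, not values from a real system. The sketch also shows that swapping P and Q gives a different result.

```python
import math

def kl_divergence(p, q):
    """Discrete D_KL(P || Q) in nats.

    p and q are probability lists over the same outcomes. Terms with
    p[i] == 0 contribute nothing; q[i] must be positive wherever p[i] is.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Illustrative values only, not taken from any real dataset.
baseline = [0.5, 0.3, 0.2]   # Q: expected distribution
observed = [0.1, 0.1, 0.8]   # P: observed distribution

same = kl_divergence(baseline, baseline)      # identical distributions
forward = kl_divergence(observed, baseline)   # D_KL(P || Q)
reverse = kl_divergence(baseline, observed)   # D_KL(Q || P)

print(f"same={same:.4f} forward={forward:.4f} reverse={reverse:.4f}")
```

Identical distributions give a divergence of zero, while the forward and reverse values differ, which is why it matters which distribution you treat as the baseline.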

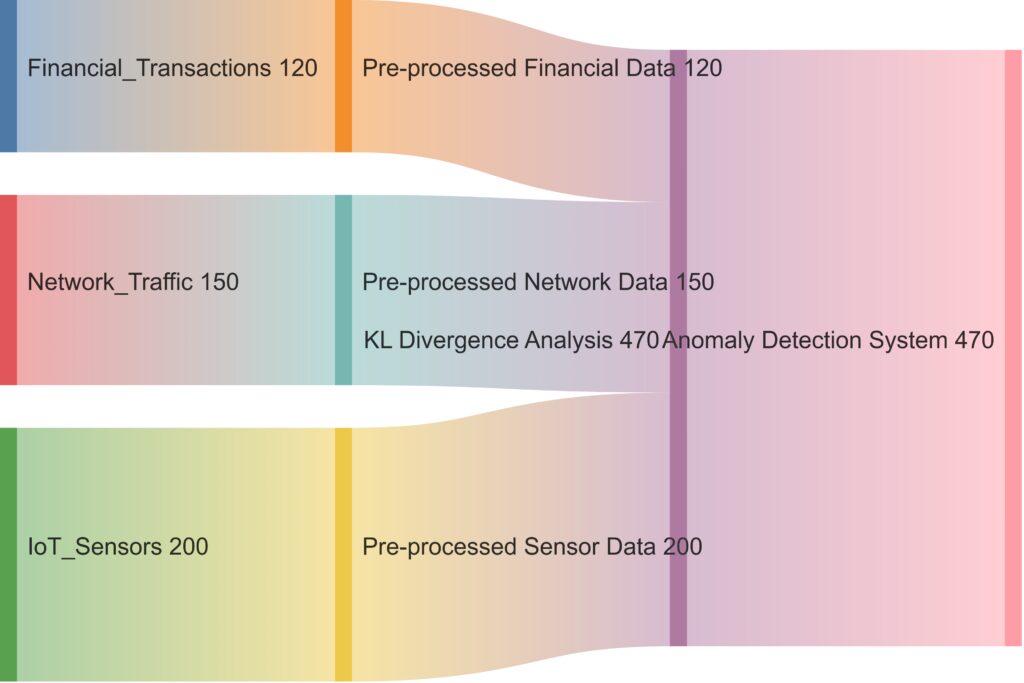

Implementing KL Divergence for Anomaly Detection in Real-Time Data Streams

Step 1: Setting Up Baseline Data

The first step in using KL divergence for real-time anomaly detection is establishing a baseline distribution. This baseline is usually derived from historical data that represents normal behavior for the monitored process or system. Depending on the application, baselines can vary widely—from daily stock price movements in finance to typical sensor readings in an industrial setup.

- Gather Historical Data: Collect data over a significant time period to capture normal variations.

- Calculate Probability Distributions: Transform the data into probability distributions that represent the expected state.
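As a rough sketch of these two steps, the snippet below bins a handful of historical readings into a histogram and normalizes the counts into a baseline distribution. The bin edges, the readings, and the helper name `to_distribution` are assumptions made for illustration. A real baseline would use far more data.

```python
import bisect

def to_distribution(values, edges):
    """Bin values into len(edges) - 1 bins and normalize to probabilities.

    Values outside the edge range are clamped into the first or last bin.
    """
    if not values:
        raise ValueError("need at least one value to build a distribution")
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        i = min(max(i, 0), n_bins - 1)
        counts[i] += 1
    return [c / len(values) for c in counts]

# Hypothetical temperature readings collected during normal operation.
historical = [20.1, 21.3, 21.8, 22.4, 22.9, 23.1, 21.5, 22.2, 20.8, 23.6]
edges = [18, 20, 22, 24, 26]   # assumed bin edges in degrees

baseline = to_distribution(historical, edges)
print(baseline)
```

Note that two bins end up empty here. Empty baseline bins cause problems later in the KL calculation, so in practice the counts are usually smoothed or the bins widened.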

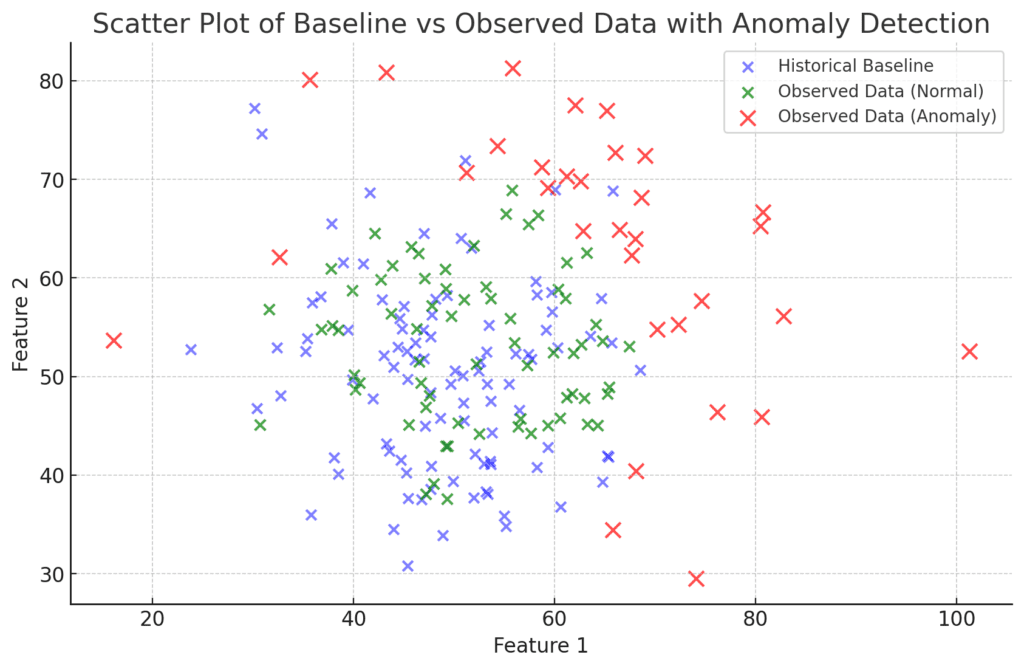

Blue points represent the historical baseline data.

Green points show real-time observed data within normal thresholds.

Red points indicate anomalies, which are outliers based on a specified threshold distance.

The size variation for red points highlights detected anomalies, allowing for easy identification.

Step 2: Monitoring Real-Time Data

Once the baseline is set, real-time data is continuously captured and evaluated against it. This real-time data forms the current distribution, denoted P, which is compared to the baseline distribution Q.

To detect anomalies, compute KL divergence periodically, such as every minute in high-frequency trading or every second for IoT sensor data.

- Compute Real-Time Distribution: Aggregate recent data points to form a real-time distribution.

- Apply the KL Divergence Formula: Using the KL formula, calculate the divergence value between the baseline and real-time distributions.
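The two steps above might look like the following sketch for categorical data. The category labels, the event counts, and the helper names are hypothetical. The code also assumes every category has appeared at least once in the baseline, so no probability in Q is zero.

```python
import math
from collections import Counter

def distribution(events, categories):
    """Relative frequency of each category in a batch of events."""
    counts = Counter(events)
    return [counts[c] / len(events) for c in categories]

def kl_divergence(p, q):
    """Discrete D_KL(P || Q); assumes q[i] > 0 wherever p[i] > 0."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0:
            total += pi * math.log(pi / qi)
    return total

categories = ["low", "normal", "high"]

# Hypothetical baseline: mostly "normal" readings.
baseline_events = ["low"] * 10 + ["normal"] * 80 + ["high"] * 10
# Hypothetical recent batch: "high" readings dominate.
recent_events = ["low"] * 2 + ["normal"] * 8 + ["high"] * 10

q = distribution(baseline_events, categories)   # baseline Q
p = distribution(recent_events, categories)     # real-time P
score = kl_divergence(p, q)
print(f"KL(P || Q) = {score:.4f}")
```

In a running system, the recent batch would be refreshed on the schedule chosen above, for example every second or every minute, and the score recomputed each time.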

Step 3: Setting Thresholds for Anomalies

Determining an appropriate threshold for KL divergence is critical to ensure accurate anomaly detection. This threshold often requires fine-tuning and can vary depending on the nature of the data and the frequency of anomalies.

- Dynamic Thresholds: For fluctuating environments like stock markets, thresholds may adjust based on volatility.

- Fixed Thresholds: In stable settings, such as temperature readings in a manufacturing plant, fixed thresholds might suffice.

When the divergence surpasses the threshold, it flags an anomaly, prompting further analysis or automated responses like alerts or system adjustments.
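A fixed-threshold check can be sketched in a few lines. The function name `monitor`, the callback, the scores, and the threshold value of 0.25 are all assumptions chosen for illustration. The callback stands in for whatever alerting or automated response your system uses.

```python
def monitor(scores, threshold, on_anomaly):
    """Call on_anomaly(index, score) for every score above the threshold.

    Returns the list of flagged indices.
    """
    flagged = []
    for i, score in enumerate(scores):
        if score > threshold:
            flagged.append(i)
            on_anomaly(i, score)
    return flagged

# Hypothetical KL divergence values from successive evaluation periods.
scores = [0.02, 0.03, 0.41, 0.05, 0.38]
alerts = []

flagged = monitor(scores, threshold=0.25,
                  on_anomaly=lambda i, s: alerts.append(f"period {i}: KL={s}"))
print(flagged)
print(alerts)
```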

Advantages of KL Divergence for Detecting Real-Time Irregularities

Fast and Efficient Anomaly Detection

KL divergence allows for quick comparisons of distributions, making it especially effective for real-time applications. In many cases, anomaly detection systems need to handle massive data volumes without compromising speed. KL divergence’s low computational requirements support high-speed processing, which is vital in time-sensitive industries like fraud detection.

Works Well with Unsupervised Learning

A significant benefit of KL divergence is its compatibility with unsupervised learning. Unlike supervised methods that require labeled data, KL divergence doesn’t depend on predefined categories. Instead, it uses a baseline distribution, which can be derived from unlabeled data, making it perfect for unsupervised learning scenarios.

Adaptability Across Various Data Types

From temperature readings to network traffic data, KL divergence can adapt to multiple data types, including continuous, discrete, and even multivariate distributions. This flexibility enables companies across industries to use KL divergence for diverse applications, such as energy usage monitoring, healthcare data analysis, or server load balancing.

Practical Applications of KL Divergence in Real-Time Data Analysis

Financial Market Anomaly Detection

Financial markets operate with vast quantities of real-time data, making anomaly detection essential to prevent losses. Using KL divergence, financial institutions can monitor deviations in stock prices, trading volumes, or market volatility. When KL divergence shows an unusual spike, it could signal irregular trading activity or potential fraud, enabling timely interventions.

- Stock Price Monitoring: KL divergence can track price movements and detect unusual spikes or drops, suggesting potential market manipulation.

- Algorithmic Trading Adjustments: For algorithmic trading, a rising KL divergence can serve as a signal to adjust or pause algorithms in response to rapid market changes, helping prevent trading losses.

Cybersecurity and Intrusion Detection

In cybersecurity, detecting anomalous patterns in network traffic is crucial for identifying potential security breaches. By setting a KL divergence threshold, systems can alert IT teams to unusual data flows, login patterns, or data access rates, which often precede cyber attacks.

- Network Traffic Analysis: KL divergence can highlight unusual traffic, such as a spike in data requests, which may indicate a Distributed Denial of Service (DDoS) attack.

- User Behavior Tracking: For user accounts, KL divergence helps identify deviations from normal behavior, such as accessing files at unusual times or from different IP addresses.

IoT and Industrial Monitoring

For the Internet of Things (IoT) and industrial applications, sensors continuously collect data on factors like temperature, humidity, and machinery status. KL divergence helps flag irregular sensor readings, suggesting equipment malfunctions or environmental issues that need immediate attention.

- Predictive Maintenance: KL divergence can signal machinery wear and tear, allowing for predictive maintenance that reduces unplanned downtime.

- Environmental Monitoring: In industrial setups, monitoring environmental data with KL divergence helps maintain safety standards and operational efficiency.

Advanced Applications of KL Divergence in Real-Time Systems

Fraud Detection in E-commerce and Banking

In the fight against fraud, e-commerce platforms and banks continuously analyze customer behavior to spot fraudulent activity. Here’s how KL divergence helps:

- Transaction Pattern Analysis: By calculating KL divergence between normal transaction patterns and real-time transactions, banks can detect irregularities such as unusually high spending or transactions from foreign locations.

- Payment Method Validation: Monitoring payment method distribution (e.g., credit card, debit card, mobile wallet) with KL divergence helps flag unauthorized payment methods or unusual purchase volumes.

With anomalies in transaction patterns flagged early, companies can protect customers and prevent losses from unauthorized transactions.

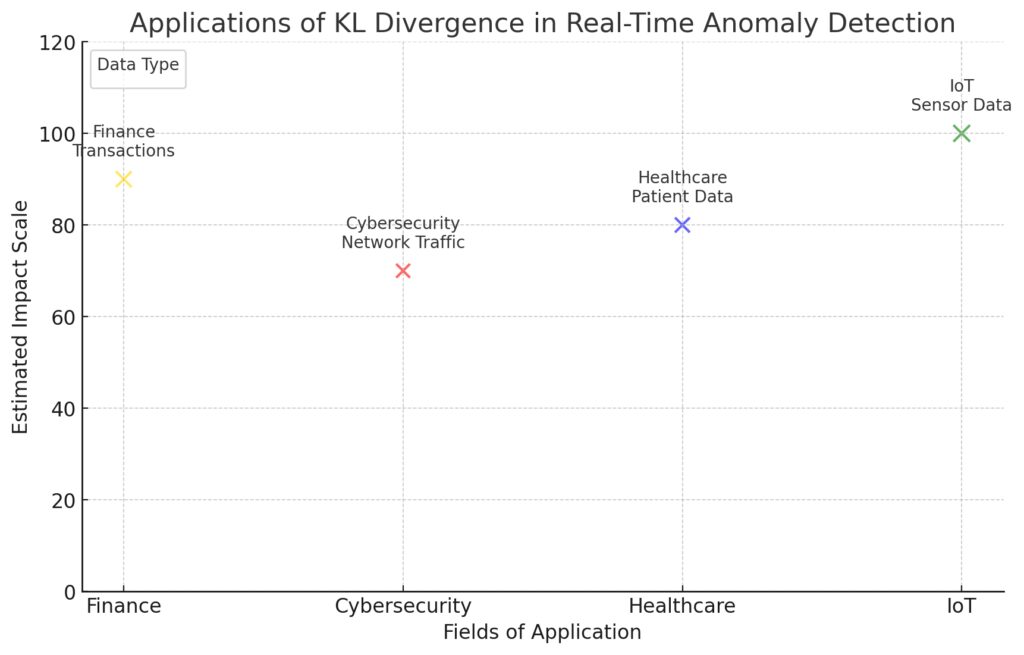

Bubble Size: Represents the estimated impact or scale of each application field.

Bubble Color: Gold for financial transactions in the finance sector, red for network traffic in cybersecurity, blue for patient data in healthcare, and green for sensor data in IoT.

This chart visually conveys both the scale of impact and the types of data monitored across different sectors, illustrating KL divergence’s application in anomaly detection across varied fields.

Healthcare Monitoring and Predictive Analysis

In healthcare, anomaly detection can be life-saving. Wearable devices, remote patient monitoring, and diagnostic tools can use KL divergence to identify deviations in patients’ vitals, helping to detect issues early on.

- Vital Sign Monitoring: Using KL divergence on baseline vitals, hospitals can detect critical anomalies in heart rate, blood pressure, or respiratory rate.

- Predictive Health Analytics: In chronic illness management, KL divergence can identify when a patient’s vitals are likely to indicate worsening symptoms, allowing for timely intervention.

In these cases, KL divergence enhances remote monitoring by providing an immediate alert when patients’ data deviates from expected norms.

Quality Control in Manufacturing

In manufacturing, maintaining consistent product quality is essential. Using KL divergence to monitor quality control metrics, such as material thickness, temperature, or output consistency, helps detect variations that could indicate production flaws.

- Product Consistency: Tracking KL divergence on product parameters in real time flags irregularities early, which helps reduce defective items.

- Process Stability: By assessing machinery parameters, KL divergence can detect potential faults or wear, enabling predictive maintenance.

This application ensures that issues are spotted in time, minimizing waste and keeping production lines running efficiently.

Optimizing KL Divergence Thresholds for Enhanced Anomaly Detection

Setting the Initial Thresholds

Selecting the right threshold is key to successful anomaly detection. Here are steps to consider for initial threshold settings:

- Analyze Historical Data: Start by analyzing past data and identifying typical variance levels.

- Experiment with Low Values: Initially, use a lower threshold to capture more anomalies. This provides insight into the data’s natural fluctuations.

- Increase for Stability: If too many false positives occur, raise the threshold incrementally until only significant deviations trigger alerts.
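One way to turn these steps into code is sketched below. It starts from a deliberately low threshold (the median of KL scores recorded during normal operation) and raises it in small steps until the number of false alerts on that same history is acceptable. The scores, the 10% step, the nearest-rank percentile, and the function names are all illustrative assumptions.

```python
import math

def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list, for 0 < pct <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def tune_threshold(normal_scores, start, max_false_alerts, step=1.1):
    """Raise the threshold until at most max_false_alerts normal scores exceed it."""
    if start <= 0 or step <= 1:
        raise ValueError("start must be positive and step greater than 1")
    threshold = start
    while sum(s > threshold for s in normal_scores) > max_false_alerts:
        threshold *= step
    return threshold

# Hypothetical KL scores observed while the system was behaving normally.
normal_scores = [0.01, 0.02, 0.015, 0.03, 0.012,
                 0.025, 0.018, 0.022, 0.011, 0.04]

start = percentile(normal_scores, 50)            # low initial threshold
tuned = tune_threshold(normal_scores, start, max_false_alerts=1)
print(f"start={start} tuned={tuned:.4f}")
```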

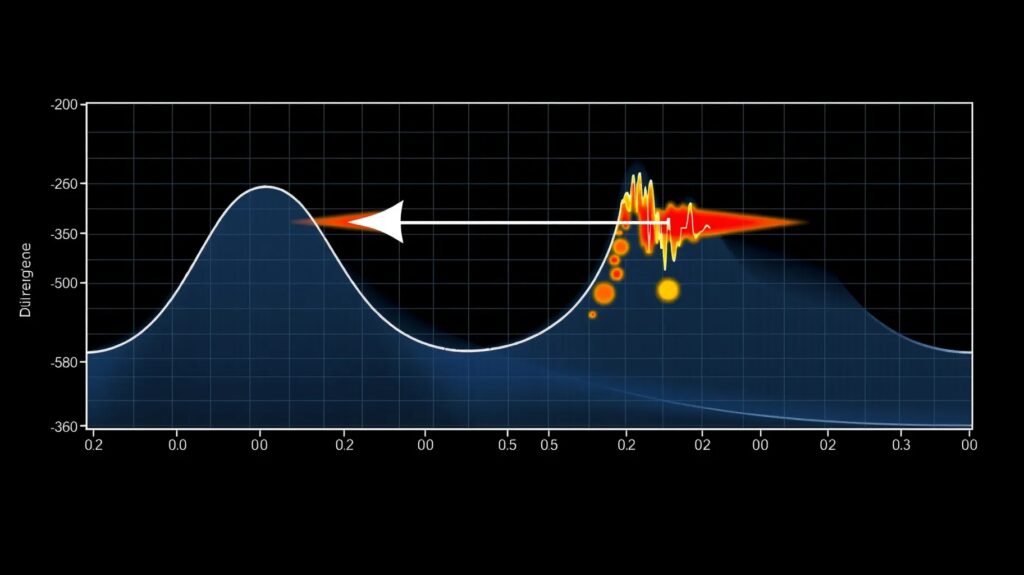

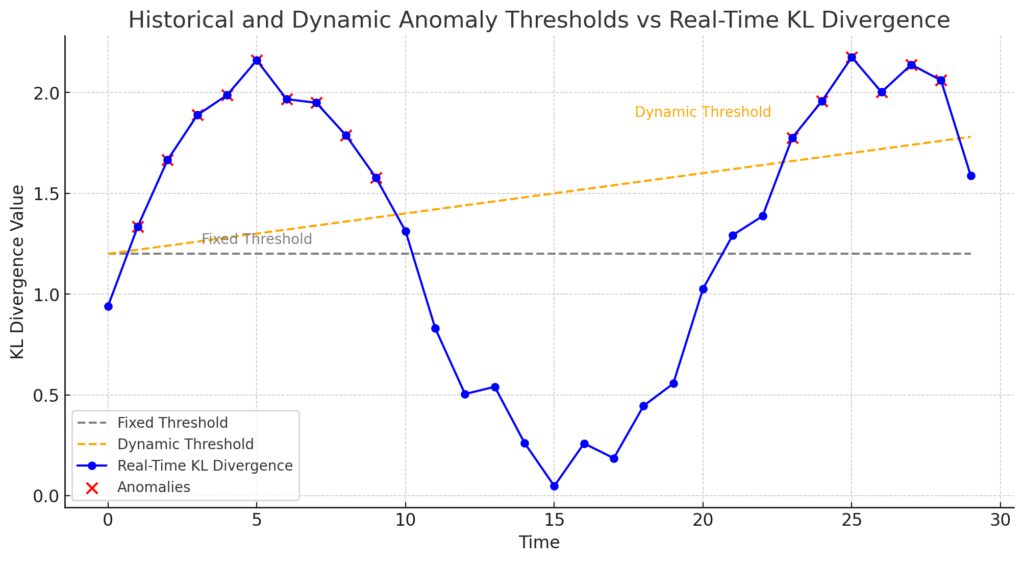

Fixed Threshold: Shown in gray as a constant benchmark.

Dynamic Threshold: Adjusts over time (in orange) to account for evolving baseline characteristics.

Real-Time KL Divergence: Plotted in blue, with anomalies marked in red where values exceed the dynamic threshold.

Annotations highlight the difference between fixed and dynamic thresholds, illustrating the system’s sensitivity to real-time changes and evolving benchmarks for anomaly detection.

Dynamic Threshold Adjustments

In many real-time applications, static thresholds may lead to false positives or missed anomalies. Dynamic thresholds, which adjust based on environmental or data changes, often provide more accurate results.

- Time-of-Day Adjustments: Certain systems, like network traffic or retail sites, experience natural fluctuations throughout the day. Implementing time-dependent thresholds helps prevent normal peaks from being flagged as anomalies.

- Seasonal Adjustments: In applications like energy usage monitoring, seasonal changes can alter baseline values. Incorporating a seasonal component into the threshold can improve accuracy over time.
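As a simple sketch of a time-of-day threshold, the snippet below groups historical KL scores by hour and sets each hour's threshold to the mean plus a multiple of the standard deviation. The multiplier `k`, the default threshold for unseen hours, and the sample history are assumptions. The same grouping idea could be applied per season instead of per hour.

```python
from collections import defaultdict
from statistics import mean, pstdev

def hourly_thresholds(history, k=3.0):
    """Build a per-hour threshold from (hour, kl_score) pairs seen in normal operation."""
    by_hour = defaultdict(list)
    for hour, score in history:
        by_hour[hour].append(score)
    return {h: mean(s) + k * pstdev(s) for h, s in by_hour.items()}

def is_anomalous(hour, score, thresholds, default=0.5):
    """Compare a score with the threshold for its hour (default if the hour is unseen)."""
    return score > thresholds.get(hour, default)

# Hypothetical history: quiet at 03:00, naturally busier at 14:00.
history = [(3, 0.01), (3, 0.02), (3, 0.015),
           (14, 0.10), (14, 0.12), (14, 0.11)]
thresholds = hourly_thresholds(history)

print(is_anomalous(3, 0.10, thresholds))    # same score, quiet hour
print(is_anomalous(14, 0.10, thresholds))   # same score, busy hour
```

The same score is judged differently depending on the hour, which is the point of a time-dependent threshold.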

Implementing Sliding Windows for Precision

To enhance accuracy, consider applying a sliding window approach, which continuously monitors recent data. Sliding windows help in identifying trends over short periods, making it easier to detect anomalies even in highly dynamic environments.

- Define Window Size: Choose an appropriate window size based on the frequency of data updates, such as 5-minute windows for high-frequency data or hourly windows for slower streams.

- Calculate Divergence for Each Window: Within each window, calculate KL divergence and compare it to the threshold.

- Set Trigger Levels for Sustained Anomalies: To avoid reacting to single-event anomalies, establish a trigger for sustained anomalies over several windows.
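The three steps above can be sketched with a fixed-length `deque` as the sliding window. The class of events, the window size, the threshold, the `sustain` count, and the add-one smoothing used to keep every probability non-zero are all assumptions for illustration.

```python
import math
from collections import Counter, deque

def kl_divergence(p, q):
    """Discrete D_KL(P || Q); assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def smoothed(counts, categories, alpha=1.0):
    """Category probabilities with an assumed pseudo-count alpha per category."""
    total = sum(counts[c] for c in categories) + alpha * len(categories)
    return [(counts[c] + alpha) / total for c in categories]

def detect_sustained(stream, baseline, categories, window_size, threshold, sustain):
    """Return indices where `sustain` consecutive full windows exceeded the threshold."""
    window = deque(maxlen=window_size)   # oldest events drop out automatically
    streak = 0
    alerts = []
    for i, event in enumerate(stream):
        window.append(event)
        if len(window) < window_size:
            continue                     # wait until the first window is full
        p = smoothed(Counter(window), categories)
        streak = streak + 1 if kl_divergence(p, baseline) > threshold else 0
        if streak == sustain:            # fire once per sustained run
            alerts.append(i)
    return alerts

categories = ["ok", "error"]
baseline = [0.9, 0.1]                            # hypothetical baseline Q
stream = ["ok"] * 20 + ["error"] * 20            # errors start at index 20

alerts = detect_sustained(stream, baseline, categories,
                          window_size=10, threshold=0.4, sustain=3)
print(alerts)
```

Requiring several consecutive windows above the threshold delays the alert slightly, but it keeps a single noisy window from triggering a response.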

Balancing False Positives and Missed Anomalies

Setting the ideal KL divergence threshold requires balancing false positives (normal events flagged as anomalies) and missed anomalies (true anomalies that go unflagged). Here’s how to fine-tune this balance:

- Penalty Scoring: Assign higher penalties to missed anomalies in critical systems to prevent system failure.

- Threshold Binning: Use multiple levels of thresholds, e.g., mild, moderate, severe, with corresponding alert levels, to help prioritize response efforts.

- Regular Reviews: Regularly review the system’s performance, adjusting thresholds based on recent anomalies to maintain accuracy.
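Here is a small sketch of threshold binning and penalty scoring. The severity cutoffs, the cost values, and the labeled validation data are hypothetical. The penalty function assumes you have at least a few past events that were confirmed as normal or anomalous.

```python
import bisect

LEVELS = ["normal", "mild", "moderate", "severe"]
CUTOFFS = [0.1, 0.3, 0.6]   # assumed KL cutoffs between adjacent levels

def severity(score):
    """Map a KL score to an alert level using the cutoffs above."""
    return LEVELS[bisect.bisect_right(CUTOFFS, score)]

def weighted_cost(scores, labels, threshold, fp_cost=1.0, fn_cost=5.0):
    """Total penalty for a threshold; missed anomalies cost more than false alarms."""
    cost = 0.0
    for score, is_anomaly in zip(scores, labels):
        flagged = score > threshold
        if flagged and not is_anomaly:
            cost += fp_cost          # false positive
        elif not flagged and is_anomaly:
            cost += fn_cost          # missed anomaly
    return cost

# Hypothetical validation set: KL scores with confirmed outcomes.
scores = [0.05, 0.2, 0.4, 0.7]
labels = [False, False, True, True]

for candidate in (0.1, 0.3, 0.5):
    print(candidate, weighted_cost(scores, labels, candidate))
print(severity(0.45))
```

Comparing the cost of a few candidate thresholds on past data gives a more principled starting point than picking a value by eye.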

Real-World Considerations and Challenges of Using KL Divergence

Handling Data Sparsity

In some cases, data may not be consistent enough for a reliable baseline, making KL divergence calculations tricky. For example, a sudden spike in network traffic at random intervals could throw off the threshold settings. To address this, consider:

- Combining with Other Methods: Use additional anomaly detection methods, like z-score or machine learning models, to strengthen results.

- Synthetic Data Augmentation: Generate synthetic data based on expected behavior to better understand baseline thresholds.
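Sparse data also creates a numerical problem: if the baseline assigns zero probability to a value that shows up in the observed data, the divergence is infinite. A common remedy, shown in the sketch below, is additive smoothing, where a small pseudo-count is added to every bin before normalizing. The pseudo-count `alpha=1.0` and the counts are illustrative assumptions, and smoothing is one option among several rather than a required step.

```python
import math

def kl_divergence(p, q):
    """Discrete D_KL(P || Q); returns infinity if Q is zero where P is not."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi == 0:
            continue
        if qi == 0:
            return math.inf
        total += pi * math.log(pi / qi)
    return total

def normalize(counts):
    """Plain relative frequencies."""
    return [c / sum(counts) for c in counts]

def smooth(counts, alpha=1.0):
    """Relative frequencies after adding a pseudo-count alpha to every bin."""
    total = sum(counts) + alpha * len(counts)
    return [(c + alpha) / total for c in counts]

baseline_counts = [40, 60, 0]   # third bin never seen in the baseline
window_counts = [3, 5, 2]       # but it appears in the recent window

raw = kl_divergence(normalize(window_counts), normalize(baseline_counts))
fixed = kl_divergence(smooth(window_counts), smooth(baseline_counts))
print(raw, round(fixed, 4))
```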

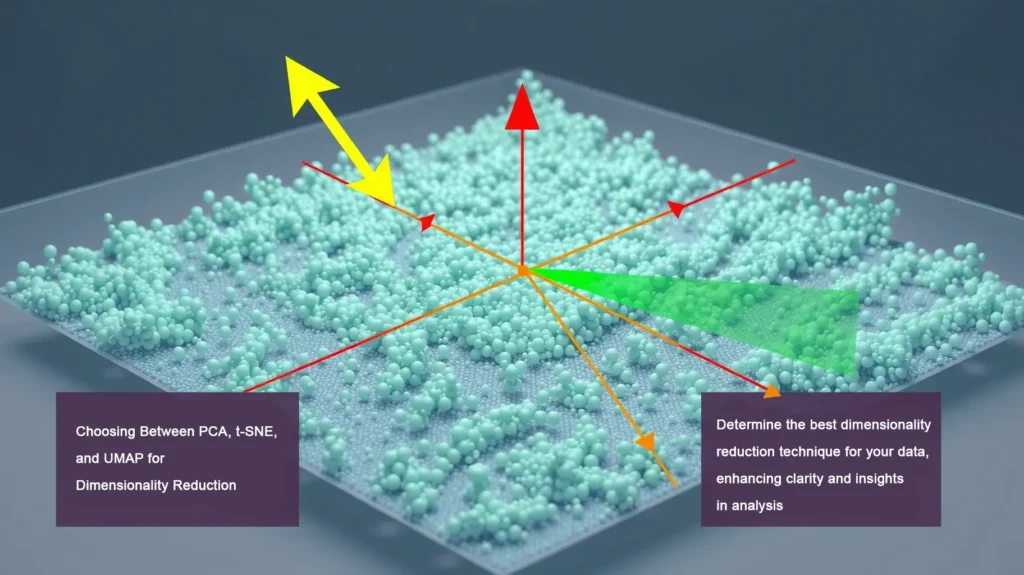

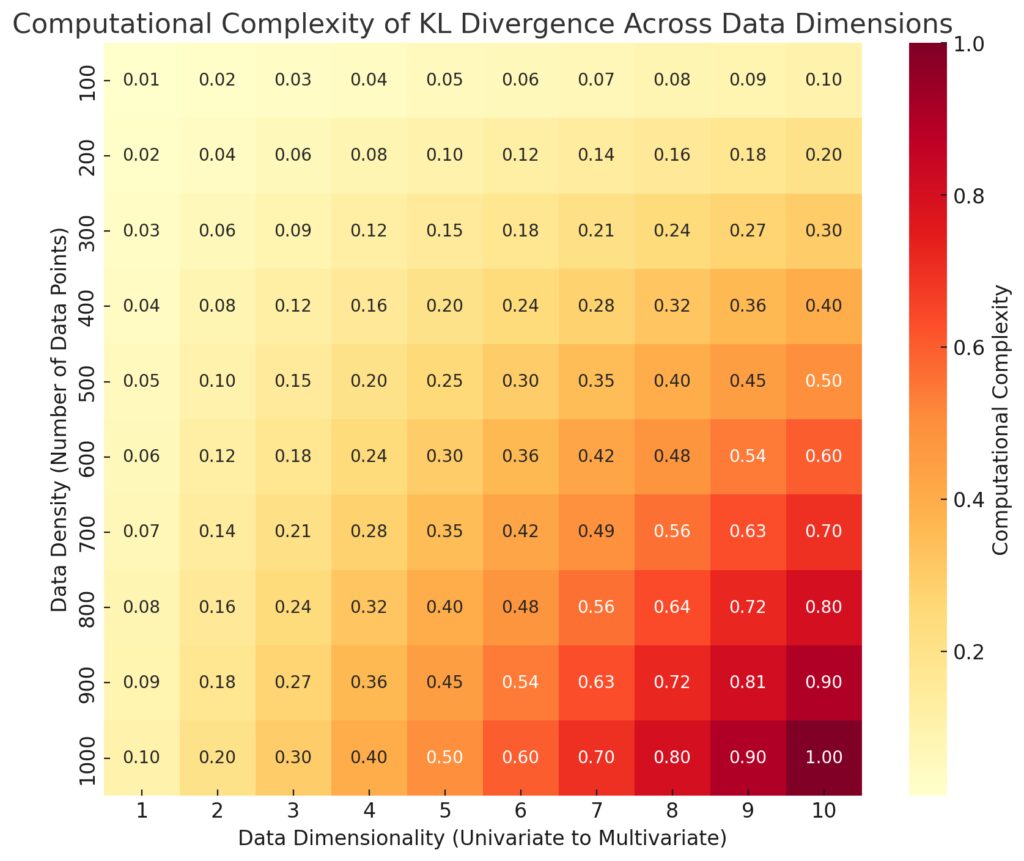

X-axis: Data dimensionality, ranging from univariate (1 dimension) to higher-dimensional multivariate data (up to 10 dimensions).

Y-axis: Data density, showing the number of data points, from 100 to 1000.

Color Gradient: Complexity increases (from yellow to dark red) as both data density and dimensionality rise, illustrating the computational challenges of KL divergence in high-dimensional, dense datasets.

Managing High-Dimensional Data

KL divergence is more complex when dealing with high-dimensional data, such as multi-variable sensor readings or combined network parameters. Here are some ways to tackle this:

- Dimensionality Reduction: Use techniques like Principal Component Analysis (PCA) to reduce data dimensions, which simplifies the distributions while retaining most of the variance in the data.

- Multi-Feature Divergence Calculation: Calculate KL divergence for individual features, then use the cumulative score to detect an anomaly.
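The multi-feature approach can be sketched as follows. The feature names and probabilities are made up for illustration. Keep in mind that the sum of per-feature divergences only equals the divergence of the joint distribution when the features are independent, so the cumulative score is best treated as a heuristic.

```python
import math

def kl_divergence(p, q):
    """Discrete D_KL(P || Q); assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

def multi_feature_score(observed, baseline):
    """Return (cumulative score, per-feature scores) for dicts of distributions."""
    per_feature = {name: kl_divergence(observed[name], baseline[name])
                   for name in baseline}
    return sum(per_feature.values()), per_feature

# Hypothetical binned distributions for two sensor features.
baseline = {"temperature": [0.2, 0.6, 0.2], "vibration": [0.7, 0.2, 0.1]}
observed = {"temperature": [0.2, 0.6, 0.2], "vibration": [0.2, 0.3, 0.5]}

total, per_feature = multi_feature_score(observed, baseline)
worst = max(per_feature, key=per_feature.get)
print(f"total={total:.4f} worst feature={worst}")
```

Keeping the per-feature scores alongside the total makes it easier to see which feature drove an alert.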

Continuous Learning and Adaptation

Anomaly detection systems often benefit from machine learning that allows them to continuously adapt to new data. Integrating a feedback loop lets the system learn from false positives and refine its thresholds over time.

- Feedback Mechanisms: Allow users or automated systems to validate each flagged anomaly, feeding back results to the algorithm.

- Adaptive Thresholding: Use machine learning models to dynamically adjust thresholds based on recent data, seasonal trends, and learned patterns.
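A full machine learning model is beyond a short example, so the sketch below swaps in a much simpler rule-based feedback update to show the idea. Whenever a reviewer reports that the detector got a case wrong, the threshold moves a small step toward the misclassified score. The function name, the `rate` parameter, and the numbers are assumptions, not an established algorithm.

```python
def update_threshold(threshold, score, was_anomaly, rate=0.1):
    """Nudge the threshold toward a misclassified score; leave it alone otherwise.

    A false positive (flagged but normal) raises the threshold.
    A missed anomaly (not flagged but anomalous) lowers it.
    """
    flagged = score > threshold
    if flagged != was_anomaly:
        return threshold + rate * (score - threshold)
    return threshold

threshold = 0.3
raised = update_threshold(threshold, score=0.5, was_anomaly=False)   # false positive
lowered = update_threshold(threshold, score=0.2, was_anomaly=True)   # missed anomaly
kept = update_threshold(threshold, score=0.5, was_anomaly=True)      # correct alert
print(raised, lowered, kept)
```

A small `rate` keeps a single piece of feedback from swinging the threshold too far.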

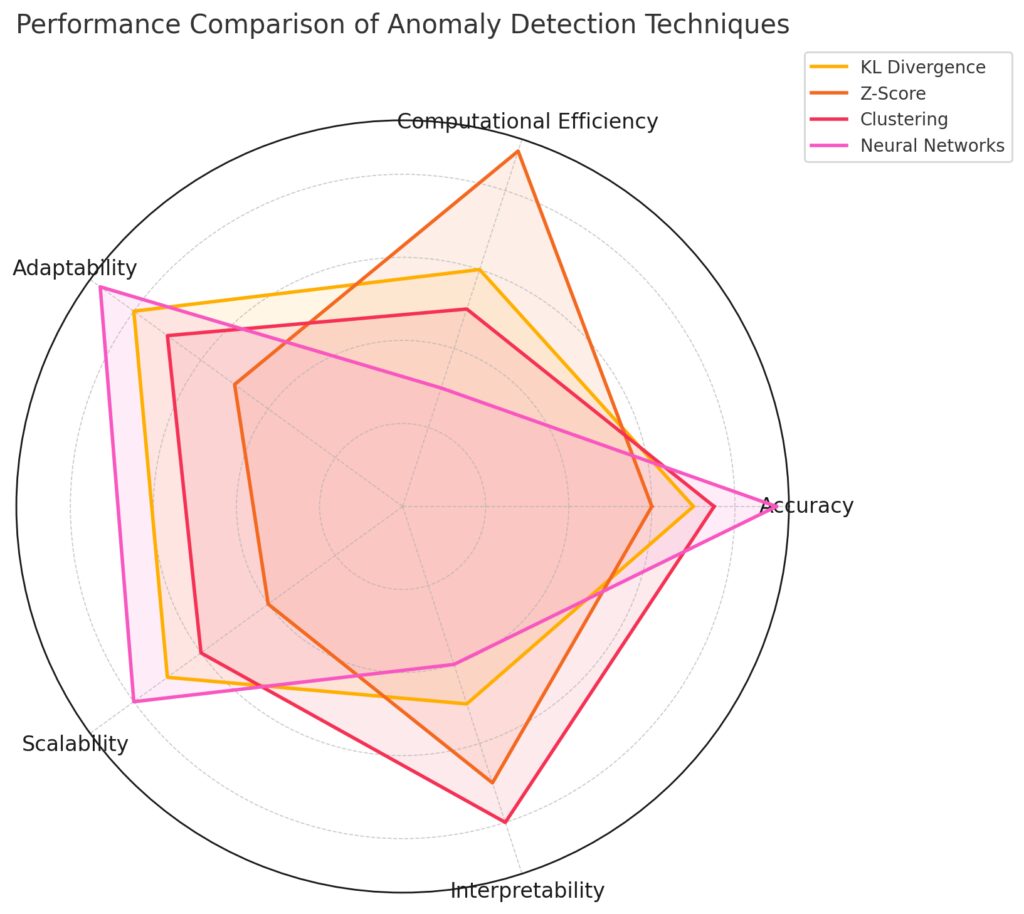

Metrics: Accuracy, computational efficiency, adaptability, scalability, and interpretability.

Technique Profiles: KL Divergence shows strength in adaptability and scalability.

Z-Score scores high in computational efficiency and interpretability.

Clustering balances interpretability and accuracy.

Neural Networks excel in accuracy and adaptability but show lower computational efficiency.

With these advanced applications, optimization techniques, and practical considerations, you’re now equipped with the knowledge needed to make KL divergence a powerful tool in real-time anomaly detection. It’s clear that KL divergence offers a versatile, scalable, and adaptive approach for handling anomalies across multiple industries and data environments.

Key Takeaways and Conclusion: Leveraging KL Divergence in Anomaly Detection

KL divergence has proven itself as an effective tool for detecting anomalies in real-time data streams. By identifying deviations in data distributions, it empowers organizations to act swiftly in high-stakes scenarios, from finance and cybersecurity to healthcare and industrial applications. Here’s a quick summary of what we’ve covered and how KL divergence can be applied in your own anomaly detection initiatives.

Summary of Key Points

- Understanding KL Divergence: KL divergence measures how one probability distribution differs from another, making it ideal for real-time monitoring.

- Real-Time Applications: From stock price monitoring to IoT sensor readings, KL divergence offers a sensitive, unsupervised method to detect irregularities.

- Advanced Techniques: Dynamic thresholds, sliding windows, and threshold binning ensure that KL divergence can flexibly adapt to data changes without triggering too many false positives.

- Practical Challenges: High-dimensional data, data sparsity, and continuously changing baselines are manageable through techniques like dimensionality reduction and continuous learning.

Final Thoughts on Implementing KL Divergence

For businesses aiming to proactively detect irregularities in their data flows, KL divergence offers a low-complexity yet highly accurate approach to detecting the unexpected. As data environments grow in complexity, combining KL divergence with machine learning models for adaptive thresholding and continuous feedback can make it even more effective. This way, real-time anomaly detection becomes not just about spotting outliers but about continuously learning and evolving to protect and optimize critical systems.

FAQs on KL Divergence in Anomaly Detection

What types of data work best with KL divergence?

KL divergence works well with both continuous and discrete data types, as well as multivariate distributions. It’s versatile enough for applications ranging from stock price analysis and industrial quality control to network traffic monitoring. For high-dimensional data, techniques like dimensionality reduction can help KL divergence remain effective by focusing on key features without overwhelming the calculation.

How are thresholds set when using KL divergence for anomaly detection?

Thresholds in KL divergence-based anomaly detection are typically set based on historical data, experimentation, and tuning to avoid too many false positives or missed anomalies. Dynamic thresholds are often useful, adjusting based on time-of-day patterns or seasonal variations. Regularly reviewing and adjusting thresholds based on recent data ensures the system stays aligned with evolving patterns.

Can KL divergence be used with machine learning?

Yes, KL divergence can be a valuable feature within machine learning models for anomaly detection. In fact, combining KL divergence with adaptive machine learning algorithms allows for real-time adjustment of thresholds and more accurate anomaly detection as the system learns from feedback. KL divergence can also serve as an input to clustering algorithms or neural networks that specialize in identifying outliers.

What are the limitations of using KL divergence in real-time data?

KL divergence can face limitations when dealing with very sparse or highly noisy data, as it relies on having a reliable baseline distribution. High-dimensional data can also complicate the calculations, though this is often mitigated by techniques like dimensionality reduction or separating features before calculating divergence. Additionally, selecting the right threshold requires careful tuning to avoid excessive false positives or missed anomalies.

How is KL divergence used to detect anomalies in cybersecurity?

In cybersecurity, KL divergence is often used to monitor network traffic and user behavior for signs of irregularities, like unusual login attempts or data access patterns. By setting a baseline based on normal network patterns, cybersecurity systems can use KL divergence to spot deviations that indicate potential intrusions or cyber-attacks, such as Distributed Denial of Service (DDoS) activity or unauthorized access attempts.

What are some industries where KL divergence is commonly applied for anomaly detection?

KL divergence is applied across diverse industries, including finance, healthcare, manufacturing, cybersecurity, and energy management. Its ability to adapt to different types of data and identify anomalies in real-time makes it particularly valuable in these fields. For instance, it’s used in finance to detect fraud, in healthcare to monitor patient vitals, and in manufacturing to ensure consistent product quality.

How does KL divergence handle real-time data changes?

KL divergence is particularly well-suited to detect gradual or sudden shifts in real-time data distributions. As new data comes in, the system recalculates the divergence between the current data and the baseline distribution. For highly dynamic systems, such as stock markets or IoT sensor networks, a sliding window approach can be used to adjust the baseline to recent data, ensuring that KL divergence detects anomalies relevant to the most current conditions.

Can KL divergence detect both minor and major anomalies?

Yes, KL divergence can identify both minor fluctuations and major deviations, depending on the threshold set. Lower thresholds allow the system to catch more subtle, minor shifts, which is beneficial in sensitive environments like financial trading or cybersecurity where small changes may indicate emerging threats. Higher thresholds focus on major deviations, ideal for applications where only large-scale anomalies are of concern, such as predictive maintenance in manufacturing.

How does KL divergence compare to z-score in anomaly detection?

While both KL divergence and z-score can detect anomalies, they function differently. Z-score detects outliers based on a single variable’s deviation from its mean, which works well for univariate data. KL divergence, however, measures divergence across entire distributions, making it more effective for multivariate or complex data structures where anomalies may arise from shifts in the entire dataset rather than a single variable. This makes KL divergence more robust for multifactor anomaly detection.
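The difference is easiest to see on a contrived example. In the sketch below, the recent window has the same mean as the baseline and no single value with a large z-score, yet its shape has changed completely. The data, the cutoff of 3 for z-scores, and the helper names are assumptions chosen to make the contrast visible.

```python
import math
from collections import Counter
from statistics import mean, pstdev

def kl_divergence(p, q):
    """Discrete D_KL(P || Q); assumes q[i] > 0 wherever p[i] > 0."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)

# Hypothetical readings: the baseline clusters around 5,
# while the window has split into two groups around it.
baseline = [4, 5, 5, 5, 6] * 20
window = [4] * 10 + [6] * 10

mu, sigma = mean(baseline), pstdev(baseline)
max_abs_z = max(abs(v - mu) / sigma for v in window)   # largest per-point z-score

values = sorted(set(baseline))

def dist(samples):
    """Relative frequency of each baseline value in the samples."""
    counts = Counter(samples)
    return [counts[v] / len(samples) for v in values]

score = kl_divergence(dist(window), dist(baseline))
print(f"max |z| = {max_abs_z:.2f}, window mean = {mean(window)}, KL = {score:.4f}")
```

No point in the window would cross a z-score cutoff of 3, and the window mean matches the baseline mean, but the KL divergence is clearly above zero because the distribution itself has changed.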

What are the computational demands of KL divergence?

KL divergence is generally computationally light, making it feasible for real-time applications. Since it requires only the distribution of observed data and the baseline distribution, it can be recomputed cheaply as new data arrives. In high-dimensional data, however, computational demands may increase, necessitating optimization techniques like dimensionality reduction. For resource-limited applications, such as IoT devices, KL divergence remains viable if paired with sliding windows and focused feature selection.

Is KL divergence suitable for use in unsupervised anomaly detection?

Yes, KL divergence is inherently suited for unsupervised anomaly detection because it doesn’t rely on labeled data. By simply comparing real-time data with a baseline distribution, it can detect anomalies without needing predefined “normal” or “abnormal” categories. This capability makes it ideal for applications like network traffic analysis, where patterns may constantly shift, and labeled datasets are either impractical or unavailable.

How frequently should KL divergence calculations be performed in real-time applications?

The frequency of KL divergence calculations depends on the specific needs of the application and the data update rate. For high-frequency trading or cybersecurity, recalculating KL divergence every few seconds to a minute ensures timely detection of anomalies. For less sensitive applications, like daily energy monitoring or periodic equipment checks, hourly or daily calculations may be sufficient. Sliding window techniques allow for tailored frequency settings that capture relevant trends without overwhelming computational resources.

Can KL divergence detect anomalies in multivariate time series data?

Yes, KL divergence can handle multivariate time series data, making it suitable for complex applications such as predictive maintenance and financial portfolio monitoring. By applying KL divergence to each feature or using a combined distribution, it can monitor changes across multiple dimensions. For efficient handling, data preprocessing techniques like Principal Component Analysis (PCA) can reduce the dataset to a manageable form, making KL divergence calculations faster and more reliable for real-time detection.

What role does KL divergence play in predictive maintenance?

In predictive maintenance, KL divergence is used to monitor equipment parameters—like temperature, vibration, and operational speed—that could indicate wear and tear. By comparing real-time data distributions with baseline conditions, KL divergence can detect subtle changes signaling early mechanical issues. This proactive approach allows for maintenance before a breakdown, reducing costs and minimizing downtime by addressing potential issues well in advance.

Are there any preprocessing steps required before applying KL divergence?

Yes, some preprocessing may be needed for optimal KL divergence performance. Normalizing or standardizing data helps ensure consistent comparisons, especially for multivariate data with different scales. In applications with noisy data, applying smoothing or filtering techniques can reduce erratic spikes that may otherwise trigger false positives. For large datasets, sampling can help streamline computations without sacrificing accuracy, especially in cases where computational efficiency is critical, such as IoT or edge computing.

Resources

Tools and Libraries

- SciPy Library (Python): SciPy Documentation (for KL divergence function)

- TensorFlow and PyTorch: TensorFlow KL Divergence Documentation; PyTorch KL Divergence Documentation

- Streamlit: Streamlit Homepage (for real-time data visualization and monitoring)

Blogs and Websites

- Towards Data Science: Towards Data Science KL Divergence Articles

- Analytics Vidhya: Analytics Vidhya KL Divergence and Anomaly Detection

- Google AI Blog: Google AI Blog (search for articles related to anomaly detection and KL divergence)