Kolmogorov Complexity and the Quest to Model Human Cognition

As artificial intelligence advances, scientists, mathematicians, and philosophers alike are grappling with a fascinating question: is intelligence compressible?

In other words, can we represent the essence of human cognition in a simplified, compressed format without losing its core functionality or creativity? The concept of Kolmogorov Complexity provides a lens through which to examine this question, offering a mathematical foundation for understanding the boundaries of complexity, patterns, and the potential for AI to model aspects of human intelligence.

This article delves into Kolmogorov Complexity, exploring how it connects to AI, the limits of compressing human intelligence, and why cognitive models may never capture everything that makes human intelligence unique.

Understanding Kolmogorov Complexity: The Basics of Compression

What Is Kolmogorov Complexity?

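Kolmogorov Complexity, named after mathematician Andrey Kolmogorov, is the length of the shortest program (written in some fixed programming language) that produces a particular string or dataset. Changing the language shifts the value by at most an additive constant, so the measure is meaningful regardless of that choice. Essentially, it’s a measure of how far a dataset can be compressed without losing any information.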

For example, if you have a sequence like “1010101010,” Kolmogorov Complexity asks how short a program can be while still reproducing this sequence exactly. Since “1010101010” has a simple, repeating structure, a compact program can describe it, and the advantage grows as the sequence gets longer. On the other hand, a completely random string of the same length would require a program roughly as long as the string itself, because no simpler underlying structure exists.
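To make the contrast concrete, here is a minimal sketch in Python. The choice of Python and the variable names are illustrative assumptions, not part of the formal definition. It compares the source length of a program that stores the string verbatim with one that describes its pattern.

```python
# Two candidate programs (held as source text) that print the same string.
literal_program = 'print("1010101010")'   # stores every character verbatim
loop_program = 'print("10" * 5)'          # describes the repeating pattern

target = "10" * 5
print(target)
print("verbatim program length:", len(literal_program))
print("pattern program length: ", len(loop_program))

# For a longer string the gap widens: the verbatim program grows with the
# string, while the pattern program only needs more digits in the count.
long_literal = 'print("' + "10" * 500 + '")'
long_loop = 'print("10" * 500)'
print("verbatim (1000 chars):", len(long_literal))
print("pattern  (1000 chars):", len(long_loop))
```

The lengths here depend on Python's syntax, so they are only a stand-in for the true Kolmogorov Complexity, which is defined over the shortest program of all.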

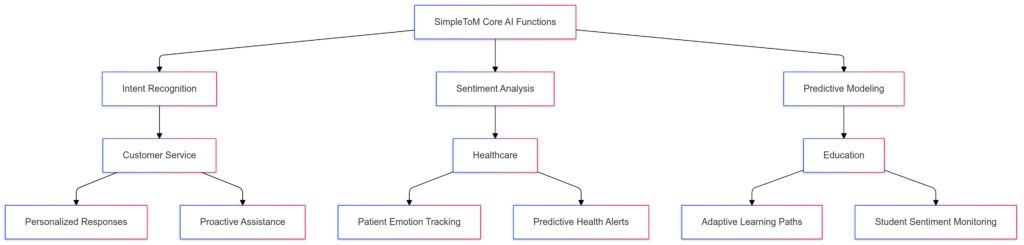

Applications: Customer Service: Includes Personalized Responses and Proactive Assistance.

Healthcare: Features Patient Emotion Tracking and Predictive Health Alerts.

Education: Offers Adaptive Learning Paths and Student Sentiment Monitoring.

Applying Kolmogorov Complexity to Patterns in Intelligence

Kolmogorov Complexity implies that structured data can be reduced, but random data resists compression. When we consider intelligence, particularly human intelligence, this idea becomes provocative. If our thoughts, decisions, and even creative outputs have structure, could they theoretically be compressed?
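The difference between structured and random data can be observed with an ordinary compressor. The sketch below uses Python's standard `zlib` module as a rough stand-in: a compressed size is only an upper-bound proxy for Kolmogorov Complexity, and the helper name `compressed_size` is a hypothetical one chosen for this illustration.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Return the size in bytes of the zlib-compressed data (level 9)."""
    return len(zlib.compress(data, 9))


structured = b"10" * 5000         # 10,000 bytes with an obvious repeating pattern
random_like = os.urandom(10000)   # 10,000 bytes from the OS random source

structured_size = compressed_size(structured)
random_size = compressed_size(random_like)

print("original size:        ", len(structured))
print("structured compressed:", structured_size)
print("random compressed:    ", random_size)
```

The patterned input shrinks to a small fraction of its size, while the random input stays at roughly its original length.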

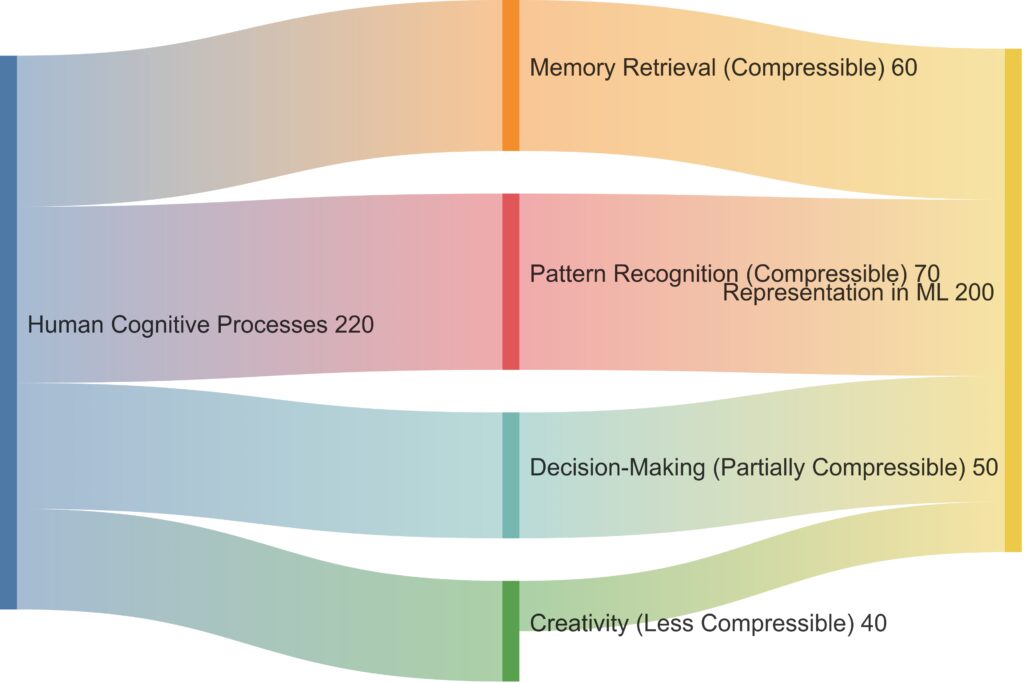

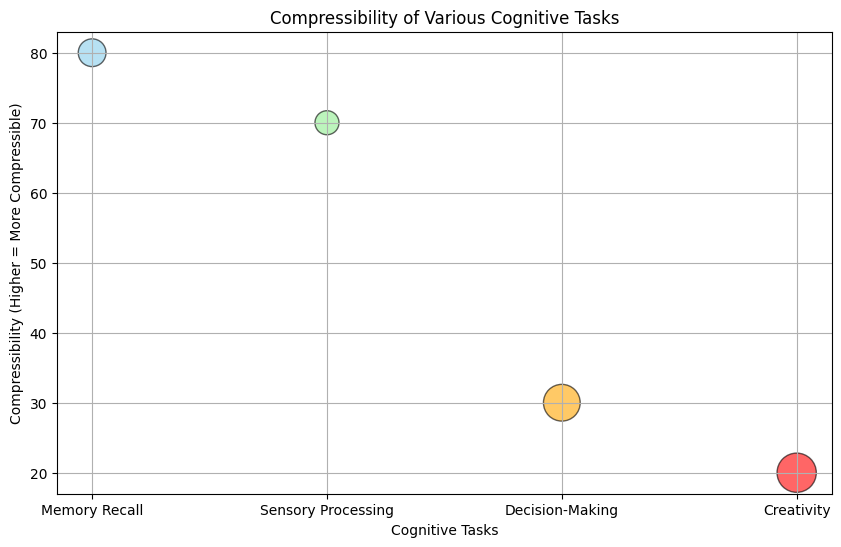

Some neuroscientists and cognitive scientists propose that certain aspects of human cognition, like memory retrieval and sensory processing, are inherently patterned and thus potentially compressible. However, randomness and individuality in decision-making may introduce complexity that challenges simple compression.

Decision-Making: Partially compressible, with some loss of complexity.

Creativity: Less compressible, with significant information loss in the transition to machine learning models.

Key Limitations of Kolmogorov Complexity

Despite its allure, Kolmogorov Complexity is limited by computational boundaries. It is uncomputable: no algorithm can take an arbitrary string and return its exact Kolmogorov Complexity, because we cannot in general rule out a shorter, unknown program that generates the same string. In practice we can only establish upper bounds. For cognitive science, this suggests there may always be nuances of human thought that remain beyond complete, compressible understanding.

Intelligence and Compression: Can Human Cognition Be Encoded?

Breaking Down Cognitive Processes

Human cognition involves numerous processes—pattern recognition, memory, decision-making, and creativity. Each of these processes has unique levels of complexity and structure. Memory, for instance, relies on associations, which could hypothetically be simplified. But creativity, a domain often characterized by novelty and unpredictability, might resist such reductions.

In fields like machine learning, AI systems are already being trained to model aspects of human cognition by identifying and compressing patterns in vast datasets. But are these models capable of achieving true intelligence, or are they merely mimicking patterns without genuine understanding?

The Limits of Pattern Recognition in AI

Artificial intelligence systems, particularly those that use deep learning, excel at recognizing and reproducing patterns. This pattern recognition capability has led to breakthroughs in image processing, natural language processing, and even art generation. However, AI struggles with contextual understanding—it can replicate language but often fails to grasp the subtleties or implications behind it.

Figure: bubble chart of cognitive tasks (Memory Recall, Sensory Processing, Decision-Making, Creativity) on the x-axis against compressibility on the y-axis, where higher values mean more compressible. Bubble size represents task complexity, with larger bubbles for less compressible tasks such as Decision-Making and Creativity.

For true human-like intelligence, a model would need to integrate not just patterns but also intention, emotion, and situational awareness. These elements are notoriously difficult to quantify and compress, hinting that while intelligence may have compressible components, it’s not fully reducible.

Kolmogorov Complexity vs. Algorithmic Complexity in Human Thought

The Role of Algorithmic Complexity in Understanding the Mind

Algorithmic complexity is sometimes used as another name for Kolmogorov Complexity. In this article it is used in the sense of computational complexity: the computational difficulty, in time and memory, of solving a problem or generating an outcome. In the human mind, algorithmic complexity might apply to complex reasoning or decision-making processes where multiple factors are weighed and balanced.

This kind of complexity contrasts with simple, compressible patterns because it often requires contextual data and dynamic decision-making, which can’t easily be reduced. For example, writing a novel or solving an ethical dilemma involves layers of understanding and personal experience that defy simplistic compression.

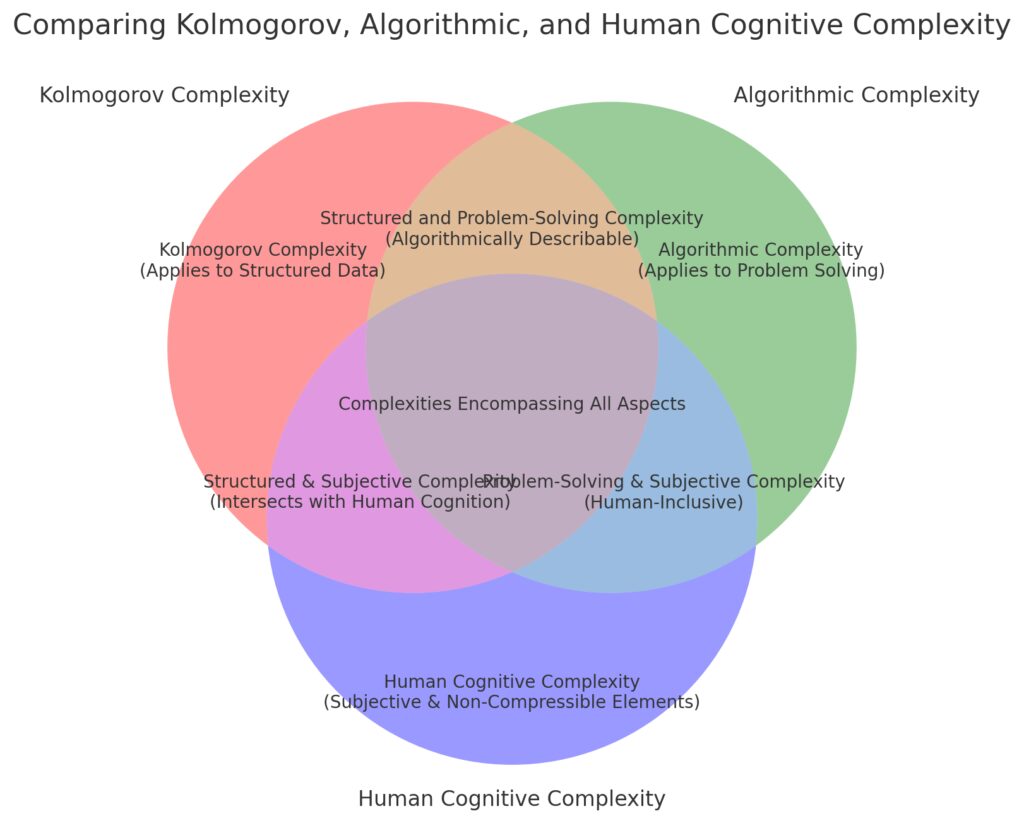

Algorithmic Complexity: Relates to problem-solving tasks, where processes are algorithmically definable.

Human Cognitive Complexity: Includes subjective and non-compressible elements, extending beyond structured and algorithmic definitions.

This diagram highlights overlaps where:

Kolmogorov and Algorithmic complexities intersect for structured, problem-solving data.

Human Cognitive Complexity partially overlaps with both but also includes unique cognitive elements that are subjective and not easily compressible.

Can Cognitive Models Capture This Complexity?

Some argue that a sufficiently advanced model could capture algorithmic aspects of intelligence, like logic and structured reasoning. However, the difficulty lies in modeling higher-level cognitive functions, such as creativity, that might operate in ways closer to randomness or unpredictability. As such, we may achieve a model that simulates intelligence but lacks the organic complexity of the human mind.

In essence, while Kolmogorov Complexity may help us understand compressible aspects of cognition, the higher-order aspects of human intelligence remain elusive.

Unpacking Human Intelligence: Where Compression Falls Short

Non-linear Thinking and Emotional Contexts

Humans are unique in their ability to think non-linearly, jumping from one idea to another in seemingly unrelated ways. This “lateral thinking” style often incorporates emotional contexts and past experiences that are incredibly hard to quantify or compress. For instance, a memory triggered by a particular smell involves sensory data, emotions, and contextual details that give it depth but resist reduction.

Such richness in human thought highlights the limitations of current cognitive models. While Kolmogorov Complexity might capture the “what” of certain thought patterns, it struggles with the “why” behind them—the meaning we assign to our experiences.

The Complexity of Empathy and Social Intelligence

Empathy is another dimension of human intelligence that defies simple explanation. Understanding others’ feelings, intentions, and perspectives involves interpreting subtle cues and context, a process that emerges over years of personal experience. The relational, dynamic nature of empathy makes it especially challenging to compress into a program or mathematical model.

Attempts to model empathy in AI, such as sentiment analysis, fall short of true comprehension. They capture words and phrases associated with emotions but lack the nuanced understanding of the situational context or cultural background that makes human empathy complex.

Creativity: The Ultimate Test of Compressibility?

Can AI Truly Be Creative?

Creativity is often regarded as the pinnacle of human intelligence, encompassing imagination, innovation, and the ability to produce something novel and meaningful. The unpredictable and deeply personal nature of creativity poses the biggest challenge to models seeking to replicate human cognition. Viewed through the lens of Kolmogorov Complexity, creativity resists compression because it often involves combinations and innovations that are context-dependent and unique.

Current AI systems can generate impressive creative works, from paintings to music compositions. However, these are usually based on patterns from existing works rather than true invention. AI’s creativity may be compelling, but it lacks the originality and intention that characterize human creativity.

The “Human Factor” in Creative Thought

Human creativity is also tied to motivation and purpose. When a person writes a song, it may be to process a personal experience, convey an idea, or connect with an audience. These intentional layers add depth and meaning that are absent in machine-generated creativity. This suggests that while parts of intelligence are compressible, the core of what makes us human may forever defy reduction to patterns or programs.

In the quest to compress and replicate human intelligence, Kolmogorov Complexity provides both a fascinating tool and a reminder of the inherent limitations in capturing the essence of the mind. Understanding intelligence through compression reveals as much about the limits of AI as it does about the complexity and richness of human cognition.

The Mystery of Self-Awareness: Can It Be Encoded?

Understanding Self-Awareness in Human Cognition

Self-awareness—the capacity to reflect on one’s thoughts, emotions, and actions—is a cornerstone of human intelligence that sets us apart from machines. Unlike external behaviors or choices, self-awareness involves an internal sense of identity and introspection that’s hard to pinpoint or represent through data.

Kolmogorov Complexity provides tools to analyze pattern-based behaviors, but it stumbles when attempting to represent an inner sense of self. Self-awareness is not merely a pattern but an ongoing, adaptive process that changes with new experiences. Modeling this would mean capturing a constantly evolving state that defies stable compression.

The Challenge of Encoding Conscious Experience

Consciousness is often described as a “hard problem” because it involves subjective experience—something no algorithm or program can fully replicate. While neuroscientists and cognitive scientists have attempted to understand consciousness through neural activity and patterns, they have yet to find a way to compress or quantify it in a way that mirrors human self-awareness.

From a Kolmogorov perspective, consciousness is uniquely resistant to compression because it doesn’t merely generate outputs based on inputs; it interprets, feels, and reflects, which adds an intangible layer to human intelligence.

Language, Metaphor, and the Limits of Meaning

How Language Expands Cognitive Complexity

Human language is a powerful example of our cognitive complexity, going beyond simple information transfer to include metaphor, nuance, and abstraction. We often speak in metaphors and idioms, using language to express ideas that are more than the sum of their parts. This inherent flexibility is a huge obstacle to compressing or encoding intelligence, as language reflects more than literal meaning.

Natural language processing (NLP) in AI has made strides in capturing basic language patterns, yet it lacks true comprehension. While AI can generate plausible sentences, it fails to grasp deeper meanings or context, which limits its ability to truly mimic human thought. Kolmogorov Complexity might help in encoding vocabulary and structure, but capturing how humans use language to convey abstract thought remains a different challenge altogether.

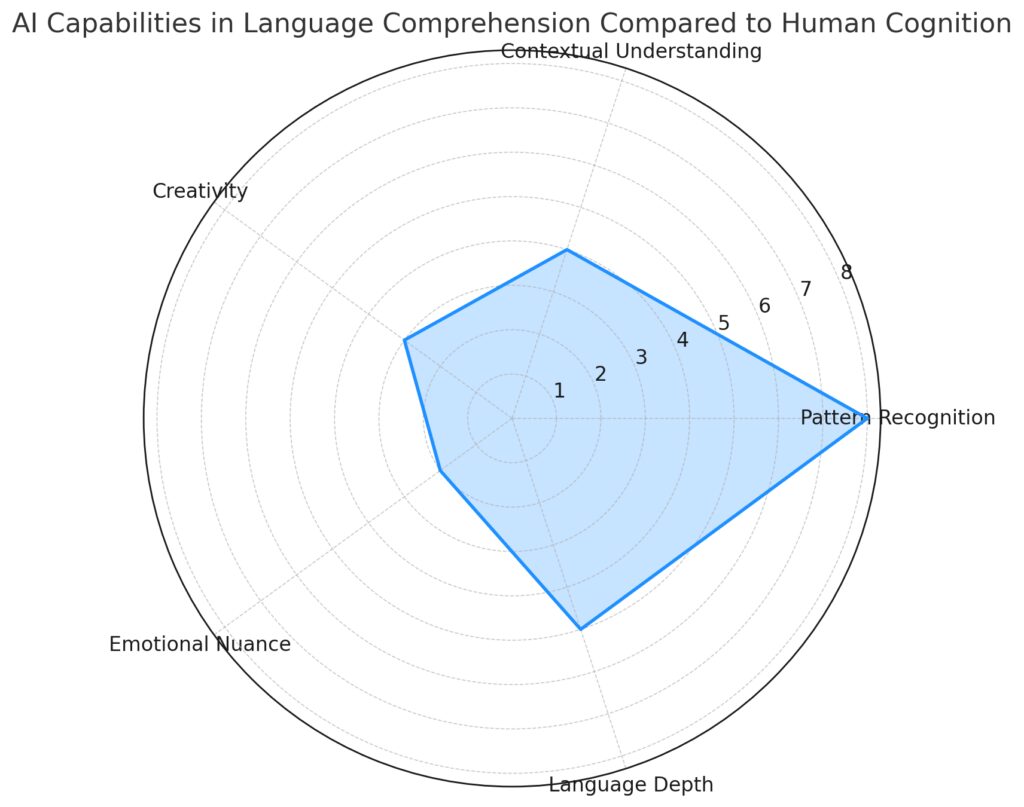

Contextual Understanding, Creativity, and Emotional Nuance: AI scores lower, highlighting current limitations in fully capturing these human language comprehension aspects.

Language Depth: Intermediate score, indicating some progress but with room for growth.

This chart visually represents the gaps in AI’s ability to model complex, nuanced human language comprehension compared to simpler pattern recognition tasks.

The Power of Storytelling and Context in Human Communication

Storytelling is another uniquely human trait that resists simple modeling. When people tell stories, they weave together memories, emotions, and imagined scenarios in ways that create resonance. A single story may contain multiple layers of meaning based on the teller’s intent, the listener’s perspective, and cultural context.

This depth of context is challenging to capture or compress into a static model. AI-generated stories might follow plot patterns, but they lack the subjective resonance that makes human storytelling meaningful, highlighting where compression of intelligence hits a wall.

Embracing the Complexity: What Compression Teaches Us About AI’s Future

AI’s Role in Augmenting, Not Replacing, Human Cognition

As much as Kolmogorov Complexity reveals about intelligence, it also emphasizes the irreplaceable aspects of human cognition that resist compression. Rather than seeking to fully replicate human intelligence, AI’s greatest potential may lie in augmenting it. Machine learning models excel at recognizing and processing patterns at scale, offering support in areas like data analysis, language translation, and predictive modeling.

By working alongside humans, AI can enhance productivity, speed up data-driven tasks, and help explore scientific inquiries. But fully replacing human intelligence is unlikely, as machines lack the context, intentionality, and emotional resonance that make human intelligence unique.

Redefining Intelligence Beyond Compression

While Kolmogorov Complexity gives us valuable insights into the patterns within intelligence, it doesn’t capture the holistic nature of human cognition. Intelligence may be partly compressible, but its full richness lies in its resistance to simplification. This invites a rethinking of AI’s goals, moving beyond simple replication toward more integrated and context-aware systems that complement human thought.

Ultimately, the journey to model intelligence illuminates as much about the boundaries of AI as it does about the complexity of the human mind. The quest to compress intelligence might inspire breakthroughs, but it also reaffirms the uniqueness of human cognition—full of nuance, emotion, and creativity that remain just beyond the reach of algorithms.

FAQs

Can Kolmogorov Complexity fully describe human cognition?

No, Kolmogorov Complexity alone cannot fully describe human cognition. While it offers insights into structured, pattern-based aspects of intelligence, human cognition includes layers like self-awareness, context, emotional understanding, and creativity that defy simple reduction. The subjective and context-rich nature of human experiences adds a complexity that resists being fully compressed or encoded in AI models.

What are the challenges of using Kolmogorov Complexity in AI?

One key challenge is that Kolmogorov Complexity cannot be directly calculated in practice for most datasets, meaning we often work with estimates rather than exact measurements. Additionally, human intelligence involves non-linear thinking, emotional reasoning, and cultural contexts that are hard to quantify. While Kolmogorov Complexity helps in compressing and recognizing patterns, it falls short when dealing with cognitive functions that lack predictable patterns.

Can artificial intelligence systems be truly creative?

Current AI systems, such as those using deep learning, are impressive at pattern recognition and can generate creative works by mimicking existing data. However, AI creativity is based on pattern-based synthesis rather than true novelty or intent. While AI can produce outputs that seem creative, it does so by processing vast amounts of existing data, not by generating genuinely new ideas or insights in the way humans do.

What are the limitations of AI in mimicking human intelligence?

AI systems excel at processing and generating patterns from large datasets, but they struggle with contextual understanding, self-awareness, and empathy. These elements of human intelligence involve complex interplays of memory, culture, and subjective experience, making them difficult to reduce to patterns. AI often lacks an internal sense of purpose or intent, which limits its ability to replicate the depth of human cognition.

How does Kolmogorov Complexity relate to data compression?

Kolmogorov Complexity is foundational to understanding data compression. Data that has a pattern or regular structure can often be compressed because a shorter program can recreate it. For instance, a repeating sequence of numbers can be represented with a simple loop, rather than storing each number individually. However, truly random data is “incompressible” because no shorter program can generate it, requiring each piece of information to be stored explicitly.
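As a small illustration of replacing stored values with a short description, here is a sketch of run-length encoding in Python. Run-length encoding is just one simple compression scheme, chosen here for clarity, and the function names are hypothetical.

```python
from itertools import groupby
from typing import List, Tuple


def rle_encode(text: str) -> List[Tuple[str, int]]:
    """Collapse each run of identical characters into a (character, count) pair."""
    return [(char, len(list(run))) for char, run in groupby(text)]


def rle_decode(pairs: List[Tuple[str, int]]) -> str:
    """Rebuild the original string from (character, count) pairs."""
    return "".join(char * count for char, count in pairs)


repetitive = "7" * 1000
encoded = rle_encode(repetitive)
print(encoded)                          # a single pair describes 1000 characters
print(rle_decode(encoded) == repetitive)

# A string with no runs gains nothing from this particular scheme.
alternating = "0101010101"
print(len(rle_encode(alternating)), "pairs for", len(alternating), "characters")
```

The alternating string still has an obvious pattern, which shows that any single compressor only detects some kinds of structure. Kolmogorov Complexity, by contrast, is defined over all possible programs.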

Could Kolmogorov Complexity help us model self-awareness?

Currently, Kolmogorov Complexity can’t model self-awareness directly, as self-awareness involves subjective experience and reflection—factors that resist algorithmic simplification. Although some structured aspects of thought could theoretically be simplified, self-awareness relies on constantly adapting, experiential processing, which is difficult to represent within the framework of Kolmogorov Complexity.

How is Kolmogorov Complexity different from algorithmic complexity?

Kolmogorov Complexity focuses on the length of the shortest program that can generate a specific dataset, while algorithmic complexity refers more generally to the computational resources (time, memory) required to execute a program. Kolmogorov Complexity is about the data’s structure and compressibility, while algorithmic complexity measures how demanding it is to compute or solve a particular problem. Both concepts intersect in AI when trying to replicate cognitive tasks: some tasks are complex because they require intricate programs, while others are complex due to high computational demands.
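The distinction can be sketched with a toy example in Python. It is only an analogy under stated assumptions: the Fibonacci task, the function names, and the call counter are all chosen for illustration. One function has a very short description but is expensive to run, and the other needs a longer description (a stored table) but answers instantly.

```python
call_count = 0  # counts how many times the recursive function is invoked


def fib_naive(n: int) -> int:
    """Short to describe, but the number of calls grows exponentially with n."""
    global call_count
    call_count += 1
    return n if n < 2 else fib_naive(n - 1) + fib_naive(n - 2)


# Longer to describe (every value is written out), but a lookup is one step.
FIB_TABLE = [0, 1, 1, 2, 3, 5, 8, 13, 21, 34, 55,
             89, 144, 233, 377, 610, 987, 1597, 2584, 4181, 6765]


def fib_table(n: int) -> int:
    """Return the n-th Fibonacci number from the precomputed table (n <= 20)."""
    return FIB_TABLE[n]


print(fib_naive(20), fib_table(20))
print("recursive calls made:", call_count)
```

Description length and running cost vary independently here, which is the sense in which the two notions of complexity differ.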

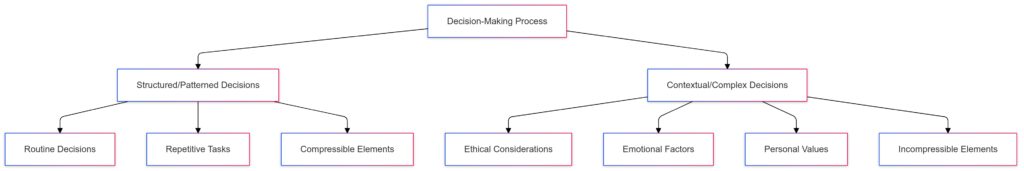

Can human decision-making be compressed or simplified?

Some aspects of decision-making can be simplified, especially those based on repetitive patterns, routines, or rules. Basic decisions, like “if-then” scenarios, can be encoded relatively easily. However, many human decisions involve emotion, ethical considerations, and personal values, which vary by context and individual experience. These elements introduce layers of complexity that are difficult to reduce or compress, suggesting that while some decision-making may be simplified, fully capturing human reasoning in a model remains challenging.

Contextual/Complex Decisions: Covers ethical considerations, emotional factors, and personal values, representing incompressible elements.

What is the role of randomness in human intelligence?

Randomness, or at least unpredictability, appears to play a role in human cognition, particularly in creativity, intuition, and problem-solving. While pattern-based processes like memory recall are somewhat predictable, creativity often relies on combining seemingly unrelated ideas, introducing an element of randomness. This randomness makes certain aspects of intelligence hard to compress since they lack predictable patterns. In Kolmogorov Complexity, random data has high complexity because it cannot be simplified, highlighting how unpredictable aspects of cognition resist compression.

Why can’t AI understand context like humans do?

Context in human intelligence includes cultural knowledge, emotional nuance, and situational awareness, all of which influence how we interpret information. AI can analyze and mimic patterns within data, but it often lacks the flexibility to adapt to new contexts that weren’t explicitly programmed. Context requires an understanding of subjective and relational dynamics—qualities that go beyond straightforward data patterns and resist being fully compressed or encoded.

How does Kolmogorov Complexity apply to machine learning?

In machine learning, ideas from Kolmogorov Complexity support a preference for simpler models that achieve high accuracy without unnecessary complexity, a form of Occam’s Razor that is formalized in approaches such as the minimum description length (MDL) principle. By favoring compact representations, machine learning algorithms can reduce overfitting (where a model is too complex and specific to its training data). While Kolmogorov Complexity is more theoretical, its principles inform approaches like model compression, helping to streamline algorithms for efficiency.

Can Kolmogorov Complexity measure creativity?

Kolmogorov Complexity is limited in capturing creativity because creativity often involves non-repetitive, novel combinations of ideas that defy simple patterns. A truly creative work may have high Kolmogorov Complexity because it doesn’t follow an easily identifiable structure or program. Although AI can produce outputs that mimic creative styles, these outputs are generally variations on existing patterns, not genuinely new ideas, limiting the ability of Kolmogorov Complexity to quantify creativity in full.

What role does Kolmogorov Complexity play in data science?

Kolmogorov Complexity underpins data compression and pattern recognition in data science, providing insights into how data can be simplified without losing essential information. In areas like data cleaning and feature selection for machine learning, Kolmogorov Complexity helps identify redundant or unnecessary data. However, calculating exact Kolmogorov Complexity for real-world data is often impractical, so data scientists rely on approximation techniques inspired by its principles.
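One well-known approximation technique of this kind is the normalized compression distance (NCD), which substitutes a real compressor for the uncomputable complexity. The sketch below is a minimal Python version that assumes `zlib` as the compressor. The sample inputs and helper names are hypothetical, and results will vary with the compressor used.

```python
import random
import zlib


def c(data: bytes) -> int:
    """Compressed size in bytes, used as a proxy for complexity."""
    return len(zlib.compress(data, 9))


def ncd(x: bytes, y: bytes) -> float:
    """Normalized compression distance: near 0 for similar inputs, near 1 for unrelated ones."""
    cx, cy, cxy = c(x), c(y), c(x + y)
    return (cxy - min(cx, cy)) / max(cx, cy)


text_a = b"the quick brown fox jumps over the lazy dog " * 20
text_b = b"the quick brown fox jumps over the lazy cat " * 20

# Deterministic pseudo-random bytes that share no structure with the texts.
rng = random.Random(0)
noise = bytes(rng.getrandbits(8) for _ in range(len(text_a)))

print("similar texts:  ", round(ncd(text_a, text_b), 3))
print("text vs. noise: ", round(ncd(text_a, noise), 3))
```

Inputs that share structure compress well together and score a lower distance, which is how the idea is used to flag redundancy or similarity without computing exact complexity.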

Could Kolmogorov Complexity explain consciousness?

While Kolmogorov Complexity helps explain structured patterns in data, consciousness involves subjective experiences and interpretations that go beyond structural patterns. Consciousness isn’t solely a pattern of neural activity; it’s a state of awareness that includes sensations, emotions, and a sense of self. These elements introduce levels of variability and subjectivity that resist compression, making Kolmogorov Complexity insufficient for fully explaining consciousness.

Why is Kolmogorov Complexity important in understanding intelligence?

Kolmogorov Complexity gives us a way to think about the compressibility of intelligence, providing insights into how much of our thought and behavior can be represented in simplified models. If intelligence processes contain compressible patterns, they may be easier to model and simulate with AI. However, areas that involve random or highly unique patterns are less compressible, indicating the limits of modeling human intelligence. Kolmogorov Complexity helps us identify these boundaries, highlighting where human intelligence remains distinct.

How does Kolmogorov Complexity relate to information theory?

Kolmogorov Complexity is closely related to information theory, particularly concepts like entropy, which measures the unpredictability or randomness of a data source. While entropy quantifies the average amount of information per symbol produced by a probabilistic source, Kolmogorov Complexity measures the minimal description length of one specific piece of data. In AI and cognition, understanding both helps to explore which cognitive processes can be encoded as structured information and which involve randomness that resists compression.
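A short Python sketch can show how the two measures differ. It assumes a simple symbol-frequency estimate of entropy and uses `zlib` compressed size as a rough proxy for description length. Both are simplifications chosen for illustration, and the function name is hypothetical.

```python
import math
import random
import zlib
from collections import Counter


def entropy_bits_per_symbol(text: str) -> float:
    """Shannon entropy estimated from symbol frequencies alone (ignores ordering)."""
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


periodic = "01" * 5000                       # perfectly regular
rng = random.Random(0)
scrambled = "".join(rng.choice("01") for _ in range(10000))  # no visible order

for name, text in [("periodic", periodic), ("scrambled", scrambled)]:
    size = len(zlib.compress(text.encode("ascii"), 9))
    print(name, round(entropy_bits_per_symbol(text), 4), "bits/symbol,",
          size, "bytes compressed")
```

Both strings contain about half zeros and half ones, so the frequency-based entropy is close to 1 bit per symbol in each case. Only the compressed size reveals that the periodic string has a far shorter description.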

Can Kolmogorov Complexity improve AI interpretability?

Kolmogorov Complexity can potentially aid in AI interpretability by helping to simplify models, making them easier to understand. If a model can be compressed to capture only the essential patterns needed for accurate predictions, it can avoid unnecessary complexity. This concept aligns with efforts to develop more interpretable, streamlined models that aren’t overly complex or obscure. However, interpretability remains challenging for complex models like neural networks, where the “compressed” understanding of a system might still lack human comprehensibility.

What are “compressible” versus “incompressible” aspects of intelligence?

Compressible aspects of intelligence include processes with clear patterns, like certain types of memory recall, basic sensory processing, and routine behaviors. These aspects can often be represented in simplified models. Incompressible aspects, on the other hand, involve unique experiences, creative insights, and spontaneous or emotional responses, where there is no simple pattern to follow. These incompressible elements resist being reduced to formulas or programs, highlighting the limits of compressing human cognition.

Is there a practical way to measure Kolmogorov Complexity?

In practice, exact Kolmogorov Complexity can’t be computed because it’s impossible in general to know if we’ve found the shortest program that represents a dataset. However, practical approximations are used in fields like data compression and machine learning. A common one is the size of the data after running a real compressor, which gives an upper bound on its complexity by exploiting whatever redundancy the compressor can detect. While such figures are not exact measures of Kolmogorov Complexity, they provide functional insights into a dataset’s compressibility.
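One way to sketch such an approximation in Python is to try several standard-library compressors and keep the smallest result. The function name is a hypothetical one for this illustration, and the figure it returns is only an upper bound (ignoring the fixed size of the decompressor), never the true complexity.

```python
import bz2
import lzma
import os
import zlib


def complexity_upper_bound(data: bytes) -> int:
    """Smallest compressed size, in bytes, found among three general-purpose compressors."""
    candidates = [
        len(zlib.compress(data, 9)),
        len(bz2.compress(data, 9)),
        len(lzma.compress(data)),
    ]
    return min(candidates)


patterned = b"ab" * 10000        # 20,000 bytes of obvious repetition
unstructured = os.urandom(1000)  # 1,000 bytes with no exploitable pattern

print("patterned:   ", len(patterned), "->", complexity_upper_bound(patterned))
print("unstructured:", len(unstructured), "->", complexity_upper_bound(unstructured))
```

A better compressor can always lower the bound, which reflects why an exact value stays out of reach: we can never be sure no shorter description exists.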

How does Kolmogorov Complexity influence natural language processing?

Kolmogorov Complexity impacts natural language processing (NLP) by guiding how patterns in language can be identified and compressed. Languages contain rules and structures that make certain phrases or sentences predictable, meaning they’re partially compressible. However, language also includes idioms, metaphors, and context-driven nuances that resist full compression. In NLP, Kolmogorov Complexity helps balance efficient processing with the need for models that can handle the unpredictability and contextual depth of human language.

Could Kolmogorov Complexity help in understanding human learning?

Kolmogorov Complexity offers a perspective on learning as the process of identifying and compressing patterns. Human learning often involves recognizing regularities and simplifying them into concepts, skills, or routines that can be applied to new situations. In this way, learning itself could be seen as a type of “compression,” where complex experiences are distilled into actionable knowledge. However, human learning also includes experiences and insights that don’t fit neat patterns, challenging the limits of this framework.

Is Kolmogorov Complexity used in neuroscience?

While Kolmogorov Complexity is not a direct tool in neuroscience, its principles have inspired approaches to analyzing brain activity patterns and compressing data from neuroimaging. In neuroscience, researchers aim to identify patterns in brain data, such as consistent neural pathways in response to stimuli, which can provide insights into how the brain processes and stores information. However, because neural activity includes many unpredictable factors, Kolmogorov Complexity has its limits in capturing the brain’s full complexity.

Can emotions be compressed or quantified with Kolmogorov Complexity?

Emotions are challenging to compress because they are subjective, context-dependent, and highly variable. While certain emotional expressions may follow predictable patterns, the underlying experience and meaning of an emotion vary widely among individuals and situations. AI models can detect certain emotional patterns, like facial expressions or tone, but these are surface-level cues that don’t fully represent the depth or complexity of emotional experiences, which resist simple pattern-based compression.

How does Kolmogorov Complexity impact ethical considerations in AI?

Kolmogorov Complexity raises ethical considerations around how much we should try to simplify and replicate human intelligence. Efforts to compress and model human cognition risk oversimplifying elements like empathy, intuition, and personal experience, which are fundamental to human decision-making. When AI is used in sensitive areas, such as mental health or justice, ethical concerns arise around whether these systems can fairly and accurately represent complex human needs and behaviors without losing essential nuances.

What does Kolmogorov Complexity reveal about the future of AI?

Kolmogorov Complexity suggests that while AI can replicate certain structured aspects of human intelligence, it will likely face challenges in fully capturing the complexity of human thought, emotion, and self-awareness. As AI advances, it may excel in tasks that involve identifiable patterns, but the more nuanced, subjective, and creative elements of intelligence might always resist complete compression. This underscores the potential for AI to work as a complement to, rather than a replacement for, human cognition in the future.