Understanding Leaky ReLU in AI

What is Leaky ReLU?

Leaky ReLU (Leaky Rectified Linear Unit) is an activation function used in neural networks. It addresses a common issue with standard ReLU known as the “dying ReLU problem.” ReLU outputs zero for every negative input, so its gradient there is zero too, and a neuron whose inputs stay negative stops learning. Leaky ReLU instead applies a small, nonzero slope to negative inputs. Gradients keep flowing, so the network retains its learning capacity even when a neuron receives negative signals.

The formula for Leaky ReLU is straightforward:

- [math]f(x)=x[/math] for [math]x>0[/math]

- [math]f(x)=αx[/math] for [math]x≤0[/math], where [math]α[/math] is a small constant such as 0.01.
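The two cases translate directly into code. Below is a minimal plain-Python sketch; the function names are illustrative. The function has a kink at x = 0, so its derivative there is not defined, and this sketch uses α at that point by convention.

```python
def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """Leaky ReLU: identity for x > 0, slope alpha for x <= 0."""
    return x if x > 0 else alpha * x


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    """Derivative with respect to x; at x == 0 this sketch uses alpha by convention."""
    return 1.0 if x > 0 else alpha


inputs = [-3.0, -0.5, 0.0, 0.5, 3.0]
print([leaky_relu(v) for v in inputs])
print([leaky_relu_grad(v) for v in inputs])
```

The derivative is what keeps training alive: it is 1 for positive inputs and α, rather than 0, for negative ones.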

Why Edge Devices Need Efficient Activations

Edge devices are constrained by power, memory, and computational resources. Many AI models demand more computation than these devices can supply. Leaky ReLU is computationally simple, needing only a comparison and at most one multiplication per value, which helps reduce latency and energy consumption while preserving the network’s ability to learn.

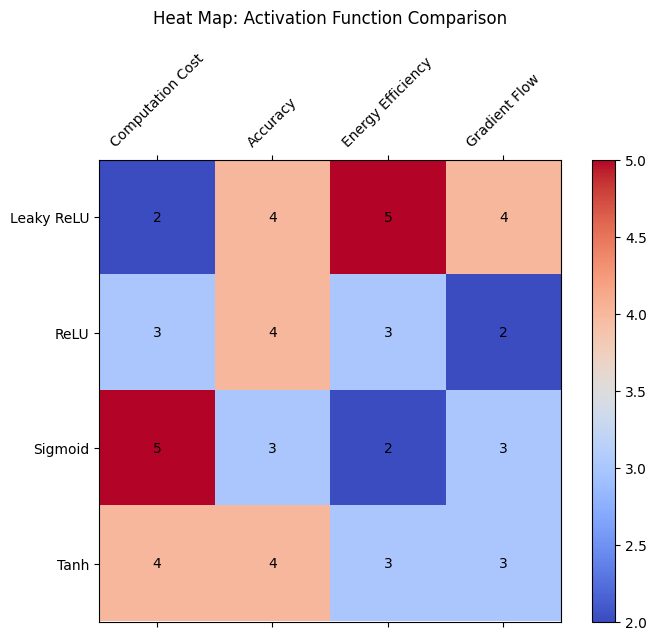

Comparison with Other Activation Functions

Figure legend: activation functions and metrics are labeled, with high values shown in red and low values in blue.

Leaky ReLU has distinct advantages over alternatives:

- ReLU: Can suffer from permanently inactive (“dead”) neurons; Leaky ReLU avoids this.

- Sigmoid and Tanh: Require exponentials, which cost more to compute, and their gradients saturate (approach zero) for large positive or negative inputs. This makes them less suited to edge systems.

This efficiency makes Leaky ReLU a preferred choice for low-power AI systems.

The graph visualizes the Leaky ReLU’s characteristic behavior, making it easy to interpret the role of the activation function in neural networks.
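To make the gradient-saturation point concrete, the sketch below evaluates each function’s derivative at a few inputs. It uses plain Python floats and α = 0.01 as an illustrative value.

```python
import math


def sigmoid_grad(x: float) -> float:
    """Derivative of the logistic sigmoid: s * (1 - s)."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0


def leaky_relu_grad(x: float, alpha: float = 0.01) -> float:
    return 1.0 if x > 0 else alpha


for x in (-10.0, -1.0, 1.0, 10.0):
    print(f"x={x:+6.1f}  sigmoid'={sigmoid_grad(x):.2e}  tanh'={tanh_grad(x):.2e}  "
          f"relu'={relu_grad(x):.2f}  leaky'={leaky_relu_grad(x):.2f}")
```

At x = 10, the sigmoid derivative is roughly 4.5e-5 and the tanh derivative is below 1e-8, so almost no gradient flows back. Leaky ReLU keeps a derivative of 1 for positive inputs and α for negative ones, whereas ReLU drops to 0 on the negative side.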

The Role of Leaky ReLU in Lightweight Neural Networks

Supporting Quantized Models

Quantized models represent weights and activations with fewer bits (e.g., 8-bit integers instead of 32-bit floating-point values) to save memory and power. Leaky ReLU’s piecewise-linear arithmetic maps well to such models. It avoids the extra complexity that nonlinear functions such as sigmoid and tanh bring to integer arithmetic.
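To show what simple arithmetic means for an 8-bit model, here is a minimal integer-only sketch. It rests on several illustrative assumptions: symmetric int8 quantization with a zero-point of 0, the same scale for input and output, and α = 0.01 approximated by the fixed-point ratio 41/4096 (about 0.01001). It is not the kernel of any particular runtime.

```python
# Illustrative assumptions (not a specific runtime's kernel):
# - symmetric int8 quantization, zero-point 0
# - input and output share one scale, so positive values pass through unchanged
# - alpha = 0.01 approximated by ALPHA_MULT / 2**ALPHA_SHIFT = 41 / 4096
ALPHA_MULT = 41
ALPHA_SHIFT = 12


def leaky_relu_int8(q: int) -> int:
    """Integer-only Leaky ReLU on an int8 value in [-128, 127]."""
    if q > 0:
        return q
    # Multiply by the fixed-point alpha, add half of 2**ALPHA_SHIFT, then shift right.
    # Python's >> floors on negative ints, so this rounds to nearest (half up).
    return (q * ALPHA_MULT + (1 << (ALPHA_SHIFT - 1))) >> ALPHA_SHIFT


print([leaky_relu_int8(q) for q in (-128, -100, -49, 0, 57, 127)])
# → [-1, -1, 0, 0, 57, 127]
```

With a shared scale, every negative int8 input maps to 0 or -1, so the leak is coarse at 8 bits. In practice the output scale may differ from the input scale, which changes the fixed-point multiplier. This sketch keeps the two scales equal for simplicity.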

Enhancing Robustness in Edge AI

Because Leaky ReLU keeps a nonzero gradient for negative inputs, fewer neurons become permanently inactive during training. This can help models learn from diverse input distributions, including the noisy conditions edge devices often operate in. That matters for applications like voice recognition or autonomous navigation.

Optimizing Performance on Resource-Constrained Hardware

The computational efficiency of Leaky ReLU fits well with microcontrollers and single-board computers like Raspberry Pi. It minimizes the load on processors, extending battery life without compromising accuracy.

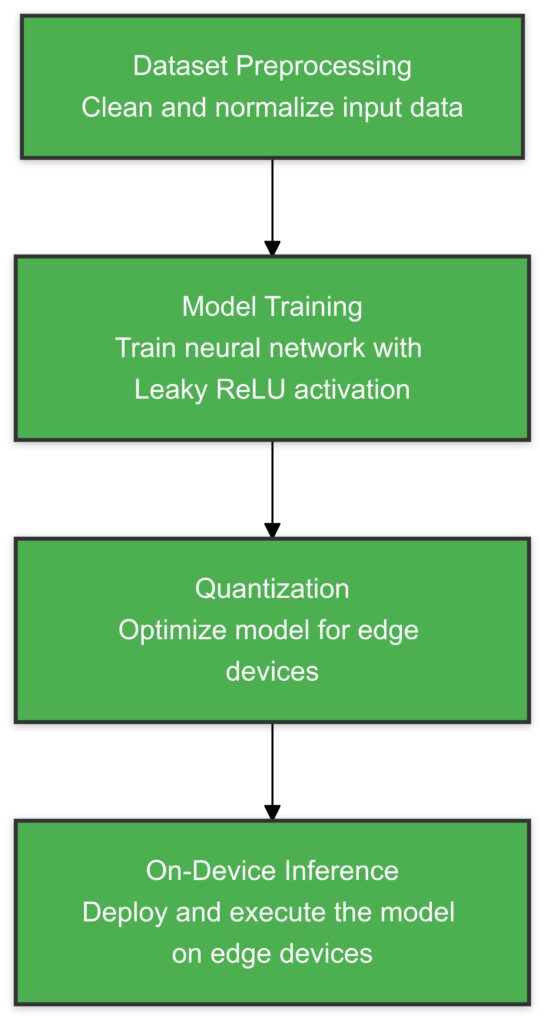

The process of deploying a lightweight Leaky ReLU-based neural network for edge devices, from training to on-device inference.

Power Efficiency in Low-Power AI Systems

Reduced Processing Overhead

Unlike sigmoid and tanh, which require exponentials, Leaky ReLU needs only a comparison and, for negative inputs, one multiplication. Fewer operations per activation mean lower energy consumption, making it suitable for devices with limited energy sources, such as battery-powered IoT sensors.
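The operation count stays low for another reason as well. For any slope 0 < α < 1, Leaky ReLU equals max(x, αx), which is one multiplication and one maximum. Vector units commonly provide an element-wise maximum instruction, so this form avoids a per-element branch. The sketch below checks the identity against the piecewise definition in plain Python; the function names are illustrative.

```python
def leaky_relu_piecewise(x: float, alpha: float = 0.01) -> float:
    """Definition: x for x > 0, alpha * x otherwise."""
    return x if x > 0 else alpha * x


def leaky_relu_max(x: float, alpha: float = 0.01) -> float:
    """Equivalent form, valid only for 0 < alpha < 1: one multiply and one max.

    If x > 0, then x > alpha * x, so max picks x.
    If x <= 0, then alpha * x >= x, so max picks alpha * x.
    """
    return max(x, alpha * x)


samples = [i / 10 for i in range(-50, 51)]
print(all(leaky_relu_piecewise(x) == leaky_relu_max(x) for x in samples))  # True
```

The restriction on α matters: with α > 1 the max form would select the wrong branch.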

Compatibility with Hardware Accelerators

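Accelerators and edge computing modules, such as Google’s Coral Edge TPU or the NVIDIA Jetson Nano, are designed to run optimized neural networks. Where their toolchains support Leaky ReLU, its lightweight computation adds little overhead on top of the heavier layers. Operator support varies by platform, so it is worth checking each toolchain’s supported-operations list.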

Adaptive AI Models for Prolonged Operation

Because Leaky ReLU is cheap to compute, more of an edge system’s limited budget is left for adaptive learning, which can improve performance over time without excessive retraining or energy spikes.

Chart: energy consumption of Leaky ReLU, ReLU, Sigmoid, and Tanh, highlighting Leaky ReLU’s efficiency for energy-constrained environments such as edge computing.

Key Applications of Leaky ReLU in Edge AI

Smart Home Devices

Devices like thermostats, cameras, and voice assistants rely on real-time processing. Leaky ReLU supports fast inference with minimal energy draw, helping these devices respond reliably.

Wearable Technology

Wearables need lightweight AI for health tracking, such as heart rate or motion detection. Leaky ReLU helps conserve battery life while delivering accurate results.

Autonomous Vehicles

From drones to self-driving cars, edge AI must process sensor data rapidly. Leaky ReLU’s efficiency plays a crucial role in maintaining real-time decision-making on constrained systems.

Relative scores by application (accuracy, efficiency):

- Smart cameras: 0.8, 0.9 (strong in both accuracy and efficiency).

- Wearables: 0.6, 0.8 (high efficiency but moderate accuracy).

- Industrial IoT: 0.7, 0.7 (balanced accuracy and efficiency).

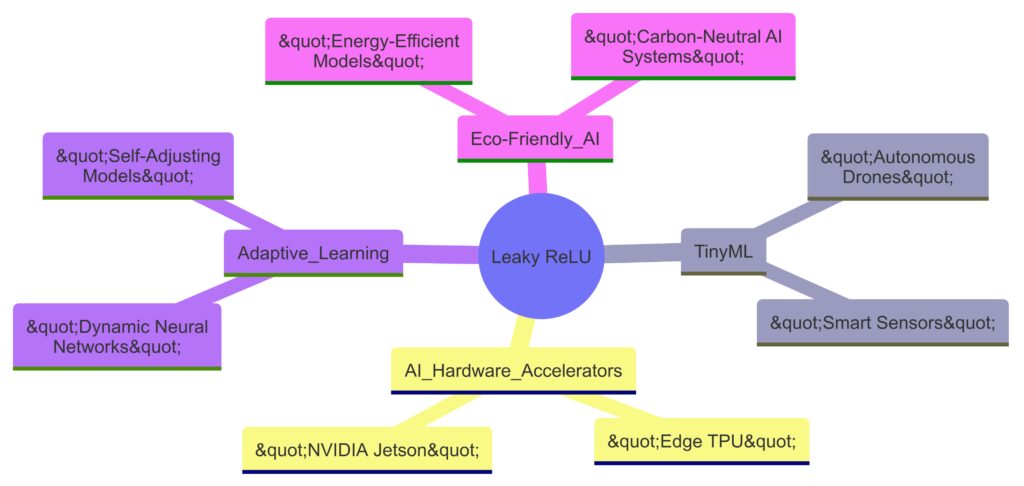

Adapting AI Models with Leaky ReLU for the Future

Focus: Minimal resource usage for edge devices.

- AI Hardware Accelerators (examples: Edge TPU, NVIDIA Jetson). Role: efficiently deploying Leaky ReLU for edge AI.

- Adaptive Learning (concepts: dynamic neural networks, self-adjusting models). Benefit: improved adaptability in dynamic environments.

- Eco-Friendly AI (applications: energy-efficient models, carbon-neutral systems). Impact: reducing the environmental footprint of AI systems.

Balancing Accuracy and Efficiency

AI developers constantly seek a balance between model accuracy and power consumption. Leaky ReLU helps narrow this gap: it costs about as much as ReLU while avoiding dead neurons, so efficiency does not have to come at a significant cost in accuracy.

Custom Architectures for Edge Computing

Custom neural networks tailored for edge devices often rely on simple, widely supported activation functions like Leaky ReLU. TensorFlow Lite, PyTorch, and ONNX all provide it as a standard operator, so such models are easier to port across a range of hardware.

Deployment Strategies for Leaky ReLU in Edge Devices

Integrating Leaky ReLU in Pre-trained Models

Leaky ReLU is easy to integrate into pre-trained models, especially for transfer learning tasks on edge devices. Developers can replace costlier activations such as sigmoid or tanh with Leaky ReLU, or swap ReLU for Leaky ReLU to reduce dead neurons, and then fine-tune to adapt an existing model to a constrained environment. Because the pre-trained weights are reused, this does not require training from scratch.

For example:

- Replace ReLU with Leaky ReLU in the convolutional layers of a CNN.

- Fine-tune the model on edge-relevant datasets.
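In PyTorch, the swap can be done by walking the module tree and replacing each `nn.ReLU` with `nn.LeakyReLU`. The sketch below assumes a PyTorch model. `swap_relu_for_leaky` is a hypothetical helper, and the small `nn.Sequential` network stands in for a real pre-trained model. Convolution and linear weights are left untouched, which is why fine-tuning, rather than retraining, is the next step.

```python
import torch
import torch.nn as nn


def swap_relu_for_leaky(module: nn.Module, negative_slope: float = 0.01) -> nn.Module:
    """Recursively replace every nn.ReLU submodule with nn.LeakyReLU (in place)."""
    for name, child in module.named_children():
        if isinstance(child, nn.ReLU):
            setattr(module, name, nn.LeakyReLU(negative_slope=negative_slope))
        else:
            swap_relu_for_leaky(child, negative_slope)
    return module


# Stand-in for a pre-trained CNN; in practice you would load your own weights.
model = nn.Sequential(
    nn.Conv2d(3, 8, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 10),
)
swap_relu_for_leaky(model)
print(model)  # the ReLU entries now print as LeakyReLU(negative_slope=0.01)
```

Activations called functionally inside a model’s `forward` method (for example `torch.nn.functional.relu`) are not submodules, so this approach will not find them; those need a code change.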

Utilizing Frameworks Optimized for Edge AI

Popular tools such as TensorFlow Lite, PyTorch Mobile, and the ONNX format (with runtimes such as ONNX Runtime) simplify deploying Leaky ReLU-enabled models on edge hardware. These tools support optimizations like quantization and pruning while preserving the benefits of Leaky ReLU.

Key considerations:

- Quantization: Reduces model size and lets models run on edge processors that favor integer arithmetic.

- Hardware-specific optimizations: Ensures smooth integration with AI accelerators.

Leveraging On-Device Inference

On-device inference reduces latency by processing data locally rather than sending it to cloud servers. Leaky ReLU supports this by keeping the activation step cheap, so models stay computationally efficient and responsive.

This is especially useful for applications like:

- Real-time image classification in security cameras.

- Audio event detection in smart speakers.

Advancements in AI Chip Design for Activation Efficiency

The Role of Specialized AI Hardware

Modern AI hardware, such as Apple’s Neural Engine, and energy-efficient processors such as Arm Cortex cores are designed with power consumption in mind. They can execute simple element-wise functions like Leaky ReLU at low power without sacrificing throughput.

FPGAs and Custom ASICs

Field-Programmable Gate Arrays (FPGAs) and custom application-specific integrated circuits (ASICs) let developers implement efficient functions like Leaky ReLU directly in hardware logic. This removes the need to run those operations in software, further boosting performance.
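One hardware-friendly option picks α as a power of two. This is shown here as an assumption, not a description of any specific chip. With α = 2^-7 = 0.0078125, close to the usual 0.01, the negative branch becomes an arithmetic right shift on a fixed-point integer, so no multiplier is needed. The sketch models that logic in Python; `SHIFT` is a hypothetical design parameter.

```python
SHIFT = 7  # hypothetical design parameter: alpha = 2**-7 = 0.0078125


def leaky_relu_shift(q: int) -> int:
    """Leaky ReLU on a signed fixed-point integer with alpha = 2**-SHIFT.

    The negative branch is an arithmetic right shift. In hardware, a shift by a
    constant amount is only rewiring plus sign extension, so no multiplier is used.
    """
    return q if q > 0 else q >> SHIFT


print([leaky_relu_shift(q) for q in (-1280, -1, 0, 1000)])  # [-10, -1, 0, 1000]
```

Because the shift floors toward negative infinity, small negative inputs such as -1 map to -1 rather than 0. Adding a rounding offset before the shift reduces that bias if it matters.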

Dynamic Scaling in AI Chips

Advanced chips now feature dynamic scaling, where power usage adjusts to workload demands. Leaky ReLU’s per-value cost is small and predictable, so it adds little load at any power setting and behaves the same across varying conditions.

Exploring Real-World Use Cases

Industrial IoT (IIoT)

In factories, edge devices monitor machinery, track production, and detect anomalies. Leaky ReLU enhances these applications by improving model robustness and reducing energy costs for sensors and gateways.

Precision Agriculture

Leaky ReLU-driven models power drones and sensors for tasks like monitoring crop health and predicting weather patterns. These systems benefit from low-latency processing, ensuring timely decisions in the field.

Healthcare Applications

In portable medical devices, Leaky ReLU supports accurate and real-time diagnostics. Whether it’s an AI-powered glucose monitor or a mobile ECG reader, the function ensures efficiency without draining power.

Addressing Challenges in Edge Deployment

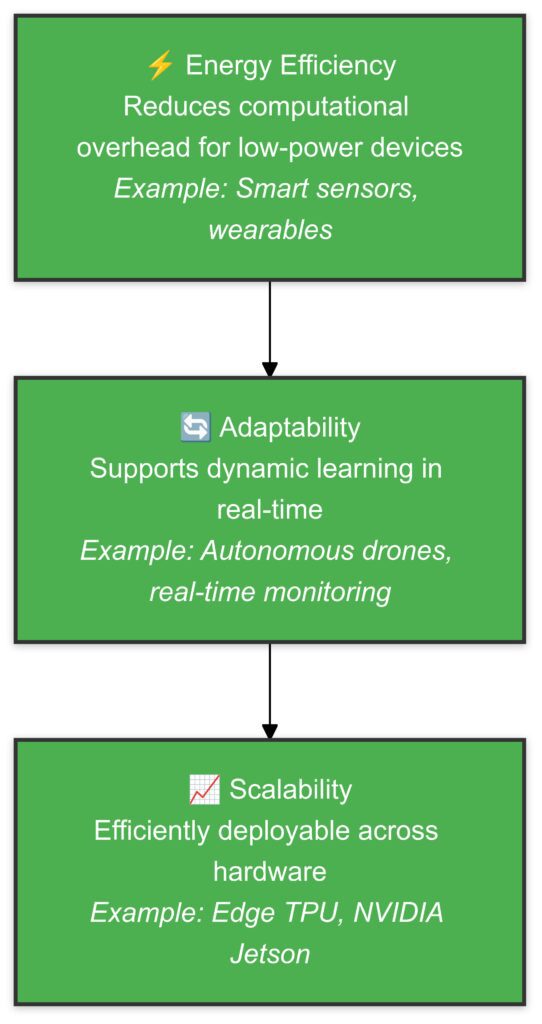

Energy Efficiency:

- ⚡ Reduces computational overhead for low-power devices.

- Examples: Smart sensors, wearables.

Adaptability:

- 🔄 Enables dynamic learning in real-time environments.

- Examples: Autonomous drones, real-time monitoring.

Scalability:

- 📈 Scalable across various hardware platforms.

- Examples: Edge TPU, NVIDIA Jetson.

Balancing Complexity with Simplicity

One challenge of using neural networks on edge devices is finding the right balance between model complexity and device limitations. Leaky ReLU’s simplicity helps overcome this by allowing lightweight architectures to compete with more complex ones.

Mitigating Energy Constraints

For battery-powered devices, efficient activations like Leaky ReLU can significantly extend operational life. However, optimizing other aspects of the model—like memory allocation and data transfer—is also crucial.

Ensuring Scalability Across Devices

Leaky ReLU-based models can scale across a variety of edge devices, from IoT modules to smartphones. This scalability ensures compatibility across diverse hardware without requiring separate model designs.

Tools for Optimizing Leaky ReLU in AI Workflows

Profiling and Benchmarking Tools

Tools like TensorBoard, NVIDIA Nsight, and Edge Impulse allow developers to monitor performance metrics, including the impact of Leaky ReLU on power and accuracy.

Compression Techniques

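Model compression methods, such as pruning and weight sharing, work well with Leaky ReLU. Pruning removes low-importance weights, and weight sharing lets groups of weights reuse a single value. Both shrink the model while the activation function itself stays unchanged and as cheap as before.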
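No particular pruning criterion is implied here; one widely used choice is magnitude pruning, which zeroes the weights with the smallest absolute values. The plain-Python sketch below assumes unstructured pruning of a flat weight list, and `magnitude_prune` is a hypothetical helper. It touches only weights, so any Leaky ReLU layers run exactly as before.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction `sparsity` of weights (unstructured)."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    k = int(len(weights) * sparsity)  # number of weights to remove
    if k == 0:
        return list(weights)
    # Indices sorted by |w|; the first k are pruned (ties broken by position).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:k])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


w = [0.8, -0.05, 0.3, -0.9, 0.01, 0.12, -0.4, 0.02]
print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.3, -0.9, 0.0, 0.0, -0.4, 0.0]
```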

Future Trends in Low-Power AI and Leaky ReLU Integration

The Evolution of Edge AI Architectures

As edge computing continues to grow, neural networks are being tailored for specific use cases and constraints. Leaky ReLU is a common building block in this evolution, enabling custom lightweight architectures that perform efficiently without inflating computational demands.

Emerging trends include:

- TinyML Frameworks: These focus on deploying ultra-small neural networks optimized with functions like Leaky ReLU.

- Hybrid AI Models: Combining local processing with periodic cloud updates, leveraging Leaky ReLU for the on-device component.

AI-powered Sensors and Microcontrollers

Microcontrollers with embedded AI capabilities, such as the ESP32 and STM32 series, are now common in IoT devices. Leaky ReLU suits these platforms well due to its:

- Minimal processing overhead.

- Ability to handle sensor input with high variability (e.g., temperature, pressure).

Together, these traits allow even the smallest devices to run AI models effectively.

Adaptive AI and Continual Learning

Edge devices are increasingly adopting continual learning techniques to adapt to new data without full retraining. Leaky ReLU can help by:

- Handling incremental updates efficiently.

- Supporting dynamic learning environments, such as adapting to new voice patterns in smart assistants.

Cross-Hardware Compatibility

AI models with Leaky ReLU are becoming more portable across diverse hardware ecosystems. Tools like ONNX make it easier to deploy these models across:

- CPUs (e.g., Intel and AMD).

- GPUs (e.g., NVIDIA and Arm Mali).

- TPUs and AI accelerators.

This flexibility reduces development time and ensures broader application support.

Innovations in Hardware Support

Low-Power Neural Processing Units (NPUs)

NPUs are becoming a common feature in edge hardware, designed to accelerate specific AI operations. Leaky ReLU benefits from these optimizations by running faster and more efficiently on NPUs than more complex activations.

Enhanced Energy Efficiency with Neuromorphic Chips

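Neuromorphic computing mimics the human brain, offering extreme energy efficiency. These chips run spiking neural networks, whose neurons (often leaky integrate-and-fire models) are different from Leaky ReLU despite the shared word “leaky.” Networks built with ReLU-family activations can be converted to spiking form to run on such hardware, although Leaky ReLU’s negative outputs need extra handling during conversion.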

Examples:

- IBM’s TrueNorth.

- Intel’s Loihi chip.

Thermal Management for AI Systems

Managing heat is crucial in compact devices. Leaky ReLU reduces the processing load, helping edge devices maintain lower operating temperatures, which extends their lifespan and reliability.

Broader Implications for AI Development

Democratization of AI on Edge Devices

With the rise of Leaky ReLU-enabled lightweight models, even non-specialists can implement edge AI solutions. This shift will empower industries like agriculture, healthcare, and education in remote areas with low-cost AI tools.

Bridging the Urban-Rural Divide

By leveraging efficient AI models powered by Leaky ReLU, organizations can deploy affordable solutions for underserved regions. From mobile health units to smart irrigation systems, the possibilities are vast.

Sustainability in AI Design

Leaky ReLU contributes to the larger trend of creating eco-friendly AI systems. By reducing computational waste and energy demands, it supports the movement toward green technology.

Emerging Research and Advancements

Hybrid Activation Functions

Researchers are experimenting with combinations of activation functions to optimize specific tasks. Leaky ReLU often forms the foundation for these hybrids due to its balance of simplicity and effectiveness.

AutoML for Edge AI

AutoML platforms are automating the design of neural networks for edge devices. These tools can include Leaky ReLU among the activation functions they consider, given its efficiency.

Real-Time Edge AI Monitoring

New tools for real-time monitoring of edge AI systems are being developed, ensuring that models like those using Leaky ReLU maintain optimal performance under varying conditions.

Wrapping Up

Leaky ReLU stands out as a critical enabler for efficient, low-power AI systems on edge devices. Its simplicity and versatility make it a cornerstone of modern edge AI architectures, with exciting advancements continually expanding its role in creating smarter, greener, and more accessible technology solutions.

FAQs

How does Leaky ReLU solve the “dying ReLU” problem?

Leaky ReLU addresses the dying ReLU problem by introducing a small slope for negative inputs instead of outright zeroing them. This means neurons remain active and contribute to learning, even when they receive negative values.

Example: In a speech recognition model running on a smart assistant, standard ReLU can leave some neurons permanently inactive, reducing the model’s ability to pick up subtle sound variations. Leaky ReLU keeps those neurons trainable, so they can still learn features for low-amplitude inputs such as soft speech.
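The effect is easy to reproduce with a single neuron. In the toy setup below, which is hypothetical and chosen only for illustration, the neuron learns t = 2x from four points. It starts with a bias so negative that every pre-activation is below zero. The sketch uses plain Python and full-batch gradient descent on mean squared error.

```python
def train_neuron(act, act_grad, steps=2000, lr=0.1):
    """Fit y = act(w*x + b) to t = 2x by full-batch gradient descent on MSE."""
    xs = [0.5, 1.0, 1.5, 2.0]
    ts = [2.0 * x for x in xs]
    w, b = 0.5, -5.0  # bad initialization: w*x + b < 0 for every training input
    for _ in range(steps):
        gw = gb = 0.0
        for x, t in zip(xs, ts):
            z = w * x + b
            dz = 2.0 * (act(z) - t) * act_grad(z)  # dLoss/dz for this sample
            gw += dz * x
            gb += dz
        w -= lr * gw / len(xs)
        b -= lr * gb / len(xs)
    loss = sum((act(w * x + b) - t) ** 2 for x, t in zip(xs, ts)) / len(xs)
    return w, b, loss


relu = (lambda z: max(z, 0.0), lambda z: 1.0 if z > 0 else 0.0)
leaky = (lambda z: z if z > 0 else 0.01 * z, lambda z: 1.0 if z > 0 else 0.01)

print("ReLU:      w=%.3f b=%.3f loss=%.4f" % train_neuron(*relu))
print("LeakyReLU: w=%.3f b=%.3f loss=%.4f" % train_neuron(*leaky))
```

With ReLU, every gradient is exactly zero, so w and b never move and the loss stays at its starting value of 7.5. With Leaky ReLU, the small slope α = 0.01 slowly pushes the bias upward until the pre-activations turn positive, after which the neuron can fit the data.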

Why is Leaky ReLU ideal for edge devices?

Leaky ReLU’s simplicity in computation makes it power-efficient, which is essential for edge devices with limited resources. It avoids heavy operations like exponentiation or division, common in other activations like Sigmoid or Tanh.

Example: A wearable fitness tracker using Leaky ReLU can perform activity classification while consuming less battery, enabling the device to last longer between charges.

Can Leaky ReLU work with quantized models?

Yes, Leaky ReLU is compatible with quantized models. Its piecewise-linear form maps readily to lower-precision formats like 8-bit integers, which are common in edge AI to save memory and power. The slope α can be represented as a fixed-point multiplier.

Example: In an IoT-based air quality monitor, using a quantized model with Leaky ReLU ensures accurate predictions while keeping the device small and energy-efficient.

What types of edge applications benefit most from Leaky ReLU?

Leaky ReLU is versatile and can enhance a wide range of real-time and low-power applications, such as:

- Smart cameras for security or wildlife monitoring.

- Portable medical devices like ECG or glucose monitors.

- Autonomous robots navigating dynamic environments.

Example: A home security camera using Leaky ReLU can detect movement quickly without draining its battery, making it perfect for off-grid locations.

How does Leaky ReLU compare to other activation functions?

Leaky ReLU balances simplicity and performance, unlike Sigmoid or Tanh, which can lead to gradient saturation. It also avoids the neuron inactivation issues found in standard ReLU.

Example: In a drone’s obstacle detection model, Leaky ReLU allows for faster and more robust processing of varying input intensities, reducing the likelihood of crashes or missteps.

What is the role of Leaky ReLU in model compression?

Leaky ReLU works well with pruned or sparse neural networks, which reduce the number of parameters to save memory and computation. Its efficiency ensures minimal performance degradation even in compressed models.

Example: A smart thermostat using a compressed neural network with Leaky ReLU can learn temperature patterns efficiently while operating on a low-power chip.

Is Leaky ReLU hardware-friendly?

Absolutely. Leaky ReLU integrates seamlessly with AI accelerators, NPUs, and even general-purpose processors. Its simplicity minimizes hardware stress, making it ideal for thermal and energy management.

Example: A microcontroller in a soil sensor uses Leaky ReLU to predict irrigation needs without overheating or requiring active cooling.

How does Leaky ReLU support adaptive learning in edge devices?

Leaky ReLU facilitates continual learning by maintaining learning capacity over time, even for negative input values. This adaptability is crucial in environments where data evolves dynamically.

Example: A smart assistant whose model uses Leaky ReLU can adapt to new user accents or speech patterns without requiring complete retraining.

Is Leaky ReLU suitable for hybrid AI systems?

Yes, Leaky ReLU fits well in hybrid AI architectures, where part of the model runs on the edge, and another part is cloud-based. Its efficiency on the edge component ensures quick local responses, while the cloud handles complex tasks.

Example: A smart appliance like a refrigerator could use Leaky ReLU for real-time tasks like temperature control while offloading long-term data analytics to the cloud.

Can Leaky ReLU help with edge AI scalability?

Leaky ReLU supports scalable edge AI solutions by enabling models to perform well across diverse devices, from small IoT sensors to more powerful AI accelerators.

Example: A fleet of delivery drones with varying hardware configurations can all run the same Leaky ReLU-powered model, ensuring consistent performance across devices.

How does Leaky ReLU contribute to energy efficiency?

Leaky ReLU minimizes computation by requiring only a comparison and, for negative inputs, a single multiplication. This simplicity reduces the energy consumed per activation, helping extend the battery life of devices.

Example: A wearable sleep tracker using Leaky ReLU can monitor sleep patterns overnight without significant battery drain, making it more practical for continuous use.

Can Leaky ReLU handle noisy input data in edge environments?

Yes, Leaky ReLU is effective in handling noisy or variable input data, which is common in edge environments. Its nonzero slope for negative values allows the model to learn subtle patterns even in less-than-perfect datasets.

Example: An industrial IoT sensor analyzing vibrations from heavy machinery can still detect anomalies despite background noise, thanks to Leaky ReLU’s robustness.

Is Leaky ReLU suitable for time-sensitive applications?

Leaky ReLU is ideal for real-time applications due to its computational efficiency. It processes inputs faster than more complex activation functions, ensuring timely responses in critical scenarios.

Example: A traffic monitoring system at a smart intersection uses Leaky ReLU to classify vehicle types in real time, helping prevent bottlenecks.

How does Leaky ReLU enhance the performance of edge accelerators?

Edge accelerators, such as Google’s Coral Edge TPU or NVIDIA Jetson Nano, are optimized for lightweight and efficient operations. Leaky ReLU adds little computational cost on such hardware, leaving more capacity for the heavier layers and helping minimize delays.

Example: A drone equipped with an AI accelerator can use Leaky ReLU to enhance object detection capabilities while maintaining long flight durations.

What role does Leaky ReLU play in TinyML?

TinyML focuses on deploying machine learning on extremely small devices. Leaky ReLU’s low computational demand and effectiveness in shallow networks make it a natural choice for TinyML applications.

Example: A battery-operated wildlife tracker with TinyML uses Leaky ReLU to classify animal sounds, providing accurate insights without frequent battery replacements.

Can Leaky ReLU handle extreme edge conditions, such as low-power modes?

Leaky ReLU is highly reliable under low-power or energy-saving modes, where every computation must be efficient. Its simplicity ensures that it continues to perform effectively without draining resources.

Example: A solar-powered water quality sensor operating in remote areas uses Leaky ReLU to analyze data efficiently, even during cloudy days with minimal power input.

How does Leaky ReLU contribute to edge device longevity?

By reducing computational strain and managing energy consumption, Leaky ReLU helps extend the overall lifespan of edge devices. Less heat generation and power usage mean reduced wear and tear on hardware.

Example: A long-term environmental monitoring station benefits from Leaky ReLU-powered AI, enabling it to function for years without hardware upgrades or replacements.

Can Leaky ReLU be combined with other activation functions?

Yes, Leaky ReLU can be combined with other activation functions in hybrid models to optimize performance for specific tasks. This allows developers to leverage its efficiency while benefiting from other functions’ specialized strengths.

Example: In a medical imaging device, Leaky ReLU might be used in early layers for feature extraction, while Swish or GELU could refine results in later layers for increased precision.
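A minimal PyTorch sketch of that layout assumes a single-channel 28×28 input and two output classes (both hypothetical). It uses `nn.LeakyReLU` after the early convolution layers and `nn.GELU` in the later fully connected part. PyTorch’s Swish is available as `nn.SiLU` if preferred.

```python
import torch
import torch.nn as nn

# Hypothetical sizes: Leaky ReLU for early feature extraction, GELU in the later head.
model = nn.Sequential(
    nn.Conv2d(1, 8, kernel_size=3, padding=1),
    nn.LeakyReLU(negative_slope=0.01),
    nn.Conv2d(8, 16, kernel_size=3, padding=1),
    nn.LeakyReLU(negative_slope=0.01),
    nn.AdaptiveAvgPool2d(1),
    nn.Flatten(),
    nn.Linear(16, 32),
    nn.GELU(),
    nn.Linear(32, 2),
)
logits = model(torch.randn(4, 1, 28, 28))  # batch of 4 random "images"
print(logits.shape)  # torch.Size([4, 2])
```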

How does Leaky ReLU support multimodal AI models?

Leaky ReLU integrates well into multimodal AI models, where data from different sensors or sources is processed simultaneously. Its flexibility ensures smooth operation across diverse input types.

Example: A smart car analyzing visual data from cameras and distance data from LiDAR sensors can use Leaky ReLU to merge and interpret inputs in real time.

What is the future potential of Leaky ReLU in edge AI?

Leaky ReLU’s simplicity and adaptability position it as a cornerstone for future low-power, high-efficiency AI systems. As edge AI evolves, the function will likely play a critical role in enabling smarter, more responsive devices.

Example: Emerging technologies like AI-powered hearing aids may use Leaky ReLU for real-time noise suppression and speech enhancement, revolutionizing personal assistive devices.
