Enter lightweight CNNs: compact convolutional neural networks built to run on edge devices such as smartphones, IoT sensors, and even drones.

These efficient models are pushing AI to the edge, allowing complex tasks like image recognition, object detection, and more to run locally without relying on powerful cloud infrastructure.

In this article, we’ll explore how lightweight CNNs are transforming AI, why they’re essential for edge computing, and what the future holds for these compact yet powerful models.

The Need for Lightweight CNNs on Edge Devices

Why Edge Devices Require Smaller Models

Edge devices are often constrained by limited processing power, memory, and battery life. Unlike data centers or cloud-based platforms, these devices often cannot run the resource-heavy computations of traditional Convolutional Neural Networks (CNNs) within their latency, memory, and power budgets.

The solution? Lightweight CNNs—smaller, more efficient models designed to perform complex tasks using significantly less power and memory.

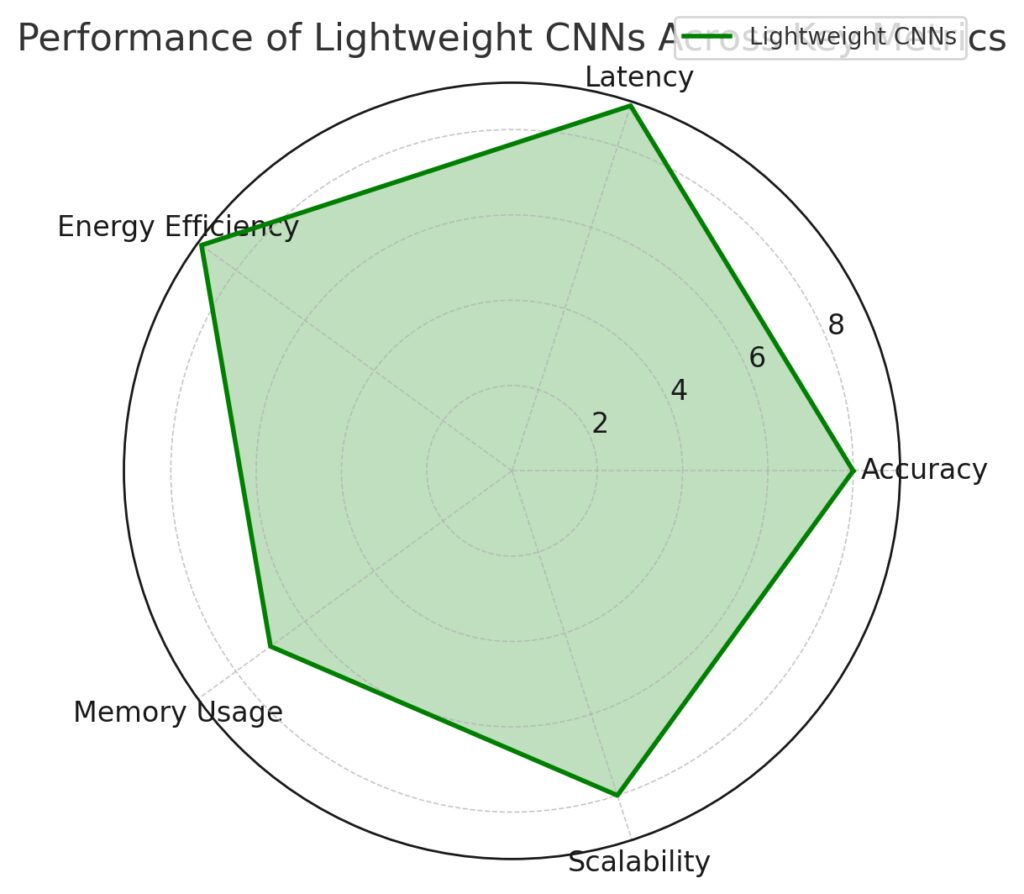

Lightweight CNNs bring the following benefits to edge devices:

- Reduced latency: Processing data locally means faster decision-making without the need to send data to the cloud.

- Enhanced privacy: Keeping data on the device improves privacy, especially for sensitive information like medical data or personal photos.

- Lower energy consumption: Efficient models minimize battery usage, making them ideal for mobile devices and IoT systems.

The Challenges of Traditional CNNs on Edge Devices

Traditional CNNs, though powerful, come with high computational costs due to:

- Deep architectures: Multiple layers with millions of parameters increase memory and processing requirements.

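- Heavy training requirements: Training these models requires vast amounts of data and computational resources, which is why training typically happens in data centers even when the finished model is meant to run on a device.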

- High energy usage: Running such models on smaller devices drains batteries quickly and can cause overheating.

For edge computing to thrive, AI models must be optimized for efficiency without sacrificing accuracy.

The Architecture of Lightweight CNNs

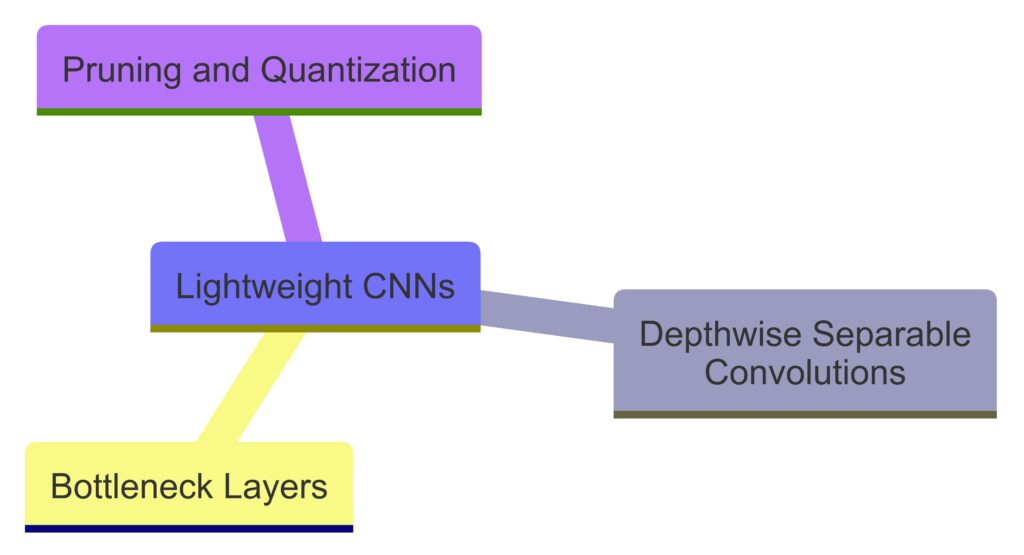

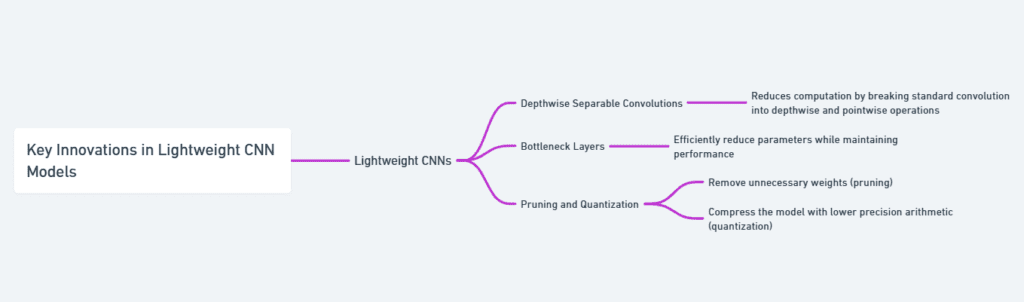

Key Innovations in Lightweight Models

Several innovations in CNN architecture have enabled the development of lightweight models suitable for edge devices:

- Depthwise separable convolutions: Popularized by models like MobileNet, this method breaks a standard convolution into two simpler steps: a depthwise convolution (one filter applied per input channel) and a pointwise 1×1 convolution (combining channels). For 3×3 kernels this cuts computation by roughly 8 to 9 times compared with a standard convolution.

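- Bottleneck layers: Found in models like MobileNetV2 and EfficientNet, bottleneck layers use cheap 1×1 convolutions to keep the number of channels passed between blocks small, reducing memory and computation needs while maintaining performance. These models use an inverted variant: each block briefly expands the channels, filters them with a depthwise convolution, then projects them back down to a narrow bottleneck.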

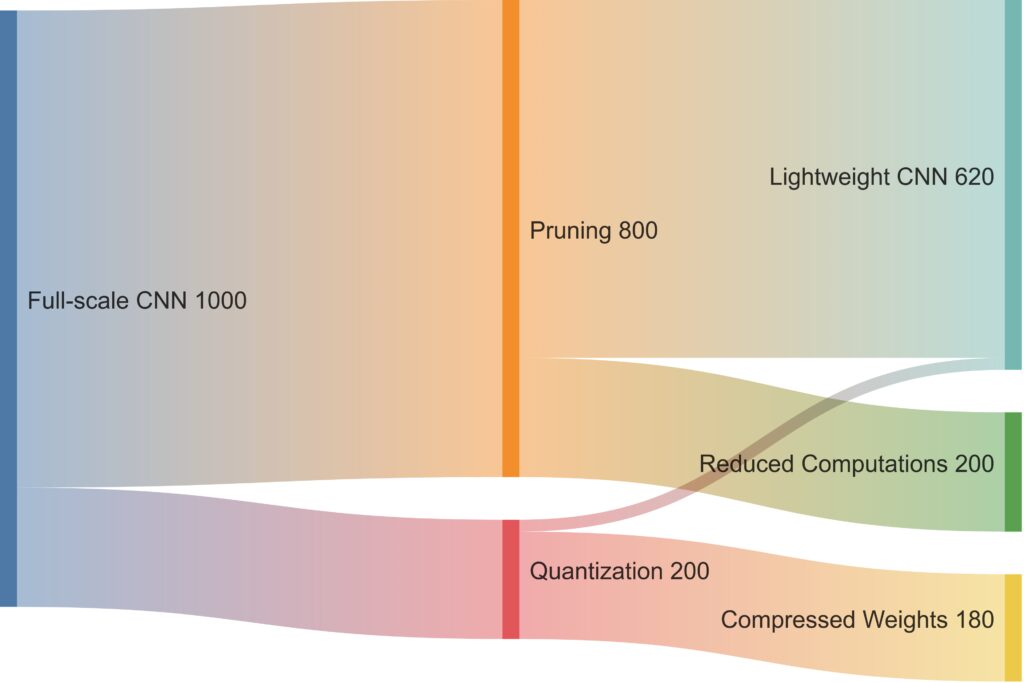

- Pruning and quantization: These techniques remove unnecessary weights (pruning) or reduce the precision of weights (quantization), resulting in smaller models that run more efficiently.
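To make the savings from depthwise separable convolutions concrete, the sketch below counts weights and multiply-adds for a single layer using the standard cost formulas (stride 1, "same" padding, biases ignored). The layer size (3×3 kernels, 128 input and output channels, a 56×56 feature map) is a hypothetical example, not taken from a specific model, and the function names are illustrative.

```python
def standard_conv_cost(k: int, c_in: int, c_out: int, h: int, w: int) -> tuple[int, int]:
    """Weights and multiply-adds for a k x k standard convolution.

    Assumes stride 1 and 'same' padding (output is h x w); biases are ignored.
    """
    weights = k * k * c_in * c_out  # every filter spans all input channels
    macs = weights * h * w          # each weight is used once per output pixel
    return weights, macs


def depthwise_separable_cost(k: int, c_in: int, c_out: int, h: int, w: int) -> tuple[int, int]:
    """Weights and multiply-adds for a depthwise (k x k) + pointwise (1 x 1) pair."""
    depthwise = k * k * c_in        # one k x k filter per input channel
    pointwise = c_in * c_out        # 1 x 1 filters that mix channels
    weights = depthwise + pointwise
    macs = weights * h * w
    return weights, macs


# Hypothetical layer: 3x3 kernels, 128 -> 128 channels, 56x56 feature map.
std = standard_conv_cost(3, 128, 128, 56, 56)
sep = depthwise_separable_cost(3, 128, 128, 56, 56)
print(f"standard:  {std[0]:,} weights, {std[1]:,} multiply-adds")
print(f"separable: {sep[0]:,} weights, {sep[1]:,} multiply-adds")
print(f"reduction: {std[1] / sep[1]:.2f}x")  # equals 1 / (1/c_out + 1/k**2)
```

For this layer the separable version needs 17,536 weights instead of 147,456, about 8.4 times fewer, with the same ratio for multiply-adds. In general the ratio is 1 / (1/c_out + 1/k²), which approaches k² (9 for 3×3 kernels) as the number of output channels grows.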

Popular Lightweight CNN Models

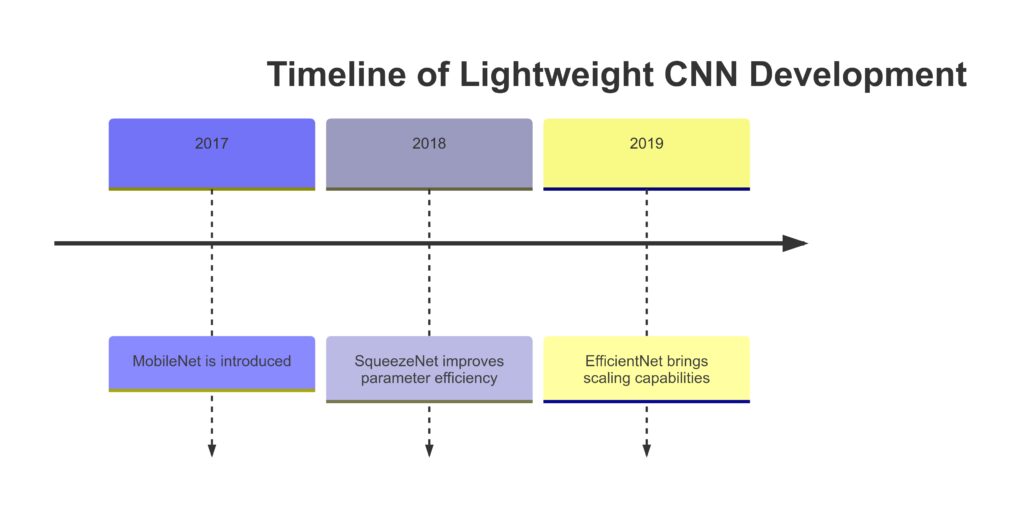

Several lightweight CNN models are optimized for edge computing:

- MobileNet: Designed for mobile and embedded vision applications, MobileNet uses depthwise separable convolutions to reduce the model size and computation without significantly sacrificing accuracy.

- SqueezeNet: This model aims to achieve AlexNet-level accuracy with 50x fewer parameters, making it highly efficient for edge devices.

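- EfficientNet: Using compound scaling, which grows network depth, width, and input resolution together with a single coefficient, EfficientNet produces a family of models (B0 through B7) that trade accuracy against size, so a variant can be chosen to fit devices ranging from smartphones to embedded systems.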
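EfficientNet's compound scaling rule fits in a few lines. The sketch below uses the constants reported in the EfficientNet paper (α = 1.2 for depth, β = 1.1 for width, γ = 1.15 for resolution), which were chosen so that α·β²·γ² ≈ 2 and each unit increase of the coefficient φ roughly doubles FLOPs. It shows the idealized rule only: the released B1 to B7 configurations do not follow this formula exactly, and the function name is illustrative.

```python
# Constants reported for EfficientNet's compound scaling (grid-searched on B0).
ALPHA = 1.2   # depth multiplier base
BETA = 1.1    # width (channel) multiplier base
GAMMA = 1.15  # input-resolution multiplier base


def compound_scale(phi: float, base_resolution: int = 224) -> dict:
    """Idealized compound scaling: depth, width, and resolution all grow with phi."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    # Conv FLOPs grow roughly linearly with depth and quadratically with width and resolution.
    flops = (ALPHA * BETA ** 2 * GAMMA ** 2) ** phi
    return {
        "depth_mult": depth,
        "width_mult": width,
        "resolution": round(base_resolution * resolution),
        "flops_mult": flops,
    }


for phi in range(4):
    s = compound_scale(phi)
    print(f"phi={phi}: depth x{s['depth_mult']:.2f}, width x{s['width_mult']:.2f}, "
          f"{s['resolution']}px, FLOPs x{s['flops_mult']:.2f}")
```

Because α·β²·γ² is about 1.92 rather than exactly 2, FLOPs grow slightly slower than 2^φ under this rule.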

Applications of Lightweight CNNs in Edge Computing

Real-Time Object Detection on Mobile Devices

One of the most common applications of lightweight CNNs is real-time object detection. Models like MobileNet-SSD can run on smartphones, identifying objects in live video streams. This opens up possibilities for applications in:

- Augmented reality (AR): Lightweight CNNs enable real-time detection of objects in AR apps without needing cloud resources.

- Smartphone photography: AI-assisted features like automatic scene detection and real-time enhancements depend on the efficiency of these models.
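Detectors such as MobileNet-SSD predict many overlapping candidate boxes for each object, so their raw output is usually cleaned up on the device with non-maximum suppression (NMS) before it reaches an AR overlay or camera app. The following is a minimal greedy NMS sketch in plain Python. The box format (x1, y1, x2, y2) and the 0.5 IoU and 0.3 score thresholds are illustrative assumptions; production runtimes typically use an optimized built-in operator instead.

```python
Box = tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 > x1 and y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes: list[Box], scores: list[float],
                        iou_threshold: float = 0.5,
                        score_threshold: float = 0.3) -> list[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much."""
    candidates = [i for i, s in enumerate(scores) if s >= score_threshold]
    candidates.sort(key=lambda i: scores[i], reverse=True)
    keep: list[int] = []
    for i in candidates:
        # A box survives only if it does not heavily overlap any box already kept.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```

The second box overlaps the first with an IoU of about 0.82, so it is treated as a duplicate detection of the same object and suppressed.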

Edge-Based AI for IoT Devices

IoT devices often operate in environments where cloud connectivity is limited or unavailable. Lightweight CNNs allow IoT systems to process data locally, enabling:

- Smart home automation: Devices like smart cameras and voice assistants can run AI models locally to enhance security or control smart appliances without constant cloud connectivity.

- Industrial IoT: In manufacturing or agriculture, lightweight CNNs enable predictive maintenance and real-time monitoring of equipment, reducing downtime and operational costs.

Healthcare at the Edge: AI-Powered Diagnostics

Lightweight CNNs are making significant strides in edge-based healthcare:

- Wearable devices: AI-driven wearables can monitor vital signs or detect abnormalities like arrhythmias in real time, providing immediate feedback to users and healthcare providers.

- Portable diagnostics: Lightweight models enable medical devices to analyze images (e.g., ultrasound scans) directly on-site, especially in remote or under-resourced areas without reliable internet access.

The Future of Lightweight CNNs and Edge AI

Further Optimizing CNNs for Efficiency

As edge computing continues to grow, future developments in lightweight CNNs will focus on further optimizing efficiency. Some of the expected advancements include:

- Neural architecture search (NAS): Automating the design of CNN architectures to find the most efficient models for specific edge tasks.

- Energy-aware training: Training models with their inference-time energy consumption on edge devices as an explicit optimization objective, alongside accuracy.

- Federated learning: A framework that allows edge devices to collaboratively train models without sending raw data to a central server, improving privacy and reducing the amount of data that must be transmitted.

Expanding Use Cases for Edge AI

As lightweight CNNs improve, their applications will continue to expand across industries:

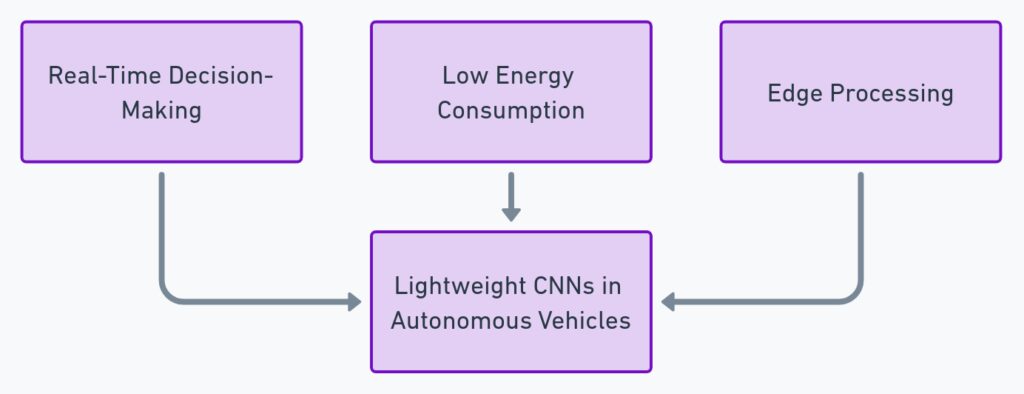

- Autonomous vehicles: On-device AI will enable self-driving cars to make faster decisions without relying on cloud processing.

- Smart cities: Edge-based CNNs will help manage city infrastructure, from traffic flow to energy distribution, in real time.

- Environmental monitoring: Lightweight models in drones or sensors will track and analyze environmental data like air quality or wildlife movements in remote locations.

Conclusion: AI’s Journey to the Edge

Lightweight CNNs are ushering in a new era of AI-powered edge computing, where powerful machine learning models can run efficiently on resource-constrained devices. This development is transforming industries, from healthcare and IoT to mobile computing and beyond.

As AI continues its journey to the edge, the focus on creating even more efficient models will ensure that devices everywhere—no matter how small—can benefit from the incredible capabilities of CNNs.

FAQs

How do lightweight CNNs differ from traditional CNNs?

Traditional CNNs are designed to handle large-scale tasks with high computational demands, while lightweight CNNs focus on reducing model size and computation through techniques like depthwise separable convolutions, bottleneck layers, and pruning. This makes them suitable for low-power edge devices while still performing complex tasks.

What are some popular lightweight CNN models?

Several lightweight CNN architectures have been developed for edge devices:

- MobileNet: Uses depthwise separable convolutions to reduce computational cost.

- SqueezeNet: Designed to achieve high accuracy with significantly fewer parameters.

- EfficientNet: Scales efficiently for different hardware, balancing accuracy and model size.

How do lightweight CNNs improve real-time object detection?

Lightweight CNNs like MobileNet-SSD enable edge devices to detect objects with minimal delay. Because they process each video frame quickly, they can identify objects as the video plays, making them ideal for applications like augmented reality (AR) or smartphone photography where quick responses are crucial.

Can lightweight CNNs be used in IoT devices?

Yes, lightweight CNNs are widely used in IoT systems, where edge processing is essential. IoT devices like smart cameras, sensors, and home automation systems rely on lightweight CNNs for local data analysis, reducing the need for constant cloud communication. This enhances real-time performance and improves data privacy.

How do lightweight CNNs contribute to healthcare?

In healthcare, lightweight CNNs enable wearable devices and portable diagnostic tools to run AI algorithms locally. This allows for real-time monitoring of vital signs, early detection of health issues, and fast analysis of medical images like ultrasounds—all without relying on cloud-based systems, making diagnostics more accessible in remote areas.

What techniques make lightweight CNNs efficient?

Several techniques are employed to make lightweight CNNs efficient:

- Depthwise separable convolutions: Break down convolutions into simpler operations, reducing computational cost.

- Pruning: Removes unnecessary parameters, shrinking model size.

- Quantization: Reduces the precision of weights to decrease memory usage without a significant loss of accuracy.

These optimizations make lightweight CNNs more suitable for running on low-power devices.
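Pruning can be illustrated with its simplest variant, unstructured magnitude pruning, which zeroes the weights with the smallest absolute values. The sketch below works on a flat list of weights and uses a hypothetical helper, not any particular library's API. Zeroed weights only shrink the stored model if it is saved in a sparse format or if whole channels are removed (structured pruning), and models are usually fine-tuned afterwards to recover accuracy.

```python
def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    `sparsity` is the fraction of weights to remove, e.g. 0.5 prunes half.
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:n_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


w = [0.8, -0.05, 0.3, -0.6, 0.01, 0.2]
print(magnitude_prune(w, 0.5))  # [0.8, 0.0, 0.3, -0.6, 0.0, 0.0]
```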

What are the future trends for lightweight CNNs?

The future of lightweight CNNs includes advancements like:

- Neural architecture search (NAS) to automatically design the most efficient CNN architectures for specific edge tasks.

- Federated learning, where models are trained collaboratively across multiple edge devices without sending data to the cloud.

- Further optimization for energy efficiency, enabling longer battery life for edge devices while running AI models.

How do lightweight CNNs benefit autonomous vehicles?

In autonomous vehicles, lightweight CNNs enable real-time decision-making by processing sensor and camera data locally. This reduces reliance on cloud infrastructure, allowing vehicles to detect obstacles, pedestrians, and road signs quickly and efficiently, improving both performance and safety.

What are the main advantages of using lightweight CNNs?

Lightweight CNNs offer several key advantages for edge devices:

- Low latency: Processing data directly on the device eliminates delays associated with sending data to the cloud, ensuring faster responses.

- Energy efficiency: Optimized architectures reduce computational power and battery consumption, allowing devices to operate for longer periods.

- Cost-effective: Reduced hardware requirements mean that lightweight CNNs can run on affordable devices, expanding AI access.

- Enhanced privacy: By processing data locally, sensitive information (like personal images or health data) doesn’t need to be transmitted, improving data security.

How do lightweight CNNs enable real-time AI applications?

Lightweight CNNs can process data quickly and efficiently, which is essential for real-time AI applications like object detection, speech recognition, or predictive maintenance. Since these models are designed to perform complex tasks with reduced computational demand, they enable edge devices to react to inputs (e.g., identifying objects in video or monitoring equipment health) without noticeable delay.

What is depthwise separable convolution, and why is it important for lightweight CNNs?

Depthwise separable convolution is a technique used to split standard convolutions into two parts: depthwise (which filters each input channel separately) and pointwise (which combines these filtered outputs). This reduces the number of computations significantly, making CNNs faster and more efficient. It’s a key innovation in models like MobileNet, allowing them to be deployed on low-resource devices without sacrificing much accuracy.
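The two steps can be written out directly. The plain-Python sketch below (no padding, stride 1, a single image; all function names are illustrative) applies a depthwise convolution followed by a pointwise 1×1 convolution, then checks the result against a standard convolution whose kernels are the product of the two factors. The check holds because both steps are linear; real MobileNet blocks insert batch normalization and ReLU between them, which this sketch omits.

```python
# Tensors are nested lists indexed as [channel][row][col].


def conv2d_single(img, kernel):
    """'Valid' (no padding), stride-1 cross-correlation of one 2D map with one kernel."""
    k = len(kernel)
    rows, cols = len(img) - k + 1, len(img[0]) - k + 1
    return [[sum(img[r + i][c + j] * kernel[i][j] for i in range(k) for j in range(k))
             for c in range(cols)]
            for r in range(rows)]


def add_maps(maps):
    """Element-wise sum of equally sized 2D maps."""
    return [[sum(m[r][c] for m in maps) for c in range(len(maps[0][0]))]
            for r in range(len(maps[0]))]


def depthwise_conv(x, dw_kernels):
    """Step 1: filter each input channel with its own k x k kernel (no channel mixing)."""
    return [conv2d_single(x[ch], dw_kernels[ch]) for ch in range(len(x))]


def pointwise_conv(x, pw_weights):
    """Step 2: 1x1 convolution; output channel o is a weighted sum of input channels."""
    return [add_maps([[[pw_weights[o][ch] * v for v in row] for row in x[ch]]
                      for ch in range(len(x))])
            for o in range(len(pw_weights))]


def standard_conv(x, kernels):
    """Standard convolution: kernels[o][ch] is a k x k kernel, summed over input channels."""
    return [add_maps([conv2d_single(x[ch], kernels[o][ch]) for ch in range(len(x))])
            for o in range(len(kernels))]


# Tiny example: 2 input channels of 4x4, 3x3 depthwise kernels, 3 output channels.
x = [[[r * 4 + c for c in range(4)] for r in range(4)],
     [[(r + c) % 3 for c in range(4)] for r in range(4)]]
dw = [[[1, 0, -1], [2, 0, -2], [1, 0, -1]],
      [[0, 1, 0], [1, -4, 1], [0, 1, 0]]]
pw = [[1, 2], [-1, 0], [3, -2]]

separable = pointwise_conv(depthwise_conv(x, dw), pw)

# Equivalent standard kernels: K[o][ch] = pw[o][ch] * dw[ch].
factored = [[[[pw[o][ch] * v for v in row] for row in dw[ch]] for ch in range(2)]
            for o in range(3)]
assert separable == standard_conv(x, factored)
print(len(separable), "output channels of", len(separable[0]), "x", len(separable[0][0]))
```

The equivalence also shows the trade-off: a depthwise separable layer can only represent standard kernels that factor this way, which is where the small accuracy cost comes from.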

How do lightweight CNNs contribute to the growth of edge AI?

Lightweight CNNs are driving the expansion of edge AI by enabling AI algorithms to run locally on resource-constrained devices. This makes AI accessible in remote locations, mobile environments, and areas with limited internet connectivity. The ability to process data in real time on devices like smartphones, drones, and IoT sensors enhances the capabilities of these devices, pushing AI-powered applications beyond centralized cloud systems.

Can lightweight CNNs be deployed for environmental monitoring?

Yes, lightweight CNNs are increasingly being used in environmental monitoring. Edge devices like drones, satellite sensors, and ground-based IoT systems equipped with lightweight CNNs can process environmental data (e.g., monitoring wildlife, detecting forest fires, or measuring air quality) in real time. These AI-driven systems allow for faster responses without needing constant cloud connectivity.

What are the limitations of lightweight CNNs?

Although lightweight CNNs are highly efficient, they have some limitations:

- Reduced accuracy: Compressing models and reducing parameters can lead to a drop in accuracy, often a small one, compared to larger, more complex models.

- Limited capacity: Some lightweight models may struggle with highly complex tasks that benefit from deeper architectures trained on larger datasets.

- Specialized tasks: While lightweight CNNs excel in specific applications, they may not perform as well in general-purpose tasks where large, powerful models are needed.

How do lightweight CNNs improve battery life in mobile devices?

Lightweight CNNs are designed to perform AI computations using fewer resources, which directly reduces power consumption. By optimizing how the model processes data, edge devices like smartphones can run AI-powered applications (e.g., real-time image processing or voice assistants) without draining the battery quickly. This makes these models ideal for mobile devices that need to balance performance with energy efficiency.

How do pruning and quantization help reduce the size of CNNs?

Pruning removes unnecessary parameters or neurons from the CNN, usually after training, so the model keeps only its most important connections; this reduces model size and computational load, and a short fine-tuning pass typically recovers any lost accuracy. Quantization further reduces model size by lowering the precision of weights and activations (e.g., from 32-bit floating-point to 8-bit integers), significantly decreasing memory usage without greatly impacting performance. Together, these techniques help make CNNs more suitable for edge devices with limited hardware.
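As a minimal sketch of the 32-bit-float-to-8-bit-integer step, the code below uses symmetric per-tensor quantization: one scale factor maps the largest weight magnitude to 127, and every weight is rounded to the nearest integer step. This is only one of several schemes (toolchains also use asymmetric zero points, per-channel scales, and quantized activations), and the function names are illustrative.

```python
def quantize_int8_symmetric(weights: list[float]) -> tuple[list[int], float]:
    """Map float weights to int8 codes in [-127, 127] using a single scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero input
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from their int8 codes."""
    return [v * scale for v in q]


weights = [0.5, -1.27, 0.0, 0.9]
q, scale = quantize_int8_symmetric(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 90]
# Rounding error per weight is at most half a quantization step.
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)  # True
```

Each stored weight shrinks from 4 bytes to 1, a 4× reduction, at the cost of one extra float per tensor for the scale.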

What role do lightweight CNNs play in smart cities?

In smart cities, lightweight CNNs are employed in devices that need to analyze large amounts of data locally. Applications include:

- Traffic management: Analyzing video feeds from cameras to optimize traffic flow and reduce congestion.

- Public safety: Using AI for real-time monitoring in surveillance systems to detect unusual activity.

- Energy management: Processing data from sensors to monitor and optimize energy consumption across buildings and public infrastructure, all without sending data to a centralized server.

How does federated learning benefit lightweight CNNs on edge devices?

Federated learning allows multiple edge devices to train a shared AI model without sharing their raw data. Instead, each device processes its data locally, and only updates to the model’s parameters are sent to a central server. This technique is highly beneficial for lightweight CNNs, as it improves data privacy and reduces the need for constant data transmission, while enabling devices to collaboratively improve AI models.
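The server-side step can be sketched as a simplified version of federated averaging (FedAvg), a widely used aggregation rule: the server averages the parameter vectors returned by each device, weighting each by how many local training examples it used. Parameters are flattened into plain lists here and the names are illustrative; real deployments add client sampling, multiple local training epochs, and often secure aggregation.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """Weighted average of client parameter vectors.

    Each entry is (parameters, num_local_examples); larger local datasets count more.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("at least one client must report training examples")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for params, n in client_updates:
        if len(params) != dim:
            raise ValueError("all clients must send parameters of the same shape")
        for i, p in enumerate(params):
            averaged[i] += p * n / total
    return averaged


# Two devices: one trained on 10 local examples, the other on 30.
updates = [([1.0, 2.0], 10), ([3.0, 4.0], 30)]
print(federated_average(updates))  # [2.5, 3.5]
```

Only the parameter lists and example counts leave each device; the raw images or sensor readings used to compute them stay local.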