Convolutional Neural Networks (CNNs) are notorious for being computationally heavy, and for a long time they also became harder to train as they grew deeper. Enter ResNet, a game-changer.

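The idea behind ResNet is simple yet powerful: add skip connections so that each block learns a residual on top of an identity mapping. Because gradients can flow through these shortcuts unchanged, networks can go much deeper without being crippled by vanishing gradients.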
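To see why the shortcut matters, here is a toy sketch (not a real ResNet): a chain of scalar linear layers, where each plain layer computes y = w·y and each residual layer computes y = y + w·y. The gradient of the output with respect to the input is the product of the per-layer derivatives, w for a plain layer and 1 + w for a residual one, so the identity term keeps it from collapsing toward zero. The weight value 0.1 and the depth of 20 are arbitrary illustrative choices.

```python
def chain_gradient(weights, residual):
    """d(output)/d(input) for a stack of scalar linear layers (toy model).

    Plain layer:    y_next = w * y       -> local derivative w
    Residual layer: y_next = y + w * y   -> local derivative 1 + w
    """
    grad = 1.0
    for w in weights:
        grad *= (1.0 + w) if residual else w
    return grad

weights = [0.1] * 20  # 20 layers whose learned branch is small
print(chain_gradient(weights, residual=False))  # about 1e-20: the signal vanishes
print(chain_gradient(weights, residual=True))   # about 6.73: the identity path keeps it alive
```

Real networks have nonlinearities and matrices instead of scalars, but the same "1 +" term from the identity shortcut appears in each residual block's Jacobian.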

But here’s the challenge: standard ResNets, while amazing, are often too bulky for mobile and edge devices.

That’s why lightweight ResNet variants are now gaining serious attention.

These versions are designed to deliver competitive accuracy with much lower computational overhead. In this article, we’ll explore the most efficient ResNet variants tailored for resource-constrained environments like mobile and edge AI.

The Need for Lightweight CNNs in Edge Devices

Edge devices, such as smartphones, drones, and IoT gadgets, have limited computing power. Running a full-size ResNet on such devices is often impractical.

Why?

Because these models require substantial memory and power. Traditional ResNet architectures can easily drain battery life and processing resources.

Mobile and edge applications, however, need models that are both fast and energy-efficient, whether the task is real-time object detection or augmented reality. This is where lightweight variants of ResNet shine.

How ResNet Variants Achieve Lightweight Efficiency

Lightweight ResNet variants are developed by making strategic architectural tweaks to reduce complexity.

- Reduced Depth: Some variants reduce the number of layers while retaining essential skip connections.

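- Bottleneck Blocks: Bottleneck structures use 1×1 convolutions to shrink the channel count before the expensive 3×3 convolution and expand it again afterward, so the network keeps its depth while each block needs far fewer computations.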

- Parameter Reduction: Lightweight models cut the number of parameters, for example by using fewer or narrower layers, making them more memory-friendly.
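A rough sketch of why bottleneck blocks are cheaper, counting only convolution weights (no biases or BatchNorm) for a block whose input and output both have 256 channels. The 4× channel reduction mirrors the 256 to 64 to 256 pattern of ResNet-50’s bottleneck blocks; comparing against a basic block at the same width is illustrative, not a configuration a standard ResNet uses.

```python
def conv_weights(c_in, c_out, k):
    # k x k convolution weights only (bias and BatchNorm ignored)
    return c_in * c_out * k * k

def basic_block(width):
    # Two 3x3 convolutions at full width
    return 2 * conv_weights(width, width, 3)

def bottleneck_block(width, reduction=4):
    # 1x1 reduce -> 3x3 at reduced width -> 1x1 expand
    mid = width // reduction
    return (conv_weights(width, mid, 1)
            + conv_weights(mid, mid, 3)
            + conv_weights(mid, width, 1))

print(basic_block(256))       # 1179648
print(bottleneck_block(256))  # 69632
```

For a stride-1 convolution, each output position costs one multiply-accumulate per weight, so the roughly 17× saving in weights carries over to compute as well.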

The goal is to find a balance between accuracy and efficiency. The challenge? Making sure these tweaks don’t sacrifice performance.

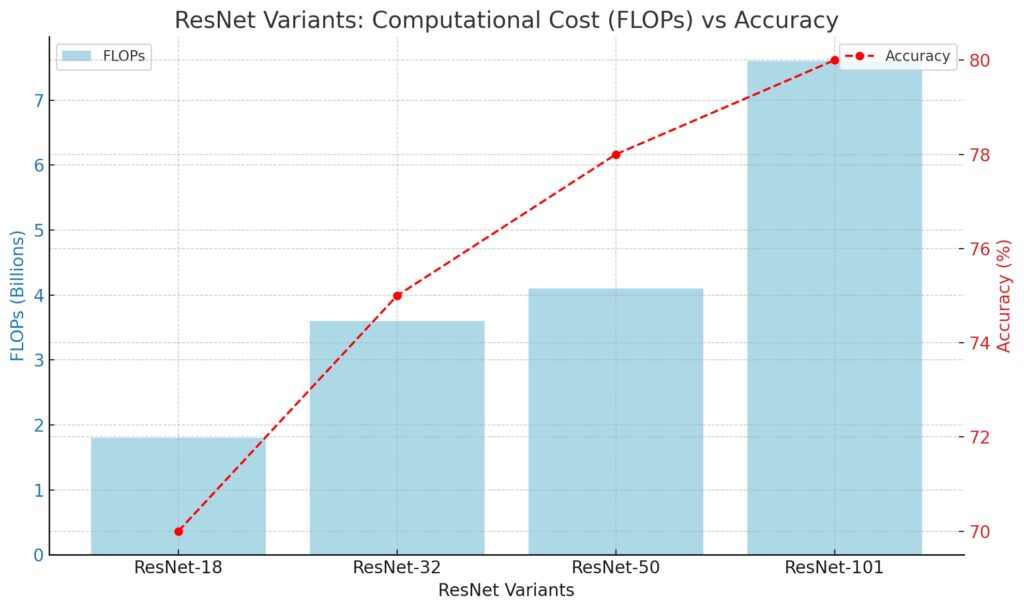

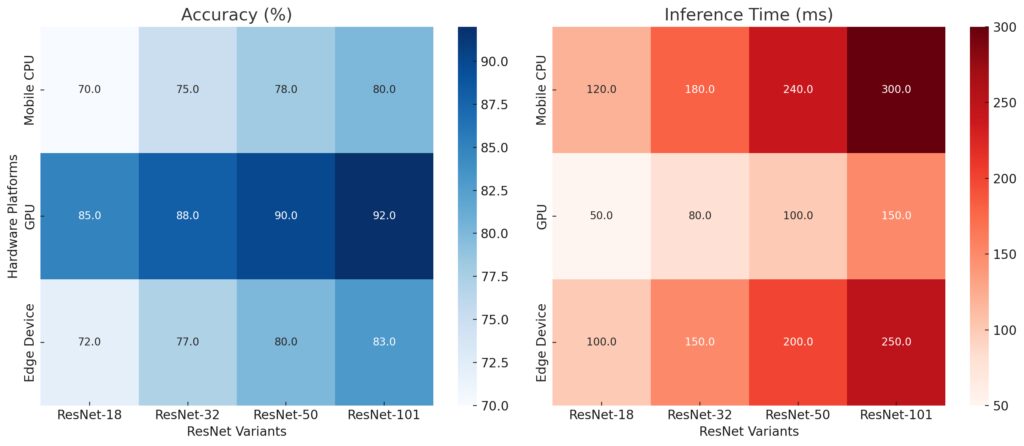

ResNet-18: A Compact Powerhouse

One of the most common lightweight ResNet variants is ResNet-18.

Why is it so popular?

Because it’s simple, efficient, and delivers decent accuracy. ResNet-18 has just 18 weighted layers (about 11.7 million parameters) while still keeping the core principles of the ResNet family, including residual blocks with skip connections.

It’s particularly good for applications that need moderate accuracy but can’t afford the overhead of deeper models like ResNet-50, which has roughly 25.6 million parameters and needs more than twice the compute of ResNet-18.

In real-time image recognition on mobile devices, ResNet-18 is a strong contender because it balances speed and performance without requiring excessive computing resources.
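Where does the 11.7 million figure come from? The sketch below tallies learnable parameters, assuming the torchvision-style ImageNet layout: a 7×7 stem, four stages of basic blocks with 64, 128, 256, and 512 channels, a 1×1 projection shortcut whenever the channel count changes, and a 1000-class classifier. BatchNorm contributes two learnable values per channel; running statistics are not counted.

```python
def conv(c_in, c_out, k):
    # k x k convolution weights; the ResNet convolutions here have no bias
    return c_in * c_out * k * k

def bn(c):
    # BatchNorm learnable scale and shift (running statistics are not parameters)
    return 2 * c

def basic_block_params(c_in, c_out):
    total = conv(c_in, c_out, 3) + bn(c_out) + conv(c_out, c_out, 3) + bn(c_out)
    if c_in != c_out:
        # 1x1 projection shortcut when the number of channels changes
        total += conv(c_in, c_out, 1) + bn(c_out)
    return total

def resnet_basic_params(blocks_per_stage, widths=(64, 128, 256, 512), num_classes=1000):
    total = conv(3, 64, 7) + bn(64)  # 7x7 stem convolution
    c_in = 64
    for n_blocks, width in zip(blocks_per_stage, widths):
        for _ in range(n_blocks):
            total += basic_block_params(c_in, width)
            c_in = width
    total += c_in * num_classes + num_classes  # final fully connected layer
    return total

print(resnet_basic_params([2, 2, 2, 2]))  # ResNet-18: 11689512
print(resnet_basic_params([3, 4, 6, 3]))  # ResNet-34: 21797672
```

The ResNet-18 total matches the parameter count torchvision reports for its `resnet18` model. Notice that more than two thirds of it sits in the last stage, where the 512-channel 3×3 convolutions dominate.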

Mobile-Optimized ResNet: The Rise of ResNet-50

If you’re looking for more accuracy but still want something that runs on edge devices, ResNet-50 variants have you covered. To make ResNet-50 practical for edge computing, though, practitioners typically prune and quantize the model.

Pruning: This process removes unnecessary weights and connections, which reduces the complexity of the model without sacrificing much accuracy.

Quantization: Converts weights from 32-bit floating point to 8-bit integers, shrinking weight storage roughly fourfold and often speeding up inference on hardware with fast integer arithmetic.

With these techniques, ResNet-50 can be adapted for mobile and IoT devices without eating up too much memory or power.
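A minimal sketch of the two steps applied in sequence to a toy weight vector: unstructured magnitude pruning (zero the smallest-magnitude weights) followed by symmetric per-tensor int8 quantization with a single scale and a zero point of 0. The weight values and the 50% sparsity are hypothetical; production toolchains typically add per-channel scales, activation calibration, and fine-tuning after pruning.

```python
def magnitude_prune(weights, sparsity):
    """Unstructured magnitude pruning: zero the smallest-|w| fraction of weights."""
    n_prune = int(len(weights) * sparsity)
    # indices sorted by absolute value, smallest first
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = list(weights)
    for i in order[:n_prune]:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights):
    """Symmetric per-tensor quantization to [-127, 127] (zero point fixed at 0)."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    return [qi * scale for qi in q]

w = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02, -0.2, 0.09]
pruned = magnitude_prune(w, sparsity=0.5)
q, scale = quantize_int8(pruned)
restored = dequantize(q, scale)

print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0, -0.2, 0.0]
print(q)       # [127, 0, 48, 0, -95, 0, -32, 0]
print(max(abs(a - b) for a, b in zip(pruned, restored)) <= scale / 2)  # True
```

Each int8 value takes 1 byte instead of 4, and rounding error is bounded by half the scale. The zeros from pruning only save memory with a sparse storage format, and they only speed things up with sparse kernels or structured pruning (removing whole channels or filters).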

ResNet-32: A Middle Ground for Performance and Efficiency

For edge computing tasks that need more depth than ResNet-18 offers, ResNet-32 is often suggested as a balanced option, for example in drone navigation or real-time analytics where both speed and precision are key. It helps to know where it comes from, though: ResNet-32 is one of the compact CIFAR-10 models from the original ResNet paper, built from 6n + 2 layers (n = 5) with only 16, 32, and 64 channels per stage.

So while ResNet-32 has more layers than ResNet-18, it is far narrower: about 0.46 million parameters versus roughly 11.7 million for the ImageNet-style ResNet-18. The two were designed for different input sizes (32×32 CIFAR images versus 224×224 ImageNet images), so layer count alone is a poor guide to their relative cost or accuracy.

What’s notable about ResNet-32 is how much depth it packs into a tiny parameter budget, which makes it a popular choice for small-image tasks where power efficiency is crucial but some model depth is still needed.
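A sketch that counts parameters for the CIFAR-style ResNets described in the original ResNet paper: a 3×3 stem with 16 channels, three stages of n basic blocks with 16, 32, and 64 channels, and a 10-class classifier, giving 6n + 2 weighted layers. It assumes parameter-free identity shortcuts (the paper’s zero-padding option), convolutions without bias, and two learnable BatchNorm values per channel.

```python
def conv(c_in, c_out, k=3):
    # k x k convolution weights (assumed bias-free, since BatchNorm follows)
    return c_in * c_out * k * k

def bn(c):
    return 2 * c  # BatchNorm scale and shift

def cifar_resnet_params(depth, num_classes=10):
    """Parameter count for a 6n+2-layer CIFAR ResNet with identity shortcuts."""
    assert (depth - 2) % 6 == 0, "depth must be 6n + 2"
    n = (depth - 2) // 6
    total = conv(3, 16) + bn(16)  # first 3x3 convolution
    c_in = 16
    for width in (16, 32, 64):
        for _ in range(n):
            total += conv(c_in, width) + bn(width) + conv(width, width) + bn(width)
            c_in = width
    total += 64 * num_classes + num_classes  # fully connected classifier
    return total

for depth in (20, 32, 110):
    print(depth, cifar_resnet_params(depth))
# 20 269722
# 32 464154
# 110 1727962
```

These totals line up with the rounded figures in the paper (0.27M, 0.46M, and 1.7M), which is why even the 110-layer version is far smaller than ImageNet-style ResNet-18.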

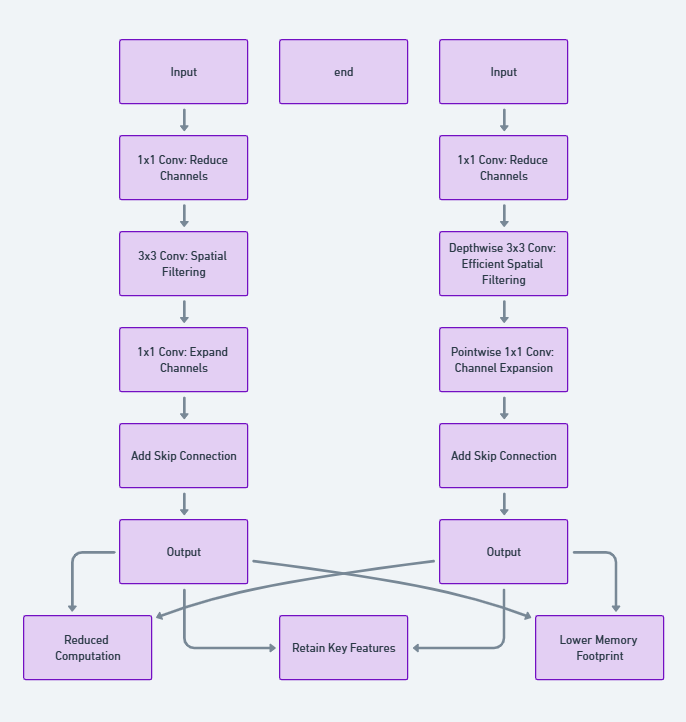

The Magic of Depthwise Separable Convolutions

One of the smartest innovations in the quest for lightweight models is depthwise separable convolutions. Though not originally part of the ResNet family (they were popularized by architectures such as Xception and MobileNet), many mobile-friendly ResNet variants incorporate this trick to reduce computation costs.

A regular convolution applies each filter across all input channels at once, which is computationally heavy. Depthwise separable convolutions split this into two cheaper steps: a depthwise convolution applies one filter to each channel separately, and a 1×1 pointwise convolution then mixes the results across channels.

This dramatically cuts down the number of operations, speeding up inference without sacrificing too much accuracy. Some lightweight ResNet variants, particularly those optimized for mobile AI tasks, leverage this strategy to great effect.
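The saving is easy to quantify. This sketch counts multiply-accumulates (MACs) for a stride-1 3×3 convolution on a 56×56 feature map with 256 input and 256 output channels; the layer sizes are illustrative. The ratio works out to 1/C_out + 1/k², so with k = 3 the separable version needs roughly 8 to 9 times fewer operations.

```python
def standard_conv_macs(c_in, c_out, k, h, w):
    # every output channel's k x k filter spans all c_in input channels
    return k * k * c_in * c_out * h * w

def depthwise_separable_macs(c_in, c_out, k, h, w):
    depthwise = k * k * c_in * h * w  # one k x k filter per input channel
    pointwise = c_in * c_out * h * w  # 1x1 convolution mixes channels
    return depthwise + pointwise

std = standard_conv_macs(256, 256, 3, 56, 56)
sep = depthwise_separable_macs(256, 256, 3, 56, 56)
print(std, sep, round(sep / std, 4))  # 1849688064 212746240 0.115
```

Fewer MACs do not always mean proportionally lower latency, because depthwise convolutions have low arithmetic intensity and some accelerators run them less efficiently than dense convolutions.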

GhostNet: Taking Lightweight to the Next Level

Another fascinating development in lightweight models is GhostNet. Though not a ResNet by strict definition, GhostNet uses similar principles to streamline computation while keeping performance high.

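GhostNet achieves this with its Ghost module: an ordinary convolution produces a small set of “intrinsic” feature maps, and cheap linear operations (such as depthwise convolutions) then generate additional “ghost” feature maps from them. This drastically reduces the number of filters and computations while retaining most of the network’s effectiveness.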
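A sketch of the Ghost module’s cost, following the formulation in the GhostNet paper: to produce n output maps, a primary k×k convolution creates m = n/s intrinsic maps, and each intrinsic map yields s − 1 extra maps through a cheap d×d depthwise operation (the intrinsic maps themselves are kept as an identity mapping). The layer sizes and s = 2, d = 3 are illustrative choices.

```python
def conv_flops(c_in, n_out, k, h, w):
    # multiply-accumulates of an ordinary k x k convolution
    return n_out * h * w * c_in * k * k

def ghost_module_flops(c_in, n_out, k, d, s, h, w):
    m = n_out // s  # intrinsic feature maps from the primary convolution
    primary = conv_flops(c_in, m, k, h, w)
    # each intrinsic map yields (s - 1) ghost maps via a cheap d x d depthwise op
    cheap = (s - 1) * m * h * w * d * d
    return primary + cheap

ordinary = conv_flops(256, 256, 3, 28, 28)
ghost = ghost_module_flops(256, 256, k=3, d=3, s=2, h=28, w=28)
print(ordinary / ghost)  # about 1.99, close to s = 2
```

With k = d the speed-up is s·c / (c + s − 1), which approaches s when the input channel count c is large.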

GhostNet-inspired modifications can also be applied to ResNet variants: the GhostNet paper itself evaluates a Ghost-ResNet-50 in which ordinary convolutions are replaced with Ghost modules. If you’re interested in pushing the limits of lightweight models for mobile apps, looking into GhostNet’s architecture is a great idea.

ResNet-110: When Depth Is Still Essential

There are some mobile and edge applications where accuracy and model depth can’t be sacrificed. In such cases, models like ResNet-110 are still used, albeit in highly optimized forms.

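In scenarios like autonomous vehicles or industrial IoT, having an ultra-precise model can be the difference between success and failure. ResNet-110, one of the CIFAR-10 models from the original ResNet paper (the 6n + 2 design with n = 18), offers the depth needed for detailed tasks. Its parameter count is modest, about 1.7 million, but its 110 sequential layers mean many dependent operations per inference, so it often still requires quantization and model pruning to be viable on edge devices.

Although it is slower than shallower models, an optimized ResNet-110 can be a powerful tool even in low-power environments when tasks demand precision over raw speed.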

Squeeze-and-Excitation Blocks: Boosting Efficiency with Better Features

A significant improvement in ResNet variants, particularly for mobile use cases, is the incorporation of Squeeze-and-Excitation (SE) blocks. These blocks adaptively recalibrate feature maps, helping the model focus on the most relevant information.

How does this help mobile and edge computing?

SE blocks add only a small number of parameters and operations, yet they often improve accuracy noticeably. That makes them attractive for lightweight models: a compact network with SE blocks can recover some of the accuracy lost by shrinking it, which is critical when the model is deployed on devices with limited hardware.

SE blocks, combined with ResNet’s architecture, give these models an extra boost in performance without adding too much complexity, which is perfect for real-time applications like facial recognition on smartphones.
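A minimal sketch of an SE block’s forward pass in plain Python, following the squeeze, excitation, and scale steps: global average pooling gives one number per channel, two small fully connected layers (ReLU, then sigmoid) turn those numbers into per-channel gates between 0 and 1, and each channel is multiplied by its gate. The weights are hypothetical and biases are omitted; the SE paper uses a reduction ratio of 16 by default, and r = 2 here only because the toy input has 4 channels.

```python
import math

def se_block(x, w1, w2):
    """Squeeze-and-Excitation on a feature map x of shape [C][H][W].

    w1: (C // r) x C weights of the reducing FC layer
    w2: C x (C // r) weights of the expanding FC layer
    Biases are omitted to keep the sketch short.
    """
    # Squeeze: global average pooling gives one descriptor per channel
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid yields a gate in (0, 1) per channel
    hidden = [max(0.0, sum(a * b for a, b in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(a * b for a, b in zip(row, hidden)))) for row in w2]
    # Scale: multiply every value in channel c by gates[c]
    out = [[[v * g for v in row] for row in ch] for ch, g in zip(x, gates)]
    return out, gates

# Hypothetical 4-channel, 2x2 feature map (channel c is filled with c + 1)
x = [[[float(c + 1)] * 2 for _ in range(2)] for c in range(4)]
w1 = [[0.5, -0.2, 0.1, 0.3], [-0.1, 0.4, 0.2, -0.3]]
w2 = [[1.0, -1.0], [0.5, 0.5], [-0.5, 1.0], [0.2, -0.2]]
out, gates = se_block(x, w1, w2)
print([round(g, 3) for g in gates])
```

The extra cost is just the two small FC layers per block, which is why SE blocks add little computation compared with the convolutions they sit beside.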

EfficientNet: An Alternative to Lightweight ResNet

Although ResNet is hugely popular, it’s worth mentioning that EfficientNet has emerged as another contender in the race for lightweight models.

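EfficientNet uses a compound scaling technique that scales network width, depth, and input resolution together with a single coefficient, which results in better accuracy with fewer parameters. In the original paper, EfficientNet-B0 reports higher ImageNet top-1 accuracy than ResNet-50 with roughly a fifth of the parameters and about a tenth of the FLOPs, although fewer FLOPs do not always translate into lower latency or power draw on every mobile chip.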
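A sketch of the compound scaling rule: depth, width, and resolution are multiplied by α^φ, β^φ, and γ^φ, with the constraint α·β²·γ² ≈ 2 so that FLOPs roughly double for each unit increase of φ. The base values below are the ones the EfficientNet paper reports from its grid search on B0; the released B1 to B7 models use rounded, hand-adjusted coefficients, so treat this as an illustration of the rule rather than their exact configurations.

```python
# Base coefficients reported in the EfficientNet paper (grid search on B0)
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15  # depth, width, resolution

def compound_scale(phi):
    """Depth, width, and resolution multipliers for coefficient phi, plus the
    approximate FLOPs multiplier (FLOPs ~ depth * width^2 * resolution^2)."""
    depth = ALPHA ** phi
    width = BETA ** phi
    resolution = GAMMA ** phi
    flops = depth * width ** 2 * resolution ** 2
    return depth, width, resolution, flops

for phi in range(4):
    d, w, r, f = compound_scale(phi)
    print(f"phi={phi}: depth x{d:.2f}, width x{w:.2f}, resolution x{r:.2f}, FLOPs x{f:.2f}")
```

Width and resolution enter squared because a convolution’s cost grows with both input and output channels, and with both spatial dimensions.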

While not technically a ResNet variant, EfficientNet is often compared to it due to its efficient design principles and applicability to mobile AI.

Choosing the Right Variant for Your Application

So, how do you pick the right lightweight ResNet variant for your mobile or edge application? It all depends on your use case.

- For basic tasks like real-time image recognition or low-power applications, ResNet-18 or ResNet-32 is a good starting point.

- For slightly more demanding tasks, you can go for an optimized version of ResNet-50, leveraging techniques like quantization and pruning to make it edge-friendly.

- For high-precision tasks where depth matters, consider ResNet-110 but be ready to implement significant optimizations.

Remember, lightweight ResNet variants are not one-size-fits-all. Your model choice should reflect the specific computational limitations and accuracy needs of your application.

By tweaking and refining standard ResNet architectures, developers are now able to run deep learning models on mobile and edge devices without compromising too much on performance. With advancements in model compression, SE blocks, and novel methods like GhostNet, the future of mobile AI looks promising!

Model Compression: A Crucial Step for Edge AI Success

One of the most critical techniques for deploying deep learning models on edge devices is model compression. Without it, even lightweight versions of ResNet may be too large to run efficiently on resource-constrained devices.

Model compression includes a variety of methods such as:

- Pruning: Removes redundant weights and neurons, shrinking the model while maintaining most of its original accuracy.

- Quantization: Converts model weights from high-precision formats like 32-bit floats to more compact representations, such as 8-bit integers, reducing the model’s size and memory requirements.

- Knowledge Distillation: A method in which a smaller student network is trained to mimic the outputs of a larger, more accurate teacher network.
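Of the three techniques above, distillation is the one that changes training rather than the finished model. A minimal sketch of the soft-target loss from Hinton et al.’s formulation: both networks’ logits are softened with a temperature T, and the student is penalized by the KL divergence from the teacher’s distribution, scaled by T². The logits and T = 4 are hypothetical; in practice this term is usually combined with ordinary cross-entropy on the true labels.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened distributions, times T^2."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s))
    return temperature ** 2 * kl

teacher = [2.0, 1.0, 0.1]  # hypothetical teacher logits for 3 classes
student = [1.5, 1.2, 0.3]  # hypothetical student logits
print(distillation_loss(student, teacher))
```

The temperature exposes the teacher’s relative confidence in the wrong classes, and the T² factor keeps this term’s gradients on a scale comparable to the hard-label loss as T changes.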

All these techniques are vital to making ResNet variants viable for real-world mobile AI applications.

ResNet on Mobile: Real-World Use Cases

Lightweight ResNet models are already being used in a variety of real-world mobile applications. From facial recognition systems in smartphones to object detection in augmented reality (AR) applications, ResNet’s streamlined versions are well suited to tasks requiring both speed and accuracy.

One area where ResNet shines is mobile healthcare. For example, edge devices that assist in medical diagnostics can use optimized ResNet variants to analyze images, helping healthcare professionals detect conditions like skin cancer or retinopathy quickly, right at the point of care.

These lightweight models are also making waves in smart city technologies. From traffic management systems to smart surveillance, edge devices equipped with lightweight CNNs like ResNet are helping cities become more efficient and secure.

The Future of Lightweight CNNs: What’s Next?

As edge computing grows, so does the need for more efficient models. One of the most promising future trends is the rise of neural architecture search (NAS), which automates the search for efficient model architectures instead of relying solely on hand design.

This could mean even more optimized ResNet variants, perfectly tailored for edge applications. Combining NAS with techniques like pruning and quantization could lead to even lighter models without sacrificing the accuracy mobile and edge applications require.

Another exciting trend is hardware-specific optimization. Accelerators such as Google’s Edge TPU and NVIDIA’s Jetson modules are built to speed up neural-network inference at the edge; the Edge TPU, for example, runs TensorFlow Lite models that have been quantized to 8-bit integers. Future lightweight ResNet variants could be even more efficient when designed with such specialized hardware in mind.

Conclusion: ResNet’s Future in Mobile and Edge AI

In the world of edge computing, balancing efficiency with performance is essential. Lightweight ResNet variants provide a powerful solution for mobile and edge devices that need to handle complex tasks without exhausting resources.

From ResNet-18 to ResNet-50, and with cutting-edge techniques like depthwise separable convolutions and quantization, developers can now run deep learning on many resource-limited devices. This shift is empowering a new generation of AI-powered applications, helping edge devices handle the challenges of real-time, on-device computing.

FAQs

What makes ResNet-18 ideal for mobile applications?

ResNet-18 is a compact member of the ResNet family, with just 18 weighted layers and about 11.7 million parameters, making it more efficient in terms of computation and memory usage. Its smaller size allows it to run on mobile devices without requiring excessive power, while still delivering solid accuracy in image recognition tasks.

How do lightweight ResNet variants achieve efficiency?

Lightweight ResNet variants reduce model size and computation by:

- Reducing the number of layers.

- Using bottleneck blocks and depthwise separable convolutions.

- Applying pruning and quantization techniques to minimize the model’s parameters and memory footprint.

What are depthwise separable convolutions, and how do they help?

Depthwise separable convolutions break down the standard convolution process into two steps: first, applying filters to individual channels (depthwise), and second, combining the results (pointwise). This drastically reduces the number of computations and is widely used in mobile-friendly architectures to improve efficiency.

How does pruning improve ResNet performance for edge devices?

Pruning removes unnecessary weights and neurons from a neural network, reducing its size without significantly affecting accuracy. This leads to a faster, more efficient model that is ideal for running on edge devices with limited resources.

What is quantization, and why is it important for mobile AI?

Quantization involves converting high-precision weights (such as 32-bit floating points) into lower-precision formats like 8-bit integers. This reduces the model’s memory requirements and speeds up inference, making it ideal for real-time applications on mobile and edge devices.

Can ResNet-50 be used on mobile devices?

Yes, but ResNet-50 typically needs model compression techniques like pruning and quantization to make it suitable for mobile and edge devices. These optimizations reduce the model’s size and resource consumption while keeping accuracy close to that of the full model.

What are Squeeze-and-Excitation (SE) blocks?

Squeeze-and-Excitation (SE) blocks improve the performance of CNNs by recalibrating the feature maps channel by channel. In lightweight ResNet models, SE blocks help the network focus on the most relevant features, improving accuracy while adding only a small amount of computational overhead.

How does GhostNet differ from ResNet?

GhostNet is a lightweight neural network architecture that uses fewer filters and computations than ResNet by generating “ghost” feature maps. Although it’s not part of the ResNet family, GhostNet-inspired optimizations can be applied to ResNet variants to further reduce computation in mobile applications.

What are the most common applications of lightweight ResNet variants?

Lightweight ResNet variants are widely used in:

- Real-time image recognition on mobile devices.

- Augmented Reality (AR) applications.

- Drones and autonomous vehicles for object detection and navigation.

- Healthcare AI, for mobile diagnostics and medical imaging.

- Smart city technologies, like traffic management and surveillance.

What are the limitations of lightweight ResNet variants?

The main limitation is that while lighter models reduce computation, they often sacrifice some accuracy. This trade-off may not be suitable for applications requiring high precision, like medical diagnostics or detailed object detection.

Are there alternatives to lightweight ResNet for mobile AI?

Yes, EfficientNet is a popular alternative that balances model depth, width, and resolution to deliver high accuracy with fewer parameters. MobileNet and ShuffleNet are also efficient architectures designed specifically for mobile and edge devices.

How can I decide which ResNet variant to use for my project?

Choosing the right variant depends on your accuracy requirements, computational constraints, and use case.

- For basic tasks, ResNet-18 or ResNet-32 is sufficient.

- For higher accuracy needs, consider an optimized version of ResNet-50.

- For applications requiring the deepest models, ResNet-110 (with optimizations) may be suitable.

Resources

- Research on ResNet Variants:

This research paper from Microsoft introduces the original ResNet architecture and outlines its improvements:

Deep Residual Learning for Image Recognition by He et al.

A must-read to understand how the ResNet architecture works and how it evolved.

- Model Compression Techniques:

To dive deeper into model optimization strategies like pruning and quantization, this Google AI blog provides excellent examples and insights:

Efficient Model Compression for Edge AI.

- Mobile AI Benchmarking:

The Papers with Code platform is a great resource to track the latest ResNet implementations and how they perform on mobile AI tasks:

Papers with Code – ResNet Benchmarks.