An In-Depth Comparative Analysis

LlamaRank: Revolutionizing AI-Driven Document Ranking

LlamaRank is an AI-driven reranking model developed by Salesforce AI Research, designed to enhance the accuracy and relevance of document retrieval in enterprise applications. Used as the reranking stage of a Retrieval-Augmented Generation (RAG) system, LlamaRank improves the precision of search results, which makes it useful for industries that manage large volumes of data, such as customer support, legal, and software development.

What Makes LlamaRank Stand Out?

LlamaRank is built upon Llama3-8B-Instruct, fine-tuned with data synthesized from larger models like Llama3-70B and human-annotated datasets. This training allows LlamaRank to excel in tasks that require high relevancy and accuracy, particularly in code search and complex document queries.

Key features of LlamaRank include:

- Improved Search Accuracy: LlamaRank enhances the relevancy of documents retrieved in the initial search phase by reordering them based on a more sophisticated analysis. This leads to more accurate and coherent responses in enterprise RAG systems.

- Scalability and Low Latency: The model is optimized for speed, capable of processing a large number of documents quickly without sacrificing accuracy. This makes it ideal for real-time applications where low latency is crucial.

- Support for Larger Document Sizes: LlamaRank can handle document sizes up to 8,000 tokens, double the capacity of many competing models, allowing for more comprehensive analysis of longer texts.
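To make the document-size limit concrete, here is a minimal sketch of how a long document might be split so that each piece fits within a token budget before it is sent to a reranker. It assumes whitespace-separated words as a rough stand-in for tokens; a real deployment would count tokens with the model's own tokenizer. The function name `split_to_budget` is hypothetical and not part of any LlamaRank API.

```python
def split_to_budget(text, max_tokens=8000):
    """Split text into chunks of at most max_tokens whitespace-separated words.

    Assumption: words approximate tokens. Real tokenizers usually produce
    more tokens than words, so a production budget should be more conservative.
    """
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [
        " ".join(words[start:start + max_tokens])
        for start in range(0, len(words), max_tokens)
    ]


if __name__ == "__main__":
    # A tiny budget is used here only to make the chunking visible.
    for chunk in split_to_budget("one two three four five", max_tokens=2):
        print(chunk)
```

A larger limit means fewer chunks per document, so the reranker sees more of each document's context in a single pass.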

Applications and Use Cases

LlamaRank’s ability to refine and prioritize search results makes it invaluable across various industries:

- Enterprise Search: In businesses with vast amounts of internal documents, LlamaRank helps in retrieving the most relevant information quickly, improving decision-making and operational efficiency.

- Customer Support: The model enhances the quality of responses in customer support systems by ensuring that only the most pertinent information is fed into the language model for generating replies.

- Code Search: For developers, LlamaRank offers superior performance in locating relevant code snippets or documentation within extensive codebases, significantly boosting productivity.

To fully appreciate how LlamaRank, GPT-4, Google Bard, and Claude stack up against each other, it’s essential to dive deeper into their architectural differences, benchmark performances, real-world applications, and specific use cases.

1. Architectural Differences and Training Approaches

LlamaRank is built on Meta's Llama family of models (LLaMA originally stood for Large Language Model Meta AI), whose openly available weights make them highly customizable. Starting from Llama3-8B-Instruct, the model is fine-tuned specifically for ranking tasks to optimize performance on information retrieval and content recommendation systems. Its architecture is designed to handle large-scale ranking problems efficiently, which suits specialized applications in domains such as search engines and personalized content feeds.

GPT-4, developed by OpenAI, is the latest iteration in the GPT series. It employs a transformer-based architecture with a massive number of parameters (reportedly in the hundreds of billions), allowing it to excel at a wide range of natural language processing (NLP) tasks. GPT-4’s architecture is designed for general-purpose use, meaning it can handle everything from casual conversation to complex problem-solving, creative writing, and even multimodal tasks (e.g., processing text alongside images).

Claude by Anthropic focuses heavily on safety and ethical considerations in AI deployment. Its architecture is optimized for maintaining nuanced and contextually appropriate conversations. Claude’s training incorporates Constitutional AI, which involves using principles to guide the AI’s behavior, ensuring that it is less likely to produce harmful outputs. This makes Claude particularly strong in scenarios where the ethical implications of AI use are paramount.

Google Bard, leveraging Google’s LaMDA and later PaLM models, is designed to interact seamlessly with Google’s ecosystem. Its architecture is built to optimize conversational capabilities, aiming to be highly interactive and engaging. Bard’s integration with Google’s search infrastructure provides it with up-to-date information retrieval capabilities, making it a promising tool for real-time information access and dialogue.

2. Benchmark Performances

When it comes to benchmark performance, these models demonstrate varying strengths depending on the task:

- LlamaRank is highly effective in ranking and retrieval tasks. It has shown superior performance in benchmarks that require precise ranking of search results, such as MS MARCO or TREC Deep Learning benchmarks. Its ability to fine-tune on specific datasets allows it to outperform more generalized models like GPT-4 in these niche areas.

- GPT-4 consistently ranks at the top across a broad spectrum of NLP benchmarks, including SuperGLUE, OpenAI’s own benchmark suite, and various creative tasks like text generation and summarization. Its versatility allows it to adapt well to both structured tasks and open-ended creative challenges, making it the most robust model overall in terms of performance.

- Claude performs exceptionally well in tasks that require ethical reasoning and bias mitigation. It has shown strong results in safety-focused benchmarks and tasks requiring sensitive content moderation. Additionally, its performance in natural language understanding tasks is solid, though it is often outperformed by GPT-4 in purely technical benchmarks.

- Bard has demonstrated competent performance in benchmarks related to conversational AI and real-time information retrieval. However, it has faced challenges in accuracy during its early stages, particularly in complex tasks that require deep reasoning or advanced knowledge synthesis. Despite these issues, Bard’s potential lies in its ability to integrate and process real-time data, which is an area where other models, particularly LlamaRank and GPT-4, are less specialized.

3. Real-World Applications

Each model has found its niche in real-world applications:

- LlamaRank excels in environments that require personalized content delivery, such as recommendation systems, e-commerce platforms, and search engines. Its fine-tuning capabilities allow it to be deployed in scenarios where the accuracy of ranking is critical, such as in legal document retrieval or academic research databases.

- GPT-4 is widely used in applications that require a high degree of linguistic flexibility and creativity. These include content generation for marketing, automated customer service, educational tools, and even virtual assistants in healthcare that require the ability to process and generate a wide range of text types. GPT-4’s versatility makes it a top choice for enterprises needing an all-encompassing solution.

- Claude is increasingly being adopted in sectors where ethical considerations are paramount, such as finance, law, and public policy. Its ability to provide balanced, fair, and safe outputs makes it suitable for applications in content moderation, sensitive communications, and AI governance tools.

- Google Bard is positioned to be a powerful tool in the realm of information retrieval, particularly where real-time data access is critical. Its integration with Google’s search capabilities makes it an excellent choice for news aggregation, digital assistants, and any application requiring up-to-the-minute information synthesis.

4. Specific Use Cases and Strengths

- LlamaRank is highly effective in academic research and legal tech due to its fine-tuning capabilities, which allow it to rank highly relevant documents with precision. It is also popular in e-commerce, where accurate product recommendations are crucial for sales conversions.

- GPT-4 finds its strength in creative industries such as content marketing, scriptwriting, and interactive storytelling. Its ability to generate human-like text with a high degree of creativity makes it a preferred tool for these applications.

- Claude is particularly strong in customer service applications that require ethical considerations, such as in healthcare or mental health counseling chatbots. Its design to minimize harmful outputs is a significant advantage in these sensitive areas.

- Bard is best suited for digital assistants and applications that require a high level of interaction with real-time data. Its conversational AI capabilities make it ideal for tasks where ongoing dialogue and information refinement are needed.

Reranking Enhances Search and RAG Systems

In a typical RAG pipeline, the process begins with an initial retrieval phase in which a large set of potentially relevant documents or data points is collected using fast but sometimes less accurate methods such as semantic search. This is where the reranker steps in:

- Initial Retrieval: The system retrieves a broad set of documents that might be relevant to the query.

- Reranking: The reranker then evaluates these documents based on more sophisticated criteria, such as context and nuanced relevance to the query, and reorders them accordingly.

- Final Selection: The top-ranked documents are then used as context for the AI model to generate the most accurate and contextually appropriate responses.

This process significantly boosts the accuracy of search results and minimizes the occurrence of hallucinations—incorrect or misleading information generated by AI models—by improving the relevance of the input data.
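The three stages above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not LlamaRank's implementation: the first stage counts shared terms, and the "reranker" is a simple cosine similarity over term counts standing in for a learned model. All function names (`retrieve`, `rerank_score`, `rerank`, `build_context`) are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenization; a stand-in for a real tokenizer."""
    return text.lower().split()


def retrieve(query, corpus, k=4):
    """Stage 1: fast, coarse retrieval by counting terms shared with the query."""
    query_terms = set(tokenize(query))
    scored = [(len(query_terms & set(tokenize(doc))), doc) for doc in corpus]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def rerank_score(query, doc):
    """Stage 2 scorer: cosine similarity over term counts.

    Assumption: a real reranker would replace this with a model call.
    """
    q, d = Counter(tokenize(query)), Counter(tokenize(doc))
    dot = sum(q[term] * d[term] for term in q)
    norm = math.sqrt(sum(v * v for v in q.values())) * math.sqrt(
        sum(v * v for v in d.values())
    )
    return dot / norm if norm else 0.0


def rerank(query, candidates, top_n=2):
    """Stage 2: reorder the candidates by the finer-grained score."""
    ranked = sorted(candidates, key=lambda doc: rerank_score(query, doc), reverse=True)
    return ranked[:top_n]


def build_context(query, corpus, k=4, top_n=2):
    """Stage 3: keep only the top-ranked documents as context for generation."""
    return rerank(query, retrieve(query, corpus, k=k), top_n=top_n)


if __name__ == "__main__":
    corpus = [
        "reset your password from the account settings page",
        "password policy requires twelve characters and a symbol",
        "shipping times vary by region",
        "to reset a forgotten password click the reset link on the login page",
    ]
    for doc in build_context("reset password", corpus):
        print(doc)
```

The design point is the split itself: the cheap first stage narrows the corpus, so the more expensive scorer only runs on a handful of candidates.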

Salesforce’s Evaluation of LlamaRank

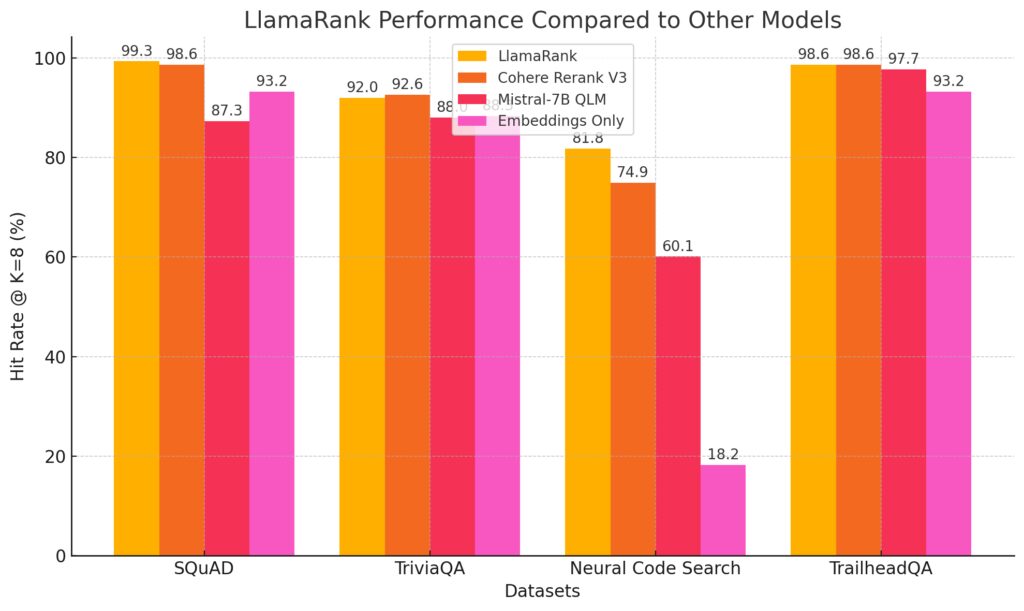

Salesforce evaluated LlamaRank, its state-of-the-art reranking model, using four public datasets to benchmark its performance:

- SQuAD: A question-answering dataset based on Wikipedia, widely used for evaluating the accuracy of AI models in understanding and generating human-like responses.

- TriviaQA: This dataset focuses on trivia-style questions sourced from general web data, testing the model’s ability to handle diverse and unstructured data.

- Neural Code Search (NCS): Curated by Facebook, this dataset is designed to evaluate the model’s performance in retrieving relevant code snippets, making it particularly useful for developers.

- TrailheadQA: A collection of questions and documents from Salesforce’s Trailhead platform, used to test the model’s ability to handle enterprise-specific queries.

Here’s a comparative table summarizing the key aspects of LlamaRank, GPT-4, Claude, and Google Bard:

| Aspect | LlamaRank | GPT-4 | Claude | Google Bard |
|---|---|---|---|---|
| Developer | Salesforce AI Research (built on Meta's Llama 3) | OpenAI | Anthropic | Google |
| Architecture | LLaMA-based, fine-tuned for ranking tasks | Transformer-based with hundreds of billions of parameters | Transformer-based, optimized for safety and ethics | LaMDA and PaLM-based, designed for conversational AI |
| Primary Use Cases | Search engines, content recommendation, legal and academic research | General-purpose NLP, creative writing, multimodal tasks | Ethical AI, customer service, content moderation | Real-time information retrieval, digital assistants |
| Strengths | High customization, open-source, excellent for ranking | Versatile, high fluency, and creativity, handles diverse tasks well | Emphasis on reducing bias, ethical reasoning, and privacy | Real-time data access, conversational abilities |
| Weaknesses | Niche focus, less versatile in general NLP tasks | High computational cost, requires significant resources | Limited in technical jargon understanding, still prone to errors | Accuracy issues in complex reasoning, still developing |
| Performance on Benchmarks | Strong in ranking-specific benchmarks (e.g., MS MARCO) | Top-tier in diverse benchmarks (e.g., SuperGLUE) | Excels in safety-focused and ethical reasoning benchmarks | Competent but behind in creative and complex reasoning tasks |
| Ease of Use | Highly customizable for developers, open-source | User-friendly API but complex for non-technical users | Straightforward integration, focus on ethical deployment | Easy integration within Google’s ecosystem, still evolving |
| Customization | High – Open-source and community-driven | Moderate – API-based customization | Moderate – Focused on ethical deployment | Low – Primarily designed for Google’s use cases |
| Cost | Varies, generally lower due to open-source nature | High – due to computational resources required | Cost-effective, cheaper than GPT-4 | Not widely available for custom deployment |
| Real-World Applications | Academic research, e-commerce, legal tech | Creative industries, education, healthcare | Customer service, sensitive communications | News aggregation, digital assistants |

In the field of AI-driven document reranking, several models have gained prominence due to their advanced capabilities in improving search accuracy and relevance. Here are some of the leading reranking models besides LlamaRank:

1. Cohere Rerank V3

- Overview: Developed by Cohere, this model is widely used for improving search relevance in various applications, including e-commerce, customer support, and enterprise search. It specializes in reordering search results to better match user queries based on semantic understanding.

- Strengths: Cohere Rerank V3 is known for its high accuracy across general document and code search tasks, making it a versatile choice for different industries.

2. Mistral-7B QLM

- Overview: Mistral-7B QLM is a reranking model designed to handle a variety of search tasks, from simple document retrieval to more complex query interpretations. It focuses on improving relevancy by leveraging large-scale language models.

- Strengths: This model is particularly effective in domains requiring nuanced understanding of the context, though it may not perform as well in highly specialized areas like code search.

3. Microsoft Turing-NLRv3

- Overview: Part of Microsoft’s Turing family of models, Turing-NLRv3 is a neural language reranking model that enhances search accuracy by reordering search results based on deep contextual understanding. It’s integrated into various Microsoft products, including Bing and Azure search services.

- Strengths: This model excels in natural language processing tasks, especially in large-scale search engines where user intent and context are critical for delivering relevant results.

4. OpenAI’s Rerank Models

- Overview: OpenAI has developed reranking models that are integrated into their broader AI offerings, such as their GPT series. These models are designed to refine search results, especially in complex query situations where simple keyword matching isn’t sufficient.

- Strengths: OpenAI’s rerank models are particularly strong in understanding and processing natural language queries, making them suitable for applications in customer support, knowledge management, and more.

5. Google BERT Re-Ranker

- Overview: Google’s BERT model, when fine-tuned as a reranker, significantly improves search relevance by understanding the context of queries and documents. It’s widely used in Google’s search engine to enhance the accuracy of search results.

- Strengths: BERT’s deep understanding of language nuances allows it to excel in tasks where the precise interpretation of user queries is essential.

6. Jina AI’s Finetuner

- Overview: Jina AI’s Finetuner is a framework that allows developers to fine-tune pre-trained models, including rerankers, to improve the relevancy of search results in specific contexts. It is particularly popular in domain-specific search applications.

- Strengths: The ability to fine-tune models on custom datasets makes Finetuner a powerful tool for organizations with specialized search needs.

The chart compares LlamaRank with other reranking models across different datasets, reporting the Hit Rate @ K=8 for each model. LlamaRank consistently achieves higher scores, particularly on the Neural Code Search and TrailheadQA datasets, which highlights its effectiveness in enterprise-specific applications and code search tasks.

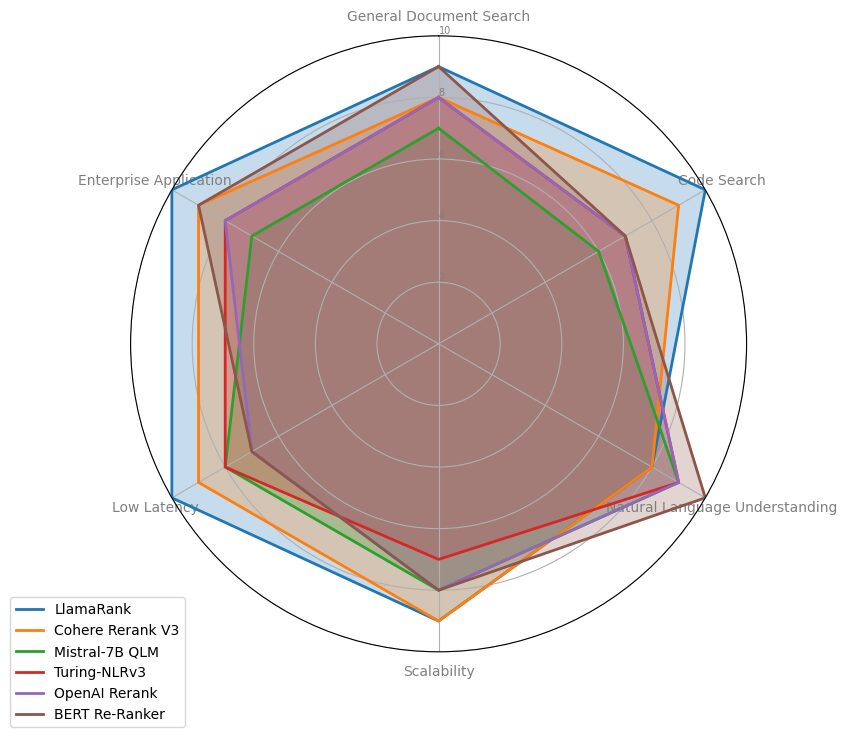
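For readers unfamiliar with the metric, the sketch below shows one common way to compute Hit Rate @ K: the fraction of queries for which at least one relevant document appears among the top K results. This definition is an assumption; the exact evaluation protocol Salesforce used is not described here. The function name and the input shapes are hypothetical.

```python
def hit_rate_at_k(ranked_results, relevant, k=8):
    """Fraction of queries with at least one relevant document in the top k.

    ranked_results: dict mapping query id -> list of doc ids, best first.
    relevant: dict mapping query id -> set of relevant doc ids.
    """
    if not ranked_results:
        return 0.0
    hits = 0
    for query_id, ranked_doc_ids in ranked_results.items():
        relevant_ids = relevant.get(query_id, set())
        if any(doc_id in relevant_ids for doc_id in ranked_doc_ids[:k]):
            hits += 1
    return hits / len(ranked_results)


if __name__ == "__main__":
    ranked = {"q1": ["d3", "d1", "d7"], "q2": ["d2", "d9"]}
    gold = {"q1": {"d1"}, "q2": {"d5"}}
    print(hit_rate_at_k(ranked, gold, k=8))
```

Because a query counts as a hit as soon as any relevant document appears in the top K, the metric rewards getting something useful into the context window rather than perfect ordering.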

Comparing the Strengths of Various Reranking Models

The radar chart compares the strengths of various reranking models across different dimensions, such as general document search, code search, natural language understanding, scalability, low latency, and enterprise application. This visualization highlights the diverse capabilities of each model, with LlamaRank leading in several key areas like code search and enterprise application.

Conclusion

In summary, LlamaRank stands out for specialized ranking tasks, particularly where customization and precision are paramount. GPT-4 offers unparalleled versatility across a wide array of applications, making it the best choice for most general-purpose AI needs. Claude is ideal for ethically sensitive applications, offering strong performance in scenarios where safety and bias mitigation are critical. Google Bard, while still developing, shows promise in real-time information retrieval and conversational interfaces.

The best model for any given task will depend on the specific requirements of the application—whether it be the need for creative output, ethical considerations, real-time data processing, or precise ranking. Each model continues to evolve, and staying informed about the latest developments will be key to leveraging their full potential.