Introduction to Sequence Processing

From predicting stock prices to translating languages, sequence processing is at the heart of many advanced AI applications. Sequence data, where the meaning or value of each element depends on the elements that came before it, requires models that can capture long-term dependencies.

Long Short-Term Memory (LSTM) networks have long been the go-to solution, offering a way to retain important information over long sequences while discarding irrelevant details.

However, they aren’t perfect. This is where attention mechanisms come into play, taking sequence processing to the next level.

But how exactly does combining LSTM with attention create a powerful synergy? Let’s break it down!

What are LSTM Networks?

LSTM networks are a special type of recurrent neural network (RNN) designed to remember information over long sequences. Traditional RNNs suffer from the vanishing gradient problem, which makes long-range patterns hard to learn. LSTM units mitigate this problem by using gates to control the flow of information, so that important data can be retained while irrelevant information is discarded.

Each LSTM cell contains three gates:

- The forget gate determines what part of the previous cell state should be discarded.

- The input gate decides which new information should be written to the cell state.

- The output gate controls what part of the cell state is exposed as the hidden state, i.e., the cell's output.
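
To make the three gates concrete, here is a minimal sketch of a single LSTM time step in plain Python. It assumes the simplest possible case, a scalar input and a hidden size of 1, and the parameter names (`w_f`, `u_f`, `b_f`, and so on) are hypothetical labels for the learned weights. Real implementations use vectors and weight matrices, but the gate equations have the same shape.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function used by the gates; maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x: float, h_prev: float, c_prev: float, p: dict) -> tuple:
    """One time step of a scalar LSTM cell (input size 1, hidden size 1).

    `p` holds hypothetical parameters: for the gates f, i, o and the candidate c
    there is an input weight w_*, a recurrent weight u_* and a bias b_*.
    """
    # Forget gate: fraction of the previous cell state to keep.
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])
    # Input gate: fraction of the candidate content to write into memory.
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])
    # Output gate: fraction of the squashed cell state to expose as output.
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])
    # Candidate content computed from the current input and previous output.
    c_tilde = math.tanh(p["w_c"] * x + p["u_c"] * h_prev + p["b_c"])

    c = f * c_prev + i * c_tilde  # new cell state (long-term memory)
    h = o * math.tanh(c)          # new hidden state (the cell's output)
    return h, c


def run_lstm(xs, p):
    """Feed a sequence through the cell, starting from a zero state."""
    h, c = 0.0, 0.0
    outputs = []
    for x in xs:
        h, c = lstm_step(x, h, c, p)
        outputs.append(h)
    return outputs, c


# Example: every parameter set to 0.5 (arbitrary values for illustration).
params = {f"{k}_{g}": 0.5 for k in ("w", "u", "b") for g in ("f", "i", "o", "c")}
hidden_states, final_cell = run_lstm([1.0, -0.5, 2.0], params)

# "Remember" setting: forget gate near 1, input gate near 0, so memory is preserved.
keep = {name: 0.0 for name in params}
keep.update(b_f=50.0, b_i=-50.0, w_c=1.0)
_, c_kept = lstm_step(3.0, 0.0, 0.8, keep)
```

With `b_f` set high and `b_i` set low, the forget gate stays near 1 and the input gate near 0, so the cell state passes through almost unchanged even though the input is large. This is the mechanism a trained LSTM can use to carry information across many steps.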

Thanks to these gates, LSTM models can capture long-term dependencies, making them a preferred choice for time series, language modeling, and other sequential tasks.

Limitations of Traditional LSTM Networks

Despite their success, LSTMs have limitations. One major issue is that they still struggle with very long sequences. The longer the sequence, the more difficult it becomes for the model to retain distant dependencies, even with its gating mechanism. In tasks like machine translation or document summarization, where not every word is equally important, a standard LSTM encoder must still compress the entire input into a fixed-size state, so less significant parts of the sequence compete with the important ones for the same limited memory.

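Furthermore, LSTMs process sequences one step at a time. Information from a distant position reaches the current step only after passing through every intermediate step, and the model has no direct way to look at the most relevant elements of the sequence at once. This makes it harder to capture important context from distant parts of the input, and it prevents the time steps from being computed in parallel.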
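
A quick calculation shows why distant dependencies fade. Along the cell-state path, a value already stored in the cell is multiplied by every later forget-gate activation, so the share that survives is their product. The sketch below assumes, purely for illustration, a forget gate that stays at 0.9 for 50 steps, and it ignores the indirect path through the hidden state.

```python
import math


def retained_fraction(forget_gates):
    """Share of an earlier cell-state value that survives along the cell-state path.

    Since c_t = f_t * c_{t-1} + i_t * c_tilde_t, a value already in the cell is
    scaled by every subsequent forget-gate activation f_t. The indirect path
    through the hidden state is ignored here.
    """
    return math.prod(forget_gates)


# Hypothetical forget-gate activations: 0.9 at each of 50 steps.
print(retained_fraction([0.9] * 50))  # about 0.0052
```

Only about half a percent of the original value remains. A trained LSTM can learn forget gates much closer to 1, which is exactly what the gating is for, but any sustained leak compounds over long sequences.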

Introduction to Attention Mechanisms

Attention mechanisms address the limitations of LSTMs by allowing the model to focus on specific parts of the input sequence—regardless of their distance from the current position. The core idea is to assign different weights (importance) to each element of the input sequence, letting the model prioritize more relevant information while downplaying less crucial parts.

In simple terms, attention allows the model to “pay attention” to specific parts of the sequence, rather than treating all inputs equally. This is especially useful in tasks where certain elements of the sequence play a more critical role in the output, such as focusing on key words in a sentence during translation.

How Attention Enhances LSTM Performance

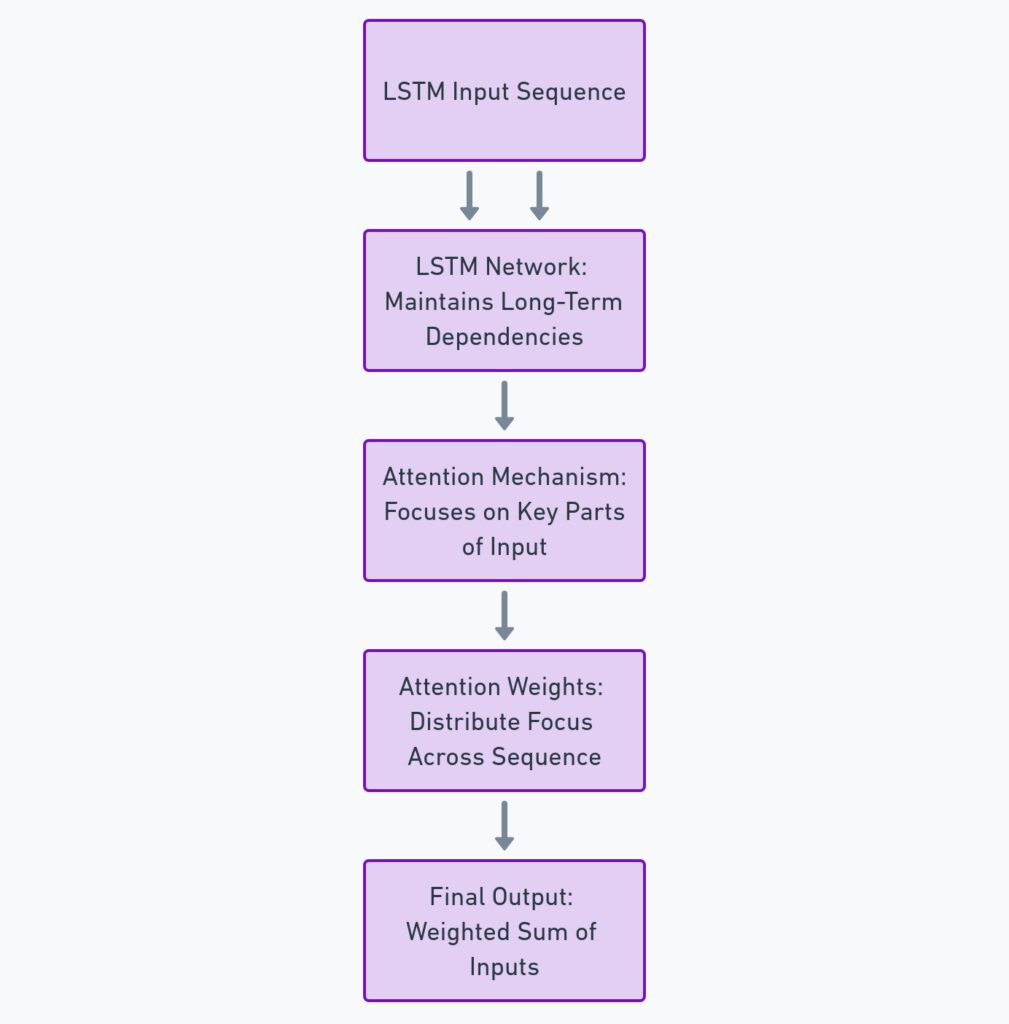

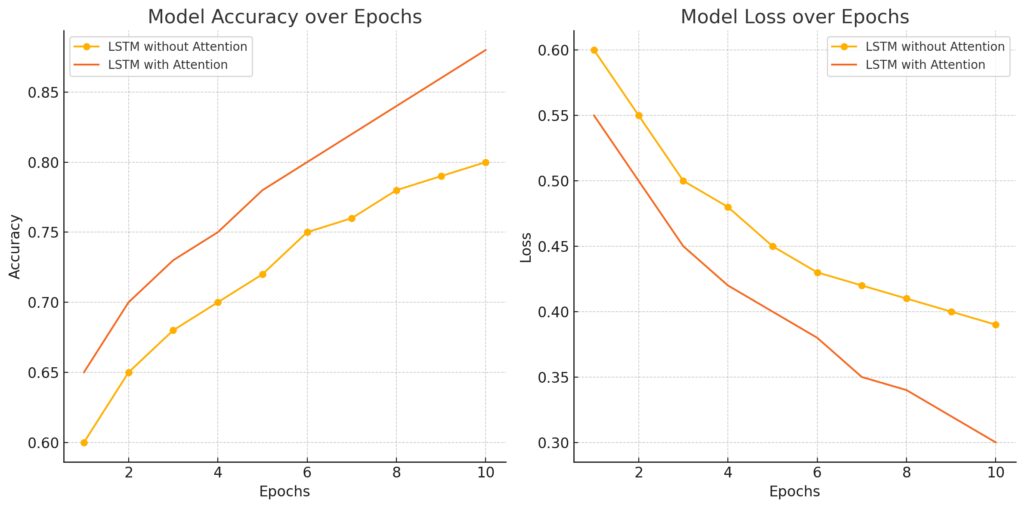

By combining attention mechanisms with LSTM networks, we get the best of both worlds. While LSTM networks maintain memory over long sequences, attention mechanisms allow the model to focus on important parts of the sequence at each step.

This combination often improves results substantially on tasks that require handling long dependencies or extracting meaningful information from complex data.

For instance, in neural machine translation, attention allows the model to focus on relevant words in the source sentence for each word in the translated sentence, improving translation quality. Similarly, in speech recognition, attention helps LSTM models align each output word or character with the relevant stretch of audio, making them more accurate in predicting the output.

Breaking Down the Attention Mechanism

So, how does attention actually work? Let’s break it down:

- Score Calculation: First, the model computes a score for each element in the input sequence (in an encoder-decoder model, each encoder hidden state) to determine its relevance to the current output being generated. These scores can be calculated using various techniques, such as dot-product, general (multiplicative), or additive attention.

- Softmax Function: Next, the scores are passed through a softmax function, which converts them into a probability distribution: non-negative weights that sum to 1. These weights represent how much attention should be given to each part of the input sequence.

- Weighted Sum: The model then calculates a weighted sum of the input sequence elements, where each element is weighted by its attention weight. This weighted sum is the context vector that the model uses, together with its current state, to generate the output at the current time step.
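
The three steps above can be sketched in plain Python as follows. This is a minimal illustration, assuming dot-product scoring, with the decoder's current hidden state as the query and the encoder's hidden states as the elements that are scored and summed. The toy vectors are made up, and practical implementations would use a tensor library rather than lists.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    """Convert raw scores into non-negative weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(query, encoder_states):
    """Score, normalize, and combine encoder states for one output step."""
    # 1. Score calculation: relevance of each encoder state to the query.
    scores = [dot(query, h) for h in encoder_states]
    # 2. Softmax: turn the scores into attention weights.
    weights = softmax(scores)
    # 3. Weighted sum: blend the encoder states into one context vector.
    dim = len(encoder_states[0])
    context = [sum(w * h[d] for w, h in zip(weights, encoder_states)) for d in range(dim)]
    return context, weights


# Hypothetical toy values: three 2-dimensional encoder states and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
context, weights = dot_product_attention(decoder_state, encoder_states)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

Here the first and third encoder states match the query equally well, so they share most of the weight and dominate the context vector, while the second state contributes very little.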

Through this process, the model can selectively focus on specific parts of the sequence at each time step, rather than relying solely on the sequential memory of the LSTM.

The Power of Combining LSTM and Attention

When LSTM networks and attention mechanisms are combined, they form a highly effective duo for sequence processing tasks. While LSTMs maintain a running summary of the context as they move through a sequence, attention adds a layer of precision by allowing the model to selectively focus on relevant parts of the input. This often leads to more accurate processing, especially in tasks with complex, long-range dependencies.

For example, in sequence-to-sequence models, such as language translation, LSTM captures the overall structure of a sentence, while attention highlights the most important words, ensuring better translation quality. Similarly, for time series prediction, attention can help the model focus on past data points that are more predictive of future values, improving forecasting accuracy.

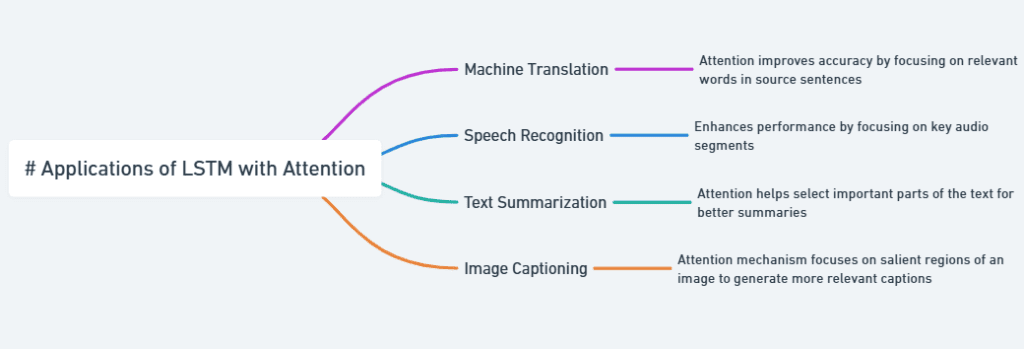

Real-World Applications of LSTM with Attention

The combination of LSTM and attention is widely used in various real-world applications, including:

- Machine Translation: In neural translation systems, combining LSTM with attention allows the model to focus on relevant words in the source language while generating each word in the target language, leading to more contextually accurate translations. Google's Neural Machine Translation system (GNMT), deployed in Google Translate in 2016, was an LSTM encoder-decoder with attention.

- Speech Recognition: For tasks like speech-to-text, attention mechanisms help LSTM models focus on the audio frames relevant to each output word or character, improving recognition accuracy.

- Text Summarization: LSTM with attention enables models to generate concise summaries by focusing on the most critical information from the input text, rather than summarizing everything equally.

- Image Captioning: In image captioning, attention helps LSTMs focus on specific regions of an image while generating descriptive text, resulting in more relevant and detailed captions.

LSTM with Attention for Natural Language Processing (NLP)

In NLP, the combination of LSTM and attention has substantially improved tasks like text classification, sentiment analysis, and language modeling. By attending to key phrases or words, the model is able to better understand context and nuance. For instance, in sentiment analysis, the attention mechanism allows the model to focus on emotionally charged words, leading to more accurate predictions of sentiment.
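
A common way to apply this to classification is attention pooling: score every LSTM output against a learned vector, softmax the scores, and use the weighted sum as the sentence representation. The sketch below assumes hand-picked, hypothetical hidden states and parameters (`u`, `w_out`, `b_out`) in place of a trained model, so the numbers illustrate the mechanics only.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states, u):
    """Collapse per-word LSTM outputs into one sentence vector.

    `u` is a hypothetical learned "what matters" vector; words whose hidden
    states align with it receive larger weights.
    """
    scores = [sum(a * b for a, b in zip(h, u)) for h in hidden_states]
    weights = softmax(scores)
    dim = len(hidden_states[0])
    pooled = [sum(w * h[d] for w, h in zip(weights, hidden_states)) for d in range(dim)]
    return pooled, weights


def predict_positive(pooled, w_out, b_out):
    """Logistic-regression head: probability that the sentiment is positive."""
    z = sum(a * b for a, b in zip(pooled, w_out)) + b_out
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical LSTM outputs for the tokens of "the movie was wonderful".
tokens = ["the", "movie", "was", "wonderful"]
hidden_states = [[0.1, 0.0], [0.2, 0.1], [0.0, 0.1], [0.9, 0.8]]
u = [2.0, 2.0]

pooled, weights = attention_pool(hidden_states, u)
prob = predict_positive(pooled, w_out=[3.0, 3.0], b_out=-1.0)
most_attended = tokens[weights.index(max(weights))]
print(most_attended, round(prob, 3))
```

Because "wonderful" has the hidden state most aligned with `u`, it receives the largest weight, and the `weights` list shows directly which word drove the prediction.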

In question-answering systems, attention mechanisms are particularly helpful, as they direct the model’s focus to the relevant parts of a document or paragraph to generate the correct answer.

The Role of Attention in Machine Translation

Attention has played a central role in the progress of neural machine translation. Traditional LSTM encoder-decoder models often struggle to translate long or complex sentences accurately because the encoder must squeeze the entire source sentence into a single fixed-length vector. By adding attention, the model no longer has to rely on that compressed summary alone.

Instead, attention directs the model to focus on specific parts of the source sentence when generating the corresponding part of the translated sentence. This results in better alignment between the source and target languages, making translations more precise and fluent, even for longer texts.

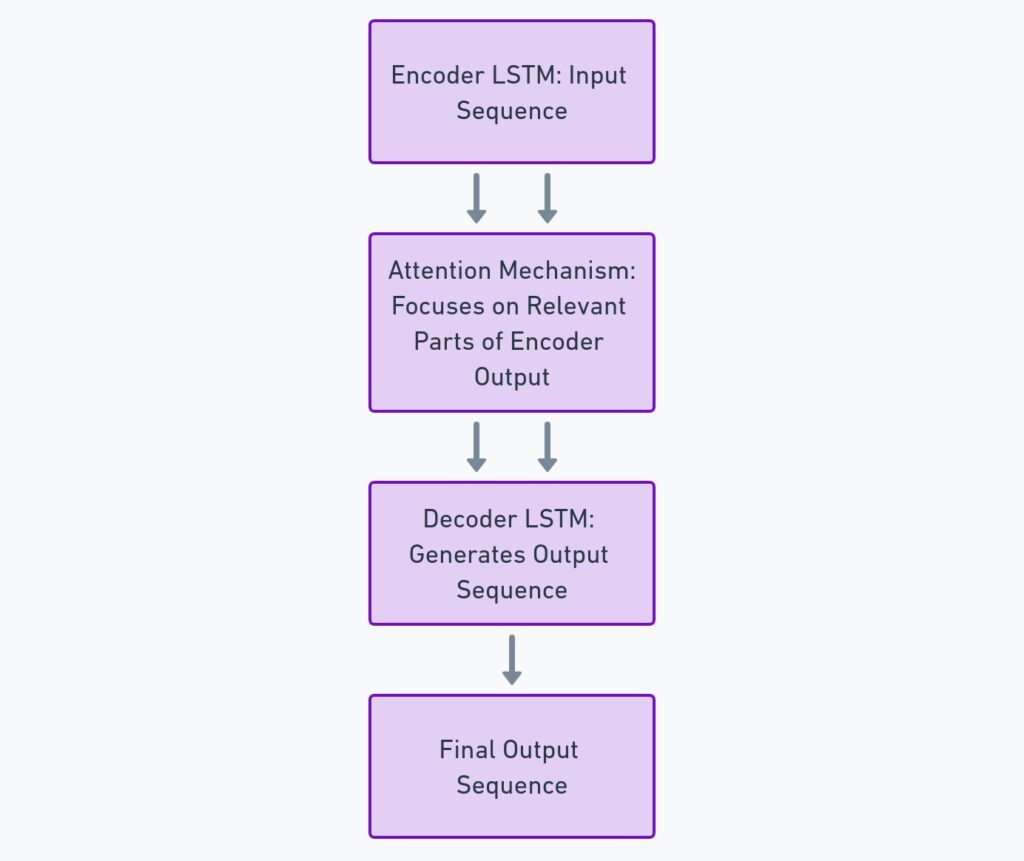

Sequence-to-Sequence Models with LSTM and Attention

Sequence-to-sequence (Seq2Seq) models are a common architecture used in tasks like language translation, chatbots, and speech recognition. In these models, an encoder (often an LSTM) processes the input sequence and produces a fixed-length context vector, while a decoder (often also an LSTM) generates the output sequence.

When combined with attention, the decoder doesn’t have to rely solely on the fixed context vector from the encoder. Instead, the attention mechanism allows the decoder to look back at the entire input sequence at each step, dynamically adjusting which parts of the input are most relevant for generating the next token in the output.
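
The key difference from a plain Seq2Seq model is that the context is recomputed inside the decoding loop. In this minimal sketch, `toy_decoder_update` is a hypothetical stand-in for a decoder LSTM cell, the target embeddings are made-up vectors for the previously emitted tokens, and each step scores the encoder states with the decoder state from before the update, as in Bahdanau-style attention, though with dot-product scoring for brevity.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Dot-product attention: returns (context vector, weights)."""
    weights = softmax([sum(q * h for q, h in zip(query, hs)) for hs in encoder_states])
    dim = len(encoder_states[0])
    context = [sum(w * hs[d] for w, hs in zip(weights, encoder_states)) for d in range(dim)]
    return context, weights


def toy_decoder_update(state, context, target_embedding):
    """Hypothetical stand-in for a decoder LSTM cell: mixes its inputs with tanh."""
    return [math.tanh(s + c + e) for s, c, e in zip(state, context, target_embedding)]


def decode(encoder_states, target_embeddings):
    """Run the decoder, recomputing attention over ALL encoder states at every step."""
    state = encoder_states[-1]  # initialize from the encoder's final state
    history = []
    for emb in target_embeddings:
        context, weights = attend(state, encoder_states)  # fresh context each step
        state = toy_decoder_update(state, context, emb)
        history.append(weights)
    return history


encoder_states = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
target_embeddings = [[0.0, 2.0], [-2.0, 0.0], [2.0, 0.0]]
history = decode(encoder_states, target_embeddings)
for step, w in enumerate(history):
    print(step, [round(x, 2) for x in w])
```

Without attention, the decoder would see only `encoder_states[-1]` at every step; here the attention weights change from step to step as the decoder state evolves.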

This results in a more powerful Seq2Seq architecture that can handle longer sequences and complex dependencies with greater accuracy.

LSTM and Attention in Image Captioning

Image captioning is another powerful application where LSTM with attention shines. In this task, the model must generate a descriptive caption for an image. The challenge is that not every part of the image is equally important for describing what’s happening. This is where the attention mechanism comes in.

A convolutional neural network (CNN) typically first extracts feature vectors for a grid of image regions. An LSTM decoder then generates the caption one word at a time, and the attention mechanism focuses on specific regions of the image while each word is generated. For instance, if the caption is “A dog playing with a ball,” the model would focus on the dog while generating the word “dog” and then shift attention to the ball when generating “ball.” This approach results in captions that are more contextually relevant and detailed.
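
Spatial attention works the same way as attention over words once the image regions are flattened into a list. The sketch below assumes a hypothetical 2x2 grid of region features (in practice these would come from a CNN) and a decoder state vector, and returns the weights reshaped into the grid so they could be drawn as a heat map over the image.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def spatial_attention(feature_grid, decoder_state):
    """Attend over a grid of image-region features.

    feature_grid[r][c] is the feature vector of one region. Returns the context
    vector and the attention weights reshaped back into the grid layout.
    """
    rows, cols = len(feature_grid), len(feature_grid[0])
    regions = [feature_grid[r][c] for r in range(rows) for c in range(cols)]  # row-major
    weights = softmax([sum(a * b for a, b in zip(decoder_state, v)) for v in regions])
    dim = len(regions[0])
    context = [sum(w * v[d] for w, v in zip(weights, regions)) for d in range(dim)]
    weight_grid = [weights[r * cols:(r + 1) * cols] for r in range(rows)]
    return context, weight_grid


# Hypothetical 2-dimensional features: roughly "dog-like" and "ball-like" components.
grid = [
    [[3.0, 0.0], [0.1, 0.1]],  # top-left: dog, top-right: background
    [[0.0, 0.2], [0.0, 3.0]],  # bottom-left: grass, bottom-right: ball
]
_, dog_weights = spatial_attention(grid, [1.0, 0.0])   # state while emitting "dog"
_, ball_weights = spatial_attention(grid, [0.0, 1.0])  # state while emitting "ball"
print(dog_weights)
print(ball_weights)
```

A decoder state resembling "dog" puts most of the weight on the top-left region and one resembling "ball" on the bottom-right, mirroring the shift of focus described above.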

Key Benefits of Using Attention in Sequence Processing

The combination of LSTM and attention brings several key benefits to sequence processing:

- Improved Long-Range Dependency Handling: While LSTMs are good at remembering past information, attention mechanisms allow the model to focus on important elements regardless of their position in the sequence. This is particularly helpful for long sequences where key details might be far apart.

- Dynamic Focus: Attention gives the model the flexibility to dynamically adjust which parts of the input it focuses on, making it more adaptive. This leads to better performance in tasks like translation, where some words are more relevant than others.

- Parallel Processing: Within each decoding step, the attention scores for all input positions are independent of one another and can be computed in parallel, typically as a single matrix operation. Architectures that drop recurrence and rely on attention alone, such as Transformers, go further and process entire sequences in parallel, whereas an LSTM, with or without attention, must still process its input one step at a time.

- Enhanced Interpretability: One often-overlooked benefit of attention is that it can make models more interpretable. By visualizing the attention weights, we can see which parts of the input the model is focusing on, offering some insight into how the model is making its predictions, although a high attention weight does not always mean that part of the input caused the prediction.
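
Reading an attention matrix is straightforward once the weights are collected. The helpers below are a minimal sketch: `weights[t][s]` is assumed to hold the weight that target step t gave to source position s, and the example weights for translating "the black cat" into "le chat noir" are invented for illustration, not taken from a real model.

```python
def strongest_alignments(weights, source_tokens, target_tokens):
    """For each target token, return the source token with the largest attention weight."""
    pairs = []
    for t, row in enumerate(weights):
        s = max(range(len(row)), key=row.__getitem__)  # index of the largest weight
        pairs.append((target_tokens[t], source_tokens[s], row[s]))
    return pairs


def print_attention_table(weights, source_tokens, target_tokens):
    """Print the weight matrix as a text table (rows: target, columns: source)."""
    width = max(len(tok) for tok in source_tokens + target_tokens) + 2
    print(" " * width + "".join(tok.rjust(width) for tok in source_tokens))
    for tok, row in zip(target_tokens, weights):
        print(tok.ljust(width) + "".join(f"{w:.2f}".rjust(width) for w in row))


# Invented weights, for illustration only: rows are target steps, columns source positions.
source = ["the", "black", "cat"]
target = ["le", "chat", "noir"]
weights = [
    [0.8, 0.1, 0.1],
    [0.1, 0.2, 0.7],
    [0.1, 0.8, 0.1],
]
print_attention_table(weights, source, target)
pairs = strongest_alignments(weights, source, target)
```

In this example "chat" aligns with "cat" and "noir" with "black", the kind of word reordering that attention visualizations make easy to spot.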

Challenges in Integrating Attention with LSTM

Despite the benefits, integrating attention mechanisms with LSTM isn’t without its challenges. One issue is that attention mechanisms can introduce computational overhead. Calculating attention weights for every element of the input sequence, at every output step, adds cost that grows with the product of the input and output lengths, which can slow down training and inference for very long sequences.
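
A rough operation count shows where the overhead comes from. It assumes dot-product scoring, a source sequence of length S, an output sequence of length T, and hidden size d (symbols introduced here for illustration):

```latex
\begin{aligned}
\text{scores:}\quad & T \cdot S \text{ dot products of length } d \;\Rightarrow\; T S d \text{ multiply-adds}\\
\text{weighted sums:}\quad & T \text{ sums of } S \text{ vectors of size } d \;\Rightarrow\; T S d \text{ multiply-adds}\\
\text{total:}\quad & \approx 2\,T S d = O(T S d)\\
\text{example } (S = T = 100,\ d = 512):\quad & 2 \cdot 100 \cdot 100 \cdot 512 = 10{,}240{,}000
\end{aligned}
```

The softmax adds only O(TS) work, so the cost is dominated by the two TSd terms; doubling both sequence lengths quadruples it.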

Another challenge is that tuning the model to balance LSTM’s memory capabilities with attention’s focusing ability requires careful design. If the attention mechanism isn’t well-calibrated, it may overlook important parts of the sequence or over-focus on irrelevant details.

Lastly, some models may become overly reliant on attention and fail to leverage the LSTM’s internal memory effectively. This can lead to problems in tasks that require strong sequential understanding in addition to focusing on specific parts of the input.

Popular Frameworks for Implementing LSTM with Attention

Several popular frameworks and libraries make it easy to implement LSTM with attention for sequence processing tasks:

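- TensorFlow: TensorFlow offers built-in support for LSTM and attention mechanisms through its Keras API, including the `tf.keras.layers.LSTM`, `tf.keras.layers.Attention` (dot-product, Luong-style), and `tf.keras.layers.AdditiveAttention` (Bahdanau-style) layers. You can easily define LSTM models with attention layers for NLP, machine translation, or image captioning tasks.

- PyTorch: PyTorch provides `torch.nn.LSTM` and `torch.nn.MultiheadAttention`, along with highly flexible tools for building custom attention layers and integrating them with LSTM models. Its dynamic computation graph makes it ideal for experimenting with new architectures.

- Hugging Face Transformers: This library is built around transformer models, which rely entirely on attention, so it is less suited to LSTM-based architectures. Its pretrained attention-based models are still a useful reference point, and custom LSTM-with-attention models built in PyTorch or TensorFlow can be shared through the Hugging Face Hub.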

Future Directions: LSTM, Attention, and Beyond

As sequence processing continues to evolve, the combination of LSTM and attention remains a powerful tool. However, Transformers, which rely on attention alone and drop recurrence entirely, have outperformed LSTM models on many tasks. Transformers capture long-range dependencies more directly and allow far more parallel processing during training, making them faster and more efficient on large datasets.
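
The core operation of a Transformer is scaled dot-product self-attention, in which every position attends to every other position of the same sequence. This minimal sketch simplifies by using the inputs directly as queries, keys, and values; real Transformers first apply learned projection matrices and add positional encodings, since attention alone ignores word order. The point it illustrates is that no hidden state is carried from one position to the next, which is what makes the computation parallelizable.

```python
import math


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs):
    """Scaled dot-product self-attention using xs as queries, keys and values."""
    d = len(xs[0])
    scale = math.sqrt(d)  # scaling by 1/sqrt(d) keeps dot products from growing with d
    outputs, all_weights = [], []
    # Each iteration depends only on xs, not on earlier iterations, so all
    # positions could be computed in parallel (unlike an LSTM's recurrence).
    for q in xs:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / scale for k in xs])
        outputs.append([sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)])
        all_weights.append(weights)
    return outputs, all_weights


# Three hypothetical 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attn = self_attention(tokens)
```

Each row of `attn` is one position's distribution over the whole sequence; stacking the rows gives the full attention matrix that a Transformer computes in a single matrix multiplication.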

That said, LSTM with attention still has its place, especially in tasks where sequential memory is important, such as speech recognition, time series forecasting, and certain NLP tasks. We can expect continued innovation in hybrid models that combine the best features of LSTM, attention, and transformer architectures.

These hybrid approaches will likely lead to even more powerful tools for processing complex sequences in the years to come, bridging the gap between sequential memory and dynamic focus!

FAQs

What are LSTM networks?

LSTM (Long Short-Term Memory) networks are a type of recurrent neural network (RNN) that can remember information over long sequences of data. They mitigate the limitations of traditional RNNs by using gates (forget, input, and output) to control what information is remembered and what is discarded. LSTMs are widely used in time series forecasting, language modeling, and tasks that require understanding long-term dependencies in sequential data.

What are the limitations of traditional LSTM models?

While LSTMs are effective at remembering information over longer sequences, they still struggle with very long dependencies. As sequences get longer, the model may have trouble focusing on distant but important elements of the input. Additionally, LSTMs process data sequentially, which makes them slow on long sequences (the steps cannot be computed in parallel) and can cause them to miss important contextual clues that are not adjacent to the current input.

What is the role of attention mechanisms in sequence processing?

Attention mechanisms allow models to focus on specific parts of the input sequence that are more relevant to the task at hand. By assigning different weights to different parts of the sequence, attention mechanisms help the model selectively process important information, improving performance in tasks like machine translation, speech recognition, and image captioning. This dynamic focus on relevant information is particularly useful for handling long sequences, where not all elements contribute equally to the output.

How do attention mechanisms enhance LSTM performance?

When combined with LSTMs, attention mechanisms help address the limitations of LSTM models. LSTMs provide the memory to track long sequences, while attention mechanisms add the ability to focus on key elements within the sequence, even if they are far apart. This synergy improves the model’s ability to capture relevant information without relying solely on the sequential memory of LSTM, leading to better performance in tasks like translation and time series forecasting.

How does the attention mechanism work?

The attention mechanism operates in three main steps:

- Score Calculation: A score is assigned to each element in the input sequence based on its relevance to the current output being generated. This score can be computed using methods like dot-product, general (multiplicative), or additive attention.

- Softmax Function: The scores are converted into probabilities using a softmax function, determining the importance of each element.

- Weighted Sum: The model computes a weighted sum of the input elements, with higher-weighted elements contributing more to the final output. This allows the model to focus on important parts of the sequence at each time step.
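
The three scoring options mentioned above differ only in how they compare a decoder state s with an encoder state h. The sketch below follows the standard forms: dot product s·h, general (multiplicative) s^T W h, and additive v^T tanh(W1 h + W2 s). The matrices `W`, `W1`, `W2` and the vector `v` stand in for learned parameters, and the example values are arbitrary.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [dot(row, x) for row in W]


def score_dot(s, h):
    """Dot-product score: s . h (s and h must have the same size)."""
    return dot(s, h)


def score_general(s, h, W):
    """Multiplicative ("general") score: s^T W h, with W of shape len(s) x len(h)."""
    return dot(s, matvec(W, h))


def score_additive(s, h, W1, W2, v):
    """Additive score: v^T tanh(W1 h + W2 s), a one-hidden-layer feed-forward net."""
    hidden = [math.tanh(a + b) for a, b in zip(matvec(W1, h), matvec(W2, s))]
    return dot(v, hidden)


s = [1.0, 2.0]   # hypothetical decoder state
h = [3.0, -1.0]  # hypothetical encoder state
identity = [[1.0, 0.0], [0.0, 1.0]]
print(score_dot(s, h), score_general(s, h, identity))
print(score_additive(s, h, identity, identity, [1.0, 1.0]))
```

With `W` set to the identity matrix, the general score reduces to the dot product. Both the general and additive forms allow s and h to have different sizes, and the additive form also applies a nonlinearity before scoring. Each function returns one scalar; computing it for every encoder state and applying a softmax yields the attention weights.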

What are the real-world applications of LSTM with attention?

The combination of LSTM and attention is widely used in several real-world applications, including:

- Machine Translation: Attention mechanisms in translation systems allow the model to focus on specific words in the source sentence while generating the translation, improving the alignment between languages.

- Speech Recognition: In speech-to-text tasks, attention mechanisms help the model focus on critical sound patterns or words, improving recognition accuracy.

- Text Summarization: LSTM with attention enables the model to generate concise summaries by focusing on the most important parts of the text.

- Image Captioning: In image captioning, attention helps the model focus on specific regions of the image while generating descriptive text, improving the quality of the generated captions.

Why is attention critical for machine translation tasks?

In machine translation, each word in the target sentence may depend on different parts of the source sentence. LSTMs alone struggle with this because they process input sequentially and may lose track of important details. Attention mechanisms allow the model to focus on the relevant words or phrases in the source sentence for each word it generates in the target sentence, leading to more accurate and contextually relevant translations.

How do sequence-to-sequence models benefit from attention?

Sequence-to-sequence (Seq2Seq) models, which are used for tasks like translation, speech recognition, and text generation, benefit greatly from attention mechanisms. In Seq2Seq models, an encoder LSTM processes the input sequence into a context vector, and a decoder LSTM generates the output sequence. Attention enhances this by allowing the decoder to focus on specific parts of the input sequence at each time step, rather than relying solely on the fixed context vector from the encoder.

What are the benefits of combining LSTM with attention mechanisms?

- Better handling of long-range dependencies: Attention mechanisms allow the model to focus on important elements of the sequence, regardless of their position, improving performance in tasks that require understanding long-range dependencies.

- Dynamic focus: Attention allows the model to dynamically adjust its focus on different parts of the input sequence, leading to more accurate predictions.

- Improved parallel processing: Attention can sometimes enable more parallel processing, especially in transformer-based models, making the computation more efficient.

- Increased interpretability: Attention mechanisms provide insight into which parts of the input sequence the model is focusing on, making it easier to understand and interpret model predictions.

What challenges are associated with integrating attention mechanisms with LSTMs?

The main challenges include increased computational complexity. Calculating attention weights for every part of the sequence can add computational overhead, especially for long sequences. Additionally, balancing attention with LSTM’s memory capabilities can be tricky, as a poorly tuned attention mechanism may cause the model to focus on the wrong parts of the sequence. Over-reliance on attention can also cause issues, where the model disregards the benefits of LSTM’s sequential memory in favor of attending to more obvious details.

What tools are available for implementing LSTM with attention?

Several popular frameworks and libraries support the implementation of LSTM with attention, including:

- TensorFlow: TensorFlow’s Keras API makes it easy to implement LSTM networks with attention mechanisms for a variety of tasks like NLP, machine translation, and time series analysis.

- PyTorch: PyTorch offers a flexible environment for creating custom attention layers and integrating them with LSTM models. It’s especially useful for experimenting with new architectures.

- Hugging Face: Hugging Face’s model hub provides ready-to-use pretrained attention-based models, mostly transformers, for tasks like language translation and text summarization.

What does the future hold for LSTM and attention mechanisms?

As transformer models and self-attention mechanisms gain popularity, they are outperforming traditional LSTMs in many sequence-based tasks. However, LSTM with attention remains relevant for tasks where sequential memory is important, such as speech recognition, time series forecasting, and certain NLP tasks. In the future, we may see more hybrid models that combine the strengths of LSTM, attention, and transformers to handle complex sequence processing tasks more effectively.

Resources for Learning and Implementing LSTM with Attention Mechanisms

- Understanding LSTM Networks by Christopher Olah

A highly detailed blog post that breaks down the workings of LSTM networks, with easy-to-understand explanations and visual aids. Perfect for grasping the core concepts behind LSTM before adding attention mechanisms.

- Attention Mechanism Explained

This article introduces the attention mechanism and its applications in deep learning, particularly in natural language processing and sequence modeling tasks like machine translation.

- Seq2Seq with Attention Model in TensorFlow

A comprehensive guide to building sequence-to-sequence models with LSTM and attention in TensorFlow. This tutorial walks through code implementations and explains the inner workings of attention mechanisms in machine translation tasks.

- PyTorch’s Seq2Seq Translation with Attention Tutorial

This tutorial covers building a Seq2Seq model with attention in PyTorch. It provides detailed code examples for implementing LSTM and attention for machine translation.

- Attention Is All You Need

This is the groundbreaking paper that introduced the transformer architecture, which heavily relies on attention mechanisms. While this paper focuses on self-attention rather than LSTM-based models, it is a must-read for understanding the power of attention in modern AI.

- Machine Learning Mastery’s LSTM with Attention Tutorial

This article provides a beginner-friendly tutorial on how to implement attention mechanisms on top of LSTM networks for NLP tasks using Keras, a high-level deep learning API in Python.

- Seq2Seq with Attention: A Practical Implementation

A step-by-step practical implementation of sequence-to-sequence models with attention using LSTM. It explores how to structure the model for tasks like translation and text generation.

- Neural Machine Translation by Jointly Learning to Align and Translate

This paper introduced the use of attention mechanisms in neural machine translation (NMT). It explains how attention enables the model to align words in source and target languages.

- Keras Documentation: Seq2Seq and Attention Layers

The official Keras documentation provides examples of sequence-to-sequence models with attention mechanisms, offering a straightforward way to implement these models in TensorFlow.

- Advanced NLP with Hugging Face

Hugging Face provides state-of-the-art pre-trained models, including those using attention mechanisms. This resource is useful for applying LSTM with attention to text-based tasks like translation, summarization, and classification.