Understanding Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are built for sequential data: they read a sequence one step at a time and carry a hidden state forward from each step to the next.

They’re powerful because that hidden state lets information be shared across different steps in a sequence, which makes them a good fit for time-series data, natural language processing, and speech recognition.

But plain RNNs have their limitations: they struggle to learn dependencies that span many time steps. That’s where LSTM (Long Short-Term Memory) and GRU (Gated Recurrent Unit) come in. They’re special types of RNNs designed to overcome these issues. Both LSTMs and GRUs handle long sequences far better than plain RNNs, but which one is right for your project?

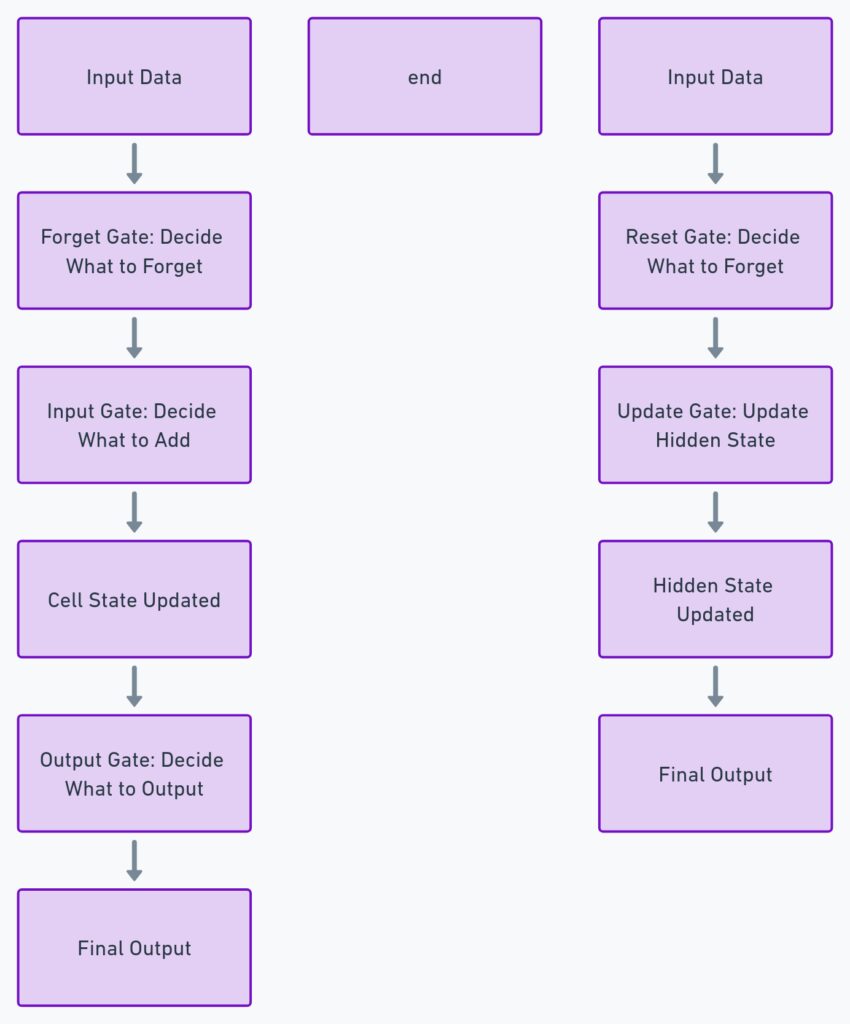

What is LSTM and How Does It Work?

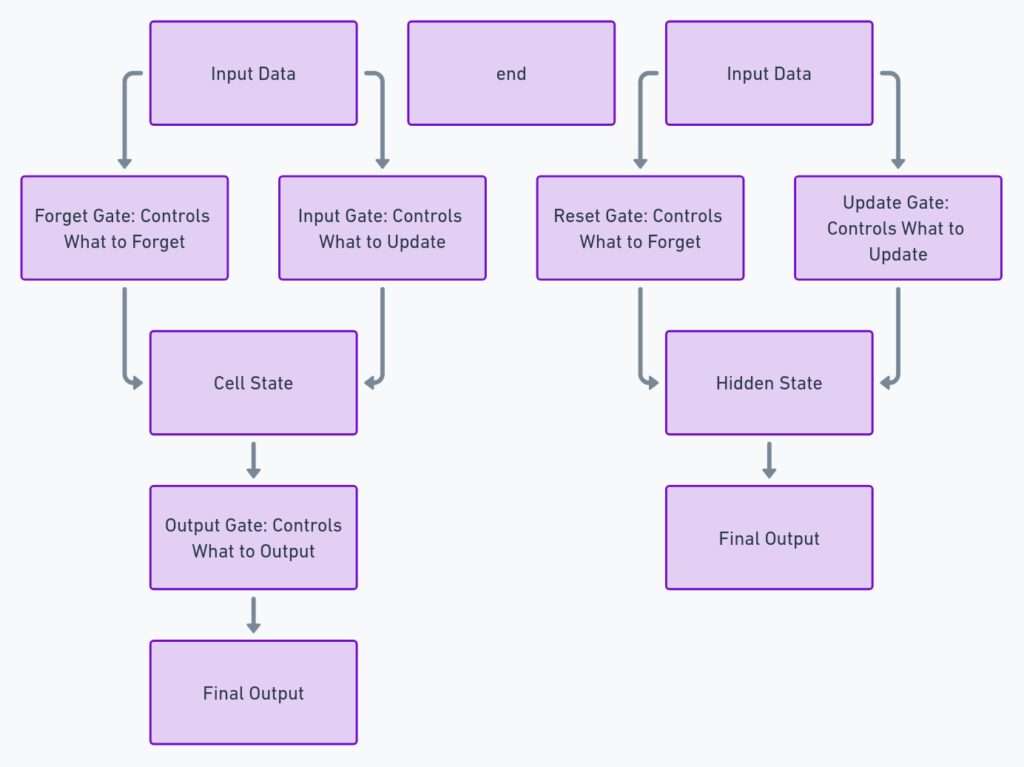

LSTMs were introduced to address the infamous vanishing gradient problem that traditional RNNs face. They can retain information for a long time by keeping a separate cell state and using “gates” to control what flows into and out of it. The three types of gates in an LSTM are:

- Input gate: Decides how much new information to write into the cell state.

- Forget gate: Decides how much of the existing cell state to discard.

- Output gate: Decides how much of the cell state to expose as the hidden state, which is the output at that step.

These gates make LSTMs incredibly flexible. They can store and retrieve information over long sequences, which is why they’re popular for tasks like speech recognition and machine translation.
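To make the three gates concrete, here is a minimal sketch of a single LSTM step, assuming one scalar input and one hidden unit so that every weight is a plain number. The function name `lstm_step` and the parameter keys (`wi`, `ui`, `bi`, and so on) are hypothetical names chosen for this illustration; real libraries use weight matrices and their own naming.

```python
import math


def sigmoid(x):
    """Squash x into (0, 1); used for every gate."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step for a scalar input and a single hidden unit.

    p is a dict of scalar weights (hypothetical names): for each block
    g in {i, f, o, c}, p["w" + g] scales the input, p["u" + g] scales
    the previous hidden state, and p["b" + g] is the bias.
    """
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])  # input gate
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])  # forget gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])  # output gate
    c_tilde = math.tanh(p["wc"] * x + p["uc"] * h_prev + p["bc"])  # candidate
    c = f * c_prev + i * c_tilde  # keep part of the old state, add part of the new
    h = o * math.tanh(c)          # expose part of the cell state as output
    return h, c


# Run a short sequence through the cell with arbitrary illustrative weights.
params = {k: 0.5 for k in
          ["wi", "ui", "bi", "wf", "uf", "bf", "wo", "uo", "bo", "wc", "uc", "bc"]}
h, c = 0.0, 0.0
for x in [1.0, -0.5, 0.25]:
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:+.2f}  h={h:+.4f}  c={c:+.4f}")
```

Pushing the forget-gate bias high and the input-gate bias low makes the cell copy its previous state almost unchanged, which is the mechanism behind the long-term memory described above.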

GRU: A Simpler Alternative?

GRUs are a streamlined version of LSTMs. While LSTMs have three gates, GRUs only use two—an update gate and a reset gate. This simplification makes them computationally cheaper and faster to train.

Despite being simpler, GRUs perform similarly to LSTMs on many tasks. For short to medium-length sequences, GRUs are often preferred because they reduce the amount of memory and processing power needed.
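As a rough illustration of the two-gate design, the sketch below implements one GRU step for a scalar input and a single hidden unit. The name `gru_step` and the parameter keys are hypothetical, and the update rule follows one common textbook convention; some libraries swap the roles of `z` and `1 - z`.

```python
import math


def sigmoid(x):
    """Squash x into (0, 1); used for both gates."""
    return 1.0 / (1.0 + math.exp(-x))


def gru_step(x, h_prev, p):
    """One GRU step for a scalar input and a single hidden unit.

    p is a dict of scalar weights (hypothetical names): "z" is the update
    gate, "r" the reset gate, and "h" the candidate state.
    """
    z = sigmoid(p["wz"] * x + p["uz"] * h_prev + p["bz"])  # update gate
    r = sigmoid(p["wr"] * x + p["ur"] * h_prev + p["br"])  # reset gate
    # The reset gate decides how much of the old state feeds the candidate.
    h_tilde = math.tanh(p["wh"] * x + p["uh"] * (r * h_prev) + p["bh"])
    # The update gate blends the old state with the candidate.
    return (1.0 - z) * h_prev + z * h_tilde


# Run a short sequence through the cell with arbitrary illustrative weights.
params = {k: 0.5 for k in
          ["wz", "uz", "bz", "wr", "ur", "br", "wh", "uh", "bh"]}
h = 0.0
for x in [1.0, -0.5, 0.25]:
    h = gru_step(x, h, params)
    print(f"x={x:+.2f}  h={h:+.4f}")
```

Note that there is no separate cell state here: the hidden state does both jobs, which is where much of the saving comes from.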

Key Differences Between LSTM and GRU

While both models belong to the same family, their subtle differences can have a significant impact on your project.

- Complexity

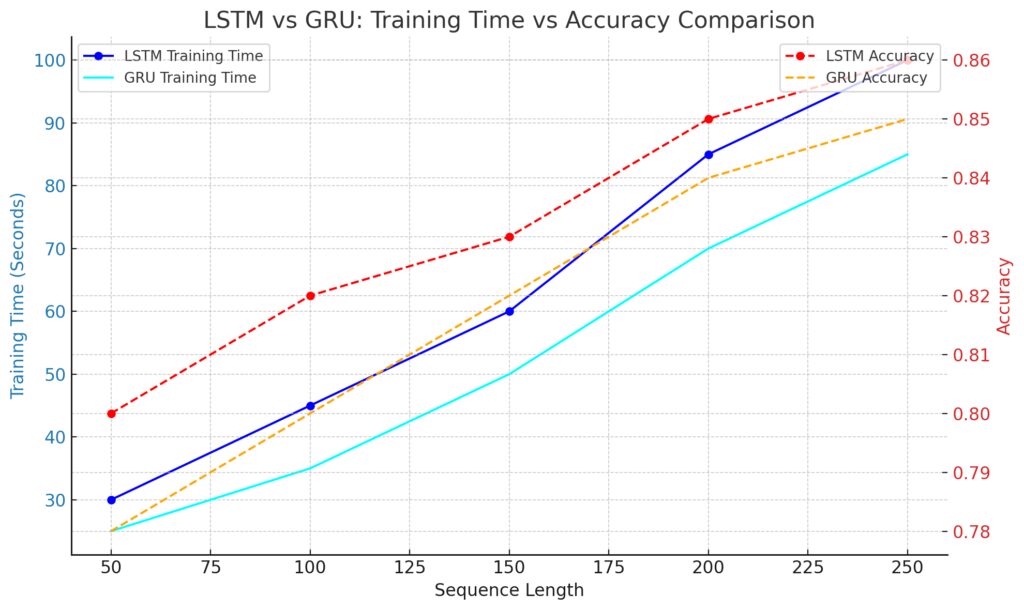

LSTMs are more complex because they have more gates to control. If your problem requires remembering information for extended periods, this complexity can be a strength. GRUs, on the other hand, are simpler and faster, which is ideal for real-time applications or when computational resources are limited.

- Training Speed

GRUs are faster to train than LSTMs because of their simpler architecture. If your project involves a lot of data and quick iterations, GRUs could save you valuable time without compromising accuracy.

- Performance on Long Sequences

LSTMs tend to outperform GRUs on longer sequences due to their ability to “forget” less important information effectively. If you’re working on a problem involving lengthy dependencies (like language modeling or DNA sequencing), LSTMs might be the better choice.

- Memory Efficiency

GRUs consume less memory than LSTMs. When your project has hardware limitations (like mobile AI), GRUs offer a lighter, faster solution without sacrificing much performance.

- Data-Specific Strengths

For tasks that need to capture subtle nuances in long-term dependencies (e.g., video analysis), LSTMs might give better results. However, for speech analysis or tasks requiring shorter sequences, GRUs are more than sufficient.
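The complexity and memory differences above can be put into numbers with a back-of-the-envelope parameter count. This sketch assumes the standard single-layer formulation with one bias vector per block: an LSTM has four weight blocks (three gates plus the candidate), a GRU has three (two gates plus the candidate). The function names are hypothetical, and real frameworks may report slightly different totals, for example when they keep two bias vectors per block.

```python
def gated_rnn_params(input_size, hidden_size, num_blocks):
    """Parameters in one recurrent layer made of `num_blocks` weight blocks.

    Each block has an input matrix (hidden x input), a recurrent matrix
    (hidden x hidden), and one bias vector (hidden).
    """
    per_block = hidden_size * (input_size + hidden_size) + hidden_size
    return num_blocks * per_block


def lstm_params(input_size, hidden_size):
    return gated_rnn_params(input_size, hidden_size, num_blocks=4)


def gru_params(input_size, hidden_size):
    return gated_rnn_params(input_size, hidden_size, num_blocks=3)


lstm = lstm_params(128, 256)
gru = gru_params(128, 256)
print(f"LSTM: {lstm:,} parameters")
print(f"GRU:  {gru:,} parameters ({gru / lstm:.0%} of the LSTM)")
```

Under these assumptions a GRU layer always has three quarters of the parameters of an LSTM layer of the same size, which is a useful rule of thumb when estimating memory and compute.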

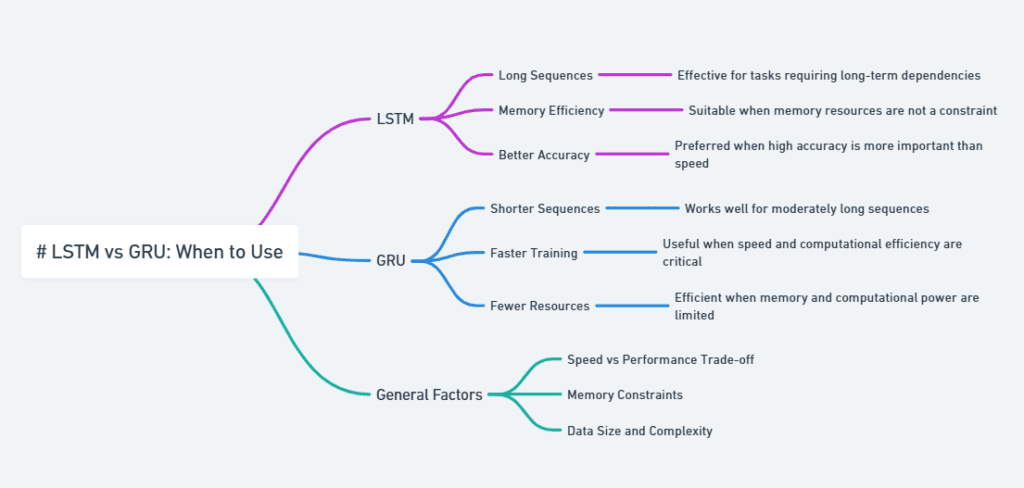

Which One Should You Choose?

The right choice between LSTM and GRU depends on your project’s specific needs. Let’s break it down.

Speed vs. Accuracy Trade-Off

If your AI project demands high-speed processing, GRUs might be your go-to. They are quicker to train and use fewer resources than LSTMs, making them perfect for projects with limited computing power or time constraints. Consider GRUs for tasks like chatbots or speech recognition where near-real-time responses are needed.

But if you’re handling complex tasks that require understanding long sequences of data with intricate dependencies, LSTMs will likely give you better results. Think about language translation or video captioning, where the context stretches far back in time.

How Much Data Do You Have?

In situations where there’s a lot of data to process, LSTM models are typically better equipped to handle the extra complexity. They’re designed to keep track of long-term patterns, which often show up when you’re working with massive datasets. Natural language processing (NLP) tasks, especially those involving documents or books, may benefit from the deeper understanding that LSTMs can offer.

On the flip side, if you have less data or if the sequence lengths are relatively short, GRUs should get the job done faster. For example, when handling stock market predictions over short time periods, GRUs might edge out LSTMs simply because they don’t need as much computational power to deliver similar accuracy.

Applications Where LSTMs Shine

LSTMs truly shine when dealing with sequential data where context from far back in the timeline matters. In machine translation, for example, the meaning of a word often depends on words that appeared much earlier in the sentence. LSTMs do well here because their separate cell state can hold onto that long-term information.

Another area where LSTMs have been widely used is speech recognition. Speech involves subtle nuances over time, like tone and pauses, so a model that tracks longer context can be better at understanding the spoken words.

GRU’s Strengths in Real-Time Applications

When you need real-time results, GRUs are typically the better option. Their lighter structure means less computation per time step, both during training and at inference, making them a popular choice for real-time signal processing or video surveillance.

For developers who need quick iterations and feedback during development, GRUs’ faster training speed offers an undeniable advantage. Mobile apps and embedded systems also benefit from GRUs’ efficient use of resources.

Final Thoughts Before You Decide

While LSTMs and GRUs both serve as powerful tools for AI and machine learning, the decision comes down to your specific project requirements. Do you need speed, simplicity, or more nuanced long-term understanding? Take into account the data complexity, project size, and real-time processing needs when making your choice.

Ultimately, you might find that experimenting with both architectures is the best way to determine which model suits your use case.

FAQs: LSTM vs. GRU

1. What is the main difference between LSTM and GRU?

The primary difference lies in their architecture. LSTMs (Long Short-Term Memory) use three gates—input, forget, and output gates—to manage the flow of information. GRUs (Gated Recurrent Units), on the other hand, have a simpler structure with only two gates—update and reset gates. This makes GRUs faster to train but LSTMs better suited for handling more complex tasks requiring long-term dependencies.

2. Which is faster: LSTM or GRU?

GRU is faster than LSTM due to its simpler design. It has fewer parameters to train, which leads to quicker training times and lower computational cost. This makes GRU a better choice for real-time applications and projects with limited computational resources.

3. When should I choose LSTM over GRU?

You should choose LSTM over GRU when your project involves long sequences or when you need to capture long-term dependencies in your data. Applications like language translation, speech recognition, and video analysis often benefit from LSTM’s more complex architecture, which can retain information over longer periods.

4. Can GRU handle long-term dependencies like LSTM?

Yes, GRUs can handle long-term dependencies, but LSTMs are generally more effective in retaining very distant information. For projects with longer sequences or where subtle changes over time are critical, LSTM may perform better. However, for many tasks involving shorter or medium-length sequences, GRUs perform just as well.

5. Which model is better for small datasets: LSTM or GRU?

For smaller datasets or shorter sequences, GRUs are often more efficient because they train faster and require fewer resources. If your project doesn’t demand extensive context or long-term memory, GRUs offer a simpler, quicker alternative without sacrificing much performance.

6. Can I use both LSTM and GRU in the same project?

Yes, you can use both in the same project. Some researchers and developers opt to experiment with both LSTMs and GRUs to compare their performance or even combine them in a hybrid model. Ultimately, the decision depends on your data and performance requirements.

7. Which model is more memory-efficient: LSTM or GRU?

GRU is generally more memory-efficient because it has a simpler structure with fewer gates. This leads to a reduced number of parameters, making it lighter in terms of memory usage. For projects with memory constraints, such as those on mobile or embedded systems, GRUs are often the preferred option.

8. Are there tasks where LSTM significantly outperforms GRU?

Yes, tasks involving long-term dependencies and complex sequences like language modeling, machine translation, or complex time-series forecasting often see better results with LSTMs. This is because LSTMs are designed to manage and remember information over longer time spans more effectively than GRUs.

9. Which model should I use for time-series data?

Both LSTM and GRU perform well on time-series data. If the time-series is long and requires remembering data from far back in time, LSTM might be the better choice. For shorter time-series or when computational efficiency is important, GRU offers faster training times with comparable accuracy.

10. How do LSTM and GRU handle the vanishing gradient problem?

Both LSTM and GRU are designed to handle the vanishing gradient problem that traditional RNNs face. They use gates (input, forget, and output in LSTMs; update and reset in GRUs) to control the flow of information, allowing them to retain important information over longer sequences and making the gradient far less likely to vanish during training.
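One way to see why gating helps is to write out the state updates in standard textbook notation. The symbols here are assumptions for illustration and do not appear elsewhere in this article: $f_t$ and $i_t$ are the LSTM forget and input gates, $z_t$ is the GRU update gate, a tilde marks a candidate state, and $\odot$ is elementwise multiplication.

```latex
\begin{aligned}
\text{LSTM:}\quad & c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
\text{GRU:}\quad & h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t \\
\text{Direct path:}\quad & \frac{\partial c_t}{\partial c_{t-1}} \approx \operatorname{diag}(f_t)
\end{aligned}
```

In both cases the previous state enters through an addition scaled by a gate rather than through a repeated matrix multiplication and squashing function. When the gate stays close to 1, the gradient along this path shrinks slowly. The last line is an approximation because it ignores the indirect effect of earlier states on the gates themselves.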

11. Are there any alternatives to LSTM and GRU for sequence modeling?

Yes, Transformers have become a popular alternative to LSTM and GRU models, especially for tasks involving long sequences. Transformers don’t process a sequence one step at a time; instead they use attention mechanisms to relate all positions to each other at once, which makes training highly parallelizable for large-scale tasks in natural language processing (NLP) and beyond.
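For a feel of what an attention mechanism computes, here is a minimal sketch of scaled dot-product attention for a single query, written with plain Python lists. The function names are hypothetical, and a real Transformer adds learned projections, multiple heads, and positional information on top of this.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Scaled dot-product attention for one query vector.

    Every position is scored against the query directly, so no state has
    to be carried step by step through the sequence.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
              for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values))
              for j in range(dim)]
    return output, weights


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
output, weights = attention([1.0, 0.0], keys, values)
print("weights:", [round(w, 3) for w in weights])
print("output: ", [round(o, 3) for o in output])
```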

12. What’s better for real-time applications: LSTM or GRU?

For real-time applications like chatbots, speech recognition, or signal processing, GRU is generally the better choice because it is faster and more computationally efficient. Its simpler design allows for quicker responses, which is crucial in real-time scenarios.

13. Do LSTMs and GRUs work with other neural networks?

Yes, both LSTMs and GRUs can be combined with other neural network architectures. They are often stacked with fully connected layers, Convolutional Neural Networks (CNNs), or Attention mechanisms to enhance their performance in tasks like image captioning or audio-to-text transcription. You can use LSTMs or GRUs as part of more complex models, depending on the needs of your AI project.

14. Which model is more suitable for mobile or embedded systems?

GRUs are generally more suitable for mobile or embedded systems due to their smaller size and faster processing times. Because GRUs use fewer parameters, they consume less memory and computational power, which makes them ideal for applications running on resource-constrained devices like smartphones or IoT devices.

15. Is there a significant difference in accuracy between LSTM and GRU?

In many cases, LSTM and GRU deliver comparable results in terms of accuracy, especially on tasks involving short-to-medium sequences. However, LSTMs might outperform GRUs slightly on longer and more complex sequences, especially in tasks requiring precise long-term memory like video analysis or long-text generation.

16. Can I adjust hyperparameters in both LSTM and GRU?

Yes, both LSTM and GRU models allow you to fine-tune hyperparameters such as learning rate, batch size, sequence length, number of hidden units, and the number of layers. Adjusting these parameters helps tailor the model’s performance to your specific use case, improving both training speed and accuracy.
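One simple way to organize this tuning is a small search grid in which the cell type is just another hyperparameter. The sketch below only enumerates configurations; the keys and values in `search_space` are hypothetical examples, and training a model for each configuration is left to whichever framework you use.

```python
from itertools import product

# Hypothetical search space; adjust the names and values to your project.
search_space = {
    "cell_type": ["lstm", "gru"],
    "hidden_units": [64, 128],
    "num_layers": [1, 2],
    "learning_rate": [1e-3, 3e-4],
}


def iter_configs(space):
    """Yield one dict per combination of hyperparameter values."""
    keys = list(space)
    for combo in product(*(space[k] for k in keys)):
        yield dict(zip(keys, combo))


configs = list(iter_configs(search_space))
print(f"{len(configs)} configurations to try")
for config in configs[:3]:
    print(config)
```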

17. How does the architecture impact overfitting?

Due to their more complex architecture, LSTMs might be more prone to overfitting on smaller datasets if not carefully tuned. GRUs, being simpler, are less likely to overfit. To prevent overfitting with both models, you can use techniques like dropout, L2 regularization, and early stopping during training.
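Of the techniques just listed, early stopping is the easiest to sketch without a framework. The function below is a minimal illustration that takes a list of per-epoch validation losses and reports where training would stop; the name `early_stopping_epoch` and the `patience` and `min_delta` parameters are assumptions modeled on common practice, not a specific library's API.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) as 0-based indices.

    Training stops once the validation loss has failed to improve by more
    than `min_delta` for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            bad_epochs = 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch, best_epoch
    # Ran out of epochs without triggering the stop condition.
    return len(val_losses) - 1, best_epoch


losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.73, 0.6]
stop, best = early_stopping_epoch(losses, patience=3)
print(f"stop after epoch {stop}, restore weights from epoch {best}")
```

In practice you would keep a copy of the model weights from the best epoch and restore them when the stop condition fires.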

18. What role do activation functions play in LSTM and GRU?

Both LSTMs and GRUs use sigmoid and tanh activation functions to control information flow. The sigmoid function outputs a value between 0 and 1, so it acts as a soft switch that determines how much to “forget” or “update” in each gate, while the tanh function squashes candidate values into the range -1 to 1. GRUs use the same activation functions as LSTMs; they compute faster because they have fewer gates and no separate cell state, not because the activations themselves are simpler.

19. Are LSTMs or GRUs used in natural language processing (NLP) tasks?

Both LSTMs and GRUs are popular in NLP tasks such as text generation, sentiment analysis, and machine translation. LSTMs were traditionally favored due to their ability to handle longer sentences and complex language dependencies. However, GRUs have become increasingly popular in NLP because of their faster performance, particularly in real-time applications like chatbots or speech-to-text systems.

20. How does the vanishing gradient problem affect RNNs, and how do LSTMs/GRUs solve it?

The vanishing gradient problem occurs in traditional RNNs when gradients become too small to significantly update the model weights, which leads to poor learning over long sequences. LSTMs and GRUs address this with gated, additive state updates that allow gradients to flow more freely over long sequences, preserving important information and making vanishing gradients much less severe during backpropagation.
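A small numeric experiment shows the scale of the effect. This is a toy sketch under simplifying assumptions: a scalar tanh RNN with a fixed recurrent weight and a fixed pre-activation at every step, compared with an LSTM-style cell-state path whose forget gate is held constant. The function names and the specific values are chosen purely for illustration.

```python
import math


def vanilla_rnn_gradient_factor(w, pre_activations):
    """Product of dh_t/dh_{t-1} = w * (1 - tanh(a_t)^2) over all steps."""
    factor = 1.0
    for a in pre_activations:
        factor *= w * (1.0 - math.tanh(a) ** 2)
    return factor


def gated_path_gradient_factor(forget_gates):
    """Product of forget-gate values along the direct cell-state path."""
    factor = 1.0
    for f in forget_gates:
        factor *= f
    return factor


steps = 50
rnn_factor = vanilla_rnn_gradient_factor(w=0.9, pre_activations=[0.5] * steps)
gated_factor = gated_path_gradient_factor([0.98] * steps)
print(f"vanilla RNN after {steps} steps: {rnn_factor:.2e}")
print(f"gated path after {steps} steps:  {gated_factor:.2e}")
```

The gated path only helps when the network learns to keep its forget gate open; with small gate values the gradient still shrinks quickly, which is why these models reduce the problem rather than eliminate it.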

21. Which model requires more computational resources: LSTM or GRU?

LSTMs require more computational resources than GRUs because of their complex architecture with more gates and parameters. This makes LSTMs slower to train and more memory-intensive. If you’re working with large datasets and extensive sequences, LSTMs can require more GPU or TPU resources for efficient training.

22. Is it possible to switch between LSTM and GRU easily?

Yes, it’s relatively easy to switch between LSTM and GRU models because they share a similar role in processing sequential data. The structure of your neural network doesn’t need drastic changes—most frameworks like TensorFlow and PyTorch allow you to replace an LSTM layer with a GRU layer with minimal adjustments.

23. What are the limitations of LSTM and GRU models?

While LSTMs and GRUs are excellent for processing sequential data, they can struggle with very long sequences, especially compared to newer architectures like Transformers. Transformers use attention mechanisms to focus on relevant parts of the sequence, avoiding the sequential limitations that LSTMs and GRUs face. Training times for LSTMs can also be longer, which may become a bottleneck for large-scale projects.

24. Are there any pre-trained models using LSTM or GRU?

Yes, you can find pre-trained models that leverage both LSTM and GRU architectures in various machine learning libraries, such as Hugging Face’s Transformers, TensorFlow, and Keras, though most recent pre-trained models in these ecosystems are Transformer-based. These pre-trained models are available for common tasks like sentiment analysis, text classification, and speech recognition, helping you to save time on training from scratch.

25. How do I decide between LSTM, GRU, and Transformer models?

The choice between LSTM, GRU, and Transformer models depends on your project requirements. If you’re working with long sequences and need to capture long-term dependencies, LSTMs are a solid choice. If speed and resource efficiency are priorities, especially for shorter sequences, GRUs might be better. For tasks involving very long sequences and more complex attention, Transformers could outperform both LSTM and GRU.

Resources

An Empirical Evaluation of GRUs vs. LSTMs (arXiv Preprint)

This paper presents a detailed empirical study comparing GRUs and LSTMs across multiple datasets and tasks. It offers valuable insights into when GRUs might be preferable and when LSTMs might outperform, based on different use cases.
