Introduction: The Magic of Sequence Processing

When it comes to sequence data—like text, time series, or speech—Long Short-Term Memory (LSTM) networks have long been the go-to tool.

They excel at remembering long-term dependencies while mitigating the infamous vanishing gradient problem that hampers plain recurrent networks. But, like every great technology, LSTMs can be taken to the next level. Enter the Attention Mechanism. Together, the two became a standard recipe in natural language processing (NLP), speech recognition, and more.

So, what makes the combination of LSTM and Attention such a game-changer? Let’s dive into the specifics.

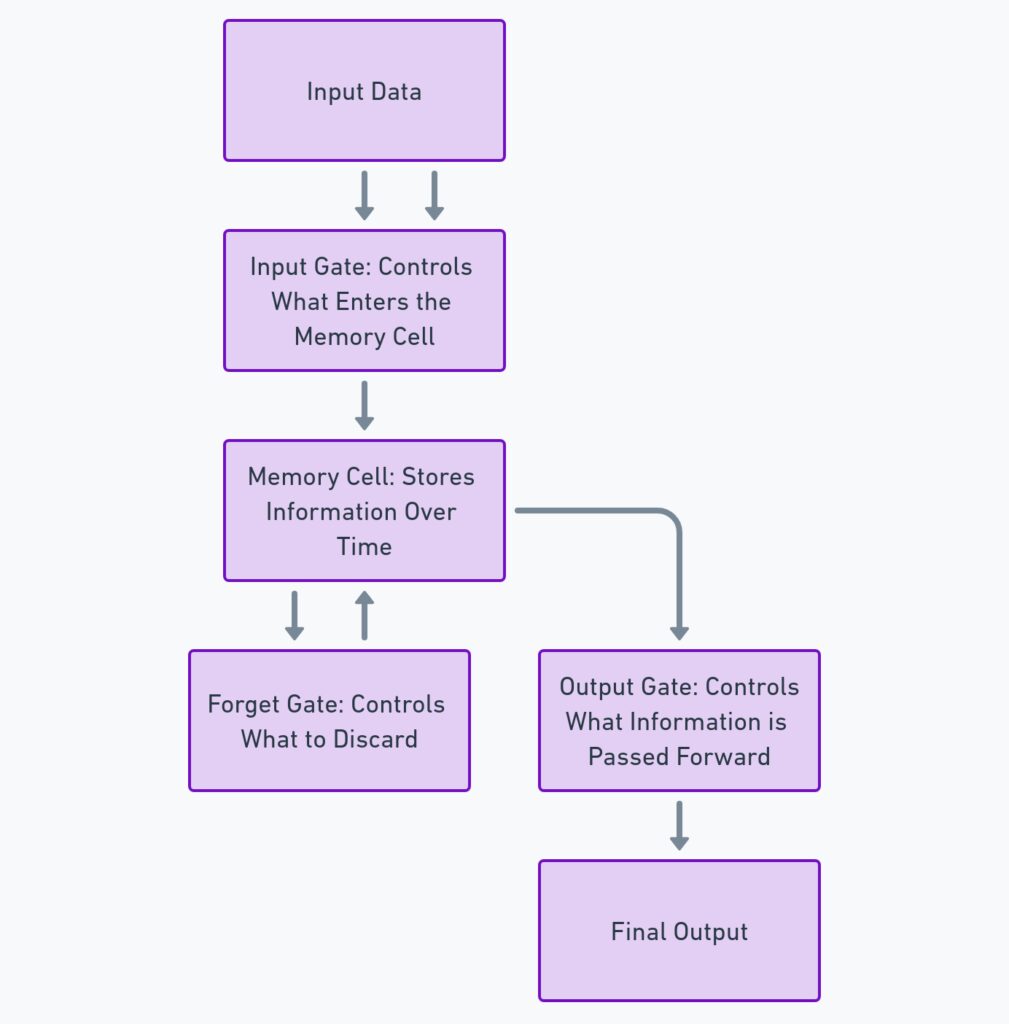

The Role of LSTM in Sequence Processing

LSTMs are specifically designed to overcome the limitations of traditional Recurrent Neural Networks (RNNs). By using memory cells, they are capable of capturing long-range dependencies in sequential data. Think of them as powerful tools for remembering both recent and distant past information.

Yet, LSTMs alone aren’t perfect. They still struggle with extremely long sequences where older information might fade. In highly complex data like natural language, LSTMs can miss important patterns hiding deeper in the sequence.

This is where Attention Mechanisms step in, adding an extra layer of sophistication.

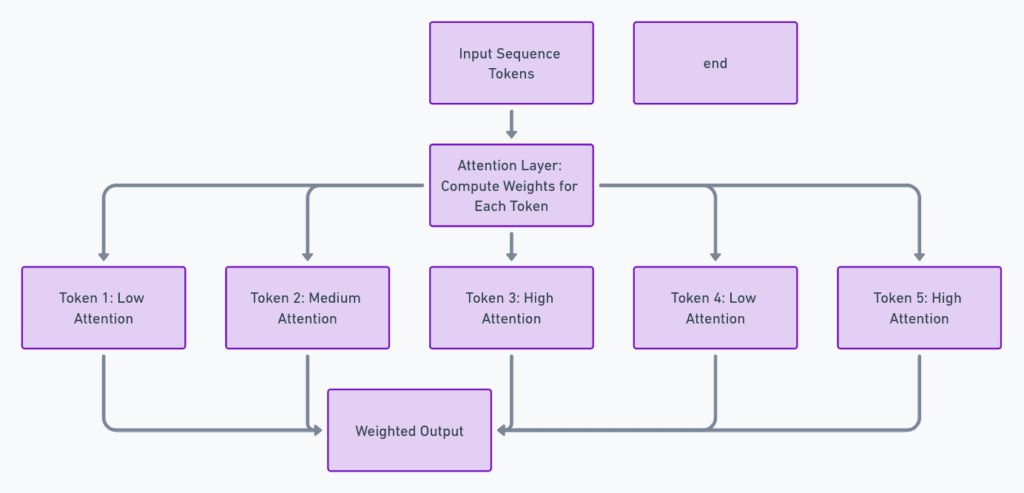

Why Attention Mechanisms are Vital

Unlike LSTMs, which process sequences step by step, the Attention Mechanism allows the model to weigh each part of the sequence differently. Instead of treating all input elements equally, attention lets the model focus on the most relevant parts.

Consider this: when reading a paragraph, you don’t process every word with the same importance. Some words or sentences stand out more depending on the context. Attention mimics this behavior, giving more weight to the most critical components.

Combining attention with LSTM helps models capture relationships that might otherwise be lost in translation—literally!

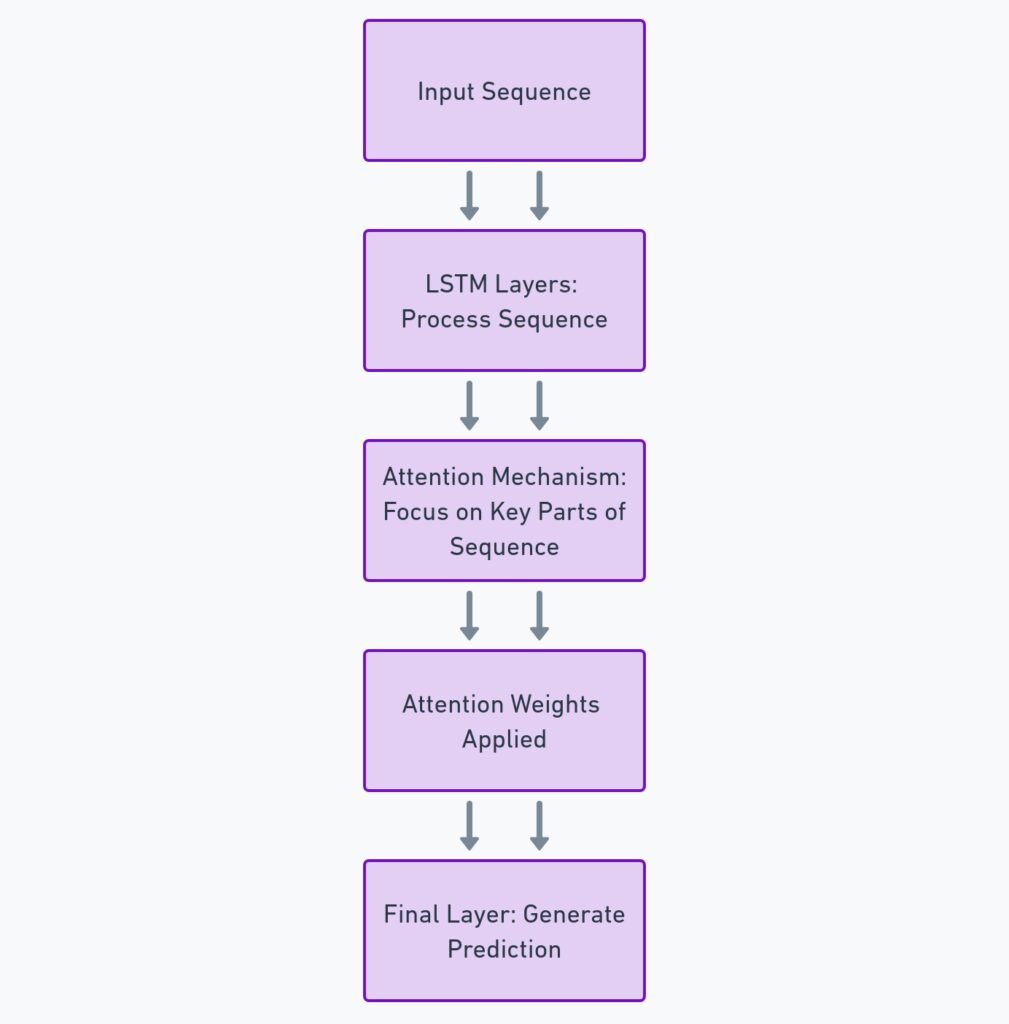

How Attention Enhances LSTM Performance

Attention, when paired with LSTM, addresses a key limitation: it lets the model attend to any point in the sequence, regardless of its position. Because the weights are recomputed at every step, the model is far less biased toward recent inputs.

For example, in machine translation, early words in a sentence can have as much impact on the meaning as later words. Thanks to Attention, the model can focus on these early words more effectively.

This combination enhances performance in text summarization, speech recognition, and even complex time-series forecasting.

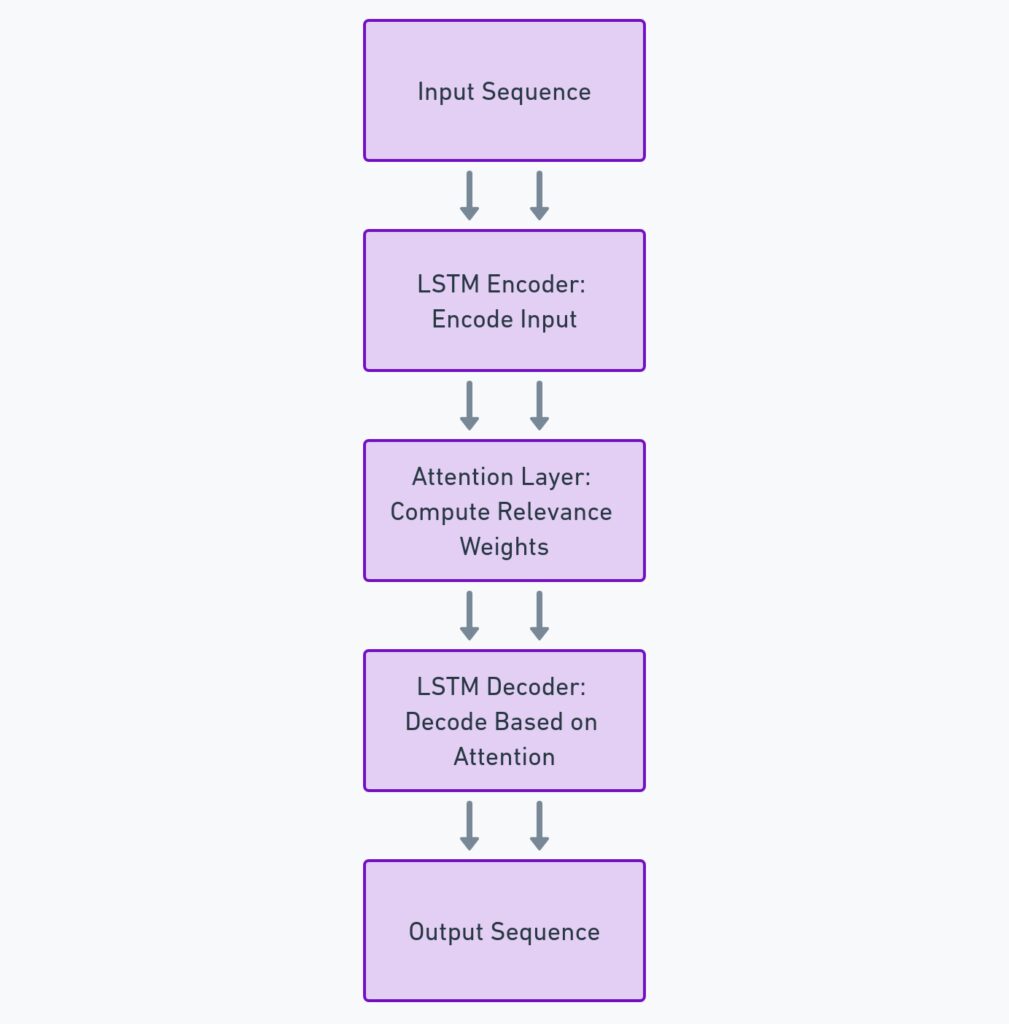

The Flow of Information: A Peek Under the Hood

In practice, the attention mechanism works by generating a weight for each input in the sequence. The weights are non-negative and sum to 1, and each one represents how much attention the model should pay to that token when making the current prediction.
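To make the weighting concrete, here is a minimal sketch in plain Python. It assumes dot-product scoring, which is only one of several scoring functions in use, and the hidden states and query vector are made-up numbers rather than outputs of a trained LSTM. The names `softmax` and `attend` are illustrative, not taken from any library.

```python
import math

def softmax(scores):
    """Turn raw relevance scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, states):
    """Dot-product attention: score each state against the query, then average."""
    scores = [sum(q * h for q, h in zip(query, state)) for state in states]
    weights = softmax(scores)
    dim = len(states[0])
    # The context vector is the weighted average of the states.
    context = [sum(w * state[d] for w, state in zip(weights, states))
               for d in range(dim)]
    return weights, context

# Hypothetical LSTM hidden states for a four-token input (made-up numbers).
hidden_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.2, 0.1]]
query = [2.0, 0.0]  # hypothetical state of the model at the current step

weights, context = attend(query, hidden_states)
print([round(w, 3) for w in weights])
```

The first and third tokens score highest against this query, so they receive the largest weights and dominate the context vector that feeds the prediction.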

When combined with LSTM, the model gets the best of both worlds: it can remember important details through its memory cells and selectively pay attention to critical points in the sequence. This selective focus often leads to better predictions, especially in tasks like image captioning or question answering.

It’s like giving the model not only a good memory but also the ability to sharpen its focus on the most useful information at just the right time.

Real-World Applications: Where the Duo Shines

One of the most compelling use cases for LSTM and Attention is in machine translation. The neural system that Google Translate adopted in 2016 (GNMT), for instance, paired stacked LSTM layers with Attention to provide more accurate translations by considering all parts of a sentence, not just the most recent words.

Another hotbed for this combination is speech recognition. In systems of the kind behind Google Assistant and Siri, recurrent networks such as LSTMs process the speech sequence, while Attention helps the model focus on key segments to better understand context and intent.

For financial applications like time-series forecasting, combining LSTM with Attention can improve predictions about stock market trends or economic indicators. These systems learn from past trends but can emphasize specific past events that may be more significant than others.

Optimizing Sequence-to-Sequence Models with Attention

LSTM-based sequence-to-sequence (Seq2Seq) models were a breakthrough in handling sequential data, such as translating text from one language to another. However, without Attention, the encoder has to squeeze the entire input into a single fixed-length vector, so these models often degrade in performance on long sequences.

By integrating Attention, Seq2Seq models have gained remarkable accuracy. The decoder in these models can now focus on relevant parts of the input sequence without being constrained by their order in the sentence. As a result, translation quality, text summarization, and even image captioning have improved significantly.

Tackling Long-Term Dependencies: LSTM’s Strengths and Limitations

LSTMs are excellent for processing sequential data, especially when it comes to long-term dependencies. This is because their internal memory cells allow them to selectively remember or forget information over long periods. Unlike traditional RNNs, which struggle with remembering earlier inputs, LSTMs can store relevant information far back in the sequence.
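The remember-or-forget behavior comes from the gates inside each cell. The sketch below runs one single-unit LSTM with scalar inputs, using the standard gate equations. The parameter values are hand-picked assumptions chosen so that the forget gate stays close to 1; a real model would learn them during training, and `lstm_step` is an illustrative name, not a library function.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM with scalar input and state."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # keep part of the old memory, add part of the new
    h = o * math.tanh(c)     # expose a gated view of the memory
    return h, c

# Hypothetical, hand-picked parameters (a trained model would learn these).
params = {
    "wf": 0.0, "uf": 0.0, "bf": 4.0,   # forget gate near 1: retain memory
    "wi": 2.0, "ui": 0.0, "bi": -2.0,  # input gate opens when x is large
    "wo": 0.0, "uo": 0.0, "bo": 2.0,
    "wg": 1.0, "ug": 0.0, "bg": 0.0,
}

h, c = 0.0, 0.0
history = []
for x in [1.0, 0.0, 0.0, 0.0]:  # one informative input, then silence
    h, c = lstm_step(x, h, c, params)
    history.append(c)

print([round(v, 4) for v in history])
```

With these settings the cell state written at the first step shrinks only slightly over the following steps, which is the "selective memory" described above. A forget-gate bias near zero would make the same value fade much faster.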

However, LSTMs still have their challenges. When the sequence is too long or contains highly complex dependencies, LSTMs can struggle to retain the most relevant information. Their ability to capture long-range interactions is limited by the fixed size of their hidden and cell states, which must summarize everything seen so far. As the model processes each time step, older inputs can still become diluted, reducing their influence on the output.

This is where Attention mechanisms come in, offering a critical enhancement to the standard LSTM architecture.

The Emergence of Attention in Neural Networks

The introduction of Attention in neural networks has been a game-changer. Attention layers can dynamically focus on specific parts of the input sequence, allowing the model to assign different levels of importance to each input. By doing this, the model no longer relies solely on the most recent data or the fixed capacity of LSTM memory.

The Transformer architecture—a network built entirely on Attention—took this concept even further, revolutionizing NLP. However, even before Transformers became widely popular, combining LSTM and Attention proved to be an incredibly effective way to balance long-term memory retention with flexible, dynamic focus on relevant data.

For tasks like text generation, speech synthesis, and image captioning, Attention allows the model to not only remember key aspects of a sequence but also pinpoint where to look for useful information at each step.

How Attention Mechanisms Work in Practice

In practice, an Attention mechanism operates by computing attention scores for each input token, which are then converted into weights. These weights dictate how much influence each part of the sequence has on the current output.
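One common way to write this down is the additive formulation from Bahdanau et al., listed under Resources. The symbols here are notation chosen for this sketch: $h_i$ is the encoder hidden state for input token $i$, $s_{t-1}$ is the previous decoder state, $T$ is the input length, and $W$, $U$, and $v$ are learned parameters.

$$
e_{t,i} = v^{\top} \tanh\left(W s_{t-1} + U h_i\right), \qquad
\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j=1}^{T} \exp(e_{t,j})}, \qquad
c_t = \sum_{i=1}^{T} \alpha_{t,i}\, h_i
$$

The scores $e_{t,i}$ are the raw relevance values, the softmax turns them into weights $\alpha_{t,i}$ that sum to 1, and the context vector $c_t$ is the weighted average of the hidden states that the decoder uses for output step $t$. Other scoring functions, such as a plain dot product, slot into the same three-step pattern.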

For instance, in machine translation, if a word at the beginning of a sentence is crucial for determining the meaning of the sentence, Attention will assign it a higher weight, ensuring it plays a more significant role in the final prediction.

The combination of Attention and LSTM allows for more context-aware predictions. This is because, rather than depending only on nearby elements, the model can “look back” at any part of the sequence, dynamically and on demand.

One of the key advantages of Attention is that it makes LSTM networks far better suited for tasks with long input sequences, such as document summarization or translation of long paragraphs.

Visualizing the Impact of Attention in LSTM Networks

A great way to understand how LSTM and Attention work together is to visualize what’s happening under the hood. For instance, in an LSTM-Attention hybrid for image captioning, the model learns to focus on different parts of the image at different times. When generating each word in a caption, Attention will highlight a particular region of the image that is most relevant to the current word being predicted.

This targeted focus creates a dynamic interaction between the memory of the LSTM and the context-sensitive Attention weights. The result is a more accurate, coherent sequence output, whether it’s generating text or captions.

In text-based tasks like question answering, Attention enables the model to zero in on the section of the text that holds the answer, improving performance significantly compared to a simple LSTM. Without Attention, the model would struggle to sift through all the irrelevant information to find what’s essential.
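For text, the usual visualization is an alignment matrix with one row of weights per output step. The sketch below builds and prints such a matrix as a crude text heatmap. Everything in it is an assumption for illustration: the encoder and decoder states are hand-made vectors rather than LSTM outputs, the scoring is a plain dot product, and `alignment_matrix` and `render` are hypothetical helper names.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def alignment_matrix(decoder_states, encoder_states):
    """One row of attention weights per output step (dot-product scoring)."""
    rows = []
    for s in decoder_states:
        scores = [sum(a * b for a, b in zip(s, h)) for h in encoder_states]
        rows.append(softmax(scores))
    return rows

def render(rows, row_labels, col_labels):
    """Render weights as text, using denser characters for larger weights."""
    shades = " .:*#"
    lines = ["".ljust(8) + " ".join(label.ljust(6) for label in col_labels)]
    for label, row in zip(row_labels, rows):
        cells = []
        for w in row:
            level = min(int(w * len(shades)), len(shades) - 1)
            cells.append((shades[level] * 3).ljust(6))
        lines.append(label.ljust(8) + " ".join(cells))
    return "\n".join(lines)

# Hypothetical source and target tokens with hand-made state vectors.
source = ["the", "cat", "sleeps"]
target = ["le", "chat", "dort"]
encoder_states = [[3.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 3.0]]
decoder_states = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]

matrix = alignment_matrix(decoder_states, encoder_states)
print(render(matrix, target, source))
```

In a trained translation model the same kind of plot typically shows a rough diagonal with detours where word order differs between the two languages. For image captioning, the columns would be image regions instead of source tokens.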

Enhancing Neural Networks for Better Generalization

Attention-enhanced LSTMs can also generalize better. In traditional LSTM models, performance can degrade when faced with longer or more complex sequences than those seen during training. With Attention, the model can adapt to new, more complex inputs by selectively focusing on the most critical parts.

This becomes especially valuable in tasks like time-series analysis, where the model needs to generalize from past data and apply that knowledge to future predictions. By focusing on the most influential points in the series, Attention makes the model more flexible and capable of dealing with unseen data.

LSTM and Attention in Natural Language Processing (NLP)

The combination of LSTM and Attention has had a significant impact on Natural Language Processing (NLP). Before the advent of this dynamic duo, models like standard RNNs and basic LSTMs were somewhat limited in handling long, complex sentences. They often struggled to maintain the context, leading to poor performance in tasks like machine translation and text summarization.

When LSTMs are paired with Attention, however, the model can process the sequence more intelligently. This combination allows the network to not only remember relevant words but also attend to them selectively during prediction. In machine translation, for instance, the model can effectively translate long sentences by focusing on the most contextually relevant words at each step, even if they appeared much earlier in the sentence.

In tasks like sentiment analysis, LSTM-attention models excel at recognizing which parts of a sentence contribute most to the sentiment, making them much more accurate in determining whether a review is positive or negative.

Attention Improves Text Summarization

Text summarization, a complex NLP task, requires a model to condense a long document into a shorter version while maintaining the key points. LSTMs on their own often generate summaries that lose the essence of the original text, especially for long articles. But LSTM models equipped with Attention can analyze the entire document and emphasize the most important parts.

For example, when summarizing a news article, an LSTM model with Attention may learn to focus on the headline, the lead paragraph, and any sections where important events are discussed. This allows the model to extract the core ideas while ignoring less relevant details.

The result? More coherent, contextually accurate summaries that preserve the intent and information of the original document.

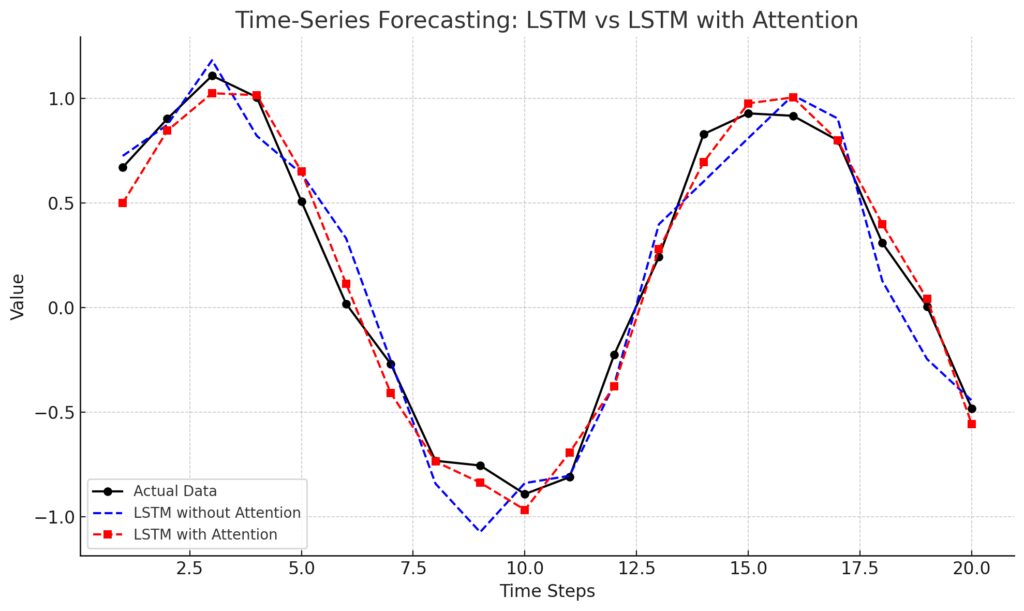

Time-Series Forecasting with LSTM and Attention

The use of LSTMs for time-series forecasting has already proven effective, especially for data with long-term dependencies. But the integration of Attention takes things a step further. Time-series data often involves important trends or events that occur at irregular intervals. LSTMs on their own may miss these critical moments, especially if they happen far back in the data.

With Attention, the model can focus on these key moments, assigning higher importance to the most relevant time steps. This approach has been reported to improve forecasting accuracy in fields like finance, weather prediction, and energy consumption.

For instance, in stock market forecasting, combining LSTM and Attention allows the model to focus on pivotal past events, such as major economic announcements or significant market shifts, giving it the ability to make better predictions about future trends.

Enhancing Speech Recognition with Attention and LSTM

Speech recognition is another area where the LSTM-attention combination shines. LSTMs are naturally well-suited for processing audio data because they can capture the sequential nature of speech. However, speech data is often complex, with multiple layers of meaning and varying emphasis on different sounds.

When you add Attention to the mix, the model becomes even more powerful. It can dynamically focus on important parts of the speech, such as key phrases or intonation changes, which are critical for understanding context. This makes the model better at understanding conversational nuances and more accurate at tasks like voice commands or transcription.

Voice assistants like Siri and Google Assistant are the most visible products built on sequence models of this kind, which help them process and interpret voice inputs more naturally and allow for smoother interactions between humans and machines.

The Future of LSTM and Attention: Moving Towards Transformers

Although the LSTM-attention combination has been revolutionary, the emergence of Transformers and pure Attention-based models has started to change the landscape. Transformers, which rely entirely on self-attention mechanisms, have been shown to outperform LSTMs on a wide range of tasks.

However, LSTM with Attention still holds its ground in specific domains, especially when the sequence length or the type of task benefits from having memory cells to capture long-term dependencies.

In some hybrid approaches, LSTM models with integrated Attention layers are being used alongside Transformer models to strike a balance between memory-based sequence learning and the ability to focus on important parts of the input data. This means the combination of LSTM and Attention still has a crucial role to play in certain applications where both long-term memory and dynamic focus are required.

Combining LSTM and Attention in Image Captioning

One of the most visually exciting applications of LSTM and Attention is image captioning. In this task, the goal is to generate a natural language description for an image, capturing its most important features. While convolutional neural networks (CNNs) are often used to extract the visual features from images, LSTMs handle the sequence of words to form the caption.

When Attention is added to the mix, the model can focus on different parts of the image while generating each word in the caption. For example, when the model needs to generate a word like “dog,” the Attention mechanism will highlight the region of the image where the dog appears, giving the LSTM the context it needs to produce a relevant word.

This method, known as visual attention, dramatically improves the accuracy and relevance of the captions generated. Models like Show, Attend and Tell have become benchmarks for this task, using LSTM and Attention to create more human-like descriptions of images.

Addressing Data Scarcity with LSTM and Attention

One significant challenge in AI is dealing with data scarcity. Many models require vast amounts of labeled data to perform well, which is not always available. However, LSTM models combined with Attention have shown promising results in overcoming this limitation.

Attention mechanisms can help models make better use of limited data by focusing on the most informative parts of the input, which may ease the need for very large datasets. In fields like medical diagnosis, where acquiring labeled data is expensive and time-consuming, LSTM-attention models can concentrate on critical features, like specific symptoms or patient history, even when the dataset is modest.

Moreover, in low-resource languages, where training data for NLP tasks like translation or speech recognition is scarce, the Attention-enhanced LSTM models can still provide competitive performance by optimizing the use of the available data.

Reducing Computational Load: A Balanced Approach

While Attention mechanisms have boosted the performance of LSTMs, there’s a trade-off: computational complexity. Attention requires extra calculations, especially for long sequences, because it computes a relevance score for every input token at every output step, so the cost grows with the product of input and output lengths. This can lead to significant processing overhead, making models slower or more resource-intensive.

To mitigate this, researchers have developed more efficient attention mechanisms. For instance, local attention or sparse attention focuses only on nearby or highly relevant parts of the input sequence, reducing computational demands, often with little loss in accuracy.
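As a rough illustration of the local idea, the sketch below scores only the states inside a fixed window around a chosen position. This is a simplified hard-window variant written for this article, not a reproduction of any published method, which may choose and weight the window differently. The function name `local_attention`, the synthetic states, and the window size are all assumptions.

```python
import math

def local_attention(query, states, center, window):
    """Attend only to states within `window` positions of `center`."""
    lo = max(0, center - window)
    hi = min(len(states), center + window + 1)
    # Only the states inside [lo, hi) are ever scored.
    scores = [sum(q * h for q, h in zip(query, states[i]))
              for i in range(lo, hi)]
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [0.0] * len(states)  # positions outside the window stay at 0
    for offset, e in enumerate(exps):
        weights[lo + offset] = e / total
    return weights

# Hypothetical sequence of 1,000 two-dimensional states (synthetic values).
states = [[math.sin(i / 10.0), math.cos(i / 10.0)] for i in range(1000)]
weights = local_attention(query=[1.0, 0.5], states=states,
                          center=500, window=5)

scored = sum(1 for w in weights if w > 0.0)
print(scored, "of", len(states), "positions scored")
```

With a window of 5 on each side, each output step scores 11 positions instead of all 1,000. The price is that anything outside the window is invisible at that step, so the window position and size have to suit the task.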

This optimization is crucial in real-time applications, such as real-time translation or speech-to-text systems, where minimizing latency is essential. By using selective forms of attention, models can maintain high performance while operating efficiently.

Cross-Modal Applications: Extending LSTM and Attention to New Domains

Beyond NLP and image-related tasks, the LSTM and Attention duo is being explored in cross-modal applications, where multiple types of data are processed together. These models can handle multimodal inputs—such as combining text and images, or text and audio—by learning how to integrate information from different sources.

In video analysis, for instance, LSTM networks can track the temporal evolution of a scene, while Attention focuses on important frames or actions. This can be used for video summarization or action recognition, where both time and content need to be considered.

In robotics, LSTM with Attention is being used to improve systems that need to interact with their environment by analyzing both visual and audio inputs. This helps robots focus on the most important features in their surroundings, such as obstacles or verbal commands, making them more adaptive and efficient in real-world scenarios.

The Role of LSTM and Attention in Personalized Recommendations

In personalized recommendation systems, such as those used by Netflix or Spotify, understanding user behavior over time is critical. LSTM networks are well-suited for capturing user preferences because they can model long-term interactions and evolving interests. However, not all user interactions are equally important.

By incorporating Attention, recommendation models can focus on key interactions—such as a movie the user watched multiple times or a song they played repeatedly—and weigh them more heavily when making new recommendations. This results in more accurate and personalized suggestions.

For e-commerce, Attention-enhanced LSTMs can analyze purchase history and browsing patterns, emphasizing significant actions like a purchase or items added to a wishlist. This improves the ability of the system to predict and recommend products that align more closely with the user’s current preferences.

Resources

“Attention Is All You Need” – Vaswani et al. (2017)

This seminal paper introduces the Transformer model and outlines how the Attention mechanism works in detail, marking a turning point in NLP. It’s a great resource to understand how Attention evolved from being a supporting tool to a primary architecture in deep learning.


“Understanding LSTM Networks” – Chris Olah

Chris Olah’s blog post is a widely referenced resource for understanding the inner workings of LSTM networks. It breaks down the complexity of LSTM cells with visual aids and simplified explanations.

“Show, Attend and Tell: Neural Image Caption Generation with Visual Attention” – Xu et al. (2015)

This paper presents how Attention mechanisms are applied to image captioning tasks, combining CNNs, LSTMs, and visual Attention. It’s a great read for understanding cross-modal Attention applications.


“A Gentle Introduction to LSTM Autoencoders” – Machine Learning Mastery

A practical resource that explains how LSTM autoencoders can be used for sequence prediction tasks, such as time-series forecasting, with examples in Python.

“Neural Machine Translation by Jointly Learning to Align and Translate” – Bahdanau et al. (2014)

This foundational paper introduces the Attention mechanism in sequence-to-sequence models, explaining how it enhances recurrent encoder-decoder networks in machine translation tasks. The same idea carries over directly to LSTM-based models.
