Mastering Large Language Models in Just 3 Weeks? It might sound ambitious, but with a focused plan and the right mindset, it’s absolutely achievable!

By the end of this journey, you’ll have a strong understanding of Large Language Models (LLMs) like GPT and BERT, and you’ll be able to implement, fine-tune, and even deploy these cutting-edge AI models. Let’s break it down into a week-by-week guide.

What Are Large Language Models? A Simple Introduction

Before diving in, it’s essential to get a clear understanding of what LLMs are and why they’ve become so significant in the AI world.

LLMs are a type of artificial intelligence (AI) designed to understand, interpret, and generate human language. They are trained on massive amounts of text data, using that information to make predictions about language patterns, context, and meaning.

Some of the most well-known LLMs are GPT-3, BERT, and T5. Models like these are used in virtual assistants, customer service chatbots, search, and content generation tools.

At their core, LLMs rely on deep learning techniques, particularly neural networks, to process and generate language. Over the next three weeks, you’ll uncover how these models work and how to put them to use effectively.

Week 1: Mastering the Basics of AI and Machine Learning

To really get a grasp of LLMs, you need to start with a solid foundation in AI and machine learning (ML) concepts. Week 1 is all about laying that groundwork.

Understanding Machine Learning Fundamentals

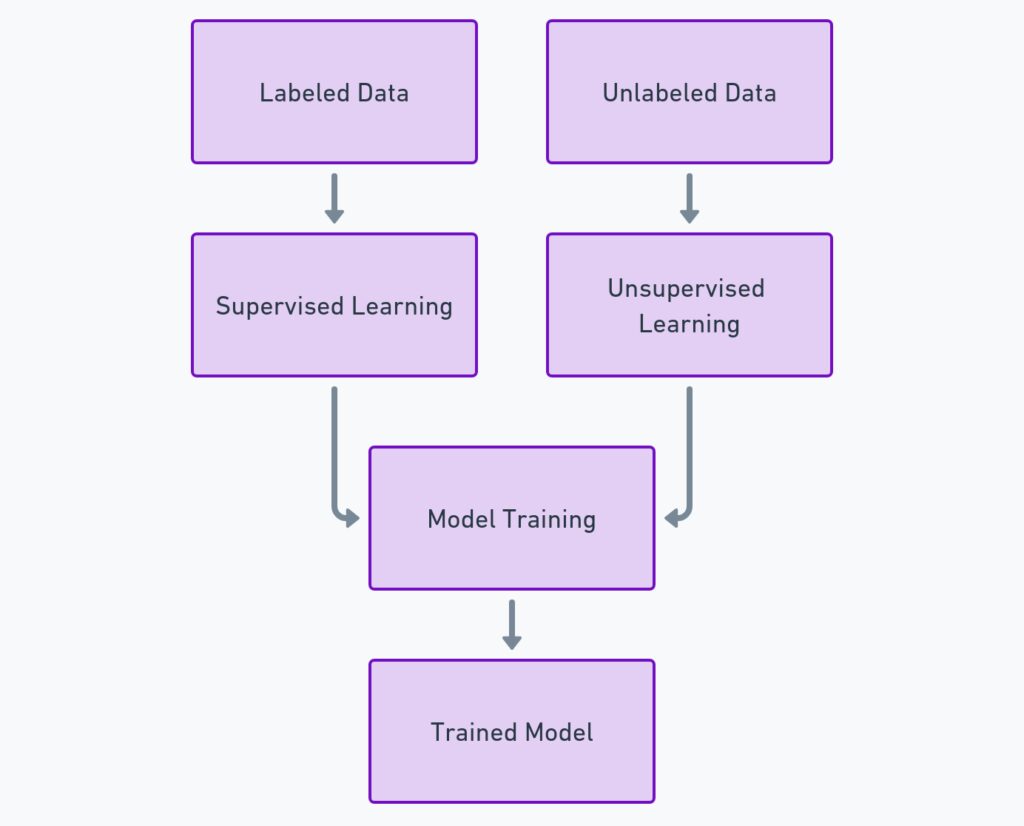

At its core, machine learning is about teaching machines to learn from data without being explicitly programmed. The two most common types of ML are supervised and unsupervised learning.

- Supervised learning: The model learns from labeled data (e.g., training an email filter using examples of spam and non-spam emails).

- Unsupervised learning: The model identifies patterns in data without labeled outcomes (e.g., clustering customers based on shopping behavior).

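Focus on understanding the basics of how models are trained, validated, and tested: the training set fits the model, the validation set guides tuning decisions, and the test set gives a final check on data the model has never seen.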
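
To make the train/validate/test idea concrete, here is a minimal sketch of a three-way split using only the Python standard library. The `split_dataset` helper, the 80/10/10 fractions, and the toy email data are assumptions made for illustration, not a prescribed recipe.

```python
import random

def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle examples and split them into train, validation, and test lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever is left over
    return train, val, test

# Toy labeled data: (email text, label) pairs, invented for illustration.
emails = [(f"message {i}", "spam" if i % 3 == 0 else "ham") for i in range(20)]
train, val, test = split_dataset(emails)
print(len(train), len(val), len(test))  # 16 2 2
```

Shuffling before splitting matters: if the data is sorted by label or date, an unshuffled split can leave the test set unrepresentative.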

The Role of Natural Language Processing (NLP)

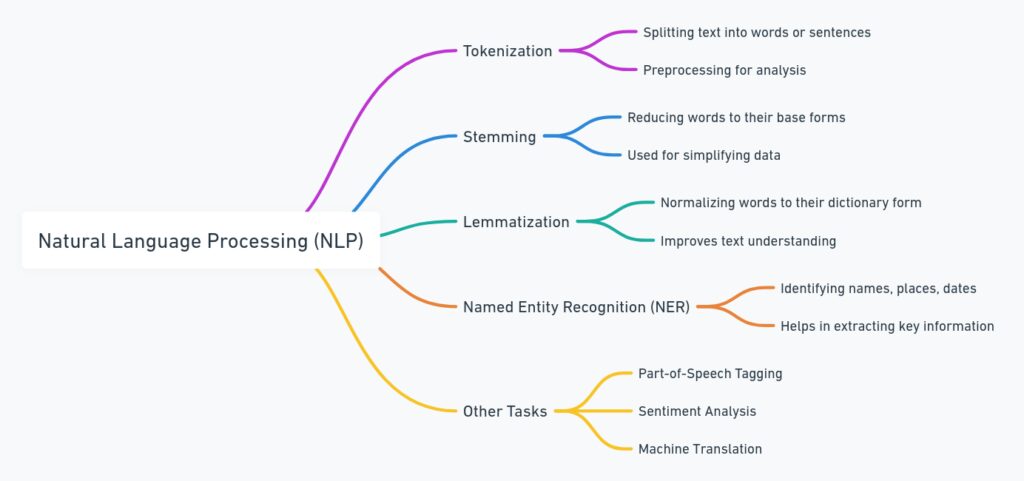

Natural Language Processing (NLP) is the field that makes LLMs possible. It focuses on how computers interpret and understand human language. This includes processes like:

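- Tokenization: Breaking text into smaller units called tokens. These can be words, pieces of words (subwords), or characters. Modern LLMs typically use subword tokenizers.

- Stemming and Lemmatization: Reducing words to a base form. Stemming strips suffixes with simple rules, while lemmatization maps each word to its dictionary form.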

- Named Entity Recognition (NER): Identifying proper names, places, organizations, etc.

Spend time exploring these fundamental NLP tasks, as they will be the building blocks for your understanding of LLMs.
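
As a rough illustration of the first two tasks, the sketch below tokenizes a sentence with a regular expression and applies a deliberately crude suffix-stripping stemmer. Both `tokenize` and `naive_stem` are hypothetical helpers written for this example. Real projects would normally use an NLP library, and LLMs use learned subword tokenizers rather than rules like these.

```python
import re

def tokenize(text):
    """Lowercase the text and split it into word and punctuation tokens."""
    return re.findall(r"[a-z0-9]+|[^\sa-z0-9]", text.lower())

def naive_stem(token):
    """Strip a few common English suffixes. Far cruder than a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        # Keep at least three characters so short words are left alone.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

tokens = tokenize("The models learned patterns, predicting words.")
stems = [naive_stem(t) for t in tokens]
print(tokens)  # ['the', 'models', 'learned', 'patterns', ',', 'predicting', 'words', '.']
print(stems)   # ['the', 'model', 'learn', 'pattern', ',', 'predict', 'word', '.']
```

Named Entity Recognition is left out here because it generally needs a trained model rather than a few lines of rules.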

Neural Networks 101: How They Work

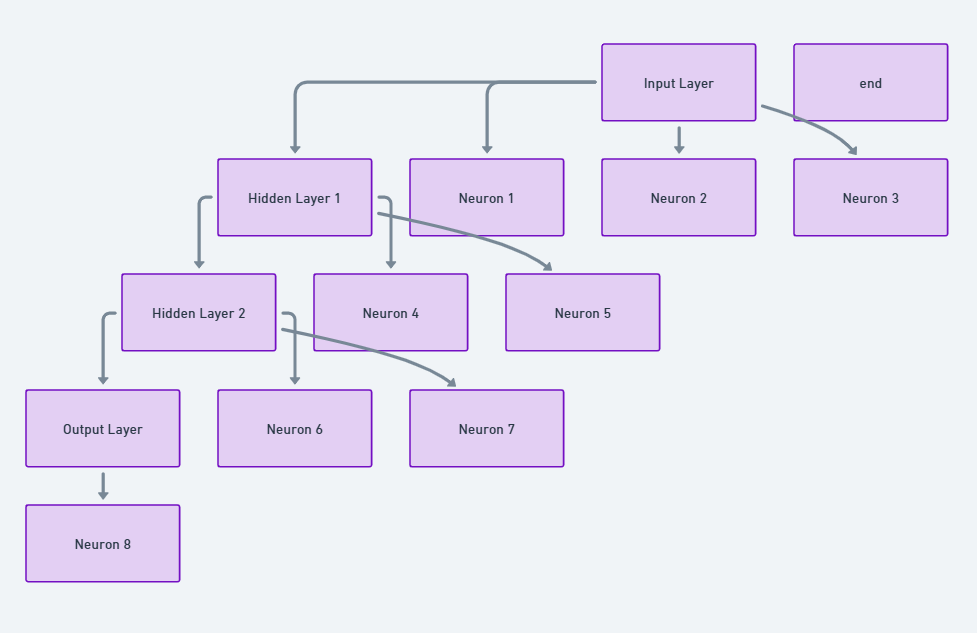

Since LLMs are powered by deep learning, it’s crucial to get familiar with neural networks. A neural network is composed of layers of interconnected nodes (neurons) that process data by learning from patterns.

Neural networks learn by passing data through multiple layers and adjusting their “weights” during training. This helps the model get better at predicting outcomes.
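
To see what "adjusting weights" means in practice, here is a minimal sketch of a single sigmoid neuron trained with gradient descent, written in plain Python so every step is visible. The learning rate, epoch count, and the logical-OR dataset are arbitrary choices for illustration. A real network stacks many such units and relies on a library to compute gradients.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, epochs=200, lr=0.5):
    """Train one sigmoid neuron with gradient descent on binary cross-entropy."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            pred = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            error = pred - y  # gradient of the loss w.r.t. the pre-activation
            # Nudge each weight against its gradient.
            w[0] -= lr * error * x[0]
            w[1] -= lr * error * x[1]
            b -= lr * error
    return w, b

# Logical OR as a tiny, linearly separable dataset.
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(data)
predictions = [round(sigmoid(w[0] * x[0] + w[1] * x[1] + b)) for x, _ in data]
print(predictions)
```

Each update moves the weights a small step in the direction that reduces the prediction error, which is the same idea that training an LLM applies to billions of weights.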

This week, try building basic neural networks with a Python library such as TensorFlow or PyTorch. Begin with small projects, such as creating a model that can classify text or predict the next word in a sentence.
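
Before reaching for a deep learning library, it can help to see the next-word task in its simplest form. The sketch below is a count-based bigram model, not a neural network. It is offered only as a baseline to compare a later neural model against, and the tiny corpus is made up for the example.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        follows[prev][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
print(predict_next(model, "the"))  # cat
print(predict_next(model, "dog"))  # None
```

A bigram model only looks one word back. The appeal of neural language models, and of Transformers in particular, is that they can use much longer context.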

Week 2: Getting to Grips with Transformer Models

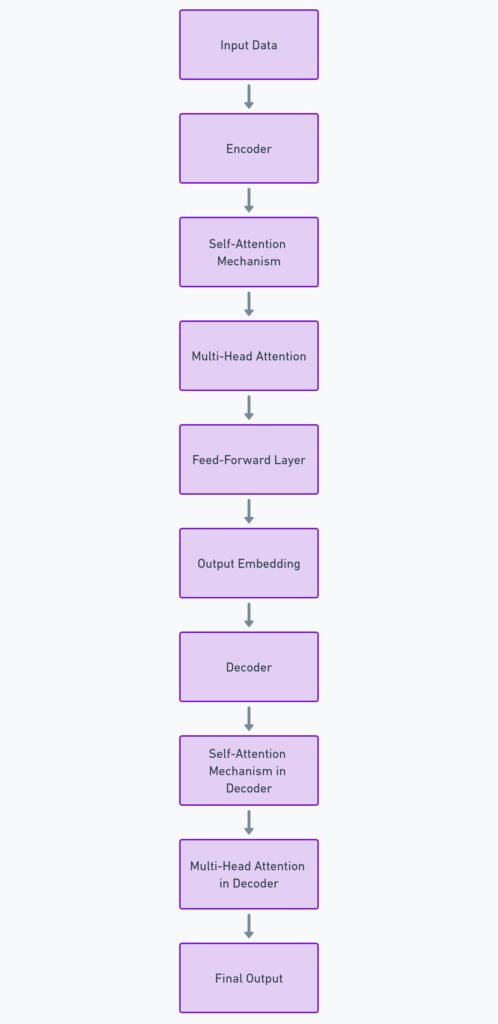

Once you’ve covered the foundational concepts, it’s time to get into the heart of LLMs: the Transformer model. Introduced in the 2017 paper “Attention Is All You Need,” Transformers changed the game for NLP.

What Makes the Transformer Architecture Special?

Before Transformers, models like RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory networks) were dominant. However, they struggled with long-term dependencies in text, and because they read tokens one at a time, they were slow to train on long sequences.

Transformers overcame this limitation through the self-attention mechanism. This allows the model to focus on different parts of the input sequence simultaneously, improving its ability to understand context, even in long text passages.

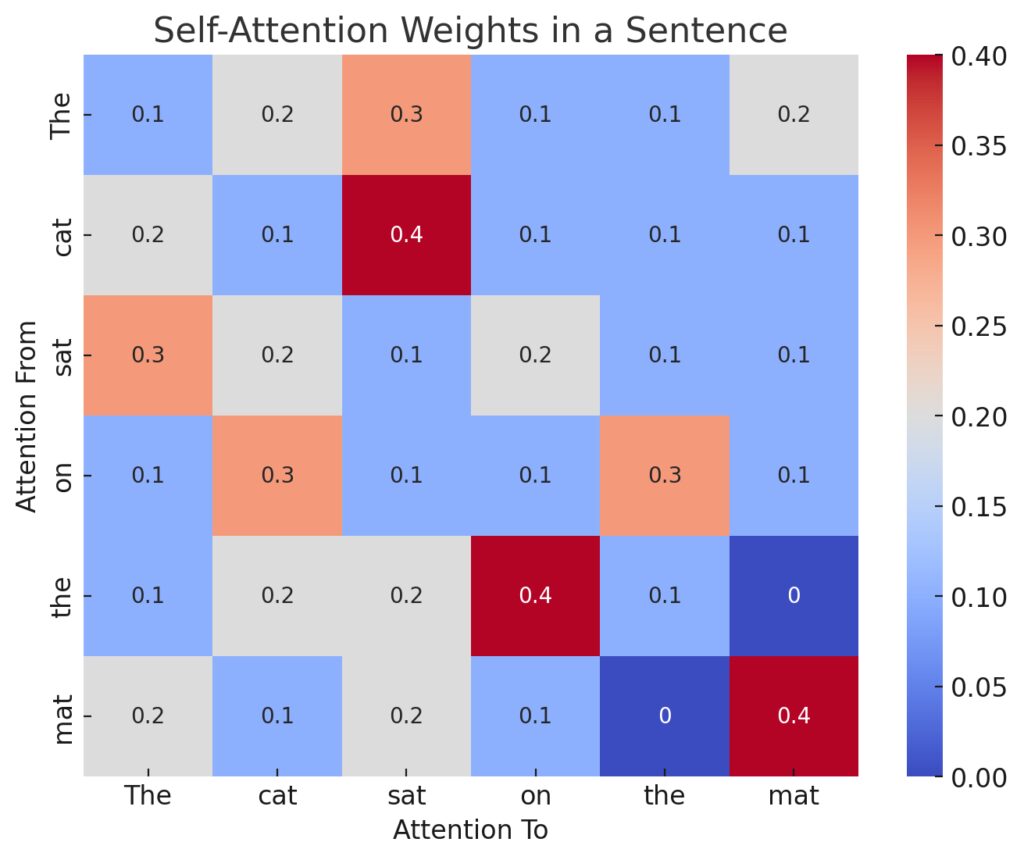

Breaking Down Self-Attention and Multi-Head Attention

The self-attention mechanism is the core innovation of Transformers. It allows the model to assign “attention” weights to different words in a sentence, giving more importance to certain words based on context. Each word’s representation can therefore draw on every other word in the input, not just its neighbors. In generative models such as GPT, a causal mask limits each position to the words before it, so next-word prediction never looks at future words.
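
The attention weights described above come from scaled dot-product attention: score each query against every key, divide by the square root of the key dimension, apply softmax, and use the result to average the value vectors. Here is a minimal pure-Python sketch. It skips the learned query, key, and value projections that a real Transformer applies first, and the toy vectors are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into non-negative weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        if causal:
            # Hide future positions so token i only sees tokens 0..i.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        # Weighted average of the value vectors, one component at a time.
        output = [sum(w * v[c] for w, v in zip(weights, values))
                  for c in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors, used as queries, keys, and values at once.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
_, full = self_attention(x, x, x)
_, masked = self_attention(x, x, x, causal=True)
print([round(w, 3) for w in full[0]])
print([round(w, 3) for w in masked[0]])  # [1.0, 0.0, 0.0]
```

With `causal=True`, the first token can only attend to itself, which is why its weights collapse to `[1.0, 0.0, 0.0]`. Without the mask, it spreads attention across all three tokens.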

Multi-head attention takes this concept further by running several attention operations in parallel. Each “head” has its own learned projections, so different heads can learn to track different relationships between words. Their outputs are then combined, providing richer context.
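
One way to picture multi-head attention is as "split, attend, concatenate". The sketch below slices each input vector into equal parts, runs single-head attention on each slice, and joins the results. It is a simplification: real implementations apply learned projection matrices per head and a final output projection, both omitted here, and the input vectors are invented for the example.

```python
import math

def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(q_rows, k_rows, v_rows):
    """Single-head scaled dot-product attention."""
    d_k = len(k_rows[0])
    out = []
    for q in q_rows:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k)
                           for k in k_rows])
        out.append([sum(w * v[c] for w, v in zip(weights, v_rows))
                    for c in range(len(v_rows[0]))])
    return out

def multi_head_attention(x, num_heads):
    """Split each vector into `num_heads` slices, attend per slice, concatenate."""
    d_model = len(x[0])
    assert d_model % num_heads == 0, "d_model must be divisible by num_heads"
    d_head = d_model // num_heads
    head_outputs = []
    for h in range(num_heads):
        part = [row[h * d_head:(h + 1) * d_head] for row in x]
        head_outputs.append(attend(part, part, part))
    # Concatenate the heads back into vectors of size d_model.
    return [sum((head[i] for head in head_outputs), []) for i in range(len(x))]

x = [[1.0, 0.0, 0.5, 0.5], [0.0, 1.0, 0.5, -0.5], [1.0, 1.0, 0.0, 0.0]]
out = multi_head_attention(x, num_heads=2)
print(len(out), len(out[0]))  # 3 4
```

Because each head sees a different slice of the vector, each one computes its own attention weights, and the output keeps the same shape as the input.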

Here, focus on:

- How attention works in Transformers.

- How to calculate attention weights.

- Why Transformers train more efficiently than earlier models like RNNs: they process all positions of a sequence in parallel instead of one step at a time.

Understanding Pretrained Models: BERT vs. GPT

By this stage, you’ve probably heard a lot about models like BERT and GPT. Both are based on the Transformer architecture, but they serve different purposes:

- BERT: An encoder model built for language understanding, BERT uses bidirectional encoding, meaning it looks at the context on both sides of each word when making predictions. It’s well suited to tasks like question answering and text classification.

- GPT: A generative model that excels at text generation, GPT works unidirectionally, predicting the next word in a sequence based on the preceding words. This makes it a good fit for tasks like text completion and creative content generation.

Understanding the strengths and limitations of these models will help you choose the right tool for the job.

The table below compares BERT (Bidirectional Encoder Representations from Transformers) and GPT (Generative Pretrained Transformer) side by side, highlighting their architecture, use cases, and strengths.

| Aspect | BERT | GPT |
|---|---|---|
| Architecture | Bidirectional (analyzes both left and right context) | Unidirectional (predicts the next word from left to right) |
| Model Type | Encoder-only (focuses on understanding context) | Decoder-only (focuses on generating text) |
| Training Objective | Masked Language Model (MLM) – predicts masked words | Causal Language Model (CLM) – predicts next word in sequence |
| Best For | Understanding tasks (e.g., question answering, sentiment analysis, classification) | Text generation tasks (e.g., content creation, dialogue systems) |
| Context Processing | Processes context in both directions, resulting in better comprehension of meaning | Processes context sequentially, making it strong for creative text continuation |
| Use Cases | Sentiment analysis, named entity recognition, sentence classification, search queries | Text completion, chatbots, content generation, summarization |
| Model Size | Typically smaller than GPT models (e.g., BERT-base has 110M parameters) | Generally larger (e.g., GPT-3 has 175B parameters) |
| Key Strength | Deep understanding of word relationships and context within a sentence | Fluent text generation, capable of creating coherent and contextually relevant text |
| Example Task | Answering a question like “Who is the president of the USA?” | Writing a paragraph on “How AI is transforming industries” |
| Deployment Examples | Google Search, digital assistants for comprehension tasks | OpenAI’s GPT-3 API, chatbots like ChatGPT |
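
The "Training Objective" row is easier to grasp with data in hand. The sketch below builds training examples from the same sentence in both styles. The helper names and the hand-picked mask positions are assumptions for illustration. In actual BERT pretraining, the masked positions are sampled at random.

```python
MASK = "[MASK]"

def masked_lm_example(tokens, mask_positions):
    """BERT-style: hide chosen tokens; the labels are the hidden originals."""
    inputs = [MASK if i in mask_positions else t for i, t in enumerate(tokens)]
    labels = {i: tokens[i] for i in sorted(mask_positions)}
    return inputs, labels

def causal_lm_examples(tokens):
    """GPT-style: each prefix is a context; the label is the token that follows."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

tokens = ["the", "cat", "sat", "on", "the", "mat"]
inputs, labels = masked_lm_example(tokens, {1, 4})
print(inputs)   # ['the', '[MASK]', 'sat', 'on', '[MASK]', 'mat']
print(labels)   # {1: 'cat', 4: 'the'}
print(causal_lm_examples(tokens)[:2])  # [(['the'], 'cat'), (['the', 'cat'], 'sat')]
```

Notice that the masked example lets the model see words on both sides of each gap, while every causal example only exposes what came before the target word.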

Week 3: Hands-On with LLMs – Build, Fine-Tune, and Deploy

With the theory under your belt, Week 3 is all about getting hands-on. You’ll now dive into the practical application of LLMs, focusing on fine-tuning and deployment.

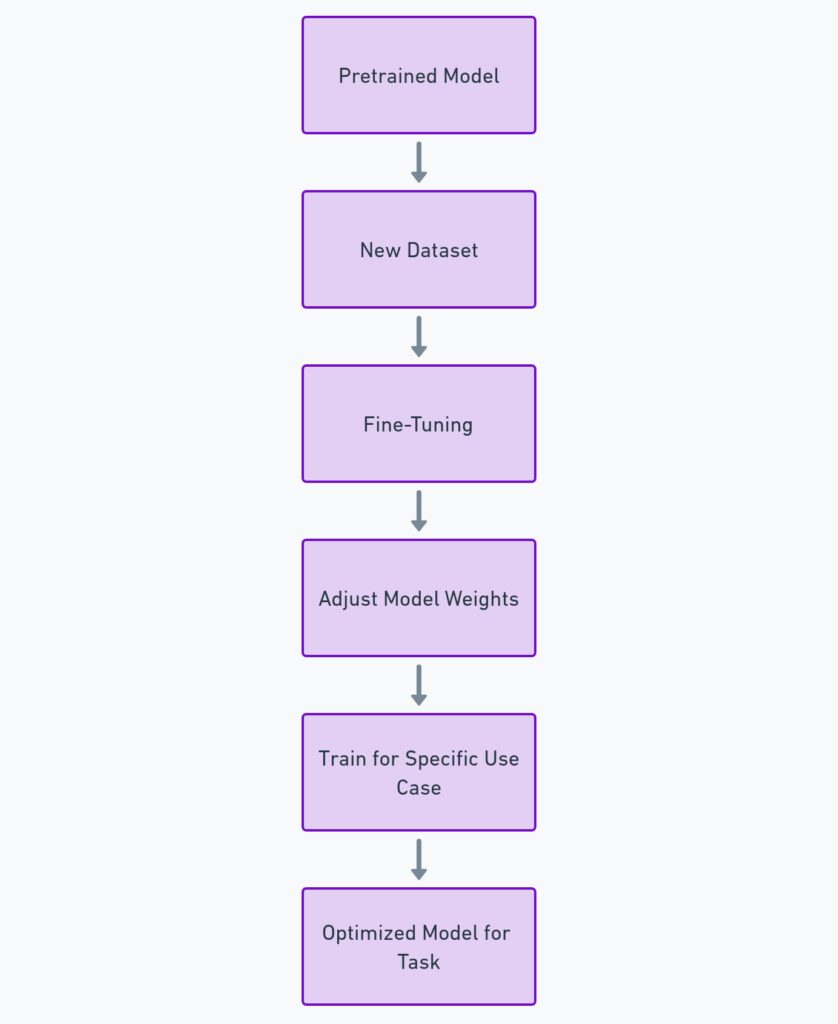

Fine-Tuning Pretrained Models

One of the great things about LLMs is that you don’t need to train them from scratch. You can fine-tune existing models (like those available through Hugging Face) for specific tasks with a relatively small dataset.

Fine-tuning involves:

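- Choosing a pre-trained model: Select a model that suits your task (e.g., an openly available GPT-style model such as GPT-2 for text generation, or BERT for classification). GPT-3 itself can only be accessed through OpenAI’s API, not downloaded.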

- Training on a new dataset: Use transfer learning to adapt the model to a new domain or task. For example, you might fine-tune GPT to write product descriptions based on your company’s unique style.
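
The core idea of transfer learning can be shown without any large model. In the toy sketch below, a small table of made-up word vectors plays the role of a frozen pretrained encoder, and only a tiny classification head is trained on four labeled reviews. Everything here (`PRETRAINED_VECTORS`, `encode`, `fine_tune_head`, the data) is hypothetical. Note that this is the "frozen features" variant of transfer learning. Full fine-tuning usually updates the pretrained weights as well, typically with a library such as Hugging Face Transformers.

```python
import math

# Hypothetical "pretrained" word vectors standing in for a frozen encoder.
PRETRAINED_VECTORS = {
    "great": [0.9, 0.1], "love": [0.8, 0.2], "good": [0.7, 0.3],
    "bad": [0.1, 0.9], "awful": [0.2, 0.8], "hate": [0.1, 0.8],
}

def encode(text):
    """Frozen feature extractor: average the vectors of known words."""
    vectors = [PRETRAINED_VECTORS[w] for w in text.lower().split()
               if w in PRETRAINED_VECTORS]
    if not vectors:
        return [0.0, 0.0]
    return [sum(col) / len(vectors) for col in zip(*vectors)]

def fine_tune_head(examples, epochs=300, lr=1.0):
    """Train only a small classification head on top of the frozen features."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            x = encode(text)
            pred = 1.0 / (1.0 + math.exp(-(w[0] * x[0] + w[1] * x[1] + b)))
            err = pred - label
            w = [w[0] - lr * err * x[0], w[1] - lr * err * x[1]]
            b -= lr * err
    return w, b

def predict(text, w, b):
    """Return 1 for positive sentiment, 0 for negative."""
    x = encode(text)
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

train = [("great product love it", 1), ("awful bad service", 0),
         ("good value", 1), ("hate it", 0)]
w, b = fine_tune_head(train)
print(predict("love this good phone", w, b), predict("bad awful battery", w, b))
```

Four examples are enough here only because the "pretrained" vectors already separate positive from negative words. That is the point of starting from a pretrained model: most of the work has been done before your data arrives.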

Experiment with fine-tuning on tasks like:

- Text summarization.

- Sentiment analysis.

- Named Entity Recognition (NER).

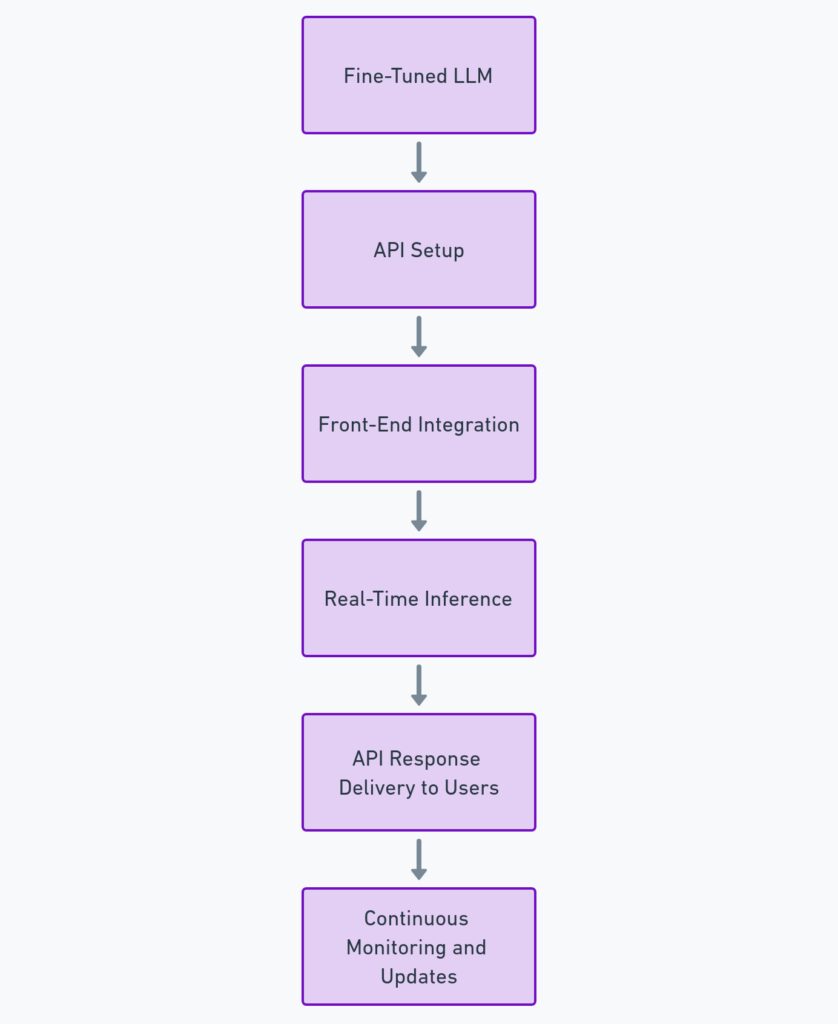

Deploying an LLM with APIs

After fine-tuning your model, the next step is to deploy it. Most LLMs today can be accessed via APIs, making it easy to integrate them into real-world applications.

You can host your own fine-tuned model on a platform like Hugging Face, or build on a hosted model through a service like OpenAI’s API. Follow these steps to deploy:

- Set up the model: Export your fine-tuned model and create an API endpoint.

- Build an interface: Create a front-end (or back-end) that interacts with the API, allowing users to input text and receive AI-generated responses.

- Monitor and iterate: After deployment, keep track of performance and adjust your model as needed.
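
The first two steps can be sketched with nothing but the Python standard library. The example below defines a small HTTP endpoint that accepts a JSON prompt and returns a completion. The `generate` function is a placeholder for your own model call, and the `/generate` path, port, and JSON field names are assumptions chosen for this example. A production service would more likely use a web framework and add authentication, timeouts, and logging.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

def generate(prompt):
    """Placeholder for a call into your fine-tuned model (hypothetical)."""
    return f"[model output for: {prompt}]"

def handle_payload(raw_body):
    """Validate a JSON request body and return (status_code, response_dict)."""
    try:
        payload = json.loads(raw_body)
    except ValueError:  # covers malformed JSON and undecodable bytes
        return 400, {"error": "body must be valid JSON"}
    prompt = payload.get("prompt") if isinstance(payload, dict) else None
    if not isinstance(prompt, str) or not prompt.strip():
        return 400, {"error": "'prompt' must be a non-empty string"}
    return 200, {"completion": generate(prompt)}

class GenerateHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, body = handle_payload(self.rfile.read(length))
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

def make_server(port=8000):
    """Build the server; call .serve_forever() on the result to start it."""
    return HTTPServer(("127.0.0.1", port), GenerateHandler)
```

To try it locally, call `make_server().serve_forever()` and send a POST request with a body like `{"prompt": "Hello"}` to `/generate`. Keeping validation in `handle_payload` separate from the HTTP handler makes the logic easy to test without starting a server.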

For example, many companies use fine-tuned LLMs for customer service bots, content generation tools, and even automated email drafting.

Advanced Topics and Real-World Applications

If you’ve made it through three weeks, you should be comfortable with the fundamentals of LLMs. However, this is just the beginning. In the AI world, there’s always more to learn. You can explore:

- Large-scale training: Learn about distributed training techniques for scaling LLMs.

- Prompt engineering: Master the art of crafting effective input prompts for LLMs.

- Ethical considerations: AI models often raise ethical concerns, from bias in language data to issues around data privacy.
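
As a small taste of prompt engineering, here is a sketch of a few-shot prompt builder: it places an instruction and a couple of worked examples ahead of the new input so the model can infer the expected format. The `build_few_shot_prompt` helper, the "Review:"/"Sentiment:" layout, and the sample reviews are illustrative assumptions, not a standard format.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble an instruction, worked examples, and a new query into one prompt."""
    lines = [instruction, ""]
    for text, label in examples:
        lines += [f"Review: {text}", f"Sentiment: {label}", ""]
    # End on an open "Sentiment:" so the model's next words are the answer.
    lines += [f"Review: {query}", "Sentiment:"]
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Loved the battery life.", "positive"),
     ("Screen cracked in a week.", "negative")],
    "Fast shipping and works great.",
)
print(prompt)
```

The resulting string can be sent to any text-generation model. Changing the instruction or the examples is often the quickest way to change the model's behavior without retraining it.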

How to Stay Updated in AI and NLP

The field of AI is evolving rapidly. To stay on top of the latest advancements:

- Join communities like Kaggle or AI Alignment forums.

- Follow influential AI researchers on Twitter or LinkedIn.

- Regularly read papers on arXiv or Google Scholar.

By keeping up with the latest research, you’ll ensure that your knowledge remains sharp as LLMs continue to evolve.

With this roadmap, you’ll not only understand LLMs but also build and deploy them, unlocking the full potential of these powerful AI tools. Enjoy your journey into the world of Large Language Models!

Resources for Learning and Implementing BERT and GPT

To deepen your understanding of BERT and GPT, here are some valuable resources you can explore. These cover tutorials, documentation, and videos to help you learn the theory and get hands-on experience with these models.

Official Documentation and Libraries

- Hugging Face Transformers

Hugging Face Transformers is one of the most widely used libraries for NLP and pretrained models like BERT and GPT. It offers easy-to-use APIs for implementing and fine-tuning models.

Link: Hugging Face Transformers

Recommended for: Easy access to both BERT and GPT models with comprehensive tutorials.

- Google BERT GitHub Repository

This is the official repository where BERT was first introduced by Google Research. It includes pretrained models and details about the architecture.

Link: Google BERT GitHub

Recommended for: Diving deep into the original BERT implementation.

- OpenAI GPT GitHub Repository

The OpenAI GPT repository contains resources for GPT’s implementation, focusing on its training, architecture, and examples.

Link: OpenAI GPT GitHub

Recommended for: Understanding the GPT architecture and examples of text generation.