Build Your First Neural Network with PyTorch: A Step-by-Step Guide

Introduction to PyTorch

What is PyTorch and its Advantages

PyTorch is an open-source machine learning library originally developed by Facebook’s AI Research lab (now Meta AI). It is best known for its dynamic computation graph: the graph is built as your code runs, so you can use ordinary Python control flow and change the network from one iteration to the next. Compared with libraries that require a static graph defined up front, this makes debugging and experimenting much easier.

Why Choose PyTorch?

One of the key advantages of PyTorch is its simplicity and ease of use. It integrates seamlessly with Python, making it a favorite among researchers and practitioners. Additionally, PyTorch’s strong community support and comprehensive documentation make it a reliable choice for developing machine learning models.

Installing PyTorch

Step-by-Step Installation Instructions

Before we dive into building neural networks, let’s get PyTorch installed. Follow these steps to get started:

- Install Python: Ensure you have a recent Python 3 installed. Older PyTorch releases supported Python 3.6, but current releases require a newer version, so check the PyTorch installation page for the supported range. You can download Python from Python’s official site.

- Install PyTorch: Open your terminal or command prompt and run:

    pip install torch torchvision torchaudio

- Verify Installation: Check that PyTorch is installed correctly by running:

    import torch
    print(torch.__version__)

PyTorch Fundamentals

Overview of Tensors in PyTorch

Tensors are the fundamental data structure in PyTorch, similar to arrays in NumPy but with additional capabilities for GPU acceleration. Here’s how you can create and manipulate tensors:

    import torch

    # Creating a tensor
    x = torch.tensor([1.0, 2.0, 3.0])
    print(x)

    # Basic operations
    y = torch.tensor([4.0, 5.0, 6.0])
    print(x + y)  # Element-wise addition
    print(x * y)  # Element-wise multiplication
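Beyond element-wise arithmetic, it helps to know how to inspect and reshape tensors. The sketch below is a small illustration, with variable names chosen here for the example: it checks a tensor's shape and dtype, reshapes it, and takes a matrix product and a row-wise sum.

```python
import torch

# A 2x3 tensor; Python floats become 32-bit floats by default
a = torch.tensor([[1.0, 2.0, 3.0],
                  [4.0, 5.0, 6.0]])
print(a.shape)   # torch.Size([2, 3])
print(a.dtype)   # torch.float32

# reshape keeps the same elements in row-major order with a new layout
b = a.reshape(3, 2)

# Matrix multiplication: (2x3) @ (3x2) -> (2x2)
c = a @ b
print(c)         # tensor([[22., 28.], [49., 64.]])

# Reductions collapse a dimension: here, one sum per row
row_sums = a.sum(dim=1)
print(row_sums)  # tensor([ 6., 15.])
```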

Introduction to Autograd

Autograd is PyTorch’s automatic differentiation engine that powers neural network training. It records operations performed on tensors to create a computation graph, which can then be used to compute gradients for optimization:

    # Enabling gradient computation
    x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
    y = x + 2
    z = y.mean()
    z.backward()   # Compute gradients
    print(x.grad)  # Gradient of z with respect to x
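To see what the gradient above should be, note that z is the mean of three values x_i + 2, so the derivative of z with respect to each x_i is 1/3. The sketch below checks that by hand and also shows that gradients accumulate across calls to backward(), which is why training loops reset them on every step. The helper variable names are illustrative.

```python
import torch

x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
z = (x + 2).mean()
z.backward()

# z = (1/3) * sum(x_i + 2), so dz/dx_i = 1/3 for every element
expected = torch.full((3,), 1.0 / 3.0)
first_grad = x.grad.clone()
print(torch.allclose(first_grad, expected))   # True

# A second backward pass adds to the stored gradient instead of replacing it
z2 = (x + 2).mean()
z2.backward()
accumulated = x.grad.clone()
print(accumulated)                            # each entry is now 2/3

# Reset in place before the next backward pass
x.grad.zero_()
```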

Creating a Neural Network

Defining a Simple Neural Network Architecture

Using torch.nn, you can define a neural network by creating a class that inherits from torch.nn.Module:

    import torch
    import torch.nn as nn

    class SimpleNN(nn.Module):
        def __init__(self):
            super().__init__()
            self.layer1 = nn.Linear(10, 50)  # 10 input features -> 50 hidden units
            self.layer2 = nn.Linear(50, 1)   # 50 hidden units -> 1 output

        def forward(self, x):
            x = torch.relu(self.layer1(x))
            x = torch.sigmoid(self.layer2(x))
            return x

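In this example, SimpleNN consists of two fully connected layers: the first maps 10 input features to 50 hidden units, and the second maps those 50 units to a single output. The forward method defines how data flows through the network: a ReLU after the first layer and a sigmoid after the second, so the output is a value between 0 and 1.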

Explanation of Layers, Activations, and Compiling a Model

Layers are the building blocks of neural networks. Each layer applies a transformation to the input data. Activations, such as ReLU and Sigmoid, introduce non-linearity, enabling the network to learn complex patterns. Unlike Keras, PyTorch has no separate compile step: you define the architecture and the forward pass in the module, then choose a loss function and an optimizer when you set up training.
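A quick way to check an architecture is to push a dummy batch through it and look at the output shape. The sketch below repeats the SimpleNN definition so it runs on its own; the batch of 4 random samples is a placeholder, not real data.

```python
import torch
import torch.nn as nn

class SimpleNN(nn.Module):
    def __init__(self):
        super().__init__()
        self.layer1 = nn.Linear(10, 50)   # 10 input features -> 50 hidden units
        self.layer2 = nn.Linear(50, 1)    # 50 hidden units -> 1 output

    def forward(self, x):
        x = torch.relu(self.layer1(x))
        return torch.sigmoid(self.layer2(x))

model = SimpleNN()
batch = torch.randn(4, 10)     # placeholder batch: 4 samples, 10 features each
output = model(batch)
print(output.shape)            # torch.Size([4, 1])

# Count trainable values: weights and biases of both layers
num_params = sum(p.numel() for p in model.parameters())
print(num_params)              # 10*50 + 50 + 50*1 + 1 = 601
```

If the input had the wrong number of features, the first nn.Linear would raise a shape error, which makes this kind of check a cheap way to catch mistakes before training.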

Preparing Data

Loading and Preprocessing Data

PyTorch provides torch.utils.data to easily load and preprocess data. Use Dataset and DataLoader for handling data efficiently:

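    import torch
    from torch.utils.data import Dataset, DataLoader

    class CustomDataset(Dataset):
        def __init__(self, data, labels):
            self.data = data
            self.labels = labels

        def __len__(self):
            # Number of samples in the dataset
            return len(self.data)

        def __getitem__(self, idx):
            # Return one (sample, label) pair
            return self.data[idx], self.labels[idx]

    # Example usage with placeholder data: 100 samples, 10 features, binary labels
    data = torch.randn(100, 10)
    labels = torch.randint(0, 2, (100, 1)).float()

    dataset = CustomDataset(data, labels)
    dataloader = DataLoader(dataset, batch_size=32, shuffle=True)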

Introduction to DataLoader for Batch Processing

DataLoader handles batch processing, shuffling, and parallel data loading, making it crucial for efficient training:

    dataloader = DataLoader(dataset, batch_size=32, shuffle=True, num_workers=2)
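To make the batching behaviour concrete, the sketch below iterates over a DataLoader and records the size of each batch. It uses TensorDataset from torch.utils.data as a shortcut for wrapping tensors, and the random data is a placeholder chosen for this example.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Placeholder data: 100 samples, 10 features, binary labels
features = torch.randn(100, 10)
labels = torch.randint(0, 2, (100, 1)).float()

dataset = TensorDataset(features, labels)
loader = DataLoader(dataset, batch_size=32, shuffle=True)

batch_sizes = []
for inputs, targets in loader:
    # inputs has shape (batch, 10) and targets has shape (batch, 1)
    batch_sizes.append(inputs.shape[0])

print(len(loader))     # 4 batches, since 100 / 32 rounds up to 4
print(batch_sizes)     # [32, 32, 32, 4]: the last batch holds the remainder
```

This sketch leaves num_workers at its default of 0 so that loading happens in the main process. When you set num_workers above 0 on platforms that start worker processes with spawn, such as Windows, the code that creates and iterates the DataLoader generally needs to sit under an `if __name__ == "__main__":` guard.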

Training the Neural Network

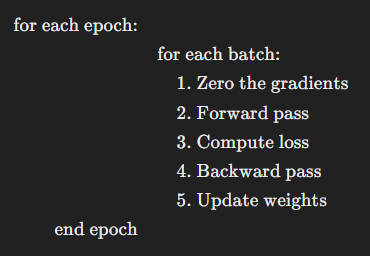

Setting Up a Training Loop

Training involves iterating over data, computing loss, and updating model weights. Here’s a simple training loop:

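    import torch.optim as optim

    model = SimpleNN()
    criterion = nn.BCELoss()  # expects float targets with the same shape as the outputs
    optimizer = optim.SGD(model.parameters(), lr=0.01)
    num_epochs = 10           # example value; tune for your data

    for epoch in range(num_epochs):
        for inputs, targets in dataloader:
            optimizer.zero_grad()               # clear gradients from the previous step
            outputs = model(inputs)             # forward pass
            loss = criterion(outputs, targets)  # compute the loss
            loss.backward()                     # backpropagate
            optimizer.step()                    # update the weights

        # Loss of the last batch in this epoch
        print(f'Epoch {epoch+1}, Loss: {loss.item()}')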
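The loop above reports only the loss of the last batch, which can be noisy. The sketch below is one self-contained variation that tracks the mean loss per epoch instead. Several things here are assumptions made for illustration: the synthetic task (the label is 1 when the first feature is positive), the use of nn.Sequential as a compact stand-in for SimpleNN, the larger learning rate of 0.1, and the fixed seed.

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(0)  # reproducible synthetic data and initial weights

# Synthetic task (assumption): label is 1 when the first feature is positive
features = torch.randn(256, 10)
labels = (features[:, :1] > 0).float()   # shape (256, 1), float as BCELoss expects
loader = DataLoader(TensorDataset(features, labels), batch_size=32, shuffle=True)

# Same layer sizes and activations as SimpleNN, written with nn.Sequential
model = nn.Sequential(nn.Linear(10, 50), nn.ReLU(), nn.Linear(50, 1), nn.Sigmoid())
criterion = nn.BCELoss()
optimizer = optim.SGD(model.parameters(), lr=0.1)

epoch_losses = []
for epoch in range(20):
    running = 0.0
    for inputs, targets in loader:
        optimizer.zero_grad()
        loss = criterion(model(inputs), targets)
        loss.backward()
        optimizer.step()
        running += loss.item() * inputs.size(0)   # weight each batch by its size
    epoch_losses.append(running / len(loader.dataset))
    print(f"Epoch {epoch + 1}, mean loss: {epoch_losses[-1]:.4f}")
```

On a task this simple the mean loss should fall steadily from epoch to epoch; a curve that stays flat is usually a sign that the learning rate or the target shapes need another look.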

Model Evaluation and Prediction

Testing the Model with Unseen Data

Evaluate your model using a separate test set to ensure it generalizes well:

    # Switch to evaluation mode
    model.eval()
    test_loss = 0.0

    # test_dataloader is assumed to be a DataLoader over held-out data
    with torch.no_grad():  # gradients are not needed for evaluation
        for inputs, targets in test_dataloader:
            outputs = model(inputs)
            loss = criterion(outputs, targets)
            test_loss += loss.item()

    # Average loss per batch
    print(f'Test Loss: {test_loss/len(test_dataloader)}')
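Loss alone can be hard to interpret for a classifier, so accuracy is often reported next to it. The sketch below shows one way to compute it for sigmoid outputs; the helper name binary_accuracy, the 0.5 threshold, and the sample values are assumptions made for this example.

```python
import torch

def binary_accuracy(probs, targets, threshold=0.5):
    """Fraction of predictions that match the 0/1 targets after thresholding."""
    preds = (probs >= threshold).float()
    return (preds == targets).float().mean().item()

# Hypothetical model outputs (probabilities) and ground-truth labels
probs = torch.tensor([[0.9], [0.2], [0.6], [0.4]])
targets = torch.tensor([[1.0], [0.0], [0.0], [1.0]])

# Thresholded predictions are 1, 0, 1, 0, so the first two are correct
print(binary_accuracy(probs, targets))  # 0.5
```

Inside the evaluation loop you could call such a helper on each batch of outputs and average the results, weighting by batch size if the last batch is smaller.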

Discussing Common Pitfalls and Solutions

When evaluating your model, it’s crucial to watch for overfitting, where the model performs well on training data but poorly on unseen data. To mitigate this, use techniques like dropout and data augmentation.
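As a rough illustration of the dropout suggestion, the sketch below adds an nn.Dropout layer to a network shaped like SimpleNN. The class name DropoutNN and the drop probability of 0.5 are choices made for this example. It also shows why model.eval() matters: dropout is only active in training mode.

```python
import torch
import torch.nn as nn

class DropoutNN(nn.Module):
    """Hypothetical variant of SimpleNN with dropout after the hidden layer."""
    def __init__(self, p=0.5):
        super().__init__()
        self.layer1 = nn.Linear(10, 50)
        self.dropout = nn.Dropout(p)
        self.layer2 = nn.Linear(50, 1)

    def forward(self, x):
        x = torch.relu(self.layer1(x))
        x = self.dropout(x)      # randomly zeroes hidden units in training mode only
        return torch.sigmoid(self.layer2(x))

model = DropoutNN()
x = torch.randn(8, 10)

model.eval()                     # dropout disabled: repeated calls give the same output
with torch.no_grad():
    out_a = model(x)
    out_b = model(x)
print(torch.equal(out_a, out_b)) # True

model.train()                    # switch back before resuming training
```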

Advanced Features

Introduction to GPU Acceleration

Leverage PyTorch’s GPU acceleration to speed up training:

    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    model.to(device)

    # Inside the training or evaluation loop, move each batch to the same device
    inputs, targets = inputs.to(device), targets.to(device)
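Putting those pieces together, here is a sketch of a single training step that runs on a GPU when one is available and falls back to the CPU otherwise. The tiny model and the random batch are stand-ins for illustration only.

```python
import torch
import torch.nn as nn

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Stand-in model; its parameters live on the chosen device after .to(device)
model = nn.Sequential(nn.Linear(10, 1), nn.Sigmoid()).to(device)
criterion = nn.BCELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.01)

# Placeholder batch, created on the CPU
inputs = torch.randn(32, 10)
targets = torch.randint(0, 2, (32, 1)).float()

# The model and its inputs must be on the same device
inputs, targets = inputs.to(device), targets.to(device)

optimizer.zero_grad()
loss = criterion(model(inputs), targets)
loss.backward()
optimizer.step()

print(device, loss.item())
```

Forgetting to move a batch is a common source of errors about tensors being on different devices, so doing it at the top of the loop body is a reasonable habit.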

Exploring Other Advanced Features

Beyond GPU acceleration, PyTorch offers numerous advanced features, such as distributed training, quantization for model optimization, and ONNX for interoperability with other frameworks.

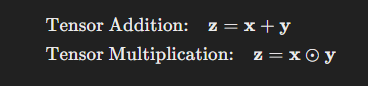

LaTeX Visuals

Tensor Operations

Tensor Addition:

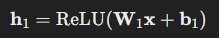

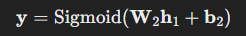

Neural Network Architecture

Layer 1:

Layer 2:

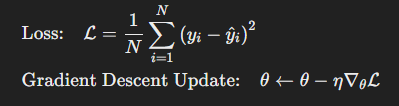
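The formulas for the two layer labels above did not survive, so here is a reconstruction based on the SimpleNN class defined earlier. The symbols $W_1, b_1, W_2, b_2$ (weights and biases), $h$ (hidden activations), and $\hat{y}$ (output) are notation introduced here, not taken from the original figures.

$$
h = \mathrm{ReLU}(W_1 x + b_1), \qquad W_1 \in \mathbb{R}^{50 \times 10},\; b_1 \in \mathbb{R}^{50}
$$

$$
\hat{y} = \sigma(W_2 h + b_2), \qquad W_2 \in \mathbb{R}^{1 \times 50},\; b_2 \in \mathbb{R}, \qquad \sigma(t) = \frac{1}{1 + e^{-t}}
$$

Here $x \in \mathbb{R}^{10}$ is a single input sample, matching nn.Linear(10, 50) followed by nn.Linear(50, 1).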

Loss Function and Backpropagation
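As a sketch of what this section likely covered, the binary cross-entropy computed by nn.BCELoss (with its default mean reduction) over a batch of $N$ samples, and the update that SGD applies after backpropagation, can be written as follows. The symbols $\theta$ (all trainable parameters) and $\eta$ (learning rate) are notation introduced here.

$$
L = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log \hat{y}_i + (1 - y_i) \log (1 - \hat{y}_i) \right]
$$

$$
\theta \leftarrow \theta - \eta \, \nabla_{\theta} L
$$

In the training loop shown earlier, loss.backward() computes $\nabla_{\theta} L$ and optimizer.step() applies the update with $\eta = 0.01$.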

Training Loop Diagram

With these LaTeX visual aids, complex concepts are more accessible, making the learning process smoother and more engaging.

Conclusion and Additional Learning Materials

Recap and Resources

We’ve covered the basics of getting started with PyTorch, from installation to training and evaluating a neural network. For further learning, the official PyTorch documentation and tutorials are a good next step.

With this foundation, you’re ready to dive deeper into more advanced PyTorch features and build sophisticated models.
