Optimizing XGBoost for Large Datasets

XGBoost is one of the most powerful machine learning tools around. But when dealing with massive datasets, even the most advanced algorithms can feel the heat.

Luckily, there are a few tricks you can use to keep XGBoost humming along quickly—even when the data load grows. Optimizing XGBoost for speed and scalability comes down to balancing memory usage, computation time, and model accuracy. Let’s dive into some of the more advanced techniques that make this possible.

Why Large Datasets Challenge Traditional Models

Handling large datasets isn’t just about size—it’s about how complex the data becomes. Traditional models struggle because they can’t efficiently manage billions of data points. But XGBoost, with its tree-based learning, tends to scale well. The real challenge here is to fine-tune it to work under extreme conditions.

Data may come from multiple sources, in various formats, and cleaning that data can take time. Moreover, high-dimensional datasets demand significant computational power to process. Without the right setup, this can lead to bottlenecks.

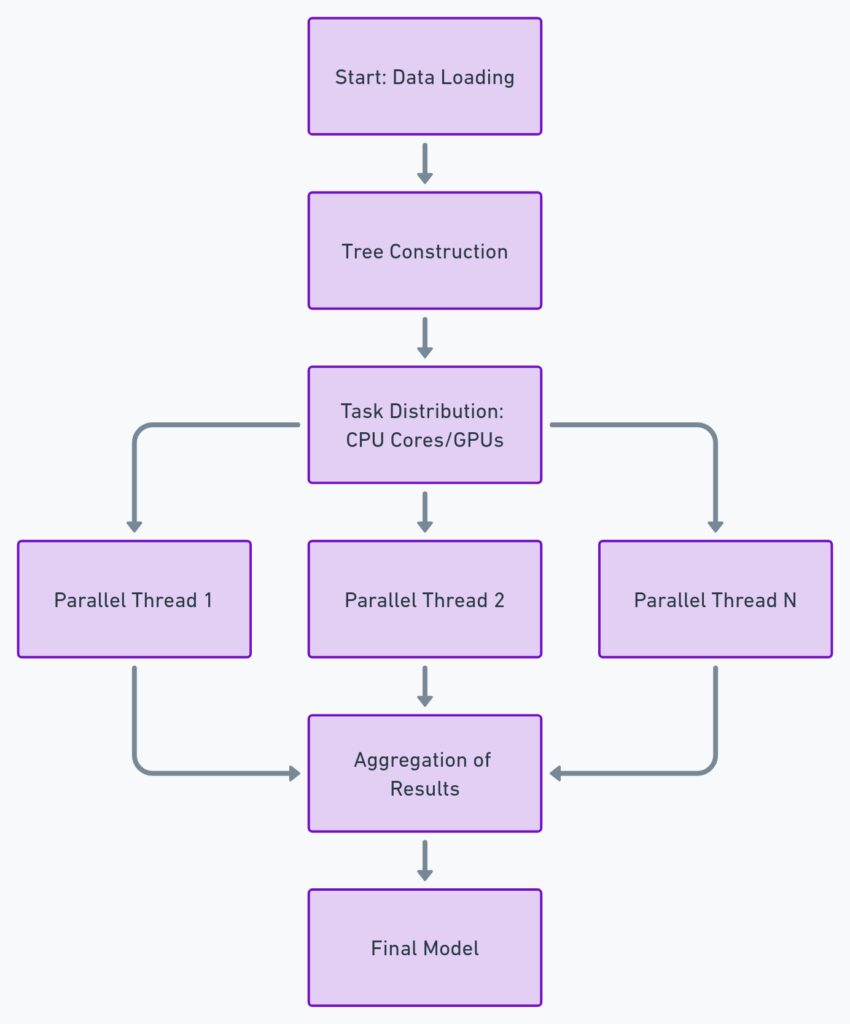

Leveraging XGBoost’s Parallelization Features

One of XGBoost’s strongest features is its ability to parallelize tasks across CPUs and GPUs. This cuts down training time significantly. However, to really optimize performance on large datasets, you’ll need to configure XGBoost correctly to make the most of your available hardware.

First, make sure multithreading is actually in use. Boosting adds trees one after another, so XGBoost does not build several trees at once; the parallelism happens inside each tree, where the search for the best split is spread across threads. The nthread parameter (n_jobs in the scikit-learn wrapper) controls how many threads are used. More threads usually shorten training, up to the number of physical cores your system has.

Pro tip: Use distributed computing options if the dataset is too large for a single machine.

The Impact of Data Preprocessing on Performance

Data preprocessing is a critical step in optimizing XGBoost. In fact, a large chunk of the bottlenecks in training arise from poorly preprocessed data. When datasets grow large, even small inefficiencies in preprocessing can multiply, causing performance drops.

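Start by ensuring all features are encoded correctly. XGBoost expects numerical input, so categorical variables need to be encoded, for example with one-hot encoding (recent releases also offer native categorical support). Normalizing numerical features is generally unnecessary, because tree splits depend only on the ordering of values and not on their scale. Outliers, particularly in the target, should still be handled carefully, as they can distort the loss and hurt model performance on large datasets.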
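Here is a minimal sketch of the one-hot encoding step using pandas. The column names and values are invented for the example; the int8 dtype is one way to keep the encoded columns small, which matters more as the row count grows.

```python
import pandas as pd

# Tiny illustrative frame; column names and values are made up.
df = pd.DataFrame(
    {
        "city": ["paris", "tokyo", "paris", "lima"],
        "plan": ["free", "pro", "pro", "free"],
        "visits": [3, 10, 7, 1],
    }
)

# One-hot encode the categorical columns, storing each indicator as int8.
encoded = pd.get_dummies(df, columns=["city", "plan"], dtype="int8")
print(encoded.columns.tolist())
```

For columns with many distinct values, one-hot encoding can produce very wide tables, so it is worth checking the resulting shape before training.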

Don’t forget feature engineering! While it can be tempting to throw raw data into the model, proper feature selection can reduce complexity and increase both speed and accuracy.

Reducing Memory Footprint Without Losing Accuracy

Memory can be a real choke point when working with big data. XGBoost can be quite resource-intensive, but there are ways to lower its memory footprint. One of the easiest ways to do this is by controlling the size of the trees built during training.

For example, limiting the max_depth of trees can reduce memory consumption significantly. It’s tempting to build deeper trees to capture more detail in your model, but this can also lead to overfitting. Shallow trees, on the other hand, tend to be more efficient, and with large datasets, you’ll often find that they’re just as effective.
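A quick back-of-the-envelope calculation shows why depth matters so much. A binary tree of depth d has at most 2^(d+1) - 1 nodes, so the upper bound roughly doubles with each extra level. The helper name below is made up for this sketch.

```python
def max_tree_nodes(max_depth: int) -> int:
    """Upper bound on the number of nodes in a binary tree of the given depth."""
    return 2 ** (max_depth + 1) - 1


for depth in (4, 6, 10):
    print(depth, max_tree_nodes(depth))
# 4 31
# 6 127
# 10 2047
```

Real trees are usually smaller than this bound because many branches stop early, but the bound applies to every tree in the ensemble.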

Another technique is reducing the number of features used in training. Selecting only the most important features can trim the model down considerably without sacrificing much in terms of accuracy.

Hyperparameter Tuning for Large-Scale Models

When working with large datasets, hyperparameter tuning can make all the difference. But this can also be time-consuming. A grid search can take ages if you’re not careful, so it’s better to start with a random search over a more confined set of parameters.

Focus on parameters like learning_rate, max_depth, and subsample. The learning_rate controls how much the model adjusts per iteration, and smaller values tend to generalize better—especially with big datasets. max_depth, as mentioned earlier, limits tree size, and subsample defines what percentage of data is used to train each tree, which can reduce training time and memory use.
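The sampling half of a random search can be sketched with the standard library alone. The ranges below are assumptions chosen for illustration, and learning_rate is drawn on a log scale so that small values are explored as often as large ones. Each candidate would then be trained and scored on a validation set, which is left out here.

```python
import math
import random


def sample_params(rng: random.Random) -> dict:
    """Draw one random configuration from a confined search space."""
    return {
        # Log-uniform between 0.01 and 0.3 (illustrative range).
        "learning_rate": math.exp(rng.uniform(math.log(0.01), math.log(0.3))),
        # Shallow-to-moderate trees only.
        "max_depth": rng.randint(3, 8),
        # Fraction of rows used for each tree.
        "subsample": rng.uniform(0.5, 1.0),
    }


rng = random.Random(42)  # fixed seed so the search is reproducible
candidates = [sample_params(rng) for _ in range(5)]
for params in candidates:
    print(params)
```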

Finally, remember to track your experiments using tools like MLflow to monitor performance during hyperparameter tuning. This can help you identify the best settings for your large-scale project.

Using Dask with XGBoost for Distributed Computing

When working with extremely large datasets, a single machine may not be enough to handle the workload efficiently. This is where distributed computing comes into play. Dask, a flexible parallel computing library in Python, integrates well with XGBoost, allowing you to scale horizontally across multiple machines.

Dask enables parallel computation by breaking down large datasets into smaller, more manageable chunks. This way, you can perform parallelized hyperparameter tuning or model training across clusters of machines. The best part? The code changes are small: you swap xgboost.train for xgboost.dask.train and wrap your data in a DaskDMatrix.

For cloud environments, Dask is a perfect match. Whether you’re using AWS or Google Cloud, scaling your resources dynamically while running XGBoost training sessions becomes a breeze. This boosts your computational efficiency and cuts down on training time drastically.

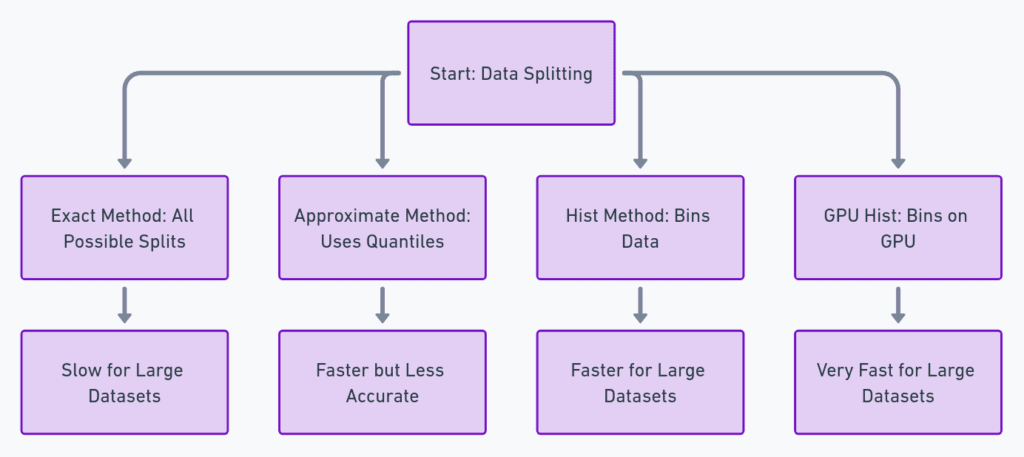

Tree Methods and Their Impact on Speed

XGBoost offers different types of tree methods to suit various scenarios, each having an impact on training speed and memory usage. The exact greedy algorithm (tree_method=exact) evaluates every candidate split point, which works fine for smaller datasets. However, when your data scales up, this method becomes slow and resource-heavy. Which method you get by default depends on the XGBoost version; releases from 2.0 onward default to hist.

Switching to the approximate algorithm (tree_method=approx) can speed up the process. This method creates quantile sketches of feature distributions instead of computing exact splits, reducing computation time while still yielding good accuracy. For truly massive datasets, you might want to go a step further and try the hist method, which uses a histogram-based approach to speed up split finding.

If your system is equipped with a GPU, the gpu_hist method runs the histogram algorithm on the GPU, which can cut training time substantially on large datasets. The actual speedup depends on your data and hardware. In XGBoost 2.0 and later, gpu_hist is deprecated in favor of tree_method=hist combined with device=cuda.

Maximizing GPU Power for Faster Computation

GPUs are a game changer when it comes to large-scale machine learning. XGBoost supports GPU acceleration, which allows the model to take full advantage of parallel computations. For large datasets, training on a GPU can be up to 10 times faster than on a CPU.

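To fully utilize your GPU, set the tree_method to gpu_hist or, in XGBoost 2.0 and later, set tree_method to hist together with device=cuda. This method helps in building trees more efficiently by distributing the calculations across the GPU’s cores. In releases before 2.0 you can also use predictor=gpu_predictor to speed up the prediction phase once the model has been trained; newer releases choose the prediction device from the device parameter.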
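Because the GPU settings changed between major releases, a small helper can keep the two spellings in one place. The function name is made up for this sketch, and it only builds a parameter dictionary; it does not check that a GPU is actually present.

```python
def gpu_params(xgboost_version: str) -> dict:
    """Return GPU-related training parameters for the given XGBoost version."""
    major = int(xgboost_version.split(".")[0])
    if major >= 2:
        # 2.0 and later: the histogram method plus an explicit device.
        return {"tree_method": "hist", "device": "cuda"}
    # Before 2.0: dedicated GPU tree method and predictor.
    return {"tree_method": "gpu_hist", "predictor": "gpu_predictor"}


print(gpu_params("2.1.0"))
print(gpu_params("1.7.6"))
```

In practice you might call gpu_params(xgb.__version__) and merge the result into the rest of your training parameters.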

Keep in mind that GPU performance improves when the dataset is sufficiently large. Small datasets won’t fully exploit the power of the GPU, so use this method only when you’re dealing with big data. Also, be cautious of your GPU’s memory limits. Running out of GPU memory can slow down training, or worse, cause it to fail.

Controlling Model Complexity for Scalability

As your dataset grows, your model tends to grow with it. More rows and features can support deeper trees and more splits, which costs memory and training time and, on noisy data, can still lead to overfitting. Controlling model complexity is essential to ensure that your XGBoost model remains scalable and generalizes well to new data.

Start by adjusting the regularization parameters such as lambda and alpha. These parameters penalize overly complex models, encouraging the algorithm to prioritize simpler trees. By increasing these values, you can control overfitting, especially in high-dimensional datasets.

Additionally, adjust the min_child_weight parameter, which controls the minimum sum of instance weight needed in a child node. By increasing this value, you can limit the number of splits in the trees, reducing model complexity and speeding up training. This is particularly useful for very large datasets with a lot of noise.

Batching Data for Improved Processing

When working with massive datasets, loading the entire dataset into memory at once can be inefficient—or impossible. Instead, batching data is a more practical approach. XGBoost supports out-of-core computation, allowing the model to train on data that doesn’t fit into memory by splitting the data into smaller batches.

How you enable this depends on the XGBoost version. For text inputs such as LIBSVM files, you append a cache file name to the data path so XGBoost can page the data from disk. More recent releases let you supply a custom iterator (a subclass of xgboost.DataIter) that hands over one batch at a time. In both cases the batch size is something you control when preparing the data rather than a training parameter. It’s important to find the right batch size: too small, and the I/O overhead makes training inefficient; too large, and memory limits could be exceeded.

Batching by itself doesn’t change what the model learns: every boosting round still sees the full dataset, just read in pieces. If you want each tree to train on only a portion of the rows, use the subsample parameter, which can be combined with external memory.

The Role of Early Stopping in Large-Scale Training

Training XGBoost on large datasets can take considerable time, and sometimes the model may overfit before you realize it. That’s where early stopping comes in. Early stopping allows you to halt the training process once the model’s performance stops improving on the validation set, saving both time and computational resources.

To implement early stopping, set the early_stopping_rounds parameter. For example, if you set early_stopping_rounds=10, training will stop if the model doesn’t improve for 10 consecutive rounds. This method is particularly effective when dealing with massive datasets, as it prevents overtraining while reducing unnecessary iterations.

The beauty of early stopping is that it helps strike a balance between model complexity and computation time. You’ll also avoid spending hours on training, only to find that the additional rounds didn’t improve the model. It’s a must-have technique for optimizing both speed and performance, especially when you’re running distributed or GPU-accelerated XGBoost models.

Regularization Techniques to Improve Efficiency

Regularization is key to controlling model overfitting, especially when handling large datasets. XGBoost provides several regularization techniques to ensure that the model doesn’t become too complex, which can happen easily when you’re training on big data.

The two most critical regularization parameters are lambda (L2 regularization) and alpha (L1 regularization). Both act on the leaf weights of the trees: increasing them shrinks those weights and makes marginal splits harder to justify, which reduces the model’s complexity. This can lead to a simpler model that generalizes better.

Another powerful tool is gamma, which controls the minimum loss reduction required to make a further split on a leaf node. Increasing gamma reduces the model’s tendency to create unnecessary splits, thus decreasing both memory usage and training time.
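To see why raising lambda or gamma prunes splits, it can help to compute the split gain described in the original XGBoost paper (Chen & Guestrin, 2016). The function below is a simplified sketch of that formula: it ignores alpha, and the function and argument names are made up for this example rather than taken from the XGBoost API. The inputs are the sums of gradients (g) and Hessians (h) on each side of a candidate split.

```python
def split_gain(g_left, h_left, g_right, h_right, reg_lambda=1.0, gamma=0.0):
    """Gain of a candidate split; a split is only worth making if this is positive."""

    def score(g, h):
        # Structure score of a leaf with gradient sum g and Hessian sum h.
        return g * g / (h + reg_lambda)

    gain = score(g_left, h_left) + score(g_right, h_right)
    gain -= score(g_left + g_right, h_left + h_right)
    return 0.5 * gain - gamma


weak = split_gain(-4.0, 2.0, 6.0, 3.0, reg_lambda=1.0)
strong = split_gain(-4.0, 2.0, 6.0, 3.0, reg_lambda=10.0)
pruned = split_gain(-4.0, 2.0, 6.0, 3.0, reg_lambda=10.0, gamma=2.0)
print(weak, strong, pruned)
```

With the same gradient statistics, the gain shrinks as lambda grows, and adding gamma pushes it below zero, so the split would be rejected.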

Regularization doesn’t just make your model more efficient—it often leads to more robust models. The added constraints force the algorithm to focus on the most important patterns in the data, preventing it from being distracted by noise.

Handling Imbalanced Data Efficiently

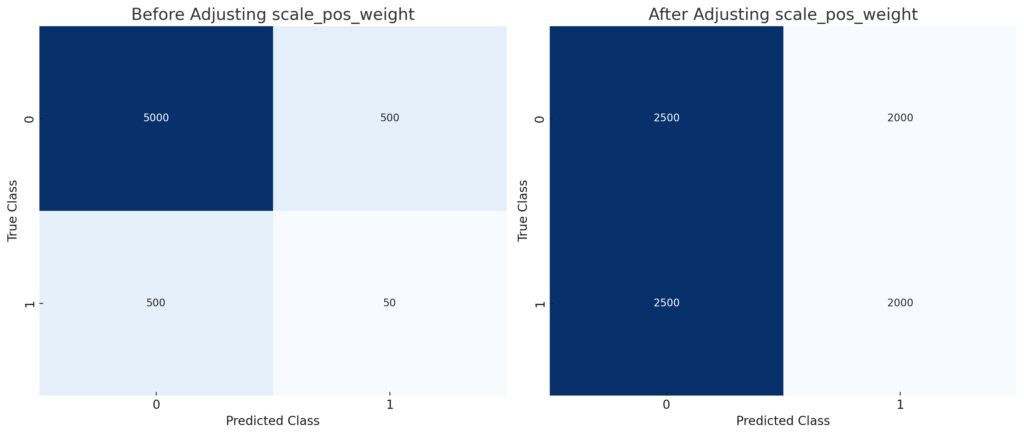

Large datasets often come with imbalanced classes, where one category dominates the others. This imbalance can drastically affect the performance of your XGBoost model, leading to biased predictions. To address this, XGBoost provides several built-in mechanisms that can help.

The scale_pos_weight parameter is your first line of defense. It helps to balance the positive and negative classes by assigning more weight to the minority class, making the model more sensitive to the underrepresented data. You can compute this weight as the ratio between negative and positive classes.
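The ratio is straightforward to compute from the labels. The sketch below uses a made-up label array with 950 negatives and 50 positives and places the result in a parameter dictionary.

```python
import numpy as np

# Illustrative labels: 950 negatives and 50 positives.
y = np.array([0] * 950 + [1] * 50)

n_pos = int((y == 1).sum())
n_neg = int((y == 0).sum())

# Negatives divided by positives.
scale_pos_weight = n_neg / n_pos

params = {
    "objective": "binary:logistic",
    "scale_pos_weight": scale_pos_weight,
}
print(params)
```

Here the ratio is 19.0, meaning each positive example counts roughly as much as 19 negatives during training.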

Another effective method is downsampling the majority class or upsampling the minority class, though this can be computationally expensive. An alternative approach is using the max_delta_step parameter, which controls the maximum step size of weight updates, making it especially useful in the case of imbalanced classes.

Finally, monitor metrics such as AUC-ROC (Area Under the Receiver Operating Characteristic Curve) rather than just accuracy. This helps you get a clearer picture of how well the model is performing across both classes, especially when one class is underrepresented.

Best Practices for Feature Selection in Big Data

When working with large datasets, feature selection becomes a crucial task. Too many features can increase model complexity and slow down training. Worse, irrelevant features can introduce noise, causing the model to perform poorly. To tackle this, focus on selecting the most impactful features to speed up training while maintaining accuracy.

Start with correlation analysis to identify highly correlated features. Removing redundant variables can make a significant difference, especially in high-dimensional data. Another method is using SHAP values (SHapley Additive exPlanations) to measure the importance of each feature. SHAP values allow you to rank features based on their contribution to the model’s predictions.
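A simple correlation filter can be sketched with NumPy. The function name, the 0.95 threshold, and the greedy keep-the-first strategy are assumptions for this example; other tie-breaking rules are equally reasonable.

```python
import numpy as np


def drop_correlated(X: np.ndarray, threshold: float = 0.95) -> list:
    """Return indices of columns to keep, skipping any column that is
    highly correlated with a column already kept."""
    corr = np.abs(np.corrcoef(X, rowvar=False))
    keep = []
    for j in range(X.shape[1]):
        if all(corr[j, k] <= threshold for k in keep):
            keep.append(j)
    return keep


rng = np.random.default_rng(0)
a = rng.normal(size=1_000)
b = rng.normal(size=1_000)
# Column 1 is a near-copy of column 0; column 2 is independent.
X = np.column_stack([a, a * 2.0 + rng.normal(scale=0.01, size=1_000), b])

kept = drop_correlated(X)
print(kept)  # [0, 2]
```

On very wide datasets the full correlation matrix can itself be expensive, so it may be worth computing it on a row sample first.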

Another effective technique is recursive feature elimination (RFE), which iteratively removes the least important features and retrains the model, helping you zero in on the most critical variables.

Ultimately, fewer but well-chosen features lead to faster, more efficient training and a model that generalizes better on unseen data.

Evaluating Model Performance at Scale

Evaluating model performance on large datasets requires more than just looking at the accuracy metric. Large-scale models demand more nuanced evaluation metrics that reflect the complexity of the data and the challenges of big data.

For classification problems, metrics like AUC-ROC, precision, and recall give a better sense of the model’s performance, especially when working with imbalanced datasets. For regression tasks, focus on RMSE (Root Mean Squared Error) and MAE (Mean Absolute Error) to get a clear picture of prediction accuracy.

When scaling models, it’s also important to use cross-validation to ensure the model’s performance holds up across different subsets of the data. For large datasets, performing k-fold cross-validation on every row can be computationally expensive, so consider validating on a stratified or random sample that preserves the class proportions instead of the entire dataset.
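One way to draw such a sample is to pick the same fraction of rows from every class. The function name and the 10% fraction below are assumptions for this sketch.

```python
import numpy as np


def stratified_sample(y: np.ndarray, fraction: float, seed: int = 0) -> np.ndarray:
    """Return sorted row indices containing `fraction` of each class."""
    rng = np.random.default_rng(seed)
    picked = []
    for cls in np.unique(y):
        idx = np.flatnonzero(y == cls)
        n = max(1, int(round(len(idx) * fraction)))
        picked.append(rng.choice(idx, size=n, replace=False))
    return np.sort(np.concatenate(picked))


# 9,000 negatives and 1,000 positives (illustrative labels).
y = np.array([0] * 9_000 + [1] * 1_000)
idx = stratified_sample(y, fraction=0.1)
print(len(idx), y[idx].mean())
```

The sample keeps the original 10% positive rate, so metrics computed on it stay comparable to those on the full data.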

Incorporating logging and monitoring tools such as MLflow can help track experiments, allowing you to keep an eye on performance metrics throughout the model training process.

That wraps up an in-depth look at optimizing XGBoost for large datasets! From leveraging distributed computing to handling imbalanced data and reducing memory footprint, these advanced techniques can significantly boost your model’s scalability and speed. Whether you’re working in a cloud environment or on a GPU-enabled machine, these strategies ensure XGBoost handles your large-scale challenges with efficiency and precision.

FAQs

1. What is XGBoost, and why is it popular for large datasets?

XGBoost is an efficient, scalable machine learning library designed for high performance in supervised learning tasks, particularly classification and regression problems. Its popularity stems from its use of gradient boosting, which builds trees sequentially, with each new tree correcting the errors of the ones before it. XGBoost is well suited to large datasets because it supports multithreading, distributed computing, and GPU acceleration.

2. How do I speed up XGBoost training on large datasets?

To speed up XGBoost on large datasets, you can:

- Enable parallel tree construction by configuring nthread.
- Use GPU acceleration (tree_method=gpu_hist).
- Leverage distributed computing frameworks like Dask.
- Use early stopping to avoid unnecessary iterations.
- Switch to faster tree-building algorithms like approx or hist.

3. What is the best tree method for large datasets in XGBoost?

For large datasets, the hist (histogram-based) and gpu_hist (GPU-accelerated) methods are recommended. These methods speed up tree construction by using approximate techniques for finding the best split, rather than the slower exact method. gpu_hist is ideal when you have a GPU, as it further accelerates the process by taking advantage of parallel GPU cores.

4. How can I handle memory issues with XGBoost on big data?

Memory management is crucial when working with large datasets. You can:

- Limit the depth of trees using the max_depth parameter.
- Use out-of-core (external memory) computation so that data is read from disk in batches.
- Reduce the number of features or perform feature selection to limit the data complexity.
- Batch process the data using smaller chunks to avoid overwhelming memory.

5. How do I handle imbalanced datasets in XGBoost?

To handle imbalanced datasets, you can:

- Adjust the scale_pos_weight parameter to give more importance to the minority class.
- Use evaluation metrics like AUC-ROC or F1 Score, which focus on class balance rather than simple accuracy.
- Consider techniques like downsampling the majority class or upsampling the minority class.
- Use max_delta_step to limit weight updates and reduce bias.

6. Can I use XGBoost with distributed computing?

Yes, XGBoost supports distributed computing. By integrating with Dask or Apache Spark, you can train XGBoost models across multiple machines. This approach is especially useful when working with datasets too large for a single machine.

7. How do I optimize hyperparameters for large datasets?

For large datasets, hyperparameter tuning can be computationally expensive. Instead of a full grid search, start with a random search or Bayesian optimization to narrow down the parameter space. Key parameters to focus on include:

- learning_rate: Controls how much the model adjusts after each boosting round.
- max_depth: Limits tree size to prevent overfitting.
- subsample: Helps reduce training time by using a subset of data.

8. What is early stopping, and how does it help with large datasets?

Early stopping is a technique where the training process halts once the model’s performance on a validation set stops improving. This is crucial for large datasets, as it prevents overfitting and reduces unnecessary computation. Set the early_stopping_rounds parameter to specify how many rounds of no improvement will trigger early stopping.

9. When should I use GPU acceleration in XGBoost?

GPU acceleration is ideal when you’re working with large datasets that require intense computation. By using the gpu_hist tree method, XGBoost can parallelize tasks across the GPU cores, speeding up the training process by a factor of up to 10x compared to CPU-only methods. However, it’s most effective when the dataset is large enough to justify the overhead of transferring data to the GPU.

10. What are SHAP values, and how do they help in feature selection?

SHAP values (SHapley Additive exPlanations) provide a way to measure the importance of each feature in a model’s predictions. They help you understand which features contribute the most to model accuracy, allowing for more informed feature selection. By focusing on the most impactful features, you can reduce the complexity of the model and speed up training.

11. How can I evaluate XGBoost performance on large datasets?

Evaluating performance on large datasets requires a mix of evaluation metrics and careful cross-validation. Use AUC-ROC, precision, recall, or F1 score for classification tasks, especially when dealing with imbalanced classes. For regression problems, focus on RMSE or MAE. Use cross-validation with random sampling for large datasets to avoid excessive computational costs.

Resources

Official Documentation & Tools:

- XGBoost Official Documentation:

XGBoost’s official documentation offers a detailed guide on installation, parameters, and advanced features like GPU support and distributed computing.

- Dask for Distributed Computing:

Explore Dask’s official documentation to learn how to scale XGBoost models across multiple machines.

Tutorials:

- Handling Imbalanced Data:

A great guide on managing imbalanced datasets with XGBoost can be found on Analytics Vidhya, with a useful tutorial on how to adjust weights and avoid overfitting.

- GPU Acceleration in XGBoost:

NVIDIA’s developer blog explains how to set up and leverage GPU support for XGBoost.

Visualization Tools:

- SHAP (SHapley Additive exPlanations):

SHAP values are incredibly useful for feature importance analysis. Visit the SHAP documentation to understand how to visualize and interpret SHAP plots.

Books & Papers:

- “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron:

This book includes practical exercises and techniques for optimizing large-scale models, including XGBoost.

- Chen, T. & Guestrin, C. (2016). XGBoost: A Scalable Tree Boosting System:

Read the original research paper for an in-depth understanding of how XGBoost was developed and its scalability features.