Understanding the Basics of CNNs

What Are CNNs?

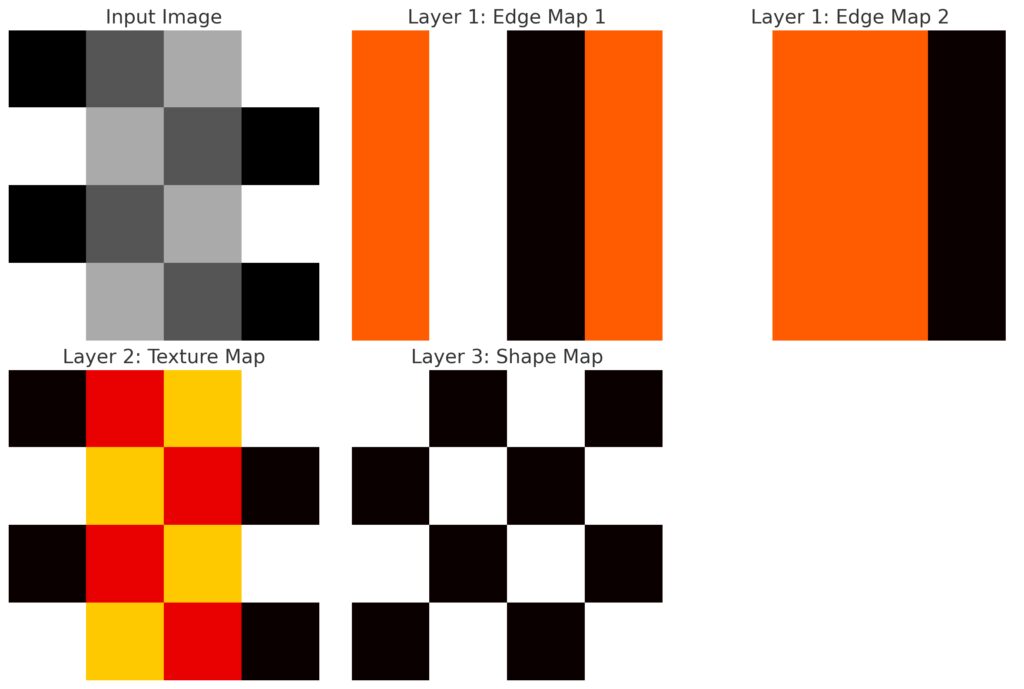

Convolutional Neural Networks (CNNs) are a specialized type of artificial neural network designed to process grid-structured data, particularly images. Unlike fully connected networks, CNNs learn spatial hierarchies of features, making them highly effective at detecting patterns like edges, shapes, and textures.

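Why are they so effective? They reduce computational load through local connectivity and weight sharing: each filter looks at only a small patch of the image at a time and reuses the same weights at every position, so a CNN needs far fewer parameters than a network that connects every pixel to every neuron. By stacking such layers, CNNs build up from raw pixels to higher-level patterns like curves, textures, and object parts, an approach loosely inspired by how the brain's visual cortex processes visual data.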
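A quick parameter count makes the weight-sharing argument concrete. This is a back-of-the-envelope sketch that assumes a 224×224 RGB input and 64 outputs in both cases (64 units for the fully connected layer, 64 filters of size 3×3 for the convolutional layer); these sizes are illustrative assumptions, not taken from a particular model.

```python
# Illustrative sizes (assumptions): 224x224 RGB input, 64 outputs per layer.

def dense_params(in_h, in_w, in_c, units):
    """Weights + biases for a fully connected layer over a flattened image."""
    return in_h * in_w * in_c * units + units

def conv_params(kernel, in_c, filters):
    """Weights + biases for a conv layer: one kernel x kernel x in_c filter per output.
    Independent of image height and width, because the weights are shared."""
    return kernel * kernel * in_c * filters + filters

dense = dense_params(224, 224, 3, 64)
conv = conv_params(3, 3, 64)
print(f"fully connected: {dense:,} parameters")  # fully connected: 9,633,856 parameters
print(f"3x3 convolution: {conv:,} parameters")   # 3x3 convolution: 1,792 parameters
```

The convolutional count does not depend on the image's height or width at all, because the same 3×3×3 weights are reused at every position.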

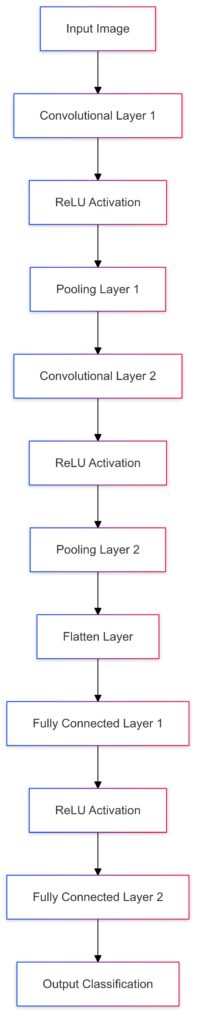

Layers of a CNN Explained

CNNs consist of several layers, each with a specific function:

- Convolutional Layers: These layers extract features using filters or kernels. Filters slide over the image, identifying aspects like edges or gradients.

- Pooling Layers: These layers compress information. For example, max pooling keeps only the largest value in each small region (such as a 2×2 window), shrinking the data size while preserving the strongest feature responses.

- Fully Connected Layers: These layers combine the features extracted by the previous layers and make the final prediction. This is the stage where the model decides whether it “sees” a cat, a dog, or another object.
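Max pooling is simple enough to write out directly. A minimal sketch in plain Python, assuming a single-channel feature map stored as nested lists, a 2×2 window, stride 2, and no padding (frameworks provide optimized versions, such as PyTorch's `torch.nn.MaxPool2d`).

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Max pooling over a 2D list of numbers (no padding).

    Each output value is the largest value in a size x size window; windows
    move by `stride`, so size=2, stride=2 halves the height and width.
    """
    h, w = len(feature_map), len(feature_map[0])
    out = []
    for i in range(0, h - size + 1, stride):
        row = []
        for j in range(0, w - size + 1, stride):
            window = [feature_map[i + m][j + n] for m in range(size) for n in range(size)]
            row.append(max(window))
        out.append(row)
    return out

# Hypothetical 4x4 feature map.
feature_map = [
    [1, 3, 2, 0],
    [4, 6, 1, 1],
    [0, 2, 9, 5],
    [1, 1, 3, 7],
]
print(max_pool2d(feature_map))  # [[6, 2], [2, 9]]
```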

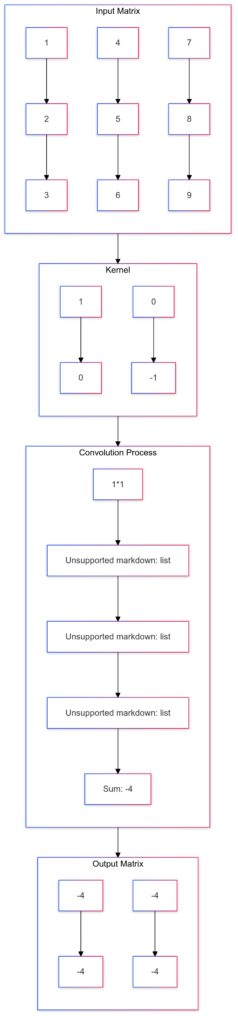

The Mathematics Behind Convolution

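The convolution operation is at the heart of CNNs. Picture it as sliding a small grid of weights (the kernel) over an image. At each position, the pixel values under the kernel are multiplied by the corresponding kernel weights and summed into a single number; repeating this at every position produces a feature map.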

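Why does this matter? A kernel produces large outputs where the image contains the pattern its weights encode (an edge, say) and small outputs elsewhere, so the feature map highlights where that pattern occurs. With a stride of 1, successive kernel positions overlap, so every pixel contributes to several outputs and no region is skipped.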
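The sliding-window computation can be sketched in plain Python. This is a minimal sketch, assuming stride 1 and no padding; the kernel values are a hypothetical hand-picked edge detector, whereas a real CNN learns its kernels during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (stride 1, no padding) on nested lists.

    Like most deep-learning libraries, this computes cross-correlation:
        out[i][j] = sum over m, n of image[i + m][j + n] * kernel[m][n]
    (the kernel is not flipped).
    """
    kh, kw = len(kernel), len(kernel[0])
    oh = len(image) - kh + 1
    ow = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + m][j + n] * kernel[m][n]
                for m in range(kh) for n in range(kw))
            for j in range(ow)
        ]
        for i in range(oh)
    ]

# A 4x6 image: dark (0) on the left half, bright (1) on the right half.
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hypothetical vertical-edge kernel: responds to left-to-right brightness increases.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

for row in conv2d(image, kernel):
    print(row)  # [0, 3, 3, 0] (printed twice)
```

The output is large only where brightness changes and zero over the flat regions on either side, which is the sense in which a kernel "highlights" edges.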

Activation Functions in CNNs

Activation functions introduce non-linearity to CNNs, enabling them to learn complex patterns. ReLU (Rectified Linear Unit) is the most common, as it keeps computations efficient by setting negative values to zero while retaining positive values.

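Other options like Sigmoid and Tanh exist, but ReLU dominates because it is simple and helps mitigate the vanishing gradient problem: its gradient is exactly 1 for positive inputs, whereas sigmoid and tanh saturate, and their gradients approach zero for large positive or negative inputs.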
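The difference in gradient behavior is easy to check numerically. A small sketch comparing the derivatives of ReLU, sigmoid, and tanh at a few inputs; treating ReLU's derivative at exactly 0 as 0 is a convention assumed here.

```python
import math

def relu(x):
    return max(0.0, x)

def relu_grad(x):
    # 1 for positive inputs, 0 otherwise (0 chosen by convention at x == 0).
    return 1.0 if x > 0 else 0.0

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x == 0

def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2  # peaks at 1.0 when x == 0

for x in (-5.0, 0.0, 5.0):
    print(f"x={x:+.0f}  relu'={relu_grad(x):.3f}  "
          f"sigmoid'={sigmoid_grad(x):.3f}  tanh'={tanh_grad(x):.4f}")
```

At x = ±5 the sigmoid and tanh derivatives are already close to zero, and sigmoid's never exceeds 0.25, while ReLU's stays at 1 for every positive input. Multiplied across many layers, those small factors are what make gradients vanish.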

Overfitting and Regularization

Overfitting occurs when a CNN memorizes the training data instead of learning to generalize. To combat this:

- Dropout Layers: Randomly deactivate neurons during training to prevent reliance on specific patterns.

- Data Augmentation: Expands datasets with transformations like flipping or rotating images.

- Weight Decay: Penalizes large weights (typically by adding an L2 penalty to the loss), discouraging overly complex models.
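In plain gradient descent, an L2 penalty of (λ/2)·Σw² adds λ·w to each weight's gradient, so every update also pulls the weights toward zero. A minimal sketch, assuming plain SGD with an illustrative learning rate of 0.1 and λ = 0.01 (PyTorch's `torch.optim.SGD`, for example, exposes λ as its `weight_decay` argument).

```python
def sgd_step(weights, grads, lr=0.1, weight_decay=0.01):
    """One plain SGD step with L2 weight decay (defaults are illustrative).

    The penalty (weight_decay / 2) * sum(w**2) adds weight_decay * w to each
    gradient, so every update also nudges each weight toward zero.
    """
    return [w - lr * (g + weight_decay * w) for w, g in zip(weights, grads)]

weights = [2.0, -1.0]
# With a zero data gradient, decay alone multiplies each weight by (1 - lr * weight_decay).
print(sgd_step(weights, [0.0, 0.0]))
```

With no data gradient at all, each step simply multiplies the weights by 1 - lr·λ = 0.999; during real training this steady shrinkage competes with the data gradient, so only weights that genuinely reduce the loss stay large.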

Building Better CNNs

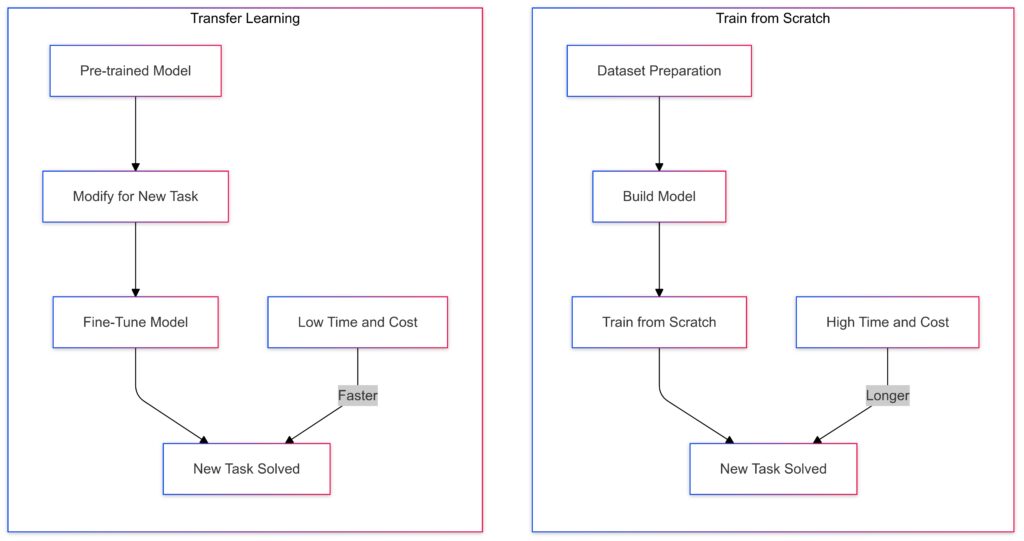

Pre-Trained Models and Transfer Learning

Pre-trained models like VGG, ResNet, and MobileNet offer a head start by leveraging networks trained on large datasets like ImageNet. Instead of starting from scratch, you can fine-tune these models for your specific task, saving significant time and resources.

For instance, in medical imaging, transfer learning enables you to adapt a general-purpose model to detect abnormalities in X-rays without needing millions of labeled images. This approach is powerful for tasks with limited data availability.

Fine-Tuning CNN Hyperparameters

Optimizing CNN performance often involves tweaking hyperparameters like:

- Kernel size: Affects feature resolution. Larger kernels detect broader patterns, while smaller kernels focus on finer details.

- Stride: Determines how much a kernel shifts during convolution. A larger stride reduces the feature map size, speeding up computations but potentially losing details.

- Padding: Controls how edges are handled. “Same” padding adds a border (usually of zeros) so that, at stride 1, the output keeps the input's spatial size, while “valid” padding adds none and shrinks the output.

Experimentation, guided by search techniques such as grid search or Bayesian optimization, helps find good configurations.
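The combined effect of kernel size, stride, and padding on the feature map can be computed with the standard output-size formula. A minimal sketch, assuming square kernels, the same zero padding on every side, and no dilation.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Output size along one spatial dimension of a convolution or pooling layer:
    floor((n + 2 * padding - kernel) / stride) + 1
    """
    return (n + 2 * padding - kernel) // stride + 1

def same_padding(kernel):
    """Zero padding per side that keeps the size unchanged at stride 1 (odd kernels)."""
    return (kernel - 1) // 2

print(conv_output_size(32, 3))                           # valid padding: 30
print(conv_output_size(32, 3, padding=same_padding(3)))  # same padding: 32
print(conv_output_size(32, 3, stride=2, padding=1))      # stride 2: 16
print(conv_output_size(224, 7, stride=2, padding=3))     # 112
```

The last line reproduces a common network stem: a 7×7 convolution with stride 2 and padding 3 halves a 224-pixel input to 112.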

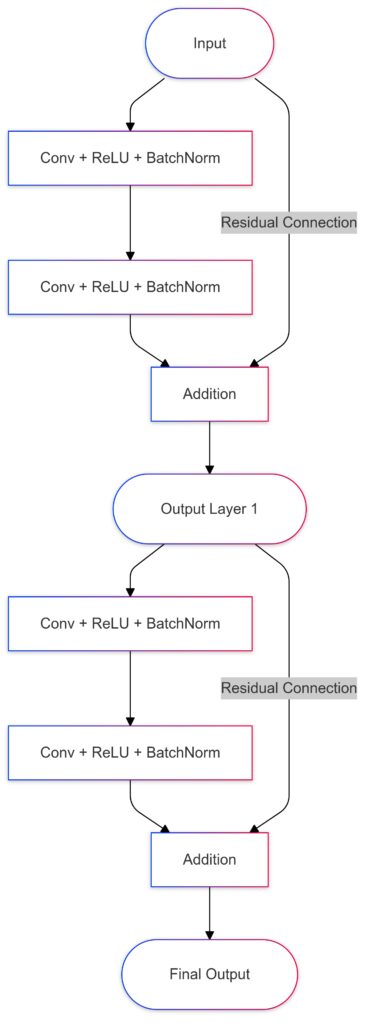

Training Deep CNNs

Training deeper networks, such as ResNets with hundreds of layers, requires strategies to prevent issues like vanishing gradients:

- Batch Normalization: Standardizes layer inputs, improving stability.

- Residual Connections: Skip connections in ResNet add a block's input directly to its output, giving gradients a direct path back through the network so they are far less likely to vanish.

- Dropout Layers: Reduce overfitting by randomly deactivating neurons during training.

Additionally, using GPUs or distributed training significantly speeds up computations, enabling larger and deeper models.

Visualization and Interpretation

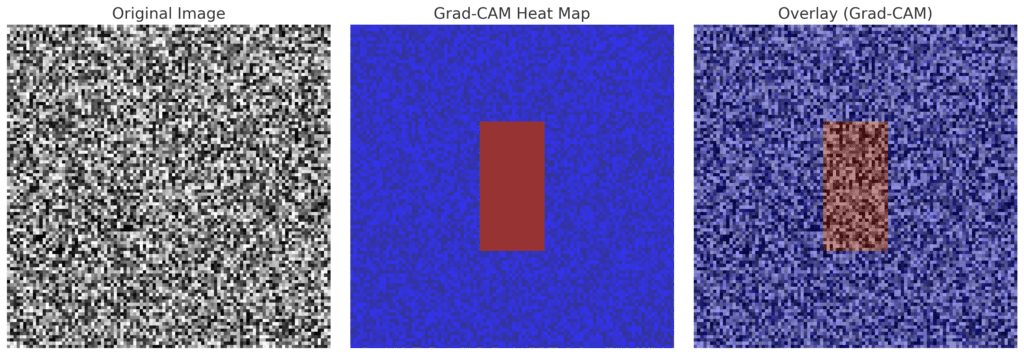

Understanding what a CNN “sees” is crucial for trust and debugging. Tools like Grad-CAM (Gradient-weighted Class Activation Mapping) weight the feature maps of the last convolutional layer by the gradients of the predicted class score, producing a heatmap of the image regions that most influenced a prediction.

For example, in medical imaging, Grad-CAM can highlight regions of an X-ray linked to a tumor prediction, helping radiologists validate AI findings. This kind of interpretability makes CNNs less of a black box.
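The core Grad-CAM computation can be sketched once the activations and gradients are available. This minimal sketch assumes you have already run a forward and backward pass in your framework and extracted both as nested lists; the 2×2 values below are hypothetical. In practice the resulting heatmap is then upsampled to the input size and overlaid on the image.

```python
def grad_cam(feature_maps, gradients):
    """Combine K feature maps (each H x W) into one Grad-CAM heatmap.

    feature_maps[k][i][j]: activation A^k at (i, j) in the last conv layer.
    gradients[k][i][j]:    d(class score) / dA^k at (i, j), from backpropagation.
    """
    h, w = len(feature_maps[0]), len(feature_maps[0][0])
    # Channel weight alpha_k: global average of that channel's gradients.
    alphas = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    # Weighted sum of the feature maps, then ReLU to keep only positive influence.
    return [
        [max(0.0, sum(a * fm[i][j] for a, fm in zip(alphas, feature_maps)))
         for j in range(w)]
        for i in range(h)
    ]

# Two hypothetical 2x2 feature maps and their gradients.
A = [[[1.0, 0.0], [0.0, 0.0]],        # channel 0 fires top-left
     [[0.0, 0.0], [0.0, 1.0]]]        # channel 1 fires bottom-right
dA = [[[0.5, 0.5], [0.5, 0.5]],       # channel 0 raises the class score
      [[-0.5, -0.5], [-0.5, -0.5]]]   # channel 1 lowers it
print(grad_cam(A, dA))  # [[0.5, 0.0], [0.0, 0.0]]
```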

Architectures Beyond Basic CNNs

Figure: Residual Network (ResNet) architecture, with skip connections that bypass layers to enhance gradient flow and improve training efficiency.

Basic CNNs have limitations, such as struggling with vanishing gradients or computational inefficiency. Modern architectures address these issues:

- ResNet: Introduced residual connections to train deeper networks effectively.

- Inception: Applies several kernel sizes (such as 1×1, 3×3, and 5×5) in parallel within one module and concatenates the results, extracting features at multiple scales.

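- DenseNet: Within each dense block, connects every layer to all subsequent layers, so each layer receives the concatenated feature maps of all preceding layers, maximizing information flow and feature reuse.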

These advancements push the boundaries of what CNNs can achieve in complex tasks.
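Because DenseNet concatenates (rather than adds) earlier feature maps, the number of channels grows linearly inside a dense block. A sketch of that bookkeeping, using 64 input channels, a growth rate of 32, and 6 layers as example values.

```python
def densenet_block_channels(k0, growth_rate, num_layers):
    """Input channels seen by each layer of a dense block, plus the block's output.

    Layer l (1-indexed) receives k0 + growth_rate * (l - 1) channels: the block
    input concatenated with the feature maps of every preceding layer.
    """
    inputs = [k0 + growth_rate * (l - 1) for l in range(1, num_layers + 1)]
    output = k0 + growth_rate * num_layers
    return inputs, output

# Example values (assumptions): 64 input channels, growth rate 32, 6 layers.
inputs, output = densenet_block_channels(k0=64, growth_rate=32, num_layers=6)
print(inputs)  # [64, 96, 128, 160, 192, 224]
print(output)  # 256
```

Each layer only adds `growth_rate` new channels, which keeps individual layers narrow even though every layer can see all earlier features.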

Real-World Applications of CNNs

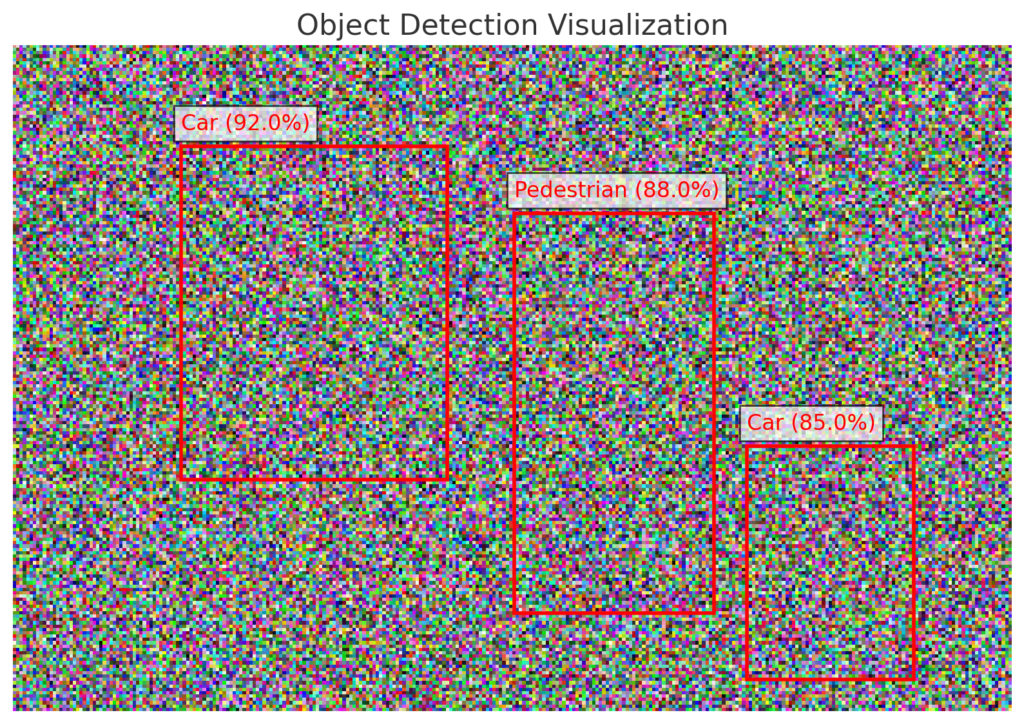

Image Recognition and Object Detection

CNNs shine in image recognition tasks, enabling machines to identify objects, faces, and even emotions. Object detection architectures such as YOLO (You Only Look Once) and Faster R-CNN go further, locating multiple objects in a single image with bounding boxes and high accuracy.

Real-world use cases:

- Security: Facial recognition for surveillance systems.

- Retail: Identifying items in inventory management.

- Healthcare: Detecting abnormalities in medical scans.
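Detectors like YOLO and Faster R-CNN output bounding boxes, and a standard way to score how well a predicted box matches a labeled one is intersection over union (IoU), which is also used in non-maximum suppression to discard duplicate detections. A minimal sketch, assuming axis-aligned boxes in (x1, y1, x2, y2) pixel coordinates; the two boxes are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2), x2 > x1, y2 > y1."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)  # 0 if the boxes do not overlap
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    return inter / (area_a + area_b - inter)

predicted = (0, 0, 10, 10)      # hypothetical detector output
ground_truth = (5, 0, 15, 10)   # hypothetical label, shifted right by half a box
print(iou(predicted, ground_truth))  # 0.3333333333333333
```

An IoU of 1.0 means a perfect match; many benchmarks count a detection as correct only above a threshold such as 0.5.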

Natural Language Processing with CNNs

While traditionally used for images, CNNs also perform well on text-related tasks, where they slide one-dimensional filters over sequences of word embeddings:

- Text classification: Spam detection, sentiment analysis, and topic identification.

- Named Entity Recognition (NER): Identifying names, places, and other specific terms.

For instance, in sentiment analysis, a filter spanning n consecutive words acts as an n-gram detector, picking up phrases such as “not good” that signal tone. This makes CNNs well suited to analyzing social media trends or customer feedback.
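The n-gram idea can be sketched with a single width-2 filter sliding over a sentence, followed by max-over-time pooling, which keeps the filter's strongest response anywhere in the sentence. The two-dimensional word vectors and filter weights below are hypothetical hand-picked values; a real model learns embeddings with hundreds of dimensions and many filters of several widths.

```python
def text_conv_feature(embeddings, kernel):
    """Slide one width-n filter over a sentence, then max-pool over time.

    embeddings: list of word vectors (one per token, all of length d).
    kernel:     n rows of d weights, so each position scores one n-gram.
    Returns the filter's strongest n-gram response and its start position.
    """
    n = len(kernel)
    scores = []
    for start in range(len(embeddings) - n + 1):
        window = embeddings[start:start + n]
        score = sum(w * x for krow, vec in zip(kernel, window)
                    for w, x in zip(krow, vec))
        scores.append(max(0.0, score))  # ReLU
    best = max(range(len(scores)), key=scores.__getitem__)  # max-over-time pooling
    return scores[best], best

# Hypothetical 2D "embeddings": dim 0 ~ negation, dim 1 ~ positive sentiment.
vocab = {"the": [0.0, 0.0], "movie": [0.0, 0.1], "was": [0.0, 0.0],
         "not": [1.0, 0.0], "good": [0.0, 1.0]}
sentence = ["the", "movie", "was", "not", "good"]
# Hypothetical bigram filter that fires on "negation followed by a positive word".
not_good = [[1.0, 0.0],
            [0.0, 1.0]]
score, pos = text_conv_feature([vocab[t] for t in sentence], not_good)
print(score, sentence[pos:pos + 2])  # 2.0 ['not', 'good']
```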

Medical Imaging Advancements

CNNs are transforming healthcare, particularly medical imaging. By analyzing complex patterns in scans, they can support earlier detection of disease:

- Tumor detection: Spotting malignancies in CT or MRI scans.

- X-ray analysis: Diagnosing conditions like pneumonia or fractures.

In some studies, CNNs have matched or exceeded specialist performance on narrowly defined diagnostic tasks, and they can deliver results much faster. Frameworks like TensorFlow and PyTorch power many of these clinical AI systems.

Autonomous Vehicles

CNNs form the backbone of self-driving car vision systems. These systems process massive amounts of visual data in real-time, identifying lanes, road signs, pedestrians, and obstacles.

Key components:

- Lane detection using segmentation techniques.

- Object detection to avoid collisions.

- Behavior prediction of surrounding vehicles.

Companies like Tesla and Waymo use CNNs in their perception systems to help vehicles navigate complex driving environments.

Creative and Fun Applications

CNNs also fuel artistic and recreational breakthroughs:

- Style transfer: Applying artistic styles (e.g., Van Gogh) to photos using deep learning.

- AR filters: Face and object tracking in apps like Instagram and Snapchat.

- Gaming: Enhancing textures and animations in virtual environments.

These playful uses highlight the versatility of CNNs in blending art, entertainment, and technology.
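In the neural style transfer method of Gatys et al., an image's “style” is summarized by Gram matrices of CNN feature maps: correlations between channels, summed over all positions. A minimal sketch, assuming the feature maps have already been extracted from a pretrained CNN and flattened per channel; the toy values are hypothetical, and normalization conventions vary between implementations.

```python
def gram_matrix(features):
    """Gram matrix of C feature channels, each flattened to H*W activations.

    G[a][b] = (1 / (H*W)) * sum over positions p of features[a][p] * features[b][p]
    """
    positions = len(features[0])
    return [[sum(x * y for x, y in zip(fa, fb)) / positions for fb in features]
            for fa in features]

# Hypothetical 2-channel feature maps over 4 positions (already flattened).
F = [[1, 0, 1, 0],
     [1, 1, 0, 0]]
print(gram_matrix(F))  # [[0.5, 0.25], [0.25, 0.5]]

# Reversing the positions (the same way in every channel) moves the features
# around the image but leaves the style statistics untouched.
F_moved = [row[::-1] for row in F]
print(gram_matrix(F_moved) == gram_matrix(F))  # True
```

Because positions are summed away, the Gram matrix records which features co-occur (textures, brushstrokes) rather than where they appear, which is why matching it transfers style without copying layout.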

With this, we’ve covered both the fundamentals and advanced applications of CNNs. Let’s summarize everything in the conclusion!

Conclusion: Unlocking the Power of CNNs

Convolutional Neural Networks (CNNs) have redefined how machines perceive and interpret visual and structured data. From their layered architecture to advanced applications like autonomous vehicles and medical imaging, CNNs showcase incredible versatility and efficiency.

Key takeaways:

- CNNs are loosely inspired by how the visual cortex processes information, and they excel in tasks like image recognition and object detection.

- Modern techniques such as transfer learning, hyperparameter tuning, and advanced architectures like ResNet and DenseNet push their capabilities further.

- Beyond technical tasks, CNNs power creative solutions, from style transfer to AR innovations.

Their blend of precision, scalability, and adaptability positions CNNs as a cornerstone of modern AI, paving the way for continued breakthroughs in both industry and academia.

Stay curious, explore more, and dive deeper into the world of CNNs to harness their full potential!

FAQs

Can CNNs be used outside image processing?

Absolutely! CNNs are effective in various domains:

- Text classification: Sentiment analysis in customer reviews.

- Audio processing: Identifying speech patterns or environmental sounds.

- Time-series data: Analyzing stock market trends or sensor data.

For example, a CNN might process spectrograms (visual representations of sound) to recognize spoken words in an audio file.

How do modern architectures like ResNet improve CNNs?

ResNet introduces residual connections: each block learns a residual function F(x) and outputs F(x) + x, so the input can skip past the block's layers. This mitigates the vanishing gradient problem and makes much deeper networks trainable.

In image classification, ResNet models are known for their ability to outperform simpler CNNs, identifying subtle patterns like textures in animal fur or fine details in industrial defect detection.

Is transfer learning useful for small datasets?

Yes, transfer learning is especially beneficial when data is limited. By starting with a pre-trained model, you can adapt its knowledge to your dataset.

For instance, starting from a ResNet pre-trained on ImageNet, you could quickly fine-tune it to classify medical X-rays without requiring millions of labeled images.

What are some practical tools for building CNNs?

Popular frameworks include:

- TensorFlow: Offers flexibility for custom architectures.

- PyTorch: Preferred for research due to its dynamic computation graph.

- Keras: Ideal for beginners with its intuitive API.

These tools provide pre-built functions for layers, training, and visualization, making it easy to implement CNNs for projects like object detection or text recognition.

Can CNNs make mistakes?

Yes, like all AI models, CNNs can make errors, especially when:

- Trained on biased or insufficient data.

- Exposed to adversarial examples, such as slightly altered images designed to trick the network.

For example, a CNN trained on urban images might misclassify a rural photo because of unfamiliar textures. Ensuring high-quality, diverse data reduces these errors.

How are feature maps generated in CNNs?

Feature maps are created during the convolution operation, where a kernel (or filter) slides across the input image, performing element-wise multiplication and summing the results. This process extracts specific features, such as edges, textures, or patterns.

For example, if you input an image of a dog, early layers may detect edges and curves, while deeper layers focus on the dog’s fur texture or eye shapes.

Why is data augmentation important for CNNs?

Data augmentation artificially increases the diversity of the training dataset by applying transformations like rotation, flipping, zooming, and cropping. It helps:

- Prevent overfitting by exposing the model to varied examples.

- Improve robustness to variations in input data.

For instance, in training a CNN for face recognition, augmenting with flipped or rotated images helps the model perform well across different face orientations.
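A few common augmentations can be written out on a small nested-list image. This is a minimal sketch for illustration, assuming a single-channel image and hypothetical pixel values; libraries such as torchvision (`RandomHorizontalFlip`, `RandomRotation`, `RandomCrop`) provide production versions that also work on multi-channel tensors.

```python
import random

def hflip(img):
    """Mirror an image left to right."""
    return [row[::-1] for row in img]

def rot90(img):
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def random_crop(img, size, rng):
    """Cut out a random size x size patch (size must not exceed the image)."""
    top = rng.randint(0, len(img) - size)
    left = rng.randint(0, len(img[0]) - size)
    return [row[left:left + size] for row in img[top:top + size]]

img = [[1, 2],
       [3, 4]]
print(hflip(img))  # [[2, 1], [4, 3]]
print(rot90(img))  # [[3, 1], [4, 2]]

rng = random.Random(0)  # seeded so the crop is reproducible
big = [[4 * r + c for c in range(4)] for r in range(4)]  # hypothetical 4x4 image
patch = random_crop(big, 3, rng)
print(len(patch), len(patch[0]))  # 3 3
```

Each transform keeps the label unchanged while presenting the network with a different arrangement of pixels, which is what discourages memorization.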

What’s the difference between padding types in CNNs?

Padding controls how the edges of an image are handled during convolution:

- Same padding: Adds a border of zeros around the image so that, at stride 1, the output keeps the input dimensions.

- Valid padding: Only processes the part of the image where the kernel fits fully, reducing dimensions.

In tasks like medical imaging, same padding is useful to retain fine details near the image edges, such as a tumor’s boundary.
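Same padding can be sketched by surrounding the image with zeros before a valid convolution. A minimal sketch, assuming a one-pixel border (the right amount for a 3×3 kernel at stride 1) and a hypothetical 2×2 image.

```python
def zero_pad(img, p):
    """Surround a 2D image (nested lists) with p rings of zeros."""
    width = len(img[0]) + 2 * p
    padded = [[0] * width for _ in range(p)]
    padded += [[0] * p + list(row) + [0] * p for row in img]
    padded += [[0] * width for _ in range(p)]
    return padded

img = [[5, 6],
       [7, 8]]
for row in zero_pad(img, 1):
    print(row)
# [0, 0, 0, 0]
# [0, 5, 6, 0]
# [0, 7, 8, 0]
# [0, 0, 0, 0]
```

A 3×3 kernel fits the padded 4×4 grid in 2×2 positions, matching the original size, and edge pixels such as 5 can now sit at the center of a window.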

How do CNNs handle color images versus grayscale images?

Color images have three channels (RGB), while grayscale images have only one. Each kernel has the same depth as its input (three channels for an RGB image in the first layer), slides across height and width only, and sums its responses over all channels to produce a single 2D feature map.

For example, in an RGB image of a sunset, one kernel might weight the red channel heavily to pick out the sun, while another emphasizes the blue channel for the sky. For grayscale images, each first-layer kernel has a depth of one, so it has a third as many weights as its RGB counterpart.
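The channel dimension can be sketched by extending single-channel convolution with a sum over channels. A minimal sketch, assuming channels-first nested lists, stride 1, and no padding; the 1×1 kernel and pixel values are hypothetical and chosen only to keep the arithmetic small.

```python
def conv2d_multichannel(image, kernel):
    """Apply one filter to a C-channel image ('valid', stride 1).

    image[c][i][j] and kernel[c][m][n]: the kernel has the same depth C as the
    input, slides only over height and width, and sums across all channels,
    so one filter yields one 2D feature map.
    """
    channels = len(image)
    kh, kw = len(kernel[0]), len(kernel[0][0])
    oh = len(image[0]) - kh + 1
    ow = len(image[0][0]) - kw + 1
    return [[sum(image[c][i + m][j + n] * kernel[c][m][n]
                 for c in range(channels) for m in range(kh) for n in range(kw))
             for j in range(ow)]
            for i in range(oh)]

# Hypothetical 2x2 RGB image (channels first: red, green, blue).
rgb = [
    [[1, 1], [1, 1]],  # red
    [[0, 0], [0, 0]],  # green
    [[1, 0], [0, 1]],  # blue
]
# Hypothetical 1x1 filter spanning all three channels: +red, -blue.
kernel = [[[1.0]], [[0.0]], [[-1.0]]]
print(conv2d_multichannel(rgb, kernel))  # [[0.0, 1.0], [1.0, 0.0]]
```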

What role does batch normalization play in CNNs?

Batch normalization standardizes each layer's inputs using the mean and variance of the current mini-batch, then applies a learnable scale and shift. This stabilizes training, speeds up convergence, and reduces sensitivity to initialization.

For instance, when training a CNN to classify handwritten digits, batch normalization keeps each layer's inputs on a consistent scale even as earlier layers' weights change, which typically allows higher learning rates and faster convergence.
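The core computation for a single channel can be sketched as follows. This is a minimal, training-mode sketch with hypothetical activation values: in a CNN the mean and variance are computed per channel over the batch and all spatial positions, and at inference time frameworks use running averages collected during training instead of batch statistics.

```python
import math

def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization for one channel.

    Standardize with the mini-batch mean and variance, then apply the
    learnable scale (gamma) and shift (beta). eps avoids division by zero.
    """
    mean = sum(batch) / len(batch)
    var = sum((x - mean) ** 2 for x in batch) / len(batch)
    return [gamma * (x - mean) / math.sqrt(var + eps) + beta for x in batch]

activations = [2.0, 4.0, 6.0, 8.0]  # hypothetical values for one channel
normed = batch_norm(activations)
print([round(x, 3) for x in normed])  # [-1.342, -0.447, 0.447, 1.342]
```

Because gamma and beta are learned, the network can still undo the standardization where a different scale is useful.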

Are CNNs sensitive to input resolution?

Yes, CNNs are sensitive to input resolution. Downscaling an image too aggressively can destroy critical features, while oversized inputs increase computational costs.

For example, in object detection, downscaling a high-resolution image of a traffic sign may blur its text and fine details, making it harder for the CNN to identify. Choosing an appropriate input size, or cropping to the region of interest instead of shrinking the whole image, helps balance resolution and performance.

What is the role of stride in convolution?

Stride determines how far the kernel moves during convolution.

- Stride of 1: Overlapping kernels cover every pixel, capturing fine details but increasing computational load.

- Stride greater than 1: Skips pixels, reducing feature map size and computational cost.

For instance, detecting large objects like cars in aerial images might use a stride of 2, while detecting tiny objects like streetlights might require a stride of 1.

Can CNNs handle video data?

Yes, CNNs can process videos using extensions like 3D CNNs, which apply convolution over spatial (height, width) and temporal (time) dimensions.

For example, in action recognition, a 3D CNN might analyze consecutive video frames to identify movements like jumping or running. This makes them well suited to applications like security surveillance and sports analytics.

How are CNNs used in edge devices?

CNNs are optimized for edge devices (e.g., smartphones, drones) by reducing their size and computational requirements. Techniques like model quantization and pruning compress models while largely preserving accuracy.

For instance, lightweight CNN architectures like MobileNet enable real-time facial recognition on smartphones without requiring cloud processing.
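To see where the size savings of quantization come from, here is a minimal sketch of symmetric per-tensor int8 quantization of a list of weights. The weight values are hypothetical, and real toolchains (such as TensorFlow Lite or PyTorch's quantization tools) add calibration, per-channel scales, and integer arithmetic kernels; this shows only the core mapping.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization.

    Map floats to integers in [-127, 127] with a single scale factor, so each
    weight needs 1 byte instead of 4 (float32).
    """
    scale = max(abs(w) for w in weights) / 127 or 1.0  # fall back to 1.0 if all zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from int8 values."""
    return [v * scale for v in q]

weights = [0.5, -1.27, 0.01, 1.0]  # hypothetical float32 weights
q, scale = quantize_int8(weights)
print(q)  # [50, -127, 1, 100]
restored = dequantize(q, scale)
print(max(abs(a - b) for a, b in zip(weights, restored)))  # rounding error, at most scale / 2
```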

What’s the biggest challenge in using CNNs?

The main challenges include:

- Data dependency: CNNs typically require large labeled datasets for effective training.

- Computational cost: Training deep networks demands high-performance hardware like GPUs.

- Interpretability: Understanding why a CNN made a specific decision can be difficult.

For example, if a CNN misclassifies a cat as a dog, identifying whether the issue lies in the training data, architecture, or feature extraction requires careful debugging.

How do CNNs handle spatial hierarchies in images?

CNNs are designed to capture spatial hierarchies, meaning they identify simple patterns in the early layers and progressively more complex structures in deeper layers.

For example, in an image of a car:

- Early layers detect edges and corners.

- Intermediate layers identify wheels and windows.

- Final layers recognize the entire car as a whole object.

This hierarchical feature learning makes CNNs particularly effective for image-related tasks.
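One way to see the hierarchy is through the receptive field: the patch of input pixels that can influence a single unit. A minimal sketch of the standard calculation, assuming a hypothetical stack of 3×3 convolutions and one 2×2 pooling layer, with no dilation.

```python
def receptive_fields(layers):
    """Receptive field (in input pixels) after each layer.

    layers: list of (kernel_size, stride). Each layer grows the field by
    (kernel_size - 1) times the product of all earlier strides (the "jump").
    """
    rf, jump, out = 1, 1, []
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
        out.append(rf)
    return out

# Hypothetical stack: conv3x3, conv3x3, 2x2 max pool (stride 2), conv3x3, conv3x3.
stack = [(3, 1), (3, 1), (2, 2), (3, 1), (3, 1)]
print(receptive_fields(stack))  # [3, 5, 6, 10, 14]
```

Each layer sees only a 3×3 neighborhood of the layer below, yet after five layers one unit depends on a 14×14 patch of the input, and every stride multiplies how quickly the field grows. That is how deeper layers come to respond to wheels or whole cars rather than single edges.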

Why is GPU acceleration crucial for CNNs?

CNNs involve intensive matrix computations, particularly during the training phase. GPUs are optimized for parallel processing, significantly reducing training time compared to CPUs.

For instance, training a deep CNN like ResNet on a large dataset (e.g., ImageNet) might take weeks on a CPU but only days on a high-performance GPU. Frameworks like TensorFlow and PyTorch are designed to leverage GPUs seamlessly.

What is the vanishing gradient problem, and how do CNNs address it?

The vanishing gradient problem occurs in deep networks when gradients become too small to update weights effectively during backpropagation. This makes training slow or ineffective.

CNNs address this through:

- Activation functions like ReLU, whose gradient is 1 for all positive inputs instead of saturating.

- Batch normalization, which keeps activations in a well-scaled range so gradients flow more stably.

- Residual connections in architectures like ResNet, which bypass layers and allow gradients to propagate effectively.
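A toy scalar model, not a real network, shows why the identity path matters: backpropagation multiplies local derivatives layer by layer. The per-layer derivative of 0.01 below is an arbitrary assumption chosen to make the contrast visible.

```python
def chain_gradient(branch_derivs, residual=False):
    """Gradient that reaches the input of a toy chain of scalar layers.

    Backpropagation multiplies local derivatives. A plain layer y = f(x)
    contributes f'(x); a residual block y = x + f(x) contributes 1 + f'(x),
    so the identity path keeps the product from collapsing toward zero.
    """
    grad = 1.0
    for d in branch_derivs:
        grad *= (1.0 + d) if residual else d
    return grad

derivs = [0.01] * 20  # hypothetical small local derivatives for 20 layers
print(f"plain:    {chain_gradient(derivs):.2e}")                 # plain:    1.00e-40
print(f"residual: {chain_gradient(derivs, residual=True):.2e}")  # residual: 1.22e+00
```

This toy ignores weights, activations, and the fact that real branches can also amplify gradients; it only illustrates why the added identity term keeps a usable gradient flowing back to early layers.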

How do CNNs compare to RNNs?

CNNs are designed for spatial data like images, while Recurrent Neural Networks (RNNs) are better suited for sequential data like time-series or text.

For example:

- A CNN might classify objects in a photo (static data).

- An RNN might predict stock prices based on historical trends (dynamic data).

That said, CNNs can also handle sequential data like spectrograms in audio or pixel patterns in handwritten text, making them versatile.

What is the role of dropout in CNNs?

Dropout is a regularization technique where random neurons are deactivated during training. This prevents the network from relying too heavily on specific neurons, reducing the risk of overfitting.

For example, in a CNN trained to classify dog breeds, dropout discourages the model from memorizing specific features, like the exact fur pattern of one Golden Retriever in the training set, and pushes it to generalize across all retrievers.
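Most frameworks implement the “inverted” variant of dropout, which rescales the surviving activations during training so that nothing needs to change at inference. A minimal sketch, assuming a flat list of activations and a seeded random generator for reproducibility.

```python
import random

def dropout(activations, p, rng, training=True):
    """Inverted dropout with drop probability p (0 <= p < 1).

    During training, zero each activation with probability p and scale the
    survivors by 1 / (1 - p) so the expected activation is unchanged.
    At inference (training=False) the input passes through untouched.
    """
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [x / keep if rng.random() < keep else 0.0 for x in activations]

rng = random.Random(42)  # seeded for reproducibility
acts = [1.0] * 10
print(dropout(acts, p=0.5, rng=rng))                  # entries are 0.0 or 2.0
print(dropout(acts, p=0.5, rng=rng, training=False))  # unchanged
```

Because a different random subset is dropped at every step, no single neuron can be relied on, which is the mechanism behind the regularization effect described above.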

Can CNNs detect objects at multiple scales?

Figure: Progressive feature maps generated by convolutional layers, capturing edges, textures, and complex patterns in an input image.

Yes, CNNs can detect objects of various sizes using multi-scale feature extraction techniques. Detectors such as Faster R-CNN and YOLO handle this with anchor boxes of several sizes and, in many versions, with predictions made from feature maps at different resolutions.

For instance, in drone surveillance, multi-scale detection allows a CNN to identify both large buildings and tiny pedestrians within the same frame.

How do CNNs handle occlusions in images?

Occlusions (when part of an object is hidden) are challenging for CNNs. However, CNNs can still recognize partially visible objects by leveraging context and high-level patterns learned from training data.

For example, a CNN trained to detect faces might recognize someone wearing sunglasses by focusing on visible features like the nose and mouth. Data augmentation (e.g., random cropping) during training can also improve performance under occlusions.

What are feature pyramids, and why are they important?

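Feature pyramids provide feature maps at multiple scales, enabling a CNN to detect objects of varying sizes. FPN (Feature Pyramid Network) builds the pyramid from the network's own layers, adding a top-down pathway with lateral connections so that high-resolution maps also carry strong semantic features.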

For example, in wildlife monitoring, a feature pyramid helps a CNN detect both large animals (like elephants) and smaller ones (like birds) in the same image.
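The core FPN step can be sketched as a top-down merge: upsample a coarse, deep feature map and add a higher-resolution map from an earlier layer. This is a toy, single-channel sketch with hypothetical values; it omits the 1×1 lateral convolutions and 3×3 smoothing convolutions that a real FPN applies.

```python
def upsample2x(fm):
    """Nearest-neighbor 2x upsampling of a 2D map."""
    out = []
    for row in fm:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out

def top_down_merge(coarse, lateral):
    """One FPN-style merge: upsample the coarser, semantically strong map and
    add the higher-resolution lateral map element-wise."""
    up = upsample2x(coarse)
    return [[u + l for u, l in zip(urow, lrow)] for urow, lrow in zip(up, lateral)]

coarse = [[1, 2]]          # hypothetical 1x2 map from a deep layer
lateral = [[0, 0, 0, 1],   # hypothetical 2x4 map from a shallower layer
           [0, 1, 0, 0]]
print(top_down_merge(coarse, lateral))  # [[1, 1, 2, 3], [1, 2, 2, 2]]
```

Repeating this merge level by level gives every scale of the pyramid both fine spatial detail (from the lateral map) and high-level context (from the upsampled map).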

Can CNNs process 3D data?

Yes, CNNs can process 3D data using specialized architectures like 3D CNNs or volumetric CNNs. These extend the convolution operation to three dimensions, capturing spatial and volumetric relationships.

Applications include:

- Medical imaging: Analyzing 3D scans like MRIs.

- Autonomous driving: Interpreting point cloud data from LiDAR sensors.

How are adversarial attacks a threat to CNNs?

Adversarial attacks involve slightly modifying input data to mislead a CNN. These changes are often imperceptible to humans but cause the CNN to make incorrect predictions.

For example, by adding subtle noise to an image of a stop sign, an attacker might trick a CNN in a self-driving car into interpreting it as a speed limit sign. Techniques like adversarial training help CNNs become more robust to such attacks.
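Such perturbations are often built from the model's own gradients. A minimal sketch of one well-known method, the Fast Gradient Sign Method (FGSM), applied to a toy logistic-regression “classifier” whose input gradient can be written by hand; the weights, input, and eps are made-up values, and a real attack would obtain the gradient from the CNN via backpropagation.

```python
import math

def predict(weights, x):
    """Toy linear classifier (hypothetical): probability that x is a stop sign."""
    z = sum(w * xi for w, xi in zip(weights, x))
    return 1.0 / (1.0 + math.exp(-z))

def sign(g):
    return (g > 0) - (g < 0)

def fgsm(weights, x, label, eps):
    """Fast Gradient Sign Method: move each input feature by eps in the
    direction that increases the loss, x_adv = x + eps * sign(dLoss/dx).

    For the logistic loss of this linear model, dLoss/dx_i = (p - label) * w_i.
    """
    p = predict(weights, x)
    grad = [(p - label) * w for w in weights]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]

weights = [2.0, -1.0, 3.0, 0.5]  # hypothetical "trained" weights
x = [0.3, 0.2, 0.2, 0.1]         # hypothetical input, correctly labeled 1
x_adv = fgsm(weights, x, label=1, eps=0.2)
print(round(predict(weights, x), 3), round(predict(weights, x_adv), 3))  # 0.741 0.438
```

With only four features, eps has to be large to flip the prediction. In an image with hundreds of thousands of pixels, a per-pixel change too small to see can still shift the output substantially, because the small effects add up across all pixels.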

What are lightweight CNN architectures?

Lightweight CNNs, such as MobileNet and SqueezeNet, are designed for resource-constrained environments like smartphones or IoT devices. They use techniques like depthwise separable convolutions to reduce computational requirements while maintaining accuracy.

For instance, MobileNet enables real-time face recognition on mobile devices without offloading tasks to the cloud.
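The savings from depthwise separable convolutions follow from a parameter count. A sketch comparing a standard 3×3 convolution with its depthwise separable replacement, assuming 32 input and 64 output channels as example values and ignoring biases.

```python
def standard_conv_params(k, c_in, c_out):
    """Weights of a standard k x k convolution (biases ignored)."""
    return k * k * c_in * c_out

def depthwise_separable_params(k, c_in, c_out):
    """Weights of a depthwise k x k convolution followed by a 1x1 pointwise one."""
    depthwise = k * k * c_in   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1x1 convolution mixes the channels
    return depthwise + pointwise

# Example sizes (assumptions): 3x3 kernel, 32 input channels, 64 output channels.
std = standard_conv_params(3, 32, 64)
sep = depthwise_separable_params(3, 32, 64)
print(std, sep, round(sep / std, 3))  # 18432 2336 0.127
```

The ratio works out to 1/C_out + 1/k², about 0.127 here, so the separable version needs roughly an eighth of the weights (and a similar fraction of the multiply-adds).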

Can CNNs improve with unsupervised learning?

While CNNs traditionally rely on labeled data, unsupervised learning techniques like self-supervised learning and contrastive learning are gaining traction. These methods allow CNNs to learn useful features from unlabeled data.

For example, by training on millions of unlabeled images, a CNN can pre-learn patterns like shapes and textures, making it more effective when fine-tuned on smaller labeled datasets.
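One common contrastive objective is an InfoNCE-style loss, used in methods such as SimCLR: embeddings of two augmented views of the same unlabeled image are pulled together, while embeddings of other images are pushed apart. A minimal sketch for a single anchor, assuming the embeddings have already been produced by the CNN; the 2D vectors and the temperature of 0.1 are illustrative values.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style contrastive loss for a single anchor embedding.

    The positive is an embedding of another augmented view of the same
    unlabeled image; negatives come from other images. Returns -log of the
    softmax probability assigned to the positive, which is small when the
    anchor is much closer to its positive than to every negative.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    top = max(logits)  # subtract the max before exponentiating, for stability
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[0]

# Hypothetical 2D embeddings produced by a CNN encoder.
anchor = [1.0, 0.0]
negatives = [[0.0, 1.0], [-1.0, 0.0]]
agree = info_nce(anchor, [0.9, 0.1], negatives)     # the two views embed similarly
disagree = info_nce(anchor, [0.0, 1.0], negatives)  # the two views embed differently
print(round(agree, 4), round(disagree, 4))
```

Minimizing this loss over many images forces the CNN to produce embeddings that survive augmentation, which is the kind of shape and texture knowledge that later transfers to small labeled datasets.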

How can CNNs be debugged when they fail?

When a CNN fails, debugging involves:

- Visualizing feature maps: Check if the CNN is focusing on relevant image regions using tools like Grad-CAM.

- Analyzing training data: Ensure diversity and quality in the dataset to avoid biases.

- Tuning hyperparameters: Adjust kernel size, stride, or learning rate.

For example, if a CNN misclassifies dogs as wolves, Grad-CAM might reveal that it’s focusing on snowy backgrounds, not the animals, highlighting the need for diverse training data.

Resources

Foundational Articles and Tutorials

- Stanford’s CS231n: Convolutional Neural Networks for Visual Recognition

  - An excellent online course covering CNN theory and applications. Includes lecture notes, assignments, and practical examples.

- Deep Learning Book by Ian Goodfellow, Yoshua Bengio, and Aaron Courville

  - Chapter 9 provides a detailed exploration of convolutional networks, their architecture, and their mathematical foundations.

- Towards Data Science (Blog on Medium)

  - Features numerous articles breaking down CNN concepts, architectures, and applications.

  - Example: “A Beginner’s Guide to Convolutional Neural Networks” explains CNN layers and their workings.

Hands-On Tools and Frameworks

- TensorFlow

  - Offers pre-built layers for creating CNNs with ease, along with tools for training and visualization.

  - Free tutorials: “Deep Learning for Beginners with TensorFlow”.

- PyTorch

  - A flexible framework popular for research and experimentation. Includes pre-trained models and simple APIs for CNN design.

  - Check out the 60 Minute Blitz to get started.

- Keras

  - A high-level deep learning library for quick prototyping and deployment. Great for beginners working with CNNs.

  - Example tutorial: “Building Your First CNN with Keras” on the official documentation site.

Video Courses

- Coursera: Deep Learning Specialization by Andrew Ng

  - Course 4 focuses on CNNs, including visualizations and use cases like facial recognition and style transfer.

- Fast.ai: Practical Deep Learning for Coders

  - A beginner-friendly course that covers CNNs while emphasizing real-world applications.

- YouTube: 3Blue1Brown’s Neural Networks Series

  - Explains neural networks visually and intuitively, breaking down complex topics like gradient descent and backpropagation. The channel's separate video “But what is a convolution?” covers the convolution operation itself.

Research Papers and Advanced Concepts

- “ImageNet Classification with Deep Convolutional Neural Networks” (AlexNet Paper)

  - The landmark paper that demonstrated deep CNNs, trained on GPUs, for large-scale image classification on ImageNet.

- “Deep Residual Learning for Image Recognition” (ResNet Paper)

  - Explains the concept of residual connections and their impact on training deep networks.

- “Going Deeper with Convolutions” (Inception Paper)

  - Discusses the Inception architecture and its innovations for multi-scale feature extraction.

Online Communities and Forums

- Stack Overflow

  - Ideal for troubleshooting code and understanding CNN implementation challenges.

- Reddit: r/MachineLearning

  - A community-driven forum for discussions, questions, and sharing resources about CNNs and other deep learning topics.

- Kaggle

  - Access free datasets, tutorials, and kernels (notebooks) with CNN implementations for practical projects.

  - Example: Datasets for handwritten digit recognition (MNIST) and medical image classification.