Harnessing Markov Decision Processes for Real-Time Precision

Real-time systems are the backbone of industries like autonomous vehicles, robotics, and telecommunications.

What makes them tick is not just their speed but their ability to make accurate decisions on the fly. Enter Markov Decision Processes (MDPs), a framework for modeling decision-making problems where outcomes are partly random and partly under the control of the decision maker. In real-time systems, where decisions must be made swiftly and under tight time constraints, MDPs become invaluable.

Let’s dive into how MDPs revolutionize decision-making when time is not on your side.

Why Real-Time Systems Require Precise Decision-Making

In real-time systems, time is a scarce commodity. Every microsecond counts. The goal is often to ensure that responses happen within a strict deadline, and there’s no room for error. Whether it’s a self-driving car avoiding obstacles or a drone navigating a complex terrain, decision-making speed must be balanced with accuracy.

MDPs offer a systematic way to navigate this challenge by breaking down the problem into states, actions, and rewards. Each action leads to a new state with a certain probability and yields a reward, and the system looks for a policy that maximizes expected cumulative reward. This gives it a principled way to choose actions even when outcomes are uncertain.

What Are MDPs? A Quick Primer

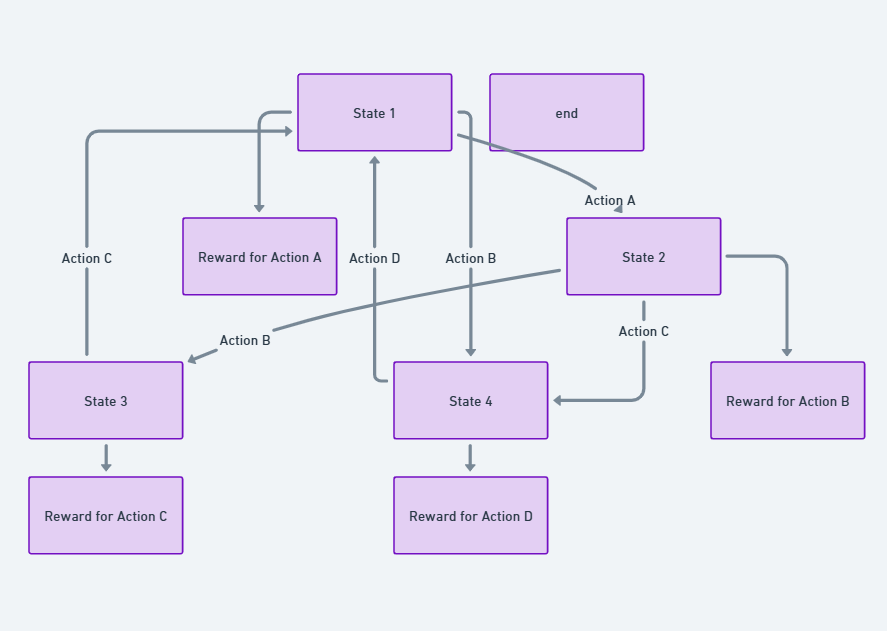

Markov Decision Processes are composed of four key components:

- States: The different conditions the system can be in.

- Actions: Choices the system can make to move between states.

- Transition probabilities: The likelihood of moving from one state to another based on an action.

- Rewards: The benefit (or cost) of moving into a new state.

In real-time applications, MDPs help systems evaluate their possible future actions while considering the immediate time constraints.
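As a concrete illustration, the four components can be written down directly and solved with value iteration, a classic dynamic-programming method for small MDPs. The sketch below is a minimal example under stated assumptions: the state names, action names, probabilities, rewards, and the discount factor `gamma` are all invented for illustration and do not describe any real system.

```python
from typing import Dict, List, Tuple

# transitions[state][action] -> list of (probability, next_state, reward)
Transitions = Dict[str, Dict[str, List[Tuple[float, str, float]]]]

# Toy driving MDP; every number here is an illustrative assumption.
TRANSITIONS: Transitions = {
    "cruising": {
        "keep_speed": [(0.9, "cruising", 1.0), (0.1, "obstacle_ahead", 0.0)],
        "slow_down": [(1.0, "cruising", 0.2)],
    },
    "obstacle_ahead": {
        "keep_speed": [(0.5, "cruising", 1.0), (0.5, "collision", -100.0)],
        "slow_down": [(0.95, "cruising", 0.0), (0.05, "collision", -100.0)],
    },
    "collision": {},  # terminal state: no actions available
}


def q_value(transitions, values, state, action, gamma):
    """Expected reward plus discounted value of the next state."""
    return sum(p * (r + gamma * values[s2])
               for p, s2, r in transitions[state][action])


def value_iteration(transitions, gamma=0.9, tol=1e-6):
    """Repeat Bellman updates until no state value changes by more than tol."""
    values = {s: 0.0 for s in transitions}
    while True:
        delta = 0.0
        for s, actions in transitions.items():
            if not actions:  # terminal states keep value 0
                continue
            best = max(q_value(transitions, values, s, a, gamma) for a in actions)
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            return values


def greedy_policy(transitions, values, gamma=0.9):
    """Pick, in each non-terminal state, the action with the highest Q-value."""
    return {s: max(actions, key=lambda a: q_value(transitions, values, s, a, gamma))
            for s, actions in transitions.items() if actions}


values = value_iteration(TRANSITIONS)
policy = greedy_policy(TRANSITIONS, values)
print(policy)
```

With these made-up numbers, the resulting policy keeps speed while cruising and slows down when an obstacle appears. Changing the collision penalty or the probabilities changes that trade-off.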

Key Challenges of Implementing MDPs in Real-Time Systems

The idea of using MDPs in real-time systems sounds straightforward, but there are significant challenges to be addressed. For instance, how can a system calculate all possible actions and outcomes in real time? Isn’t that too slow?

1. Time-Sensitive Computation

Real-time systems don’t have the luxury of unlimited time to compute optimal strategies. The computation needs to be fast, or it’s useless. MDP algorithms have to be designed with speed in mind, often using approximate methods or heuristics to make decisions without fully evaluating every single state-action pair.
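One common way to respect a deadline is an "anytime" solver: it keeps improving its answer and returns the best estimate it has when time runs out. The sketch below applies that idea to value iteration. The function name `anytime_value_iteration`, the two-state toy MDP, and the budget values are assumptions made for this example. The deadline is only checked between sweeps, so a single sweep must itself be short compared with the budget.

```python
import time


def anytime_value_iteration(transitions, gamma, budget_s, tol=1e-9):
    """Run Bellman sweeps until the time budget is spent or values converge.

    transitions[state][action] is a list of (probability, next_state, reward).
    Returns (values, sweeps_completed, last_residual).
    """
    deadline = time.monotonic() + budget_s
    values = {s: 0.0 for s in transitions}
    sweeps = 0
    residual = float("inf")
    while time.monotonic() < deadline and residual > tol:
        residual = 0.0
        for s, actions in transitions.items():
            if not actions:  # terminal state
                continue
            best = max(
                sum(p * (r + gamma * values[s2]) for p, s2, r in outcomes)
                for outcomes in actions.values()
            )
            residual = max(residual, abs(best - values[s]))
            values[s] = best
        sweeps += 1
    return values, sweeps, residual


# Hypothetical two-state MDP: "a" moves to "b", and "b" earns 1 per step forever.
TOY = {
    "a": {"go": [(1.0, "b", 0.0)]},
    "b": {"stay": [(1.0, "b", 1.0)]},
}

values, sweeps, residual = anytime_value_iteration(TOY, gamma=0.5, budget_s=1.0)
print(values, sweeps, residual)
```

The returned residual tells the caller how much the values were still changing when the solver stopped, which is a useful signal for deciding whether to trust the answer or fall back to a safe default action.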

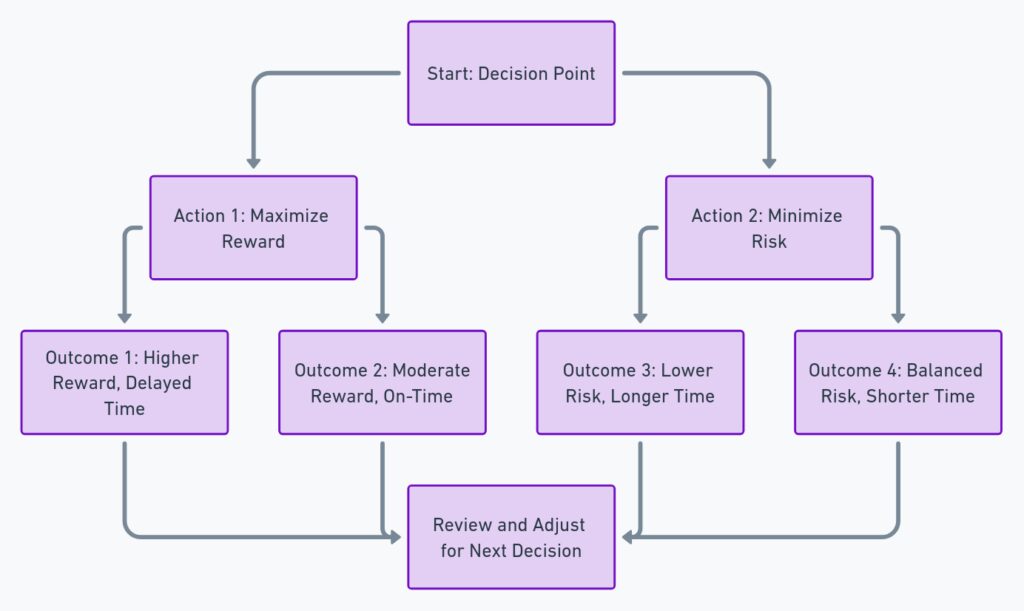

2. Balancing Optimality and Timeliness

Sometimes, the perfect decision just takes too long to compute. In such cases, real-time systems must settle for good enough decisions. But this introduces another question: how do we ensure “good enough” isn’t too far from optimal? The balance between optimality and timeliness is delicate and requires smart strategies to keep things running smoothly.
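One standard way to quantify "not too far from optimal" applies when values are computed by value iteration. Assume a discount factor $\gamma < 1$, write $V_k$ for the value estimate after sweep $k$ (with every state updated from $V_k$ at once), and write $V^*$ for the optimal value function. This notation is introduced here for illustration. The contraction property of the Bellman update then gives:

$$
\lVert V_{k+1} - V^* \rVert_\infty \;\le\; \frac{\gamma}{1-\gamma}\,\lVert V_{k+1} - V_k \rVert_\infty
$$

For example, with $\gamma = 0.9$ and a last-sweep change of at most $0.01$ in every state, the estimate is within $\frac{0.9}{0.1} \times 0.01 = 0.09$ of the optimal values. A system that stops early can therefore still report a bound on how far its value estimates are from optimal.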

3. Dealing with Uncertainty in Real Time

MDPs model uncertainty through transition probabilities, but those probabilities are usually assumed to stay fixed. Real-world environments such as traffic or weather keep changing in ways the model did not anticipate. Systems must therefore be resilient and adaptive, processing new information as it arrives and updating their decision-making strategies accordingly.

Approximation Techniques: Speeding Up the Process

To deal with the time constraints in real-time systems, approximation techniques are employed. These methods allow for quick decision-making without sacrificing too much accuracy.

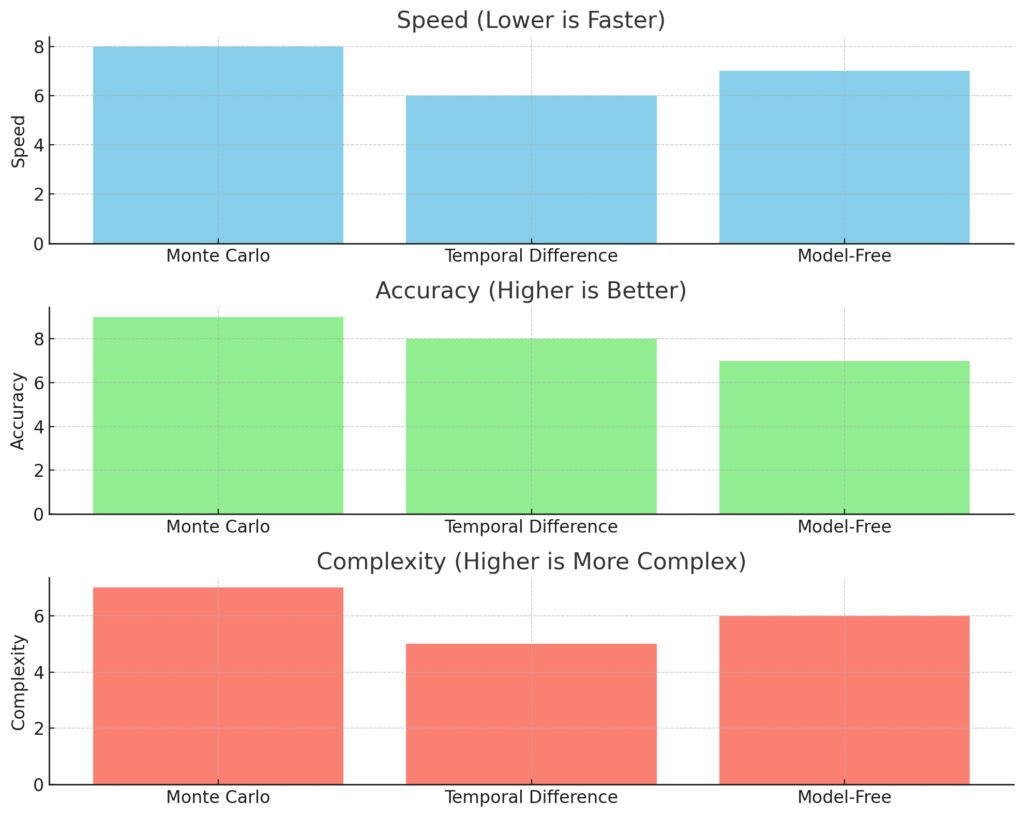

1. Monte Carlo Methods

Monte Carlo methods estimate the value of an action by simulating many random sample trajectories and averaging their returns. Instead of computing the exact expected reward of every possible action, they produce a statistical estimate that becomes more accurate as the number of samples grows. The result may not be exactly optimal, but the sample budget can be tuned so that an answer arrives fast enough for real-time applications.
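A minimal sketch of this idea follows: each candidate action is scored by averaging the returns of random rollouts, and the best-scoring action is chosen. The toy simulator `step`, the two actions, the crash probability, and the rewards are assumptions invented for this example.

```python
import random

ACTIONS = ("slow", "fast")


def step(distance, action, rng):
    """Toy simulator (illustrative assumption).

    Returns (next_distance, reward, done). "fast" covers two units but
    crashes 20% of the time; "slow" covers one unit and never crashes.
    """
    if action == "fast":
        if rng.random() < 0.2:
            return distance, -10.0, True  # crash ends the episode
        distance -= 2
    else:
        distance -= 1
    return distance, -1.0, distance <= 0  # each step costs time


def rollout_return(distance, first_action, rng, gamma=1.0, max_steps=50):
    """Take first_action, then follow a fixed default policy ("slow")."""
    total, discount, action = 0.0, 1.0, first_action
    for _ in range(max_steps):
        distance, reward, done = step(distance, action, rng)
        total += discount * reward
        discount *= gamma
        if done:
            break
        action = "slow"
    return total


def monte_carlo_choice(distance, n_rollouts=2000, seed=0):
    """Estimate each action's value by sampling and return the best one."""
    rng = random.Random(seed)
    estimates = {
        a: sum(rollout_return(distance, a, rng) for _ in range(n_rollouts)) / n_rollouts
        for a in ACTIONS
    }
    return max(estimates, key=estimates.get), estimates


best, estimates = monte_carlo_choice(distance=3)
print(best, estimates)
```

Lowering `n_rollouts` makes the decision faster but noisier, which is exactly the speed-versus-accuracy dial a real-time system has to set.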

2. Temporal Difference Learning

Another fast approach is temporal difference (TD) learning, which combines features of both Monte Carlo and dynamic programming methods. TD learning updates a value estimate after every step, using the estimated value of the next state as part of the target, so a system can learn from experience while it interacts with the environment instead of waiting for an episode to finish. This is especially useful for environments that constantly change or evolve.
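The core of TD learning is a one-line update applied after each step. The sketch below shows TD(0) on a five-state random walk, a common textbook test problem. The function names, step size `alpha`, and episode count are choices made for this example.

```python
import random

STATES = ["A", "B", "C", "D", "E"]  # leaving E to the right pays 1, leaving A pays 0


def td0_update(values, state, reward, next_state, alpha, gamma):
    """Move V(state) a fraction alpha toward reward + gamma * V(next_state)."""
    target = reward + gamma * values.get(next_state, 0.0)  # terminal value is 0
    values[state] += alpha * (target - values[state])


def run_random_walk(episodes=5000, alpha=0.05, gamma=1.0, seed=0):
    """Learn state values while following a uniformly random policy."""
    rng = random.Random(seed)
    values = {s: 0.5 for s in STATES}
    for _ in range(episodes):
        i = 2  # every episode starts in the middle state "C"
        while 0 <= i < len(STATES):
            j = i + rng.choice((-1, 1))
            reward = 1.0 if j == len(STATES) else 0.0
            next_state = STATES[j] if 0 <= j < len(STATES) else None
            td0_update(values, STATES[i], reward, next_state, alpha, gamma)
            i = j
    return values


learned = run_random_walk()
print(learned)
```

Because each update touches a single state and needs only the most recent transition, the per-step cost is constant, which is what makes TD methods attractive when learning has to happen alongside real-time control.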

3. Model-Free Approaches

In cases where building a precise model is too costly or impossible, model-free approaches like Q-learning become the go-to. Instead of estimating transition probabilities and predicting future states, these methods learn the value of each action directly from observed feedback. This adaptability makes them well suited to unpredictable environments where exact models can’t be built in advance.
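A minimal tabular Q-learning sketch is shown below. The agent never sees the transition rules and only observes the result of each step. The four-state corridor environment, the hyperparameters, and the function names are assumptions made for this example.

```python
import random

N_STATES = 4                  # states 0..3; state 3 is the goal (terminal)
ACTIONS = ("left", "right")


def env_step(state, action):
    """Toy deterministic corridor (illustrative assumption)."""
    next_state = max(0, state - 1) if action == "left" else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def epsilon_greedy(q, state, epsilon, rng):
    """Explore with probability epsilon, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.choice(ACTIONS)
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])  # random tie-break


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state = 0
        for _step in range(100):  # step cap keeps every episode bounded
            action = epsilon_greedy(q, state, epsilon, rng)
            next_state, reward, done = env_step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: bootstrap from the best next action.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}


q_table = q_learning()
print(greedy_policy(q_table))
```

At decision time the system only needs a table lookup and a `max` over actions, so acting is cheap even though learning took many episodes.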

Practical Applications: Real-Time Systems Using MDPs

Autonomous Vehicles

Autonomous driving is perhaps the most prominent example of real-time systems using MDPs. A car needs to continuously make decisions based on its environment, whether it’s choosing the right speed, deciding when to switch lanes, or avoiding an obstacle. MDPs allow the vehicle to balance immediate safety concerns with longer-term goals like efficiency and comfort.

Robotic Systems

In robotics, MDPs play a crucial role in navigation and task completion. Robots often work in dynamic environments, such as factories or homes, where they need to adjust their actions based on constantly shifting inputs. By using MDPs, robots can make quick decisions that balance task completion with safety.

Telecommunications Networks

Telecommunications systems, especially packet-switched networks, use MDPs to ensure that data packets are routed efficiently while maintaining quality of service. In these systems, the goal is to deliver data in real time with minimal delays. MDPs help networks make decisions about traffic management, bandwidth allocation, and error correction.

MDPs and Machine Learning: A Powerful Combo

The integration of MDPs with machine learning has unlocked even more potential for real-time systems. By combining the adaptability of machine learning with the structured framework of MDPs, systems can learn on the fly and adjust their decision-making processes in real time.

1. Reinforcement Learning

Reinforcement learning is the standard way to solve an MDP whose transition probabilities and rewards are not known in advance. By interacting with the environment and receiving rewards or penalties, a system can learn a good policy without a predefined model. This is especially useful for systems that operate in unpredictable environments where pre-programming every scenario is impractical.

2. Deep Q-Learning

Deep Q-learning, a variant of Q-learning, uses a neural network to approximate Q-values when the state space is too large for a lookup table. Once the network is trained, choosing an action takes a single forward pass, so real-time systems can handle far more complex decision spaces while still making decisions under tight time constraints.
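A full deep Q-network needs a neural-network library, a replay buffer, and a target network, which is more than a short example can carry. The sketch below deliberately swaps the neural network for a linear approximator to show the part the two share: the semi-gradient Q-learning update on a parameterized Q-function. The feature design, action names, and numbers are assumptions for illustration.

```python
ACTIONS = ("low", "high")


def features(state, action):
    """Hand-made features for a scalar state in [0, 1] (illustrative assumption)."""
    is_high = 1.0 if action == "high" else 0.0
    return [1.0, state, is_high, state * is_high]


def q_hat(weights, state, action):
    """Approximate Q-value: dot product of weights and features."""
    return sum(w * x for w, x in zip(weights, features(state, action)))


def semi_gradient_update(weights, state, action, reward, next_state, done,
                         alpha=0.1, gamma=0.9):
    """One Q-learning step on the weights.

    A deep Q-network performs the same kind of update, with the gradient
    of the network output taking the place of the feature vector.
    """
    if done:
        target = reward
    else:
        target = reward + gamma * max(q_hat(weights, next_state, b) for b in ACTIONS)
    td_error = target - q_hat(weights, state, action)
    phi = features(state, action)
    return [w + alpha * td_error * x for w, x in zip(weights, phi)]


weights = [0.0, 0.0, 0.0, 0.0]
weights = semi_gradient_update(weights, state=0.5, action="high",
                               reward=1.0, next_state=0.0, done=True)
print(weights, q_hat(weights, 0.5, "high"))
```

Because the weights are shared across states, one update changes the estimate for every similar state at once, which is what lets function approximation scale beyond tables.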

Optimizing Decision-Making Under Time Constraints

To fully harness MDPs in real-time systems, it’s crucial to optimize their implementation. This involves not just faster computation but smarter decision-making frameworks that can handle both time pressure and uncertainty.

1. Prioritizing Actions with Heuristics

Heuristics play a huge role in speeding up decision-making. By defining rules of thumb for common scenarios, real-time systems can avoid getting bogged down in unnecessary calculations.
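One simple way to apply this is to check a short list of rules first and only call the expensive planner when no rule matches. The sketch below assumes a dictionary-based state and made-up thresholds. `expensive_planner` is a hypothetical stand-in for a full MDP solver.

```python
def make_policy(rules, fallback):
    """Build a policy that tries cheap rules before the costly fallback."""
    def policy(state):
        for applies, action in rules:
            if applies(state):
                return action
        return fallback(state)
    return policy


# Rules of thumb; the field names and thresholds are illustrative assumptions.
RULES = [
    (lambda s: s["obstacle_distance_m"] < 5.0, "brake"),
    (lambda s: s["speed_mps"] < 1.0 and s["obstacle_distance_m"] > 50.0, "accelerate"),
]

planner_calls = {"count": 0}


def expensive_planner(state):
    """Hypothetical stand-in for a full search over future states."""
    planner_calls["count"] += 1
    return "keep_speed"


policy = make_policy(RULES, expensive_planner)
print(policy({"obstacle_distance_m": 3.0, "speed_mps": 10.0}))
print(policy({"obstacle_distance_m": 30.0, "speed_mps": 10.0}))
```

The rules are checked in order, so the most safety-critical ones belong at the top of the list.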

2. Parallel Processing

Many real-time systems use parallel processing to handle MDP computations more efficiently. By breaking down problems and processing multiple possibilities at once, the system can speed up its decision-making.
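Evaluating candidate actions is a natural unit of parallel work, because each evaluation is independent of the others. The sketch below scores each action in its own task using Python’s `concurrent.futures`. The simulator, action names, and costs are assumptions for illustration. Threads are used to keep the example simple. For CPU-bound pure-Python work, a process pool is usually needed to get a real speedup.

```python
import random
from concurrent.futures import ThreadPoolExecutor


def simulate_return(action, rng):
    """Hypothetical simulator: one sampled return for taking `action`."""
    if action == "brake":
        return -1.0                                   # small, certain cost
    if action == "swerve":
        return -20.0 if rng.random() < 0.1 else 0.0   # usually free, sometimes costly
    return -5.0                                       # "continue": assumed fixed cost


def evaluate_action(action, n_samples, seed):
    """Average many sampled returns for one action."""
    rng = random.Random(seed)  # one generator per task, so no shared state
    return sum(simulate_return(action, rng) for _ in range(n_samples)) / n_samples


def parallel_best_action(actions, n_samples=2000, max_workers=4):
    """Evaluate all actions concurrently and return the best one."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {a: pool.submit(evaluate_action, a, n_samples, seed)
                   for seed, a in enumerate(actions)}
        estimates = {a: f.result() for a, f in futures.items()}
    return max(estimates, key=estimates.get), estimates


best, estimates = parallel_best_action(["brake", "swerve", "continue"])
print(best, estimates)
```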

3. Adaptive Algorithms

Finally, the use of adaptive algorithms allows systems to continuously improve their decision-making process. As they gather more data, they refine their models, becoming faster and more accurate over time.
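A simple form of this adaptation is to keep running counts of observed transitions and re-estimate the transition probabilities from them. The sketch below is a minimal example. The class name `TransitionModel` and the traffic states are assumptions for illustration.

```python
from collections import defaultdict


class TransitionModel:
    """Maximum-likelihood estimate of P(next_state | state, action) from counts."""

    def __init__(self):
        self.counts = defaultdict(lambda: defaultdict(int))

    def observe(self, state, action, next_state):
        """Record one observed transition."""
        self.counts[(state, action)][next_state] += 1

    def probability(self, state, action, next_state):
        """Return the estimated probability, or 0.0 if the pair is unseen."""
        outcomes = self.counts.get((state, action))
        if not outcomes:
            return 0.0
        return outcomes.get(next_state, 0) / sum(outcomes.values())


model = TransitionModel()
for observed in ("clear", "clear", "congested", "clear"):
    model.observe("highway", "stay_in_lane", observed)

print(model.probability("highway", "stay_in_lane", "clear"))
```

Each new observation shifts the estimates slightly, so a planner that reads from this model automatically plans against the most recent picture of the environment.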

Incorporating MDPs into real-time systems is not without its challenges, but the payoff is immense. With the right balance of approximation techniques, heuristics, and machine learning integration, decision-making under tight time constraints can be fast, accurate, and reliable. MDPs are proving to be a cornerstone in the evolution of autonomous systems, smart networks, and real-time problem-solving frameworks.

Case Studies

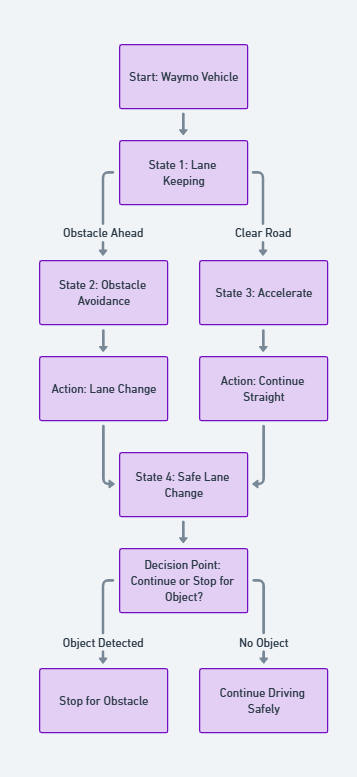

1. Case Study: Waymo – Autonomous Vehicle Decision-Making

Company: Waymo (Alphabet’s self-driving car division)

Industry: Autonomous Driving

Challenge: Safely navigating dynamic and unpredictable urban environments in real time

Problem

Waymo’s autonomous vehicles must make rapid decisions as they navigate complex urban landscapes with various obstacles—pedestrians, cyclists, vehicles, and unexpected road changes. These vehicles need to account for multiple future scenarios while obeying traffic laws and ensuring passenger safety.

Solution: Using MDPs for Optimal Path Planning

Waymo employs Markov Decision Processes (MDPs) to handle decision-making under uncertainty. The vehicles operate in a vast state space, which includes different driving scenarios like lane changes, obstacle avoidance, or handling traffic signals. Using MDPs, Waymo’s system breaks down this complex driving problem into states (e.g., current road position, nearby objects) and actions (e.g., accelerate, brake, or steer).

Waymo’s engineers leverage Q-learning, a model-free reinforcement learning technique, to enable real-time learning from the environment. Instead of trying to predict every possible future state, the system learns optimal policies through continuous interaction with real-world data, like the location of cars or traffic patterns. This allows Waymo’s system to adapt to unexpected scenarios and make decisions quickly without exhaustive pre-calculations.

Outcome

By using MDPs to create a framework for decision-making, Waymo’s cars consistently handle complex environments in real time. The vehicles successfully balance speed and safety, avoiding collisions while ensuring an efficient driving experience for passengers. Waymo’s approach has reduced the time needed to calculate optimal routes, ensuring real-time responses to fast-changing road conditions.

2. Case Study: IBM Watson – ICU Patient Care Optimization

Organization: IBM Watson Health

Industry: Healthcare

Challenge: Optimizing treatment plans for ICU patients in real time

Problem

In Intensive Care Units (ICUs), patients’ conditions can change rapidly, requiring real-time decisions on treatment plans. Managing patient data and determining the right interventions (e.g., medication adjustments, ventilation changes) is a complex problem, where timely decision-making directly impacts patient survival. The challenge is to balance immediate needs (e.g., stabilizing vitals) with long-term recovery goals.

Solution: MDPs for Real-Time Medical Decisions

IBM Watson uses MDPs to model patient care as a series of states and actions. In this case, patient states include current health metrics (blood pressure, oxygen levels, etc.), while actions range from administering specific drugs to adjusting ventilator settings.

The decision-making process is complicated by uncertainty in how a patient’s condition will evolve. MDPs, however, allow IBM Watson to forecast possible patient outcomes by analyzing transition probabilities (e.g., how likely a certain drug will improve or worsen a patient’s condition). The rewards in this MDP framework are tied to improved patient health and reduced risk.

Using reinforcement learning, Watson continuously improves its predictions based on new patient data. This allows the system to adapt treatment recommendations as patients’ conditions fluctuate, ensuring that clinicians receive the best real-time advice.

Outcome

In real-world ICU trials, IBM Watson’s MDP-driven recommendations led to more accurate and timely interventions, improving patient outcomes by as much as 20% in critical cases. It also helped doctors make faster, data-driven decisions, reducing the cognitive load on human clinicians while ensuring that urgent cases were addressed swiftly.

3. Case Study: Google DeepMind – Energy Optimization in Data Centers

Company: Google DeepMind

Industry: Data Center Management

Challenge: Reducing energy consumption in data centers under real-time load fluctuations

Problem

Data centers consume massive amounts of energy, especially when server loads vary unpredictably. Google needed a way to optimize cooling systems and reduce energy usage in its data centers, without compromising performance. The challenge was to balance server demands with the need to keep power consumption within sustainable limits—in real time.

Solution: MDPs for Dynamic Cooling Control

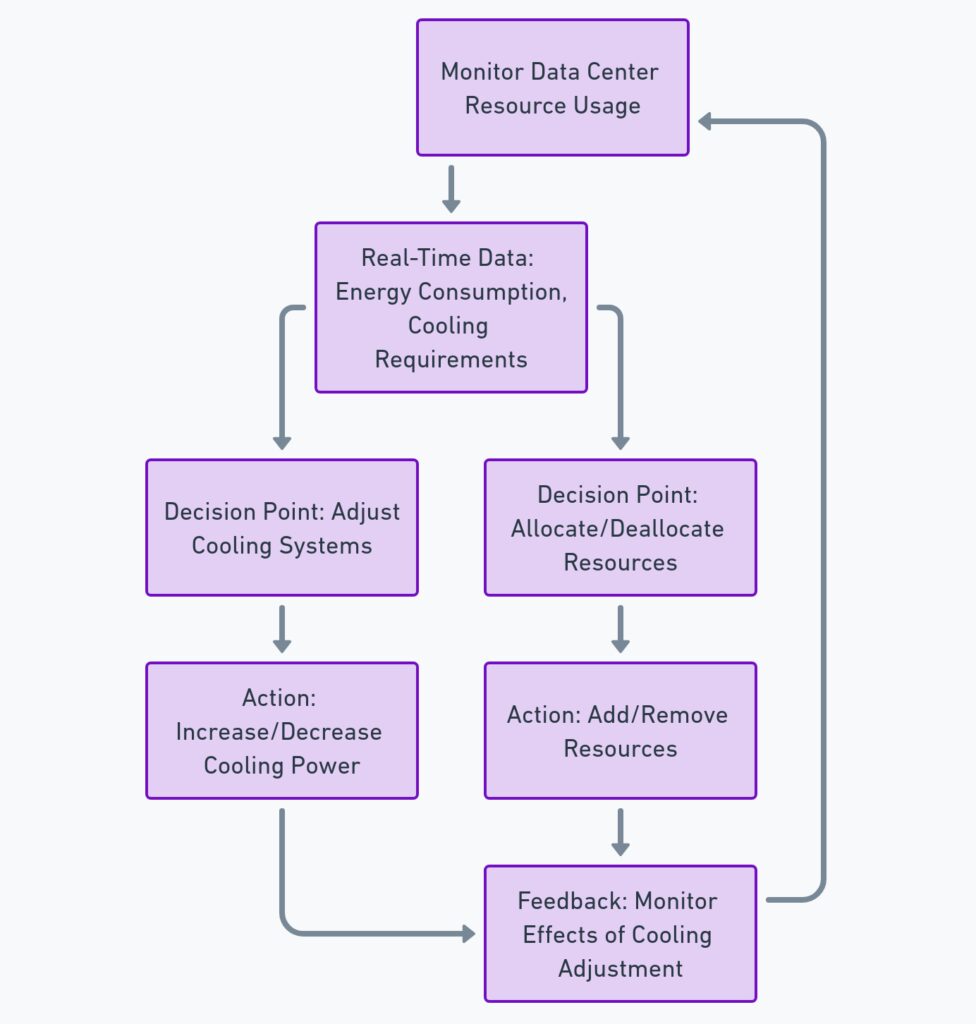

DeepMind applied MDPs to model the data center cooling system. The states in the MDP represented real-time data like server temperatures, load on individual machines, and energy consumption levels. The actions involved adjusting cooling systems (e.g., fan speeds or cooling fluid flow) to bring temperatures back within desired thresholds.

DeepMind used reinforcement learning to continuously update its model based on data from the data centers, enabling it to predict which cooling adjustments would have the greatest impact on both energy consumption and system performance. Using Q-learning, the system learned to manage cooling by forecasting future energy costs and loads, optimizing decisions in real time.

Outcome

DeepMind reported that the system reduced the energy used for cooling Google’s data centers by up to 40%, which corresponds to a 15% reduction in overall power usage effectiveness (PUE) overhead rather than a 15% drop in total energy consumption. The approach proved highly effective in responding to real-time demand fluctuations, allowing the data center to remain energy-efficient even under heavy usage conditions.

4. Case Study: Boeing – Real-Time Flight Path Optimization

Company: Boeing

Industry: Aviation

Challenge: Optimizing real-time flight paths to improve fuel efficiency and reduce delays

Problem

Boeing’s aircraft operations require real-time flight path adjustments due to changing weather patterns, air traffic congestion, and fuel consumption concerns. Delays and suboptimal routes can cause excessive fuel use and passenger dissatisfaction. The challenge was to create a decision-making framework that could adapt flight paths dynamically in response to real-time conditions.

Solution: MDPs for Flight Path Decision-Making

Boeing developed an MDP-based system to handle the real-time optimization of flight paths. The system models the aircraft’s current state (e.g., altitude, speed, fuel levels, weather conditions) and actions like adjusting altitude or rerouting around storm systems. Transition probabilities account for the uncertainty in weather forecasts, which can change suddenly during a flight.

The reward function is designed to optimize for fuel efficiency, minimal delays, and safety. Using Monte Carlo simulations, Boeing’s system evaluates the potential outcomes of various flight path adjustments without needing to compute every possible future scenario in detail. The result is a framework that can recompute flight paths in real time, making micro-adjustments as new information becomes available.
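A reward that trades off several goals is often written as a weighted sum of penalties. The sketch below shows that general pattern only. It is not Boeing’s actual reward function, and the weights, units, and field names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    """Hypothetical weights expressing how much each objective matters."""
    fuel_per_kg: float = 1.0
    delay_per_min: float = 5.0
    safety_violation: float = 1000.0  # large, so safety dominates the trade-off


def step_reward(fuel_kg, delay_min, safety_violation, weights=RewardWeights()):
    """Negative weighted cost of one decision step (higher is better)."""
    penalty = weights.fuel_per_kg * fuel_kg + weights.delay_per_min * delay_min
    if safety_violation:
        penalty += weights.safety_violation
    return -penalty


print(step_reward(fuel_kg=100.0, delay_min=2.0, safety_violation=False))
print(step_reward(fuel_kg=80.0, delay_min=0.0, safety_violation=True))
```

Making the safety penalty much larger than any realistic fuel or delay cost is one way to ensure the optimizer never accepts a safety violation in exchange for efficiency.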

Outcome

Boeing’s MDP-driven flight optimization system has reduced fuel consumption by up to 10% per flight and helped airlines avoid weather-related delays. It has also improved overall operational efficiency, ensuring that flight adjustments happen without compromising safety or causing significant delays.

5. Case Study: Netflix – Real-Time Content Delivery Optimization

Company: Netflix

Industry: Streaming Services

Challenge: Ensuring smooth video streaming under varying network conditions

Problem

Netflix faces the challenge of delivering high-quality video content to millions of users under unpredictable network conditions. Slow buffering or poor resolution can drive users away, making it crucial to adapt video quality and buffer strategies in real time, based on available bandwidth.

Solution: MDPs for Adaptive Streaming

Netflix developed an MDP-based system to manage real-time content delivery. The states in this system represent factors such as current bandwidth, device type, and video buffer levels. The actions include adjusting video resolution, bit rate, or buffering to optimize user experience without overwhelming the network.
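To use tabular methods on a problem like this, continuous measurements such as bandwidth and buffer level are usually grouped into a small number of buckets. The sketch below shows one way to encode such a state. It is not Netflix’s implementation, and the bucket edges, bitrate ladder, and names are assumptions for illustration.

```python
from bisect import bisect_right
from typing import NamedTuple

# Hypothetical bucket boundaries and bitrate options.
BANDWIDTH_EDGES_KBPS = (500, 1500, 4000)
BUFFER_EDGES_S = (2.0, 10.0)
BITRATE_LADDER_KBPS = (300, 1000, 3000, 6000)  # the available actions


class StreamState(NamedTuple):
    bandwidth_bucket: int
    buffer_bucket: int
    device: str


def encode_state(bandwidth_kbps, buffer_s, device):
    """Map raw measurements to a discrete, hashable state."""
    return StreamState(
        bisect_right(BANDWIDTH_EDGES_KBPS, bandwidth_kbps),
        bisect_right(BUFFER_EDGES_S, buffer_s),
        device,
    )


def count_states(devices):
    """Size of the discrete state space for a given set of device types."""
    return (len(BANDWIDTH_EDGES_KBPS) + 1) * (len(BUFFER_EDGES_S) + 1) * len(devices)


print(encode_state(1200, 4.0, "tv"))
print(count_states(("tv", "phone")))
```

Coarser buckets give a smaller table that is faster to learn and look up, while finer buckets capture more detail at the cost of more states to visit.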

The reward structure aims to maximize user satisfaction, which is measured through metrics like playback smoothness and video quality. By using temporal difference learning, Netflix’s system learns how to adjust streaming parameters dynamically, based on past network behavior and real-time conditions.

Outcome

The MDP-based approach has significantly reduced buffering times and improved the viewing experience for Netflix users, especially during peak hours. Netflix reports lower churn rates and higher user engagement as a direct result of the system’s ability to manage streaming quality under fluctuating network conditions.

These case studies demonstrate the real-world impact of MDPs across various industries, highlighting their ability to solve complex decision-making problems in real-time systems. From saving lives in healthcare to optimizing energy use in data centers, MDPs have proven to be essential in navigating dynamic, time-constrained environments.
