Machine learning (ML) is a game-changer for many industries, but the path from model development to deployment often feels like navigating a maze. This is where MLOps (Machine Learning Operations) comes in: a set of practices for simplifying, streamlining, and automating the deployment of your models.

Let’s explore how AI frameworks play a crucial role in enabling seamless MLOps, ensuring your ML projects are efficient and scalable.

What Is MLOps, and Why Does It Matter?

The Essence of MLOps

MLOps is the practice of integrating machine learning processes with DevOps principles. It focuses on:

- Automation of workflows.

- Collaboration between teams.

- Scalability for larger models and datasets.

Essentially, it bridges the gap between data science and operationalization.

Why Companies Need MLOps

Without MLOps, ML models often remain in the development phase. Teams face challenges like:

- Manual deployment processes that are error-prone.

- Difficulty in scaling models as data complexity grows.

- Lack of monitoring, leading to decreased model performance over time.

Adopting MLOps frameworks ensures consistency, reduces time-to-market, and keeps machine learning models production-ready.

Role of AI Frameworks in MLOps

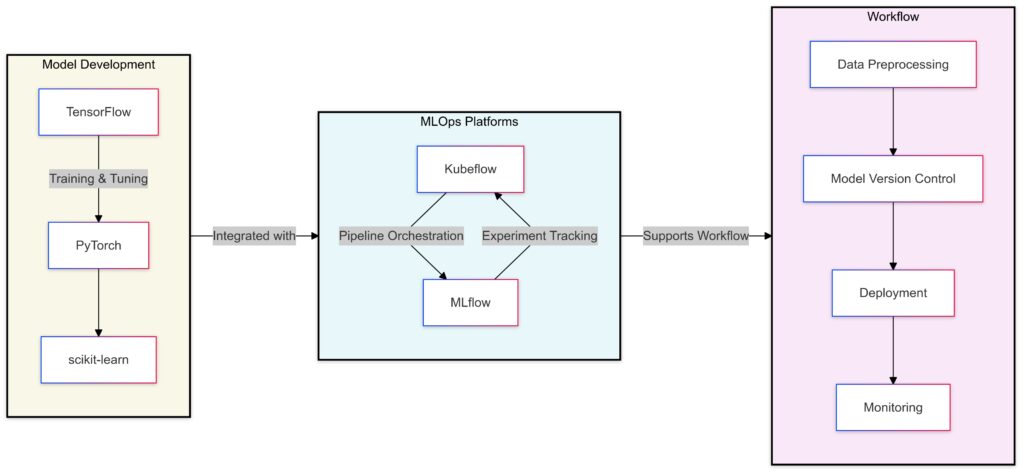

Model Development:

Frameworks: TensorFlow, PyTorch, and scikit-learn are used for model creation, training, and tuning.

MLOps Platforms:

Kubeflow: Facilitates pipeline orchestration and automation.

MLflow: Handles experiment tracking and model versioning.

Workflow:

Data Preprocessing: Initial step to clean and prepare data for training.

Model Version Control: Ensures traceability and reproducibility of models.

Deployment: Integrates models into production environments.

Monitoring: Tracks model performance and triggers retraining if necessary.

Why AI Frameworks Are the Backbone

AI frameworks like TensorFlow, PyTorch, and scikit-learn provide the tools necessary to:

- Build models effectively.

- Integrate with MLOps pipelines.

- Handle data preprocessing and feature engineering.

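These frameworks also ship export formats and serving tools (for example, TensorFlow's SavedModel format with TensorFlow Serving, or TorchServe for PyTorch), which makes the handoff from training to deployment easier.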

Integration with MLOps Platforms

Platforms like Kubeflow, MLflow, and SageMaker seamlessly integrate with popular frameworks. This allows:

- Version control for experiments.

- Smooth model monitoring post-deployment.

- Scalable solutions with containerized deployments using Docker or Kubernetes.

These integrations ensure your AI frameworks are operationally robust.

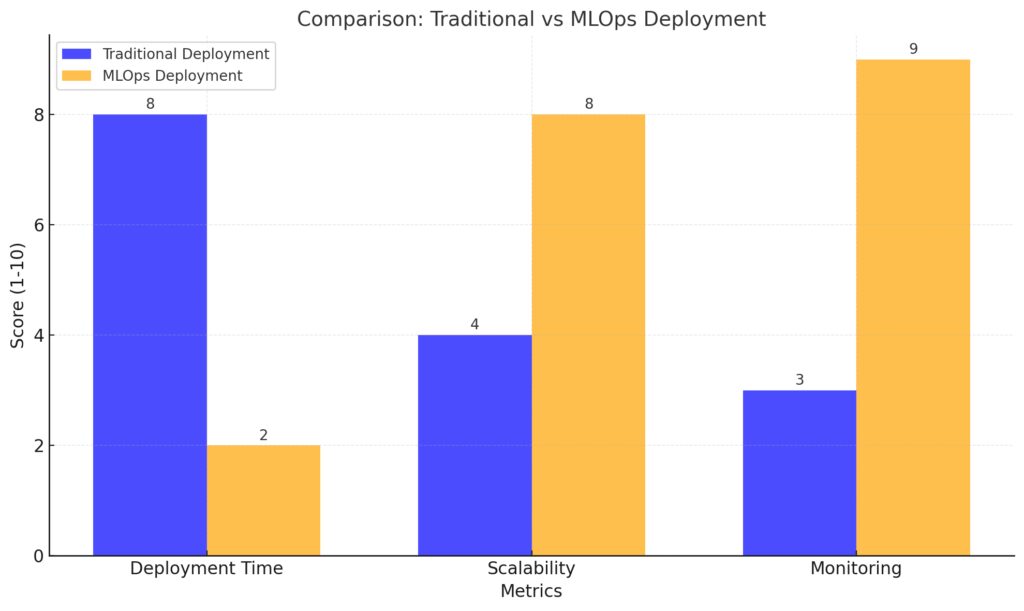

Challenges in Model Deployment Without MLOps

Deployment Time:

Traditional: Score of 8, indicating longer deployment times.

MLOps: Score of 2, reflecting significantly faster deployment.

Scalability:

Traditional: Score of 4, limited scalability due to manual processes.

MLOps: Score of 8, enhanced scalability through automation.

Monitoring:

Traditional: Score of 3, minimal monitoring capabilities.

MLOps: Score of 9, robust monitoring with real-time insights.

Limited Reproducibility

Manually deploying models often leads to inconsistencies. You can’t easily replicate experiments, resulting in wasted time.

Manual Scaling Issues

As data increases, manual processes falter. Your infrastructure struggles to adapt, causing inefficiencies.

Lack of Monitoring

Without automated tools, you won’t know if your model performance is degrading, which affects real-world applications.

By leveraging MLOps with AI frameworks, these challenges become manageable, transforming ML into a continuous, repeatable process.

Automation: The Key to Simplifying MLOps

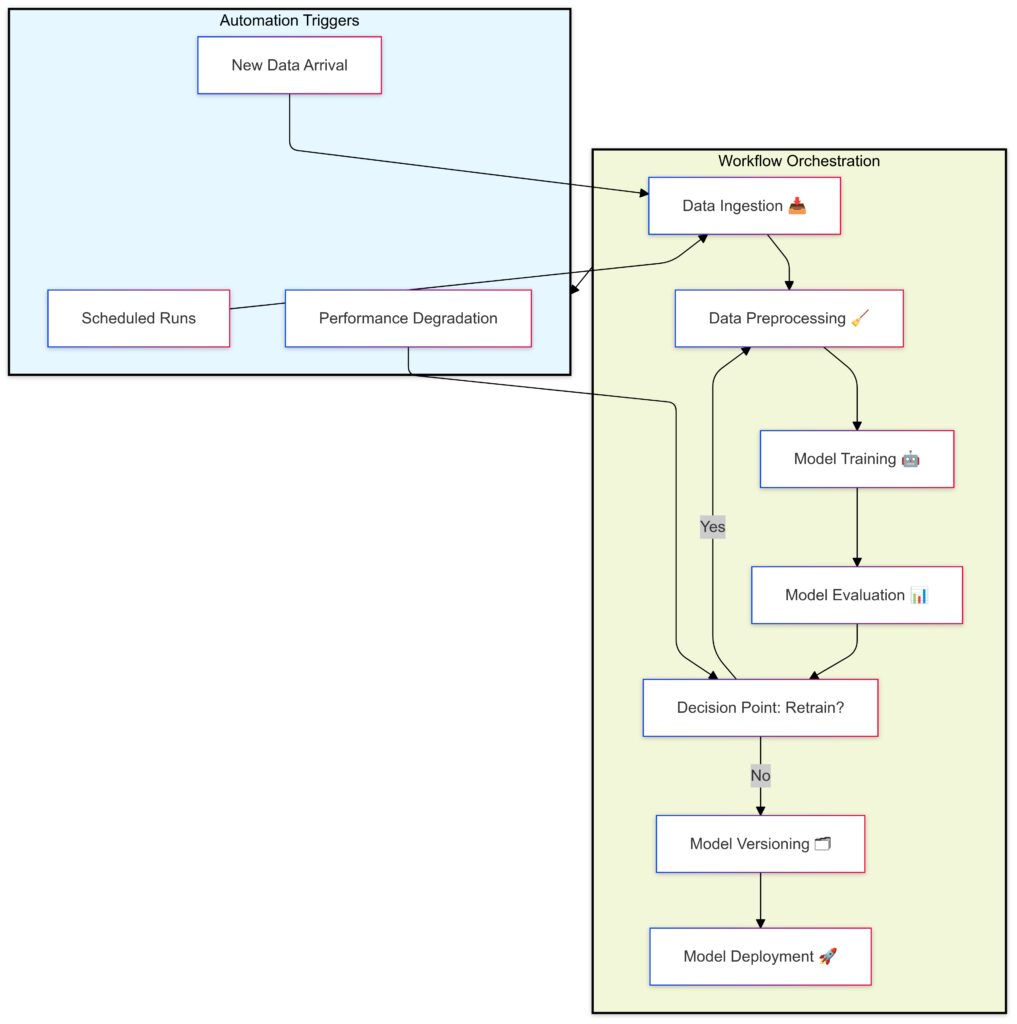

Workflow Orchestration

Orchestration tools like Apache Airflow or Prefect schedule and run pipeline tasks in a defined order, so every team executes the same steps the same way.

Workflow Steps:

Data Ingestion 📥: Gather raw data from various sources.

Data Preprocessing 🧹: Clean and prepare data for analysis.

Model Training 🤖: Train machine learning models using preprocessed data.

Model Evaluation 📊: Assess model performance against validation metrics.

Decision Point (Retrain?): Evaluate whether to retrain based on new data or performance.

Model Versioning 🗂: Archive models for reproducibility and traceability.

Model Deployment 🚀: Deploy models to production environments.

Automation Triggers:

Scheduled Runs: Automate workflows at predefined intervals.

New Data Arrival: Trigger workflows upon detecting new data.

Performance Degradation: Initiate retraining if model performance drops below a threshold.
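The three triggers above can be combined into one small decision function. The sketch below is only an illustration: the `PipelineState` fields, the thresholds, and the reason names are assumptions, not part of Airflow, Prefect, or any other tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class PipelineState:
    """Snapshot of what the trigger logic needs to know (all fields hypothetical)."""
    last_run: datetime
    new_rows_since_last_run: int
    current_accuracy: float


def retraining_reasons(
    state: PipelineState,
    now: datetime,
    max_age: timedelta = timedelta(days=7),   # assumed schedule interval
    min_new_rows: int = 10_000,               # assumed "enough new data" threshold
    accuracy_floor: float = 0.90,             # assumed performance threshold
) -> List[str]:
    """Return the triggers that fired; an empty list means no retraining is needed."""
    reasons = []
    if now - state.last_run >= max_age:
        reasons.append("scheduled")
    if state.new_rows_since_last_run >= min_new_rows:
        reasons.append("new_data")
    if state.current_accuracy < accuracy_floor:
        reasons.append("performance_degradation")
    return reasons


state = PipelineState(
    last_run=datetime(2024, 1, 1),
    new_rows_since_last_run=2_500,
    current_accuracy=0.87,
)
print(retraining_reasons(state, now=datetime(2024, 1, 3)))  # ['performance_degradation']
```

Returning the reasons rather than a bare boolean makes it easy to log why a run started.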

Continuous Integration/Continuous Deployment (CI/CD)

Model training code can be plugged into CI/CD pipelines so that a code change or a retrained model is tested and redeployed automatically rather than by hand.

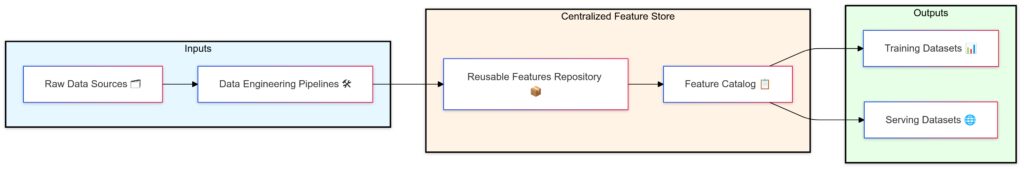

Data Versioning and Feature Stores

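Frameworks like TensorFlow Extended (TFX) record the datasets and other artifacts that each pipeline run consumes and produces, and tools like DVC version the data itself. Combined with a feature store, this makes changes to input data traceable and keeps feature definitions consistent.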
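One simple way to picture data versioning is a content-based fingerprint: if the data changes, the identifier changes. The sketch below illustrates that general idea only. It is not how TFX or any specific tool works internally, and the function name and 12-character ID length are assumptions.

```python
import hashlib
import json
from typing import Dict, Iterable


def dataset_fingerprint(rows: Iterable[Dict[str, object]]) -> str:
    """Hash rows in a canonical form so identical data yields an identical ID.

    Row order matters here: reordering rows produces a different fingerprint.
    """
    digest = hashlib.sha256()
    for row in rows:
        # sort_keys makes the serialization independent of dict key order
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()[:12]


v1 = [{"user": 1, "amount": 10.0}, {"user": 2, "amount": 25.5}]
v2 = [{"user": 1, "amount": 10.0}, {"user": 2, "amount": 26.0}]  # one value changed

print(dataset_fingerprint(v1) == dataset_fingerprint(v1))  # True
print(dataset_fingerprint(v1) == dataset_fingerprint(v2))  # False
```

Storing this ID next to each trained model shows which data a given model version saw.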

By automating these workflows, you can spend more time innovating and less time firefighting deployment issues.

Monitoring and Optimization in MLOps

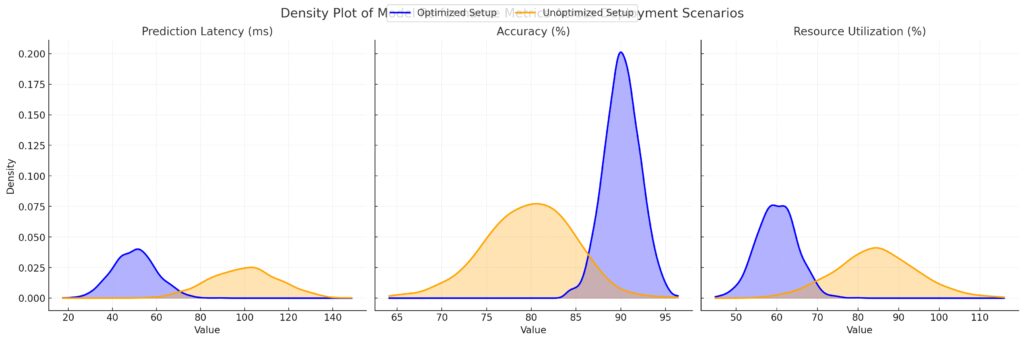

Prediction Latency (ms):

Optimized Setup: Lower latency, represented by the blue curve.

Unoptimized Setup: Higher latency, shown with the orange curve.

Accuracy (%):

Optimized Setup: Higher accuracy with less variability (blue curve).

Unoptimized Setup: Lower accuracy and greater spread (orange curve).

Resource Utilization (%):

Optimized Setup: Moderate usage (blue curve).

Unoptimized Setup: Higher resource demands with significant variability (orange curve).

Real-Time Model Monitoring

AI frameworks allow integration with monitoring tools like Prometheus or ELK Stack. You can track:

- Prediction accuracy.

- Latency.

- Resource usage.
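As a rough illustration of what a latency check can look like before the numbers reach a tool like Prometheus, the sketch below keeps a sliding window of recent latencies and compares the 95th percentile to a budget. The class name, window size, and 200 ms budget are assumptions chosen for the example.

```python
from collections import deque
from statistics import quantiles


class LatencyMonitor:
    """Keeps the most recent latencies and reports a percentile over them."""

    def __init__(self, window: int = 1000, p95_budget_ms: float = 200.0):
        self.samples = deque(maxlen=window)  # old samples drop off automatically
        self.p95_budget_ms = p95_budget_ms

    def record(self, latency_ms: float) -> None:
        self.samples.append(latency_ms)

    def p95(self) -> float:
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        return quantiles(self.samples, n=20)[-1]

    def over_budget(self) -> bool:
        # need at least two samples before a percentile is meaningful
        return len(self.samples) >= 2 and self.p95() > self.p95_budget_ms


monitor = LatencyMonitor(window=100)
for _ in range(100):
    monitor.record(50.0)
print(monitor.over_budget())  # False

for _ in range(20):
    monitor.record(500.0)  # a burst of slow predictions
print(monitor.over_budget())  # True
```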

Drift Detection and Retraining

Over time, models face data drift, where the distribution of input data shifts away from the data the model was trained on. MLOps platforms can trigger automated retraining pipelines when drift is detected.
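One common way to quantify drift for a single numeric feature is the Population Stability Index (PSI), which compares binned distributions of a reference sample and a live sample. The sketch below is a minimal version under stated assumptions: equal-width bins taken from the reference data, and a small floor for empty bins. A frequently cited rule of thumb treats values above roughly 0.25 as a significant shift, but the right threshold depends on your data.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """PSI between a reference sample (training) and a live sample (production)."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference feature

    def bin_fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            # clamp so live values outside the training range land in the edge bins
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # a small floor avoids log(0) and division by zero for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bin_fractions(expected), bin_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ai, ei in zip(a, e))


reference = [i / 100 for i in range(100)]      # roughly uniform on [0, 1)
shifted = [0.5 + i / 200 for i in range(100)]  # concentrated in the upper half

print(population_stability_index(reference, reference))  # 0.0
print(population_stability_index(reference, shifted) > 0.25)  # True
```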

Performance Optimization

Tools like TensorRT or ONNX Runtime optimize model inference for specific hardware, reducing latency and increasing throughput.

Monitoring ensures that your models stay efficient and provide consistent value.

Building a Scalable MLOps Pipeline

Designing for Scalability from the Start

Scalability is a cornerstone of effective MLOps. To ensure your pipeline grows with your needs:

- Use cloud-native tools like AWS SageMaker or Google Vertex AI for elastic scaling.

- Leverage distributed training frameworks such as PyTorch Distributed or Horovod for large datasets.

- Employ microservices architecture to isolate model training, deployment, and serving.

Starting with scalable infrastructure prevents bottlenecks as your project evolves.

Data Pipelines for High-Volume Workloads

A robust MLOps pipeline requires efficient data ingestion and preprocessing. Tools like:

- Apache Kafka for real-time data streaming.

- Apache Spark for distributed data processing.

- Feature stores (e.g., Feast or Tecton) to centralize reusable features.

These solutions ensure your models are always working with accurate, up-to-date data.

Cloud vs. On-Premises Considerations

Cloud platforms offer unparalleled scalability, but on-premises setups might suit industries with strict data regulations.

- Cloud: Elastic scaling, lower upfront costs, faster deployment.

- On-Premises: Enhanced control, security, and potential cost savings for large-scale operations.

Choosing the right environment depends on your business needs and regulatory constraints.

Best Practices for Deploying Models

Containerization and Orchestration

Deploying models in containers (using Docker) ensures consistency across environments. Tools like Kubernetes help manage:

- Load balancing.

- Autoscaling.

- Seamless updates with minimal downtime.

Choosing the Right Serving Framework

AI frameworks offer tailored serving solutions:

- TensorFlow Serving for TensorFlow models.

- TorchServe for PyTorch models.

- ONNX Runtime for cross-platform compatibility.

Selecting the right serving framework optimizes latency and resource usage.

A/B Testing and Shadow Deployment

Deploying updates without disruption is critical. Techniques include:

- A/B testing: Split traffic between old and new versions.

- Shadow deployment: Run the new model alongside the production model on the same live traffic, logging its predictions without returning them to users.

These strategies mitigate risks and ensure robust performance before full rollout.
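Both techniques can be sketched in a few lines. The example below assumes models are plain callables and that users are identified by a string ID. The function names and the 10% treatment share are illustrative, not taken from any serving framework.

```python
import hashlib
from typing import Callable, Dict, List

Model = Callable[[Dict[str, float]], float]


def ab_bucket(user_id: str, treatment_share: float = 0.1) -> str:
    """Deterministically assign a user to 'control' or 'treatment'."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    # map the first 8 hex digits to a number in [0, 1)
    position = int(digest[:8], 16) / 0x1_0000_0000
    return "treatment" if position < treatment_share else "control"


def serve_with_shadow(
    features: Dict[str, float],
    production: Model,
    shadow: Model,
    shadow_log: List[Dict[str, float]],
) -> float:
    """Return the production prediction; record the shadow prediction for comparison."""
    live = production(features)
    try:
        shadow_log.append({"production": live, "shadow": shadow(features)})
    except Exception:
        # a failing shadow model must never break the live response
        pass
    return live


log: List[Dict[str, float]] = []
result = serve_with_shadow({"x": 1.0}, lambda f: 1.0, lambda f: 2.0, log)
print(result, log)  # 1.0 [{'production': 1.0, 'shadow': 2.0}]
```

Hashing the user ID instead of drawing a random number keeps each user on the same variant across requests, which makes the comparison cleaner.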

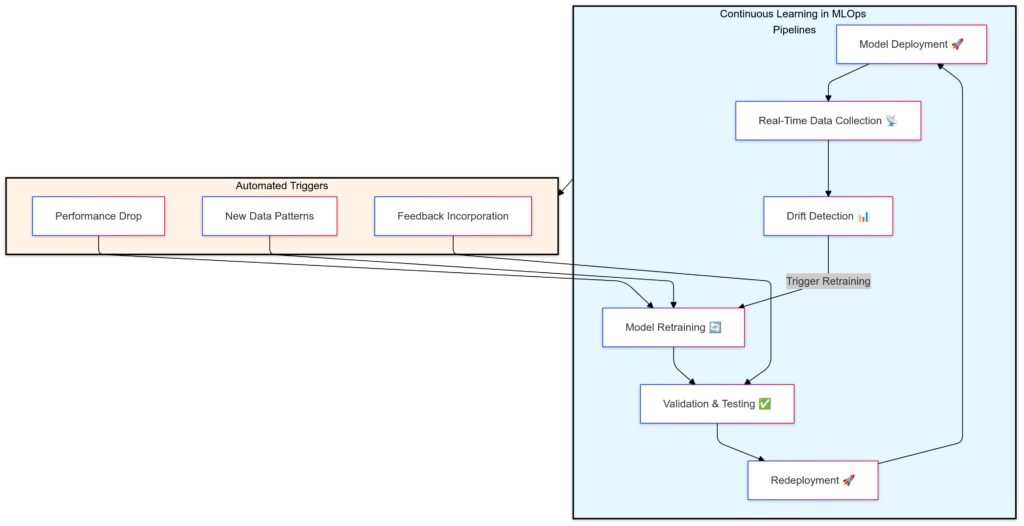

Continuous Learning in MLOps

Model Deployment 🚀: Begin with deploying the model into production.

Real-Time Data Collection 📡: Gather live data for performance monitoring and updates.

Drift Detection 📊: Identify changes in data distribution or model performance.

Model Retraining 🔄: Triggered by performance drops or data shifts, the model is retrained with updated data.

Validation & Testing ✅: Ensure the retrained model meets quality and performance standards.

Redeployment 🚀: Deploy the validated model back into production, completing the cycle.

Automated Triggers:

Performance Drop: Automatically detect and address significant declines in model effectiveness.

New Data Patterns: Identify and adapt to emerging trends or patterns in data.

Feedback Incorporation: Integrate feedback from users or stakeholders into retraining and validation.

Feedback Loops for Model Improvement

Real-world data is dynamic, and models must adapt. Feedback loops enable:

- Real-time performance evaluation from production data.

- Identification of edge cases for retraining.

Incorporating feedback helps maintain accuracy and relevance.

Automating Retraining Pipelines

AI frameworks integrate seamlessly with retraining workflows:

- Monitor performance metrics.

- Automatically trigger retraining on new datasets.

- Validate and redeploy updated models without manual intervention.

This ensures your ML system evolves alongside your data.

Ethical AI and Governance

As models become more autonomous, ensuring transparency and fairness is critical. Use tools like IBM’s AI Fairness 360 or Google’s What-If Tool to:

- Detect biases in data or predictions.

- Explain model decisions.

- Document model behavior as supporting evidence when working toward compliance with regulations like GDPR or CCPA.

A commitment to ethical AI builds trust and reduces risk.
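To make "detecting bias in predictions" concrete, here is a sketch of one simple fairness metric, the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. Dedicated toolkits offer many more metrics. This hand-rolled version and its function names are only an illustration.

```python
from collections import defaultdict
from typing import Dict, Sequence


def positive_rates(predictions: Sequence[int], groups: Sequence[str]) -> Dict[str, float]:
    """Share of positive predictions (1) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(
    predictions: Sequence[int], groups: Sequence[str]
) -> float:
    """Gap between the most and least favored group; 0.0 means equal rates."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(positive_rates(preds, groups))                 # {'a': 0.75, 'b': 0.25}
print(demographic_parity_difference(preds, groups))  # 0.5
```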

Advanced Tools for MLOps Optimization

Experiment Tracking

Keeping track of experiments is critical for reproducibility. Tools like MLflow or Weights & Biases allow you to log:

- Model parameters and metrics.

- Dataset versions.

- Hardware configurations.
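The kind of record these tools keep can be approximated with a JSON line per run. The sketch below is a stand-in to show the shape of the data, not the MLflow or Weights & Biases API. The `log_run` function and its field names are assumptions.

```python
import io
import json
import platform
import time
from typing import Dict, TextIO


def log_run(
    sink: TextIO,
    params: Dict[str, float],
    metrics: Dict[str, float],
    dataset_version: str,
) -> Dict[str, object]:
    """Append one experiment record as a JSON line and return it."""
    record = {
        "timestamp": time.time(),
        "params": params,
        "metrics": metrics,
        "dataset_version": dataset_version,
        # machine and interpreter details often explain unexpected differences
        "machine": platform.machine(),
        "python": platform.python_version(),
    }
    sink.write(json.dumps(record, sort_keys=True) + "\n")
    return record


# An in-memory buffer stands in for a log file here.
buffer = io.StringIO()
log_run(buffer, {"learning_rate": 0.01}, {"accuracy": 0.93}, dataset_version="v1")
print(buffer.getvalue())
```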

Hyperparameter Optimization

Frameworks like Ray Tune or Optuna automate hyperparameter tuning, leading to better-performing models faster.
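The simplest form of automated tuning is random search, sketched below. Libraries such as Optuna and Ray Tune provide more sophisticated search strategies. This version only shows the basic loop, and the toy objective is an assumption standing in for a real validation score.

```python
import random
from typing import Callable, Dict, Optional, Tuple

Params = Dict[str, float]


def random_search(
    objective: Callable[[Params], float],
    space: Dict[str, Tuple[float, float]],
    n_trials: int = 50,
    seed: int = 0,
) -> Tuple[Optional[Params], float]:
    """Sample parameters uniformly from `space` and keep the highest-scoring set."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_objective(params: Params) -> float:
    # hypothetical stand-in for "train a model and return its validation score";
    # the maximum is at learning_rate=0.1, dropout=0.3
    return -((params["learning_rate"] - 0.1) ** 2) - (params["dropout"] - 0.3) ** 2


space = {"learning_rate": (0.0, 1.0), "dropout": (0.0, 0.5)}
best, score = random_search(toy_objective, space, n_trials=200)
print(best, score)
```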

Edge Deployment for IoT Devices

For low-latency applications, AI frameworks enable edge deployment:

- TensorFlow Lite or PyTorch Mobile for lightweight inference.

- ONNX for compatibility across hardware platforms.

Optimizing for edge ensures your models can serve predictions anywhere, even with limited connectivity.

By combining MLOps best practices with AI frameworks, you can build a pipeline that scales with your workload while delivering reliable, optimized models. The result is faster deployment, better performance, and continuous learning, which is a strong position in a fast-moving ML landscape.

FAQs

Is MLOps only for large organizations?

No, MLOps benefits small teams too! Even a startup working on a chatbot can use tools like MLflow for experiment tracking and model versioning or Apache Airflow for workflow automation. These solutions scale with your team’s needs, making MLOps accessible to all.

What are feature stores, and why are they important?

Feature stores centralize feature engineering, ensuring consistent feature usage across training and serving. For example, a food delivery app might store customer location data as a reusable feature for both delivery time predictions and user clustering models.
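The food delivery example can be sketched with a toy in-memory store. Real feature stores add persistence, point-in-time lookups, and low-latency serving. The class and feature names below are assumptions used only to show the single shared read path.

```python
from typing import Dict, List


class InMemoryFeatureStore:
    """Toy feature store: one write path, shared by training and serving reads."""

    def __init__(self) -> None:
        self._features: Dict[str, Dict[str, float]] = {}

    def put(self, entity_id: str, features: Dict[str, float]) -> None:
        # merge new feature values into whatever is already stored for the entity
        self._features.setdefault(entity_id, {}).update(features)

    def get_vector(self, entity_id: str, names: List[str]) -> List[float]:
        # the same lookup serves both training-set construction and online inference
        row = self._features[entity_id]
        return [row[name] for name in names]


store = InMemoryFeatureStore()
store.put("customer-7", {"lat": 52.52, "lon": 13.40, "orders_30d": 4.0})

# Two different models reuse the same stored location features.
delivery_time_input = store.get_vector("customer-7", ["lat", "lon"])
clustering_input = store.get_vector("customer-7", ["lat", "lon", "orders_30d"])
print(delivery_time_input, clustering_input)
```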

How does MLOps support ethical AI?

MLOps platforms integrate tools like AI Fairness 360 to identify biases in models. For instance, a hiring algorithm could use these tools to ensure decisions are not influenced by irrelevant factors like gender or ethnicity. Regular audits ensure transparency and compliance with ethical guidelines.

Can MLOps improve edge AI deployment?

Yes! MLOps frameworks optimize models for deployment on edge devices like smartphones or IoT sensors. Using TensorFlow Lite or PyTorch Mobile, you can deploy lightweight models, such as real-time language translation apps, even on devices with limited resources.

These common questions cover the basics of how MLOps streamlines AI workflows; the ones that follow go deeper.

How does MLOps handle model versioning?

MLOps ensures proper model versioning by integrating tools like MLflow and DVC (Data Version Control). These tools allow teams to keep track of:

- Changes in model parameters.

- Updates to training datasets.

For instance, a financial institution deploying fraud detection models can roll back to a previous version if a new update affects performance. This ensures reliable and reproducible outcomes.
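The rollback idea can be illustrated with a minimal registry that remembers which version was in production before each promotion. This is a sketch under assumptions, not the MLflow or DVC interface. The class, method names, and artifact paths are hypothetical.

```python
from typing import Dict, List, Optional


class ModelRegistry:
    """Tracks registered model versions and which one is in production."""

    def __init__(self) -> None:
        self._versions: List[Dict[str, object]] = []
        self._production: Optional[int] = None
        self._history: List[int] = []  # earlier production versions, oldest first

    def register(self, artifact_uri: str, params: Dict[str, float], dataset_version: str) -> int:
        self._versions.append(
            {"artifact_uri": artifact_uri, "params": params, "dataset_version": dataset_version}
        )
        return len(self._versions)  # version numbers start at 1

    def promote(self, version: int) -> None:
        if not 1 <= version <= len(self._versions):
            raise ValueError(f"unknown version {version}")
        if self._production is not None:
            self._history.append(self._production)
        self._production = version

    def rollback(self) -> int:
        if not self._history:
            raise RuntimeError("no earlier production version to roll back to")
        self._production = self._history.pop()
        return self._production

    @property
    def production(self) -> Optional[int]:
        return self._production


registry = ModelRegistry()
v1 = registry.register("models/fraud/1", {"max_depth": 6}, dataset_version="2024-01")
v2 = registry.register("models/fraud/2", {"max_depth": 8}, dataset_version="2024-02")
registry.promote(v1)
registry.promote(v2)
print(registry.rollback())  # 1
```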

How do CI/CD pipelines work in MLOps?

CI/CD pipelines in MLOps automate the process of:

- Building and testing models during development.

- Deploying updates without downtime.

For example, an online streaming platform might use a CI/CD pipeline to regularly update its recommendation model with new user data. With tools like GitLab CI or Jenkins, the platform can automatically test the new model and deploy it after validation.
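The "deploy it after validation" step usually comes down to a gate that compares the candidate model with the current one. The sketch below shows one possible gate. The metric names, the latency budget, and the function itself are assumptions, not part of GitLab CI or Jenkins.

```python
from typing import Dict


def should_promote(
    candidate: Dict[str, float],
    baseline: Dict[str, float],
    min_gain: float = 0.0,          # assumed: candidate must at least match the baseline
    max_latency_ms: float = 100.0,  # assumed latency budget
) -> bool:
    """Promote only if accuracy does not regress and latency stays within budget."""
    accurate_enough = candidate["accuracy"] >= baseline["accuracy"] + min_gain
    fast_enough = candidate["p95_latency_ms"] <= max_latency_ms
    return accurate_enough and fast_enough


baseline = {"accuracy": 0.91, "p95_latency_ms": 70.0}
candidate = {"accuracy": 0.93, "p95_latency_ms": 80.0}
print(should_promote(candidate, baseline))  # True
```

In a CI job, a script would typically exit with a non-zero status when the gate returns `False`, which stops the pipeline before the deployment stage.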

What role does containerization play in MLOps?

Containerization ensures models run consistently across development, testing, and production environments. Using Docker, teams package models with all their dependencies. For example:

- A computer vision model built with TensorFlow and OpenCV can run seamlessly in any Kubernetes cluster when containerized.

This eliminates the “it works on my machine” problem.

How does MLOps ensure reproducibility?

Reproducibility is achieved through:

- Experiment tracking tools like Weights & Biases or Neptune.

- Logging of hyperparameters, data versions, and evaluation metrics.

For instance, a marketing analytics team might revisit an experiment six months later to test new data. Using MLOps tools, they can replicate past results exactly, accelerating innovation.

Can MLOps help with regulatory compliance?

Yes! Industries like healthcare, finance, and insurance often require adherence to strict regulations. MLOps frameworks facilitate compliance by:

- Tracking data lineage for audit trails.

- Enforcing access controls to protect sensitive information.

For example, an AI model predicting patient diagnoses can use MLOps to ensure compliance with HIPAA by restricting access to identifiable data.

How do MLOps platforms handle multi-cloud or hybrid environments?

Many MLOps platforms, like Kubeflow or MLflow, support multi-cloud setups, enabling seamless integration across AWS, Azure, or Google Cloud. This is ideal for organizations balancing on-premises and cloud-based resources. For example, a retail company may train models on-premises for cost efficiency but deploy them on a public cloud for global scalability.

How can drift detection be automated in MLOps?

Automated drift detection tools like Evidently AI or custom scripts monitor production data distributions and compare them with training data. If significant changes are detected, they can trigger alerts or initiate retraining. For instance, a social media platform’s content moderation model can be retrained as user behavior evolves, ensuring consistent accuracy.

What is the role of orchestration in MLOps?

Orchestration tools like Apache Airflow, Prefect, or Luigi schedule and coordinate pipeline tasks. They ensure that:

- Data preprocessing happens before training.

- Models are tested before deployment.

For example, an ad tech company might schedule a pipeline to update bidding models daily. Orchestration ensures these workflows run without manual intervention.
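At its core, an orchestrator resolves task dependencies into a valid execution order. The sketch below shows that idea with Python's standard `graphlib` module (Python 3.9+). It is not Airflow, Prefect, or Luigi code, and the task names are illustrative.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(
    tasks: Dict[str, Callable[[], None]],
    depends_on: Dict[str, Set[str]],
) -> List[str]:
    """Run every task after all of its dependencies; return the execution order."""
    # static_order() yields each task only after the tasks it depends on
    order = list(TopologicalSorter(depends_on).static_order())
    for name in order:
        tasks[name]()
    return order


executed: List[str] = []
tasks = {
    name: (lambda n=name: executed.append(n))
    for name in ["ingest", "preprocess", "train", "test", "deploy"]
}
depends_on = {
    "preprocess": {"ingest"},
    "train": {"preprocess"},  # preprocessing happens before training
    "test": {"train"},
    "deploy": {"test"},       # models are tested before deployment
}

print(run_pipeline(tasks, depends_on))
# ['ingest', 'preprocess', 'train', 'test', 'deploy']
```

A circular dependency raises `graphlib.CycleError`, so the configuration mistake surfaces before any task runs.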

How does MLOps improve model performance on edge devices?

Edge AI models often need to run with low latency and minimal computational resources. MLOps frameworks support this by:

- Optimizing models using TensorRT or ONNX Runtime.

- Enabling deployments with TensorFlow Lite or PyTorch Mobile.

For instance, a smart home device using speech recognition can leverage these optimizations to deliver real-time responses, even with limited hardware.

How does feature engineering benefit from MLOps?

MLOps tools simplify feature engineering by centralizing reusable features in feature stores like Feast or Tecton. This ensures:

- Consistency between training and serving data.

- Faster deployment of new models.

For example, a ride-sharing app might store user location history and trip preferences as features, streamlining updates for their demand prediction models.

Can MLOps support multiple teams working on the same project?

Yes! Collaboration is one of the core benefits of MLOps. With shared tools like Git, MLflow, or Databricks, multiple teams can:

- Collaborate on different parts of the ML lifecycle.

- Track progress and ensure alignment.

For example, a banking organization can have one team focusing on fraud detection model development while another ensures the deployment process adheres to security standards.

Taken together, these answers show that MLOps is a comprehensive approach that not only streamlines deployment but also improves collaboration, scalability, and compliance across diverse industries.

Resources

Official Documentation

TensorFlow Extended (TFX):

Provides resources for building end-to-end ML pipelines using TensorFlow tools.

PyTorch:

Covers training, deployment, and scaling workflows with PyTorch, including integrations with MLOps tools.

MLflow:

Comprehensive guide to tracking, packaging, and deploying machine learning models.

Kubeflow:

Explains how to build, deploy, and manage scalable machine learning pipelines.

Books and E-Books

- “Building Machine Learning Pipelines” by Hannes Hapke and Catherine Nelson

Focuses on implementing ML pipelines with tools like TFX and Kubeflow.

- “Practical MLOps” by Noah Gift and Alfredo Deza

A practical guide to implementing MLOps in real-world scenarios.

- “Designing Machine Learning Systems” by Chip Huyen

Focuses on designing scalable and efficient machine learning systems, including MLOps concepts.

Online Courses and Tutorials

- Coursera – Machine Learning Engineering for Production (MLOps)

Offered by DeepLearning.AI, this specialization teaches MLOps fundamentals and advanced practices.

- Udemy – MLOps Concepts

A beginner-friendly course introducing key MLOps practices and tools like MLflow and Docker.

- AWS Training – MLOps Foundations on SageMaker

AWS provides hands-on training for building and deploying ML workflows using SageMaker.

Blogs and Communities

- MLOps Community:

Join a vibrant network of professionals sharing insights, tools, and case studies about MLOps.

- Neptune.ai Blog:

Deep dives into experiment tracking, model monitoring, and other MLOps essentials.

- Weights & Biases Blog:

Features articles on building scalable ML workflows and practical MLOps advice.

Tools and Platforms

- Evidently AI:

A drift detection and model monitoring tool for production environments.

- DVC (Data Version Control):

Version control system tailored for ML workflows, enabling reproducibility and collaboration.

- Seldon Core:

An open-source platform for deploying machine learning models at scale.

Research Papers

- “Hidden Technical Debt in Machine Learning Systems”

By Sculley et al., this paper highlights challenges in deploying and maintaining ML systems.

- “The ML Test Score: A Rubric for ML Production Readiness”

Provides a checklist to evaluate the production readiness of ML systems.