Advances in deep learning have opened new pathways for handling sequential data, a cornerstone of applications in finance, healthcare, NLP, and beyond.

While Recurrent Neural Networks (RNNs) and their evolved forms like Long Short-Term Memory (LSTM) networks have been long-standing champions in this arena, Neural Ordinary Differential Equations (Neural ODEs) have recently entered the scene, offering unique advantages, especially in continuous data settings.

But how do these models stack up against each other? And when should you choose one over the others?

Neural ODEs: Continuous Modeling with Differentiable Solvers

How Neural ODEs Work

Unlike traditional neural networks that apply a fixed sequence of discrete layers, Neural ODEs use a neural network to parameterize the derivative of the hidden state with respect to time, then compute the hidden state at any desired time with a numerical ODE solver. Instead of updating in discrete steps (as RNNs do), the hidden state follows a smooth, continuous trajectory. This makes Neural ODEs well suited to systems where data evolves gradually or is observed sporadically.
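Written out, the formulation from the original Neural ODE paper (Chen et al.) uses a network f with parameters θ to define the time derivative of the hidden state h(t). The state at a later time is the integral of that derivative, which a numerical solver computes in practice. A single explicit Euler step is the simplest such solver, and it has the same form as a residual-network layer. The vanilla RNN update is included for comparison; its step is fixed at one per observation.

```latex
\frac{d\mathbf{h}(t)}{dt} = f(\mathbf{h}(t), t, \theta), \qquad
\mathbf{h}(t_1) = \mathbf{h}(t_0) + \int_{t_0}^{t_1} f(\mathbf{h}(t), t, \theta)\, dt

% One explicit Euler step of size \Delta t (the simplest possible solver):
\mathbf{h}(t + \Delta t) \approx \mathbf{h}(t) + \Delta t \, f(\mathbf{h}(t), t, \theta)

% A vanilla RNN instead takes exactly one discrete step per input x_t:
\mathbf{h}_t = \tanh(W_x \mathbf{x}_t + W_h \mathbf{h}_{t-1} + \mathbf{b})
```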

Strengths of Neural ODEs

- Continuous Time Modeling: Neural ODEs excel when events are non-uniformly spaced and when the goal is to capture subtle, gradual changes in how a system evolves.

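- Memory Efficiency: When trained with the adjoint sensitivity method, Neural ODEs do not store intermediate solver states for backpropagation. Instead, they recompute them on the fly by solving an ODE backward in time, so memory cost stays roughly constant in the number of solver steps. Backpropagation through a deep RNN or LSTM, by contrast, stores activations for every step.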

- Adaptability: They offer flexible time intervals, making them valuable for systems with irregular sampling or missing time points.
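The memory saving comes from the adjoint sensitivity method described by Chen et al. Rather than storing every intermediate solver state, it obtains the gradient by solving a second ODE backward in time and reconstructs the hidden state along the way. The minimal sketch below applies that idea to a deliberately tiny problem. It assumes scalar linear dynamics f(h) = a·h with a single parameter a, a loss L = h(T), and a hand-written fixed-step RK4 solver; all of these are illustrative choices, not a library implementation. For this toy problem the exact gradient is T·h0·e^(aT), so the result can be checked.

```python
import math


def rk4_step(f, t, y, dt):
    """One classical Runge-Kutta (RK4) step for a system y' = f(t, y), with y a list."""
    k1 = f(t, y)
    k2 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + dt / 2, [yi + dt / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + dt, [yi + dt * ki for yi, ki in zip(y, k3)])
    return [yi + dt / 6 * (a + 2 * b + 2 * c + d)
            for yi, a, b, c, d in zip(y, k1, k2, k3, k4)]


def integrate(f, y0, t0, t1, steps):
    """Fixed-step RK4 from t0 to t1 (t1 < t0 integrates backward); keeps only the final state."""
    dt = (t1 - t0) / steps
    y, t = list(y0), t0
    for _ in range(steps):
        y = rk4_step(f, t, y, dt)
        t += dt
    return y


def forward_and_adjoint_grad(a, h0, T, steps=100):
    """Toy model dh/dt = a*h with loss L = h(T). Returns (L, dL/da) via the adjoint method."""
    # Forward pass: only h(T) is kept, not the intermediate states.
    hT = integrate(lambda t, y: [a * y[0]], [h0], 0.0, T, steps)[0]

    # Augmented state [h, lam, g], integrated from T back to 0:
    #   dh/dt   =  a*h                    (recompute the trajectory backward)
    #   dlam/dt = -lam * df/dh = -lam*a   (adjoint lam(t) = dL/dh(t))
    #   dg/dt   = -lam * df/da = -lam*h   (g(0) = dL/da)
    def augmented(t, y):
        h, lam, _ = y
        return [a * h, -lam * a, -lam * h]

    lam_T = 1.0  # dL/dh(T) for L = h(T)
    _, _, grad_a = integrate(augmented, [hT, lam_T, 0.0], T, 0.0, steps)
    return hT, grad_a


loss, grad = forward_and_adjoint_grad(a=-0.5, h0=2.0, T=3.0)
exact = 3.0 * 2.0 * math.exp(-0.5 * 3.0)  # T * h0 * exp(a*T)
print(f"dL/da adjoint={grad:.6f} exact={exact:.6f}")
```

A practical implementation would obtain ∂f/∂h and ∂f/∂θ for a neural network f with automatic differentiation instead of writing them by hand. The torchdiffeq library, for example, exposes this approach through its `odeint_adjoint` function.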

Use Cases for Neural ODEs

Neural ODEs are ideal for time-series applications where data points are sparse or unevenly spaced. For instance:

- Biological and ecological modeling: In these domains, processes evolve over time in a non-discrete manner, benefiting from Neural ODEs’ continuity.

- Finance and economics: Many financial quantities, like interest rates or stock prices, are commonly modeled as continuous-time processes even though they are observed at discrete, often uneven, times.

- Physics and engineering: Physical systems are often governed by differential equations, making Neural ODEs a natural fit.


Recurrent Neural Networks (RNNs): The Baseline for Sequential Data

How RNNs Work

Recurrent Neural Networks use a looping structure that allows information to persist. At each time step, the hidden state is updated with new inputs, giving RNNs a form of “memory” for capturing dependencies in sequences. However, standard RNNs struggle with long sequences due to issues like vanishing gradients and limited long-term memory.
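A minimal sketch of that update in plain Python follows. It assumes scalar inputs and a scalar hidden state so that no libraries are needed. The weights w_x, w_h, and b are hypothetical values chosen for illustration, not trained parameters. The same cell is applied at every step, and the hidden state is the only thing carried forward.

```python
import math


def rnn_forward(xs, w_x, w_h, b, h0=0.0):
    """Run a scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    h = h0
    states = []
    for x in xs:
        # The same weights are reused at every time step.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights, for illustration only.
states = rnn_forward([1.0, 0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print([round(s, 4) for s in states])
```

The input seen at the first step still affects later states, but with w_h = 0.5 its influence shrinks at every step. This is the short memory the paragraph describes.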

Strengths of RNNs

- Simple Architecture: RNNs provide a straightforward approach to sequential data, ideal for situations where patterns are relatively short and simple.

- Low Computational Cost: Basic RNNs are less resource-intensive than LSTMs or Neural ODEs, making them faster for simpler tasks.

- Ease of Interpretation: The straightforward structure can sometimes make RNNs easier to debug or interpret compared to more complex networks.
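One concrete source of the cost gap is parameter count. Assume input size d, hidden size n, and one bias vector per block. A vanilla RNN then has n(d + n + 1) parameters. An LSTM has four blocks of that size (the input, forget, and output gates plus the candidate cell update), so it has 4n(d + n + 1). Per-step arithmetic scales roughly the same way. Libraries differ slightly: PyTorch's `nn.RNN` and `nn.LSTM`, for instance, keep two bias vectors per block, which adds n (or 4n) parameters.

```python
def rnn_params(d: int, n: int) -> int:
    """Vanilla RNN: W_x (n x d), W_h (n x n), and one bias vector b (n)."""
    return n * d + n * n + n


def lstm_params(d: int, n: int) -> int:
    """LSTM: the same three tensors for each of 4 blocks (input, forget, output gates, candidate)."""
    return 4 * rnn_params(d, n)


d, n = 32, 128  # hypothetical input and hidden sizes
print(rnn_params(d, n), lstm_params(d, n))  # prints: 20608 82432
```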

Use Cases for RNNs

RNNs work best when dealing with short, predictable sequences. Typical scenarios include:

- Text-based tasks: Simple text classification tasks can often be handled by basic RNNs, especially when dealing with short documents.

- Audio and speech: Tasks on short audio segments, such as phoneme recognition, can be effectively modeled with RNNs.

- Sensor data with fixed sampling: RNNs can perform well when sensor data is recorded at fixed intervals and doesn’t require long-range dependency tracking.

Long Short-Term Memory (LSTM): Managing Long-Term Dependencies

How LSTMs Work

LSTMs are an extension of RNNs designed to manage long-term dependencies more effectively. Alongside the hidden state they maintain a cell state. A set of gates (input, forget, and output) regulates what is written to, kept in, and read from that cell, so the model can retain or discard information as needed. This architecture is particularly beneficial for tasks that require remembering inputs from many steps earlier.
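The gating can be written out as a single step, sketched below in plain Python for a scalar hidden state. It uses the standard LSTM equations: sigmoid gates, a tanh candidate, and an additive cell-state update. The parameter dictionary and its values are hypothetical and were chosen only so the example runs and shows the effect of the forget gate.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One scalar LSTM step. p maps each block name to (w_x, w_h, b)."""
    def pre(name):
        w_x, w_h, b = p[name]
        return w_x * x + w_h * h_prev + b

    i = sigmoid(pre("input"))    # how much new information to write
    f = sigmoid(pre("forget"))   # how much of the old cell state to keep
    o = sigmoid(pre("output"))   # how much of the cell state to expose
    g = math.tanh(pre("cell"))   # candidate values to write
    c = f * c_prev + i * g       # additive cell-state update
    h = o * math.tanh(c)
    return h, c


# Hypothetical parameters: a large forget-gate bias keeps f close to 1.
params = {
    "input": (1.0, 0.0, 0.0),
    "forget": (0.0, 0.0, 5.0),
    "output": (0.0, 0.0, 5.0),
    "cell": (1.0, 0.0, 0.0),
}
h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    print(f"h={h:.4f} c={c:.4f}")
```

After the single non-zero input, the candidate is zero and the cell state is only multiplied by the forget gate (about 0.993 here). It therefore barely decays over the following steps, which is how an LSTM can hold information across long spans.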

Strengths of LSTMs

- Effective for Long Sequences: LSTMs maintain information over longer time spans, making them effective for sequences with long-term dependencies.

- Mitigation of Vanishing Gradients: The forget gate and the additive cell-state update give gradients a path that does not shrink at every step, so LSTMs can be trained on much longer sequences than vanilla RNNs.

- Proven Track Record: They are a tried-and-true approach for sequential data and have been widely adopted across industries, particularly in NLP.

Use Cases for LSTMs

LSTMs shine in applications where remembering past inputs is crucial:

- Natural Language Processing (NLP): LSTMs are widely used in tasks like sentiment analysis, machine translation, and text generation.

- Financial forecasting: Stock price prediction models often leverage LSTMs to capture long-term trends and seasonality.

- Speech recognition: For tasks where the context of a full sentence or longer audio clip matters, LSTMs are highly effective.


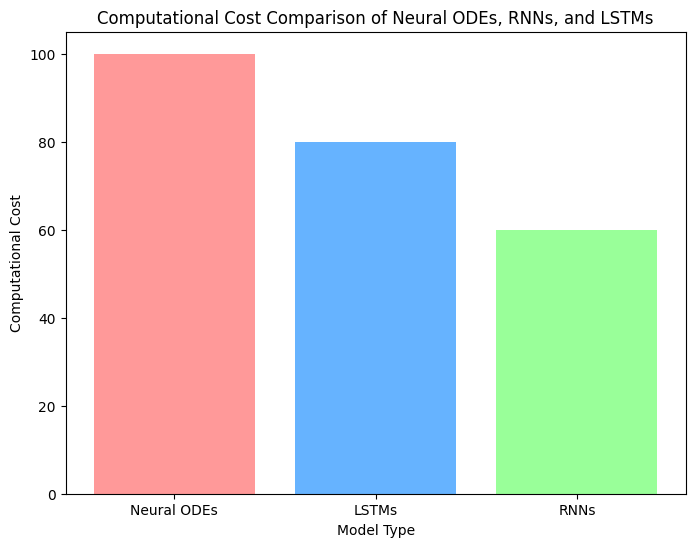

Handling Computational Requirements: Neural ODEs vs. RNNs vs. LSTMs

Computational Efficiency and Training Time

One of the main differentiators among Neural ODEs, RNNs, and LSTMs is the computational power and training time required:

- Neural ODEs: These models rely on differential equation solvers, which can be computationally intensive, especially for long sequences or complex dynamics, because the number of solver steps is not fixed in advance. However, when trained with the adjoint method they use memory efficiently, which helps when models or time horizons are large.

- RNNs: RNNs are typically the least computationally demanding due to their simpler structure. They can be trained relatively quickly on shorter sequences, making them a go-to for tasks with simpler patterns or limited dependencies.

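- LSTMs: The additional gates and memory cell structures in LSTMs make them more computationally demanding than RNNs. However, they remain manageable on modern hardware and are generally faster to train than Neural ODEs on longer sequences. The trade-off is that they usually need more memory during training than an adjoint-trained Neural ODE, because backpropagation through time stores activations for every step.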
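An adaptive solver shows why Neural ODE cost is harder to predict. An RNN or LSTM does a fixed amount of work per time step, but an adaptive ODE solver takes as many steps as its error tolerance demands. The number of function evaluations therefore grows as the tolerance tightens, and in a real Neural ODE each evaluation is a forward pass through the network f. The sketch below uses a simple embedded Heun-Euler pair in plain Python on the test problem dh/dt = -h. It stands in for the higher-order adaptive solvers that Neural ODE libraries use (such as Dormand-Prince, the default in torchdiffeq) and is not any library's implementation.

```python
import math


def adaptive_heun_euler(f, y0, t0, t1, tol, h=0.1):
    """Integrate scalar y' = f(t, y) from t0 to t1 with an embedded Heun-Euler pair.

    Returns (approximate y(t1), number of evaluations of f).
    """
    t, y, nfe = t0, y0, 0
    while t < t1:
        last = h >= t1 - t
        if last:
            h = t1 - t                      # land exactly on t1
        k1 = f(t, y)
        k2 = f(t + h, y + h * k1)
        nfe += 2
        y_euler = y + h * k1                # 1st-order estimate
        y_heun = y + h * (k1 + k2) / 2      # 2nd-order estimate
        err = abs(y_heun - y_euler)         # local error estimate
        if err <= tol:                      # accept the step
            t = t1 if last else t + h
            y = y_heun
        # Adjust the step size from the error estimate (bounded growth/shrink).
        factor = 0.9 * math.sqrt(tol / err) if err > 0 else 2.0
        h *= min(2.0, max(0.2, factor))
    return y, nfe


def decay(t, y):
    """Test dynamics dh/dt = -h; the exact solution is h(t) = h(0) * exp(-t)."""
    return -y


for tol in (1e-2, 1e-4, 1e-6):
    y1, nfe = adaptive_heun_euler(decay, 1.0, 0.0, 1.0, tol)
    print(f"tol={tol:g}  h(1)={y1:.6f}  exact={math.exp(-1):.6f}  evaluations={nfe}")
```

With this pair, the error estimate scales with the square of the step size. Tightening the tolerance a hundredfold therefore roughly multiplies the evaluation count by ten, whereas an RNN's cost depends only on sequence length.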

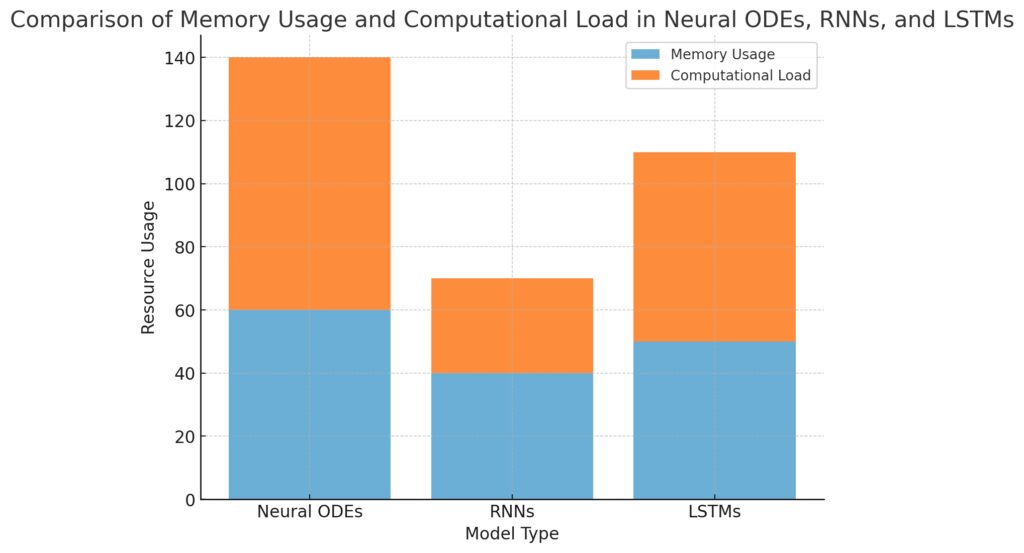

Figure: relative computational load and memory usage. Neural ODEs have the highest computational load with moderate memory usage, RNNs have the lowest computational load and memory usage, and LSTMs fall between the two on both metrics.

In short, Neural ODEs can be computationally expensive due to their continuous modeling, RNNs are the lightest, and LSTMs fall somewhere in between.

Model Selection Based on Resources

When choosing a model, it’s essential to match your available computational resources with the model’s complexity:

- For low-resource settings, opt for RNNs where possible.

- In medium-resource settings, LSTMs provide a robust solution.

- For high-resource, high-precision needs, Neural ODEs can be worth the investment, especially if your data benefits from continuous modeling.

Dealing with Irregularly Sampled Data: A Unique Edge for Neural ODEs

Irregular Sampling: A Common Challenge in Sequential Data

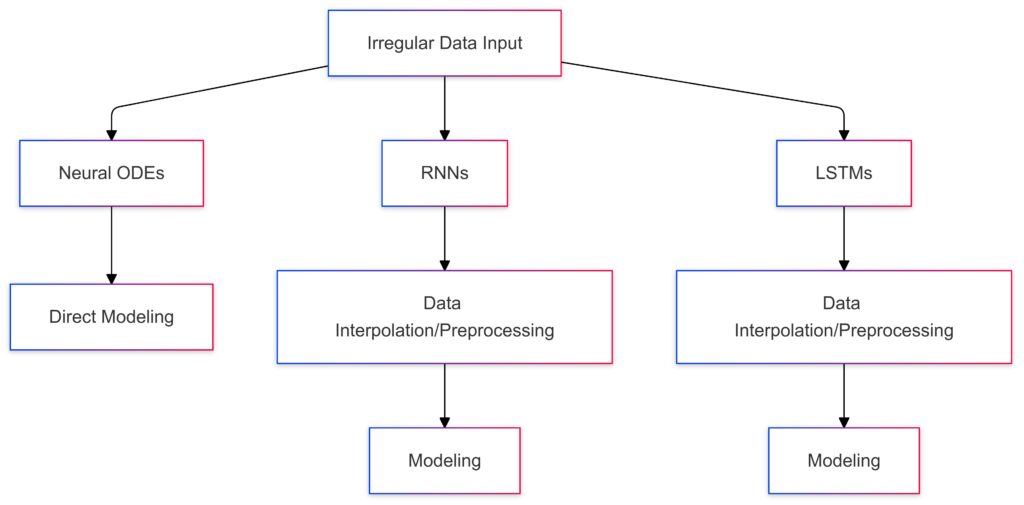

Real-world data isn’t always neatly spaced; it’s often irregular or incomplete. This inconsistency poses challenges for standard RNNs and LSTMs, which process one observation per step and implicitly assume evenly spaced steps. Handling such data usually requires additional preprocessing, such as interpolation onto a regular grid, which can introduce noise or distort the original data.

RNNs and LSTMs: Require data interpolation/preprocessing before the modeling phase.
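That preprocessing step can be sketched as resampling onto a regular grid with linear interpolation, written here in plain Python. The timestamps and values are made-up illustrative data, and linear interpolation is just one common choice. Every grid value between two observations is a straight-line guess rather than a measurement, which is the kind of distortion described above.

```python
from bisect import bisect_right


def resample_linear(times, values, step):
    """Linearly interpolate irregular (time, value) observations onto a regular grid.

    Assumes times are sorted and strictly increasing.
    """
    n_points = int((times[-1] - times[0]) / step + 1e-9) + 1
    grid = [times[0] + k * step for k in range(n_points)]
    out = []
    for t in grid:
        j = bisect_right(times, t)        # first index with times[j] > t
        if j == len(times):               # exactly at the last observation
            out.append(values[-1])
        else:
            t0, t1 = times[j - 1], times[j]
            v0, v1 = values[j - 1], values[j]
            out.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return grid, out


obs_t = [0.0, 0.5, 3.0, 3.2]   # irregular timestamps (illustrative)
obs_v = [1.0, 2.0, 0.0, 1.0]
grid, resampled = resample_linear(obs_t, obs_v, step=1.0)
print(grid, [round(v, 3) for v in resampled])
```

On a grid with step 1.0, the observed peak of 2.0 at t = 0.5 never appears in the resampled series, and the reading at t = 3.2 falls outside the grid entirely.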

Neural ODEs’ Advantage in Continuous Modeling

Since Neural ODEs model data in continuous time, they can accommodate irregularly sampled data without interpolation: the solver simply integrates the hidden state from one observation time to the next. The model learns how the system evolves between observations as they actually occur. This can capture the dynamics more faithfully in datasets like medical records, environmental data, or sporadically recorded financial transactions.

For example, Neural ODEs are particularly well-suited for healthcare time-series where patient visits or recorded measurements are irregular but critical for accurate prediction.
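To make the contrast concrete, the sketch below evaluates a continuous hidden state directly at irregular observation times, integrating across each gap as it occurs with no resampling. The dynamics function stands in for a trained network. It is a hypothetical choice, f(h) = tanh(w·h + b) with made-up scalar w and b. The solver is fixed-step RK4 with a number of sub-steps proportional to each gap; this is also an assumption of the sketch, since real libraries usually use adaptive solvers.

```python
import math


def f(h: float, w: float = -1.0, b: float = 0.5) -> float:
    """Hypothetical learned dynamics dh/dt = tanh(w*h + b); w and b are made up."""
    return math.tanh(w * h + b)


def rk4_step(h: float, dt: float) -> float:
    """One classical RK4 step for the autonomous scalar ODE dh/dt = f(h)."""
    k1 = f(h)
    k2 = f(h + dt / 2 * k1)
    k3 = f(h + dt / 2 * k2)
    k4 = f(h + dt * k3)
    return h + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)


def states_at(times, h0: float, max_dt: float = 0.01):
    """Hidden state at each observation time, integrating across each gap as it occurs."""
    h, t_prev, out = h0, times[0], []
    for t in times:
        gap = t - t_prev
        n = max(1, math.ceil(gap / max_dt))   # longer gaps get more solver sub-steps
        for _ in range(n):
            h = rk4_step(h, gap / n)
        out.append(h)
        t_prev = t
    return out


obs_times = [0.0, 0.3, 0.35, 2.0, 7.5]   # irregular gaps, no resampling
states = states_at(obs_times, h0=0.0)
print([round(s, 4) for s in states])
```

Because the state is defined for every t, stopping at extra timestamps does not change the trajectory. Integrating straight from 0 to 7.5 gives the same final state, up to solver error.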

Memory and Long-Term Dependency Handling: RNNs, LSTMs, and Neural ODEs

The Challenge of Long-Term Dependencies

Sequential data often requires long-term memory—the ability to retain and access information from earlier time steps. This capability varies widely across the models:

- RNNs: Suffer from short-term memory issues due to vanishing gradients, making them unsuitable for tasks with extended dependencies.

- LSTMs: Effectively manage long-term dependencies thanks to gating mechanisms, making them highly popular in tasks like language modeling or predictive maintenance where long-term information retention is crucial.

- Neural ODEs: Their suitability for long-term dependencies depends on the specific problem. They are strongest on continuous, slowly evolving data where exact timing matters more than retaining explicit memories of distant events, and they offer a clear advantage when what happens to the state between observations is essential to the task.
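A back-of-the-envelope calculation shows why the first two entries differ. For a scalar RNN h_t = tanh(w·h_{t-1} + u·x_t + b), the gradient of h_T with respect to an early state h_0 is a product of T factors w·(1 - h_t²). When those factors are below 1, the product shrinks exponentially. Along the LSTM's cell-state path, c_t = f_t·c_{t-1} + i_t·g_t, so ∂c_T/∂c_0 along that direct path is the product of the forget-gate values (ignoring the gates' own dependence on earlier states). That product stays sizable when the forget gate is near 1. The numbers below (w = 0.5, hidden states of 0.3, forget gates of 0.97) are hypothetical and only illustrate the scaling; they are not measured from trained models.

```python
def rnn_path_gradient(w: float, hidden_states) -> float:
    """d h_T / d h_0 for a scalar tanh RNN: product of w * (1 - h_t^2) over the steps."""
    grad = 1.0
    for h in hidden_states:
        grad *= w * (1.0 - h * h)
    return grad


def lstm_cell_path_gradient(forget_gates) -> float:
    """d c_T / d c_0 along the direct cell-state path: product of forget-gate values."""
    grad = 1.0
    for f in forget_gates:
        grad *= f
    return grad


for T in (10, 50, 100):
    rnn = rnn_path_gradient(0.5, [0.3] * T)     # hypothetical w and hidden states
    lstm = lstm_cell_path_gradient([0.97] * T)  # hypothetical forget-gate values
    print(f"T={T:3d}  RNN: {rnn:.2e}  LSTM cell path: {lstm:.2e}")
```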

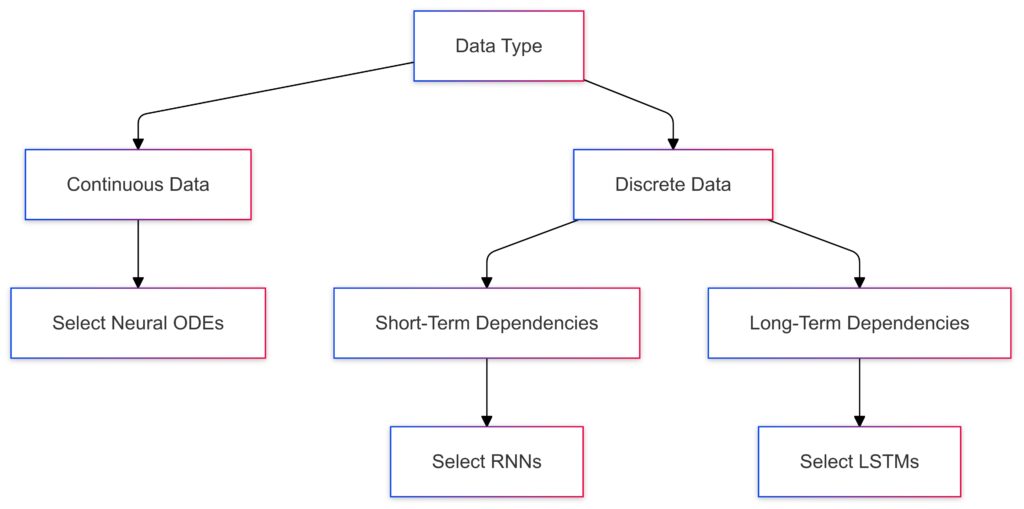

Figure: model selection for discrete data. Short-term dependencies lead to RNNs; long-term dependencies lead to LSTMs.

Flexibility in Application: Which Model Fits Your Use Case?

Quick Recommendations by Task Type

- Short sequences with straightforward patterns: RNNs work well here, especially if computational efficiency is a priority.

- Long sequences or sequences with complex dependencies: LSTMs are generally the best fit for tasks requiring memory over long time steps.

- Continuous, irregularly sampled data: Neural ODEs provide a natural fit for continuous modeling, where time dynamics and subtle variations are crucial.

| Feature | Neural ODEs | RNNs | LSTMs |
|---|---|---|---|
| Real-Time Suitability | Limited for real-time applications due to computational intensity. | Suitable for real-time processing with lower complexity. | Suitable, though may lag in high-complexity environments. |
| Continuous Data Handling | Highly suited for handling continuous and irregularly sampled data. | Less suitable; primarily designed for discrete time steps. | Moderately suitable; designed for discrete steps but adaptable with preprocessing. |
| Long-Term Dependency Management | Problem-dependent; better at modeling smooth, continuous dynamics than at retaining explicit long-term memory. | Limited capacity to manage long-term dependencies effectively. | Excellent for capturing long-term dependencies in sequential data. |
| Training Complexity | High; requires ODE solvers and careful tuning of solver settings. | Low complexity, straightforward to train. | Moderate to high complexity, with some optimization challenges. |
| Memory Efficiency | Memory-efficient in solver depth when trained with the adjoint method, at the cost of extra computation; backpropagating through every solver step is memory-intensive. | Most memory-efficient, especially with short sequences. | Moderate efficiency; longer sequences increase memory use during training. |

Summary: Picking the Right Model for Your Sequential Data

In a nutshell:

- Choose RNNs for quick, low-complexity tasks with limited dependencies.

- Use LSTMs for standard tasks that need to capture long-term dependencies in sequential data.

- Go with Neural ODEs when dealing with irregularly spaced data, continuous processes, or when modeling smooth, evolving data over time.

By aligning your choice with your data’s characteristics and your task’s complexity, you can unlock the potential of each model for truly effective sequential data analysis.

FAQs

How do Neural ODEs handle missing data?

Neural ODEs excel with irregular or missing data because they model data evolution as a continuous function over time. This allows them to skip interpolation steps, directly learning from whatever data points are available. This feature is especially useful in applications like medical data, where recordings are not consistently spaced.

Which model is best for real-time applications?

For real-time applications that need fast responses, RNNs and LSTMs tend to be better choices than Neural ODEs, as they’re generally faster to compute. RNNs are particularly efficient, while LSTMs balance real-time responsiveness with the ability to capture long-term dependencies, making them ideal for tasks like speech recognition or real-time language translation.

Are Neural ODEs more computationally intensive than LSTMs?

Yes, Neural ODEs can be more computationally demanding, especially with long sequences. They rely on differential equation solvers, which may require more processing power and time. While Neural ODEs are memory efficient, their continuous modeling approach often makes them slower than LSTMs, which use discrete steps and a gate mechanism to handle dependencies.

Can Neural ODEs replace RNNs and LSTMs in all cases?

No, Neural ODEs are not a universal replacement for RNNs and LSTMs. Each model has strengths suited to specific types of data and tasks. Neural ODEs are best for continuously evolving systems or irregularly spaced data, but they may not be ideal for tasks that require handling explicit long-term dependencies, where LSTMs often perform better. For tasks with frequent, regular updates and discrete sequential data (like language modeling or short audio sequences), RNNs and LSTMs remain competitive choices.

How do RNNs, LSTMs, and Neural ODEs compare in terms of memory efficiency?

Neural ODEs can be quite memory-efficient during training. With the adjoint method, they keep only the solution at the requested output times and recompute intermediate states by solving an ODE backward, instead of storing every solver step. RNNs and LSTMs trained with backpropagation through time must store the state at every step, which adds up for long sequences and large datasets. However, the ODE solvers that Neural ODEs rely on may increase computation time, especially for complex or long sequences. Between the two discrete models, LSTMs use more memory than RNNs for the same hidden size because each step computes and stores several gate activations plus a cell state, but they still remain manageable on standard hardware.

Which model is most effective for financial forecasting?

LSTMs tend to be the most effective for financial forecasting because they capture long-term dependencies and trends, which are often critical in financial data. Neural ODEs can also be valuable in finance, especially for modeling continuous-time processes like stock price dynamics or interest rates that don’t fit neatly into discrete time steps. RNNs, while useful for basic time-series forecasting, are generally not as powerful for capturing the long-range dependencies seen in complex financial data.

How do these models handle long-term dependencies differently?

- RNNs: Have limited ability to capture long-term dependencies due to vanishing gradients, making them suitable only for short sequences or patterns with immediate dependencies.

- LSTMs: Use gated mechanisms to manage long-term dependencies, making them effective at retaining relevant information over extended sequences.

- Neural ODEs: Don’t retain long-term memory in the same way as LSTMs. Instead, they excel in representing data as a continuous flow over time, making them ideal for tasks where subtle, ongoing transitions are more important than explicit, long-term memory.

What kinds of tasks are not suitable for Neural ODEs?

Neural ODEs may not be ideal for tasks that require long-range dependencies in a discrete context, like language modeling or extensive sequence prediction tasks where each step strongly relies on preceding states. They also may be less efficient for real-time applications that demand rapid computation, as Neural ODEs can be slower due to the continuous nature of the ODE solvers. Additionally, extremely large datasets may make Neural ODEs computationally prohibitive. For these types of tasks, LSTMs or RNNs are often more practical.

Are Neural ODEs suitable for natural language processing (NLP)?

Neural ODEs are generally not the go-to choice for NLP tasks like language modeling, text generation, or sentiment analysis, where discrete, structured, and long-range dependencies between words are crucial. LSTMs and transformers are better suited for NLP because they can handle complex sentence structures, grammar rules, and long-term context in sequences of words. Neural ODEs lack the specific mechanisms for such structured dependencies, making them less effective for language-related applications that benefit from understanding discrete step-by-step relationships.

How does training time compare across Neural ODEs, RNNs, and LSTMs?

- RNNs: Generally the fastest to train due to their simpler architecture, making them suitable for short sequences or simpler sequential tasks.

- LSTMs: Training time is longer than RNNs because of the added complexity of the gating mechanisms, but they balance this with strong performance on long sequences.

- Neural ODEs: Tend to have the longest training times, as ODE solvers can be computationally intensive, especially for complex or long sequences. Training can require careful tuning of the solver’s accuracy and time-step parameters, which can add to overall training time.

Can I use these models together in a hybrid approach?

Yes, combining these models can be powerful. Hybrid models can leverage the strengths of each type for complex tasks. For example:

- Neural ODEs with LSTMs: In time-series analysis, Neural ODEs can capture continuous dynamics over irregular time points, while an LSTM layer can handle dependencies within the resulting sequence. This can be useful for irregular medical data or financial transactions where both continuity and memory are needed.

- Stacked RNN-LSTM layers: Using a combination of RNNs for initial pattern detection and LSTMs for handling long-term dependencies can improve performance on longer sequential tasks without overwhelming memory.

Hybrid approaches can also address specific data issues, such as irregular sampling, where Neural ODEs might preprocess the data before feeding it into an RNN or LSTM for final analysis.
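One published model that follows this pattern is the ODE-RNN of Rubanova et al. (2019). Between observations the hidden state evolves under an ODE, and at each observation a recurrent cell folds in the new value. The sketch below is a scalar toy version in plain Python. It assumes hypothetical decay dynamics f(h) = -h, uses a vanilla tanh RNN cell as the update step, and sets made-up weights. It shows only the control flow, with no training.

```python
import math


def evolve(h: float, dt: float, steps: int = 20) -> float:
    """Continuous part: integrate hypothetical dynamics dh/dt = -h with Euler steps."""
    for _ in range(steps):
        h += (dt / steps) * (-h)
    return h


def rnn_update(h: float, x: float, w_x: float = 1.0, w_h: float = 0.8) -> float:
    """Discrete part: a vanilla tanh RNN cell (weights are made up)."""
    return math.tanh(w_x * x + w_h * h)


def ode_rnn(times, values, h0: float = 0.0):
    """Alternate ODE evolution across each gap with an RNN update at each observation."""
    h, t_prev, states = h0, times[0], []
    for t, x in zip(times, values):
        h = evolve(h, t - t_prev)   # decay depends on the actual elapsed time
        h = rnn_update(h, x)
        states.append(h)
        t_prev = t
    return states


# Same observed values, different gaps between them.
short_gap = ode_rnn([0.0, 0.1], [1.0, 0.0])
long_gap = ode_rnn([0.0, 5.0], [1.0, 0.0])
print(round(short_gap[-1], 4), round(long_gap[-1], 4))
```

A plain RNN would produce identical outputs for both sequences because it never sees the timestamps. In the hybrid, the memory of the first observation fades according to how much time has actually passed.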

How well do these models perform on streaming data?

LSTMs and RNNs are generally better suited to real-time streaming data due to their discrete, step-by-step processing. Each new observation costs exactly one cell update, so they can produce predictions as data arrives, which makes them efficient for applications like speech recognition or real-time monitoring. Neural ODEs do not have to recompute the whole sequence, since they can continue integrating from the last hidden state. However, each new point requires solving the ODE across the gap since the previous one, and with an adaptive solver the number of steps (and thus the latency) varies. While they're excellent for modeling continuous processes, real-time or streaming applications can be challenging for Neural ODEs without additional optimizations.

What are some of the main challenges in deploying Neural ODEs?

Neural ODEs face challenges with deployment due to:

- Computational Overheads: ODE solvers can be computationally expensive and sometimes require high-performance hardware for real-time applications.

- Tuning Complexity: Choosing the right solver and setting hyperparameters for the ODE can be complex, requiring more expertise compared to deploying RNNs or LSTMs.

- Interpretability: Neural ODEs often lack the interpretability found in discrete models, making it harder to understand how specific time points contribute to the output.

In contexts where computational efficiency and deployment speed are priorities, RNNs and LSTMs are typically easier to deploy, requiring less complex hardware and tuning.

Are there specific industries or fields that favor one model over the others?

- Healthcare: Neural ODEs are popular for modeling irregularly sampled patient data or continuous biological processes.

- Finance: LSTMs are often used for financial forecasting, given their strength in capturing trends over long time frames, although Neural ODEs are gaining interest for modeling continuous data like interest rates or volatility.

- Manufacturing and Predictive Maintenance: RNNs and LSTMs are frequently used in sensor-based monitoring and predictive maintenance, as they effectively capture patterns in consistent, regularly collected sensor data.

- Natural Language Processing (NLP): LSTMs are common for tasks with long textual data, although transformers are now leading the field in many NLP applications.

Resources

- “Sequence Modeling: Recurrent and Recursive Nets” (Chapter 10 of Deep Learning by Ian Goodfellow, Yoshua Bengio, and Aaron Courville)

Covers RNNs, LSTMs, and GRUs in detail, explaining their mechanics, common applications, and limitations.

- “Neural Ordinary Differential Equations” (original paper by Chen et al.)

This foundational paper introduced Neural ODEs, discussing their architecture, computational dynamics, and unique benefits for continuous modeling. Available on arXiv.

- “A Comprehensive Guide to Recurrent Neural Networks (RNNs)” on Analytics Vidhya

Offers a practical overview of RNNs, LSTMs, and GRUs, including use cases, training insights, and comparisons among different models.