Noise Contrastive Estimation (NCE) is a training technique that’s gaining attention for making large models cheaper to train, especially in natural language processing (NLP) and computer vision.

Let’s dive into how NCE works and why it’s an essential tool for AI model enhancement.

What is Noise Contrastive Estimation?

The Basics of NCE in Machine Learning

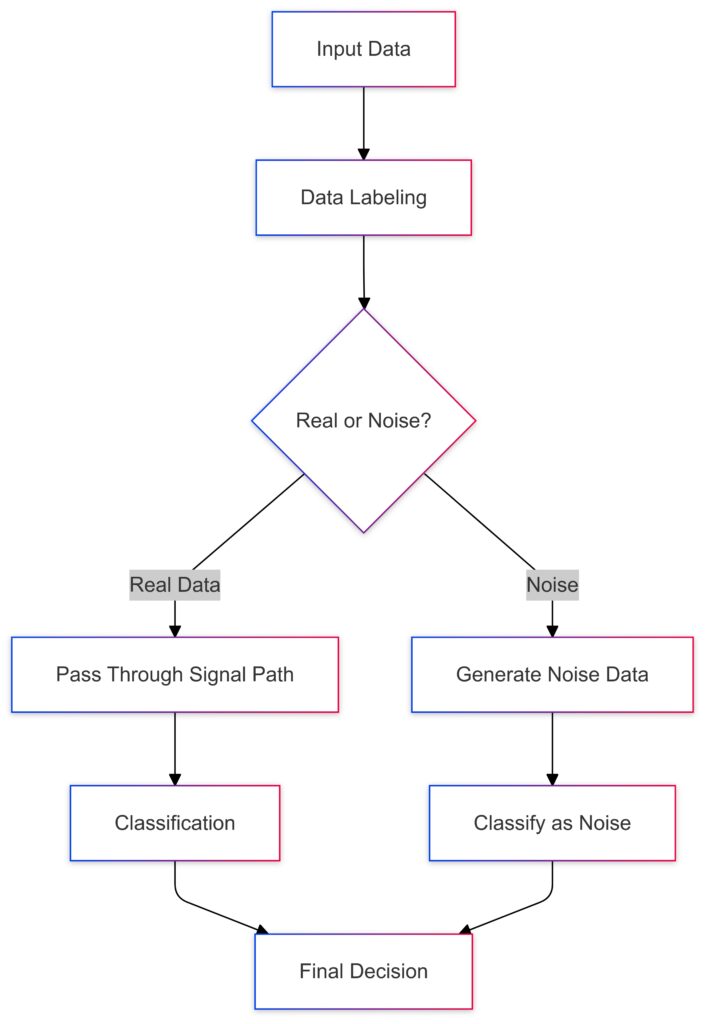

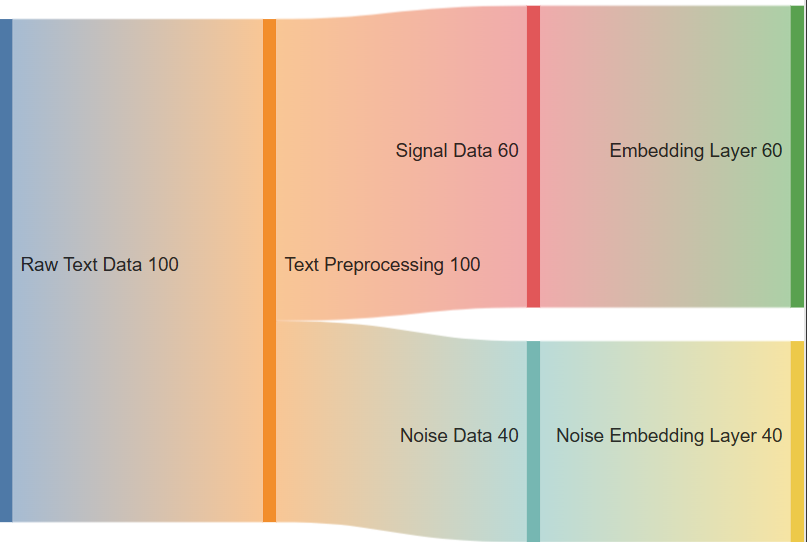

Noise Contrastive Estimation is a technique for estimating complex statistical models, including ones whose probabilities are hard to normalize, by transforming an unsupervised learning problem into a supervised one. Rather than maximizing likelihoods in the traditional sense, NCE trains a classifier to tell actual data (signal) apart from samples drawn from a known noise distribution; a model that does this well has implicitly learned what the real data looks like. Originally developed for probabilistic modeling, NCE has proven beneficial in diverse AI applications.

Real Data follows the signal path for classification.

Noise goes through noise generation and classification before reaching the final decision.
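The classification view described above can be written compactly. The notation below ($p_d$ for the data distribution, $p_n$ for the noise distribution, $p_\theta$ for the model, and $k$ for the number of noise samples per real sample) is introduced here for illustration and follows the usual presentation of the method.

$$
h_\theta(x) = \frac{p_\theta(x)}{p_\theta(x) + k\,p_n(x)}, \qquad
J(\theta) = \mathbb{E}_{x \sim p_d}\big[\log h_\theta(x)\big] + k\,\mathbb{E}_{y \sim p_n}\big[\log\big(1 - h_\theta(y)\big)\big]
$$

Here $h_\theta(x)$ is the classifier's probability that $x$ is a real sample, and training maximizes $J(\theta)$. Because $p_\theta$ enters only through a ratio with the known $p_n$, its normalizing constant can be treated as one more learnable parameter instead of being computed exactly.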

How NCE Works in Practice

NCE adds “noise samples” to the dataset to teach the model what data does not look like. During training, the model is given both real samples and these noise samples, which are drawn from a known distribution, and learns to distinguish one from the other. Because each update scores only a handful of samples rather than every possible outcome, this strategy reduces computational costs. Models using NCE essentially become skilled at differentiating true signal from noise, and that skill is what lets them recover the structure of the real data.
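The training loop described above can be sketched on a toy problem. The example below is a minimal illustration, not a reference implementation: it assumes real data from a standard normal, noise from a wider normal, one noise sample per real sample, and a two-parameter model `log p(x) = -0.5 * a * x^2 + c`. The function names, learning rate, and step count are all choices made for this sketch.

```python
import numpy as np

def sigmoid(z):
    # Logistic function written with tanh so large |z| cannot overflow.
    return 0.5 * (1.0 + np.tanh(0.5 * z))

def log_noise_density(x, scale=2.0):
    # Log-density of the known noise distribution N(0, scale^2).
    return -0.5 * (x / scale) ** 2 - np.log(scale * np.sqrt(2.0 * np.pi))

def fit_gaussian_nce(n=20000, lr=0.2, steps=2000, seed=0):
    """Fit log p(x) = -0.5 * a * x^2 + c with one noise sample per real sample."""
    rng = np.random.default_rng(seed)
    x_real = rng.normal(0.0, 1.0, size=n)    # real data: N(0, 1)
    x_noise = rng.normal(0.0, 2.0, size=n)   # noise samples: N(0, 4)

    a, c = 0.5, 0.0                          # precision and log-normalizer
    for _ in range(steps):
        # Classifier logit = log model density - log noise density.
        g_real = (-0.5 * a * x_real**2 + c) - log_noise_density(x_real)
        g_noise = (-0.5 * a * x_noise**2 + c) - log_noise_density(x_noise)

        # Gradient of the binary cross-entropy with respect to each logit.
        err_real = sigmoid(g_real) - 1.0     # real samples have label 1
        err_noise = sigmoid(g_noise)         # noise samples have label 0

        # Chain rule: d(logit)/da = -0.5 * x^2 and d(logit)/dc = 1.
        grad_a = np.mean(err_real * -0.5 * x_real**2) + np.mean(err_noise * -0.5 * x_noise**2)
        grad_c = np.mean(err_real) + np.mean(err_noise)
        a -= lr * grad_a
        c -= lr * grad_c
    return a, c

a, c = fit_gaussian_nce()
print(f"precision a = {a:.3f}, log-normalizer c = {c:.3f}")
```

Under these assumptions the estimates should land near `a = 1` and `c = -0.5 * log(2π)`, the values for a standard normal. Note that the normalizer `c` is learned by the classifier rather than computed by integration.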

Why NCE Outperforms Traditional Methods

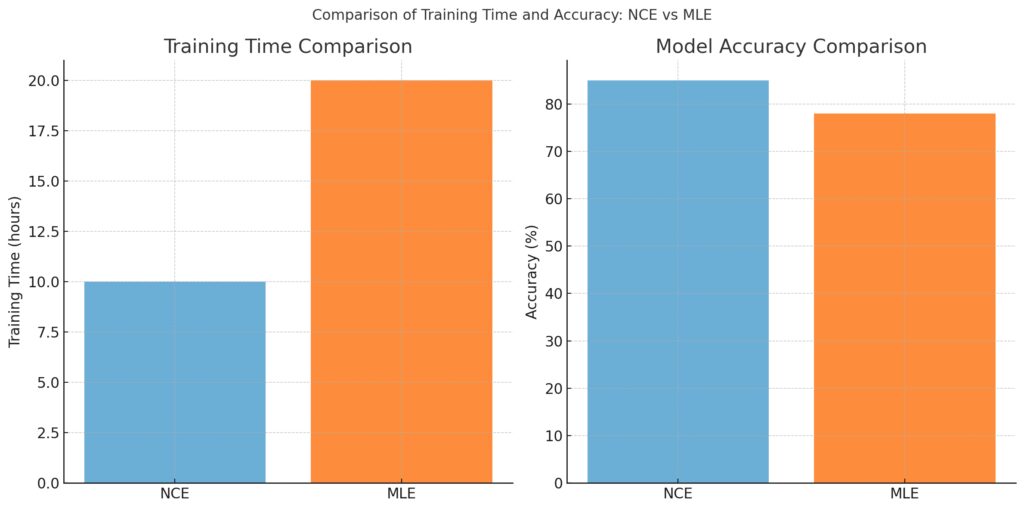

Many traditional methods rely on maximum likelihood estimation, which can be computationally intense because every update has to normalize the model over all possible outcomes. By contrasting noise against true data, NCE sidesteps that normalization step, so each training step is much cheaper. This efficiency makes it well suited to large-scale applications, such as word embeddings and other NLP tasks with large vocabularies.

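Training Time: In this illustration, NCE converges in less training time than MLE.

Accuracy: The illustration shows NCE ahead of MLE on accuracy; in practice NCE approximates the MLE solution, so similar accuracy at lower cost is the more typical outcome.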

NCE’s Impact on Natural Language Processing

Improving Word Embeddings with NCE

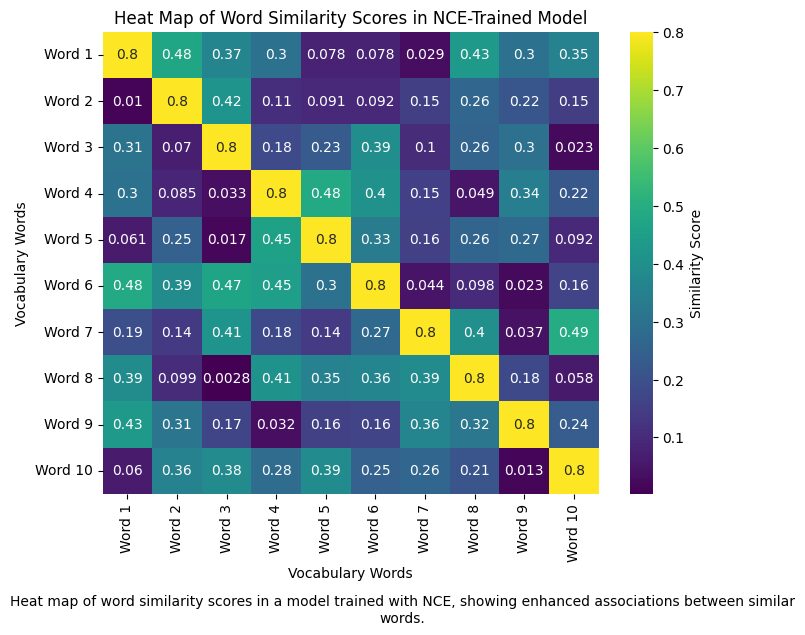

In NLP, word embeddings represent words in vector space, making it easier for models to understand language nuances. NCE has been transformative here: negative sampling, a simplified variant of NCE, is the objective that made models like Word2Vec practical to train. By learning from both real and noise data, these embeddings capture semantic meanings, context, and associations between words without excessive computational demands.

Vocabulary: Simulated vocabulary of 10 words labeled as “Word 1” to “Word 10.”

Similarity Scores: Higher values along the diagonal indicate improved word associations.

Heat Map: The heat map visualizes word similarity scores with a color gradient from low (darker) to high (lighter) values.
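The embedding objective discussed in this section can be sketched for a single training pair. The example below shows the negative-sampling loss, the simplified NCE variant associated with Word2Vec, using plain NumPy vectors. The function and argument names are hypothetical and chosen for this illustration; a real trainer would also compute gradients and sample the negatives from a noise distribution.

```python
import numpy as np

def log_sigmoid(z):
    # log(sigmoid(z)) computed stably as -log(1 + exp(-z)).
    return -np.logaddexp(0.0, -z)

def negative_sampling_loss(center_vec, context_vec, negative_vecs):
    """Loss for one (center, context) pair and k sampled noise words."""
    pos_score = center_vec @ context_vec       # score of the real pair
    neg_scores = negative_vecs @ center_vec    # scores of the k noise words
    # Push the real pair's score up and the noise scores down.
    return -(log_sigmoid(pos_score) + log_sigmoid(-neg_scores).sum())

rng = np.random.default_rng(0)
center = rng.normal(size=8)
context = rng.normal(size=8)
negatives = rng.normal(size=(5, 8))            # k = 5 noise words
print(negative_sampling_loss(center, context, negatives))
```

Each call touches only `1 + k` word vectors, which is why the cost per update does not grow with vocabulary size.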

Reducing Overhead in Language Models

For large language models, training on vast datasets is resource-intensive. Noise Contrastive Estimation allows these models to achieve high accuracy with fewer resources. Instead of normalizing over the full vocabulary at every step, NCE lets the model learn relevant patterns from a small set of real and noise examples, reducing both training time and computational costs. This is particularly useful in applications where efficiency and quick adaptation to new data are critical.

Signal and Noise flow through respective embedding layers, converging at the final Classification Layer.

Enhancing Contextual Understanding

NCE-based training helps models understand context more efficiently. When exposed to diverse linguistic noise, models become adept at filtering out irrelevant information, which enhances their ability to handle ambiguous or noisy language data. This increased contextual sensitivity is especially valuable for chatbots and other real-time NLP systems.

Applications of NCE Beyond NLP

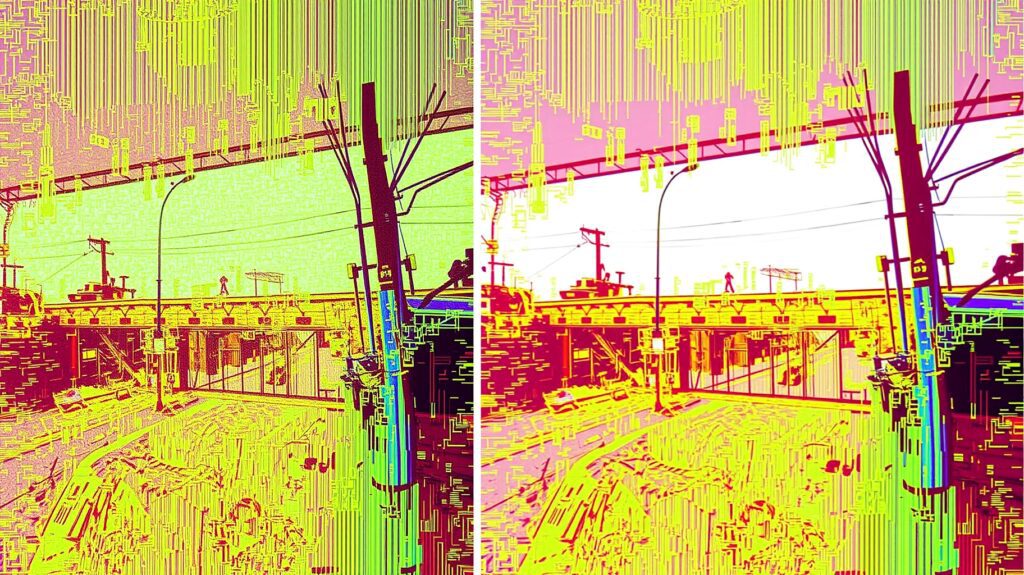

Image Recognition and Computer Vision

In computer vision, noise contrastive estimation is used to refine feature recognition, enabling models to more effectively distinguish between objects and backgrounds. By introducing noise samples that resemble backgrounds or irrelevant patterns, NCE-based models become highly skilled at object detection, segmentation, and image classification tasks.

Speech Recognition and Audio Processing

For speech recognition, distinguishing meaningful audio from background noise is challenging. NCE improves these models by training them to identify clear audio signals amid noise, enhancing accuracy in applications like voice assistants and transcription services.

Why NCE is Crucial for Reducing Overfitting

The Role of Noise in Regularization

Overfitting can be a major pitfall in AI model development. When models learn too closely from training data, they lose the ability to generalize to new data. Noise Contrastive Estimation (NCE) serves as an effective regularization technique by introducing noisy data, allowing models to learn from variation rather than perfection. This enables them to capture patterns that apply across a range of data, minimizing overfitting issues in large datasets.

Improved Generalization Across Datasets

The ability to generalize is critical for any model to perform well in real-world scenarios. NCE-trained models learn not only the characteristics of real data but also develop a sensitivity to noise, helping them adapt to new, unseen data more effectively. In fields like healthcare or finance, where data variability is high, NCE’s generalization power is especially valuable for ensuring consistent and accurate predictions.

Reduced Need for Extensive Data Labeling

Labeling data for supervised learning is resource-intensive, especially with large datasets. NCE helps reduce this burden because its training signal, real versus noise, is generated automatically rather than annotated by hand. With fewer labeled data points, NCE-based models can still learn useful representations, making it a cost-effective solution for industries requiring vast datasets but limited by labeling resources.

NCE in Self-Supervised and Unsupervised Learning

Revolutionizing Self-Supervised Learning

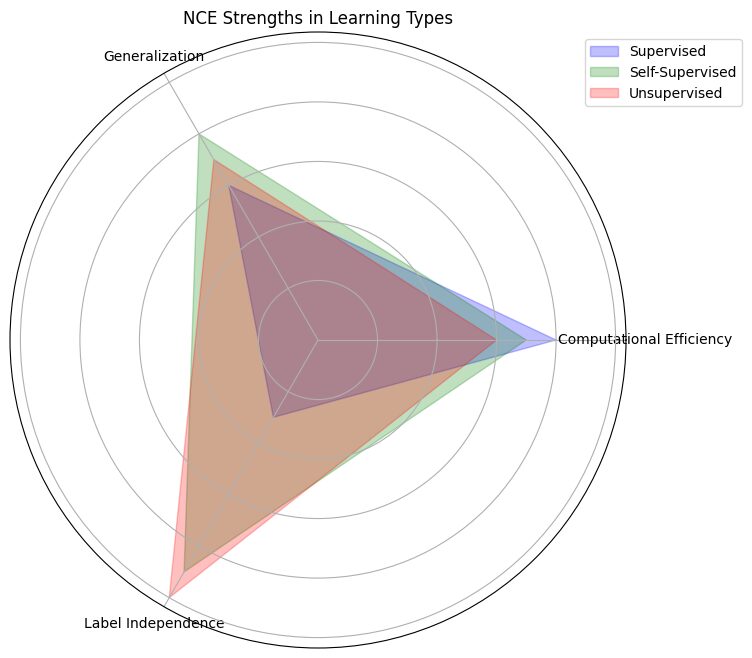

Self-supervised learning has gained traction for its ability to train models without manual labeling. In this paradigm, NCE shines by helping models differentiate between features and noise within unlabeled data. For example, contrastive learning techniques in vision and language often leverage NCE to distinguish positive pairs (similar objects or contexts) from negative pairs (dissimilar ones). This setup significantly improves feature extraction and model robustness without needing exhaustive labeling.

Axes: Each axis represents one factor: computational efficiency, generalization, or label independence.

Values: Scaled values (0 to 1) for each learning type: supervised, self-supervised, and unsupervised.

Colors: Different color fills for each learning type to visually distinguish strengths.
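The positive-pair versus negative-pair setup described in this section is often implemented with the InfoNCE loss, a multi-class relative of NCE used in contrastive learning. The sketch below is a minimal NumPy version under stated assumptions: row `i` of `queries` and row `i` of `keys` form a positive pair, every other row in the batch serves as a negative, and the temperature of 0.1 is an arbitrary choice for illustration.

```python
import numpy as np

def info_nce_loss(queries, keys, temperature=0.1):
    """Average InfoNCE loss over a batch of paired embeddings."""
    # Normalize rows so the dot product is cosine similarity.
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    k = keys / np.linalg.norm(keys, axis=1, keepdims=True)
    logits = (q @ k.T) / temperature               # shape (batch, batch)
    logits -= logits.max(axis=1, keepdims=True)    # stabilize the softmax
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # The positive for row i sits on the diagonal.
    return -np.mean(np.diag(log_probs))

embeddings = np.eye(4)
aligned = info_nce_loss(embeddings, embeddings)                       # positives match
shuffled = info_nce_loss(embeddings, np.roll(embeddings, 1, axis=0))  # positives mismatched
print(aligned, shuffled)
```

The loss is small when each query is closest to its own key and large when the pairs are mismatched, which is the signal that drives feature learning without manual labels.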

Advancements in Unsupervised Learning

Unsupervised learning benefits from NCE by shifting away from traditional clustering methods, which often struggle with large, noisy datasets. By framing the problem as a binary classification—signal vs. noise—NCE gives unsupervised models a more structured way to learn from raw data. In complex applications like recommendation engines or anomaly detection, this approach enhances model reliability and relevance in identifying useful patterns.

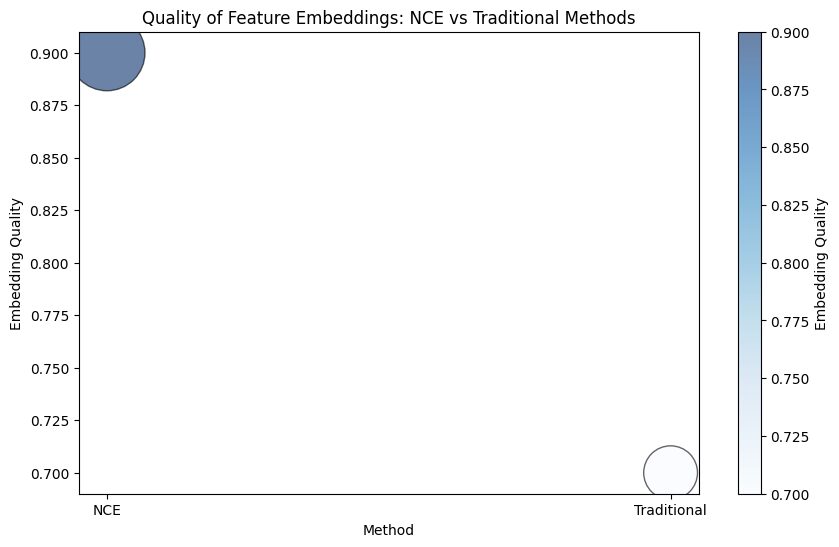

NCE and Representation Learning

NCE also plays a significant role in representation learning by helping models develop high-quality data embeddings. These embeddings capture essential data features, which can be used in downstream tasks like classification, clustering, or predictive modeling. By reducing the influence of noise, NCE-based embeddings achieve greater precision, making them invaluable for applications in NLP, computer vision, and beyond.

Bubble Size: Represents the number of features, with NCE having more features than traditional methods.

Color Intensity: Indicates embedding quality, with NCE showing a higher quality (darker color) than traditional methods.

NCE’s Role in Scalable AI Model Training

Handling Large-Scale Datasets Efficiently

Training on large datasets can be computationally prohibitive. NCE reduces these requirements by converting complex likelihood computations into simpler classification problems. Instead of normalizing over every possible output for each data point, the model learns by differentiating the real sample from a small number of noise samples, achieving scalability with little loss in accuracy.
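To make the cost difference concrete, the sketch below compares how many output rows each objective has to score for one training example. It is an illustration under assumptions, not a benchmark: the vocabulary size, embedding width, uniform noise distribution, and function names are all hypothetical, and the NCE version treats raw scores as log-probabilities (normalizer fixed at 1), a common simplification.

```python
import numpy as np

def full_softmax_loss(hidden, out_emb, target):
    # Exact negative log-likelihood: scores every row of the output table.
    scores = out_emb @ hidden
    shift = scores.max()
    log_z = shift + np.log(np.exp(scores - shift).sum())
    return log_z - scores[target], scores.size

def nce_loss(hidden, out_emb, target, noise_ids, log_noise_prob):
    # NCE surrogate: scores only the target row and the k sampled noise rows.
    k = len(noise_ids)
    ids = np.concatenate(([target], noise_ids))
    # Assumption: raw scores act as log-probabilities (normalizer fixed at 1).
    logits = out_emb[ids] @ hidden - (np.log(k) + log_noise_prob[ids])
    labels = np.zeros(k + 1)
    labels[0] = 1.0                       # first entry is the real word
    # Binary cross-entropy from logits: softplus(z) - y * z.
    loss = np.sum(np.logaddexp(0.0, logits) - labels * logits)
    return loss, ids.size

rng = np.random.default_rng(0)
vocab_size, dim, k = 10_000, 16, 5
out_emb = rng.normal(scale=0.1, size=(vocab_size, dim))
hidden = rng.normal(size=dim)
log_noise_prob = np.full(vocab_size, -np.log(vocab_size))   # uniform noise
noise_ids = rng.integers(0, vocab_size, size=k)

exact, rows_exact = full_softmax_loss(hidden, out_emb, target=42)
approx, rows_nce = nce_loss(hidden, out_emb, 42, noise_ids, log_noise_prob)
print(rows_exact, rows_nce)
```

Here the exact loss scores all 10,000 rows while the NCE loss scores 6. The two loss values are different objectives and are not directly comparable; the point is the amount of work per update.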

| Method | Dataset Size | Computational Requirements | Memory Usage | Model Performance |
|---|---|---|---|---|
| Noise Contrastive Estimation (NCE) | Small | Low – Efficient for smaller datasets | Minimal, suitable for edge devices | High accuracy with minimal resources |
| Noise Contrastive Estimation (NCE) | Medium | Moderate – Fast convergence with increasing size | Low to Moderate | High performance with optimal tuning |
| Noise Contrastive Estimation (NCE) | Large | Moderate – Scales well due to reduced training cost | Moderate – Remains efficient | Consistent performance across large datasets |
| Maximum Likelihood Estimation (MLE) | Small | Moderate – Efficient at small scale | Low to Moderate | High accuracy, but slower than NCE |
| Maximum Likelihood Estimation (MLE) | Medium | High – Requires increased power and time | Moderate to High | Moderate performance, tuning dependent |
| Maximum Likelihood Estimation (MLE) | Large | Very High – Computationally intensive | High | May underperform on large datasets |
| Bayesian Estimation | Small | High – Computationally intensive even at small scale | High | High accuracy, robust but costly |
| Bayesian Estimation | Medium | Very High – Slow processing for complex models | Very High | High accuracy, but may be inefficient |
| Bayesian Estimation | Large | Extremely High – Limited scalability | Extremely High | Not ideal for very large datasets |

Key Points:

- NCE:
  - Computational Requirements: Requires less computation across dataset sizes, benefiting from fast convergence.
  - Memory Usage: Uses minimal memory for smaller datasets and remains efficient with large datasets.
  - Model Performance: Maintains high accuracy, especially suitable for real-time and resource-constrained applications.
- MLE:
  - Computational Requirements: Increasingly intensive as dataset size grows.
  - Memory Usage: Moderate for small datasets but scales poorly with large ones.
  - Model Performance: Strong accuracy for small datasets, but performance may diminish without extensive tuning for large datasets.
- Bayesian Estimation:
  - Computational Requirements: Highly intensive across all dataset sizes.
  - Memory Usage: Very high memory demands, making it less suitable for large-scale data.
  - Model Performance: Accurate but impractical for very large datasets due to the high cost.

Lowering Memory and Computational Costs

In traditional maximum likelihood estimation, model training can quickly exhaust memory resources. NCE’s simplified objective function requires fewer calculations, meaning models can operate with lower memory and processing power. This makes NCE a strong choice for AI applications with limited computational resources or those that require rapid model deployment.

Faster Convergence for Faster Results

NCE-trained models often reach a usable solution in less wall-clock time than those trained with traditional likelihood-based methods, because each update is cheaper to compute. This is particularly beneficial for rapid development cycles, where quick training times mean faster deployment and iteration for real-world applications. The accelerated training of NCE is especially useful for startups or teams looking to achieve faster insights without compromising much on accuracy.

Future Directions: Enhancing AI with NCE

Hybrid NCE Approaches

In the future, NCE could be combined with other training methods to create hybrid models that harness the strengths of multiple techniques. For example, mixing NCE with reinforcement learning could help models learn from both reward signals and noise discrimination, improving adaptability and performance in dynamic environments.

NCE in Federated Learning

As privacy concerns grow, federated learning—which allows models to train on distributed data—gains importance. NCE’s efficiency could make it ideal for federated settings, enabling models to handle noise in decentralized data, reducing the need for central data gathering and improving data privacy.

Integrating NCE into Edge Computing

Edge computing, where data processing happens close to data sources, demands efficient and scalable models. NCE’s ability to process large datasets with reduced computational loads could make it a cornerstone in edge AI applications, especially in fields like IoT, autonomous vehicles, and healthcare monitoring.

With applications across domains, Noise Contrastive Estimation promises to remain a critical tool in the advancement of AI, enabling efficient, scalable, and highly adaptable models poised to tackle complex real-world problems.

FAQs

What is Noise Contrastive Estimation in simple terms?

Noise Contrastive Estimation (NCE) is a machine learning technique that transforms unsupervised learning into a supervised problem. By adding noise samples (random data) and contrasting them against real data, the model learns to distinguish true patterns from irrelevant ones. This technique allows models to perform well with fewer computations and reduces the chance of overfitting.

How does NCE improve model efficiency?

NCE improves model efficiency by reducing the need for complex likelihood computations. It simplifies the training process, requiring the model to classify samples as either signal (real) or noise, rather than computing normalized probabilities over every possible outcome. This decreases memory and computational costs and enables faster training times, which is crucial for large-scale applications.

Why is NCE useful for natural language processing?

NCE is particularly effective in natural language processing (NLP) because it helps models understand context by distinguishing meaningful language patterns from noise. When used in word embeddings, for instance, NCE improves how the model captures word associations and meanings. This technique enhances NLP applications like chatbots, search engines, and language translation tools by reducing computational load and increasing contextual understanding.

Can NCE be used in unsupervised learning?

Yes, NCE is widely used in unsupervised learning. It enables models to identify useful features without labeled data by framing the learning problem as one of signal vs. noise classification. This approach helps unsupervised models learn patterns in raw data, which is beneficial in applications like recommendation systems, clustering, and anomaly detection.

How does NCE help reduce overfitting?

By exposing the model to noise samples, NCE effectively regularizes it, helping prevent overfitting. The model learns to recognize genuine patterns that generalize well to new data rather than memorizing specifics of the training data. This increases the model’s robustness and accuracy when applied to diverse datasets.

Where is NCE most beneficial?

NCE is beneficial in fields requiring large-scale, efficient models, such as NLP, computer vision, and speech recognition. In computer vision, for example, NCE allows models to better distinguish objects from backgrounds by training with noise that mimics irrelevant data. Similarly, in speech recognition, NCE helps models focus on clear audio signals amidst noise, enhancing accuracy in real-world applications.

Is NCE suitable for edge computing?

Yes, NCE is well-suited for edge computing because it minimizes memory and computational demands, making it ideal for low-power, decentralized environments. By allowing models to train and operate efficiently, NCE enables applications like IoT devices, autonomous vehicles, and real-time health monitoring to process data on-site rather than relying on centralized data centers.

Can NCE be combined with other machine learning methods?

NCE can be combined with other methods, such as reinforcement learning, to create hybrid models. Such combinations can enhance the model’s ability to learn from both rewards and noise differentiation, potentially improving performance in complex and dynamic environments like robotics and real-time decision-making systems.

How does NCE handle large datasets effectively?

NCE handles large datasets efficiently by simplifying the training objective. Instead of normalizing probabilities over every possible outcome for each data point (which is computationally expensive), the model learns by distinguishing between signal and noise. This allows it to process large datasets with reduced memory and processing power, making NCE a strong choice for applications requiring scalability, such as NLP with massive text corpora and image recognition with extensive datasets.

What is the difference between NCE and maximum likelihood estimation?

Maximum likelihood estimation (MLE) focuses on maximizing the probability of observed data under a given model, which can be resource-intensive because the model must be normalized over all possible outcomes. NCE, on the other hand, trains the model to classify samples as either signal or noise, avoiding the need to compute that normalization exactly. This distinction makes NCE cheaper per training step, especially for high-dimensional data or very large output spaces where traditional MLE methods may struggle.

How does NCE improve representation learning?

In representation learning, models learn to convert raw data into compact, meaningful representations or embeddings. NCE enhances this process by training the model to focus on distinctive patterns rather than memorizing details. By contrasting real data with noise, the model learns to create embeddings that accurately capture essential features, leading to more effective results in downstream tasks like classification, clustering, and anomaly detection.

Why is NCE advantageous for self-supervised learning?

NCE is beneficial in self-supervised learning because it allows models to create labels based on data context. For instance, by contrasting similar and dissimilar data points, NCE helps models learn relevant features without needing manual labeling. This ability to operate with minimal supervision is particularly useful in fields like image recognition, speech processing, and NLP, where labeled data can be scarce or expensive to obtain.

Does NCE reduce the need for labeled data?

Yes, NCE reduces reliance on labeled data because the real-versus-noise labels it trains on are generated automatically rather than annotated by hand. With noise samples guiding the learning process, NCE can improve model performance even when labels are sparse. This characteristic makes it a cost-effective approach for industries like healthcare, finance, and autonomous driving, where large labeled datasets can be challenging to collect.

How does NCE work in audio and speech recognition?

In audio and speech recognition, NCE helps models distinguish between meaningful audio signals and background noise. By training with noise samples, NCE-based models become adept at isolating relevant audio features, improving their accuracy in real-world environments. This is valuable for applications like voice assistants, transcription services, and automated customer service, where clarity and reliability are paramount.

Is NCE effective in real-time applications?

NCE is highly effective for real-time applications due to its faster training and lower computational requirements. The technique’s simplified objective function allows models to operate with minimal lag, making NCE ideal for applications that demand speed and accuracy, such as fraud detection, social media monitoring, and recommendation systems. Real-time performance is essential in these fields, and NCE’s efficiency meets this demand well.

What are some challenges associated with NCE?

While NCE has many advantages, it does come with challenges. Selecting suitable noise samples is essential, as poorly chosen noise can lead to ineffective training and reduced model accuracy. Additionally, in extremely high-dimensional data, the noise contrastive process may become more complex, potentially requiring fine-tuning of noise distribution to achieve optimal results.
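One widely used way to choose the noise distribution for word-level models is to sample words in proportion to their frequency raised to the power 0.75, the heuristic popularized by Word2Vec's negative sampling. The sketch below illustrates it with made-up counts; the function name and the toy counts are assumptions for this example, and other tasks may call for a different noise distribution.

```python
import numpy as np

def smoothed_unigram(counts, power=0.75):
    # Raise counts to a power below 1 to flatten the distribution, then normalize.
    weights = np.asarray(counts, dtype=float) ** power
    return weights / weights.sum()

counts = [1000, 100, 10, 1]                    # toy word frequencies
raw = smoothed_unigram(counts, power=1.0)      # plain unigram distribution
smooth = smoothed_unigram(counts)              # flattened noise distribution

rng = np.random.default_rng(0)
noise_ids = rng.choice(len(counts), size=5, p=smooth)   # draw 5 noise words
print(raw.round(4), smooth.round(4), noise_ids)
```

Flattening gives rare words a larger share of the noise samples than their raw frequency would, so the model sees harder and more varied negatives instead of the same few common words.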

Resources

Research Papers and Articles

- “Noise-Contrastive Estimation of Unnormalized Statistical Models, with Applications to Natural Image Statistics” by Gutmann and Hyvärinen (2012)

The journal paper that develops NCE in full, following the authors’ 2010 conference paper that introduced the method, explaining its mathematical background and application to image data. Available on ArXiv

- “Efficient Estimation of Word Representations in Vector Space” by Mikolov et al. (2013)

Introduces the popular Word2Vec models for generating word embeddings for natural language processing tasks; the negative-sampling objective, a simplified form of NCE, appears in the authors’ follow-up paper from the same year. Available on Google Scholar

- “Unsupervised Feature Learning and Deep Learning: A Review and Outlook” by LeCun, Bengio, and Hinton (2015)

Provides insights into unsupervised feature learning techniques, including NCE, and their application in deep learning. Available on Science or ResearchGate