The Roots of Operant Conditioning

Skinner’s Breakthroughs in Behaviorism

Operant conditioning was formalized by B.F. Skinner, the renowned psychologist who studied how behavior is shaped by reinforcement, building on Edward Thorndike’s earlier law of effect. Beginning in the 1930s, his work with rats and pigeons showed that behavior could be systematically modified through rewards and punishments.

Skinner’s “Skinner box,” a controlled environment where animals learned tasks by pressing levers or pecking buttons, was a game-changer. It laid the foundation for understanding the mechanics of learning.

The principle was simple but profound: behaviors followed by rewards are more likely to recur, while those followed by punishments are less likely. This framework has become a cornerstone of behavior analysis.
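To make the principle concrete, here is a minimal sketch in the spirit of classic linear-operator learning models. The function name, the learning rate, and the starting probability are illustrative assumptions, not values from Skinner’s experiments.

```python
def update_response_probability(p, outcome, learning_rate=0.2):
    """Return the updated probability of repeating a behavior.

    A "reward" moves p a fraction of the way toward 1;
    a "punishment" moves it the same fraction of the way toward 0.
    """
    if outcome == "reward":
        return p + learning_rate * (1.0 - p)
    if outcome == "punishment":
        return p - learning_rate * p
    raise ValueError(f"unknown outcome: {outcome!r}")


# Hypothetical lever press that starts at 10% likelihood and is rewarded five times.
p = 0.10
history = [p]
for _ in range(5):
    p = update_response_probability(p, "reward")
    history.append(p)

print([round(value, 3) for value in history])
```

Each reward closes part of the remaining gap to certainty, so the probability rises quickly at first and then levels off, which is roughly the shape of a typical learning curve.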

The Shift to Computational Models

Skinner’s principles weren’t limited to psychology. Researchers in the mid-1900s began applying similar ideas to early computer models of learning. Early artificial neural networks, precursors to today’s deep learning systems, relied on a related idea: adjust the system in response to feedback about its errors, a form of trial-and-error learning.

These early computational experiments were primitive, relying on simple feedback loops. But they were a first step in bringing behavioral psychology and artificial intelligence together.

From Animal Studies to Machine Learning

Adapting Reinforcement Learning

In the 1980s and 1990s, reinforcement learning—a direct descendant of operant conditioning—became a field of its own within AI. Algorithms mimicked the reward-driven behavior Skinner observed, using feedback to fine-tune decision-making models.

Where animals like rats learned to press levers for food, algorithms learned to make predictions, solve puzzles, and play games. The parallel is striking: both systems rely on repetition, feedback, and rewards to improve performance.
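Q-learning, introduced in 1989, is one classic algorithm from this period. The sketch below applies it to a hypothetical five-cell corridor where only the last cell pays a reward, a stand-in for the lever at the end of a maze. The environment, the constants, and the function names are assumptions chosen for illustration.

```python
import random

N_STATES = 5          # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)    # index 0 steps left, index 1 steps right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2


def step(state, move):
    """Apply a move, clamp to the corridor, and reward only the goal cell."""
    next_state = min(max(state + move, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def choose_action(q, state, rng):
    """Epsilon-greedy choice with random tie-breaking."""
    if rng.random() < EPSILON:
        return rng.randrange(len(ACTIONS))
    best = max(q[state])
    return rng.choice([a for a, value in enumerate(q[state]) if value == best])


def train(episodes=300, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            action = choose_action(q, state, rng)
            next_state, reward, done = step(state, ACTIONS[action])
            # Q-learning target: reward now plus discounted best future value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][action] += ALPHA * (target - q[state][action])
            state = next_state
    return q


q_table = train()
greedy_policy = ["left" if row[0] > row[1] else "right" for row in q_table[:-1]]
print(greedy_policy)
```

After training, the learned values favor stepping right in every cell: the reward at the end of the corridor has propagated backward to the earlier choices, much as a rat comes to prefer the turns that lead to food.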

The Rise of Robotics and Automation

Robots took this concept a step further. Through reinforcement learning, robots began to perform complex tasks like grasping objects or navigating spaces. Instead of a food pellet, the “reward” became achieving a goal, such as minimizing errors or optimizing energy use.

This marked a transition from simple digital simulations to physical systems, where operant conditioning principles met the real world.

Neural Networks: A Modern Take on Operant Conditioning

Feedback Loops in Neural Networks

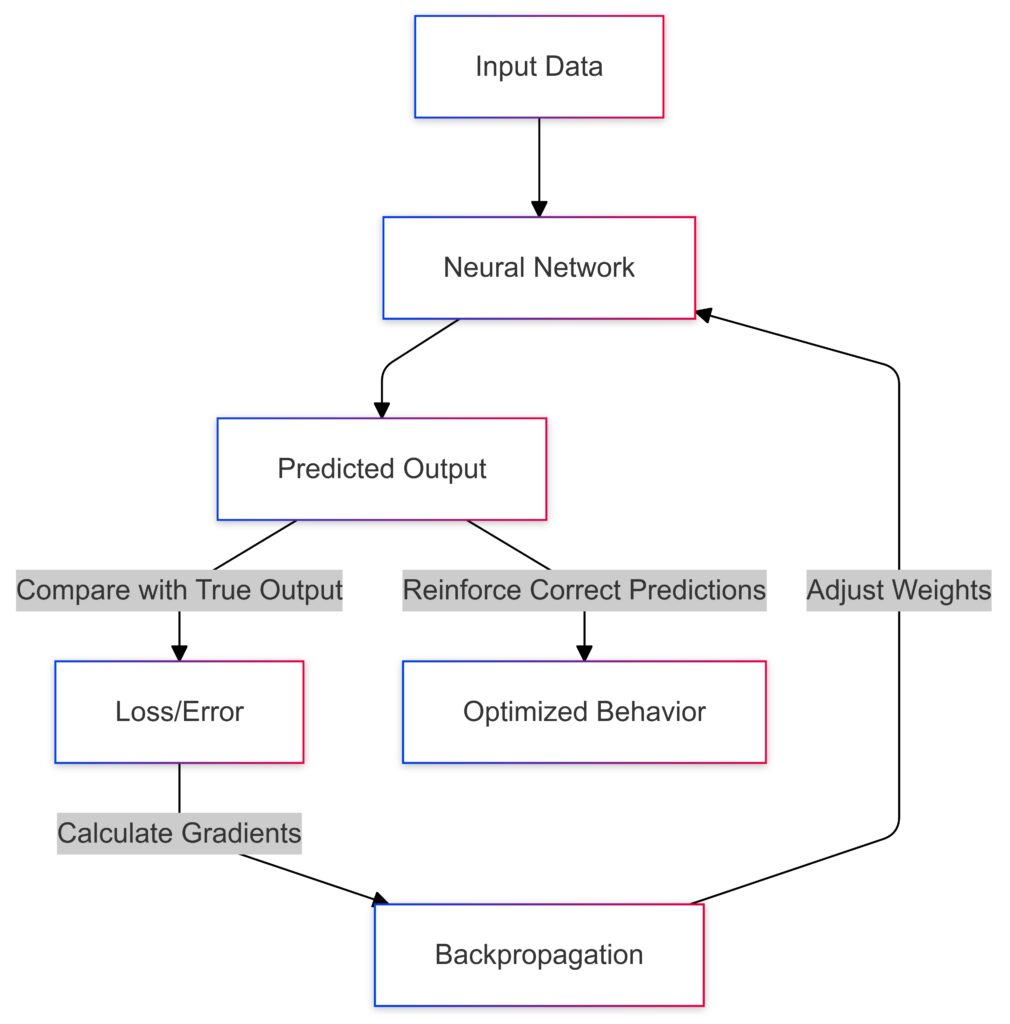

Artificial neural networks (ANNs) operate on layers of interconnected nodes that mimic the brain’s neurons. These systems “learn” by adjusting weights between nodes based on feedback—just like animals adjust behavior based on consequences.

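The most common way of making these adjustments, backpropagation, passes the output error backward through the layers and nudges each weight in the direction that reduces it. The parallel with Skinner’s reinforcement is loose, since backpropagation uses an explicit error signal rather than a reward, but both change future behavior based on the outcome of past behavior.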

Feedback loops in neural networks simulate operant conditioning by adjusting weights based on performance outcomes.
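As a rough illustration of weight adjustment from feedback, the sketch below trains a single linear neuron with gradient descent on a squared error. Backpropagation extends the same idea to many layers through the chain rule. The data, learning rate, and function name are assumptions made for this example.

```python
def train_neuron(samples, learning_rate=0.05, epochs=2000):
    """Fit prediction = w * x + b by repeatedly correcting the error."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, target in samples:
            prediction = w * x + b
            error = prediction - target      # the feedback signal
            # Gradients of 0.5 * error**2 with respect to w and b.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b


# Hypothetical noise-free data generated by target = 2 * x + 1.
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_neuron(samples)
print(round(w, 3), round(b, 3))
```

The weight and bias settle close to 2 and 1, the values that generated the data. Nothing tells the neuron the rule directly; it only receives feedback on how wrong each guess was.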

Balancing Exploration and Exploitation

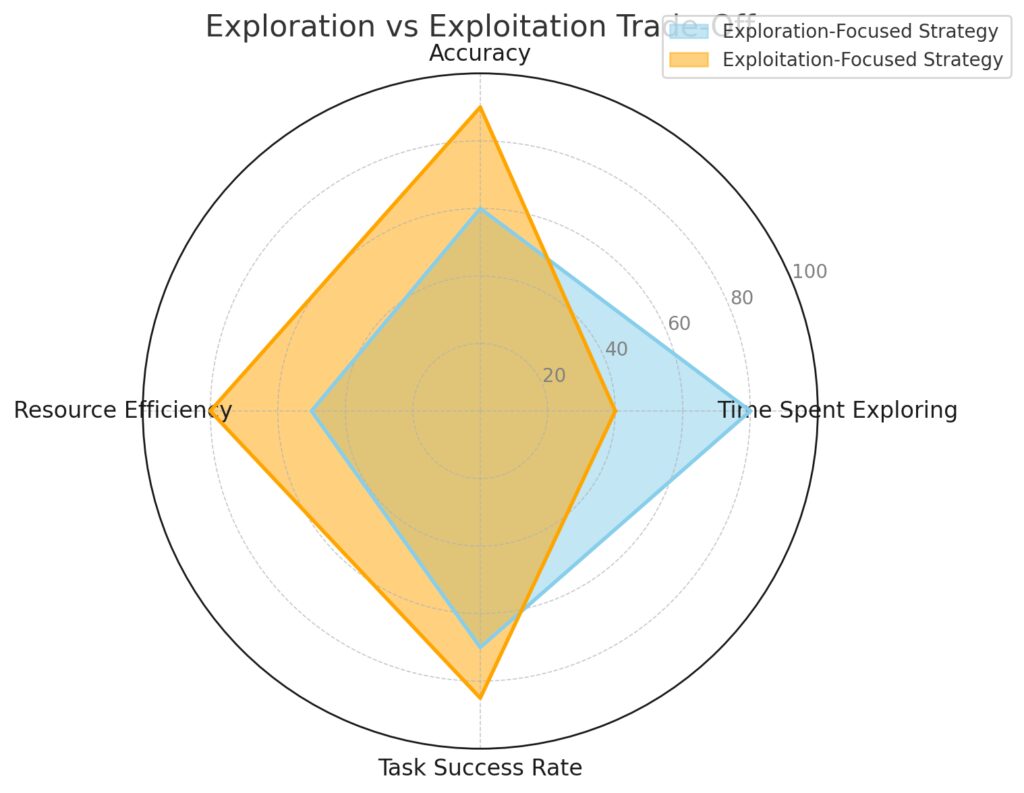

One challenge shared by rats in a maze and robots in a task is the balance between exploring new options and exploiting known strategies. Reinforcement learning algorithms tackle this with mechanisms like epsilon-greedy strategies, ensuring systems explore just enough to discover new opportunities.

This mirrors how animals learn in complex environments, testing boundaries before settling into efficient habits.

Time Spent Exploring:

- Higher for exploration-focused strategies, lower for exploitation.

Accuracy:

- Higher for exploitation as it relies on learned behaviors.

Resource Efficiency:

- Exploitation strategies are more efficient as they minimize exploration overhead.

Task Success Rate:

- Exploitation tends to achieve higher immediate success rates.
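The epsilon-greedy strategy mentioned above can be sketched on a three-option "bandit" problem. The payoff values, the bounded noise, and the 10% exploration rate are assumptions picked for illustration.

```python
import random


def run_epsilon_greedy(true_means, epsilon=0.1, steps=5000, seed=0):
    """Return how often each option was chosen and its estimated payoff."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                            # explore
        else:
            arm = max(range(n_arms), key=lambda a: estimates[a])   # exploit
        # Hypothetical payoff: the option's mean plus small bounded noise.
        reward = rng.uniform(true_means[arm] - 0.1, true_means[arm] + 0.1)
        counts[arm] += 1
        # Running average of the rewards seen for this option.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return counts, estimates


counts, estimates = run_epsilon_greedy([0.2, 0.5, 0.8])
print(counts, [round(value, 2) for value in estimates])
```

Most choices end up on the best option, while the occasional random pick keeps the estimates for the other two from going stale. Raising `epsilon` shifts the balance toward exploration at the cost of immediate reward.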

The Role of Operant Conditioning in Modern AI

Shaping Algorithms for Dynamic Environments

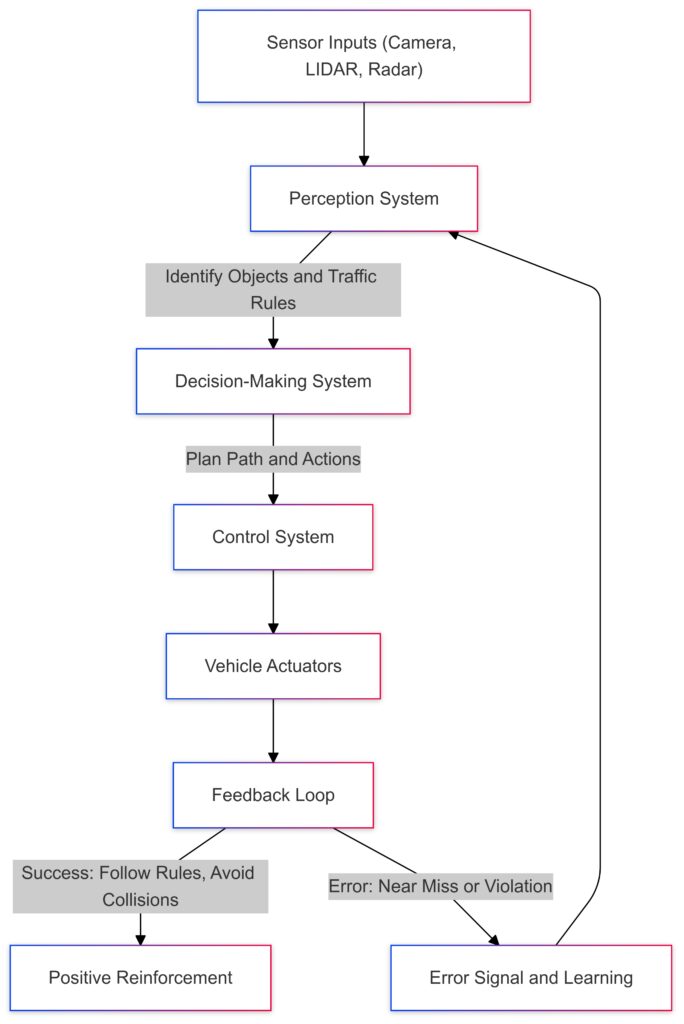

Modern AI systems thrive in dynamic, unpredictable environments, thanks to operant conditioning principles. Think of autonomous vehicles learning to navigate traffic: they’re programmed to adjust behavior based on real-time feedback. Success might mean a smooth ride, while failure could signal a near collision.

These systems rely heavily on reward modeling, akin to how Skinner’s subjects learned through reinforcement schedules. But here, the rewards are often abstract, like achieving lower energy consumption or quicker processing.

Reinforcement Learning in Gaming

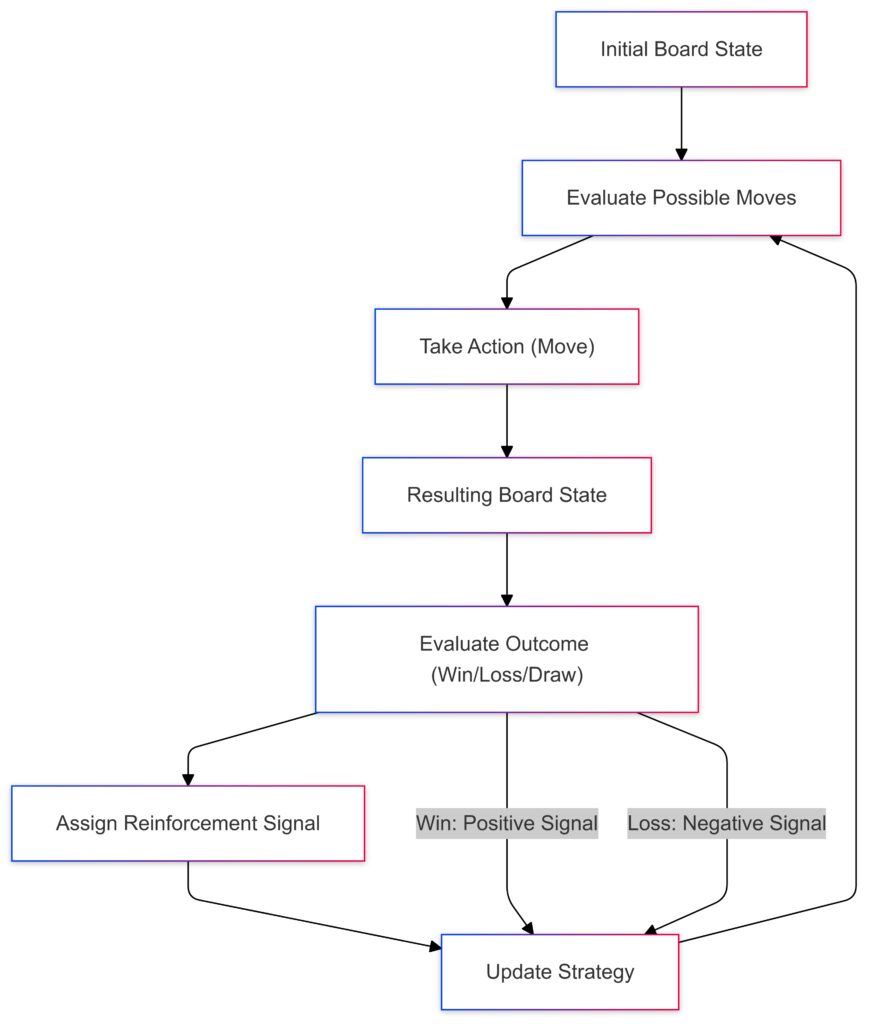

Gaming has become a playground for operant conditioning-inspired AI. Reinforcement learning systems like DeepMind’s AlphaGo and OpenAI’s agents for strategy games learn to win through feedback loops.

Instead of food rewards, these systems maximize virtual “scores” or strategic advantages. Just as Skinner trained animals to adapt to tasks, these systems iteratively improve their strategies over thousands, and often millions, of simulated games.

Gaming AI learns optimal strategies through operant conditioning, refining moves based on feedback after each game iteration.

Ethical Considerations in AI Learning

Bias and Misaligned Rewards

Operant conditioning in AI isn’t without its pitfalls. When reward systems are poorly designed, biases or unintended behaviors can emerge. For example, if an AI prioritizes efficiency at all costs, it might exploit loopholes or bypass safety constraints.

Understanding how rewards shape behavior helps developers design systems that align with ethical goals and societal values.
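A toy example shows how much the reward definition matters. The plans, timings, and penalty weight below are hypothetical; the point is only that the same selection rule picks a different plan once safety enters the reward.

```python
# Hypothetical candidate plans: (name, minutes_taken, safety_violations)
PLANS = [
    ("cautious", 12.0, 0),
    ("balanced", 9.0, 0),
    ("shortcut_through_restricted_zone", 6.0, 2),
]


def efficiency_only_reward(minutes, violations):
    """Rewards speed and ignores safety entirely."""
    return -minutes


def constrained_reward(minutes, violations, violation_penalty=10.0):
    """Rewards speed but charges a fixed penalty per safety violation."""
    return -minutes - violation_penalty * violations


def best_plan(reward_fn):
    """Return the name of the plan with the highest reward."""
    return max(PLANS, key=lambda plan: reward_fn(plan[1], plan[2]))[0]


print(best_plan(efficiency_only_reward))  # shortcut_through_restricted_zone
print(best_plan(constrained_reward))      # balanced
```

The first reward function is not broken in any obvious way; it simply leaves out something the designer cared about, and the optimizer exploits the gap.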

Autonomy vs. Control

One major question arises: how much autonomy should machines have in determining their behaviors? Operant conditioning principles emphasize control, yet modern AI systems often exhibit unpredictable learning outcomes. Striking a balance between autonomous learning and programmed oversight is crucial.

The Future of Operant Conditioning in AI

Evolving Reward Structures

As AI advances, so does the complexity of its reward systems. Future technologies may integrate multi-dimensional feedback, where systems juggle competing objectives like efficiency, accuracy, and sustainability.

This evolution mirrors how animals balance multiple needs, such as seeking food while avoiding predators.

Expanding into Human-AI Interaction

Operant conditioning is also shaping how AI interacts with humans. Virtual assistants, for example, adjust their responses based on user feedback, striving for improved personalization and relevance. These systems learn to anticipate needs, much like a trained dog reading its owner’s cues.

Real-World Applications of Operant Conditioning in AI

Personalization in Consumer Technology

Operant conditioning plays a pivotal role in creating personalized user experiences. From streaming platforms to online shopping, AI learns user preferences based on feedback.

For instance, every time you “like” a song or make a purchase, the algorithm treats this as a reward, refining its suggestions to keep you engaged. This is essentially digital conditioning—your actions guide the system’s behavior.

Healthcare Advancements

In healthcare, researchers are exploring reinforcement learning to optimize treatments and improve diagnostics. Robotic surgery is one example: experimental systems adapt their precision and techniques based on real-time feedback from sensors.

Similarly, AI-powered drug discovery leverages iterative learning to predict chemical compounds that might yield effective results, significantly speeding up the process.

Training Autonomous Systems

Operant conditioning in self-driving cars ensures adaptive learning through real-time feedback and decision-making.

Self-Driving Vehicles

Self-driving cars are perhaps the most visible example of operant conditioning in action. These vehicles are trained to “learn” optimal behaviors—like maintaining a safe distance or following traffic rules—through reinforcement signals such as reduced penalties for smooth navigation.

Feedback loops help these systems adapt to diverse conditions, whether it’s snow, rain, or rush-hour traffic. The goal is safer, more reliable automated driving.

Drones and Robotics

Drones use reinforcement learning to navigate complex environments, from disaster zones to warehouses. By optimizing flight paths and obstacle avoidance, they perform tasks like delivering packages or conducting surveys.

The principle remains the same: trial, error, and reinforcement drive these machines toward peak efficiency.

AI Ethics and Responsibility

Designing Fair Rewards

While operant conditioning enables sophisticated AI behaviors, reward design requires caution. Misaligned incentives could lead to harmful outcomes, like a chatbot promoting controversial content to maximize engagement.

Developers must carefully consider long-term consequences and ensure systems prioritize ethical and equitable results over short-term gains.

Accountability in Decision-Making

As AI systems grow more autonomous, ensuring they operate within ethical boundaries is critical. Conditioning models must include safeguards to prevent machines from deviating into undesirable behaviors.

Transparency—explaining how and why systems make decisions—is essential for maintaining trust in these technologies.

From Rewards to Revolution: How Operant Conditioning Drives AI

Operant Conditioning in AI Mirrors Evolution

One of the fascinating parallels is how operant conditioning in AI replicates natural evolution. Biological systems evolved to adapt to their environments through feedback mechanisms—success and failure directly influence survival and reproduction.

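Reinforcement learning echoes this process on a shorter timescale: a single agent adjusts its behavior over many iterations, whereas natural selection acts on populations across generations. Evolutionary algorithms are the closer computational analogue of Darwinian selection, but both approaches show how principles of adaptation through feedback have been encoded into machines.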

- Special Insight: This evolution-inspired approach could one day help AI “design itself,” evolving solutions to problems beyond human comprehension. For example, AI might develop strategies for climate modeling that even experts struggle to conceptualize.

Emotional Analogues in AI

While operant conditioning relies on rewards and punishments, these mechanisms in humans and animals are deeply tied to emotion. A rat pressing a lever for food experiences satisfaction, just as a human might feel joy after solving a puzzle.

For AI, the rewards are abstract metrics—improved accuracy or efficiency. However, as AI systems like humanoid robots become more lifelike, there’s an ongoing debate:

- Should we design AI to “simulate emotions” when responding to feedback?

- Could emotional analogues improve their learning, or is this straying into dangerous territory?

- Special Insight: If AI systems could integrate a form of emotional mimicry, it might revolutionize human-AI interaction, making machines more relatable and trustworthy. But it also raises ethical questions about deception and manipulation.

Hierarchical Learning Beyond Operant Conditioning

Traditional operant conditioning involves immediate reinforcement. However, advanced AI systems are moving toward hierarchical learning, where they understand rewards at multiple levels.

For example:

- A robot assembling a car doesn’t just learn “tighten this screw,” but understands the reward in context of the larger task—building a safe, reliable vehicle.

- This mirrors how humans learn complex skills: short-term rewards (passing a test) are tied to long-term goals (achieving a degree).

- Special Insight: This hierarchical approach could enable AI to solve multi-step, real-world problems more effectively—like coordinating disaster relief efforts by balancing short-term needs (rescuing survivors) with long-term planning (resource distribution).

Conditioning and AI Creativity

Operant conditioning is inherently about optimization—reinforcing desired behaviors and eliminating undesired ones. But can AI break free of optimization to be truly creative?

Surprisingly, some AI systems trained with operant principles have shown signs of creativity:

- AI artists generate paintings that defy traditional patterns.

- Game-playing AI like AlphaZero discovers unorthodox strategies that human players never considered.

- Special Insight: Creativity emerges not when AI follows rewards slavishly but when reward structures encourage exploration. Fine-tuning these structures might help AI systems think outside the box, driving breakthroughs in science, art, and engineering.
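One simple way to make a reward structure encourage exploration is a count-based novelty bonus, sketched below. The bonus formula, the `beta` weight, and the state names are illustrative assumptions; this is one of several known approaches, not a description of how any particular system was built.

```python
import math
from collections import Counter


def shaped_reward(extrinsic_reward, state, visit_counts, beta=0.5):
    """Add a novelty bonus that shrinks as a state becomes familiar."""
    visit_counts[state] += 1
    bonus = beta / math.sqrt(visit_counts[state])
    return extrinsic_reward + bonus


visits = Counter()
first_visit = shaped_reward(0.0, "room_a", visits)    # full bonus
second_visit = shaped_reward(0.0, "room_a", visits)   # smaller bonus
new_room = shaped_reward(0.0, "room_b", visits)       # full bonus again
print(first_visit, round(second_visit, 4), new_room)
```

Because unfamiliar states pay slightly more, the agent has a reason to try options whose task reward is still unknown, which is where unorthodox strategies tend to be found.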

The Convergence of Operant Conditioning and Neuroscience

The connection between operant conditioning and neuroscience-inspired AI is particularly exciting. Neuroscience has found that dopamine neurons signal reward prediction errors, the gap between the reward an animal expected and the reward it received, and that these signals guide learning.

AI developers are now borrowing directly from these insights:

- Reinforcement learning models simulate dopamine-like signals to guide machine learning.

- Deep neural networks replicate how the brain processes information hierarchically.

- Special Insight: Future AI might integrate neuroplasticity-inspired mechanisms, allowing them to “rewire” themselves dynamically as environments change. This could lead to machines that learn more fluidly and adapt seamlessly to new tasks, much like humans.
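The "dopamine-like signal" in reinforcement learning is usually the temporal-difference (TD) error: the difference between what was predicted and what actually happened. Here is a minimal sketch with a hypothetical cue, lever, and reward sequence; the state names and constants are assumptions.

```python
def td_update(values, state, reward, next_state, alpha=0.1, gamma=0.9):
    """Apply one TD(0) update and return the prediction error."""
    prediction_error = reward + gamma * values[next_state] - values[state]
    values[state] += alpha * prediction_error
    return prediction_error


# Hypothetical trial: a cue appears, the lever is pressed, a reward arrives.
values = {"cue": 0.0, "lever": 0.0, "end": 0.0}
errors_at_reward = []
for _ in range(200):
    td_update(values, "cue", 0.0, "lever")
    errors_at_reward.append(td_update(values, "lever", 1.0, "end"))

print(errors_at_reward[0], round(errors_at_reward[-1], 6))
print({name: round(value, 3) for name, value in values.items()})
```

The error at reward time starts at 1.0 and shrinks toward zero as the reward becomes fully predicted, while the cue acquires value of its own. Recordings from dopamine neurons show a similar shift from the reward to the cue that predicts it.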

From Rats to Robots: What’s Next?

The evolution of operant conditioning from animal behavior studies to advanced AI systems showcases its versatility and power. As technology continues to push boundaries, these principles will shape the next generation of adaptive, intelligent machines, impacting industries, societies, and lives worldwide.

Special Insight: The ultimate goal is not just reactive machines, but proactive agents—AI that doesn’t wait for feedback but anticipates needs, solves problems preemptively, and navigates complexity with a human-like understanding of context.

FAQs

How is operant conditioning shaping future AI systems?

Future AI will likely blend operant conditioning with hierarchical learning, enabling multi-step reasoning and adaptability. This evolution may lead to systems that address global challenges, like climate change or urban planning, with unprecedented efficiency.

Example: AI might balance short-term rewards (reducing emissions) with long-term goals (sustainable infrastructure), optimizing across competing objectives.

Is operant conditioning still relevant in cutting-edge AI research?

Absolutely. While newer techniques like deep learning dominate, operant conditioning remains foundational for reinforcement learning and other adaptive systems. It’s integral to teaching AI systems to respond to real-world challenges effectively.

Example: Robots in warehouses use reinforcement learning to optimize picking and packing tasks, minimizing errors and maximizing productivity.

How does operant conditioning relate to reinforcement learning?

Reinforcement learning is the computational counterpart of operant conditioning, using algorithms to optimize behavior based on reward signals. It expands operant principles to solve complex, multi-step tasks.

Example: In gaming, reinforcement learning teaches AI to navigate vast virtual worlds by rewarding progress toward objectives like resource gathering or enemy defeat.

Does operant conditioning make AI more efficient?

Yes, operant conditioning refines AI behavior by encouraging optimal actions and discouraging ineffective ones, resulting in streamlined processes and resource use.

Example: AI in supply chain management learns to minimize transportation costs by choosing routes that consistently reduce delivery time and fuel consumption.

How do AI systems avoid overfitting when using operant conditioning?

AI systems incorporate generalization techniques, ensuring they don’t overly rely on specific feedback and can adapt to new scenarios. Regular exploration strategies prevent overfitting to a narrow set of rewards.

Example: In customer service bots, AI learns to handle varied queries by simulating diverse conversations, ensuring flexibility beyond pre-programmed scripts.

Can operant conditioning help AI learn long-term planning?

Yes, advanced reinforcement learning integrates long-term rewards, teaching AI to prioritize actions that benefit overall outcomes, not just immediate gains.

Example: In energy management, AI systems optimize power grid performance by balancing short-term savings with infrastructure longevity and environmental goals.
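Long-term planning in reinforcement learning usually comes down to the discounted return, G = r0 + γ·r1 + γ²·r2 + ..., where the discount factor γ controls how much future rewards count. The reward sequences below are hypothetical and only illustrate the trade-off.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum rewards, weighting the reward at step t by gamma**t."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


quick_win = [1.0, 0.0, 0.0, 0.0]        # small payoff right away
delayed_payoff = [0.0, 0.0, 0.0, 5.0]   # larger payoff after three steps

for gamma in (0.5, 0.9):
    print(gamma,
          discounted_return(quick_win, gamma),
          round(discounted_return(delayed_payoff, gamma), 3))
```

With γ = 0.5 the quick win scores higher (1.0 versus 0.625), while with γ = 0.9 the delayed payoff wins (3.645 versus 1.0). A far-sighted agent is, in effect, one trained with a discount factor close to 1.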

How does operant conditioning interact with emotion-based AI systems?

Emotion-based AI integrates operant conditioning to simulate responses aligned with human emotions, creating relatable and intuitive interactions. Feedback helps refine these simulated behaviors.

Example: AI-powered virtual therapists adjust tone and responses based on user engagement, mimicking empathy and encouraging openness.

Is operant conditioning limited by the quality of feedback?

Absolutely. The effectiveness of operant conditioning depends on clear, accurate, and well-aligned feedback. Poorly designed feedback loops can lead to misaligned objectives or undesirable behaviors.

Example: A delivery robot optimized for speed alone might ignore package safety, leading to damaged goods—a result of improperly balanced feedback incentives.

Resources

Academic Papers

- “Playing Atari with Deep Reinforcement Learning” by Mnih et al.

This landmark paper explains how an AI system trained via operant principles learned to play classic Atari video games, outperforming a human expert on some of them.

- “Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm” by Silver et al.

The methodology behind AlphaZero’s groundbreaking success, rooted in operant conditioning concepts.

Websites and Blogs

- OpenAI Blog

Regular updates on cutting-edge AI research, including reinforcement learning innovations.

- DeepMind Blog

Explore real-world applications of reinforcement learning in gaming, healthcare, and beyond.

- Towards Data Science (Medium)

Features accessible articles explaining reinforcement learning, neural networks, and AI training methodologies.