Why C is Ideal for AI Algorithm Optimization

You may wonder why C is still a popular choice for AI algorithm optimization when more modern languages, like Python, seem to dominate AI development. Well, Python’s main numerical and machine learning libraries, such as NumPy and PyTorch, hand their heavy computation to compiled C and C++ code. C’s reputation as a low-level, high-speed language makes it an ideal candidate for optimizing the core algorithms that power AI systems.

The truth is, C allows developers to directly manage memory, control hardware resources, and fine-tune performance in ways that high-level languages just can’t.

By using C, you can squeeze out every last bit of speed from your hardware—something that’s absolutely critical when running machine learning models on large datasets. But it’s not just about speed; it’s about having full control over how your program operates, ensuring that it’s as efficient as possible without any unnecessary overhead.

Understanding Time Complexity for AI Algorithms

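Before you even write a line of code, understanding time complexity is critical to building efficient AI algorithms. Time complexity describes how your algorithm’s runtime grows as the input size increases. The goal is the lowest complexity the problem allows: O(log n) or O(n) is ideal when achievable, but many core AI operations cost more by nature (naive multiplication of two n × n matrices is O(n³), and no comparison-based sort can beat O(n log n)), so the practical aim is to avoid needlessly expensive choices.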

Take sorting algorithms, for instance: a poorly chosen sorting method, such as an O(n²) bubble or insertion sort, can slow down your entire system on larger datasets. Selecting an O(n log n) algorithm such as merge sort, or quicksort (O(n log n) on average, though O(n²) in the worst case), saves time and computational resources.

The Importance of Memory Management in C

Unlike higher-level languages that manage memory automatically, C gives you the reins. You manage memory explicitly with standard library functions such as malloc() and free(). This control can make your AI program faster, but it also adds complexity: without careful memory management, it’s easy to leak memory or waste system resources, issues that can severely slow down your AI algorithms over time.

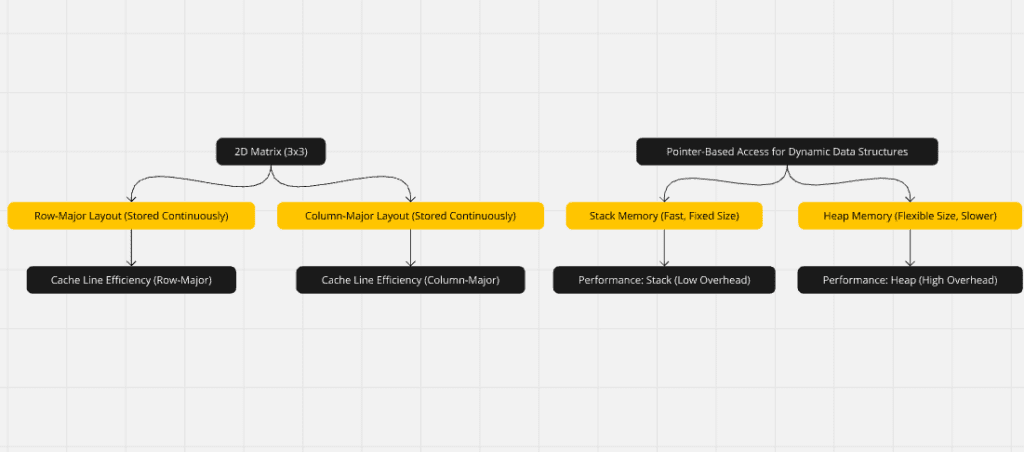

Another critical aspect is the cache. Effective use of CPU cache can make or break your program’s speed. Ensuring that frequently accessed data is stored in cache-friendly structures can drastically reduce the time it takes to access memory, giving your algorithm a huge performance boost.

A 2D matrix stored in linear memory, showing row-major vs. column-major order and how the choice affects cache performance in matrix-heavy AI algorithms such as convolutional neural networks.

Leveraging Data Structures for Faster Execution

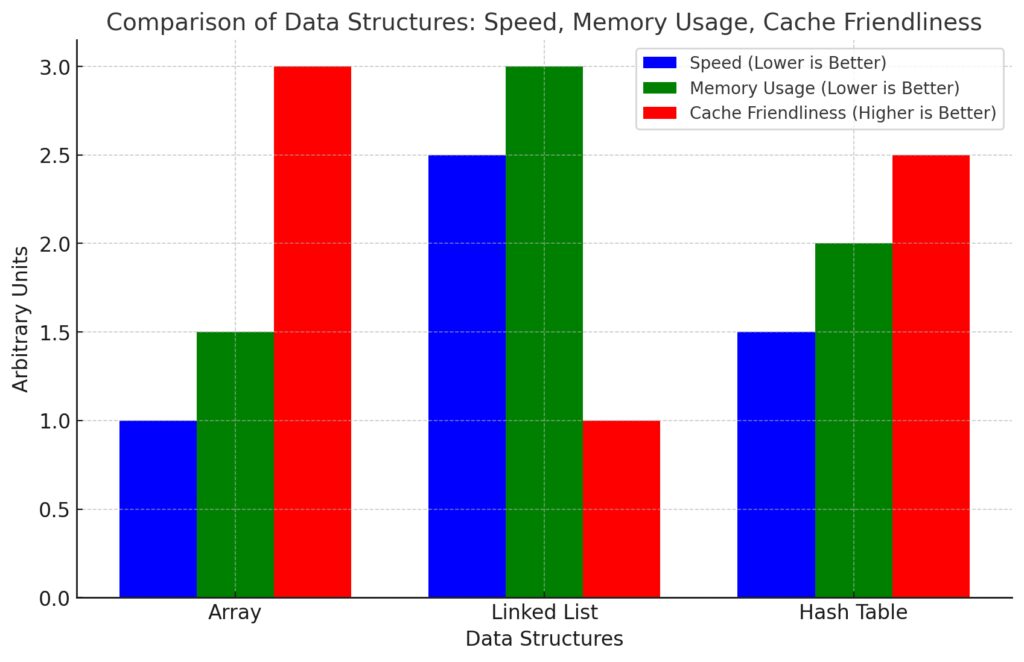

Choosing the right data structures can make a massive difference in the speed and efficiency of your AI algorithm. C gives you arrays and structs directly; linked lists, stacks, queues, and hash tables you build yourself or take from a library, which means you decide exactly how they are laid out in memory. The secret is to use these tools strategically.

For example, when implementing neural networks, storing data in an array might be more efficient than using linked lists, due to the contiguous memory allocation of arrays, which makes them more cache-friendly. On the other hand, if your algorithm involves frequent insertions and deletions, a linked list or a dynamically resizing array might be the better option.

Comparison of Data Structures: Speed, Memory Usage, Cache Friendliness

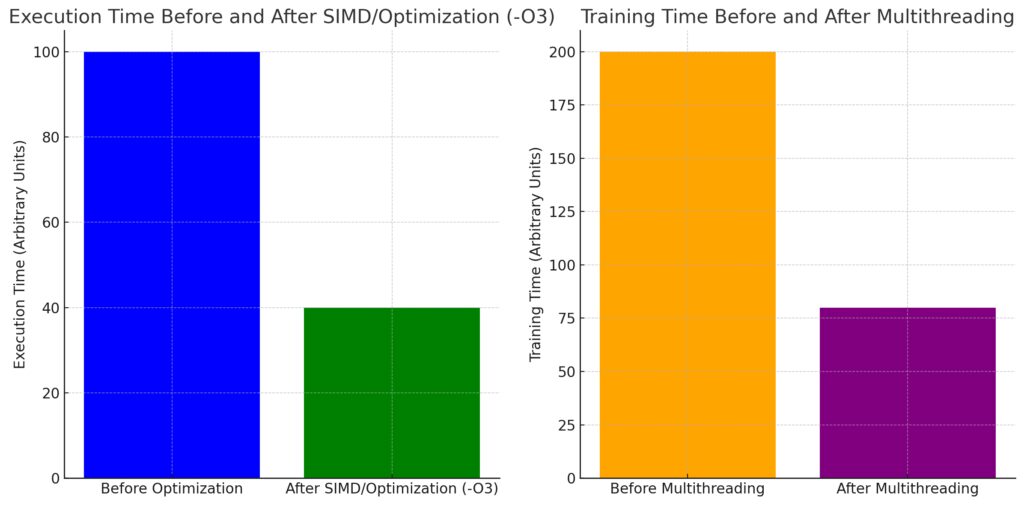

Execution times before and after SIMD optimizations or using -O3 compiler flags. Training times before and after multithreading implementation.

How to Optimize AI Algorithms with Compiler Flags

An often-overlooked technique is using compiler flags to optimize your C code. Modern C compilers, like GCC, offer a slew of options that can significantly enhance performance. For example, compiling with -O3 enables optimizations beyond -O2, such as more aggressive inlining and loop vectorization, which often reduce runtime (in GCC, general loop unrolling is a separate flag, -funroll-loops). Another useful option is -march=native, which tunes the generated code for the CPU you compile on and lets the compiler use its full instruction set; the resulting binary may not run on older or different processors.

With these compiler optimizations in place, you can often get performance boosts without changing a single line of code! However, it’s crucial to test your AI algorithms after enabling these optimizations: aggressive optimization can expose latent bugs, such as code that relies on undefined behavior, and flags like -ffast-math can change floating-point results.

Inline Functions and Macros for Speed Boosts

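One trick to boost performance in C is inlining, through inline functions or macros. Instead of having the CPU repeatedly jump into and out of a function, inlining places the function’s code directly at each call site. This eliminates the overhead of a function call and gives the optimizer more context to work with. Keep in mind that the inline keyword is only a hint: the compiler decides whether to inline, and at -O2 and above it often inlines small functions on its own.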

For instance, when performing matrix operations in machine learning, such as multiplying large matrices, using inline functions can significantly reduce function-call overhead, especially in loops where the same operation is performed thousands or even millions of times.

Similarly, macros can be a powerful tool when used carefully. Because the preprocessor expands them textually, they never incur call overhead, though a static inline function usually compiles to equally fast code. Misuse of macros leads to tricky bugs: they don’t respect C’s scope rules, perform no type checking, and can evaluate an argument more than once or interact badly with operator precedence. A general rule? Use macros for simple, repetitive tasks, and inline functions for more complex logic.

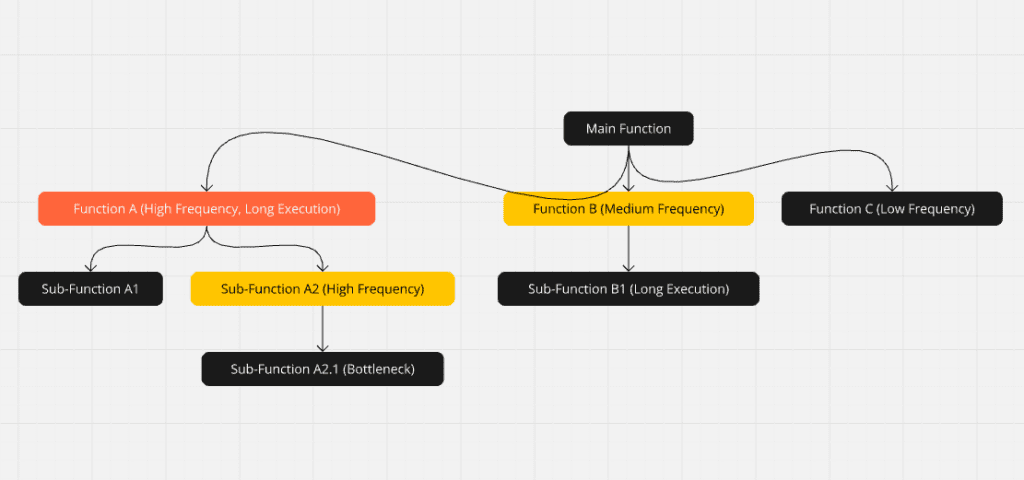

Call graphs help you identify which functions are called most frequently and take the longest to execute, indicating areas where inlining or refactoring might improve performance.

Minimizing Redundant Calculations in AI Loops

It’s amazing how often redundant calculations sneak into AI code, especially within loops. AI algorithms frequently require nested loops, whether for training neural networks or for search algorithms like A*. If you’re not careful, you can easily end up performing the same calculations repeatedly, wasting both time and resources.

Consider a loop that recomputes, on every iteration, a value that never changes inside the loop: that work is wasted on each pass. The trick is to identify these redundant calculations and move them outside of the loop. For instance, if you’re using a sigmoid activation in a neural network, you can precompute its values into a lookup table once and index into it instead of calling exp() every time, trading a small, controllable loss of accuracy for speed.

How to Use Parallel Processing in C for AI

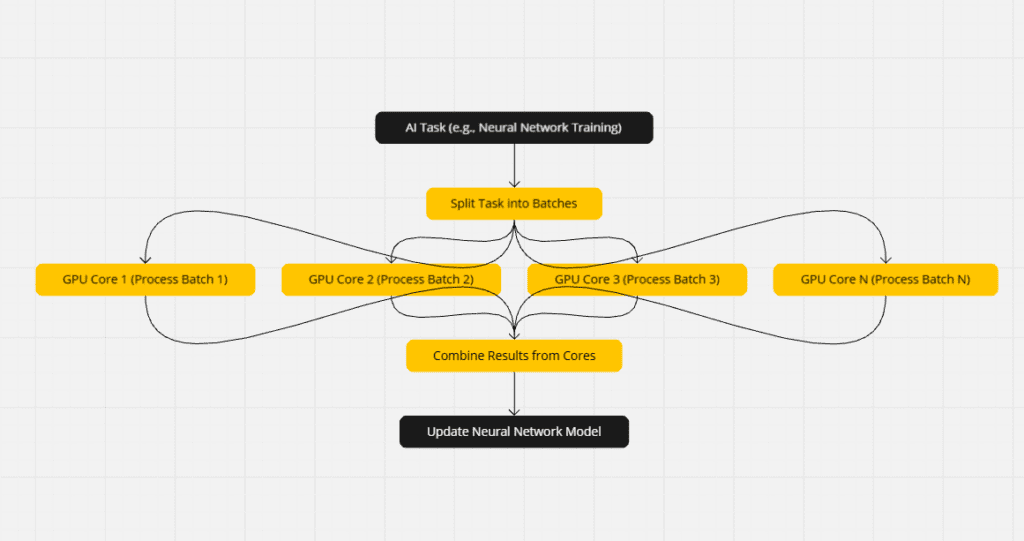

Parallel processing is one of the best ways to optimize AI algorithms for faster execution. AI algorithms, especially those that involve massive datasets, benefit immensely from being able to run multiple processes simultaneously. In C, libraries like OpenMP or POSIX threads (pthreads) allow you to break tasks into chunks that can be handled by multiple CPU cores at the same time.

For example, training a neural network can be parallelized by dividing each batch of training data across multiple threads, with each thread computing results (such as gradients) on its own portion. The partial results are then combined before the weights are updated, and the process repeats for the next batch. By splitting this work across cores, you can substantially reduce the time it takes to train the model.

It’s important, however, to be careful about thread synchronization and race conditions—when two threads try to access and modify shared data simultaneously. Proper locking mechanisms, such as mutexes and semaphores, are critical to prevent these issues and ensure that your program runs smoothly in parallel.

A diagram showing how tasks are split and processed simultaneously in a parallel processing environment.

Efficient Use of Arrays and Pointers in AI Code

Arrays and pointers are fundamental to writing efficient C code, especially in AI where handling large datasets is common. Using pointers effectively can drastically reduce memory consumption and speed up your algorithms by minimizing unnecessary data copying.

For example, when dealing with matrix operations, pointers allow you to manipulate data in place rather than making copies of entire arrays, which results in faster, more efficient code, especially with multidimensional arrays. C never copies an array passed to a function (the array decays to a pointer to its first element), but a struct that contains an array is copied in full when passed by value. Passing a pointer instead lets the function modify the original matrix directly.

However, pointers can also introduce complexity. Mistakes like dangling pointers or buffer overflows can lead to crashes or hard-to-find bugs. Mastering the use of pointers is crucial for AI developers in C, as it provides the power to manipulate memory directly and optimize data access.

Avoiding Pitfalls: Debugging and Profiling AI in C

Optimizing your AI algorithms in C isn’t just about writing faster code; it’s also about understanding how your code behaves at run time. This is where debugging and profiling come into play. Tools like gdb for debugging and gprof for profiling help you find logic errors and performance bottlenecks in your code.

Profiling is especially important when working on complex AI algorithms, as it helps you visualize how much time each function or block of code takes to execute. By analyzing your program’s execution flow, you can pinpoint inefficient parts of the code and focus your optimization efforts where they matter most. Debugging, on the other hand, is crucial for identifying logical errors, memory leaks, or issues like stack overflows, which can grind your AI algorithm to a halt.

Additionally, make sure to run stress tests—push your algorithm to its limits with large datasets or extreme inputs. This is the best way to ensure that your AI code remains efficient and stable under pressure.

Reducing Algorithm Overhead with Tail Call Optimization

One clever way to reduce algorithm overhead is tail call optimization (TCO). In AI algorithms, especially recursive ones like search algorithms or decision trees, deep recursion can quickly lead to stack overflow or excessive memory use. With TCO, the compiler reuses the current function’s stack frame for a call in tail position instead of creating a new one. Note that the C standard does not require this optimization: GCC and Clang usually perform it at -O2 and above, but not in unoptimized builds.

In C, making a recursive function eligible for TCO means the recursive call must be the very last action the function performs, with its result returned directly (return f(...); rather than return n * f(...);). This is known as the tail position. The compiler can then replace the current function’s call frame with the next one, reducing memory overhead and allowing deep recursion without the usual risk of stack overflow, provided the optimization is actually applied.

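For example, consider a depth-first search over a decision tree or graph. Because DFS must return to a node to explore its remaining children, only the last recursive call from each node can be a tail call, so TCO alone cannot bound the stack depth. For enormous trees or graphs, the portable approach is to replace recursion with an explicit stack that you manage yourself, which avoids the overhead and overflow risk of deep recursive calls.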

Memory Alignment Techniques for Better AI Performance

Did you know that how you align memory in C can dramatically impact your AI algorithm’s performance? Memory alignment means placing data at addresses that are multiples of a given boundary. Processors access data fastest when it is properly aligned: scalar types are normally aligned to their own size (4 bytes for a float, 8 for a double), SIMD loads often benefit from 16-, 32-, or 64-byte alignment, and cache lines are commonly 64 bytes.

When dealing with large data structures in AI, like arrays or matrices for neural networks, aligning memory to cache-line boundaries keeps blocks of data from straddling two cache lines, which reduces cache misses and improves access times. Functions such as posix_memalign() or C11’s aligned_alloc(), and declarations using C11’s _Alignas (alignas) or GCC’s __attribute__((aligned(x))), let you set up data structures that take advantage of cache lines, resulting in faster memory access and better overall algorithm performance.

This becomes especially critical in AI algorithms that frequently access memory, such as those involving matrix multiplications, where every millisecond counts.

Dynamic Memory Allocation vs Static: What’s Better for AI?

A common question when working on AI algorithms in C is whether to use dynamic or static memory allocation. Both have their strengths and weaknesses depending on the application.

Dynamic memory allocation, handled through malloc() and free(), allows your AI program to adjust memory usage at runtime, which is particularly useful when dealing with variable-sized datasets. If you’re writing a genetic algorithm or an evolutionary neural network where the structure evolves over time, dynamic allocation allows for greater flexibility.

However, dynamic allocation has a cost: each malloc() and free() call does bookkeeping on the heap, and many allocations of varying sizes can fragment it. This can slow down performance if not carefully managed, particularly in AI algorithms that allocate and release buffers repeatedly inside hot loops.

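On the other hand, static and automatic (stack) allocation have no runtime allocation cost: static arrays are reserved when the program loads, and stack space is claimed simply by adjusting the stack pointer when a function is entered. Once allocated, access is no slower than heap memory. These are ideal for fixed-size datasets or when performance is the top priority, such as in real-time AI applications. The downside is the lack of flexibility: sizes are fixed at compile time, large arrays on the stack can overflow it, and if your AI algorithm needs more memory than you reserved, you will hit that limit.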

Balancing Code Readability with Performance in C

As you optimize AI algorithms in C, there’s always a trade-off between making the code fast and making it easy to read and maintain. Writing code that’s incredibly efficient might lead to hard-to-understand, overly complex logic. In AI, where algorithms can already be intricate, this can make future debugging or scaling of the program more difficult.

One way to strike this balance is by writing modular, well-commented code but optimizing critical sections with techniques like function inlining, loop unrolling, or using low-level memory management tricks. By isolating the performance-critical parts of your AI algorithm into separate functions or modules, you can maintain both readability and efficiency.

In the end, it’s about making your AI code both maintainable and efficient. While performance should be your focus, never underestimate the importance of being able to easily debug and extend your algorithm down the road.

Real-World AI Case Studies Optimized in C

To better understand the impact of optimizing AI algorithms in C, let’s look at some real-world examples. Large language models such as GPT-2 and GPT-3 rely heavily on matrix multiplications and other mathematical operations, and running those in pure Python would be far too slow. In practice, the Python frameworks used to train such models dispatch this work to compiled C, C++, and CUDA kernels that use techniques like SIMD instructions and manual memory management. Andrej Karpathy’s open-source llm.c project goes further, reimplementing GPT-2 training in plain C and CUDA.

Another notable example comes from Google’s TensorFlow. Its core runtime is written in C++ for performance reasons, and it exposes a C API that other language bindings can build on; the heavy mathematical operations run in optimized compiled kernels, including vendor libraries such as cuDNN on NVIDIA GPUs. This design allows TensorFlow to handle massive AI workloads efficiently, especially when deployed in data centers with large-scale AI models.

These case studies demonstrate that when speed and efficiency are the top priority, the low-level control offered by C (and its close relative C++) lets AI algorithms run at or near the hardware’s peak performance.

Optimizing AI algorithms in C offers unmatched control over performance and resource usage

— AIC

How to Handle Large Datasets Efficiently in C

When working with AI algorithms, especially in machine learning and deep learning, you’ll often face the challenge of handling large datasets. Efficiently managing these datasets in C is crucial, as it directly impacts your algorithm’s speed and memory usage. One of the key strategies here is batch processing, where you divide the dataset into smaller chunks or batches, processing them sequentially or in parallel.

This approach not only reduces memory overhead but also ensures your algorithm can scale to handle real-world data sizes. Rather than loading an entire dataset into memory at once (which might not even be possible for very large datasets), you load manageable portions that your system can handle.

Additionally, file I/O becomes a critical factor. Reading data from files through repeated read calls can be slow, so techniques like memory-mapped files (using mmap()) let you map the dataset file into your process’s address space. The operating system then loads pages on demand as you touch them, so you never copy the whole file into a buffer up front, which can speed up access significantly.

For datasets stored in binary formats, accessing data via pointers rather than performing costly conversions also optimizes performance. Finally, be mindful of using double buffering or prefetching techniques, which ensure that while one batch of data is being processed, the next batch is being loaded into memory, further improving overall performance.

Efficient Memory Deallocation for Long-Running AI Programs

Memory leaks can slowly but surely degrade the performance of your AI algorithm. This is especially true for long-running programs, such as AI models that require several hours, or even days, of training. In C, you’re responsible for releasing your memory: leaked allocations make memory use grow until the system starts swapping or runs out, and frequent allocations of varying sizes can fragment the heap, causing your AI model to slow down or even crash.

To avoid this, you should always pair your malloc() or calloc() calls with corresponding free() calls. However, this is not as simple as it sounds, especially in complex algorithms with multiple layers of abstraction. Using tools like Valgrind to check for memory leaks is essential.

Moreover, to ensure optimal performance, avoid frequent memory allocations and deallocations within tight loops. Instead, preallocate large chunks of memory that can be reused, minimizing the overhead of repeated malloc() and free() calls. If your AI algorithm creates temporary structures, deallocate these as soon as they are no longer needed, rather than waiting until the end of the program.

Taking Advantage of SIMD Instructions in AI Algorithms

For truly high-performance AI algorithms, utilizing SIMD (Single Instruction, Multiple Data) instructions is a game-changer. Modern processors support SIMD operations, which allow a single instruction to operate on multiple pieces of data simultaneously. This can significantly speed up tasks that involve vectorized operations, like matrix multiplications or image processing, which are common in AI workloads.

C gives you access to SIMD through intrinsics: compiler-provided functions, declared in headers such as <immintrin.h> (x86) or <arm_neon.h> (ARM), that map almost directly onto instruction sets like SSE, AVX, or NEON. By refactoring your code to use these intrinsics, you can process multiple elements of an array or matrix with a single instruction, rather than looping through them one by one.

For example, in convolutional neural networks (CNNs), image convolutions can be optimized using SIMD to apply filters to entire chunks of pixels at once. This drastically reduces the computational time needed, especially for high-resolution images. Libraries like Intel’s Math Kernel Library (MKL) also provide optimized routines that use SIMD, making it easier to incorporate these optimizations into your C code.

Optimizing AI Models with Cache-Aware Programming

When running AI algorithms on large datasets, cache performance is often the limiting factor. Modern CPUs rely heavily on L1, L2, and L3 caches to keep frequently accessed data close to the processor. If your algorithm accesses data in a way that causes cache misses, performance can degrade rapidly.

The key to optimizing your AI algorithm is to design it in a cache-aware manner. This means structuring your data and loops to maximize cache locality, chiefly by traversing data in the same order it is stored. C stores 2D arrays in row-major order, so loops over a C matrix should usually vary the column index in the innermost loop; if your data is stored in column-major order (as in Fortran-style libraries), iterate down columns instead.

Additionally, you can break up large arrays or matrices into smaller blocks that fit within your CPU’s cache size, ensuring that once a block is loaded into cache, your algorithm can fully utilize it before moving on to the next one. Techniques like loop tiling or blocking are particularly effective in matrix-heavy operations, such as in deep learning models, where large amounts of data need to be processed in an efficient, cache-friendly manner.

Choosing the Right Libraries for Optimized AI Algorithms in C

You don’t always have to reinvent the wheel when it comes to optimizing AI algorithms in C. There are several high-performance libraries specifically designed to speed up AI computations, allowing you to focus on algorithm design rather than the nitty-gritty details of low-level optimizations.

Some of the most popular libraries include BLAS (Basic Linear Algebra Subprograms), which provides optimized routines for common linear algebra operations. OpenBLAS and Intel’s MKL are popular implementations of BLAS that offer multithreading and SIMD support.

For neural networks, libraries like cuDNN (for NVIDIA GPUs) offer highly optimized routines for deep learning operations through a C API, letting you use GPU acceleration from C code. Meanwhile, TensorFlow’s C API lets you load and run TensorFlow models from a C program, giving you access to the framework’s capabilities while keeping the surrounding application in C.

By leveraging these libraries, you can focus on refining your AI models and core logic, knowing that the heavy lifting of matrix multiplication, convolutions, and vector operations is handled in the most efficient way possible.

Final Thoughts on AI Optimization in C

Optimizing AI algorithms in C is like tuning a high-performance engine—you have to consider every part, from memory management to loop efficiency, in order to get the best results. While C gives you unparalleled control over how your algorithm interacts with hardware, this also means you’re responsible for making sure everything runs smoothly and efficiently.

By leveraging compiler optimizations, SIMD instructions, and choosing the right data structures, you can create lightning-fast AI models that scale to handle even the largest datasets. With tools like profilers and debuggers, you can identify performance bottlenecks and fine-tune your algorithm until it hums with precision.

Remember, though, that there’s always a balance between readability and efficiency. While squeezing every last bit of performance from your algorithm is tempting, writing code that’s maintainable, modular, and easy to understand is equally important in the long run—especially when scaling or collaborating with other developers.

So whether you’re working on neural networks, search algorithms, or genetic algorithms, using C can provide the speed and efficiency you need for cutting-edge AI applications. By following best practices, optimizing your data access patterns, and utilizing powerful libraries, you’ll ensure that your AI solutions are both fast and effective.

FAQ

Why is C a preferred language for AI optimization?

C provides low-level control over memory management and hardware resources, allowing developers to optimize performance in ways that higher-level languages like Python cannot. This makes it ideal for speed-critical AI algorithms, especially when dealing with large datasets or real-time applications.

How can time complexity improve AI algorithm performance?

Understanding time complexity helps you predict how an algorithm’s runtime will scale as input size increases. Choosing efficient algorithms with lower complexity, like O(n log n) instead of O(n²), is crucial for ensuring your AI programs handle large data efficiently.

What are the advantages of using inline functions and macros in C?

Inline functions avoid the overhead of function calls by letting the compiler insert code directly where it’s used, speeding up execution in performance-critical loops (the inline keyword is a hint, not a command). Macros offer similar benefits but should be used with caution, since they ignore scope, skip type checking, and can evaluate arguments more than once; they’re best for simple, repetitive tasks.

How does parallel processing improve AI algorithm efficiency?

Parallel processing breaks tasks into multiple threads or processes, allowing them to run simultaneously across multiple CPU cores. This is particularly useful for computationally intensive AI tasks, such as training neural networks or performing matrix operations, reducing execution time significantly.

Why are arrays and pointers critical for AI optimization in C?

Pointers allow direct memory access, reducing the need for data copying, while arrays provide contiguous memory allocation, which is cache-efficient. Both are essential for optimizing data-heavy operations like matrix multiplications, making your AI code faster and more memory-efficient.

What role do compiler flags play in optimizing AI algorithms?

Compiler flags like -O3 enable aggressive optimizations such as more extensive function inlining and loop vectorization. Additionally, -march=native optimizes your code for the specific CPU you compile on, at the cost of portability to other processors. These flags can increase performance without rewriting the algorithm.

Should I use dynamic or static memory allocation in AI programs?

Dynamic memory allocation (malloc()) is flexible and ideal for handling variable data sizes, but each allocation adds overhead. Static allocation has no runtime allocation cost, so it is better suited to AI algorithms with fixed data sizes or where predictable high performance is a priority.

How can I prevent memory leaks in long-running AI algorithms?

To avoid memory leaks, ensure that every malloc() or calloc() has a corresponding free() call. Use tools like Valgrind to check for leaks, and implement memory management best practices, especially for long-running AI models that consume large amounts of memory.

What is tail call optimization, and how does it help recursive AI algorithms?

Tail call optimization (TCO) lets a function reuse its own stack frame when the recursive call is the last action it performs, reducing memory overhead. This is useful in recursive AI algorithms, like search algorithms or decision trees, because it can prevent stack overflow, but the C standard does not guarantee it; GCC and Clang typically apply it only in optimized builds.

How can SIMD instructions optimize AI algorithms in C?

SIMD (Single Instruction, Multiple Data) instructions allow one operation to be performed on multiple data points simultaneously, which is highly efficient for tasks like matrix multiplication or vector processing. SIMD instructions help parallelize operations, improving overall performance.

What is cache-aware programming, and why does it matter for AI?

Cache-aware programming ensures that frequently accessed data is stored in a way that maximizes CPU cache efficiency. By optimizing your data structures and access patterns (e.g., using blocking for matrix operations), you can reduce cache misses and speed up your AI algorithms.

What libraries are useful for optimizing AI algorithms in C?

Libraries like OpenBLAS, Intel MKL, and cuDNN provide highly optimized routines for common AI operations like linear algebra and deep learning. These libraries help you achieve faster performance without manually optimizing every aspect of your algorithm.

What is memory alignment, and how does it improve performance?

Memory alignment places data at addresses that are multiples of a boundary: typically the size of the type (4 bytes for a float, 8 for a double), or 16 to 64 bytes for SIMD data and cache lines. Aligned memory allows faster access, reduces cache misses, and improves the efficiency of memory-intensive operations in AI, such as matrix calculations.

How does profiling help with AI algorithm optimization?

Profiling tools like gprof or Valgrind’s Callgrind tool help identify performance bottlenecks by showing where your algorithm spends the most time. This allows you to focus on optimizing the most resource-intensive parts of your code, ensuring efficient system usage and faster AI performance.

Resources for Optimizing AI Algorithms in C

1. Books:

- “The C Programming Language” by Brian W. Kernighan and Dennis M. Ritchie

A foundational book on C, providing a deep understanding of the language and best practices, which are crucial when optimizing algorithms.

- “Algorithms in C” by Robert Sedgewick

This book covers a wide range of algorithms and provides insights into optimizing them specifically in C.

- “Effective C: An Introduction to Professional C Programming” by Robert C. Seacord

Focuses on writing correct, secure, professional-level C code, a solid foundation for performance work in AI.

- “Programming Massively Parallel Processors: A Hands-on Approach” by David B. Kirk, Wen-mei W. Hwu

A valuable resource for learning massively parallel (GPU) programming and how to apply it to your AI algorithms.

2. Online Tutorials and Courses:

- Udemy – C Programming For Beginners

A course for those starting in C that covers fundamental concepts necessary for AI optimizations, like memory management and pointers.

- Coursera – Algorithms Specialization by Stanford University

While language-agnostic, this course helps you understand algorithm complexity, a crucial skill when optimizing for performance in C.

- MIT OpenCourseWare – Introduction to Algorithms

Free lecture series from MIT that covers algorithmic efficiency and design, providing a strong foundation for optimizing AI in C.

- NVIDIA Developer – cuDNN and CUDA Programming

Learn about GPU acceleration and CUDA programming for enhancing AI performance when working with C.

3. Documentation and Websites:

- GNU Compiler Collection (GCC) Documentation

Deep dive into compiler optimizations and flags (-O3, -march=native) that can boost performance in your C programs: GCC Optimization Options

- Intel Developer Zone – Intel Math Kernel Library (MKL)

MKL provides highly optimized math routines that are ideal for speeding up AI algorithms: Intel MKL

- OpenBLAS Documentation

A go-to for optimized linear algebra routines in C, which are frequently used in machine learning and AI models: OpenBLAS

- Valgrind – Memory Debugging, Profiling

Use Valgrind to detect memory leaks, profile performance, and debug AI algorithms written in C: Valgrind

4. Libraries and Tools:

- OpenBLAS

A highly optimized library for linear algebra operations. Essential for AI tasks involving large matrix calculations and other linear algebra tasks: OpenBLAS GitHub

- Intel MKL

Provides high-performance, optimized routines for linear algebra, FFTs, and other math operations on Intel hardware: Intel MKL Download

- cuDNN (CUDA Deep Neural Network Library)

Optimized for running neural network tasks on NVIDIA GPUs, allowing you to write C code that leverages GPU acceleration: cuDNN

- Gprof

A profiling tool to help analyze where your AI algorithms are spending the most time, enabling you to optimize efficiently: Gprof Documentation

5. Forums and Communities:

- Stack Overflow – C Programming and AI Optimization

Great for asking questions about AI optimizations and C-specific issues: Stack Overflow C Programming

- Reddit – r/C_Programming

A community for sharing tips, asking questions, and discussing C programming optimizations: r/C_Programming

- Intel Developer Zone Forum

Get help with Intel MKL and other performance-optimizing tools for C: Intel Forums