AI and machine learning models are powerful, but they’re also computationally demanding. Neural code execution optimization is crucial to unlock the full potential of AI applications, making them faster and more efficient.

Let’s dive into effective strategies for speeding up neural code execution and ensuring that AI applications perform at their best.

Understanding Neural Code Execution: Key Concepts

Neural Code Execution vs. Traditional Code Execution

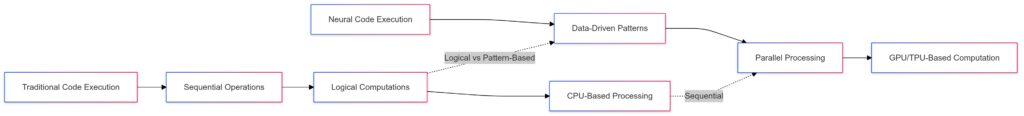

Neural code execution differs from standard code execution in a few major ways. Unlike typical code, which runs sequentially and operates on straightforward logic, neural code works on data-driven patterns learned through experience. This difference necessitates specialized approaches to make it run efficiently on hardware.

Traditional Execution includes sequential operations, logical computations, and CPU-based processing.

Neural Code Execution involves data-driven patterns, parallel processing, and GPU/TPU-based computation.

Whereas traditional code may use CPUs effectively, neural networks thrive on parallel processing. GPUs and TPUs, for instance, allow operations to run concurrently, which is essential for the matrix operations at the heart of most AI models.

Importance of Latency and Throughput in AI

Latency (the time it takes for data to move through the neural network) and throughput (the amount of data processed per time unit) are critical metrics. AI applications in real-time systems, like autonomous driving or natural language processing, depend on low latency to avoid lag. Optimizing neural code execution can make these applications faster and smoother by improving both metrics.
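To make the two metrics concrete, here is a minimal sketch that times a stand-in model. The names `fake_model` and `measure` are hypothetical and introduced only for illustration; a real measurement would call an actual model and account for warm-up and device synchronization.

```python
import time

def fake_model(batch):
    # Hypothetical stand-in for a forward pass: a little arithmetic per item.
    return [sum(x * x for x in item) for item in batch]

def measure(model, batch, repeats=20):
    """Return (mean latency in seconds per call, throughput in items per second)."""
    start = time.perf_counter()
    for _ in range(repeats):
        model(batch)
    elapsed = time.perf_counter() - start
    latency = elapsed / repeats
    throughput = len(batch) * repeats / elapsed
    return latency, throughput

item = [0.5] * 256
for batch_size in (1, 8, 64):
    latency, throughput = measure(fake_model, [item] * batch_size)
    print(f"batch={batch_size:3d}  latency={latency * 1e3:.3f} ms  "
          f"throughput={throughput:.0f} items/s")
```

Larger batches usually raise throughput while also raising per-call latency, which is why real-time systems often favor small batches.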

Hardware Optimization: GPUs, TPUs, and Beyond

GPUs are the go-to for many AI applications due to their capability for parallel computation. Tensor Processing Units (TPUs), developed by Google specifically for tensor operations, can run many deep learning workloads faster still, depending on the model and batch size. Understanding the strengths of each type of hardware is essential for optimizing code execution, as is tuning code to leverage specific hardware capabilities effectively.

Techniques for Reducing Neural Network Execution Time

Quantization: Making Models Lighter

Quantization reduces the precision of neural network weights, transforming them from 32-bit floating-point numbers to lower-precision representations such as 8-bit integers. While precision might drop slightly, quantized models use less memory and typically run faster on hardware that supports low-precision arithmetic. This method is especially beneficial when running AI applications on edge devices, where hardware limitations restrict resources.

- Post-Training Quantization: After training, weights are converted, making this ideal for applications where slightly lower accuracy is acceptable.

- Quantization-Aware Training: Adjusts weights during training to account for reduced precision, often resulting in minimal accuracy loss.
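The core idea behind post-training quantization can be sketched in a few lines. This is a simplified symmetric, per-tensor scheme chosen for illustration; the function names are hypothetical, and real toolchains add calibration, per-channel scales, and integer kernels.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Map the integers back to approximate float values."""
    return [v * scale for v in q]

weights = [0.42, -1.27, 0.003, 0.9, -0.051]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print("int8 values:", q)
print("scale:", scale, "max rounding error:", max_error)
```

Each weight now needs one byte instead of four, and the rounding error is bounded by half the scale, which is the accuracy cost the text refers to.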

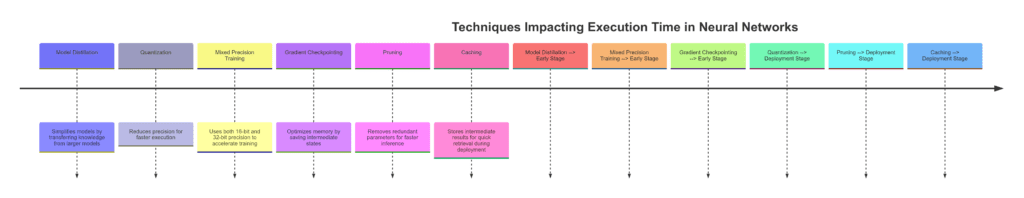

- Model Distillation: Simplifies models by transferring knowledge.

- Quantization: Reduces precision for faster execution.

- Mixed Precision Training: Uses both 16-bit and 32-bit precision.

- Gradient Checkpointing: Optimizes memory.

- Pruning: Removes redundant parameters.

- Caching: Stores intermediate results for faster retrieval.

Pruning: Cutting Down on Redundant Connections

Pruning involves identifying and removing less influential neurons or weights from the network, resulting in a smaller, more efficient model. This technique not only speeds up execution but also reduces memory usage.

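- Unstructured Pruning removes individual weights based on importance. The resulting sparse matrices save memory, but they speed up execution only on kernels or hardware that exploit sparsity.

- Structured Pruning eliminates entire neurons, channels, or layers, which shrinks the dense computation itself and so speeds up execution on standard hardware.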

For instance, in applications where split-second decisions are crucial, such as robotic surgery or financial trading algorithms, pruning can be a game-changer.
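Both pruning styles can be illustrated with a small sketch. Weight magnitude is used here as the importance score, which is a common but assumed choice; `magnitude_prune` and `prune_neurons` are hypothetical helper names.

```python
def magnitude_prune(weights, sparsity):
    """Unstructured: zero out the smallest-magnitude fraction `sparsity` of weights."""
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices sorted from least to most important (by absolute value).
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

def prune_neurons(matrix, keep):
    """Structured: keep the `keep` rows (neurons) with the largest L1 norm."""
    ranked = sorted(range(len(matrix)),
                    key=lambda r: sum(abs(w) for w in matrix[r]),
                    reverse=True)
    kept = sorted(ranked[:keep])          # preserve the original row order
    return [matrix[r] for r in kept]

print(magnitude_prune([0.5, -0.01, 0.2, 0.03], sparsity=0.5))
print(prune_neurons([[0.1, 0.1], [1.0, -2.0], [0.0, 0.5]], keep=2))
```

The unstructured result keeps its shape but contains zeros, while the structured result is a genuinely smaller matrix.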

Model Distillation: Training Smaller Models from Large Ones

Distillation uses a large, complex model to train a smaller model, capturing much of the original’s capabilities but at a fraction of the computational cost. The student model learns to mimic the teacher model and often achieves a high degree of accuracy despite its size reduction.

For example, BERT (a large language model) has been distilled into smaller versions like DistilBERT, which runs faster while retaining much of its predecessor’s NLP prowess.
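One common way to express "the student mimics the teacher" is a loss on temperature-softened output distributions. The sketch below assumes that formulation (KL divergence scaled by the squared temperature); the temperature value and function names are illustrative assumptions, and the article itself does not prescribe a specific loss.

```python
import math

def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2

teacher = [4.0, 1.0, 0.2]
print(distillation_loss([3.5, 1.2, 0.1], teacher))   # student close to teacher
print(distillation_loss([0.1, 3.0, 1.0], teacher))   # student far from teacher
```

In practice this term is usually combined with the ordinary loss on the true labels.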

Improving Execution Efficiency Through Data Pipeline Optimization

Optimizing Data Loading with Caching and Preprocessing

Data loading can be a major bottleneck in neural code execution. By optimizing the data pipeline, we can reduce loading times and keep the neural network fed with data at optimal speed.

- Caching Frequently Used Data: Involves storing commonly accessed data in memory, speeding up access times.

- Data Augmentation at Runtime: This technique applies transformations like cropping or rotation to data on the fly, reducing storage needs while enriching the dataset.

Batch Processing and Parallelism in Data Handling

Batching data can significantly speed up training and inference processes. With parallel data loading, data is prepared in chunks, letting the model focus on computation without delays. For instance, batching is a must in image recognition systems, where millions of images must be processed quickly.
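Batching and parallel loading can be combined in a short sketch using the standard library's thread pool. The `preprocess` function is a hypothetical per-sample transform; framework data loaders offer the same idea with more features.

```python
from concurrent.futures import ThreadPoolExecutor

def preprocess(x):
    # Hypothetical per-sample transform (decoding, resizing, normalizing, ...).
    return x * 2

def batches(items, batch_size):
    """Yield consecutive batches; the last one may be smaller."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]

def load_batches(items, batch_size, workers=4):
    """Preprocess the samples of each batch in worker threads, one batch at a time."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for batch in batches(items, batch_size):
            # pool.map preserves the input order of the samples.
            yield list(pool.map(preprocess, batch))

for batch in load_batches(list(range(5)), batch_size=2):
    print(batch)
```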

Streaming Data for Real-Time Applications

For real-time AI applications, such as live translation or stock market prediction, streaming data enables models to process information continuously, reducing waiting time between inputs. By optimizing neural code to handle small, rapid data packets, we achieve smoother execution without overloading the system.

| Technique | Description | Performance Boost |
|---|---|---|
| Caching | Stores frequently accessed data locally to reduce repeated I/O operations. | Increases data access speed, reduces data load lag. |
| Batching | Processes data in grouped batches rather than individually. | Reduces processing time, improves throughput. |
| Streaming | Continuously processes data as it arrives, without waiting for full batches. | Minimizes latency, optimizes real-time performance. |
| Prefetching | Loads data ahead of time to ensure smooth processing flow. | Reduces data access delays, enhances processing speed. |
| Parallel Processing | Distributes tasks across multiple processors for simultaneous execution. | Decreases computation time, maximizes resource usage. |

Breakdown:

- Caching: Stores data locally for faster access, minimizing lag.

- Batching: Groups data for processing, improving overall throughput.

- Streaming: Allows real-time processing of incoming data, reducing latency.

- Prefetching: Preloads data to prevent delays during processing.

- Parallel Processing: Runs tasks concurrently, speeding up computation.
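Prefetching, as listed above, can be sketched with a background thread and a bounded queue. The `prefetch` helper is a hypothetical name, and the sketch assumes the data source is an ordinary Python iterable.

```python
import queue
import threading

def prefetch(iterable, buffer_size=2):
    """Yield items from `iterable` while a background thread loads ahead."""
    q = queue.Queue(maxsize=buffer_size)   # bounded, so the loader cannot run far ahead
    sentinel = object()

    def producer():
        try:
            for item in iterable:
                q.put(item)
        finally:
            q.put(sentinel)                # always signal the end of the stream

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = q.get()
        if item is sentinel:
            return
        yield item

for batch in prefetch(iter([[1, 2], [3, 4], [5]])):
    print("training on", batch)
```

While the consumer works on one batch, the producer is already loading the next, so loading time overlaps with computation instead of adding to it.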

Leveraging Parallelization and Distributed Computing

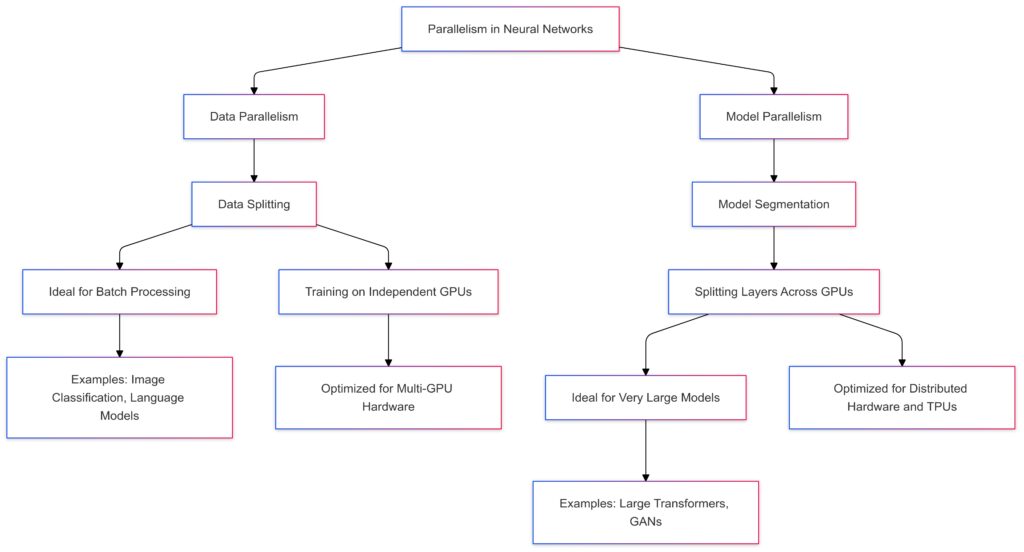

Data Parallelism vs. Model Parallelism

In data parallelism, data is split and processed by multiple devices, each running a copy of the model. Model parallelism, on the other hand, involves dividing the model itself across different devices.

- Data Parallelism: Useful for models that fit on a single device and are trained on large datasets.

- Model Parallelism: Ideal for very large models where dividing computation across devices enhances efficiency.

Each approach can significantly improve performance, especially in systems with multiple GPUs or TPUs, like data centers running large AI applications.

Data Parallelism: Splits data across independent GPUs, ideal for batch processing (e.g., image classification, language models), and is optimized for multi-GPU hardware.

Model Parallelism: Segments the model itself across GPUs, suited for very large models like transformers and GANs, and optimized for distributed hardware and TPUs.
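The following sketch shows why data parallelism gives the same result as single-device training: each simulated device computes a gradient on its shard, and the average of those gradients equals the full-batch gradient. The one-parameter model and the function names are illustrative assumptions, and the sketch assumes the data divides evenly across devices.

```python
def gradient(w, shard):
    """d/dw of the mean squared error for the model y = w * x over one shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def data_parallel_gradient(w, data, num_devices):
    """Split data into equal shards, compute per-shard gradients, then average them."""
    shard_size = len(data) // num_devices
    shards = [data[i * shard_size:(i + 1) * shard_size] for i in range(num_devices)]
    grads = [gradient(w, s) for s in shards]   # each would run on its own device
    return sum(grads) / num_devices

data = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
print("single device :", gradient(0.5, data))
print("two devices   :", data_parallel_gradient(0.5, data, num_devices=2))
```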

Distributed Training Across Multiple Machines

For extensive datasets or complex models, distributing training across multiple machines can vastly reduce training time. Frameworks like Horovod and Distributed TensorFlow allow AI models to be trained across clusters, enabling faster execution and better resource allocation.

- Ring-AllReduce Algorithms: Used in frameworks like Horovod, this method arranges workers in a ring so that each one exchanges gradient chunks only with its neighbors, which keeps per-worker communication roughly constant as machines are added.

- Gradient Aggregation: Combines (typically averages) the gradients from all machines so that every copy of the model applies the same update at each training step.
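The communication pattern of ring all-reduce can be simulated in plain Python. This is a single-process sketch of the textbook two-phase algorithm (scatter-reduce, then all-gather), not Horovod's implementation; it assumes each vector splits evenly into one chunk per worker.

```python
def ring_allreduce(vectors):
    """Simulate a ring all-reduce (sum) over equal-length vectors, one per worker."""
    n = len(vectors)
    length = len(vectors[0])
    assert length % n == 0, "sketch assumes the vector splits evenly into n chunks"
    size = length // n
    data = [list(v) for v in vectors]

    def chunk(c):
        return slice(c * size, (c + 1) * size)

    # Phase 1, scatter-reduce: after n-1 steps worker i holds the full sum of chunk (i+1) % n.
    for step in range(n - 1):
        msgs = [(i, (i - step) % n, data[i][chunk((i - step) % n)]) for i in range(n)]
        for src, c, payload in msgs:           # all sends of a step happen "at once"
            dst = (src + 1) % n
            sl = chunk(c)
            data[dst][sl] = [a + b for a, b in zip(data[dst][sl], payload)]

    # Phase 2, all-gather: pass the finished chunks around the ring.
    for step in range(n - 1):
        msgs = [(i, (i + 1 - step) % n, data[i][chunk((i + 1 - step) % n)]) for i in range(n)]
        for src, c, payload in msgs:
            data[(src + 1) % n][chunk(c)] = payload
    return data

print(ring_allreduce([[1, 2, 3], [10, 20, 30], [100, 200, 300]]))
```

Every worker ends up with the same summed vector, and in each step a worker sends only one chunk to one neighbor.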

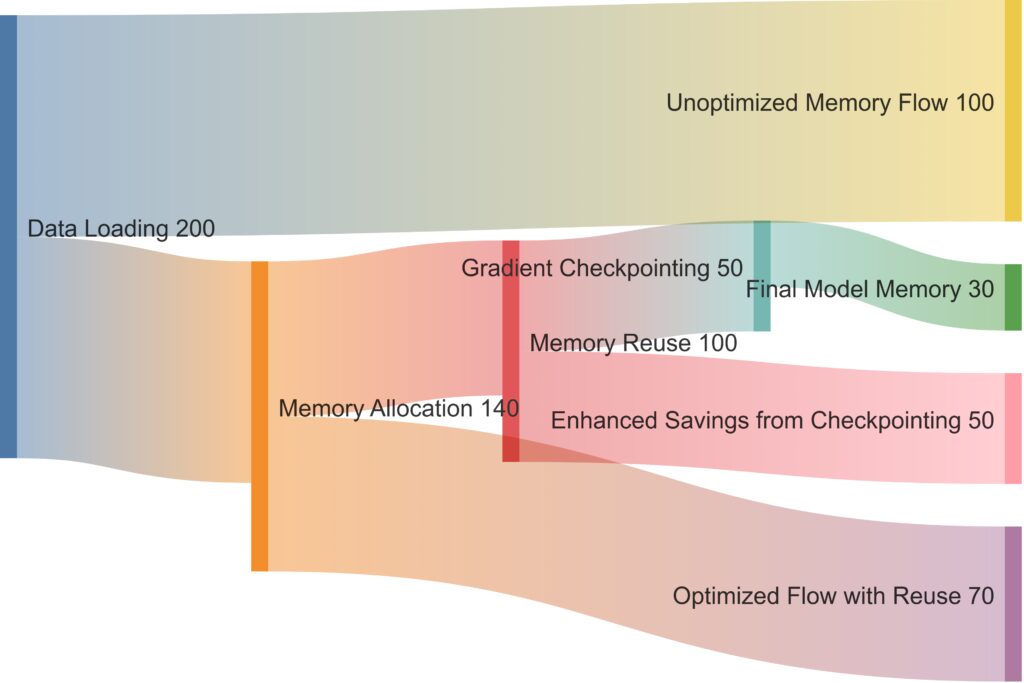

Memory Optimization Strategies for Faster Neural Code Execution

Memory Allocation and Reuse: Avoiding Fragmentation

Neural code execution relies heavily on memory management, and inefficient allocation can slow things down. By reusing memory blocks rather than creating new allocations during each iteration, we can prevent memory fragmentation, which leads to better execution speed. Libraries like PyTorch and TensorFlow handle this internally, but for custom implementations, careful memory management can reduce lag.

For instance, memory reuse in recurrent neural networks (RNNs), where sequences of data are processed, minimizes time spent in memory allocation and improves throughput for sequential data tasks, such as language modeling or time-series analysis.
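For custom implementations, the reuse idea can be as simple as a pool that hands back previously released buffers. `BufferPool` is a hypothetical class written for illustration; it uses Python lists as stand-ins for device or tensor memory.

```python
class BufferPool:
    """Hand out reusable float buffers keyed by length instead of allocating new ones."""

    def __init__(self):
        self._free = {}          # length -> list of released buffers
        self.allocations = 0     # how many fresh buffers were created

    def acquire(self, length):
        bucket = self._free.get(length)
        if bucket:
            return bucket.pop()  # reuse an existing buffer of the right size
        self.allocations += 1
        return [0.0] * length

    def release(self, buf):
        self._free.setdefault(len(buf), []).append(buf)

pool = BufferPool()
for step in range(1000):
    hidden = pool.acquire(256)   # scratch space for one iteration
    hidden[0] = float(step)
    pool.release(hidden)

print("fresh allocations:", pool.allocations)
```

A thousand iterations are served by a single allocation, which is the behavior that framework allocators aim for internally.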

Using Mixed Precision Training

Mixed precision training combines lower precision (16-bit) and standard precision (32-bit) calculations, offering significant improvements in speed and memory use without a noticeable loss in accuracy. This approach leverages hardware-accelerated operations specifically designed for lower precision, like NVIDIA’s Tensor Cores, which make this method highly efficient.

Deep learning models with millions of parameters, such as those in computer vision, benefit greatly from mixed precision, especially when training on hardware optimized for it.
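One reason mixed precision keeps some values in 32-bit is that very small gradients underflow to zero in 16-bit. The sketch below demonstrates this with the standard library's half-precision packing, along with loss scaling, a widely used remedy that the text above does not mention explicitly. The scale factor of 1024 is an assumed value.

```python
import struct

def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

grad = 1e-8             # a small gradient computed in higher precision
loss_scale = 1024.0     # assumed scale factor

naive = to_fp16(grad)                   # too small for FP16: becomes 0.0
scaled = to_fp16(grad * loss_scale)     # representable after scaling
recovered = scaled / loss_scale         # unscale in higher precision

print("stored directly in FP16:", naive)
print("with loss scaling      :", recovered)
```

Frameworks that support mixed precision typically automate this scaling and unscaling.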

Efficient Gradient Checkpointing

Gradient checkpointing saves only a subset of intermediate activations (the checkpoints) during the forward pass. The activations that were discarded are recomputed from the nearest checkpoint when the backward pass needs them, which reduces memory usage at the cost of extra computation. This is beneficial when training large models with limited memory.

This technique is useful for resource-intensive applications like transformer-based models in NLP, where memory constraints often hinder execution. Gradient checkpointing essentially allows us to train larger models, or use larger batches, on the same hardware. Each training step takes somewhat longer, but models that would otherwise not fit in memory become trainable.
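The trade-off can be seen in a toy example: a chain of scalar layers `tanh(w * h)` differentiated once with every layer input stored, and once with only every third input stored. The layer choice, weights, and function names are assumptions made for illustration.

```python
import math

def layer(w, h):
    return math.tanh(w * h)

def layer_grad(w, h_in):
    """Derivative of layer(w, h) with respect to its input h."""
    return w * (1.0 - math.tanh(w * h_in) ** 2)

def grad_full(weights, x):
    """Store every layer input during the forward pass."""
    inputs, h = [], x
    for w in weights:
        inputs.append(h)
        h = layer(w, h)
    g = 1.0
    for w, h_in in zip(reversed(weights), reversed(inputs)):
        g *= layer_grad(w, h_in)
    return g, len(inputs)

def grad_checkpointed(weights, x, every):
    """Store only every `every`-th layer input; recompute the rest when going backward."""
    checkpoints, h = {}, x
    for i, w in enumerate(weights):
        if i % every == 0:
            checkpoints[i] = h
        h = layer(w, h)
    g = 1.0
    for start in sorted(checkpoints, reverse=True):
        segment = weights[start:start + every]
        seg_inputs, h = [], checkpoints[start]
        for w in segment:                      # recompute this segment's inputs
            seg_inputs.append(h)
            h = layer(w, h)
        for w, h_in in zip(reversed(segment), reversed(seg_inputs)):
            g *= layer_grad(w, h_in)
    return g, len(checkpoints)

weights = [0.5, -1.2, 0.8, 1.1, -0.7, 0.9]
print(grad_full(weights, 0.3))                 # (gradient, activations stored)
print(grad_checkpointed(weights, 0.3, every=3))
```

Both functions return the same gradient; the checkpointed version keeps fewer activations from the forward pass and pays for it by running each layer a second time.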

Advanced Techniques in Neural Code Optimization

Dynamic Computational Graphs for Flexibility

Dynamic computational graphs, as opposed to static ones, allow the network structure to change during runtime. This flexibility is especially beneficial for variable-length sequences or complex tasks with changing requirements, such as adaptive learning systems.

Frameworks like PyTorch build the graph as the code runs, so ordinary control flow can depend on the input, which makes models easier to write and debug. The trade-off is that static graphs give compilers more room for ahead-of-time optimization, so the main gains from dynamic graphs are in development speed. For applications that handle diverse data inputs, they can also avoid the padding overhead that fixed-shape graphs often impose on variable-length sequences.

Neural Architecture Search (NAS) for Optimal Model Structures

Neural Architecture Search (NAS) is an automated process to find the best network architecture for a given task, which can improve execution efficiency by reducing model complexity while maintaining accuracy. NAS identifies efficient architectures that optimize both accuracy and speed, tailored to the specific hardware available, whether it’s a cloud-based TPU or an edge-based CPU.

This technique is particularly beneficial in mobile AI applications where computation resources are limited. Efficient NAS-derived architectures, like MobileNetV3 or EfficientNet, provide accuracy comparable to that of larger networks but are optimized for faster execution on smaller devices.

Compiler Optimizations with XLA and ONNX

Compilers play a significant role in optimizing neural code for execution speed. Tools like XLA (Accelerated Linear Algebra), used by TensorFlow and JAX, and ONNX Runtime, which executes models in the ONNX (Open Neural Network Exchange) format, apply optimizations that tailor code execution to the hardware at hand.

- XLA: Compiles TensorFlow models, reducing execution time by fusing operations and generating code specialized for the target hardware.

- ONNX Runtime: A cross-platform inference engine that runs models exported to the ONNX format from multiple frameworks, applying graph optimizations and hardware-specific backends for speed and compatibility.

By leveraging these compilers, AI applications can achieve higher throughput, particularly on specialized hardware, making them ideal for production-level deployments.
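Operator fusion is one of the optimizations such compilers perform. The plain-Python sketch below only illustrates the idea, comparing three separate elementwise passes with a single fused pass; it is not how XLA or ONNX Runtime is invoked, and the function names are hypothetical.

```python
def unfused(xs, a, b):
    """Three separate elementwise 'kernels', each writing a full intermediate list."""
    scaled = [a * x for x in xs]
    shifted = [s + b for s in scaled]
    return [max(0.0, s) for s in shifted]

def fused(xs, a, b):
    """One pass computing relu(a * x + b) with no intermediate lists."""
    return [max(0.0, a * x + b) for x in xs]

xs = [-2.0, -0.5, 0.0, 1.5, 3.0]
print(unfused(xs, 2.0, 1.0))
print(fused(xs, 2.0, 1.0))
```

The results are identical, but the fused version reads and writes memory once instead of three times, which matters because elementwise operations on accelerators tend to be limited by memory bandwidth.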

Real-World Applications and Case Studies in Neural Code Optimization

Autonomous Vehicles: Faster Decision-Making with Optimized Neural Networks

Autonomous vehicles rely on a combination of sensors, cameras, and radar to interpret their surroundings. By implementing quantization and pruning, neural networks in these vehicles can process inputs faster, enabling them to react to changing road conditions in real-time.

For example, Tesla uses optimized neural networks to improve object detection speed and path planning, enhancing both safety and response times in their autonomous systems.

Real-Time Healthcare Monitoring and Diagnosis

In healthcare, real-time diagnostic tools need to process data quickly, often on edge devices. Memory-efficient techniques like mixed precision training and gradient checkpointing make it feasible to train models for medical imaging or heart rate monitoring on limited hardware, while quantization and pruning shrink those models so they can be deployed with rapid, accurate results.

Companies developing portable ultrasound or ECG devices often leverage these optimization techniques to achieve real-time data analysis, empowering faster, on-the-spot diagnostics.

Financial Trading Algorithms: Millisecond-Level Latency Reduction

In financial markets, milliseconds can mean the difference between profit and loss. Optimizing neural code execution using distributed computing and model distillation allows trading algorithms to process data streams with near-instantaneous speed.

For instance, hedge funds use pruned, quantized models to keep latency as low as possible, allowing them to make trade decisions faster than competitors who rely on standard neural networks.

Optimizing neural code execution for faster AI applications goes beyond simply choosing the right algorithm. By understanding hardware, employing efficient data handling, and leveraging advanced techniques like mixed precision and compiler optimization, developers can create AI systems that are both high-performing and adaptable across various platforms and applications. As AI applications continue to grow in complexity, these strategies become essential for ensuring that models not only work as expected but also deliver real-time results when it matters most.

FAQs

How do quantization and pruning help in speeding up AI models?

Quantization reduces the precision of model weights, which decreases memory usage and speeds up computation, making it ideal for devices with limited resources. Pruning removes unnecessary neurons or connections, shrinking the model’s size and computation needs. Both methods effectively streamline the neural network, resulting in faster execution without drastically compromising accuracy.

Can I use mixed precision on any type of hardware?

Mixed precision training is especially effective on hardware optimized for it, like NVIDIA GPUs with Tensor Cores. However, most modern hardware supports some level of mixed precision, so you can often implement it to improve speed and efficiency across various devices. Some CPUs and TPUs also handle mixed precision well, but it’s best to verify compatibility with the specific hardware in use.

What is model distillation, and when should it be used?

Model distillation involves training a smaller model (student) to replicate the outputs of a larger, more complex model (teacher). This technique is useful when you need a fast and efficient model for deployment but still want good performance. Applications like mobile AI and edge devices benefit from distilled models, as they can perform complex tasks without requiring high computational power.

How does data parallelism differ from model parallelism?

In data parallelism, data is split across multiple devices, each running a copy of the same model to process different data simultaneously. In model parallelism, the model itself is partitioned across devices, so each device computes only its part of the network and passes intermediate results to the next, which works well for very large models. Both techniques improve execution speed by utilizing the parallel computing power of GPUs and other processors.

Why are dynamic computational graphs advantageous?

Dynamic computational graphs, like those in PyTorch, allow neural network structures to adapt during runtime, enabling flexibility and easier debugging. This is particularly useful for tasks involving variable data input sizes or when developing complex applications where the model needs to change based on real-time input. Dynamic graphs allow for more efficient, adaptable execution in these cases.

What role do compilers like XLA and ONNX play in optimization?

Compilers such as XLA and runtimes such as ONNX Runtime optimize neural code execution by converting model operations into more efficient, hardware-specific forms. XLA, for example, speeds up TensorFlow models by fusing operations and removing redundant ones, while the ONNX format allows cross-platform deployment, with ONNX Runtime optimizing execution. They are crucial for making AI applications run faster and more efficiently on specific hardware setups.

When should distributed training be considered?

Distributed training is ideal for very large models or datasets that require substantial processing power. By spreading training across multiple machines, frameworks like Horovod and Distributed TensorFlow enable faster execution, making it feasible to handle massive datasets in less time. Distributed training is particularly useful in research and production settings where rapid model iteration is key.

How does gradient checkpointing work, and why is it useful?

Gradient checkpointing saves only selected intermediate activations during the forward pass, so the outputs of every layer need not be held in memory at once. Instead, the missing activations are recomputed as needed during the backward pass, which trades some extra computation for memory and allows larger models to be trained on limited hardware. This technique is highly beneficial for memory-intensive tasks like natural language processing with large transformer models.

Can Neural Architecture Search (NAS) improve model speed?

Yes, Neural Architecture Search (NAS) automates the process of finding the optimal model architecture, balancing performance and speed. By evaluating various architectures, NAS identifies structures that require fewer resources but still deliver high accuracy. Models generated through NAS, like EfficientNet, can run significantly faster than hand-designed models of similar accuracy, making them well suited to real-time applications on mobile and edge devices.

Is memory fragmentation an issue in neural code execution?

Memory fragmentation can slow down neural code execution as the system struggles to allocate large enough memory blocks for operations. To avoid this, many AI frameworks use techniques like memory pooling and reuse to minimize fragmentation. Memory fragmentation is especially problematic in large-scale models where efficient memory allocation is crucial for smooth execution.

How do caching and preloading improve data pipeline efficiency?

Caching and preloading store frequently used data in memory, reducing access time and improving data loading efficiency. This is especially helpful for AI models that need to process large datasets continuously. By preloading data, the model doesn’t face delays waiting for data to load, which is essential for high-performance applications, such as image recognition and real-time analytics.

What are some common challenges in neural code optimization?

Challenges include balancing accuracy and speed, managing memory limitations, and adapting to different hardware. Ensuring models remain effective while being optimized for speed can be difficult, as reducing precision or simplifying layers might impact accuracy. Additionally, integrating optimizations across diverse hardware platforms and deploying distributed training setups can add complexity to the process.

Can compiler optimizations work with multiple AI frameworks?

Yes. ONNX Runtime, for example, is designed to be framework-agnostic: it can optimize and run models trained in different frameworks (like PyTorch, TensorFlow, and MXNet) once they are exported to the ONNX format. This flexibility is particularly useful for deploying models across various platforms and hardware types, ensuring they run efficiently regardless of the framework used for training.

Are there risks of accuracy loss with quantization and pruning?

While quantization and pruning can lead to minor accuracy loss, most models retain close-to-original accuracy if optimized properly. Quantization-aware training can help reduce accuracy degradation by adjusting weights during training, while structured pruning can be targeted to remove only low-impact connections. For many applications, the speed and memory benefits of these techniques outweigh the slight accuracy loss.

When is model parallelism preferable over data parallelism?

Model parallelism is preferable for extremely large models that exceed the memory capacity of a single device. The model itself is split across multiple devices, each holding part of the layers or parameters and computing its share of the forward and backward pass, making it ideal for complex, memory-intensive architectures. Data parallelism, by contrast, works well with high-data-volume tasks where identical copies of the model can process different slices of the data independently.

Resources

Research Papers

- “Mixed Precision Training” by Micikevicius et al.

A highly cited paper on using mixed-precision to optimize neural networks, reducing computational cost without sacrificing accuracy. Available on arXiv.

- “MobileNets: Efficient Convolutional Neural Networks for Mobile Vision Applications” by Howard et al.

This paper introduces MobileNet, a neural architecture designed for efficiency on mobile devices, showcasing practical application of optimization techniques. Available on arXiv.

- “DistilBERT, a distilled version of BERT: smaller, faster, cheaper, and lighter” by Sanh et al.

A must-read for understanding model distillation, describing how BERT was distilled into a more efficient model for faster execution. Available on arXiv.