We’ve seen countless examples where AI has made life more efficient. But at what cost?

Many people still wonder: if a machine decides something critical, how can we be sure it’s accurate?

As AI continues to evolve, Perplexity AI is gaining attention. It promises a better balance between machine precision and human oversight, which could be a game-changer for trust.

The Trust Dilemma in AI Accuracy

AI can process data faster than any human could, but speed and accuracy alone aren’t enough. Sure, machines can give us answers, but understanding how they arrive at those answers is crucial. Without transparency, how can users feel confident that an AI’s prediction is fair or correct?

For example, when a loan application is rejected based on an AI model’s decision, it can feel mysterious, even frustrating. Was it due to an error in data? A hidden bias? That’s the trust dilemma we’re facing with AI today.

This is where human oversight becomes essential. Balancing AI accuracy with the judgment and context that humans bring is key to building trust in these systems.
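One common way to put this balance into practice is to let the model handle the cases it is confident about and send everything else to a person. The snippet below is a minimal sketch of that idea, not a description of any real lending system: the `Prediction` class, the `route` function, and the 0.90 threshold are all assumptions made up for illustration.

```python
from dataclasses import dataclass

# Hypothetical cutoff: predictions below this confidence go to a person.
REVIEW_THRESHOLD = 0.90


@dataclass
class Prediction:
    applicant_id: str
    decision: str      # "approve" or "reject"
    confidence: float  # model's probability for its own decision, 0..1


def route(prediction: Prediction, threshold: float = REVIEW_THRESHOLD) -> str:
    """Return "auto" for confident approvals and "human_review" otherwise.

    In this sketch every rejection is reviewed by a person, because a
    rejection is the outcome an applicant is most likely to contest.
    """
    if prediction.decision == "reject":
        return "human_review"
    if prediction.confidence < threshold:
        return "human_review"
    return "auto"


# Made-up predictions for three loan applications.
queue = [
    Prediction("A-001", "approve", 0.97),
    Prediction("A-002", "approve", 0.71),
    Prediction("A-003", "reject", 0.99),
]
routes = {p.applicant_id: route(p) for p in queue}
print(routes)
```

Here only the confident approval is handled automatically. Where to set the threshold, and which decisions always need a reviewer, is a policy choice rather than a technical one.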

Why Human Oversight is Essential in AI

AI can provide data-backed insights, but humans need to ensure these insights align with ethical standards. Human oversight introduces accountability. It’s not just about correcting mistakes; it’s about validating decisions within a broader context.

For instance, in healthcare, AI can predict patient diagnoses or treatment outcomes. But doctors must review these predictions, considering a patient’s history or unique circumstances. Machines can crunch numbers, but they can’t feel empathy or consider nuanced moral dilemmas.

Human oversight helps catch algorithmic biases, which often arise from the data a model is trained on. Without human involvement, we risk perpetuating or amplifying these biases.
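A reviewer looking for this kind of bias often starts with something simple: comparing outcomes across groups. The sketch below computes approval rates per group and the gap between them. The data and group labels are invented for illustration, and a gap on its own does not prove unfairness; it only flags where a human should look more closely.

```python
from collections import defaultdict

# Made-up decisions: (group label, was the application approved?)
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def approval_rates(records):
    """Return the share of approved applications for each group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, was_approved in records:
        total[group] += 1
        approved[group] += int(was_approved)
    return {group: approved[group] / total[group] for group in total}


rates = approval_rates(decisions)
# Difference between the best-treated and worst-treated group.
gap = max(rates.values()) - min(rates.values())
print(rates)
print(f"approval-rate gap: {gap:.2f}")
```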

How Perplexity AI Aims to Enhance Transparency

Enter Perplexity AI, an emerging concept that seeks to improve the interpretability of AI systems. Perplexity measures how well a probabilistic model predicts the outcomes it actually observes: the less “surprised” the model is by what happens, the lower its score. In theory, a lower perplexity score indicates predictions that fit the data more closely, which is a starting point for reliability rather than proof of it.

By emphasizing perplexity, AI developers aim to create models that don’t just give the “right” answers but are also easier to understand. That, in turn, could help humans scrutinize AI decisions and check that they make sense in real-world applications.

This approach is particularly appealing in sectors where trust is paramount, such as legal systems or financial institutions. A transparent AI system that highlights its reasoning process could be a significant step towards bridging the trust gap.

Understanding Perplexity in AI Systems

So, what exactly does perplexity measure in AI? At its core, perplexity is a statistical measure that evaluates how well a predictive model performs. Formally, it is the exponential of the average negative log-probability the model assigned to the outcomes that actually occurred. The lower the perplexity, the more probability the model placed on what really happened.
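For readers who want the formula, the standard definition for a sequence of N outcomes can be written as follows. The symbols are the usual textbook ones, not notation taken from any particular product.

```latex
\mathrm{PPL} = \exp\left( -\frac{1}{N} \sum_{i=1}^{N} \log p(x_i \mid x_{<i}) \right)
```

Here p(x_i | x_{<i}) is the probability the model gave to the i-th outcome, given everything that came before it. As a sanity check, a model that assigns a probability of 0.25 to every outcome has a perplexity of 4, which is the same as guessing uniformly among four options.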

In the context of natural language processing (NLP), for example, perplexity measures how well an AI can predict the next word in a sentence. Lower perplexity means the model assigned higher probability to the words that actually came next, which usually goes along with more coherent, contextually appropriate responses.
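To make the next-word idea concrete, here is a small sketch that computes perplexity from the probabilities a model assigned to each word that actually appeared. The two lists of probabilities are invented for illustration; in practice they would come from a language model.

```python
import math


def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability."""
    if not token_probs:
        raise ValueError("need at least one probability")
    avg_neg_log = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log)


# Hypothetical probabilities two models gave to the words that actually came next.
confident_model = [0.5, 0.5, 0.5, 0.5]
uncertain_model = [0.1, 0.1, 0.1, 0.1]

ppl_confident = perplexity(confident_model)
ppl_uncertain = perplexity(uncertain_model)
print(round(ppl_confident, 2), round(ppl_uncertain, 2))
```

The first model ends up with a perplexity of about 2 and the second with about 10. One way to read those numbers: the first model is as unsure as if it were choosing between two equally likely words, the second as if it were choosing among ten.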

For AI users, this could mean more understandable, predictable, and trustworthy outcomes. But while lower perplexity often correlates with better accuracy, it doesn’t guarantee that the predictions will always be ethical or free from bias. That’s where human oversight comes in.

Perplexity AI vs. Traditional AI Models

When comparing Perplexity AI with traditional AI models, a key difference lies in the transparency of their decision-making processes. Traditional AI models often work like “black boxes,” where their internal workings are hidden and hard to interpret. While they may achieve high accuracy, the logic behind their outcomes is rarely clear, making it tough for users to trust them fully.

| Feature | Traditional AI Models | Perplexity AI |
|---|---|---|
| Transparency | Limited transparency due to complex, black-box models | Increased transparency with clearer reasoning paths |
| Interpretability | Hard to interpret results, especially with deep learning models | Focus on understandable, explainable results |
| Bias Detection | Requires additional tools or human intervention to detect biases | Aims to make biases more visible, with human review still needed to correct them |
| Accuracy | High accuracy, but may suffer in real-world generalization | Aims for both high accuracy and adaptability in various contexts |

In contrast, Perplexity AI focuses on offering more interpretable and explainable results. By improving how clearly an AI system can explain its predictions, Perplexity AI aims to make decision-making processes more transparent to the user. This builds trust, particularly in industries where high-stakes decisions are made.

Traditional models may offer speed, but trust and understandability are where Perplexity AI is working to shift the conversation.

Navigating Ethical Concerns in AI Use

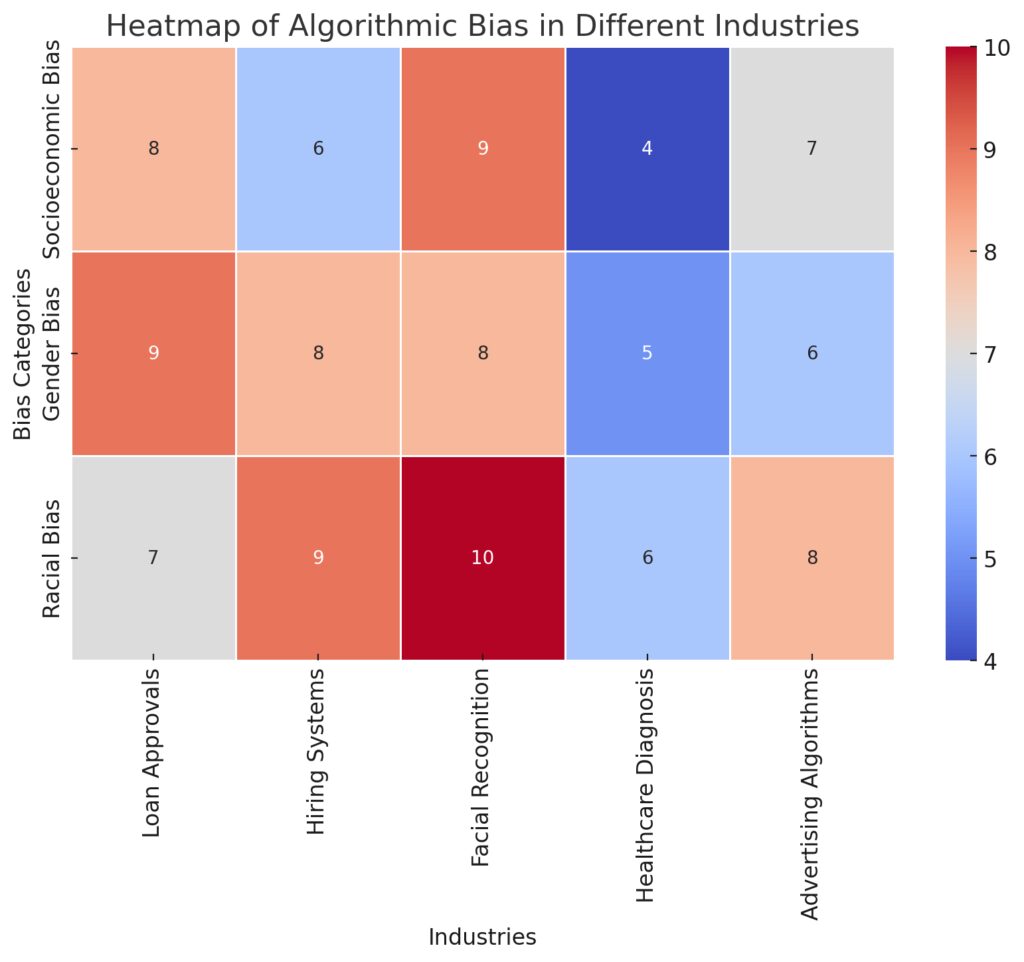

One of the biggest issues facing AI adoption today is its ethical implications. Bias in AI systems can lead to discriminatory practices, affecting everything from job recruitment to criminal sentencing. The data AI uses to “learn” often reflects human biases, and without careful monitoring, these biases can skew outcomes.

This is where human oversight becomes crucial. Although Perplexity AI can enhance understanding of AI decisions, it doesn’t eliminate the need for humans to monitor these processes. Ethical AI use requires accountability and a moral compass, something AI systems, no matter how advanced, can’t inherently possess.

Developers need to think about the moral ramifications of AI and ensure systems are designed with fairness and equity in mind.

Challenges in Balancing Perplexity and Accuracy

There’s a delicate balance to strike between lowering perplexity and maintaining high accuracy. Lower perplexity shows that a model’s probability estimates fit the data more closely, but it doesn’t guarantee flawless accuracy. AI systems, by design, seek patterns in data, and sometimes those patterns don’t align with human ethics or standards.

The risk lies in focusing too heavily on reducing perplexity without ensuring the outcomes serve ethical purposes. This challenge is even greater in industries like law enforcement or healthcare, where a small error can lead to life-altering consequences. Human oversight helps mitigate these risks, ensuring AI predictions are put into context.

The push toward explainability must not come at the cost of losing accuracy in crucial predictions.
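The gap between perplexity and accuracy is easy to see with a toy example. The sketch below scores two hypothetical yes/no classifiers on four cases each; every number is the probability the model gave to the correct answer, and all values are invented for illustration.

```python
import math


def perplexity(probs_of_correct_answer):
    """exp of the average negative log-probability of the correct answers."""
    n = len(probs_of_correct_answer)
    return math.exp(-sum(math.log(p) for p in probs_of_correct_answer) / n)


def accuracy(probs_of_correct_answer):
    """Share of cases where the correct answer got more than half the probability."""
    n = len(probs_of_correct_answer)
    return sum(p > 0.5 for p in probs_of_correct_answer) / n


# Hypothetical models on a two-choice task.
hesitant = [0.51, 0.51, 0.51, 0.51]  # always right, but barely
assured = [0.99, 0.99, 0.99, 0.45]   # very sure three times, wrong once

for name, probs in [("hesitant", hesitant), ("assured", assured)]:
    print(name, f"accuracy={accuracy(probs):.2f}", f"perplexity={perplexity(probs):.2f}")
```

The second model has the lower perplexity even though it gets one case wrong and the first gets none wrong. In a setting where that one case is a patient or a defendant, the better score is little comfort, which is why a human still needs to look at individual decisions.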

Human Bias vs. Algorithmic Bias

It’s important to remember that while we often discuss algorithmic bias, human bias is equally pervasive. AI models, including those using perplexity to improve trustworthiness, learn from data provided by humans. If this data is biased—whether due to historical prejudices, incomplete datasets, or skewed samples—then the AI’s decisions will reflect these biases.

Perplexity AI offers hope in addressing these issues by making the reasoning process clearer, allowing humans to spot and address biases more effectively. However, human oversight is still needed to catch these subtle, often ingrained, biases that even sophisticated AI systems might overlook.

Reducing algorithmic bias starts with tackling human biases during the data collection and model training processes.

Perplexity AI and Accountability in AI

One of the most compelling aspects of Perplexity AI is its potential to improve accountability in AI decision-making. By increasing transparency, perplexity-driven systems provide clearer insights into how decisions are made, making it easier to hold both developers and users accountable.

For instance, in a judicial system where an AI recommends sentencing, it would be essential to understand the AI’s logic. Perplexity-based models could offer insights into which data points influenced the recommendation, making it easier to verify whether the decision was fair and unbiased.
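Perplexity on its own does not say which inputs drove a decision, so showing influence needs a separate technique. One of the simplest is an additive scoring model, where each input’s contribution is its weight times its value. The sketch below applies that idea to the loan example from earlier; the feature names, weights, and applicant values are all hypothetical and not drawn from any real system.

```python
# Hypothetical weights of a linear scoring model (illustration only).
WEIGHTS = {
    "income_to_debt_ratio": 2.0,
    "missed_payments": -1.5,
    "years_of_history": 0.5,
}
BASELINE = -1.0  # score before any feature is considered


def explain(features):
    """Return the total score and each feature's contribution (weight * value)."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BASELINE + sum(contributions.values())
    return score, contributions


# Made-up applicant.
applicant = {"income_to_debt_ratio": 1.5, "missed_payments": 3.0, "years_of_history": 4.0}
score, contributions = explain(applicant)

# Order the inputs from most to least influential, by absolute contribution.
ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
for name, value in ranked:
    print(f"{name}: {value:+.1f}")
print(f"score: {score:+.1f}")
```

A reviewer reading this output can see that missed payments pulled the score down more than anything else pushed it up, and can then ask whether that input was recorded correctly. Real models are rarely this simple, so their explanations are approximations that deserve the same scrutiny as the predictions themselves.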

This added accountability can help instill greater trust in AI systems across sectors like law, finance, and healthcare.

Improving AI Interpretability Through Perplexity

One of the major promises of Perplexity AI is enhancing the interpretability of AI models. AI systems are often criticized for their lack of transparency, leaving users uncertain about how decisions are made. By focusing on reducing perplexity, AI developers aim to create models that can clearly explain their decision-making processes.

This interpretability is critical for professionals who rely on AI for important decisions. For instance, in medicine, a doctor needs to understand how an AI reached a diagnosis before trusting the recommendation. A more transparent AI system offers this clarity, reducing the ambiguity that typically surrounds AI outcomes.

When AI decisions are more understandable, trust naturally increases, making Perplexity AI a promising tool for improving AI usability.

Building Confidence in AI Outputs

Perplexity AI plays a significant role in building confidence in AI-generated outcomes. Many users hesitate to trust AI systems, especially when they make critical predictions about healthcare, finance, or legal matters. This hesitation stems from uncertainty—if users can’t understand why an AI made a particular recommendation, it’s hard to rely on it.

By improving how AI systems explain their predictions, perplexity can help bridge the trust gap. Users gain more confidence in AI decisions when they can trace the logic behind the output. This trust is especially important in high-stakes industries, where decisions can affect lives and livelihoods.

Increased confidence in AI will accelerate its adoption, creating a future where AI and humans can collaborate effectively.

Will Perplexity AI Really Solve the Trust Problem?

Despite its potential, the question remains: will Perplexity AI fully solve the trust issue in AI? While it offers a pathway toward greater transparency and accuracy, it doesn’t entirely eliminate the need for human oversight. Even the most advanced AI models can make mistakes or overlook key ethical concerns.

Additionally, perplexity focuses primarily on prediction quality, not the moral or social implications of AI decisions. Trust in AI cannot rest solely on mathematical calculations; it also requires ethical governance, accountability, and continual human involvement to guide these technologies responsibly.

So, while Perplexity AI is a significant step forward, solving the trust problem will likely require a combination of improved technology and thoughtful, ethical human oversight.

The Future of AI Governance and Human Oversight

The evolution of AI governance will likely focus on maintaining the delicate balance between machine efficiency and human accountability. As AI systems like Perplexity AI become more sophisticated, they will play a larger role in critical decision-making processes across industries. However, ensuring these systems remain transparent and ethical will require clear guidelines and regulatory frameworks.

Governments and organizations need to set policies that demand transparency in AI systems, including standards for explainability and human review. These policies must ensure AI tools serve the common good without exploiting vulnerabilities in data or amplifying biases.

Moving forward, AI governance will hinge on human oversight, with models like Perplexity AI helping provide the transparency necessary for this collaboration.

Can We Trust AI Without Human Intervention?

At this stage, the idea of trusting AI without any human intervention seems risky. While AI models are improving in accuracy and interpretability, they still lack the capacity to fully understand the complexities of human ethics and values. Machines may excel in pattern recognition and prediction, but they cannot provide the same level of judgment that humans can.

Relying entirely on Perplexity AI or any other advanced system, without incorporating human review, risks overlooking important contextual factors. Trustworthy AI systems will likely always require some level of human intervention, ensuring that decisions align with broader social and ethical standards.

As AI evolves, the role of human oversight will remain crucial in guaranteeing that we can trust these systems in the long term.

FAQs

What is Perplexity AI?

Perplexity AI refers to an approach in AI development that focuses on improving the interpretability and transparency of predictions made by AI systems. Perplexity itself is a statistical measure of how well a model predicts the outcomes it observes, and it is most commonly used to evaluate language models in natural language processing (NLP). The goal is to create AI systems that not only provide accurate answers but also make the reasoning behind their predictions easier to understand.

Why is human oversight necessary in AI?

While AI systems are great at processing data and making predictions, they lack the ability to fully grasp ethical considerations or nuanced judgments. Human oversight ensures that AI decisions are fair, accountable, and align with human values, helping to catch mistakes, biases, or unintended consequences in AI-driven outcomes.

How does Perplexity AI help with AI trust issues?

Perplexity AI improves trust by making AI models more transparent. It allows users to understand how an AI arrives at its conclusions, reducing the “black box” problem common in traditional AI models. This increased interpretability helps users feel more confident in AI decisions, particularly in high-stakes sectors like healthcare and finance.

What is the relationship between perplexity and AI accuracy?

Perplexity measures how much probability an AI model assigns to the outcomes that actually occur. A lower perplexity score generally goes hand in hand with higher accuracy, but the two are not the same thing: a model can be right while unsure, or confidently wrong. A low score also says nothing about whether the predictions are ethically sound. That’s why balancing perplexity with ethical oversight is essential.

Can AI function without human oversight?

AI systems can perform many tasks without direct human intervention, but when it comes to high-stakes decisions or ethical concerns, human oversight is crucial. Even the most advanced AI models can make errors or propagate biases from their training data. Human involvement ensures accountability and helps mitigate unintended consequences.

How does Perplexity AI differ from traditional AI models?

Traditional AI models often function as “black boxes,” where the internal decision-making process is opaque, making it hard for users to understand how outcomes are generated. Perplexity AI, on the other hand, focuses on transparency and explainability. It not only provides predictions but also offers insight into the reasoning behind them. This makes Perplexity AI more interpretable, which is crucial for building trust in sectors where accuracy and fairness are critical.

What are the ethical concerns around AI without human oversight?

When AI operates without human oversight, there are significant risks of bias, discrimination, and ethical lapses. AI systems often inherit biases from the data they are trained on, which can result in unfair outcomes. Without human involvement, these biases may go unchecked, leading to unintended consequences in areas like hiring, lending, or law enforcement. Human oversight ensures that decisions are reviewed through an ethical lens.

Can Perplexity AI eliminate biases in AI systems?

While Perplexity AI can enhance transparency and help users identify biases, it cannot fully eliminate them on its own. Biases often originate from the data that AI models are trained on. Human intervention is still necessary to address these biases, ensure fairness, and make adjustments to training data or algorithms as needed. Perplexity AI helps make these biases more visible, but human oversight remains essential for correcting them.

How does Perplexity AI contribute to AI accountability?

Perplexity AI improves accountability by making AI’s decision-making processes more transparent. When users can see how and why an AI arrived at a certain conclusion, it becomes easier to hold the system—and its developers—accountable for any errors or biases in its output. This increased visibility into the algorithm’s logic fosters greater responsibility, ensuring that AI systems are not used blindly but are scrutinized for fairness and accuracy.

In which industries is Perplexity AI most useful?

Perplexity AI is particularly valuable in industries where trust and accuracy are paramount. In healthcare, it can help doctors understand how AI reaches diagnostic conclusions. In finance, it offers transparency in lending decisions. In legal applications, Perplexity AI can explain how sentencing recommendations are made. By improving interpretability, Perplexity AI is useful in any sector that requires clear, reliable, and ethically sound decisions.

What is the future of AI with Perplexity AI integration?

As Perplexity AI continues to develop, it’s likely to play a major role in increasing the adoption of AI across industries. By making AI systems more understandable and accountable, it can help overcome the current trust gap. Future AI models will likely place a greater emphasis on transparency, ethical decision-making, and human oversight, fostering a collaboration where machines and humans work together more effectively.

How does Perplexity AI impact AI governance?

With Perplexity AI, governance becomes easier to implement because the AI system’s processes are more transparent. Clear explanations of how AI arrives at decisions can help regulators and policymakers set guidelines for AI use. This contributes to ethical AI governance, where both AI developers and users are held responsible for the decisions their systems make. It’s a step forward in ensuring that AI aligns with broader social values and legal frameworks.
