Retrieval-augmented generation (RAG) models have become game changers for open-domain question-answering (Q&A).

By combining generative language models with a robust retrieval mechanism, RAG offers an effective way to provide accurate, real-time answers from vast datasets. Here’s how you can harness this innovative approach.

How RAG Transforms Open-Domain Q&A

Traditional Q&A Limitations

Traditional Q&A systems, whether based on retrieval or generative AI, often falter when faced with expansive datasets or rapidly evolving information. Retrieval systems struggle to synthesize coherent answers, while generative models may hallucinate facts due to incomplete knowledge.

RAG’s Unified Approach

RAG models resolve these issues by integrating retrieval mechanisms with generative processing. Here’s how they do it:

- The retrieval module fetches relevant documents, ensuring input data is current and precise.

- The generation module uses this data to synthesize a well-structured, human-like response.

In this architecture, retrieved documents are passed to the generator with every query, so each answer is grounded in the current contents of the knowledge base rather than only in what the model memorized during training.
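As a rough illustration of the retrieve-then-generate flow described above, the sketch below wires a toy keyword-overlap retriever to a placeholder generator. Every name here (`CORPUS`, `tokenize`, `retrieve`, `generate`, `answer`) is hypothetical, and `generate` only stitches the context into a string where a real system would call a language model.

```python
from typing import List, Set

# Tiny illustrative knowledge base (made-up sample sentences).
CORPUS = [
    "BM25 is a ranking function used for keyword search.",
    "Dense retrieval encodes queries and documents as vectors.",
    "Paris is the capital of France.",
]

def tokenize(text: str) -> Set[str]:
    """Lowercase the text and strip basic punctuation from each word."""
    return {w.strip(".,?!").lower() for w in text.split()}

def retrieve(query: str, corpus: List[str], k: int = 2) -> List[str]:
    """Rank documents by the number of words they share with the query."""
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def generate(query: str, context: List[str]) -> str:
    """Placeholder for a language model call: echoes the grounding context."""
    return f"Q: {query}\nContext: {' '.join(context)}"

def answer(query: str) -> str:
    """Retrieve first, then generate from what was retrieved."""
    return generate(query, retrieve(query, CORPUS))
```

Calling `answer("What is the capital of France?")` places the sentence about Paris in the context, because it shares the most words with the question.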

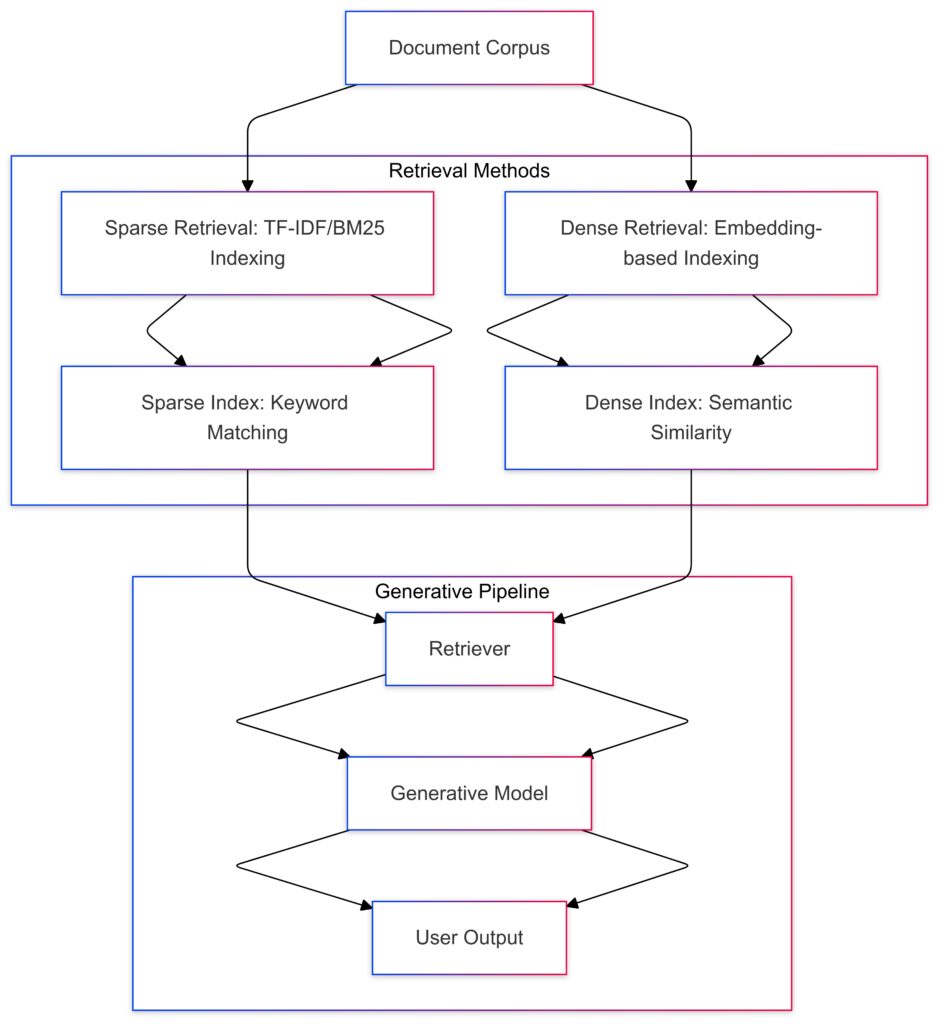

The Architecture of RAG Models

Key Components

A typical RAG pipeline moves through the following stages:

- Sparse retrieval: Uses techniques like TF-IDF or BM25 for keyword-based indexing, and outputs a sparse index suited to exact keyword matching.

- Dense retrieval: Employs embeddings for semantic indexing, and outputs a dense index for finding semantically similar documents.

- Retriever: Combines results from both the sparse and dense indices.

- Generative model: Processes the retrieved documents to generate a response.

- User output: Delivers the final response to the user.

Of these, two components do most of the work: the document retriever and the generative model.
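The article does not say how results from the sparse and dense indices are combined. One widely used option is reciprocal rank fusion (RRF), sketched below as an assumption rather than as the method behind any particular RAG system. The document IDs and the `reciprocal_rank_fusion` helper are illustrative.

```python
from collections import defaultdict
from typing import Dict, List

def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Merge several ranked lists of document IDs into one.

    Each document earns 1 / (k + rank) from every list it appears in,
    with rank starting at 1; k = 60 is a commonly used default.
    """
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)

sparse_hits = ["d1", "d2", "d3"]  # e.g. from a BM25 index
dense_hits = ["d3", "d1", "d4"]   # e.g. from an embedding index
fused = reciprocal_rank_fusion([sparse_hits, dense_hits])
```

Documents that rank well in both lists (`d1` and `d3` here) rise above those found by only one retriever. RRF needs only ranks, so the sparse and dense scores do not have to be on the same scale.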

1. Document Retriever

The retriever identifies and ranks relevant documents from a knowledge base. It can employ:

- Sparse retrieval techniques, such as BM25, for keyword-based matching.

- Dense retrieval techniques, like embeddings from BERT or DPR, for semantic search.

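The choice between sparse and dense retrieval depends on the data’s complexity and the desired speed. Sparse methods are fast and excel at exact term matches, while dense methods can match paraphrases and synonyms at the cost of running an embedding model and maintaining a vector index. Dense retrieval is often preferred for modern applications because it handles queries whose wording differs from the source documents.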
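To make the sparse side concrete, here is a minimal BM25 scorer in plain Python. It is a sketch under stated assumptions: whitespace tokenization, the common defaults `k1 = 1.5` and `b = 0.75`, and a smoothed IDF variant that stays non-negative. Production systems would normally rely on a search engine or a dedicated library instead.

```python
import math
from collections import Counter
from typing import List

def bm25_scores(query: List[str], docs: List[List[str]],
                k1: float = 1.5, b: float = 0.75) -> List[float]:
    """Score each tokenized document against the query with Okapi BM25."""
    n_docs = len(docs)
    avg_len = sum(len(d) for d in docs) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for d in docs for term in set(d))
    scores = []
    for doc in docs:
        tf = Counter(doc)
        score = 0.0
        for term in query:
            if term not in tf:
                continue
            # Smoothed IDF: rarer terms contribute more to the score.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # Length normalization: long documents are penalized via b.
            norm = tf[term] + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

docs = [
    "the cat sat on the mat".split(),
    "dogs chase the cat".split(),
    "stock markets fell today".split(),
]
scores = bm25_scores("cat mat".split(), docs)
```

The first document matches both query terms and scores highest, the second matches only "cat", and the third scores zero. A dense retriever would replace the term matching with a similarity measure between embedding vectors.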

2. Generative Model

This module, often powered by transformer-based architectures (like GPT or T5), generates coherent answers by leveraging retrieved content. Key features include:

- Conditional generation: Outputs are tailored to the retrieved context.

- Multi-turn Q&A: Models retain conversational history for nuanced dialogue.
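Both features above usually come down to how the prompt is assembled. The sketch below shows one possible layout; the `build_prompt` helper, the instruction wording, and the `max_turns` cutoff are assumptions made for illustration, not a format required by any specific model.

```python
from typing import List, Tuple

def build_prompt(question: str, passages: List[str],
                 history: List[Tuple[str, str]], max_turns: int = 3) -> str:
    """Assemble a prompt from retrieved passages and recent dialogue turns."""
    # Number the passages so the model (and the reader) can refer to them.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    # Keep only the most recent turns so the prompt stays within budget.
    recent = history[-max_turns:] if max_turns > 0 else []
    turns = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in recent)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Conversation so far:\n{turns}\n\n"
        f"User: {question}\nAssistant:"
    )

prompt = build_prompt(
    "And what is its population?",
    ["Paris is the capital of France."],
    [("What is the capital of France?", "Paris.")],
)
```

Conditioning comes from the `Context` section, and multi-turn behavior comes from replaying earlier turns, which lets the model resolve "its" in the follow-up question.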

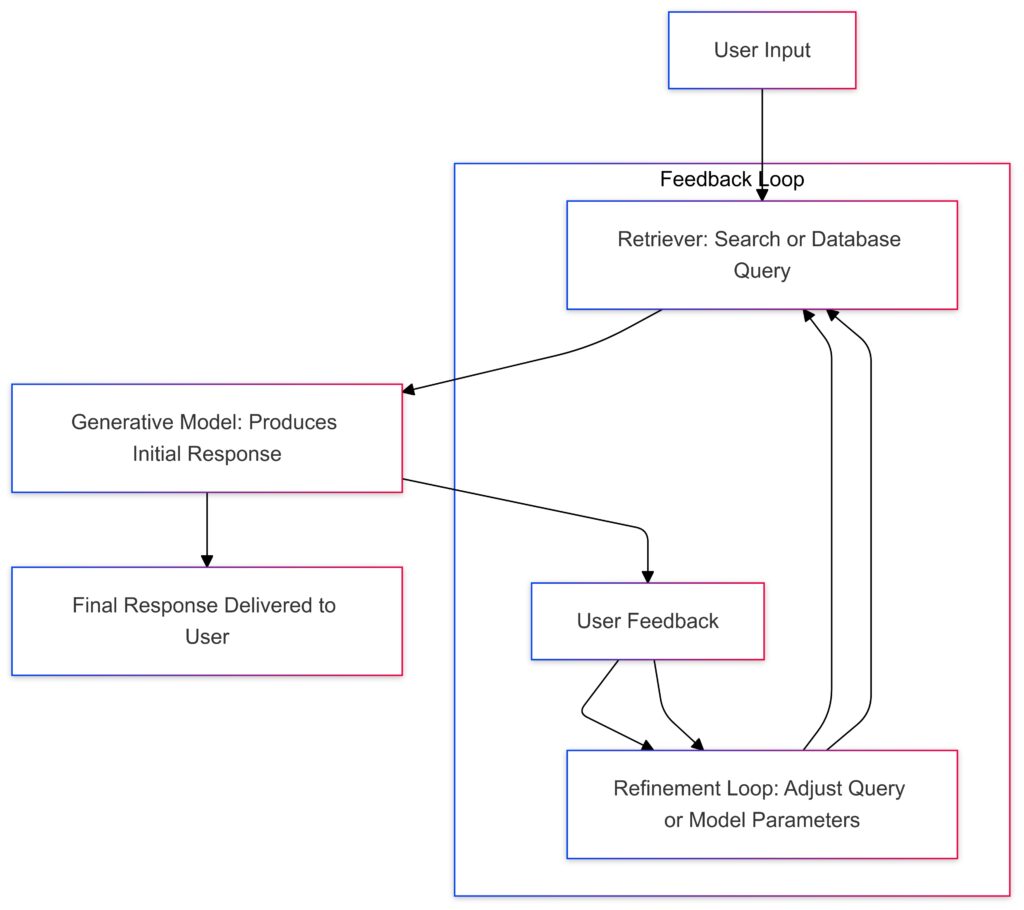

When user feedback is part of the system, the flow runs as follows:

- Retriever: Fetches relevant data from a search index or database.

- Generative model: Uses the retrieved data to produce an initial response.

- User feedback: The user reviews the response and provides feedback for improvement.

- Refinement loop: Based on that feedback, the query or model parameters are adjusted, leading to further iterations.

- Final response: After refinement, the polished output is delivered to the user.

Interaction Between Modules

RAG employs either:

- End-to-End Training: The retriever and generator are trained together to maximize synergy.

- Two-Stage Systems: Each module is optimized independently for flexibility and fine-tuning.
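One common way to formalize the difference is the sequence-level objective from the original RAG paper, shown here as a sketch. The symbols are not from this article: $x$ is the question, $y$ the answer, $z$ a retrieved document, $p_\eta$ the retriever, and $p_\theta$ the generator.

```latex
p(y \mid x) \;\approx\; \sum_{z \,\in\, \operatorname{top-}k\left(p_\eta(\cdot \mid x)\right)}
    p_\eta(z \mid x)\; p_\theta(y \mid x, z),
\qquad
\mathcal{L}(\eta, \theta) = -\log p(y \mid x)
```

In end-to-end training, the loss $\mathcal{L}$ is minimized with respect to both $\eta$ and $\theta$, so the retriever learns which documents help the generator. In a two-stage system, $\eta$ is trained or chosen separately and held fixed while only $\theta$ is tuned.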

Optimizing RAG Models for Your Use Case

Preprocessing Your Knowledge Base

To get the most out of RAG, prepare your data with care:

- Clean and structure documents.

- Index using advanced tools like FAISS for faster vector search.

- Prioritize high-quality, unbiased information sources.
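Part of structuring documents is splitting them into passages small enough to embed and retrieve. The following sketch shows overlapping word-window chunking; the `chunk_words` helper and its default sizes are illustrative assumptions. Embedding the chunks and adding them to a vector index such as FAISS is left out because it depends on the embedding model you choose.

```python
from typing import List

def chunk_words(text: str, size: int = 50, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words, each sharing `overlap`
    words with the previous window so boundary sentences keep some context."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")
    words = text.split()  # also collapses runs of whitespace
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks

sample = " ".join(str(i) for i in range(12))
pieces = chunk_words(sample, size=5, overlap=2)
```

With `size=5` and `overlap=2`, each chunk starts three words after the previous one, so the last two words of a chunk reappear at the start of the next.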

Fine-Tuning Generative Models

Adapting the generative model to your needs requires domain-specific training:

- Use labeled datasets for Q&A.

- Include edge cases to improve robustness.

- Regularly evaluate using BLEU or ROUGE scores, which measure n-gram overlap between generated answers and reference answers.
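For intuition about what overlap metrics measure, here is a simplified ROUGE-1 style F1 score. It is a sketch, not the official scorer: it assumes lowercase whitespace tokenization and skips stemming and punctuation handling, so its numbers will differ from those of established evaluation packages.

```python
from collections import Counter

def rouge1_f1(candidate: str, reference: str) -> float:
    """Unigram-overlap F1 between a generated answer and a reference answer."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection clips each word to its minimum count in both texts.
    overlap = sum((cand & ref).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```

A candidate that copies half of the reference exactly gets perfect precision but only 0.5 recall, which is why the two are combined into F1. Overlap scores reward similar wording rather than factual correctness, so they work best alongside human review.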


Overcoming Challenges in RAG Implementation

Managing Computational Costs

RAG models demand significant resources for retrieval and generation. Optimize by:

- Caching frequently asked questions.

- Using hybrid retrieval, for example a fast sparse pass that narrows the candidate set before a costlier dense ranking step.

Ensuring Answer Accuracy

Poorly retrieved data undermines the entire system. Avoid this by:

- Implementing quality control metrics.

- Regularly updating the knowledge base.
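One simple quality control is a relevance threshold: if no retrieved passage scores high enough, the system declines to answer instead of generating from weak context. The sketch below assumes retrieval scores normalized to the range 0 to 1; the 0.5 cutoff and both helper names are arbitrary illustrations that would need tuning on real data.

```python
from typing import List, Tuple

def filter_passages(scored: List[Tuple[str, float]], min_score: float = 0.5,
                    max_passages: int = 3) -> List[str]:
    """Keep the highest-scoring passages that clear the relevance threshold."""
    kept = [(p, s) for p, s in scored if s >= min_score]
    kept.sort(key=lambda item: item[1], reverse=True)
    return [p for p, _ in kept[:max_passages]]

def answer_or_abstain(scored: List[Tuple[str, float]]) -> str:
    """Generate only when at least one passage is relevant enough."""
    passages = filter_passages(scored)
    if not passages:
        # Abstaining is safer than generating from irrelevant context.
        return "I could not find a reliable source for that."
    # Placeholder for the real generation step.
    return "Based on: " + " | ".join(passages)
```

Logging how often the system abstains also gives you a metric to watch: a rising abstention rate suggests the knowledge base no longer covers what users ask.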

Future Trends in RAG for Open-Domain Q&A

Advances in Retrieval Methods

Expect to see better algorithms for semantic search and improved relevance-ranking techniques, enabling faster, more accurate retrieval.

Generative Model Improvements

The future holds promise for models with better contextual understanding and more compact architectures, making RAG systems accessible to smaller organizations.

Incorporating these trends will help you stay ahead in open-domain Q&A innovation.

Deploying RAG Models for Open-Domain Q&A

Choosing the Right Framework

To deploy RAG models, start by selecting a framework that aligns with your goals and resources. Popular options include:

- Hugging Face Transformers: Offers pre-trained models and tools for customization.

- OpenAI API: Provides access to cutting-edge generative models.

- LangChain: Ideal for building retrieval-augmented pipelines with minimal setup.

When deciding, consider factors like scalability, cost, and compatibility with existing infrastructure.

Integrating RAG into Your Workflow

RAG can be adapted for various workflows, such as:

- Embedding in chatbots for customer service.

- Incorporating into search engines for enhanced query understanding.

- Using as a backend for knowledge discovery tools in research-heavy fields.

For seamless integration, ensure robust APIs and real-time synchronization with your knowledge database.

Monitoring and Maintenance

Deploying the model is just the beginning. Continuous monitoring is critical to maintaining high performance.

Focus on:

- Feedback loops: Gather user inputs to refine retrieval and generation accuracy.

- Regular retraining: Update the model with new data to avoid knowledge gaps.

- Error analysis: Identify failure cases to improve responses.

This ongoing process ensures your system evolves with your users’ needs.

Enhancing User Experience with RAG

Personalization in Responses

RAG models can leverage user context to provide tailored answers. For example:

- Customize responses based on user history or preferences.

- Dynamically prioritize sources most relevant to the user’s query.

Personalization builds trust and engagement by delivering more meaningful interactions.
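Source prioritization can be sketched as a reranking step that boosts hits from sources a user tends to prefer. The hit fields (`id`, `source`, `score`) and the per-user `source_weights` mapping below are hypothetical; how such preferences are learned or stored is outside the scope of this example.

```python
from typing import Dict, List

def rerank_for_user(hits: List[Dict], source_weights: Dict[str, float]) -> List[Dict]:
    """Reorder hits, multiplying each score by (1 + the user's source weight)."""
    def adjusted(hit: Dict) -> float:
        return hit["score"] * (1.0 + source_weights.get(hit["source"], 0.0))
    return sorted(hits, key=adjusted, reverse=True)

hits = [
    {"id": "a", "source": "blog", "score": 0.80},
    {"id": "b", "source": "docs", "score": 0.70},
]
# This user has historically favored official documentation.
personalized = rerank_for_user(hits, {"docs": 0.25})
```

Here the documentation hit overtakes the blog post because its adjusted score is 0.875 against 0.80. Keeping the boost multiplicative and small means personalization can break near-ties without burying clearly more relevant results.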

Making the Interface Intuitive

An intuitive interface can make or break user experience.

- Use clear prompts or suggestions to guide user queries.

- Offer follow-up question options for deeper exploration.

- Provide transparency by citing sources for generated answers.

A polished interface helps bridge the gap between complex AI systems and end-users.
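Citing sources is straightforward once the retriever returns metadata along with each passage. This sketch assumes each source is a dictionary with `title` and `url` keys, which is an illustrative shape and not a standard; the URLs are placeholders.

```python
from typing import Dict, List

def format_with_citations(answer: str, sources: List[Dict[str, str]]) -> str:
    """Append a numbered source list to a generated answer."""
    if not sources:
        return answer
    lines = [f"[{i}] {s['title']} ({s['url']})"
             for i, s in enumerate(sources, start=1)]
    return answer + "\n\nSources:\n" + "\n".join(lines)

cited = format_with_citations(
    "Paris is the capital of France.",
    [{"title": "Country facts", "url": "https://example.com/france"}],
)
```

Showing the numbered list next to the answer lets users check a claim against the passage it came from.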

Multi-Language Support

For global applications, multilingual capabilities are a must.

- Train models with diverse datasets to support multiple languages.

- Implement language detection to route queries seamlessly.

- Incorporate localization to cater to cultural nuances.

This expands your model’s usability across regions and demographics.

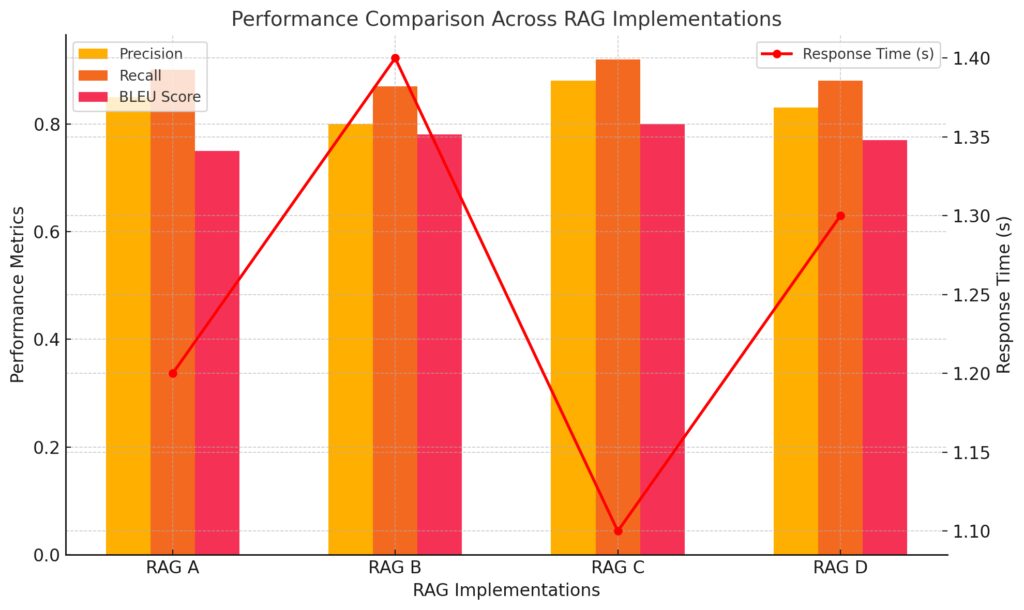

Evaluating RAG Model Performance

Key Metrics for Success

To ensure your RAG model is delivering value, track these metrics:

- Precision and recall: Precision is the share of retrieved documents that are relevant; recall is the share of relevant documents that were retrieved.

- Response coherence: Evaluate how well the generated answers align with the query.

- User satisfaction scores: Gather feedback to assess real-world effectiveness.

Combining quantitative metrics with qualitative feedback offers a comprehensive picture of performance.
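The retrieval metrics above are easy to compute once you have a set of queries labeled with their relevant documents. A minimal sketch for a single query, assuming unique document IDs and a hypothetical gold set:

```python
from typing import List, Set, Tuple

def precision_recall_at_k(retrieved: List[str], relevant: Set[str],
                          k: int) -> Tuple[float, float]:
    """Precision@k and recall@k for one query, given the gold relevant IDs."""
    top_k = retrieved[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    # Divide by the number of results actually returned, which may be below k.
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall

p, r = precision_recall_at_k(["d1", "d7", "d3", "d9"], {"d1", "d3", "d5"}, k=3)
```

In this example two of the top three results are relevant and two of the three relevant documents were found, so both values are 2/3. Averaging these numbers over a full query set gives the system-level figures.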

Figure: precision, recall, and BLEU shown as bar charts; response time (in seconds) shown as a line graph.

Benchmarking Against Industry Standards

Compare your RAG system’s results to benchmarks from similar models. Popular datasets for evaluation include:

- Natural Questions (NQ) for open-domain Q&A.

- MS MARCO for document retrieval tasks.

Staying competitive requires regular benchmarking to measure progress and identify improvement areas.

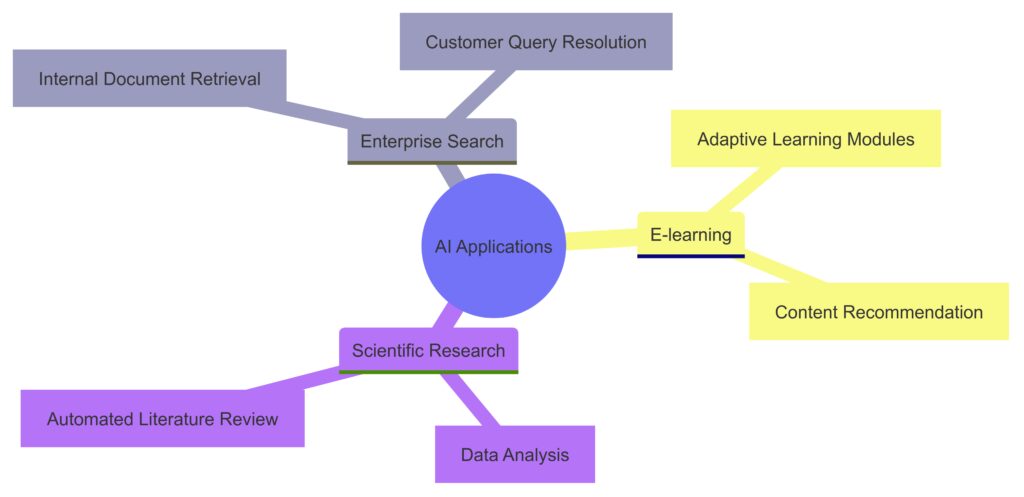

Real-World Applications

Enterprise Search

RAG enhances enterprise search engines by delivering precise answers instead of overwhelming users with document lists. Examples include:

- Legal Research: Synthesizing case law summaries.

- Corporate Knowledge: Answering internal employee queries based on HR or operational policies.

Education and Training

In e-learning platforms, RAG models can act as intelligent tutors, adapting answers based on the student’s level of understanding.

Scientific Research

RAG accelerates literature reviews by summarizing relevant studies, saving researchers hours of manual work.

Application areas at a glance:

- Enterprise search: internal document retrieval (efficiently locating internal resources) and customer query resolution (answering customer questions using indexed knowledge bases).

- E-learning: adaptive learning modules (tailored educational experiences based on learner progress) and content recommendation (suggesting personalized study materials).

- Scientific research: data analysis (streamlining the processing of experimental data) and automated literature review (quickly summarizing relevant academic publications).

Pushing the Boundaries with RAG

Integrating Advanced Retrieval Techniques

As retrieval technology evolves, new methods like neural retrieval (leveraging deep learning for semantic understanding) can drastically improve precision. Explore these advancements to stay ahead.

Exploring Beyond Text

Current RAG implementations focus on text, but there’s untapped potential in combining multimodal capabilities:

- Images and videos for richer context.

- Audio transcripts for domains like customer support or legal documentation.

This can unlock new dimensions in Q&A systems.

Incorporating these cutting-edge approaches ensures your RAG model continues to thrive in open-domain environments.

Future Directions

Multimodal RAG Systems

Future advancements may include combining text with images, videos, and audio, enabling richer context for Q&A systems.

Federated RAG Models

Decentralized RAG systems could process private datasets locally, ensuring data privacy while maintaining retrieval accuracy.

Continuous Learning Frameworks

Integrating online learning will allow RAG systems to adapt in real-time, keeping pace with evolving user queries and knowledge bases.

In summary, RAG improves open-domain Q&A by grounding generated answers in retrieved documents, which makes systems more precise, scalable, and adaptable. Whether for enterprise applications, education, or research, investing in RAG helps you stay ahead in delivering knowledge solutions.

Let’s explore how you can tailor these strategies to your specific use case!

FAQs

How does RAG improve accuracy in open-domain Q&A systems?

By using retrieval to pull up-to-date information and generation to create fluent answers, RAG provides responses based on the most relevant, accurate data available. It retrieves external knowledge in real-time, reducing inaccuracies and hallucinations that generative-only models sometimes produce.

What makes RAG different from other Q&A models?

Unlike other models that may only retrieve information or generate answers from a fixed knowledge base, RAG performs both tasks. It first retrieves relevant documents and then processes this data to generate more insightful, comprehensive answers. This fusion enables RAG to tackle open-domain questions more effectively.

Can RAG access real-time information?

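Yes! RAG can access real-time knowledge by retrieving documents from live sources like websites or updated knowledge bases. Answers are only as fresh as the sources being searched, so the index has to be updated as the underlying information changes. This is particularly useful for industries where information changes frequently, such as news, technology, or healthcare.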

What are the limitations of RAG in Q&A systems?

Despite its strengths, RAG faces challenges such as the quality of retrieved documents and computational cost. If the external data sources are biased or incorrect, the generated answers can still be flawed. Also, RAG’s dual-process system (retrieval + generation) requires more processing power, which can slow down performance in real-time applications.

How does training a RAG model differ from training other AI models?

RAG models require both retrieval training and generation training. The retrieval component must be trained to identify the most relevant documents, while the generative model is trained to process that information into coherent answers. This dual-training approach ensures that RAG can handle complex queries from open domains effectively.

What industries can benefit from using RAG?

RAG has a broad range of applications across industries like:

- Customer support: Offering accurate, context-aware responses in real-time.

- Healthcare: Providing up-to-date medical information for patients and practitioners.

- Education: Assisting students with personalized learning and in-depth answers.

- Legal services: Helping lawyers find relevant case law and statutory documents quickly.

What is the role of retrieval in RAG?

The retrieval step in RAG involves searching external databases or knowledge sources to find the most relevant documents related to the question. These documents are then used as the foundation for generating the final response, ensuring that the answer is based on real, current data.

Does RAG solve the problem of hallucinations in generative models?

RAG reduces hallucinations by grounding the generation process in real documents retrieved during the first step. However, it’s not entirely immune to hallucinations, especially if the retrieved documents are not fully aligned with the query. Overall, though, its retrieval-based approach makes it more reliable than purely generative models on questions that the knowledge base covers.

How can RAG be improved in the future?

Future improvements for RAG may include:

- Enhancing retrieval accuracy through better search algorithms.

- Reducing computational costs to make it faster and more scalable.

- Developing domain-specific RAG models for niche industries.

- Creating multilingual RAG systems for global applications.

How does RAG combine with other technologies?

RAG can be integrated with technologies like knowledge graphs, neural networks, and even blockchain to further enhance accuracy, transparency, and reliability. Combining these tools allows RAG to retrieve structured, interconnected information and ensure more trustworthy answers.

Can RAG be used for personal assistants and chatbots?

Absolutely! RAG is particularly well-suited for AI-driven personal assistants and chatbots due to its ability to provide accurate, up-to-date answers in real-time. It ensures that users receive relevant, conversational responses, making interactions feel more natural.

What are the future trends in RAG technology?

In the future, expect to see more industry-specific RAG models, enhanced retrieval capabilities, and faster processing times. Additionally, multilingual support will expand RAG’s utility, making it a go-to solution for global organizations that need to handle diverse queries in multiple languages.

How does RAG help in reducing bias?

While RAG can still inherit biases from the documents it retrieves, developers can address this issue by curating high-quality, balanced corpora. Future improvements in retrieval filtering and bias-detection algorithms will further reduce the risk of biased outputs.