What Is Quantization in Generative AI?

Simplifying Deep Learning Without Losing Its Soul

Quantization is a technique that reduces the numerical precision of a neural network’s weights and activations, typically by converting 32-bit floating-point values to 8-bit integers. It helps shrink model size and speed up inference, especially on edge devices.
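To make the float-to-integer conversion concrete, here is a minimal sketch of symmetric per-tensor INT8 quantization. The symmetric scheme, the function names, and the sample weights are assumptions chosen for illustration; real toolkits offer several variants (asymmetric, per-channel, and others).

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0   # float value of one integer step
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Map the integers back to approximate float values."""
    return [qi * scale for qi in q]

weights = [0.82, -1.27, 0.003, 0.41, -0.66]   # hypothetical FP32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q, scale, max_error)
```

Each restored value lands within half a quantization step of the original, which is the rounding error the rest of this article is concerned with.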

However, there’s a tradeoff—accuracy can drop. In generative AI, where quality is everything, even small degradations in model output are noticeable.

Why It Matters in Modern AI Workloads

Generative models like transformers and diffusion networks have massive compute needs. Quantization promises to make these systems more deployable—if it can be done without harming performance.

But for these models, it’s not that simple.

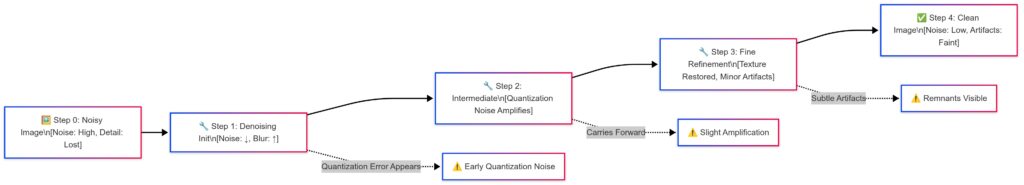

Why Diffusion Models Are Quantization Headaches

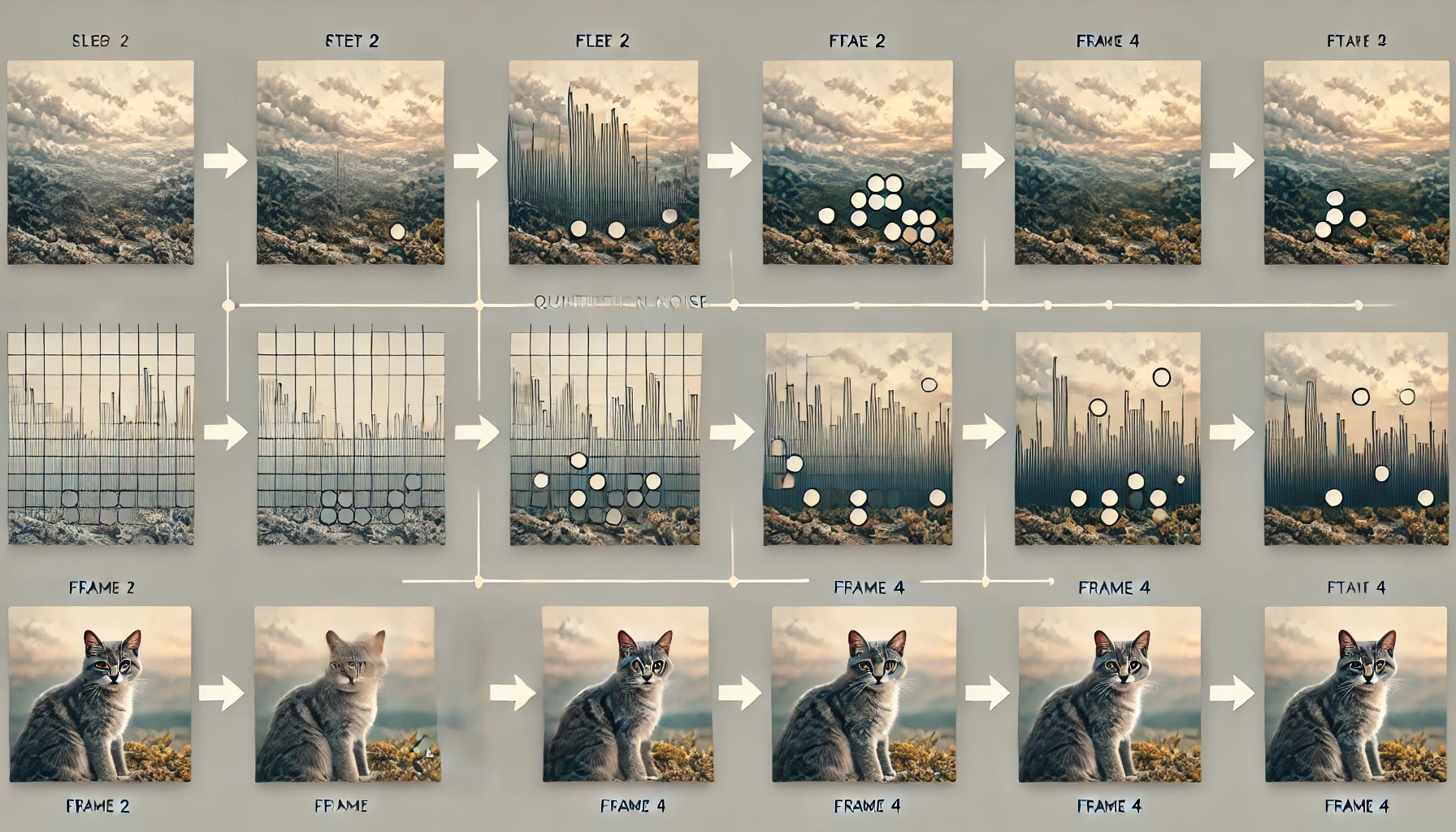

Complex Timesteps & Continuous Noise

Diffusion models like Stable Diffusion or DALL·E 2 use a step-by-step denoising process that’s highly sensitive to numerical changes. Even slight quantization errors in early steps can snowball into major quality drops later.

Their dependency on precise, smooth transitions makes them more vulnerable than classification models.
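The snowball effect can be sketched with a toy loop. This is not a real diffusion sampler: the update rule, the target value, and the quantization step are all made-up stand-ins, chosen only to show how small per-step rounding errors add up over many iterations.

```python
def quantize(value, step):
    """Round a value to the nearest multiple of `step` (uniform quantizer)."""
    return round(value / step) * step

def denoise(steps, step_size=None):
    """Toy iterative refinement: move x toward `target` a little each step.

    If `step_size` is given, the predicted update is quantized before use.
    """
    x, target = 0.0, 1.0
    for _ in range(steps):
        update = 0.1 * (target - x)      # stand-in for the model's prediction
        if step_size is not None:
            update = quantize(update, step_size)
        x += update
    return x

full = denoise(50)
coarse = denoise(50, step_size=0.05)
gap = abs(full - coarse)
print(f"full precision: {full:.4f}, quantized: {coarse:.4f}, gap: {gap:.4f}")
```

No single step is off by more than half the quantization step (0.025 here), yet the quantized run stalls well short of the target because small updates round to zero. The final gap is several times larger than any individual rounding error.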

Distribution Mismatch Worsens the Problem

Post-quantization, the distribution of noise predictions or timestep embeddings can shift. This causes instability because the model was trained on high-precision inputs and can’t generalize well with quantized representations.

Did You Know?

Most quantization-friendly models are feed-forward or classification-focused. Diffusion models break the mold with their iterative and noise-sensitive architecture.

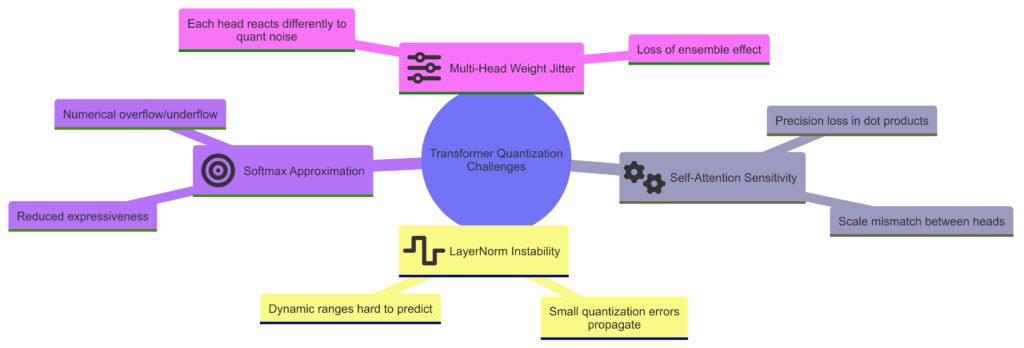

Transformers Resist Quantization for Subtle Reasons

Attention Is Sensitive to Noise

Self-attention, the core of transformers, relies on softmax and matrix multiplication, and each transformer block wraps it in layer normalization. All three can degrade sharply when precision is reduced.

Each head in multi-head attention relies on fine-grained weights. Quantization introduces jitter in these weights, which is especially problematic in generation tasks.
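As a rough illustration of why softmax is touchy, the sketch below rounds a set of hypothetical attention scores to a coarse grid before applying softmax. The scores and the grid step of 0.25 are assumptions picked to make the effect visible.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def quantize(values, step):
    """Round each value to the nearest multiple of `step`."""
    return [round(v / step) * step for v in values]

logits = [2.0, 1.9, 0.5, -1.0]            # hypothetical attention scores
probs_fp = softmax(logits)
probs_q = softmax(quantize(logits, step=0.25))

print([round(p, 3) for p in probs_fp])
print([round(p, 3) for p in probs_q])
```

In full precision the first token gets slightly more attention than the second. After rounding, both scores collapse to the same value and the model can no longer tell them apart, which is the kind of lost distinction that matters in generation.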

LayerNorm Is a Trouble Spot

Layer normalization, crucial for transformer stability, is tricky to quantize. Its calculations involve means and variances that are highly dynamic and don’t tolerate coarse approximations well.

This limits the effectiveness of both static and dynamic quantization methods.
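The tension between static and dynamic schemes can be sketched in a few lines. Here static quantization uses a scale fixed from calibration data, while dynamic quantization recomputes the scale from each input. The activation values, including the outlier, are hypothetical.

```python
def quantize_with_scale(values, scale):
    """Quantize to int8 with a given scale, then map back to floats."""
    out = []
    for v in values:
        q = max(-127, min(127, round(v / scale)))   # clip to the int8 range
        out.append(q * scale)
    return out

calibration = [0.1, -0.4, 0.3, 0.5]     # hypothetical calibration activations
runtime = [0.2, -0.3, 6.0]              # hypothetical runtime batch with an outlier

static_scale = max(abs(v) for v in calibration) / 127   # fixed ahead of time
dynamic_scale = max(abs(v) for v in runtime) / 127      # recomputed per input

static_out = quantize_with_scale(runtime, static_scale)
dynamic_out = quantize_with_scale(runtime, dynamic_scale)

static_err = [abs(a - b) for a, b in zip(runtime, static_out)]
dynamic_err = [abs(a - b) for a, b in zip(runtime, dynamic_out)]
print(static_err, dynamic_err)
```

The static scale clips the outlier badly, while the dynamic scale preserves it at the cost of coarser resolution for the small values. Neither option is free when activation ranges swing widely.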

Precision-Sensitive Tasks Expose the Limits

Tiny Shifts, Big Differences

In generative AI, tasks like image synthesis, text completion, or music generation depend on maintaining high perceptual quality. Even tiny distortions in latent representations lead to outputs that feel “off”—blurry images, awkward grammar, or strange tone shifts.

With quantized models, quality loss may not show up in accuracy metrics—but humans feel the difference instantly.

Where Accuracy Metrics Fall Short

Benchmarks like perplexity or FID don’t always reveal the subtleties introduced by quantization. For truly generative systems, it’s the qualitative user experience that matters most.

Key Takeaways:

- Quantization errors compound in iterative models like diffusion networks.

- Transformers rely on mathematical operations highly sensitive to reduced precision.

- Perceptual quality loss is more important than numerical performance drops.

- LayerNorm and Softmax layers are especially hard to quantize effectively.

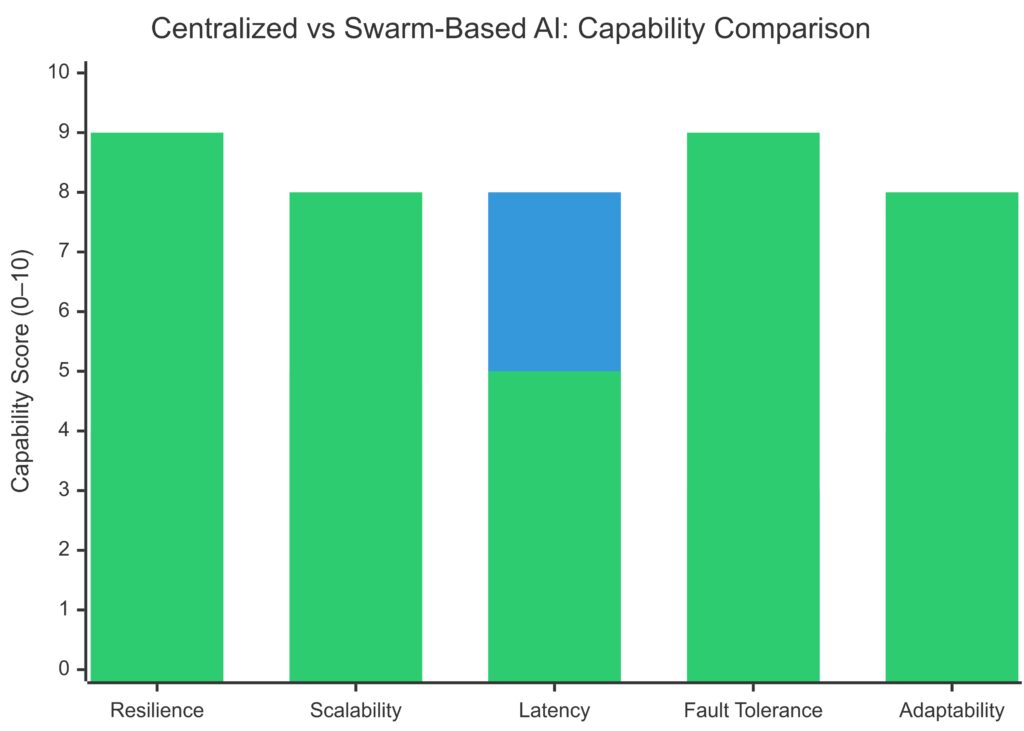

Hardware Optimizations Lag Behind AI Complexity

Limited Support for Mixed-Precision Generative Models

While NVIDIA GPUs with Tensor Cores support mixed-precision math, many hardware accelerators aren’t optimized for the nuanced flow of generative AI models. Quantization-aware training (QAT) helps, but it’s complex to apply at scale.

Lack of Flexibility in Toolkits

Toolkits like TensorRT or ONNX Runtime offer quantization tooling, but they often lag behind the latest generative architectures. Features like dynamic tensor shapes, attention masking, or timestep embeddings aren’t always supported out of the box.

Up next: Let’s explore the clever workarounds, bleeding-edge research, and promising future of quantizing these tricky models. From fine-tuning techniques to hardware-software co-design—this is where the battle gets interesting.

Workarounds to Make Quantization Viable

Quantization-Aware Training (QAT) to the Rescue

QAT simulates low-precision math during training, allowing the model to learn to cope with quantized behavior. For generative models, this means retraining with awareness of 8-bit or 4-bit constraints, helping mitigate sudden quality drops.

It’s not plug-and-play though—QAT increases training complexity and often demands large compute budgets.
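Here is a minimal sketch of the idea behind QAT, assuming a single scalar weight, a made-up grid step of 0.25, and a straight-through estimator for the gradient. Real QAT applies the same trick per layer inside a framework; this toy only shows the mechanics.

```python
def fake_quant(w, step=0.25):
    """Forward pass of fake quantization: snap w to the nearest grid point."""
    return round(w / step) * step

xs = [1.0, 2.0, 3.0]
ys = [0.6 * x for x in xs]      # toy data generated by a "true" weight of 0.6

def loss(w_q):
    """Mean squared error of the quantized weight on the toy data."""
    return sum((w_q * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

w = 0.0                          # latent full-precision weight
initial_loss = loss(fake_quant(w))
for _ in range(200):
    w_q = fake_quant(w)          # forward pass sees the quantized weight
    # Straight-through estimator: treat d(w_q)/d(w) as 1 in the backward pass.
    grad = sum(2 * (w_q * x - y) * x for x, y in zip(xs, ys)) / len(xs)
    w -= 0.01 * grad             # update the latent weight, not the quantized one
final_q = fake_quant(w)
final_loss = loss(final_q)
print(final_q, initial_loss, final_loss)
```

The latent weight keeps full precision during training, but the loss is always computed with the quantized value, so the model settles on a grid point near the ideal weight instead of being surprised by rounding at deployment time.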

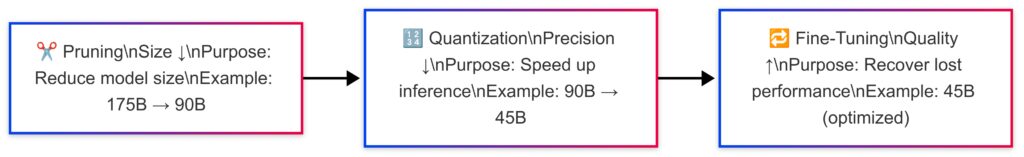

Fine-Tuning Post-Quantization

After quantizing a pre-trained model, some teams use lightweight fine-tuning to recover lost quality. This often involves unfreezing specific layers (like attention blocks) and adapting them to low-precision environments.

This strategy is especially useful when you can’t afford full retraining from scratch.

Distillation as a Soft Landing

Teaching Small Models to Think Like Big Ones

Model distillation—training a smaller “student” model to imitate a larger “teacher”—has become a go-to approach when full quantization is risky. The student model, trained from scratch, internalizes behaviors in a way that aligns naturally with low-precision ops.

In generative AI, this technique is widely used in text-to-image systems and chatbots, where inference speed is critical.
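The teacher-student setup is usually trained with a loss that compares softened output distributions. Below is a minimal sketch of one common form, a temperature-scaled KL divergence. The logits and the temperature are hypothetical, and production recipes typically combine this term with a standard task loss.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax with temperature; higher temperature gives a softer distribution."""
    scaled = [v / temperature for v in logits]
    peak = max(scaled)
    exps = [math.exp(v - peak) for v in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    return temperature ** 2 * kl    # T^2 keeps gradient scale comparable across temperatures

teacher = [4.0, 1.0, 0.2]          # hypothetical teacher logits
student = [2.5, 1.5, 0.5]          # hypothetical student logits
print(distillation_loss(teacher, student))
```

The loss is zero only when the student reproduces the teacher’s full distribution, not just its top choice, which is what lets the smaller model pick up the teacher’s finer-grained behavior.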

Combining Distillation with Quantization

Some researchers now use a two-step approach: distill a model first, then quantize it. This way, the distilled model is already simpler and often more robust to the quirks of integer math.

It’s not perfect, but it’s far more reliable than straight-up quantizing a large model like GPT-4.

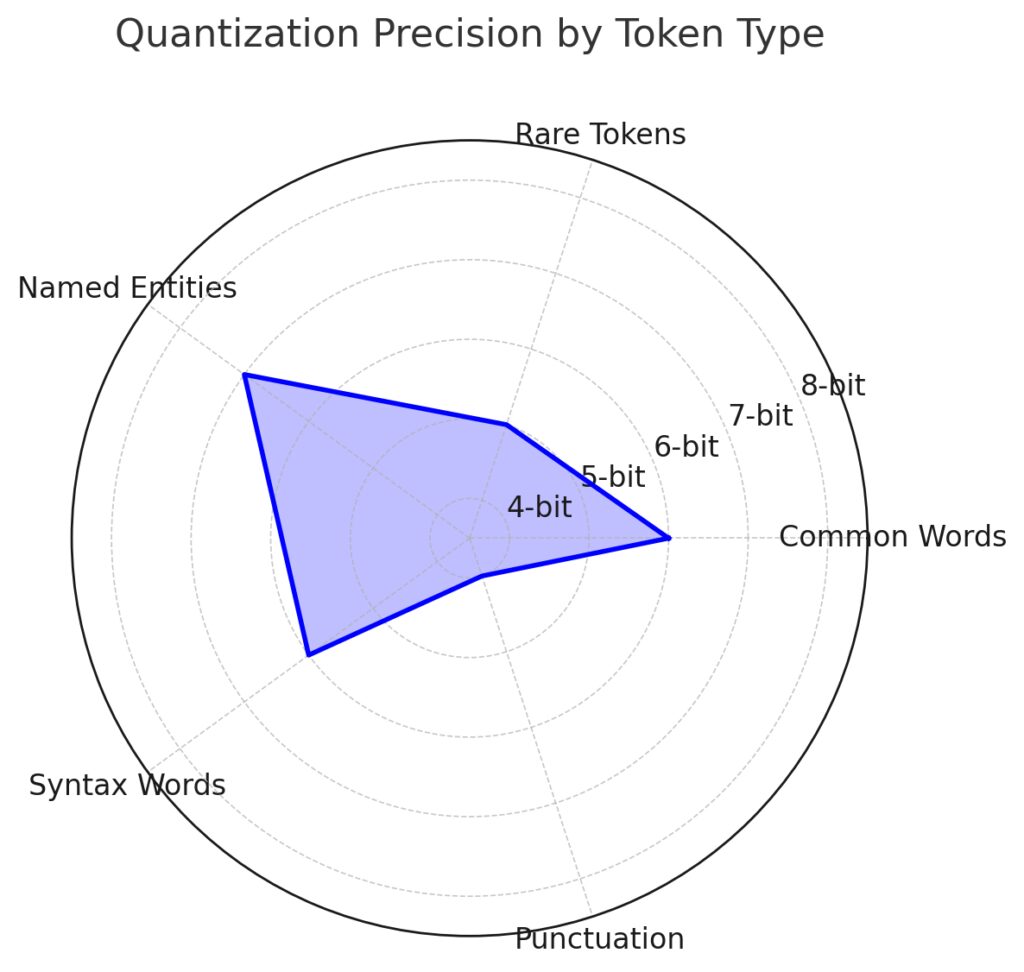

Layer-wise Quantization Strategies

Layer-wise sensitivity to quantization in transformer models reveals where precision matters most.

Prioritizing Sensitive Layers

Not all layers are created equal. Some—like output layers or embeddings—are extremely precision-sensitive. Others, like early convolutional layers or feed-forward blocks, are more forgiving.

Modern quantization methods use layer-wise calibration to apply different bit depths based on each layer’s role.

Mixed Precision for the Win

Instead of going full 8-bit, many solutions now adopt a hybrid approach: mix 8-bit, 16-bit, and even floating point layers where needed. This preserves critical accuracy without bloating the entire model.

Think of it like using premium paint only where it matters—edges, accents, and detail zones.
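A layer-wise plan can be as simple as a lookup from sensitivity to bit depth. The sketch below assumes made-up layer names, parameter counts, sensitivity scores, and thresholds; in practice the scores would come from a calibration run that measures how much each layer hurts quality when quantized.

```python
# (name, parameter count, sensitivity score); all values are hypothetical
layers = [
    ("embeddings",   50_000_000, 0.8),
    ("attention",    30_000_000, 0.4),
    ("layer_norm",      100_000, 0.7),
    ("feed_forward", 60_000_000, 0.1),
    ("output_head",  50_000_000, 0.9),
]

def choose_bits(sensitivity):
    """Map a sensitivity score to a bit depth (thresholds are illustrative)."""
    if sensitivity > 0.5:
        return 16
    if sensitivity > 0.2:
        return 8
    return 4

plan = {name: choose_bits(s) for name, _, s in layers}
mixed_bytes = sum(n * plan[name] // 8 for name, n, _ in layers)
uniform16_bytes = sum(n * 16 // 8 for _, n, _ in layers)

print(plan)
print(f"mixed: {mixed_bytes / 1e6:.1f} MB, uniform 16-bit: {uniform16_bytes / 1e6:.1f} MB")
```

The sensitive layers keep 16 bits while the forgiving feed-forward block drops to 4, so the model shrinks without touching the parts that matter most.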

Did You Know?

Recent experiments show that attention and normalization layers contribute more to generative quality degradation under quantization than dense layers do.

Token-Level Optimization in Transformers

Token-level quantization strategies vary precision based on token importance in language generation tasks.

Scaling by Frequency and Importance

Some cutting-edge approaches treat tokens differently based on frequency or semantic role. Rare tokens or crucial prompt keywords might be handled at higher precision, while filler words go low-bit.

It’s still experimental, but shows promise in text generation models where token importance varies wildly.

Dynamic Quantization Based on Context

Another idea gaining traction is context-aware quantization. Instead of static quantization, the model adapts bit-widths dynamically during inference, depending on task or input complexity.

Great in theory—but so far, it’s hard to implement efficiently.

Compression + Quantization: A Dual Strategy

Pruning First, Then Quantizing

Pruning removes redundant weights or neurons, making the network leaner. Pruning first reduces the risk of precision loss, since the remaining weights are often more significant and structured.

Then quantization is applied to what’s left. The result? A smaller, faster model with surprisingly little degradation.
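The prune-then-quantize order can be sketched as follows, assuming simple magnitude pruning and symmetric INT8 quantization. The weights and the 50% pruning fraction are hypothetical.

```python
def magnitude_prune(weights, fraction):
    """Zero out the given fraction of weights with the smallest magnitude."""
    k = int(len(weights) * fraction)
    if k == 0:
        return list(weights)
    threshold = sorted(abs(w) for w in weights)[k - 1]
    return [0.0 if abs(w) <= threshold else w for w in weights]

def quantize_int8(weights):
    """Symmetric per-tensor INT8 quantization; returns integers and the scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [max(-127, min(127, round(w / scale))) for w in weights], scale

weights = [0.9, -0.02, 0.45, 0.01, -0.7, 0.03, 0.2, -0.005]   # hypothetical layer
pruned = magnitude_prune(weights, fraction=0.5)
q, scale = quantize_int8(pruned)
print(pruned)
print(q)
```

Half of the entries become exact zeros, which compress well, and the integer range is spent only on the weights that survived pruning.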

Combining With Low-Rank Approximations

Low-rank methods approximate large matrices with simpler forms. Pairing these with quantization compresses models even further. It’s especially effective in large transformer backbones where attention matrices dominate memory usage.
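A quick back-of-the-envelope calculation shows how the two savings multiply. The layer width of 4096 and the rank of 64 are assumptions for illustration; the achievable rank depends on how much approximation error the model tolerates.

```python
d, r = 4096, 64                      # hypothetical layer width and chosen rank

full_params = d * d                  # one dense d x d weight matrix
low_rank_params = 2 * d * r          # two factors: (d x r) and (r x d)

fp32_bytes = full_params * 4                 # 4 bytes per FP32 value
low_rank_int8_bytes = low_rank_params * 1    # 1 byte per INT8 value

param_ratio = full_params / low_rank_params
byte_ratio = fp32_bytes / low_rank_int8_bytes
print(f"{param_ratio:.0f}x fewer parameters, {byte_ratio:.0f}x fewer bytes")
```

Under these assumptions the factorization alone cuts parameters by 32x, and storing the factors in INT8 instead of FP32 adds another 4x on top.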

Key Takeaways:

- QAT and post-quant fine-tuning improve resilience to quantization.

- Distillation offers a safer route to compact models.

- Layer-wise and token-level strategies are emerging as smarter alternatives.

- Compression techniques like pruning and low-rank factorization complement quantization efforts.

Real-World Use Cases: Who’s Quantizing What?

OpenAI’s Strategy for Speed and Scale

OpenAI has hinted at internal efforts to deploy quantized versions of its larger language models for edge and enterprise use. While specifics remain secret, techniques like mixed-precision inference and distilled GPT variants are likely in play for applications like Copilot and ChatGPT plugins.

They’ve also embraced FP16/BF16 formats, offering a middle ground between performance and quality.

NVIDIA’s Role in Optimized Inference

NVIDIA has been pivotal, thanks to its TensorRT platform and hardware like the H100. These tools enable fine-grained quantization workflows for transformer and diffusion models.

NVIDIA’s custom kernels support per-channel quantization, critical for models like Stable Diffusion XL or LLaMA 2.
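To see why per-channel scales matter, consider a weight matrix whose output channels have very different ranges. The sketch below compares one shared scale against one scale per row. The matrix values are hypothetical, and this is plain Python rather than anything specific to NVIDIA’s kernels.

```python
def fake_quant_row(row, scale):
    """Quantize a row to int8 with the given scale and map it back to floats."""
    return [max(-127, min(127, round(v / scale))) * scale for v in row]

weights = [
    [0.01, -0.02, 0.015],   # output channel with small values
    [5.0, -3.0, 4.0],       # output channel with large values
]

# Per-tensor: one scale shared by every channel.
tensor_scale = max(abs(v) for row in weights for v in row) / 127
per_tensor = [fake_quant_row(row, tensor_scale) for row in weights]

# Per-channel: each row gets its own scale.
per_channel = [fake_quant_row(row, max(abs(v) for v in row) / 127) for row in weights]

def max_error(original, restored):
    return max(abs(a - b) for a, b in zip(original, restored))

err_tensor = max_error(weights[0], per_tensor[0])
err_channel = max_error(weights[0], per_channel[0])
print(err_tensor, err_channel)
```

With a shared scale, the large channel dictates the step size and the small channel is rounded almost entirely away. Giving each channel its own scale keeps the small values intact.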

Hugging Face and On-Device AI

Hugging Face is leading efforts for open quantized model sharing. Their Optimum library and BitsAndBytes integration allow developers to test 4-bit and 8-bit transformers on consumer hardware.

These innovations have enabled on-device LLMs to become viable even on consumer GPUs or edge chips.

Benchmarks: How Much Does Quality Drop?

Numbers Tell Half the Story

On paper, quantized transformers and diffusion models can perform within 1-2% of their full-precision counterparts on standard benchmarks like BLEU, perplexity, or FID.

But that’s not the whole truth.

Human Evaluations Paint a Different Picture

When actual users judge image or text quality, quantized outputs often “feel” less rich or coherent. Slight grammar errors, flattened textures, or artifacts sneak in—especially under heavy quantization (4-bit and below).

This has led to hybrid models being favored for production, using full precision for sensitive tasks and quantized ops for the rest.

Tools and Frameworks Enabling the Shift

PyTorch & TensorFlow Stay Competitive

Both major frameworks now support quantization flows. PyTorch offers FX Graph Mode Quantization, while TensorFlow provides Post-Training Quantization (PTQ) through TensorFlow Lite and QAT through the TensorFlow Model Optimization Toolkit.

For generative models, PyTorch is currently better equipped—especially with its community extensions.

Specialized Libraries Make It Easier

Libraries like BitsAndBytes (for LLMs) and Intel Neural Compressor bring advanced quantization and tuning capabilities. These tools let you experiment with different bit-widths, apply mixed-precision configs, and calibrate models without needing to dive into low-level math.

Expert Opinions on Quantization in Generative AI

Dr. Tim Dettmers – University of Washington, Model Compression Researcher

“Quantization works best when co-designed with architecture. You can’t just chop a model to 8-bit and hope for the best—especially with transformers and diffusion models. LayerNorm, attention softmax, and embedding tables behave very differently under low precision.”

His 4-bit work in BitsAndBytes has set new benchmarks in large model efficiency, showing that quantization can succeed—if you treat it as a system-wide design problem.

Sara Hooker – Director of Cohere for AI

“Generative models amplify subtle issues. A classification model might mislabel one item, but a generative model can collapse an entire output into gibberish or incoherence from a small numerical error. This makes quality-preserving quantization way more nuanced.”

Her work emphasizes the importance of human-centric evaluation when applying compression techniques to generative outputs like text, audio, and vision.

Dr. Hanlin Tang – Intel Labs

“Layer-aware quantization and mixed precision are the future for generative AI. At Intel, we’re focused on balancing compression with calibration-aware tooling so that developers can choose tradeoffs dynamically—without having to retrain from scratch.”

He advocates for more flexible quantization flows embedded into model compilation pipelines, so generative models can adapt to hardware constraints in real time.

Quentin Guenther – Applied Scientist, Hugging Face

“You have to test quantization in context. For text generation, that means evaluating full conversations, not token accuracy. For image generation, look at consistency across styles. A single metric isn’t enough—it takes a qualitative eye to really debug a quantized model.”

Hugging Face’s work with 4-bit and 8-bit transformers shows that user-perceived quality must drive optimization choices—not just benchmarks.

Jonathan Frankle – Chief Scientist at MosaicML

“Ideally, quantization shouldn’t be an afterthought—it should be baked into pretraining. Future model families will be quantization-aware from day one. That’s how we unlock true scalability for generative AI, especially in edge and distributed applications.”

He argues for a shift in how we train large models, pushing for pretraining regimes that anticipate low-bit deployment scenarios from the start.

Future Outlook: The Path Forward

More Adaptive, Context-Aware Systems

Future quantization techniques will likely be adaptive—shifting precision based on model context, workload, or even user interaction. Imagine a chatbot that boosts accuracy on-the-fly when it detects user frustration.

This level of fluidity is still in early research, but it’s coming.

Hardware-Software Co-Design Is the Next Frontier

We’ll see tighter integration between AI frameworks and hardware accelerators. NVIDIA, Apple, and Google are already embedding custom quantization paths directly into silicon.

Expect AI chips with layer-aware quantization logic, making deployments smarter and more energy-efficient.

Future Outlook

Within 2-3 years, expect transformer-based models to run smoothly in 4-bit or even 2-bit mode without perceptual quality loss. This will unlock always-on AI agents, smarter mobile assistants, and real-time creative tools that fit in your pocket.

Let’s Talk Model Compression

What’s your experience with quantized generative models? Have you tried 4-bit transformers or explored diffusion model pruning?

Drop your thoughts, toolkits, or challenges—we’d love to hear how you’re pushing the edge of what’s possible.

Let’s build the future of fast, efficient generative AI—together.

FAQs

Why is quantization harder for generative models than for classifiers?

Classification models only need to make a single decision—like picking a label. But generative models must produce structured, high-dimensional outputs such as sentences, images, or audio. This makes them much more sensitive to small errors in precision.

For example, a quantized ResNet might still correctly classify a cat photo, but a quantized diffusion model might generate a cat with one too many eyes.

Does quantization always reduce model accuracy?

Not necessarily. In many cases, 8-bit quantization maintains 95–99% of a model’s full-precision performance. However, for generative tasks, especially those with artistic or semantic complexity, it’s less about hard accuracy and more about quality degradation—awkward phrasing, visual noise, or rhythm loss.

That’s why human evaluation is key when assessing quantized generative models.

Can quantized models still be fine-tuned?

Yes, but it’s a bit trickier. If the model is already quantized (especially statically), full fine-tuning can be unstable. A better approach is quantization-aware fine-tuning, where you start from a quantized checkpoint and selectively fine-tune layers using mixed precision.

Some frameworks also support partial fine-tuning—you dequantize specific layers temporarily, train them, then re-quantize.

How does quantization impact latency and throughput?

Quantized models often run 2x to 4x faster and require significantly less memory. That means lower latency and higher throughput—perfect for real-time applications like voice synthesis or conversational AI.

For instance, Meta’s quantized LLaMA models can generate responses on a laptop in under 1 second per token, versus 3–4 seconds in FP32.
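The memory side of that claim is easy to estimate. The sketch below computes weight storage for a hypothetical 7-billion-parameter model at different precisions. It counts weights only and ignores activations, the KV cache, and the small overhead of storing quantization scales.

```python
params = 7_000_000_000          # hypothetical 7B-parameter model
bytes_per_value = {"FP32": 4, "FP16": 2, "INT8": 1, "INT4": 0.5}

footprint_gb = {fmt: params * b / 1e9 for fmt, b in bytes_per_value.items()}
for fmt, gb in footprint_gb.items():
    print(f"{fmt}: {gb:.1f} GB")
```

Going from FP32 to INT4 shrinks the weights by 8x, which is often the difference between a model that fits in a laptop’s memory and one that does not.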

Are some generative architectures more quantization-friendly?

Yes. Simpler transformers with fewer attention layers, or those that use grouped-query attention (as in LLaMA 2 70B), tend to quantize better. Architectures such as GANs, which avoid recurrent loops and iterative denoising, are generally easier to quantize than diffusion models.

Emerging quantization-aware models are being designed from the ground up with these challenges in mind.

Can quantization help reduce energy consumption?

Absolutely. Low-bit operations require less power, which means quantized models consume less energy—crucial for running AI on smartphones, IoT devices, or large-scale inference farms.

For example, switching from FP32 to INT8 can cut power usage by more than 60% on certain chips.

What are common pitfalls when quantizing generative models?

- Over-quantizing LayerNorm or Softmax, which can destabilize training or inference.

- Using unrepresentative calibration data during static quantization, which leads to poorly fitted scales.

- Applying uniform bit-widths across all layers instead of using mixed precision.

- Skipping post-quantization validation on perceptual quality.

Testing with real-world prompts or generation scenarios is a must to catch subtle regressions.

Resources

Libraries & Toolkits You Should Know

BitsAndBytes (by Tim Dettmers)

A powerful PyTorch extension for 8-bit and 4-bit quantization. Popular for quantizing large language models like LLaMA, GPT-J, and BLOOM.

👉 BitsAndBytes GitHub

Hugging Face Optimum

Built for inference optimization. Includes quantization support through ONNX, TensorRT, and Intel Neural Compressor integrations.

TensorRT

NVIDIA’s high-performance deep learning inference optimizer. Offers advanced support for quantized models, including INT8 calibration.

👉 TensorRT Developer Guide

Intel Neural Compressor (INC)

Supports post-training quantization and quantization-aware training (QAT). Ideal for both cloud and edge deployments using Intel hardware.

👉 Intel Neural Compressor GitHub

Frameworks with Built-In Quantization Features

PyTorch Quantization (FX Graph Mode)

Offers both eager and graph-based quantization flows. Strong support for transformers and flexible mixed-precision configs.

TensorFlow Lite

Tailored for mobile and embedded deployment. Includes post-training quantization, dynamic quantization, and QAT.

Papers & Blogs Worth Reading

LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale

A foundational paper for scaling LLMs efficiently using quantization without major performance loss.

👉 Read on arXiv

SmoothQuant (by MIT and NVIDIA)

A technique that enables accurate INT8 quantization for LLMs by migrating quantization difficulty from activations to weights through per-channel scaling.

👉 Read on arXiv

Tim Dettmers’ Blog on Model Compression

In-depth, practical insights on quantization, low-rank methods, and training efficiency.

👉 Tim Dettmers Blog

Community & Tutorials

Hugging Face Forums

Great for real-world quantization discussions, tutorials, and model-specific help.

NVIDIA Technical Blogs

Covers performance benchmarks, quantization use cases, and optimization tips for diffusion and transformer models.

👉 NVIDIA Developer Blog

OpenVINO Toolkit by Intel

Includes quantization and model optimization flows targeted at edge deployments.