Quantum Machine Learning (QML) is where the cutting-edge of quantum computing meets artificial intelligence. This emerging field promises to revolutionize data analysis, pattern recognition, and decision-making by leveraging the quirks of quantum mechanics.

Whether you’re an AI enthusiast or a developer ready to dive into quantum computing, this beginner’s guide will help you take your first steps into building quantum ML algorithms.

What is Quantum Machine Learning?

A Fusion of Two Powerful Fields

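Quantum Machine Learning blends quantum computing and machine learning. While traditional ML relies on classical computers, QML algorithms use quantum bits (qubits) for part or all of their processing. For certain tasks, such as some optimization and pattern-detection problems, this may offer speedups over classical methods, although practical advantages on today's hardware are still an open research question.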

Why Does QML Matter?

- Potential speedups: For specific problems, quantum algorithms are known or conjectured to scale better than the best classical methods.

- Unique capabilities: Quantum states like superposition and entanglement unlock new ways to handle data.

- Transformative potential: From healthcare to finance, QML could redefine industries.

While the field is nascent, Google’s quantum supremacy experiments and advancements in quantum hardware signal a bright future.

Key Concepts in Quantum Computing for ML

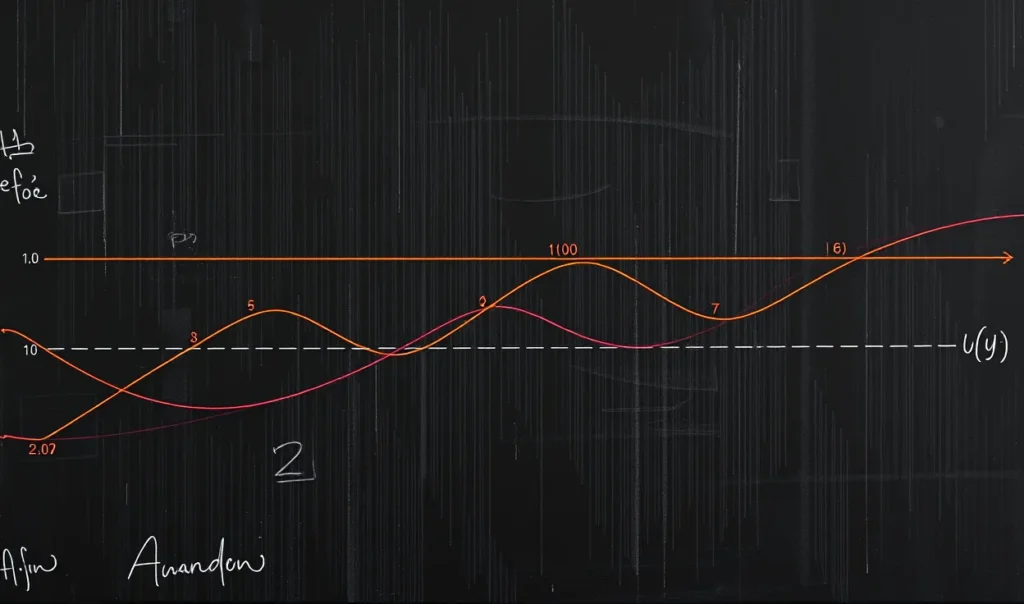

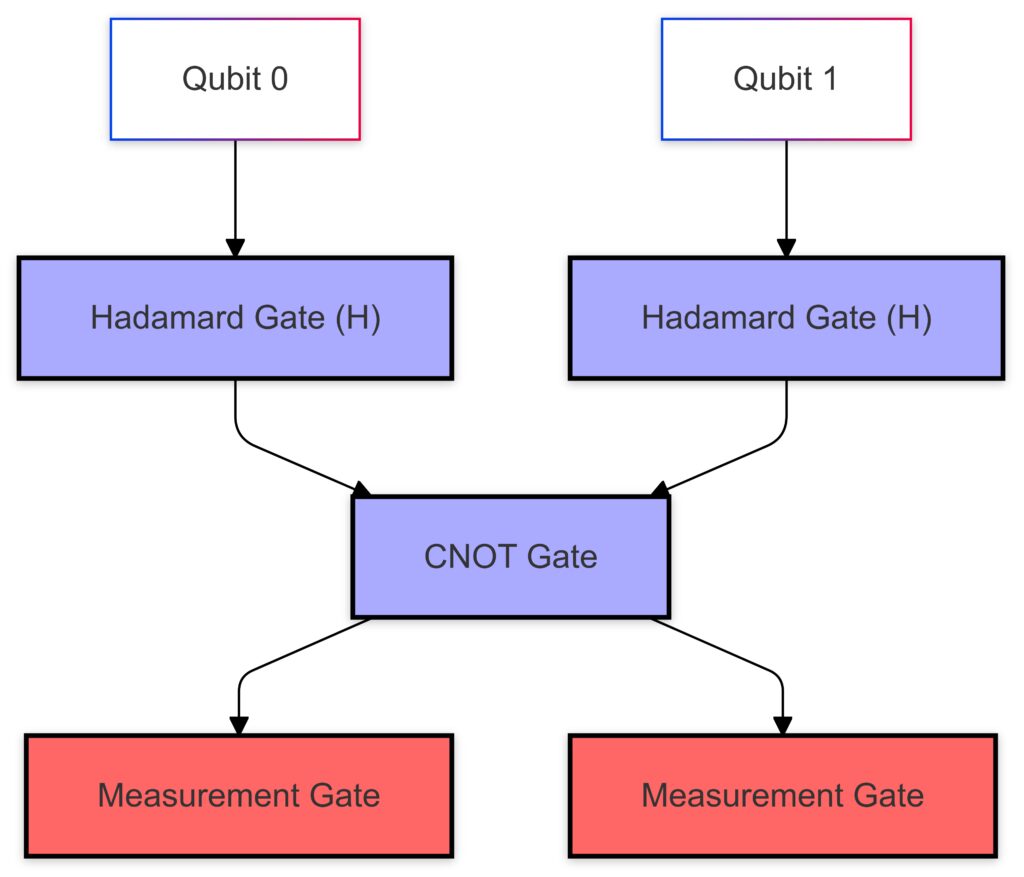

A quantum circuit illustrating superposition, entanglement, and measurement operations for two-qubit systems.

Understanding Qubits

Unlike binary bits (0 or 1), qubits can exist in a superposition of states. This makes quantum systems uniquely powerful.
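To make the idea of superposition concrete, here is a minimal sketch in plain NumPy (not Qiskit) that represents a single qubit as a two-component vector and computes its measurement probabilities. The amplitudes and the helper name `measurement_probabilities` are chosen purely for illustration.

```python
import numpy as np

# Computational basis states |0> and |1> as vectors
ket0 = np.array([1.0, 0.0], dtype=complex)
ket1 = np.array([0.0, 1.0], dtype=complex)

def measurement_probabilities(state):
    """Return the probabilities of measuring 0 and 1 (Born rule)."""
    probs = np.abs(state) ** 2
    return probs / probs.sum()  # guard against tiny normalization drift

# Equal superposition (|0> + |1>) / sqrt(2)
plus = (ket0 + ket1) / np.sqrt(2)

# Unequal superposition; amplitudes are illustrative and may be complex
skewed = np.sqrt(0.8) * ket0 + 1j * np.sqrt(0.2) * ket1

print("equal superposition:", measurement_probabilities(plus))
print("skewed superposition:", measurement_probabilities(skewed))
```

A classical bit would correspond to exactly `ket0` or `ket1`. The qubit's extra freedom lies in the amplitudes, which only turn into definite outcomes when measured.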

Core Principles: Superposition, Entanglement, and Interference

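- Superposition: Lets a register of qubits hold a weighted combination of many basis states at once, which a single sequence of gates can then transform.

- Entanglement: Links qubits in ways that classical systems can’t replicate.

- Interference: Reinforces the amplitudes of desired outcomes and cancels others, shaping the final measurement probabilities.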

Quantum Gates and Circuits

Quantum operations are applied via quantum gates (e.g., Hadamard, Pauli-X). These form the building blocks of quantum circuits, the quantum analog to classical algorithms.

To grasp these basics, tools like IBM Quantum Composer and Qiskit are invaluable.
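As a rough illustration of how gates compose into a circuit, the sketch below writes the Hadamard, Pauli-X, and CNOT gates as NumPy matrices and applies them to state vectors. It uses the textbook ordering in which the first qubit is the most significant bit. Qiskit uses the opposite (little-endian) convention, so indices may differ from what its simulators print.

```python
import numpy as np

# Single-qubit gates as 2x2 unitary matrices
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)  # Hadamard
X = np.array([[0, 1], [1, 0]], dtype=complex)                # Pauli-X (bit flip)
I2 = np.eye(2, dtype=complex)

# CNOT with the first qubit as control, basis order |00>, |01>, |10>, |11>
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)

ket0 = np.array([1, 0], dtype=complex)
ket00 = np.kron(ket0, ket0)

# Circuit: Hadamard on the first qubit, then CNOT -> an entangled Bell state
bell = CNOT @ np.kron(H, I2) @ ket00
bell_probs = np.abs(bell) ** 2
print("Bell state probabilities:", bell_probs)

# Interference: applying H twice returns the qubit to |0>
back_to_zero = H @ H @ ket0
print("H applied twice:", np.round(back_to_zero.real, 6))

# Pauli-X flips |0> to |1>
flipped = X @ ket0
```

The Bell state only ever yields `00` or `11`, which is the correlation that entanglement refers to.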

How Does QML Differ from Classical ML?

Hybrid vs. Pure Quantum Approaches

Many QML frameworks integrate quantum and classical components. For example:

- Classical preprocessing handles data preparation.

- Quantum processing units (QPUs) handle optimization tasks.

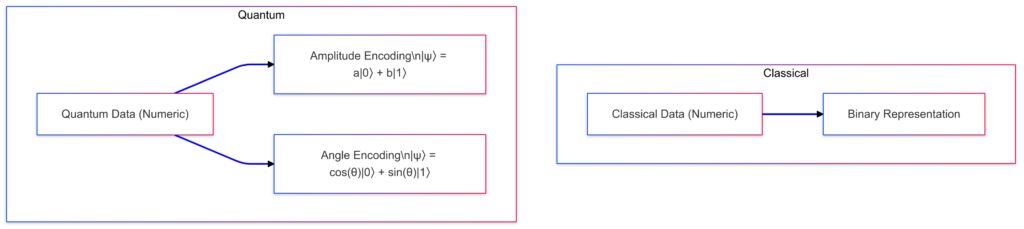

Data Encoding: Quantum States vs. Numerical Values

QML algorithms represent classical data using quantum states. This requires specialized encoding methods like amplitude or angle encoding.
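As a sketch of what amplitude encoding means, the snippet below normalizes a classical feature vector so it can serve as the amplitudes of a quantum state, padding with zeros up to the next power of two. The function name `amplitude_encode` and the sample values are illustrative assumptions. Preparing such a state on hardware needs a dedicated state-preparation circuit, which is not shown here.

```python
import numpy as np

def amplitude_encode(features):
    """Return (amplitudes, n_qubits) for a classical feature vector.

    The vector is zero-padded to length 2**n_qubits and scaled to unit norm,
    so that the squared amplitudes sum to 1.
    """
    x = np.asarray(features, dtype=float)
    n_qubits = max(1, int(np.ceil(np.log2(len(x)))))
    padded = np.zeros(2 ** n_qubits)
    padded[: len(x)] = x
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("cannot encode an all-zero vector")
    return padded / norm, n_qubits

# Four illustrative feature values fit into the amplitudes of two qubits
state, n_qubits = amplitude_encode([5.1, 3.5, 1.4, 0.2])
print(n_qubits, state)
```

Note that normalization discards the overall scale of the vector, so two samples that differ only by a constant factor map to the same state.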

Training Models with Quantum Optimization

Classical optimization algorithms (e.g., gradient descent) are replaced or augmented by quantum optimization techniques, such as Variational Quantum Algorithms (VQAs).
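The variational idea can be illustrated with a toy example in plain NumPy. A single qubit is rotated by a trainable angle, the "cost" is the expectation value of Z, and a classical gradient-descent loop updates the angle. The gradient comes from the parameter-shift rule, which evaluates the same circuit at two shifted angles. The learning rate, starting angle, and step count are arbitrary choices for this sketch.

```python
import numpy as np

def expectation_z(theta):
    """<Z> after RY(theta) on |0>; the state is [cos(theta/2), sin(theta/2)]."""
    state = np.array([np.cos(theta / 2), np.sin(theta / 2)])
    z = np.array([[1.0, 0.0], [0.0, -1.0]])
    return float(state @ z @ state)

def parameter_shift_gradient(theta):
    """Gradient of <Z> from two extra circuit evaluations at theta +/- pi/2."""
    return 0.5 * (expectation_z(theta + np.pi / 2) - expectation_z(theta - np.pi / 2))

theta = 0.4          # illustrative starting angle
learning_rate = 0.4  # illustrative step size
history = []
for step in range(50):
    history.append(expectation_z(theta))
    theta -= learning_rate * parameter_shift_gradient(theta)

final_cost = expectation_z(theta)
print("final angle:", theta, "final cost:", final_cost)
```

The quantum device (here simulated by `expectation_z`) only evaluates the cost, while the parameter update itself stays classical. That division of labor is what makes these algorithms hybrid.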

Tools and Platforms for Building QML Algorithms

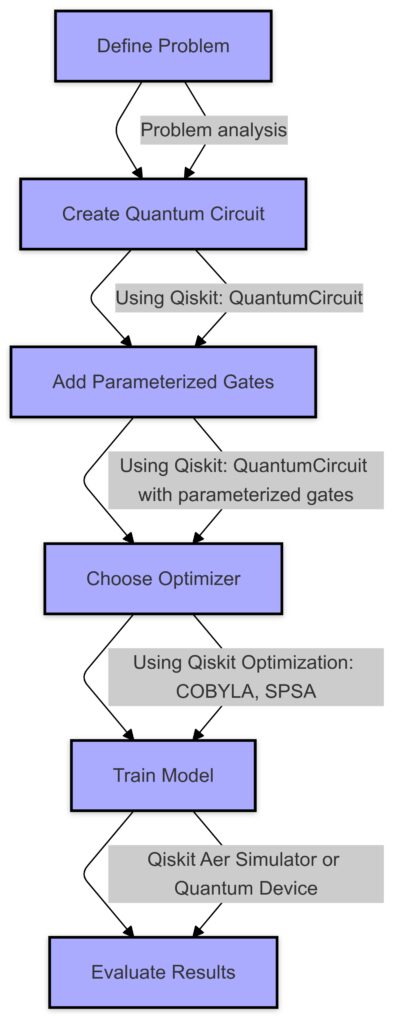

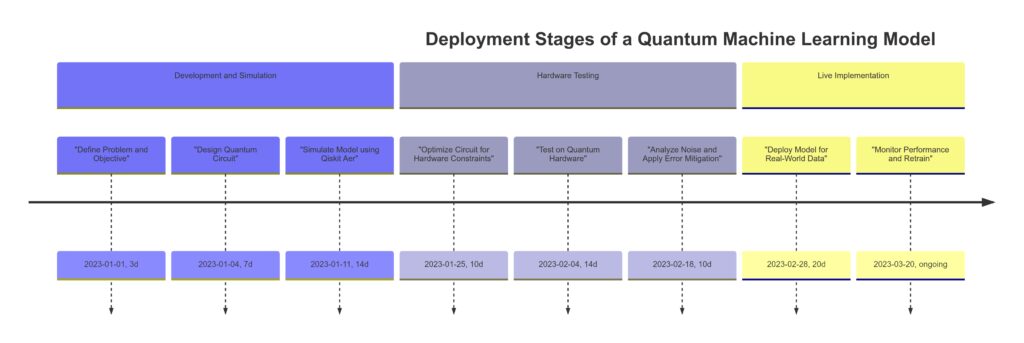

Workflow for building quantum machine learning models using Qiskit, from circuit creation to optimization and evaluation.

Qiskit

Developed by IBM, Qiskit is an open-source framework for building quantum circuits. Its qiskit-machine-learning module bridges quantum computing and ML seamlessly.

TensorFlow Quantum

Google’s TensorFlow Quantum combines TensorFlow’s ML capabilities with quantum computing frameworks. It’s a go-to tool for experimenting with hybrid models.

PennyLane

PennyLane is a platform designed for creating hybrid quantum-classical systems. With support for popular ML libraries like PyTorch, it simplifies QML workflows.

Explore these tools to experiment with quantum-enhanced models without deep knowledge of quantum physics.

The Road Ahead for Developers

Why Start Now?

Quantum computing is rapidly evolving, and early adoption offers immense advantages. By gaining familiarity with QML, developers can:

- Stay ahead in the tech landscape.

- Experiment with tools shaping the future.

- Gain competitive skills for in-demand industries.

Overcoming Learning Barriers

The learning curve can be steep, but it’s manageable with the right resources:

- Beginner-friendly courses like IBM’s Quantum Computing Fundamentals.

- Online communities and forums.

- Open-access papers on quantum and ML topics.

Stay curious, experiment often, and build on small successes!

Step-by-Step: Building Your First Quantum ML Algorithm

Creating your first Quantum Machine Learning (QML) algorithm can be a thrilling yet challenging experience. Let’s break it into manageable steps with practical examples, starting with the simplest form of QML: Quantum-enhanced data classification.

Step 1: Setting Up Your Development Environment

Choose Your Framework

- Qiskit: Best for developers looking for flexibility and detailed control.

- TensorFlow Quantum: Ideal if you’re familiar with TensorFlow and hybrid models.

- PennyLane: A great choice for PyTorch or Keras enthusiasts.

For this guide, we’ll use Qiskit, as it offers robust tools for quantum development.

Installation

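Install Qiskit, together with the Aer simulator and the machine learning module used later in this guide, with a simple pip command:

pip install qiskit qiskit-aer qiskit-machine-learning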

Optional: Install additional libraries for visualization and machine learning:

pip install matplotlib scikit-learn

Step 2: Understanding the Dataset and Data Encoding

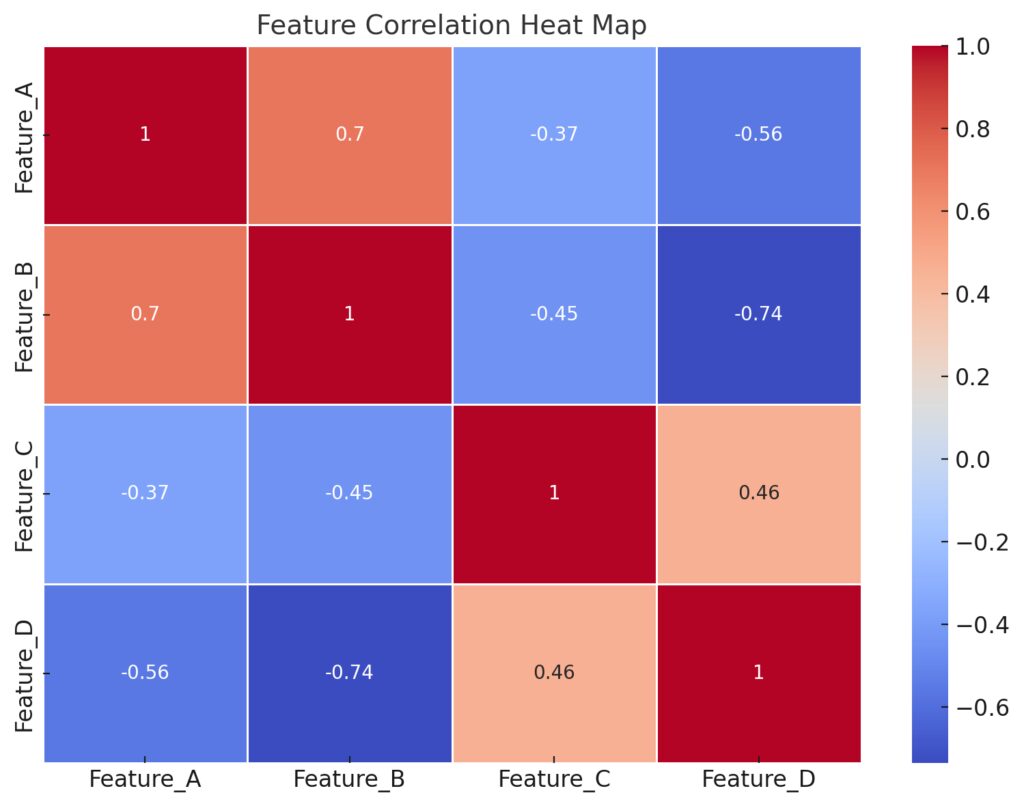

Blue shades indicate positive correlations.

Red shades indicate negative correlations. The intensity of the color reflects the strength of the correlation.

Correlation heat map of input features, demonstrating relationships before encoding into quantum states for QML algorithms.

Choosing a Dataset

Start with a simple dataset, like Iris, to classify flowers based on features such as petal and sepal length.

Encoding Data into Quantum States

Since quantum systems process quantum states rather than raw numbers, you’ll need to encode classical data.

Common Encoding Techniques

- Amplitude Encoding: Maps data to quantum states using amplitudes.

- Angle Encoding: Converts data into rotation angles for quantum gates.

For this tutorial, we’ll use Angle Encoding for simplicity.
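To show what angle encoding does to a state, here is a small NumPy sketch that rotates one qubit per feature and builds the joint state vector. It assumes RY rotations on every qubit for simplicity (a mix of gate types is equally possible), and the function names are illustrative.

```python
import numpy as np

def ry(theta):
    """Matrix of a rotation by theta around the Y-axis."""
    c, s = np.cos(theta / 2), np.sin(theta / 2)
    return np.array([[c, -s], [s, c]])

def angle_encode(features):
    """Encode each feature as the RY angle of its own qubit (all start in |0>)."""
    state = np.array([1.0])
    for x in features:
        qubit = ry(x) @ np.array([1.0, 0.0])
        state = np.kron(state, qubit)  # first feature = most significant qubit
    return state

features = [0.5, 1.2]
state = angle_encode(features)
probs = state ** 2  # amplitudes are real here, so squaring gives probabilities

# Probability that the first qubit is measured as 1 depends only on feature 0
p_first_qubit_one = probs[2] + probs[3]
print("joint probabilities:", probs)
print("P(first qubit = 1):", p_first_qubit_one)
```

Each feature influences only its own qubit at this stage. Correlations between features enter later, through entangling gates in the trainable part of the circuit.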

Step 3: Creating a Quantum Circuit

Initialize Qubits

Start by creating a quantum circuit with QuantumCircuit in Qiskit:

from qiskit import QuantumCircuit

# Create a quantum circuit with 2 qubits

num_qubits = 2

qc = QuantumCircuit(num_qubits)

Add Gates for Encoding

Apply rotation gates to encode data features into quantum states:

import numpy as np

# Example feature

feature = [0.5, 1.2]

# Apply rotations for encoding

qc.rx(feature[0], 0) # Rotate around the X-axis for qubit 0

qc.ry(feature[1], 1) # Rotate around the Y-axis for qubit 1

Build a Parameterized Model

Add trainable parameters using variational gates, which are essential for machine learning:

from qiskit.circuit import Parameter

# Define trainable parameters

theta = Parameter('θ')

phi = Parameter('φ')

# Add parameterized gates

qc.rx(theta, 0)

qc.ry(phi, 1)

Step 4: Implementing a Quantum Classifier

Define the Quantum Model

Combine the quantum circuit with a classical ML layer for classification. You’ll measure the qubits and use these results as features for a classical classifier:

from qiskit import transpile

from qiskit_aer import AerSimulator

from qiskit.visualization import plot_histogram

# Bind the trainable parameters to concrete values before running (values here are illustrative)

bound_qc = qc.assign_parameters({theta: 0.1, phi: 0.2})

bound_qc.measure_all()

# Simulate the quantum circuit

backend = AerSimulator()

tqc = transpile(bound_qc, backend)

result = backend.run(tqc, shots=1024).result()

counts = result.get_counts()

# Visualize the output

plot_histogram(counts)

Trainable Parameters and Optimization

In Qiskit, use the qiskit-machine-learning library to integrate the quantum circuit into a Variational Quantum Classifier (VQC). This involves optimizing the parameterized circuit to minimize a cost function.

Step 5: Training and Evaluating the Model

Train the Quantum Model

Train the VQC using a classical optimizer, such as COBYLA or ADAM, to fine-tune the parameters.

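from qiskit.circuit.library import ZZFeatureMap, RealAmplitudes

from qiskit_machine_learning.algorithms import VQC

# The optimizer import path depends on the installed version; older releases use qiskit_algorithms.optimizers

from qiskit_machine_learning.optimizers import COBYLA

# Train the model with labeled data (features: array of shape (n_samples, 2); labels: class labels)

vqc = VQC(feature_map=ZZFeatureMap(2), ansatz=RealAmplitudes(2, reps=1), optimizer=COBYLA(maxiter=100))

vqc.fit(features, labels)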

Evaluate Performance

Evaluate the model on a test set and compare its performance with classical ML models.

Troubleshooting Quantum Machine Learning: A Deep Dive

Building Quantum Machine Learning (QML) algorithms can be exciting, but challenges often arise due to the complexity of quantum systems. This section covers common pitfalls and practical fixes to keep your development smooth and productive.

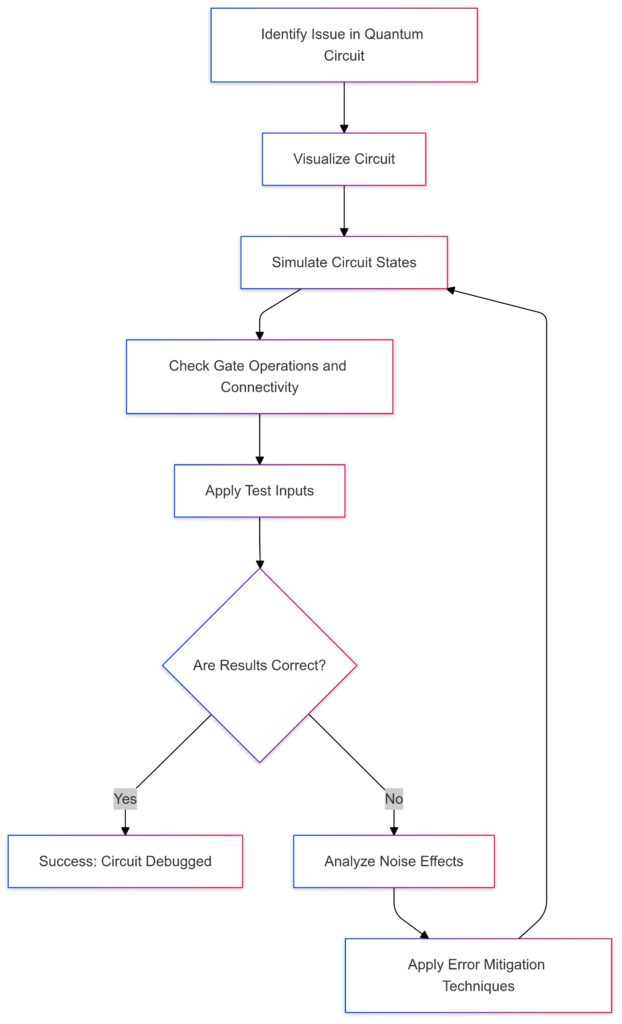

Step-by-step debugging process for resolving errors in quantum circuits and improving model reliability.

1. Understanding Hardware and Simulation Limitations

Issue: Noise in Quantum Hardware

Quantum hardware is prone to noise due to environmental factors, leading to errors in computation.

Solution: Mitigate Noise

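- Use error mitigation techniques (e.g., readout error mitigation). These are distinct from full quantum error-correcting codes, which require far more qubits than current devices provide.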

- Perform simulations on a noisy backend to test robustness before running on real hardware:

from qiskit_aer import AerSimulator

# noise_model is a qiskit_aer.noise.NoiseModel that you build yourself or derive from a device backend

simulator = AerSimulator(noise_model=noise_model)

- Consider using platforms like IBM Quantum, which provide qubit fidelity metrics to select more reliable qubits.

Issue: Limited Qubit Count

Quantum systems currently have limited qubits, often making it hard to scale models.

Solution: Optimize Resource Usage

- Use hybrid models where classical computers handle non-quantum operations.

- Compress data via dimensionality reduction (e.g., PCA) to fit it within available qubits.
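As one way to picture the dimensionality-reduction step, the sketch below implements PCA with NumPy's SVD and shrinks four features to two, so that each remaining feature could be mapped to its own qubit. The data is synthetic and stands in for a real dataset. In practice `sklearn.decomposition.PCA` does the same job.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project X onto its top principal components.

    Returns the reduced data and the fraction of variance each kept
    component explains.
    """
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    reduced = centered @ vt[:n_components].T
    explained = singular_values ** 2 / np.sum(singular_values ** 2)
    return reduced, explained[:n_components]

# Synthetic 4-feature data (hypothetical stand-in for a real dataset)
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4)) * np.array([3.0, 1.0, 0.3, 0.1])

reduced, explained = pca_reduce(X, n_components=2)
print("reduced shape:", reduced.shape)
print("explained variance ratio:", explained)
```

Checking the explained variance before encoding gives a quick sense of how much information the smaller circuit will never see.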

2. Debugging Quantum Circuits

Issue: Circuit Design Errors

Quantum circuits can fail due to incorrect gate operations, measurement orders, or parameter mismatches.

Solution: Verify Circuit Design

- Visualize the circuit to ensure gates and measurements are correctly placed:

qc.draw('mpl') # Display the circuit in a matplotlib diagram

- Test individual components before integrating them into larger circuits.

- Use Qiskit’s transpiler to optimize circuits for the backend:

from qiskit import transpile

optimized_circuit = transpile(qc, backend)

Issue: Invalid Measurement Results

Unexpected measurement results may occur due to misaligned qubit states or entanglement issues.

Solution: Test Measurement Outcomes

- Simulate smaller sections of your circuit to identify which gates or parameters cause incorrect states.

- Check qubit initialization; uninitialized qubits may introduce errors.

- Use Qiskit’s statevector simulation to debug intermediate states:

from qiskit.quantum_info import Statevector

# The circuit must not contain measurements at this point, since they would collapse the state

state = Statevector.from_instruction(qc)

print(state)

3. Training and Optimization Challenges

Issue: Slow or Non-Converging Training

Training variational circuits can be sluggish due to high-dimensional parameter spaces.

Solution: Optimize Training Parameters

- Use gradient-based optimizers like ADAM for faster convergence.

- Initialize parameters with values near expected minima. Random initialization may take longer to converge.

- Experiment with Ansatz designs (the structure of your quantum circuit) to reduce unnecessary parameters.

Issue: Vanishing Gradients (Barren Plateaus)

Some QML models suffer from barren plateaus, where gradients become exponentially small as the number of qubits grows, making optimization extremely difficult.

Solution: Avoid Barren Plateaus

- Use local cost functions, which depend on measurements of only a few qubits rather than the whole register.

- Choose an Ansatz tailored to your problem to minimize barren plateaus.

- Employ hybrid classical-quantum training strategies to sidestep purely quantum plateaus.

4. Data Representation and Encoding Errors

Issue: Data Encoding Doesn’t Represent Features Accurately

Improper encoding can result in quantum circuits that don’t capture the essence of the dataset.

Solution: Select the Right Encoding Method

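- For high-dimensional feature vectors, consider Amplitude Encoding, which stores 2^n values in the amplitudes of n qubits. Keep in mind that preparing such states can require deep circuits.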

- Use Angle Encoding for simplicity and better interpretability in smaller datasets.

- Test the circuit’s ability to distinguish data classes by simulating small inputs and measuring output probabilities.

Issue: Data Scaling Problems

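Rotation gates accept any real angle, but they are periodic (for example, measurement probabilities after a single rotation repeat every 2π). Unscaled features can therefore wrap around, so that very different inputs produce the same quantum state, or cluster in a narrow range where they are hard to distinguish.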

Solution: Normalize Data

- Use data normalization techniques like Min-Max scaling to map features to appropriate quantum ranges:

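import numpy as np

from sklearn.preprocessing import MinMaxScaler

# Map every feature into [0, π] so that rotation angles stay within half a period

scaler = MinMaxScaler(feature_range=(0, np.pi))

scaled_features = scaler.fit_transform(features)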
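To see why scaling matters, the following NumPy sketch uses the fact that a qubit rotated by RY(x) from |0> is measured as 1 with probability sin²(x/2). Raw values that differ by 2π become indistinguishable, while values scaled into [0, π] map to strictly increasing probabilities. The helper `min_max_scale` is a hand-written stand-in for a library scaler, and the raw values are made up.

```python
import numpy as np

def prob_one_after_ry(x):
    """P(measure 1) after RY(x) applied to |0>, which equals sin^2(x/2)."""
    return np.sin(np.asarray(x, dtype=float) / 2) ** 2

def min_max_scale(values, low=0.0, high=np.pi):
    """Linearly map values so that min -> low and max -> high."""
    values = np.asarray(values, dtype=float)
    span = values.max() - values.min()
    if span == 0:
        return np.full_like(values, low)
    return low + (values - values.min()) * (high - low) / span

# Illustrative raw feature values; the second is the first plus a full period
raw = np.array([0.5, 0.5 + 2 * np.pi, 4.0, 9.0])
print("unscaled:", prob_one_after_ry(raw))   # first two entries coincide

scaled = min_max_scale(raw)
print("scaled:  ", prob_one_after_ry(scaled))  # distinct, ordered like the inputs
```

Restricting angles to [0, π] rather than [0, 2π] keeps this particular mapping one-to-one. Other encodings may call for a different target range.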

5. Integration Challenges with Classical Models

Issue: Difficulty Combining Quantum and Classical Layers

Hybrid models can fail if data flow between quantum and classical components is inefficient or mismatched.

Solution: Use Compatible Frameworks

- Frameworks like PennyLane and TensorFlow Quantum simplify integration by providing end-to-end pipelines.

- Ensure output from the quantum component matches the expected format for classical layers (e.g., probability distributions or state vectors).
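One simple way to hand quantum results to a classical layer is to convert the measurement counts into a fixed-length probability vector. The sketch below assumes the counts arrive as a dictionary keyed by plain bitstrings such as `"01"`. The function name and the example counts are hypothetical.

```python
def counts_to_probabilities(counts, num_qubits):
    """Turn a {bitstring: count} dict into a list of 2**num_qubits probabilities.

    Outcomes that never occurred get probability 0, so the output length is
    always the same regardless of which bitstrings were observed.
    """
    total = sum(counts.values())
    if total == 0:
        raise ValueError("counts are empty")
    probabilities = []
    for index in range(2 ** num_qubits):
        bitstring = format(index, f"0{num_qubits}b")
        probabilities.append(counts.get(bitstring, 0) / total)
    return probabilities

# Hypothetical counts from a two-qubit circuit run with 1024 shots
example_counts = {"00": 498, "11": 526}
feature_vector = counts_to_probabilities(example_counts, num_qubits=2)
print(feature_vector)
```

A fixed-length vector like this can be fed to any classical classifier that expects numeric features.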

Issue: Bottleneck in Data Communication

Passing data back and forth between quantum circuits and classical processors can slow down the pipeline.

Solution: Minimize Data Transfers

- Batch operations to reduce communication overhead.

- Perform classical preprocessing before quantum computation, rather than alternating between the two.

6. Real-World Deployment Issues

Issue: Limited Access to Quantum Hardware

Access to quantum hardware may be constrained by queue times or provider limits.

Solution: Simulate First

Simulate your algorithms extensively before submitting them to quantum hardware to reduce debugging needs.

Issue: Inconsistent Results on Hardware

Quantum hardware introduces variability due to noise, leading to inconsistent results.

Solution: Use Repeated Runs

- Run circuits multiple times and average the results to account for noise.

- Use the shots parameter to specify the number of repetitions (tqc is the transpiled circuit):

result = backend.run(tqc, shots=1024).result()

counts = result.get_counts()
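The effect of the shot count can be explored without any quantum backend. This NumPy sketch samples outcomes from a fixed two-qubit distribution and compares the estimated probabilities against the true ones. The distribution, seed, and shot counts are arbitrary choices for illustration.

```python
import numpy as np

def sample_counts(probabilities, shots, rng):
    """Draw `shots` measurement outcomes and return the count per outcome."""
    return rng.multinomial(shots, probabilities)

def standard_error(p, shots):
    """Expected statistical error of an estimated probability p."""
    return np.sqrt(p * (1 - p) / shots)

rng = np.random.default_rng(seed=7)
true_probs = np.array([0.5, 0.0, 0.0, 0.5])  # ideal Bell-state distribution

for shots in (16, 1024, 65536):
    estimates = sample_counts(true_probs, shots, rng) / shots
    worst_error = np.abs(estimates - true_probs).max()
    print(f"shots={shots:6d}  worst error={worst_error:.4f}  "
          f"expected scale={standard_error(0.5, shots):.4f}")
```

The error shrinks roughly with the square root of the number of shots, so quadrupling the shots only halves the statistical noise.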

7. Learning Resources for Troubleshooting

Stay Updated

Quantum computing is an evolving field. Keep up with advancements via resources like:

- Qiskit Documentation

- PennyLane Tutorials

- Quantum Open Source Foundation

Seek Community Help

Join communities like:

- Quantum Stack Exchange

- IBM Quantum Slack or Discord groups.

With perseverance and the right strategies, you can overcome QML challenges and unlock its potential.

Detailed Debugging Example: Resolving Measurement Errors in a Quantum Classifier

Measurement errors are a common hurdle in Quantum Machine Learning (QML), especially when working with quantum classifiers. In this example, we’ll debug an issue where measurement results are inconsistent with expected outputs.

Problem: Unexpected Measurement Results

You’ve built a quantum classifier to distinguish two classes of data. After training, the model’s predictions don’t match expectations. Some measurements seem random, while others are skewed toward a single class.

Possible Causes

- Incorrect quantum circuit design.

- Noise in the quantum system or simulator.

- Misaligned data encoding or gate parameters.

Step 1: Verify Circuit Design

Visualize the Circuit

First, inspect the quantum circuit to ensure all gates, measurements, and data encodings are applied correctly:

from qiskit import QuantumCircuit

# Example: a two-qubit circuit that prepares an entangled (Bell) state

qc = QuantumCircuit(2)

qc.h(0) # Hadamard gate for superposition

qc.cx(0, 1) # CNOT gate for entanglement

qc.measure_all()

# Visualize the circuit

qc.draw('mpl')

Check for:

- Missing or extra gates.

- Correct measurement placement.

Fix: Add or adjust gates to ensure they align with the intended quantum states.

Step 2: Simulate Intermediate States

Measurement errors can stem from issues earlier in the circuit. Use statevector simulation to inspect intermediate quantum states:

from qiskit.quantum_info import Statevector

# Simulate and inspect the state just before measurement

# (measurements are stripped first, since they would collapse the state)

qc_no_meas = qc.remove_final_measurements(inplace=False)

statevector = Statevector.from_instruction(qc_no_meas)

print(statevector)

If states don’t match expectations (e.g., missing superposition or entanglement), debug the preceding gates.

Fix: Ensure initial states and transformations (gates) prepare the system as intended.

Step 3: Debug Data Encoding

Issue: Inconsistent Encoding

If data is improperly encoded, the model won’t differentiate classes effectively. Let’s debug an Angle Encoding example:

import numpy as np

# Example feature for encoding

feature = [0.5, 1.2]

# Circuit for angle encoding

qc = QuantumCircuit(2)

qc.rx(feature[0], 0)

qc.ry(feature[1], 1)

qc.measure_all()

Test Encoding:

- Simulate with a fixed input to verify the circuit.

- Cross-check if the encoded angles match the dataset features.

print("Encoded angles:", feature)

Fix: Normalize or preprocess features if they exceed the valid range for rotation gates.

from sklearn.preprocessing import MinMaxScaler

scaler = MinMaxScaler(feature_range=(0, np.pi))

normalized_features = scaler.fit_transform(dataset_features)

Step 4: Mitigate Noise

Use a Noise Model

Simulators like Aer can add realistic noise to replicate hardware behavior. Check if noise affects your circuit’s results:

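from qiskit import transpile

from qiskit_aer import AerSimulator

from qiskit_aer.noise import NoiseModel

# device_backend is a placeholder for a real-device (or fake-device) backend from your provider

noise_model = NoiseModel.from_backend(device_backend)

noisy_simulator = AerSimulator(noise_model=noise_model)

# Run the circuit with noise

result = noisy_simulator.run(transpile(qc, noisy_simulator), shots=1024).result()

print(result.get_counts())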

If noise significantly impacts accuracy, consider mitigation strategies:

- Use error mitigation tools (e.g., readout error correction).

- Reduce circuit depth to minimize exposure to noise.
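Readout error correction can be sketched for a single qubit with NumPy. A calibration matrix records how often each prepared state is read out as each outcome, and solving the resulting linear system recovers an estimate of the true probabilities. The error rates below are invented for illustration. Real workflows measure them on the device.

```python
import numpy as np

# Hypothetical readout error rates
p_read1_given0 = 0.03  # prepared |0>, read as 1
p_read0_given1 = 0.08  # prepared |1>, read as 0

# Column j holds the distribution of readouts when state j was prepared
calibration = np.array([
    [1 - p_read1_given0, p_read0_given1],
    [p_read1_given0, 1 - p_read0_given1],
])

def mitigate(measured_probs, calibration):
    """Estimate true probabilities from noisy ones via the calibration matrix."""
    corrected = np.linalg.solve(calibration, measured_probs)
    corrected = np.clip(corrected, 0, None)  # sampling noise can push values below 0
    return corrected / corrected.sum()

true_probs = np.array([0.3, 0.7])
noisy_probs = calibration @ true_probs   # what the noisy readout would report
recovered = mitigate(noisy_probs, calibration)

print("noisy:    ", noisy_probs)
print("recovered:", recovered)
```

With finite shots the recovery is only approximate, and the matrix grows exponentially with the number of qubits, so larger systems usually need approximations.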

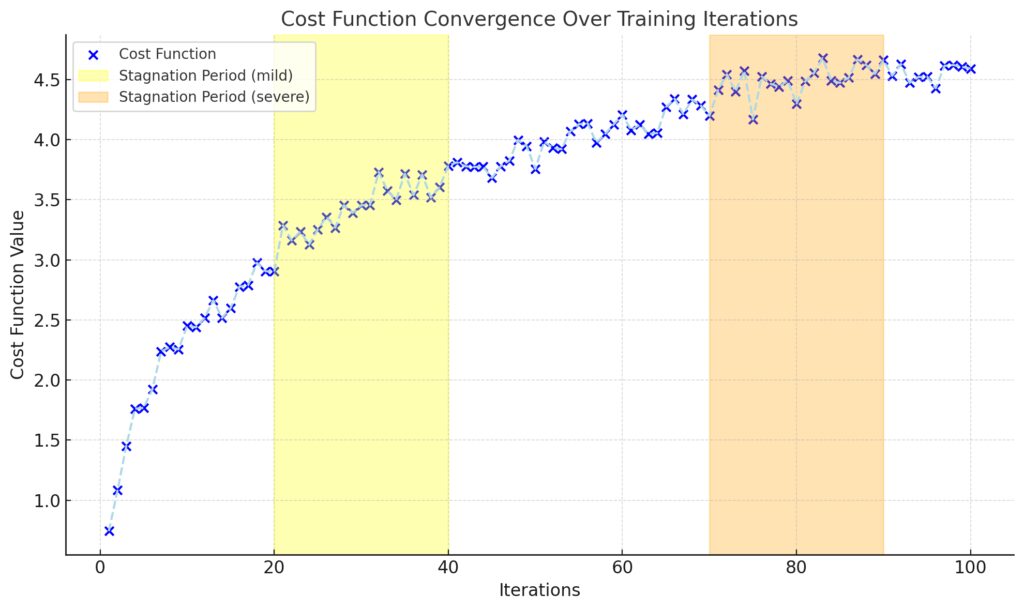

Step 5: Adjust Training Parameters

Issue: Inadequate Optimization

If the quantum classifier isn’t learning effectively, parameterized gates may not be optimized.

Check Training Logs

Track the loss or cost function during training. If it stagnates, try:

- Using a different optimizer (e.g., COBYLA or SPSA).

- Adjusting the learning rate for gradient-based optimizers.

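from qiskit_machine_learning.algorithms import VQC

# The optimizer import path depends on the installed version; older releases use qiskit_algorithms.optimizers

from qiskit_machine_learning.optimizers import SPSA

vqc = VQC(optimizer=SPSA(maxiter=100), ...)

vqc.fit(training_features, labels)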

Fix: Restart Training

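Reinitialize the parameters and train again. One common heuristic for reducing the risk of barren plateaus is to start from small angles, so the circuit begins close to the identity, rather than sampling uniformly over the full rotation range:

import numpy as np

# Draw small initial angles; num_params is the number of trainable parameters in the ansatz

initial_params = np.random.uniform(low=-0.1, high=0.1, size=(num_params,))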

Step 6: Cross-Check Results

Finally, verify the output probabilities to ensure the classifier distinguishes classes effectively. Use a larger number of shots to reduce randomness:

from qiskit import transpile

from qiskit_aer import AerSimulator

backend = AerSimulator()

result = backend.run(transpile(qc, backend), shots=8192).result()

counts = result.get_counts()

print(counts)

Fix: Refine parameters or encoding based on results, repeating Steps 2-5 as needed.

By systematically addressing circuit design, noise, data encoding, and training, you can isolate and resolve measurement errors in your quantum classifier.

Key Takeaways: Debugging Quantum Machine Learning

Debugging Quantum Machine Learning (QML) algorithms can be complex, but breaking it down into clear steps simplifies the process. Here are the key takeaways for resolving common issues:

1. Understand Your Tools and Frameworks

- Use platforms like Qiskit, PennyLane, and TensorFlow Quantum to simplify circuit design and debugging.

- Simulate extensively before running on real quantum hardware.

2. Debugging Quantum Circuits

- Visualize circuits to check for missing or misplaced gates.

- Use statevector simulators to examine intermediate states and identify issues early.

- Optimize circuits using Qiskit’s transpiler to reduce unnecessary complexity.

3. Handle Noise and Hardware Constraints

- Incorporate noise models in simulations to mimic real hardware behavior.

- Reduce circuit depth and qubit usage to minimize noise effects.

- Select high-fidelity qubits when running on actual quantum devices.

4. Data Encoding Matters

- Choose an appropriate encoding method (e.g., Angle Encoding or Amplitude Encoding) based on your dataset.

- Normalize or preprocess features to fit the input range of quantum gates.

5. Optimize Training Effectively

- Use parameterized gates and experiment with different optimizers like ADAM, COBYLA, or SPSA.

- Monitor the loss function during training to detect issues like barren plateaus or slow convergence.

- Adjust initial parameter values to improve optimization efficiency.

6. Evaluate Results Thoroughly

- Run multiple shots during measurement to reduce variability in output probabilities.

- Validate your quantum classifier’s accuracy by comparing it to classical benchmarks.

7. Leverage Learning Resources and Communities

- Stay updated with official documentation and tutorials from Qiskit, PennyLane, or TensorFlow Quantum.

- Join online forums like Quantum Stack Exchange or IBM Quantum Slack for expert advice.

The Big Picture

Troubleshooting QML requires patience, experimentation, and a working understanding of both quantum mechanics and machine learning principles. With the right strategies, even beginners can build small quantum models that behave reliably on simulators and, with care, on today's hardware.

Resources

1. “A Survey on Quantum Machine Learning: Current Trends and Challenges”

This comprehensive survey provides an in-depth overview of foundational concepts in quantum computing and their integration with machine learning. It discusses various QML algorithms and their applicability across different domains.

2. “Challenges and Opportunities in Quantum Machine Learning”

This paper highlights the distinctions between quantum and classical machine learning, focusing on quantum neural networks and deep learning. It also explores potential areas where QML could offer significant advantages.

3. “Quantum Machine Learning: A Review and Case Studies”

Offering a research path from fundamental quantum theory through QML algorithms, this review discusses basic algorithms that form the core of QML, providing insights from a computer scientist’s perspective.

4. “Quantum Machine Learning: From Physics to Software Engineering”

This review examines key approaches that can advance both quantum technologies and artificial intelligence, including quantum-enhanced algorithms that improve classical machine learning solutions.

5. “Systematic Literature Review: Quantum Machine Learning and Its Applications”

This systematic review analyzes papers published between 2017 and 2023, identifying and classifying various algorithms used in QML and their applications across different fields.

6. “Quantum Machine Learning on Near-Term Quantum Devices: Current State of the Art”

This paper reviews the progress in QML algorithms designed for near-term quantum devices, discussing their current capabilities and limitations.

7. “Quantum Machine Learning: A Comprehensive Review on Optimization of Classical Machine Learning”

This study explores how quantum computing can enhance classical machine learning functionalities, focusing on various learning models that incorporate quantum circuits.

8. “Quantum Machine Learning for Classical Data”

This dissertation investigates quantum algorithms for supervised machine learning that operate on classical data, aiming to understand the promises and limitations of current QML algorithms.

9. “Large-Scale Quantum Reservoir Learning with an Analog Quantum Computer”

This paper presents a scalable quantum reservoir learning algorithm implemented on neutral-atom analog quantum computers, demonstrating competitive performance across various machine learning tasks.

10. “MAQA: A Quantum Framework for Supervised Learning”

This work proposes a universal, efficient framework that can reproduce the output of various classical supervised machine learning algorithms using quantum computation advantages.

These resources offer a solid foundation for understanding the current landscape and future directions of Quantum Machine Learning. Engaging with these materials will provide deeper insights into both the theoretical and practical aspects of QML.
