As quantum computing edges closer to practical applications, one exciting frontier is the intersection between quantum computing and artificial intelligence.

Quantum Recurrent Neural Networks (Quantum RNNs) represent a promising fusion of quantum computing’s power and the sequential modeling capabilities of Recurrent Neural Networks (RNNs).

By bringing together these two advanced fields, researchers aim to create faster, more efficient models capable of tackling complex sequential tasks like language processing, time-series analysis, and financial forecasting.

In this article, we’ll explore the potential of Quantum RNNs, the unique advantages they offer, and the hurdles that researchers still face in making these powerful tools accessible for real-world applications.

Understanding Quantum Computing: A Brief Overview

What Is Quantum Computing?

At its core, quantum computing uses the principles of quantum mechanics to perform certain calculations that would take impractically long on traditional computers. Unlike classical computers, which use binary bits (0s and 1s), quantum computers use qubits. A qubit can exist in a weighted combination of 0 and 1, a property called superposition, and qubits can be correlated with one another through entanglement.

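Together, superposition and entanglement mean that a register of n qubits is described by 2^n amplitudes, and quantum gates act on all of those amplitudes at once. A measurement still returns only a single outcome, so quantum computers offer an advantage only for problems where an algorithm can exploit this structure, rather than for every task involving large datasets.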

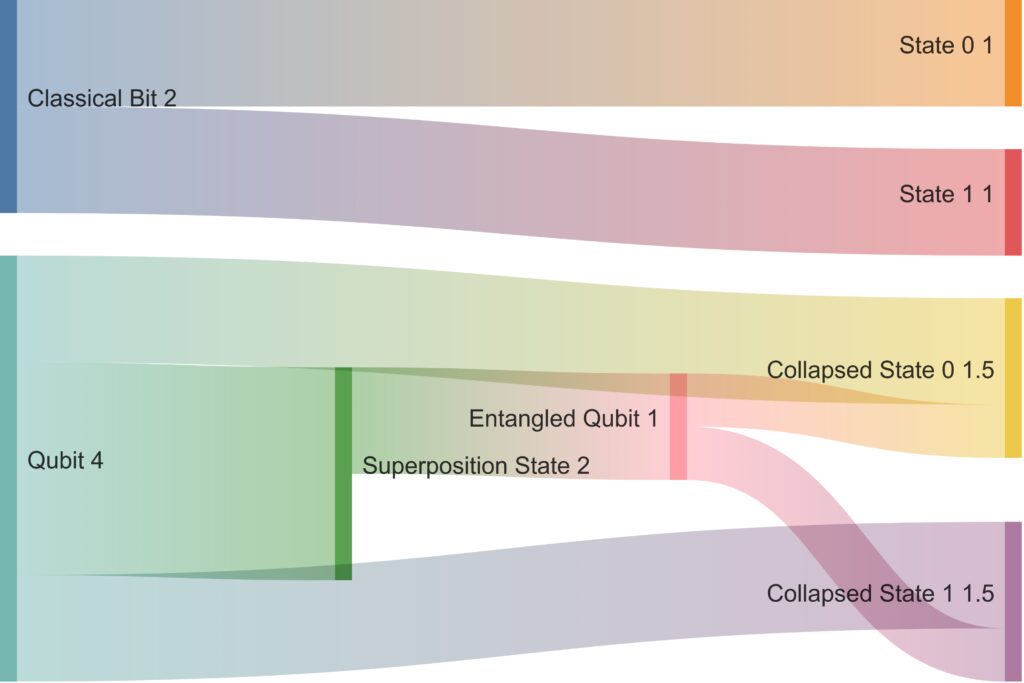

Classical Bit: Limited to two distinct states, 0 and 1.

Qubit: Exists in a superposition of states, represented as the “Superposition State,” which can collapse into either 0 or 1 upon measurement.

Quantum Entanglement: The qubit in superposition is linked to a second, entangled qubit. Their measurement outcomes are correlated in a way that has no counterpart in classical bits, and the joint state stays probabilistic until it is measured.
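The three states described above can be reproduced with a few lines of linear algebra. The following is a minimal NumPy sketch rather than a real quantum program: it stores state vectors as plain arrays, and the names `ket0`, `H`, `CNOT`, and `measurement_probabilities` are illustrative choices, not part of any quantum library.

```python
import numpy as np

# Single-qubit basis state |0> and the Hadamard gate.
ket0 = np.array([1.0, 0.0])
H = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)

# CNOT with the first qubit as control (basis order |00>, |01>, |10>, |11>).
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
], dtype=float)


def measurement_probabilities(state):
    """Born rule: each outcome's probability is the squared amplitude magnitude."""
    return np.abs(state) ** 2


# Superposition: H|0> gives equal chances of measuring 0 or 1.
plus = H @ ket0
single_probs = measurement_probabilities(plus)

# Entanglement: H on the first qubit followed by CNOT gives (|00> + |11>)/sqrt(2).
bell = CNOT @ np.kron(plus, ket0)
bell_probs = measurement_probabilities(bell)

print("one qubit :", single_probs)
print("two qubits:", bell_probs)
```

In the two-qubit result only the outcomes 00 and 11 have nonzero probability, which is the correlation the figure labels as entanglement: each qubit alone looks random, yet the two always agree.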

The Role of Qubits in Sequential Data Processing

One of the exciting applications of qubits is in sequential data processing—tasks that require analyzing data points in order, like language modeling or stock prediction. The ability of qubits to exist in superpositions means they can model probabilities and correlations in unique ways, enabling potentially more accurate or efficient sequential analysis.

As quantum processors continue to improve, they are opening doors to quantum-enhanced RNNs that could address previously unsolvable or highly resource-intensive problems.

What Makes RNNs Important in AI?

The Purpose of Recurrent Neural Networks (RNNs)

Recurrent Neural Networks, or RNNs, are specialized neural networks that excel at analyzing sequential data, where context and order matter. Unlike traditional neural networks, RNNs have “memory” of previous steps within a sequence, which allows them to retain and use information from earlier in the sequence to improve predictions.

RNNs are widely used in:

- Natural Language Processing (NLP) for tasks like language translation and sentiment analysis

- Financial forecasting to predict stock prices based on historical data

- Time-series analysis for understanding patterns in sequential data across various domains

However, despite their capabilities, traditional RNNs have notable limitations. Training these networks can be slow and computationally expensive, particularly as the length of the sequence grows. Additionally, they suffer from the vanishing gradient problem, where gradients become too small to support learning over long sequences.
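The vanishing gradient problem can be seen numerically in a toy network. The sketch below assumes a small tanh RNN whose recurrent weight matrix is an orthogonal matrix scaled by a hypothetical `gain` of 0.9, so every backward step can shrink the gradient by at least that factor. The function name and all parameter values are illustrative.

```python
import numpy as np


def backprop_gradient_norms(seq_len=50, hidden_size=8, gain=0.9, seed=0):
    """Spectral norm of d h_T / d h_{T-k} for k = 1..seq_len in a toy tanh RNN."""
    rng = np.random.default_rng(seed)
    # Orthogonal matrix scaled by `gain`: its largest singular value is `gain`.
    q, _ = np.linalg.qr(rng.standard_normal((hidden_size, hidden_size)))
    W = gain * q
    inputs = rng.standard_normal((seq_len, hidden_size))

    # Forward pass, storing each Jacobian d h_t / d h_{t-1} = diag(1 - h_t^2) @ W.
    h = np.zeros(hidden_size)
    jacobians = []
    for x_t in inputs:
        h = np.tanh(W @ h + x_t)
        jacobians.append((1.0 - h ** 2)[:, None] * W)

    # Backward pass: chain the Jacobians from the last step towards the first.
    grad = np.eye(hidden_size)
    norms = []
    for jac in reversed(jacobians):
        grad = grad @ jac
        norms.append(np.linalg.norm(grad, 2))
    return norms


norms = backprop_gradient_norms()
print(f"1 step back: {norms[0]:.3e}")
print(f"{len(norms)} steps back: {norms[-1]:.3e}")
```

Because each Jacobian has norm at most `gain`, the gradient reaching a step k positions back is bounded by `gain ** k`, which is why early inputs in a long sequence receive almost no learning signal.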

Quantum RNNs: A Potential Solution for RNN Limitations

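The idea behind Quantum RNNs is to use quantum computing to overcome some of these RNN limitations. Since quantum states can represent certain complex relationships compactly, Quantum RNNs could in principle reduce computation time and handle longer sequences. Whether they avoid vanishing gradients remains an open question: unitary quantum operations preserve the norm of the state they act on, which may help, but parameterized quantum circuits have a known trainability problem of their own, called barren plateaus, in which gradients shrink as circuits grow.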

How Quantum RNNs Work: Key Concepts

Quantum Circuits and RNN Architecture

Quantum RNNs incorporate quantum circuits as part of their architecture. In a basic sense, a quantum circuit consists of quantum gates that manipulate qubits and help to process input data. By feeding sequential data through these circuits, Quantum RNNs can effectively model dependencies in time-series data, just as classical RNNs do.

These quantum circuits typically consist of:

- Parameterized quantum gates whose rotation angles are adjusted during training, allowing the network to learn from input sequences

- Entanglement operations to enable connections between qubits, simulating the way neurons in traditional RNNs are interconnected

Through this design, Quantum RNNs can capture complex relationships and process information in ways traditional RNNs struggle to match.
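The two ingredients listed above, parameterized gates and an entangling operation, can be sketched as a tiny two-qubit circuit simulated with NumPy. This is an illustrative toy under stated assumptions, not a published Quantum RNN architecture: the choice of RY rotations, the gate ordering, and the function name `circuit_expectation` are all hypothetical.

```python
import numpy as np

I2 = np.eye(2)
Z = np.diag([1.0, -1.0])
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
], dtype=float)  # first qubit is the control


def ry(angle):
    """Single-qubit rotation about the Y axis."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])


def circuit_expectation(x, thetas):
    """Encode x, apply trainable rotations, entangle, and return <Z> on the second qubit."""
    state = np.zeros(4)
    state[0] = 1.0                                          # start in |00>
    state = np.kron(ry(x), I2) @ state                      # data encoding on the first qubit
    state = np.kron(ry(thetas[0]), ry(thetas[1])) @ state   # trainable rotations
    state = CNOT @ state                                    # entangling gate
    return float(state @ np.kron(I2, Z) @ state)            # value in [-1, 1]


print(circuit_expectation(0.7, [0.0, 0.0]))
print(circuit_expectation(0.7, [0.4, -0.2]))
```

With both trainable angles set to zero the output reduces to cos(x), so the second qubit's reading depends on the input only through the entangling gate. Changing `thetas` changes that mapping, which is what training adjusts.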

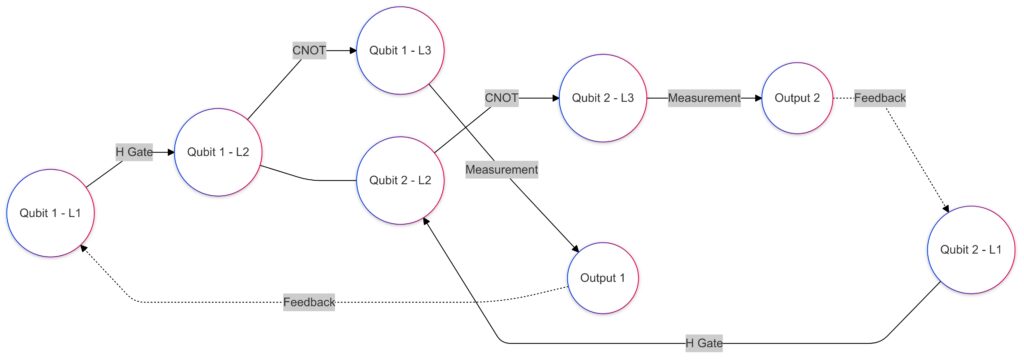

Layers:

Input Layer: Initial qubits enter with an H gate operation.

Intermediate Layer: Includes CNOT gates and an entanglement connection between qubits.

Output Layer: Each qubit undergoes measurement, generating outputs.

Feedback Loops: Arrows indicating feedback from the output layer back to the input layer, simulating memory in the RNN.
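The feedback loop in the figure can be sketched as a recurrent cell that runs a small circuit once per time step and feeds its measured output back in. The design below is a hypothetical simplification for illustration: the memory qubit is re-prepared at every step from a classical number, which discards quantum coherence between steps, and the names `qrnn_step` and `run_sequence` are invented here.

```python
import numpy as np

I2 = np.eye(2)
Z = np.diag([1.0, -1.0])
CNOT = np.array([
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
], dtype=float)  # first qubit (input) controls the second (memory)


def ry(angle):
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])


def qrnn_step(x_t, h_prev, params):
    """One time step: load input and previous hidden value, entangle, read out memory."""
    state = np.zeros(4)
    state[0] = 1.0
    # Input qubit encodes x_t; memory qubit encodes the previous hidden value.
    state = np.kron(ry(x_t), ry(np.pi * h_prev)) @ state
    # Trainable rotations shared across all time steps, as in a classical RNN.
    state = np.kron(ry(params[0]), ry(params[1])) @ state
    state = CNOT @ state
    # New hidden value: expectation of Z on the memory qubit, in [-1, 1].
    return float(state @ np.kron(I2, Z) @ state)


def run_sequence(xs, params, h0=0.0):
    """Apply the same cell to each element of the sequence, carrying h forward."""
    h, history = h0, []
    for x_t in xs:
        h = qrnn_step(x_t, h, params)
        history.append(h)
    return history


print(run_sequence([0.1, 0.9, 0.4], params=[0.3, -0.5]))
```

The key point is weight sharing: `params` stays fixed across steps while `h` changes, so each output depends on the whole history of inputs seen so far.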

Entanglement and Superposition for Sequential Data

The entanglement of qubits allows Quantum RNNs to model relationships between data points in the sequence more naturally. When qubits are entangled, measurement outcomes on one are correlated with outcomes on the other, even if the qubits are far apart, although this correlation cannot be used to send information. This feature can be leveraged to model dependencies across distant points in the data, which is particularly useful for tasks like long-form language generation.

Superposition, meanwhile, allows a quantum state to hold amplitudes for many possible outcomes at once. This could make Quantum RNNs more efficient than classical RNNs for some tasks, but because a measurement yields only one outcome, any speedup depends on designing circuits in which the useful answers become the likely ones.

Hybrid Quantum-Classical Models

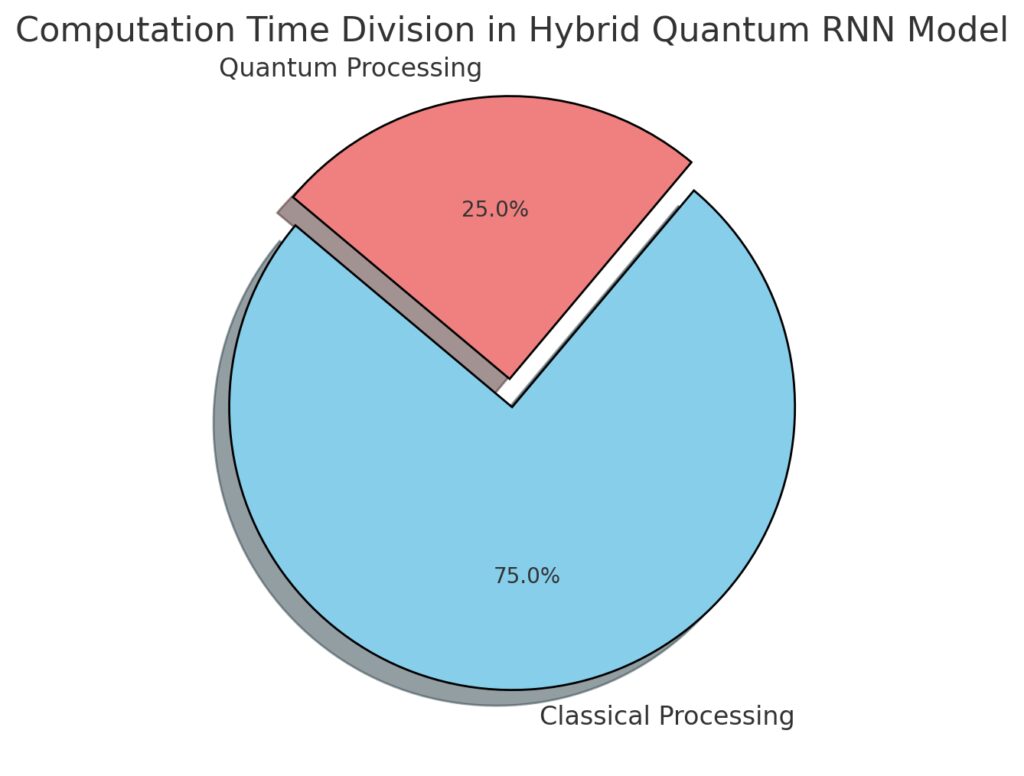

In practice, Quantum RNNs are often used in hybrid quantum-classical models. These systems integrate both quantum and classical computing components, utilizing quantum circuits to handle the most computationally intensive parts while relying on classical processing for other tasks. Hybrid models enable practical experimentation on current quantum computers, which are still in the NISQ (Noisy Intermediate-Scale Quantum) phase, meaning they are powerful but also prone to errors.

Classical Processing: Occupies a larger segment (75%), showing the current dependency on classical resources.

Quantum Processing: A smaller, but highlighted, segment (25%) to emphasize its critical role despite current hardware limitations.

This visualization illustrates how computational load is shared, with quantum processing essential yet constrained by available quantum technology.
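The division of labor in a hybrid model can be sketched as a forward pass with three stages: classical pre-processing, a quantum layer, and classical post-processing. In the example below the quantum layer is simulated with NumPy, one qubit per feature, and every name and weight (`quantum_layer`, `hybrid_forward`, `W_in`, `w_out`) is a hypothetical placeholder rather than an API from any framework.

```python
import numpy as np


def quantum_layer(angles):
    """Simulated quantum stage: for each angle, prepare RY(angle)|0> and return <Z>."""
    outputs = []
    for angle in angles:
        amp0, amp1 = np.cos(angle / 2), np.sin(angle / 2)
        outputs.append(amp0 ** 2 - amp1 ** 2)   # P(0) - P(1)
    return np.array(outputs)


def hybrid_forward(x, W_in, b_in, w_out, b_out):
    """Classical -> quantum -> classical forward pass."""
    angles = W_in @ x + b_in          # classical: map features to rotation angles
    q_out = quantum_layer(angles)     # quantum: run the (simulated) circuit
    return float(w_out @ q_out + b_out)  # classical: combine measured values


# Illustrative weights: 3 input features mapped onto 2 qubits.
W_in = np.array([[0.5, -0.2, 0.1],
                 [0.3, 0.8, -0.6]])
b_in = np.zeros(2)
w_out = np.array([1.5, -0.5])
b_out = 0.1

print(hybrid_forward(np.array([0.2, 0.4, 0.6]), W_in, b_in, w_out, b_out))
```

On real hardware only `quantum_layer` would run on the quantum processor. Everything else, including the optimizer that updates the weights, would stay on a classical machine, which is why the classical share of the workload remains large.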

Applications of Quantum RNNs: Where They Could Shine

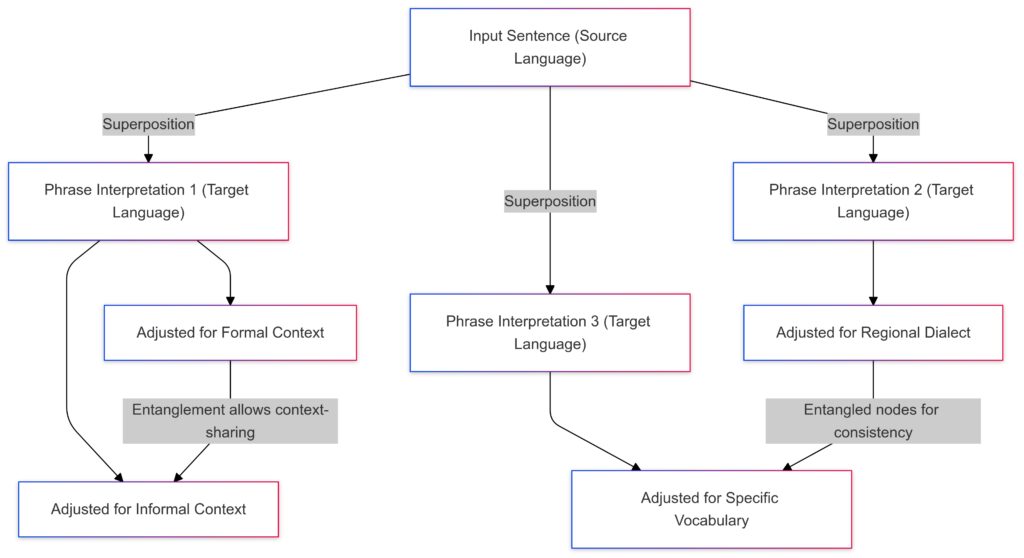

Advanced Natural Language Processing

One of the most promising areas for Quantum RNNs is Natural Language Processing. Language models benefit immensely from understanding context, long-term dependencies, and probability, all of which quantum models may be well suited to represent. In Quantum RNNs, the entanglement between qubits could capture these dependencies more accurately, resulting in better understanding and generation of language.

Root Node: Represents the input sentence in the source language.

Branches: Show different phrase interpretations in the target language, made possible through quantum superposition.

Contextual Adjustments: Nodes on each branch represent adjustments based on formal, informal, dialectal, or vocabulary-specific contexts.

Quantum Entanglement: Links between nodes (e.g., formal and informal adjustments) illustrate the ability to share contextual information across interpretations, enhancing consistency.

For example, Quantum RNNs might improve machine translation models, enabling more accurate translations by capturing subtle contextual cues that classical RNNs may miss. Additionally, they could play a significant role in summarizing large texts, generating coherent responses in conversational AI, and advancing other NLP applications.

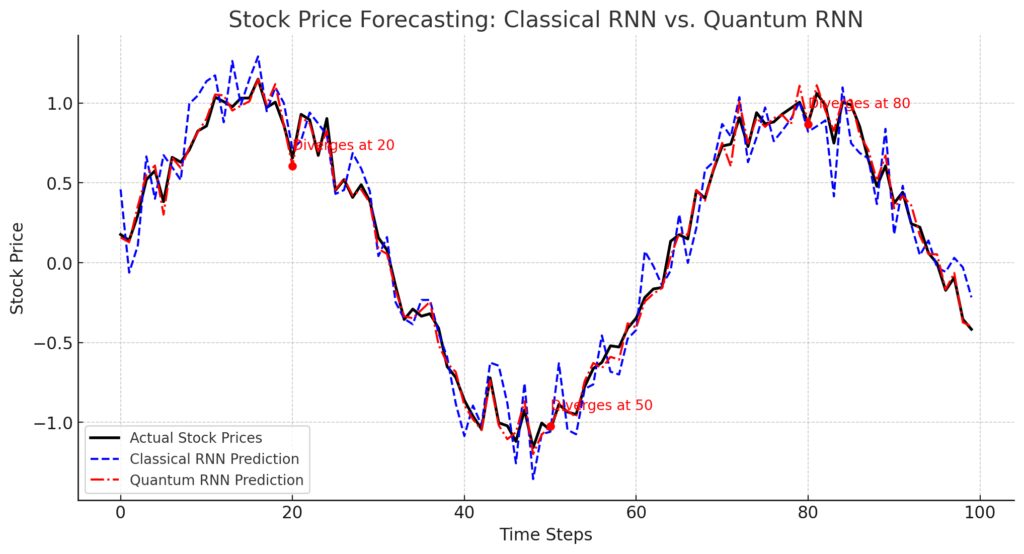

Time-Series Analysis for Financial Forecasting

In finance, time-series forecasting is critical for predicting stock prices, market trends, and economic indicators. Quantum RNNs could improve the accuracy of these predictions by analyzing complex, nonlinear patterns in historical data. This is especially valuable in stock market analysis, where traditional RNNs often struggle due to the sheer volume and complexity of the data involved.

Black Line: Actual stock prices.

Blue Dashed Line: Predictions from the Classical RNN, which show lag and reduced adaptability to trends.

Red Dotted Line: Predictions from the Quantum RNN, demonstrating better alignment with actual stock prices.

Divergence Points: Marked where the Quantum RNN diverges from the Classical RNN and better captures market trends, illustrating its enhanced ability to adapt to changing patterns.

With quantum enhancements, financial institutions could develop models that detect patterns faster and adapt more dynamically to changing market conditions. The improved processing speed could allow for real-time analysis and forecasting, a major advantage in fast-paced markets.

Enhanced Performance in Scientific Research

Another area where Quantum RNNs could make a big difference is in scientific research, particularly fields involving sequential data, such as genomics and climate science. These fields rely on analyzing lengthy data sequences and identifying subtle trends or patterns. Quantum RNNs, with their enhanced sequential modeling capacity, could accelerate research by providing more accurate and faster models for complex tasks like gene-sequence analysis or climate pattern prediction.

For example, in genomics, Quantum RNNs could help model the relationships between genes and predict how certain genetic sequences impact health, while in climate science, they could assist in forecasting extreme weather events based on historical climate data.

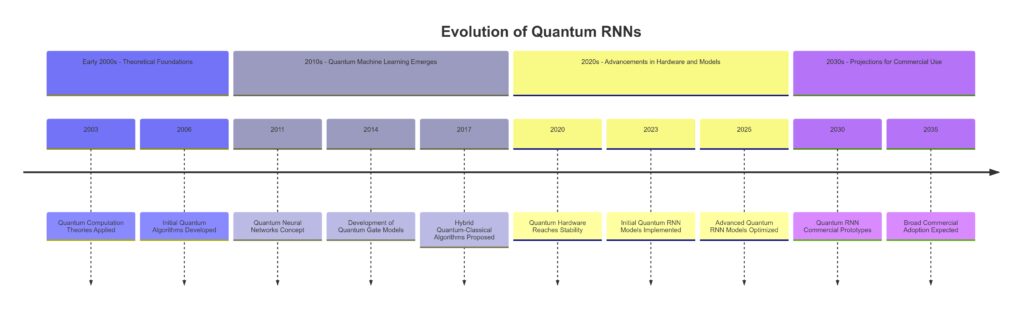

Challenges and Future Directions for Quantum RNNs

Scalability and Error Correction

Quantum computers today are still in an experimental phase, with many limitations related to scalability and error rates. As Quantum RNNs rely on entangled qubits, they can be sensitive to errors, which can affect the accuracy of the model. For Quantum RNNs to become practically useful, researchers need to develop better error correction techniques and ensure that qubits remain stable over longer periods.
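The basic idea behind error correction, trading extra qubits for a lower error rate, can be illustrated with the three-bit repetition code. The sketch below is a classical simulation under simplifying assumptions: it models only independent bit flips with a hypothetical probability `p` and decodes by majority vote. Real quantum error correction must also handle phase errors and must detect errors without directly reading the protected data.

```python
import numpy as np


def logical_error_rate(p):
    """Majority vote over 3 copies fails when 2 or 3 of them flip."""
    return 3 * p ** 2 * (1 - p) + p ** 3


def simulate_logical_error_rate(p, trials=200_000, seed=0):
    """Monte Carlo check: flip each of 3 bits with probability p, then majority-vote."""
    rng = np.random.default_rng(seed)
    flips = rng.random((trials, 3)) < p
    failures = flips.sum(axis=1) >= 2
    return float(failures.mean())


p = 0.1
print(f"physical error rate : {p}")
print(f"logical (analytic)  : {logical_error_rate(p):.4f}")
print(f"logical (simulated) : {simulate_logical_error_rate(p):.4f}")
```

For small `p` the logical error rate scales roughly as 3p², so encoding helps only when the physical error rate is already low. This is one reason hardware quality and error correction have to improve together.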

Early 2000s – Theoretical Foundations: Initial quantum computation theories and algorithms.

2010s – Quantum Machine Learning: Quantum neural networks and gate model developments.

2020s – Hardware and Model Advances: Stable quantum hardware and initial Quantum RNN implementations.

2030s – Commercial Projections: Expected broad adoption and commercialization of Quantum RNNs.

Quantum Hardware Limitations

Quantum RNNs also depend on advanced quantum hardware, which remains limited. Currently, most quantum processors have a relatively small number of qubits, restricting the size and complexity of models that can be run. As quantum hardware improves and more qubits become available, we may see an explosion in the potential applications of Quantum RNNs.

Learning Algorithms and Frameworks

Developing effective learning algorithms for Quantum RNNs is an ongoing challenge. Traditional machine learning frameworks, like TensorFlow and PyTorch, are not designed to work seamlessly with quantum computers. Fortunately, quantum computing libraries like Qiskit and PennyLane are emerging to bridge this gap, but further development is needed to make Quantum RNNs more accessible for widespread research and commercial applications.

As quantum computing advances, Quantum RNNs could transform industries by providing unprecedented accuracy, speed, and scalability in sequential data modeling.

Quantum RNNs in Action: Practical Examples

Machine Translation with Quantum RNNs

Machine translation is one of the most challenging tasks in Natural Language Processing (NLP), especially when translating complex languages with nuanced structures. Quantum RNNs could offer a unique advantage here by capturing long-range dependencies between words more effectively. For instance, understanding a phrase in Japanese and translating it accurately to English often requires context that spans several sentences.

With quantum entanglement, Quantum RNNs could handle these dependencies more efficiently. By capturing broader contexts with fewer computational resources, they could potentially improve translation quality while reducing processing time. For now, this remains an area of research rather than a deployed technology.

Financial Modeling and Risk Analysis

In the financial sector, predicting market trends and assessing risk are both critical and challenging tasks. Quantum RNNs can be valuable tools for financial analysts and hedge fund managers who rely on accurate forecasting models. These networks are particularly suited for scenarios where decisions hinge on intricate patterns across time, such as asset price movements or credit risk analysis.

By encoding historical market data in quantum states, Quantum RNNs could analyze multiple variables at once, potentially finding relationships that traditional models might overlook. Banks and financial institutions are looking into quantum-based financial models for both risk management and algorithmic trading, envisioning tools that respond faster to market changes with minimal lag.

Quantum RNNs in Climate Modeling

Climate science is another domain where sequential data modeling is crucial. From tracking temperature changes to studying rainfall patterns, climate models are increasingly complex and require long-term data processing. Quantum RNNs could bring significant improvements in predicting climate trends, simulating potential climate change impacts, and even forecasting extreme weather events.

For instance, by combining Quantum RNNs with existing climate models, researchers could potentially accelerate climate simulations and improve accuracy. The additional processing power of Quantum RNNs would make it feasible to model more extensive datasets, improving predictions that inform climate policy decisions and disaster preparedness.

Technical Challenges and Research Opportunities

Limited Quantum Memory and Data Encoding

One technical challenge in Quantum RNNs lies in data encoding. Quantum data encoding is more complex than the standard numerical encoding used in traditional RNNs. When converting real-world data into quantum states, researchers face challenges in maintaining data fidelity and ensuring compatibility with quantum circuits.
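Two commonly discussed ways of loading classical data into quantum states are angle encoding and amplitude encoding. The NumPy sketch below builds the resulting state vectors directly. The function names are illustrative, and on real hardware preparing an arbitrary amplitude-encoded state requires its own, potentially deep, circuit.

```python
import numpy as np


def angle_encode(features):
    """One qubit per feature: each value x becomes RY(x)|0> = [cos(x/2), sin(x/2)]."""
    state = np.array([1.0])
    for x in features:
        qubit = np.array([np.cos(x / 2), np.sin(x / 2)])
        state = np.kron(state, qubit)
    return state


def amplitude_encode(values):
    """Pack values into the amplitudes of ceil(log2(len)) qubits, padded and normalized."""
    values = np.asarray(values, dtype=float)
    n_qubits = max(1, int(np.ceil(np.log2(len(values)))))
    padded = np.zeros(2 ** n_qubits)
    padded[: len(values)] = values
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("cannot encode an all-zero vector")
    return padded / norm


print(angle_encode([0.0, np.pi]))        # two qubits for two features
print(amplitude_encode([3.0, 4.0]))      # one qubit for two values
print(amplitude_encode([1.0, 1.0, 1.0])) # two qubits, last amplitude is padding
```

The trade-off shows up in the sizes: angle encoding needs n qubits for n features, while amplitude encoding needs only about log2(n) qubits but discards the overall scale of the data during normalization, which is one of the fidelity concerns mentioned above.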

Additionally, qubits have limited coherence times. In other words, they can hold quantum information only briefly before interactions with the environment degrade it, which can lead to data loss during processing. Extending coherence is a major research focus, as it will determine the feasibility of using Quantum RNNs for applications requiring long sequence retention.
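Loss of coherence can be illustrated with a simple dephasing model, in which the off-diagonal elements of a qubit's density matrix decay exponentially over time. The sketch below assumes this idealized model and a hypothetical coherence time of 100 microseconds. Actual devices have additional noise processes, and their timescales vary widely.

```python
import numpy as np

X = np.array([[0.0, 1.0], [1.0, 0.0]])


def plus_state_density_matrix():
    """Density matrix of the superposition (|0> + |1>)/sqrt(2)."""
    plus = np.array([1.0, 1.0]) / np.sqrt(2)
    return np.outer(plus, plus)


def dephase(rho, elapsed, t2):
    """Idealized dephasing: off-diagonal terms shrink by exp(-elapsed / t2)."""
    decay = np.exp(-elapsed / t2)
    out = rho.copy()
    out[0, 1] *= decay
    out[1, 0] *= decay
    return out


def expectation_x(rho):
    """<X> = Tr(rho X): equals 1 for a perfect |+> state, 0 once coherence is gone."""
    return float(np.trace(rho @ X).real)


T2_MICROSECONDS = 100.0  # hypothetical coherence time
for elapsed in (0.0, 50.0, 100.0, 300.0):
    rho = dephase(plus_state_density_matrix(), elapsed, T2_MICROSECONDS)
    print(f"t = {elapsed:5.1f} us  <X> = {expectation_x(rho):.3f}")
```

The diagonal entries, and therefore the 0/1 measurement probabilities, are unchanged. What decays is the phase relationship between the two basis states, which is exactly the information a quantum circuit relies on. A long input sequence therefore has to be processed within this time budget.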

Cost and Accessibility of Quantum Technology

Another significant barrier is the cost of quantum hardware. Quantum computers are not widely available, and their operational costs are high. This limited accessibility creates a bottleneck for widespread experimentation and adoption of Quantum RNNs, as only a handful of research institutions and tech giants currently have access to cutting-edge quantum systems.

That said, recent advancements in cloud-based quantum computing are making these resources more accessible. Platforms like IBM Quantum and Google Quantum AI now offer cloud access to quantum processors, allowing researchers to experiment with Quantum RNNs without needing in-house quantum hardware.

Developing Quantum-Optimized Learning Algorithms

The need for quantum-optimized algorithms is pressing, as most machine learning techniques developed for classical RNNs don’t translate directly to quantum computing. For instance, gradient descent, a common optimization technique in neural networks, is harder to apply to Quantum RNNs because gradients cannot be read off directly: they must be estimated from repeated measurements of the circuit, and those estimates are affected by both sampling noise and hardware noise.

New quantum-friendly algorithms, such as variational quantum algorithms, are being explored to address these issues. These algorithms seek to adapt traditional learning processes to quantum environments, improving the accuracy and speed of Quantum RNN training. Researchers in this field are also working on quantum regularization techniques to help Quantum RNNs generalize better and avoid overfitting.
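One technique widely used in variational quantum algorithms is the parameter-shift rule, which obtains a gradient by evaluating the same circuit at two shifted parameter values. The sketch below applies it to a single simulated qubit, where the expectation of Z after RY(theta) is cos(theta). The optional `shots` argument mimics measurement noise, and the learning rate, step count, and function names are illustrative assumptions.

```python
import numpy as np


def expectation_z(theta, shots=None, rng=None):
    """<Z> after RY(theta)|0>: exact if shots is None, else estimated by sampling."""
    p0 = np.cos(theta / 2) ** 2              # probability of measuring 0
    if shots is None:
        return 2 * p0 - 1                    # equals cos(theta)
    if rng is None:
        rng = np.random.default_rng()
    zeros = rng.binomial(shots, p0)          # number of 0 outcomes in `shots` runs
    return 2 * zeros / shots - 1


def parameter_shift_gradient(theta, **kwargs):
    """d<Z>/d(theta) from two circuit evaluations shifted by +/- pi/2."""
    shift = np.pi / 2
    plus = expectation_z(theta + shift, **kwargs)
    minus = expectation_z(theta - shift, **kwargs)
    return 0.5 * (plus - minus)


def train(target, theta=0.1, lr=0.2, steps=200, **kwargs):
    """Gradient descent on the squared error between <Z> and a target value."""
    for _ in range(steps):
        error = expectation_z(theta, **kwargs) - target
        theta -= lr * 2 * error * parameter_shift_gradient(theta, **kwargs)
    return theta


theta = train(target=0.0)
print(f"trained theta = {theta:.4f}, <Z> = {expectation_z(theta):.6f}")

noisy = parameter_shift_gradient(0.3, shots=1000, rng=np.random.default_rng(0))
print(f"exact gradient = {-np.sin(0.3):.4f}, 1000-shot estimate = {noisy:.4f}")
```

The classical optimizer never sees the quantum state. It only receives measured expectation values, so the quality of each gradient step depends on how many shots are spent per evaluation.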

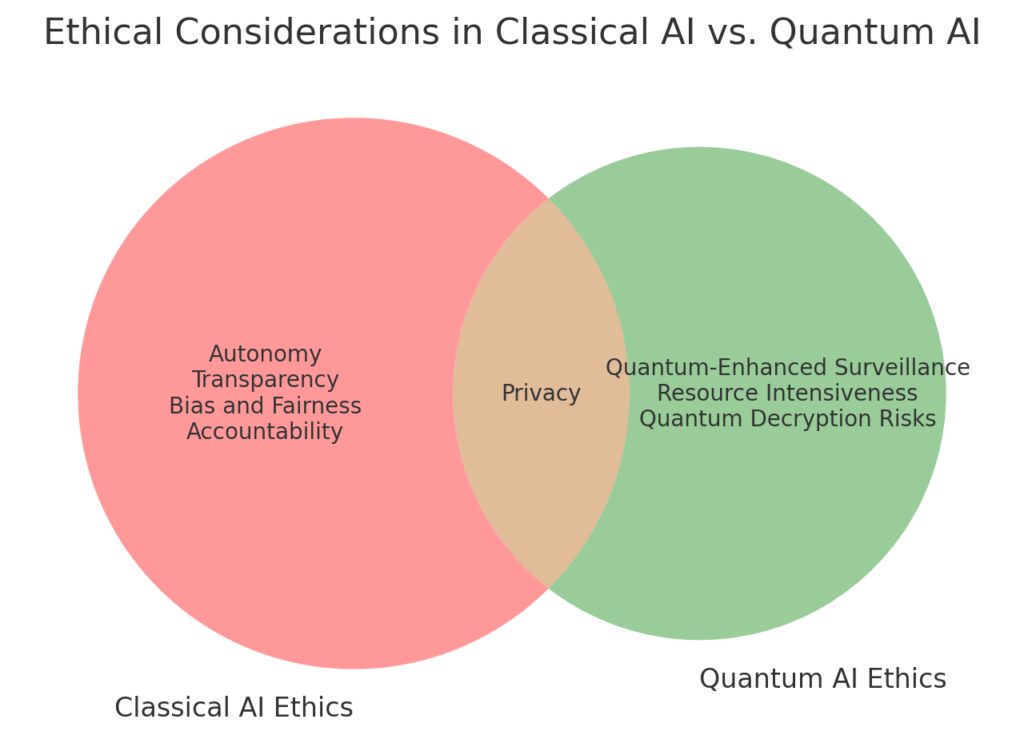

Ethical and Security Considerations in Quantum AI

As with any powerful technology, Quantum RNNs raise questions around ethics and security. A sufficiently large quantum computer could break some widely used public-key encryption schemes, raising concerns around data security. Quantum-enhanced AI models might also make it easier to track and interpret personal data in new ways, requiring careful attention to data privacy standards.

Unique to Classical AI: Bias and fairness, transparency, accountability, and autonomy.

Unique to Quantum AI: Quantum decryption risks, quantum-enhanced surveillance, and resource intensiveness.

Overlapping Concerns: Privacy, highlighting shared issues around data protection and user confidentiality.

This visualization emphasizes both shared and unique ethical challenges, highlighting new concerns arising from the capabilities of Quantum AI.

Moreover, the advent of Quantum RNNs could lead to job displacement in roles where sequential data analysis is prevalent. As with many AI advancements, ethical considerations should guide the development and deployment of Quantum RNNs to ensure they are used responsibly.

Future of Quantum RNNs: Possibilities and Predictions

Expanding Beyond Research to Real-World Applications

As quantum hardware becomes more sophisticated and accessible, Quantum RNNs are expected to move beyond experimental research and into real-world applications. Industries like healthcare, finance, and climate science may soon benefit from Quantum RNNs that can solve data-heavy problems with greater accuracy and efficiency.

Quantum RNNs could also enhance real-time decision-making systems. For example, in healthcare, Quantum RNNs might eventually be used to monitor patient data continuously and predict critical health events before they happen. Similarly, smart cities could use Quantum RNNs for traffic forecasting and energy grid optimization, improving urban management and reducing resource consumption.

Transforming Machine Learning and AI Capabilities

Perhaps most significantly, Quantum RNNs hold the potential to transform machine learning and artificial intelligence as a whole. By providing new ways to handle complex, sequential data, these networks could lead to breakthroughs in modeling human language, predicting scientific phenomena, and simulating biological processes.

In the coming years, as Quantum RNNs evolve, they may become fundamental to next-gen AI systems, enabling us to solve problems that today’s technology can barely approach. With each advance in quantum hardware, we’re one step closer to realizing the incredible potential of Quantum RNNs and reshaping how we analyze, predict, and interact with the world around us.

Conclusion: Quantum RNNs and the Future of AI

Quantum RNNs represent a compelling fusion of quantum computing’s power with the sequential modeling strength of RNNs. By harnessing superposition and entanglement, Quantum RNNs hold the potential to revolutionize language processing, financial forecasting, and scientific research.

While challenges remain, advancements in quantum hardware and algorithms bring us closer to leveraging these models in the real world. As this field progresses, Quantum RNNs could reshape the future of AI and enable groundbreaking applications in data-heavy fields, pushing the boundaries of what’s computationally possible.

FAQs

How does quantum computing enhance RNNs?

Quantum computing uses qubits that can exist in superpositions of 0 and 1, unlike classical bits limited to 0 or 1. This may allow Quantum RNNs to model complex relationships and dependencies in sequential data more efficiently than classical RNNs. For example, some proposed Quantum RNN designs aim to handle long sequences with less exposure to the vanishing gradients that traditional RNNs often face, though this has not yet been demonstrated at scale.

What are the key applications of Quantum RNNs?

Quantum RNNs have several potential applications, especially in fields that rely on sequential data analysis. Key areas include Natural Language Processing (NLP), such as language translation and text generation, financial forecasting for stock price predictions, and climate science for long-term weather modeling. As Quantum RNNs improve, they may expand to applications in healthcare, genomics, and energy management.

What challenges do Quantum RNNs face?

Several challenges stand in the way of fully realizing Quantum RNNs, including limited quantum hardware, high error rates, and data encoding issues. Quantum computers today are still prone to noise, and scaling them up for large applications is costly. Additionally, quantum data encoding and stable qubit memory retention remain complex technical hurdles.

Are there ethical concerns with Quantum RNNs?

Yes, as with any powerful technology, Quantum RNNs raise ethical concerns. These include data privacy and security risks, given the potential of quantum computing to break traditional encryption. Additionally, the efficiency and power of Quantum RNNs may have significant societal impacts, such as potential job displacement in roles involving data analysis and forecasting.

What is the future outlook for Quantum RNNs?

As quantum technology advances, the outlook for Quantum RNNs is promising. They could become integral to next-generation AI models, enabling breakthroughs in fields like healthcare, finance, and climate research. However, achieving this requires further improvements in quantum hardware, accessible platforms, and quantum-compatible learning algorithms.

Are Quantum RNNs available for commercial use?

Currently, Quantum RNNs are primarily in the research phase and are not widely available for commercial use. Some companies and research institutions are experimenting with hybrid quantum-classical models on cloud-based quantum platforms. As quantum hardware becomes more accessible, we can expect an increase in experimental and eventually commercial applications.

How do Quantum RNNs compare to classical RNNs in terms of performance?

Quantum RNNs have the potential to outperform classical RNNs in specific tasks involving complex dependencies in sequential data, thanks to quantum properties like superposition and entanglement. While classical RNNs struggle with long-range dependencies and face issues like the vanishing gradient problem, Quantum RNNs could manage these more effectively. However, because quantum hardware is still developing, actual performance comparisons are limited and largely theoretical for now.

Are there any real-world examples of Quantum RNN applications?

Although Quantum RNNs are mostly in the experimental phase, research studies have explored their use in areas such as machine translation, stock market prediction, and climate modeling, typically with small hybrid quantum-classical models run on simulators or cloud quantum platforms such as those offered by IBM and Google. These early projects provide a glimpse into what Quantum RNNs might achieve as the technology matures.

Can Quantum RNNs be trained like classical RNNs?

Training Quantum RNNs requires different techniques than those used in classical RNNs due to the quantum properties of qubits and their sensitivity to environmental factors. Common machine learning techniques like gradient descent are challenging to implement directly in quantum environments. New methods, like variational quantum algorithms and quantum circuit learning, are being developed to help train Quantum RNNs effectively within quantum constraints.

What are hybrid quantum-classical models, and why are they important?

Hybrid quantum-classical models combine quantum circuits with classical processing to create a model that leverages the strengths of both computing types. These hybrids allow researchers to test Quantum RNNs on quantum processors while handling parts of the computation on classical hardware, making them more feasible for current technology. Hybrid models are essential as they allow Quantum RNN research to progress without waiting for fully error-corrected, large-scale quantum computers.

What role do quantum gates play in Quantum RNNs?

In Quantum RNNs, quantum gates are operations that manipulate qubits, changing their state based on the sequential data input. These gates allow Quantum RNNs to model relationships in the data similarly to how neurons operate in classical RNNs. However, because quantum gates handle data in a probabilistic and parallel way, they enable Quantum RNNs to capture more complex relationships in data, theoretically improving sequence processing efficiency.

How does error correction work in quantum computing, and why is it important for Quantum RNNs?

Error correction in quantum computing is essential because quantum systems are prone to noise and instability. When qubits interact with their environment, they can lose coherence, leading to errors. Quantum RNNs require accurate and stable qubit interactions to process sequential data effectively, making error correction critical. Current methods, such as quantum error-correcting codes, aim to reduce errors, but more robust solutions are needed for Quantum RNNs to perform reliably in real-world applications.

Are there software tools for building Quantum RNNs?

Yes, several quantum computing libraries now support Quantum RNN development. Qiskit (IBM’s quantum computing framework) and PennyLane (an open-source library for quantum machine learning) provide tools for creating and testing Quantum RNNs on simulators or real quantum hardware. Additionally, TensorFlow Quantum is emerging as a tool to integrate quantum layers with traditional machine learning models, allowing researchers to experiment with hybrid quantum-classical RNNs.

What is the Noisy Intermediate-Scale Quantum (NISQ) era, and how does it impact Quantum RNNs?

The NISQ era refers to the current stage of quantum computing, where quantum processors are powerful but still limited by noise and instability. NISQ devices are capable of running small quantum circuits but are not yet scalable or stable enough for large-scale applications. For Quantum RNNs, this means that while small, experimental models are possible, full-scale deployment for real-world tasks will require more stable and error-corrected quantum computers.

How can businesses prepare for the potential impact of Quantum RNNs?

Businesses interested in data-driven decision-making should start by exploring quantum computing basics and potential applications in their industry. Partnering with research institutions, investing in quantum computing education, and experimenting with hybrid models on cloud-based quantum platforms can be good initial steps. While Quantum RNNs aren’t yet widely available, businesses that build foundational knowledge now will be better positioned to adopt these technologies as they mature.

Resources

Articles and Tutorials

- IBM Quantum Hub

IBM’s Quantum Hub offers extensive resources and tutorials, from beginner guides on quantum computing basics to advanced discussions on Quantum RNNs and hybrid models.

Link: IBM Quantum Hub

- Quantum Machine Learning on Medium

Medium has a variety of blogs and tutorials on quantum machine learning. Look for articles by industry experts discussing quantum applications in NLP, financial forecasting, and more.

- Google Quantum AI Blog

Google’s Quantum AI blog offers insights into their latest research, including hybrid quantum models and applications in artificial intelligence. It’s a great way to stay updated on practical advancements in Quantum RNNs.

Research Papers

- “Quantum Recurrent Neural Networks” (Research Paper)

This foundational paper explores Quantum RNN models, detailing how qubits can be used to represent sequential data and the advantages of quantum states in AI applications.

Download on arXiv: Quantum Recurrent Neural Networks

- “Hybrid Quantum-Classical Neural Networks with PyTorch and PennyLane”

This paper provides a practical look at hybrid quantum-classical models, showing how PennyLane and PyTorch can be used together for quantum RNN implementations.

Download on arXiv: Hybrid Quantum-Classical Neural Networks

- “Quantum Long Short-Term Memory” (LSTM)

This paper discusses quantum analogs of LSTM networks, an advanced type of RNN, examining how quantum LSTMs could improve memory retention and solve vanishing gradient issues.

Download on arXiv: Quantum Long Short-Term Memory

Courses and Tutorials

- MIT OpenCourseWare: Quantum Computing

This course provides a comprehensive introduction to quantum computing fundamentals, which is a great starting point if you’re new to the field.

- Udacity: Introduction to Quantum Computing and Quantum Machine Learning

This Udacity course covers the basics of quantum computing and its applications in machine learning, including Quantum RNN concepts.

- Qiskit Textbook

The Qiskit textbook from IBM Quantum provides an interactive introduction to quantum computing. It includes sections on quantum algorithms and machine learning that are applicable to Quantum RNNs.

Quantum Computing Libraries

- Qiskit (IBM)

Qiskit is IBM’s open-source quantum computing software development framework. It supports building Quantum RNNs with detailed tutorials on quantum machine learning.

Link: Qiskit

- PennyLane

PennyLane, developed by Xanadu, specializes in hybrid quantum-classical machine learning. It’s a powerful library for experimenting with Quantum RNNs using familiar frameworks like PyTorch and TensorFlow.

Link: PennyLane

- TensorFlow Quantum

TensorFlow Quantum is Google’s quantum machine learning library, extending TensorFlow to support quantum circuits. It’s ideal for those familiar with TensorFlow who want to try quantum neural networks.

Communities and Forums

- Quantum Computing Stack Exchange

Quantum Computing Stack Exchange is a Q&A community for quantum computing enthusiasts and professionals, ideal for finding answers to specific questions and staying updated on Quantum RNN advancements.

Link: Quantum Computing Stack Exchange

- r/QuantumComputing on Reddit

Reddit’s Quantum Computing community has discussions ranging from beginner questions to advanced topics, including practical applications of Quantum RNNs and quantum AI research.

Link: r/QuantumComputing