What is R-CNN and Why Does It Matter?

Introduction to R-CNN

Region-based Convolutional Neural Networks (R-CNN) are a milestone in the field of object detection. Developed to detect objects in 2D images, R-CNN revolutionized the way deep learning models identify and classify objects.

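It uses selective search to propose regions of interest (ROIs), runs a CNN on each region to extract features, and then classifies those features into object categories. The original paper used class-specific linear SVMs for this step, plus bounding-box regression to refine the box locations.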

R-CNN sparked a series of improvements: Fast R-CNN, Faster R-CNN, and Mask R-CNN. These models dramatically boosted object detection accuracy and speed.

But there’s a catch: R-CNN was originally built for 2D images. For real-world scenarios like autonomous driving, robotics, and augmented reality, 3D object detection becomes essential.

Why 3D Object Detection is Critical

3D object detection moves beyond flat 2D images to include depth information. This allows systems to detect an object’s position, size, and orientation in 3D space. Applications like self-driving cars rely heavily on this for obstacle avoidance, environment mapping, and navigation.

LIDAR sensors and point clouds play a critical role in enabling 3D detection. They provide a detailed 3D representation of the surroundings, capturing depth and spatial geometry.

Combining R-CNN’s strengths with 3D data creates opportunities for groundbreaking advancements.

Transitioning to 3D Object Detection: The Basics

From 2D to 3D: A Paradigm Shift

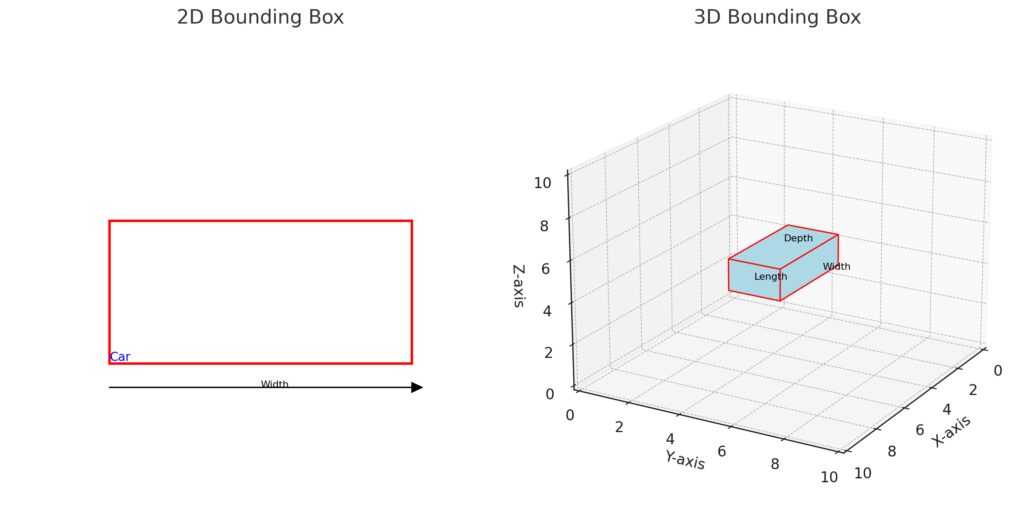

Traditional 2D object detection locates objects in an image with rectangular bounding boxes, which carry no depth information. In contrast, 3D object detection produces cuboids that enclose objects in 3D space. These are typically described by a center (x, y, z), dimensions (length, width, height), and a heading angle (yaw).
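A common way to parameterize a 3D box is a center (x, y, z), dimensions (l, w, h), and a yaw angle around the vertical axis. The sketch below converts that 7-number description into the box's eight corners, which is useful for drawing boxes or computing overlaps.

It assumes one particular convention: a z-up frame, (x, y, z) at the box center, l along the heading, and yaw in radians about z. Datasets differ on axes and box origin. KITTI labels, for instance, use a camera frame with the location at the bottom face. The function name `box_corners` is hypothetical.

```python
import numpy as np

def box_corners(x, y, z, l, w, h, yaw):
    """Return the 8 corners (8x3) of a 3D box.

    Assumed convention: z-up frame, (x, y, z) is the box center,
    l runs along the heading, w across it, and yaw rotates the box
    counter-clockwise about the z axis (radians).
    """
    # Corner offsets in the box's own frame, before rotation.
    dx = np.array([1, 1, -1, -1, 1, 1, -1, -1]) * l / 2
    dy = np.array([1, -1, -1, 1, 1, -1, -1, 1]) * w / 2
    dz = np.array([-1, -1, -1, -1, 1, 1, 1, 1]) * h / 2
    offsets = np.stack([dx, dy, dz], axis=1)  # (8, 3)

    # Rotation about the vertical (z) axis, then translation to the center.
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, -s, 0.0],
                    [s, c, 0.0],
                    [0.0, 0.0, 1.0]])
    return offsets @ rot.T + np.array([x, y, z])

# A car-sized box turned 90 degrees: its 4 m length now runs along y.
corners = box_corners(10.0, 2.0, 0.9, l=4.0, w=1.8, h=1.6, yaw=np.pi / 2)
```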

This transition introduces challenges, including:

- Handling sparse and unordered point cloud data.

- Mapping and aligning 2D image data with 3D spatial information.

- Increasing computational complexity due to 3D volumes.

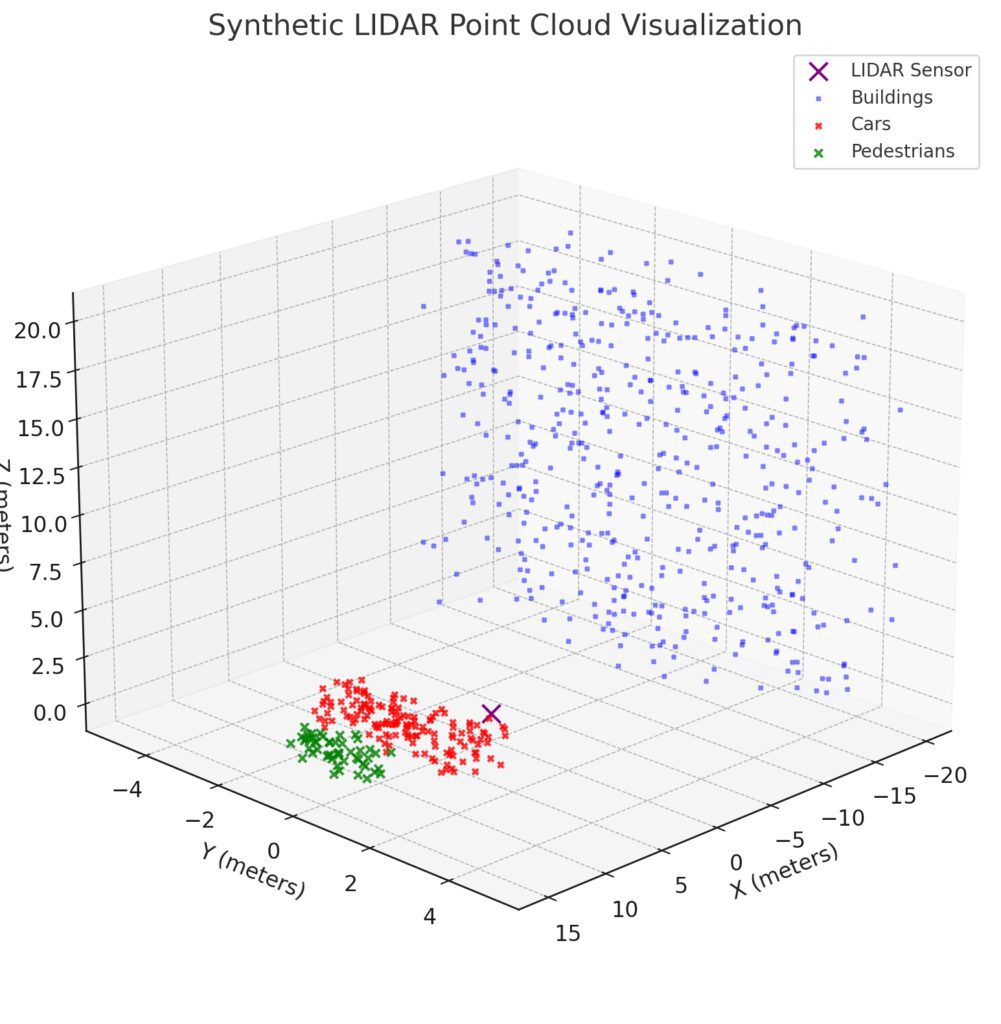

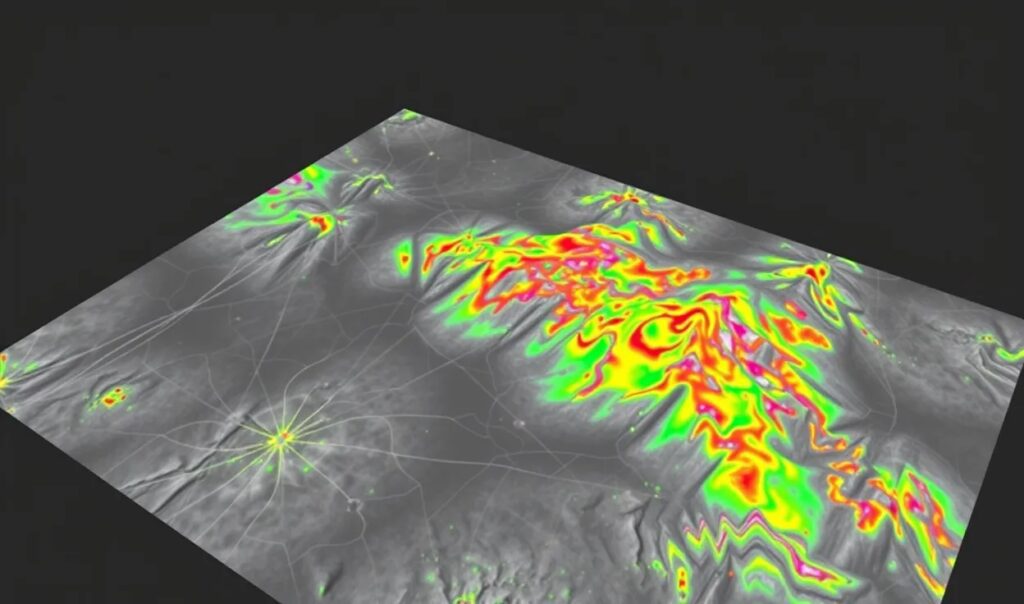

The Role of LIDAR in 3D Perception

LIDAR (Light Detection and Ranging) sensors are widely used for 3D data collection. They emit laser pulses and measure the time it takes for the light to return, generating a point cloud—a collection of (x, y, z) coordinates that represent surfaces in 3D space.
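The range comes from the round-trip time. The pulse travels to the surface and back, so the distance is d = c·t / 2. Combined with the beam's azimuth and elevation angles, each return becomes one (x, y, z) point.

The sketch below assumes an idealized sensor at the origin with angles in radians. Real sensor drivers also apply per-beam calibration, which is omitted here. `tof_to_point` is a hypothetical helper.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def tof_to_point(round_trip_s, azimuth_rad, elevation_rad):
    """Convert one LIDAR return into an (x, y, z) point in metres.

    Assumes an idealized sensor at the origin: azimuth is measured in the
    horizontal plane from the x axis, elevation upward from that plane.
    """
    # The pulse travels out and back, so halve the path length.
    r = SPEED_OF_LIGHT * round_trip_s / 2.0
    # Spherical-to-Cartesian conversion.
    x = r * math.cos(elevation_rad) * math.cos(azimuth_rad)
    y = r * math.cos(elevation_rad) * math.sin(azimuth_rad)
    z = r * math.sin(elevation_rad)
    return x, y, z

# A return after about 66.7 nanoseconds lies roughly 10 m away.
point = tof_to_point(66.7e-9, azimuth_rad=0.0, elevation_rad=0.0)
```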

Advantages of LIDAR include:

- High accuracy in capturing depth and spatial details.

- Effective performance in low-light conditions compared to traditional cameras.

- Essential for applications like autonomous vehicles and environment mapping.

However, LIDAR point clouds tend to be sparse and irregular, posing challenges for neural networks that process structured grid data.

Point Clouds: The Backbone of 3D Detection

Point clouds are the output of LIDAR systems and serve as input for 3D object detection models. Each point in the cloud holds spatial information, often augmented with intensity or reflectance values.

Challenges of working with point clouds include:

- Irregular format: Point clouds are unordered, unlike grid-based image data.

- Large data volume: Scans and aggregated maps can contain hundreds of thousands to millions of points, which requires efficient processing.

- Noise and sparsity: Points may be missing or noisy due to sensor limitations.

To address these challenges, researchers leverage techniques like voxelization (converting point clouds into structured grids) and advanced neural network architectures tailored for 3D data.

Extending R-CNN to 3D Object Detection

3D R-CNN: An Overview

The concept of R-CNN can be extended to 3D data with adaptations that suit LIDAR point clouds or 3D volumes. Instead of using selective search on 2D images, 3D R-CNN models propose regions of interest in 3D space and process them with specialized convolutional networks.

Key adaptations include:

- Using 3D region proposals to identify candidate regions in point clouds.

- Applying 3D CNNs to extract spatial features from the proposed regions.

- Incorporating multi-view fusion to integrate information from both 2D images and 3D point clouds.
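Proposal stages usually score many overlapping candidate boxes and prune them with non-maximum suppression (NMS). NMS relies on an overlap measure such as 3D intersection-over-union (IoU).

The sketch below makes a simplifying assumption: it uses axis-aligned boxes written as (x_min, y_min, z_min, x_max, y_max, z_max). Real 3D detectors work with rotated boxes, whose overlap requires polygon intersection in the ground plane. The function names are hypothetical.

```python
import numpy as np

def _volume(b):
    """Volume of one box (6,) or of many boxes (N, 6)."""
    return np.prod(b[..., 3:] - b[..., :3], axis=-1)

def iou_3d(box, boxes):
    """Axis-aligned 3D IoU between one box and an (N, 6) array of boxes.

    Boxes are (x_min, y_min, z_min, x_max, y_max, z_max); rotation is ignored.
    """
    lo = np.maximum(box[:3], boxes[:, :3])
    hi = np.minimum(box[3:], boxes[:, 3:])
    inter = np.prod(np.clip(hi - lo, 0.0, None), axis=1)
    return inter / (_volume(box) + _volume(boxes) - inter)

def nms_3d(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    order = np.argsort(scores)[::-1]
    keep = []
    while order.size > 0:
        best, rest = order[0], order[1:]
        keep.append(int(best))
        order = rest[iou_3d(boxes[best], boxes[rest]) <= iou_threshold]
    return keep

boxes = np.array([[0.0, 0.0, 0.0, 4.0, 2.0, 2.0],
                  [0.2, 0.0, 0.0, 4.2, 2.0, 2.0],     # near-duplicate of box 0
                  [10.0, 10.0, 0.0, 12.0, 12.0, 2.0]])
scores = np.array([0.9, 0.8, 0.7])
kept = nms_3d(boxes, scores)  # [0, 2]: the near-duplicate is suppressed
```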

VoxelNet: From Points to Voxels

A major step in 3D detection was VoxelNet. This model bridges the gap between irregular point clouds and grid-based CNNs. It converts point clouds into voxels (3D cubes), allowing CNNs to process structured voxel data efficiently.

Figure: converting raw point clouds into voxels for structured 3D feature extraction in VoxelNet.

Here’s how it works:

- Voxelization: The point cloud is divided into voxels. Each voxel holds a set of points.

- Feature extraction: A Voxel Feature Encoding (VFE) layer extracts meaningful features from the points within each voxel.

- 3D CNN and region proposal: The voxel features pass through 3D convolutional middle layers. A region proposal network then detects objects and predicts their 3D bounding boxes.
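The voxelization and VFE steps above can be sketched in NumPy. This is a simplified illustration, not VoxelNet's implementation. The voxel size, the per-voxel point cap, and the single encoder layer with random, untrained weights are all assumptions.

The encoder follows the VFE idea: apply a shared transform to every point, max-pool within the voxel, and concatenate the pooled feature back onto each point.

```python
import numpy as np

def voxelize(points, voxel_size, max_points=35):
    """Group points (N, 4: x, y, z, intensity) by integer voxel index.

    Returns a dict {voxel_coord: (M, 4) array}, keeping at most `max_points`
    points per voxel. (VoxelNet samples points randomly; this sketch keeps
    the first ones so the output is deterministic.)
    """
    coords = np.floor(points[:, :3] / voxel_size).astype(np.int64)
    buckets = {}
    for coord, point in zip(map(tuple, coords), points):
        bucket = buckets.setdefault(coord, [])
        if len(bucket) < max_points:
            bucket.append(point)
    return {c: np.stack(pts) for c, pts in buckets.items()}

def vfe_layer(voxel_points, weight):
    """One simplified Voxel Feature Encoding layer for a single voxel.

    Points are augmented with their offset from the voxel's centroid, passed
    through a shared linear layer + ReLU, max-pooled over the voxel, and the
    pooled feature is concatenated back onto every point.
    """
    centroid = voxel_points[:, :3].mean(axis=0)
    augmented = np.hstack([voxel_points, voxel_points[:, :3] - centroid])  # (M, 7)
    pointwise = np.maximum(augmented @ weight, 0.0)                         # (M, C)
    pooled = np.repeat(pointwise.max(axis=0, keepdims=True), len(pointwise), axis=0)
    return np.hstack([pointwise, pooled])                                   # (M, 2C)

rng = np.random.default_rng(0)
points = rng.uniform([0, 0, -2, 0], [10, 10, 1, 1], size=(1000, 4))
voxel_size = np.array([0.2, 0.2, 0.4])   # illustrative (x, y, z) size in metres
voxels = voxelize(points, voxel_size)
weight = rng.normal(size=(7, 16))        # untrained, random weights
# A final max over points yields one 32-dimensional feature per non-empty voxel.
voxel_features = {c: vfe_layer(p, weight).max(axis=0) for c, p in voxels.items()}
```

In the real network, the resulting sparse voxel features are placed in a 3D grid for the convolutional middle layers.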

VoxelNet laid the foundation for modern point cloud processing pipelines, enabling accurate and efficient 3D object detection.

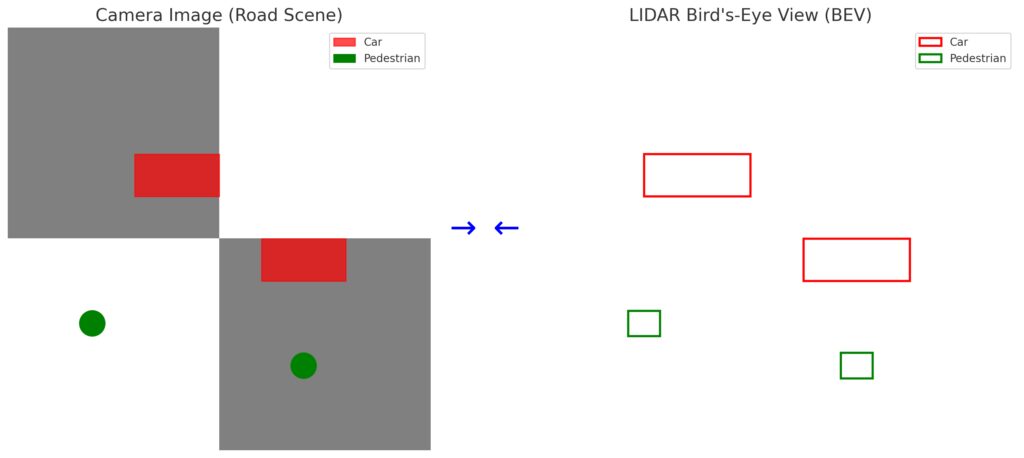

Combining LIDAR and Image Data: Multi-Modal Approaches

Why Combine LIDAR and Cameras?

While LIDAR provides accurate depth data, it lacks rich visual information like color and texture. Cameras, on the other hand, excel at capturing detailed visual features but cannot measure depth directly.

Figure: a comparative view of multi-modal fusion. The right panel shows the same cars and pedestrians as bounding boxes in a LIDAR bird's-eye view, and arrows highlight how camera and LIDAR data are fused for more robust detection.

By fusing data from LIDAR and cameras, we achieve multi-modal object detection that leverages the strengths of both sensors.

Fusion Strategies

- Early Fusion: Combine LIDAR and camera data at the input stage. For example, project point clouds onto the image plane to align visual and spatial information.

- Late Fusion: Process LIDAR and camera data independently and merge the results in the final detection stage.

- Intermediate Fusion: Fuse features extracted from both LIDAR and camera data within the network. This balances computational efficiency and accuracy.
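Early fusion usually starts by projecting each LIDAR point into the image and attaching the pixel's color to it. The sketch below makes several assumptions:

- The points are already in the camera frame (x right, y down, z forward).
- The pinhole intrinsic matrix K uses made-up values.
- The image is a black placeholder.

A real setup, such as KITTI, first applies a calibrated LIDAR-to-camera transform. `project_to_image` is a hypothetical helper.

```python
import numpy as np

def project_to_image(points_cam, K, width, height, min_depth=0.1):
    """Project (N, 3) camera-frame points to pixels.

    Returns (M, 2) pixel coordinates and the indices of the input points
    that lie in front of the camera and inside the image.
    """
    in_front = np.flatnonzero(points_cam[:, 2] > min_depth)
    uvw = points_cam[in_front] @ K.T        # homogeneous pixel coordinates
    uv = uvw[:, :2] / uvw[:, 2:3]           # perspective divide
    inside = ((uv[:, 0] >= 0) & (uv[:, 0] < width) &
              (uv[:, 1] >= 0) & (uv[:, 1] < height))
    return uv[inside], in_front[inside]

K = np.array([[700.0, 0.0, 620.0],
              [0.0, 700.0, 190.0],
              [0.0, 0.0, 1.0]])                   # illustrative intrinsics
image = np.zeros((375, 1242, 3), dtype=np.uint8)  # placeholder RGB image
points = np.array([[0.0, 0.0, 10.0],
                   [1.0, -0.5, 5.0],
                   [0.0, 0.0, -3.0]])             # the last point is behind the camera
uv, idx = project_to_image(points, K, width=1242, height=375)
# Sample the image at each projected pixel and append RGB to the point.
rgb = image[uv[:, 1].astype(int), uv[:, 0].astype(int)]
fused = np.hstack([points[idx], rgb / 255.0])     # (x, y, z, r, g, b) per visible point
```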

Models like PointFusion and MV3D have successfully implemented multi-modal fusion for 3D detection.

MV3D, for example, uses three views:

- Bird’s-eye view (BEV) of point clouds.

- Front view of point clouds projected onto 2D.

- Camera images for visual context.

Combining these views enables robust 3D detection, especially in complex environments like autonomous driving scenarios.

3D Detection Networks: Key Innovations

PointNet: Processing Raw Point Clouds

PointNet revolutionized 3D deep learning by processing raw point clouds directly. Unlike voxel-based methods, it avoids the information loss caused by discretizing points into grids.

Key innovations include:

- A symmetry function (like max pooling) to handle unordered point clouds.

- Concatenating per-point features with a global feature vector, so the segmentation head can use both point-level and shape-level information.

PointNet++ extended this idea by introducing hierarchical feature learning, similar to CNNs for images.
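The core of PointNet's order invariance fits in a few lines: apply the same small network to every point independently, then max-pool over the points. Because max is a symmetric function, shuffling the input points leaves the global feature unchanged.

This NumPy sketch uses random, untrained weights with illustrative layer sizes. It omits PointNet's input and feature transform networks (T-Nets) and batch normalization.

```python
import numpy as np

rng = np.random.default_rng(42)
# Shared MLP weights (untrained, illustrative sizes): 3 -> 64 -> 128.
W1, b1 = rng.normal(size=(3, 64)), np.zeros(64)
W2, b2 = rng.normal(size=(64, 128)), np.zeros(128)

def shared_mlp(points):
    """Apply the same two-layer MLP to every point: (N, 3) -> (N, 128)."""
    h = np.maximum(points @ W1 + b1, 0.0)
    return np.maximum(h @ W2 + b2, 0.0)

def global_feature(points):
    """Symmetric aggregation: max-pool per-point features over all points."""
    return shared_mlp(points).max(axis=0)

def segmentation_features(points):
    """Per-point features concatenated with the global feature: (N, 256)."""
    local = shared_mlp(points)
    glob = np.broadcast_to(local.max(axis=0), local.shape)
    return np.hstack([local, glob])

cloud = rng.normal(size=(500, 3))
shuffled = cloud[rng.permutation(len(cloud))]
same = np.allclose(global_feature(cloud), global_feature(shuffled))  # True
```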

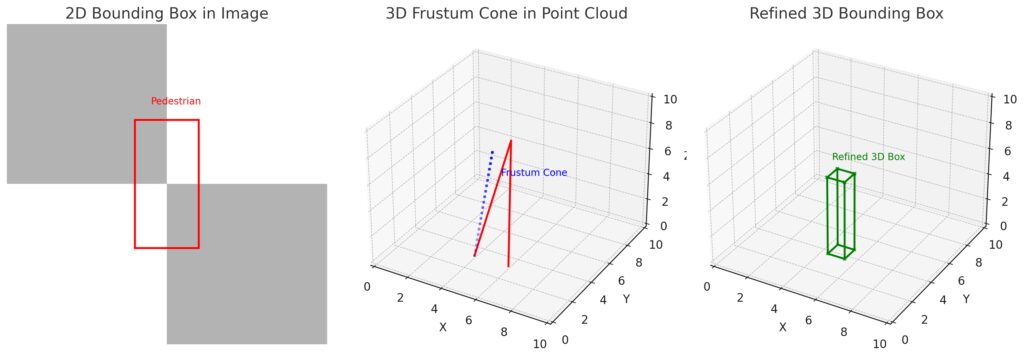

Frustum PointNets: Combining 2D and 3D

Frustum PointNets bridge 2D detection with 3D object localization. Here’s how:

- A 2D detector identifies regions of interest (ROIs) in images.

- These 2D ROIs are extended into 3D frustums (pyramid-shaped regions reaching from the camera into 3D space).

- PointNet processes the points within the frustum to estimate 3D bounding boxes.

This two-step approach efficiently combines 2D visual features and 3D spatial information for accurate detection.
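The frustum step amounts to keeping the LIDAR points whose image projection falls inside the 2D box. This minimal sketch assumes the points are already in the camera frame and uses an illustrative pinhole matrix K. A real pipeline first applies the calibrated LIDAR-to-camera transform.

`frustum_points` is a hypothetical helper, and the box is a made-up detection. The PointNet stages that run on the selected points are not shown.

```python
import numpy as np

def frustum_points(points_cam, K, box_2d, min_depth=0.1):
    """Return the camera-frame points whose projection lies inside a 2D box.

    box_2d is (u_min, v_min, u_max, v_max) in pixels, e.g. from a 2D detector.
    """
    u_min, v_min, u_max, v_max = box_2d
    pts = points_cam[points_cam[:, 2] > min_depth]  # keep points in front of the camera
    uvw = pts @ K.T
    u = uvw[:, 0] / uvw[:, 2]
    v = uvw[:, 1] / uvw[:, 2]
    inside = (u >= u_min) & (u <= u_max) & (v >= v_min) & (v <= v_max)
    return pts[inside]

K = np.array([[700.0, 0.0, 620.0],
              [0.0, 700.0, 190.0],
              [0.0, 0.0, 1.0]])                 # illustrative intrinsics
rng = np.random.default_rng(1)
cloud = rng.uniform([-20, -2, 0.5], [20, 2, 60], size=(5000, 3))
pedestrian_box = (600, 150, 660, 260)           # hypothetical 2D detection
frustum = frustum_points(cloud, K, pedestrian_box)
```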

Figure: how Frustum PointNets process data step by step. In panel 2, the 2D box is extended into 3D space as a frustum in the LIDAR point cloud. In panel 3, the pedestrian's point features are used to create a tight, refined 3D bounding box.

Enhancing 3D Object Detection with Advanced Architectures

Faster R-CNN for 3D: Adapting Region Proposal Networks

Faster R-CNN improved upon R-CNN by introducing the Region Proposal Network (RPN), which generates region proposals directly from feature maps instead of relying on external methods like selective search. Extending Faster R-CNN to 3D requires adapting the RPN to handle 3D point clouds or voxelized data.

In 3D detection, the RPN identifies 3D regions of interest (ROIs) by processing spatial features extracted from voxel grids or raw point clouds. Key adaptations include:

- Designing anchor boxes in 3D space to capture object dimensions and orientation.

- Integrating Bird’s-Eye View (BEV) maps, which project point clouds onto a 2D plane to simplify region proposal generation.

By combining the RPN with 3D CNNs or PointNet-based architectures, Faster R-CNN-style detectors become practical tools for 3D object detection in applications like self-driving cars.
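Voxel- and BEV-based detectors typically place anchors by putting a fixed-size box per class at every cell of the BEV feature map. VoxelNet, SECOND, and PointPillars follow this pattern with two headings, 0 and 90 degrees.

The sketch below generates such a grid. The car size, detection range, stride, and z center are illustrative assumptions, not values taken from a specific paper.

```python
import numpy as np

def make_anchors(x_range, y_range, stride, size, z_center, yaws=(0.0, np.pi / 2)):
    """Anchors (x, y, z, l, w, h, yaw) at every BEV cell center, one per heading."""
    nx = int(round((x_range[1] - x_range[0]) / stride))
    ny = int(round((y_range[1] - y_range[0]) / stride))
    xs = x_range[0] + (np.arange(nx) + 0.5) * stride   # cell centers along x
    ys = y_range[0] + (np.arange(ny) + 0.5) * stride   # cell centers along y
    gx, gy, gyaw = np.meshgrid(xs, ys, np.asarray(yaws), indexing="ij")
    n = gx.size
    l, w, h = size
    return np.stack([gx.ravel(), gy.ravel(), np.full(n, z_center),
                     np.full(n, l), np.full(n, w), np.full(n, h),
                     gyaw.ravel()], axis=1)

# Illustrative car anchors over a 70.4 m x 80 m region with 0.4 m cells.
anchors = make_anchors(x_range=(0.0, 70.4), y_range=(-40.0, 40.0), stride=0.4,
                       size=(3.9, 1.6, 1.56), z_center=-1.0)
# 176 x 200 cells x 2 headings = 70,400 anchors, each a 7-vector.
```

During training, each anchor is labeled positive or negative by its overlap with ground-truth boxes. The RPN then predicts a score and box offsets per anchor.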

PV-RCNN: Bridging Voxels and Point Features

PV-RCNN (Point-Voxel R-CNN) takes a hybrid approach, combining voxelized point clouds with raw point cloud features for higher detection accuracy. Here’s how it works:

- Voxelization: Convert point clouds into voxels for efficient 3D CNN processing.

- Voxel feature learning: Extract spatial features from the voxel grid.

- Keypoint refinement: Summarize the multi-scale voxel features into a small set of keypoints using PointNet++-style set abstraction. Then pool the keypoint features inside each 3D ROI to refine the predicted boxes.

PV-RCNN achieved state-of-the-art results at publication by balancing the strengths of voxel-based and point-based methods. Voxels keep the backbone computationally efficient, while the keypoints preserve fine-grained spatial details.

Point Cloud Segmentation and 3D Object Detection

Instance Segmentation in Point Clouds

Segmentation in point clouds assigns a label to each point, such as object or background. Instance segmentation goes one step further and groups object points into individual instances. This is crucial for separating objects in cluttered 3D environments.

Popular methods like PointNet++ and KPConv (Kernel Point Convolution) achieve segmentation by learning both global context and local geometric features. These features can then be passed to detection networks like R-CNN to generate accurate 3D bounding boxes.

For instance:

- Segment the ground plane to filter out irrelevant points in autonomous driving.

- Identify pedestrians, vehicles, and obstacles using per-point classifications.
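Ground removal is often done with a simple geometric fit before any learning takes place. The sketch below uses RANSAC, a classical technique swapped in here for illustration; it is not one of the learned segmenters named above. It fits the dominant plane and labels that plane's inliers as ground.

The threshold, iteration count, and synthetic scene are assumptions.

```python
import numpy as np

def ransac_ground(points, n_iters=200, threshold=0.15, seed=0):
    """Boolean mask of points within `threshold` metres of the best-supported plane."""
    rng = np.random.default_rng(seed)
    xyz = points[:, :3]
    best_mask = np.zeros(len(xyz), dtype=bool)
    for _ in range(n_iters):
        # Fit a candidate plane through three random points.
        p0, p1, p2 = xyz[rng.choice(len(xyz), size=3, replace=False)]
        normal = np.cross(p1 - p0, p2 - p0)
        norm = np.linalg.norm(normal)
        if norm < 1e-9:  # degenerate (collinear) sample
            continue
        normal /= norm
        # Count points whose distance to the plane is under the threshold.
        mask = np.abs((xyz - p0) @ normal) < threshold
        if mask.sum() > best_mask.sum():
            best_mask = mask
    return best_mask

# Synthetic scene: a slightly noisy ground plane plus one box-shaped obstacle.
rng = np.random.default_rng(3)
ground = np.column_stack([rng.uniform(-20, 20, 2000), rng.uniform(-20, 20, 2000),
                          -1.7 + rng.normal(0, 0.02, 2000)])
obstacle = rng.uniform([4, -1, -1.2], [8, 1, 0.3], size=(300, 3))
scene = np.vstack([ground, obstacle])
is_ground = ransac_ground(scene)
non_ground = scene[~is_ground]  # candidate points passed on to the detector
```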

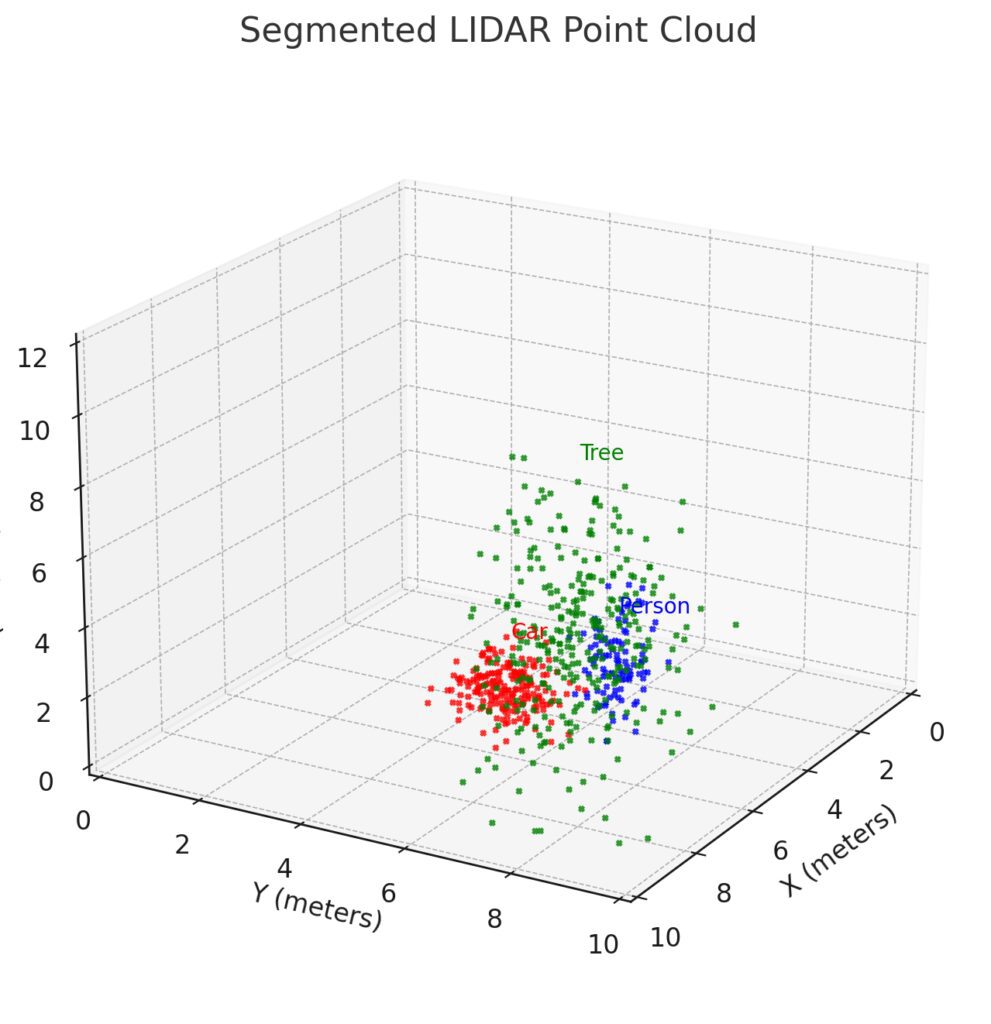

Figure: instance segmentation of a realistic outdoor LIDAR point cloud with sparse points. Each object is labeled by name: a car (red cluster of low-lying points), a tree (tall vertical cluster in green), and a person (sparse vertical cluster in blue).

Multi-Scale Feature Learning

Point clouds often exhibit varying levels of density, with sparse regions requiring careful processing. Multi-scale learning techniques process point clouds at different resolutions to capture both fine and coarse features.

Models like PointNet++ and 3D U-Net implement hierarchical architectures that:

- Downsample the point cloud to capture broader context.

- Upsample to refine details for precise localization and segmentation.

These multi-scale features significantly improve 3D object detection accuracy, particularly in dense, complex scenes.
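PointNet++ bases its downsampling step on farthest point sampling (FPS). FPS picks points spread across the whole cloud rather than clustered in dense regions.

Below is a straightforward O(N·k) NumPy sketch. The function name is hypothetical.

```python
import numpy as np

def farthest_point_sampling(points, k, start=0):
    """Pick k indices, each time taking the point farthest from those already chosen."""
    chosen = np.empty(k, dtype=np.int64)
    chosen[0] = start
    # Distance from every point to its nearest already-chosen point.
    min_dist = np.linalg.norm(points - points[start], axis=1)
    for i in range(1, k):
        chosen[i] = int(np.argmax(min_dist))
        new_dist = np.linalg.norm(points - points[chosen[i]], axis=1)
        min_dist = np.minimum(min_dist, new_dist)
    return chosen

rng = np.random.default_rng(0)
cloud = rng.normal(size=(4096, 3))
centroids = cloud[farthest_point_sampling(cloud, 512)]  # 512 well-spread centers
```

In PointNet++, each sampled centroid then gathers its neighbors, for example with a ball query, and a small PointNet summarizes that local region.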

Applications of 3D R-CNN in Real-World Scenarios

Autonomous Driving and Robotics

Autonomous vehicles rely on LIDAR point clouds for real-time perception. By extending R-CNN models to 3D, systems can:

- Detect and classify vehicles, pedestrians, and cyclists in 3D space.

- Track moving objects across frames to estimate their velocity and trajectory.

- Avoid obstacles and plan safe paths.

KITTI dataset benchmarks have driven significant progress in 3D detection for autonomous driving. Models like VoxelNet, SECOND, and PointPillars have achieved high performance on this dataset.

Augmented and Virtual Reality (AR/VR)

3D object detection enhances AR/VR experiences by enabling accurate understanding of real-world environments. Applications include:

- Overlaying virtual objects onto real-world surfaces.

- Tracking and interacting with 3D objects in dynamic scenes.

- Enhancing spatial awareness for immersive AR gaming and simulations.

Robotic Manipulation

In robotics, precise 3D object detection is essential for tasks like grasping, picking, and placing objects. By identifying object location, size, and orientation in 3D space, robots can:

- Navigate cluttered environments.

- Perform bin-picking in warehouses.

- Operate in industrial and household settings with high accuracy.

Future Directions in 3D Object Detection

End-to-End 3D Detection Models

Most current approaches involve multiple steps: preprocessing, voxelization, feature extraction, and prediction. End-to-end 3D detection models aim to simplify this pipeline, reducing latency and improving efficiency.

For example:

- Models like PointPillars replace 3D voxel grids with vertical pillars, so a single-shot 2D CNN can do most of the work.

- End-to-end frameworks integrate 2D and 3D data seamlessly for faster, unified detection.

Deep Fusion of Sensor Modalities

Combining data from LIDAR, cameras, and RADAR can unlock higher detection accuracy. Future research focuses on deep sensor fusion, where features are extracted and combined at multiple levels within the network.

Advanced fusion techniques will enable systems to handle challenging environments, such as fog, rain, or poor lighting, where single sensors may fail.

Real-Time 3D Detection

Efficiency remains a major challenge for 3D detection, especially in real-time applications like autonomous driving. Innovations include:

- Lightweight architectures that reduce computational overhead.

- Hardware acceleration using GPUs and custom chips (like Tesla’s FSD chip).

- Sparse convolution techniques that skip processing empty regions in point clouds.
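The idea behind sparse convolution can be sketched with a dictionary of occupied voxels. Outputs are computed only at occupied sites, and inputs are gathered only from occupied neighbors.

The version below is a submanifold-style sparse convolution: outputs are restricted to the input's active sites. This is one of the variants used in sparse 3D backbones such as SECOND's. It is a pure-NumPy illustration with assumed shapes and is far slower than the GPU libraries real detectors use.

```python
import itertools
import numpy as np

def submanifold_conv3d(features, weights):
    """Sparse 3x3x3 convolution evaluated only at active voxels.

    features: dict mapping (i, j, k) voxel coords -> (C_in,) feature vector.
    weights:  array of shape (3, 3, 3, C_in, C_out).
    Returns a dict with the same keys and (C_out,) output vectors.
    """
    c_out = weights.shape[-1]
    offsets = list(itertools.product((-1, 0, 1), repeat=3))
    out = {}
    for (i, j, k) in features:
        acc = np.zeros(c_out)
        for di, dj, dk in offsets:
            neighbor = features.get((i + di, j + dj, k + dk))
            if neighbor is not None:  # empty neighbors contribute nothing
                acc += neighbor @ weights[di + 1, dj + 1, dk + 1]
        out[(i, j, k)] = acc
    return out

rng = np.random.default_rng(0)
# Three occupied voxels with 2 input channels; all other voxels are empty.
active = {(1, 1, 1): rng.normal(size=2),
          (1, 2, 1): rng.normal(size=2),
          (4, 4, 4): rng.normal(size=2)}
weights = rng.normal(size=(3, 3, 3, 2, 4))
out = submanifold_conv3d(active, weights)  # outputs exist only at the 3 active voxels
```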

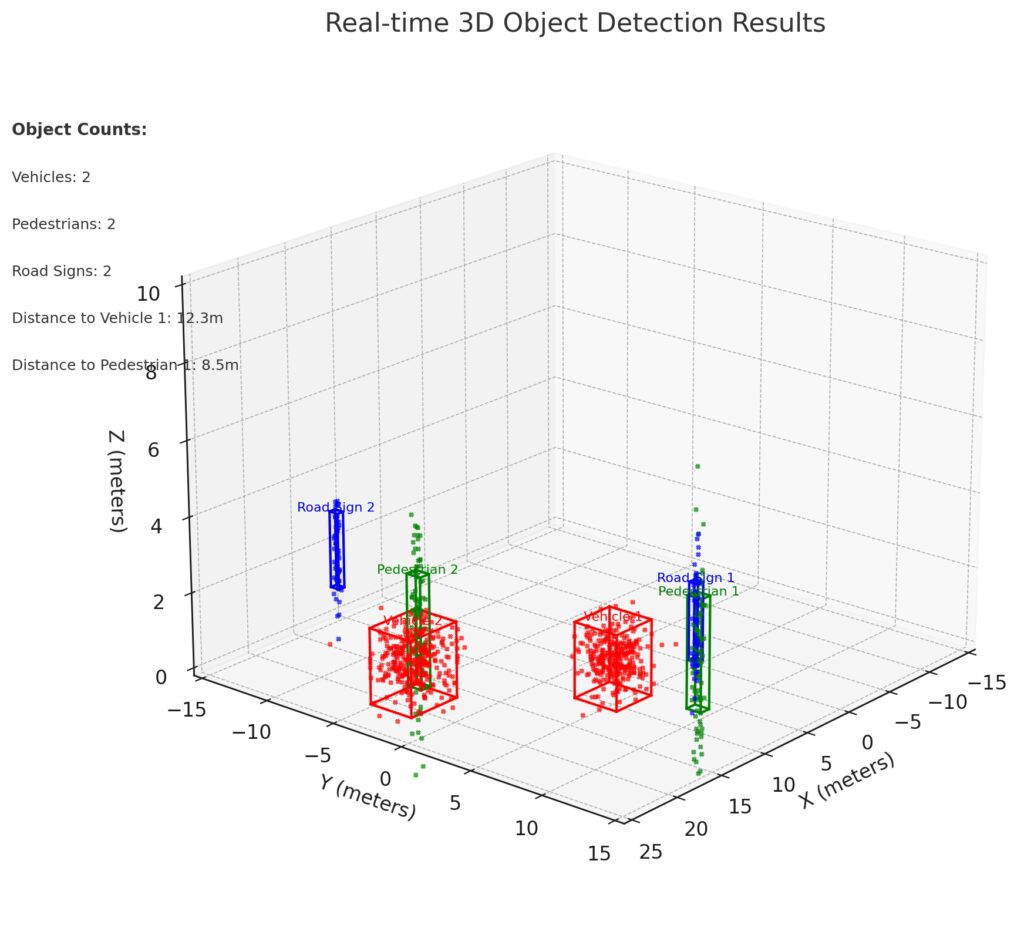

Figure: a real-time dashboard view of 3D object detection on a simulated LIDAR point cloud with sparse and dense regions. Vehicles are shown in red boxes, pedestrians in green, and road signs in blue. Overlaid statistics give object counts (e.g., Vehicles: 2, Pedestrians: 2, Road Signs: 2) and distances to key objects (e.g., Vehicle 1: 12.3m).

Conclusion: R-CNN’s Impact on the 3D Detection Revolution

Extending R-CNN to 3D object detection has opened new frontiers in perception technology. By leveraging LIDAR point clouds, 3D CNNs, and point-based networks, researchers have developed models capable of understanding complex 3D environments with remarkable accuracy.

Applications in autonomous driving, robotics, and AR/VR are transforming industries, pushing the boundaries of what’s possible with machine vision. As models evolve towards real-time performance, multi-sensor fusion, and end-to-end pipelines, the future of 3D object detection looks more promising than ever.

The integration of R-CNN principles with 3D data isn’t just an extension—it’s a revolution that’s redefining the way machines perceive the world.

FAQs

What is a point cloud, and why is it important?

A point cloud is a set of 3D data points representing an object’s or environment’s surface geometry. Each point has spatial coordinates (x, y, z) and sometimes additional information like intensity or color.

Point clouds are essential because they provide machines with the depth and structure of their surroundings. For example, a robot navigating a warehouse uses point clouds to detect shelves, boxes, and paths, ensuring collision-free movement.

How does voxelization help in processing point clouds?

Voxelization converts irregular point clouds into a structured grid of 3D cells called voxels (like 3D pixels). By structuring the data, voxelization allows convolutional neural networks (CNNs) to process it efficiently.

For example, in the VoxelNet model, point clouds are divided into voxels, and each voxel's features are learned from its raw points using a Voxel Feature Encoding (VFE) layer. Because the network learns from the points inside each voxel rather than from a simple occupancy value, it limits the spatial detail lost to discretization.

What are real-world applications of 3D object detection?

3D object detection is widely used in applications like:

- Autonomous driving: Self-driving cars use 3D detection to identify vehicles, pedestrians, and road obstacles with precision. For example, Waymo combines LIDAR and camera data, while Tesla relies primarily on cameras.

- Robotics: Robots performing tasks like picking up objects rely on 3D detection for localization and grasping.

- AR/VR: Augmented reality devices use 3D detection to overlay virtual objects onto real-world surfaces. Imagine placing virtual furniture in your living room using AR apps.

- Mapping and surveying: Drones and LIDAR-based systems map large environments, creating detailed 3D models for construction or urban planning.

How does multi-modal fusion improve 3D object detection?

Multi-modal fusion combines data from LIDAR sensors and cameras, leveraging the strengths of both:

- LIDAR provides depth and spatial geometry.

- Cameras offer rich visual features like color and texture.

For instance, a multi-modal approach in self-driving cars allows better identification of vehicles (shape from LIDAR, appearance from cameras) and pedestrians (depth for distance, color for clothing or body detection).

What challenges arise in processing LIDAR point clouds?

While LIDAR point clouds are powerful, they present challenges:

- Sparsity: Point clouds can be incomplete or unevenly distributed, especially at greater distances.

- Irregular format: Unlike images, point clouds are unordered, requiring specialized models like PointNet to process them effectively.

- Noise: Points can include sensor noise, requiring filtering and preprocessing.

For example, detecting small objects like traffic cones or distant pedestrians can be tricky due to sparse point distributions. Models like PointPillars address this challenge with efficient point cloud processing.

How does PointNet handle raw point clouds?

PointNet processes raw point clouds directly without voxelization. It uses a symmetry function like max pooling to handle the unordered nature of point clouds.

For instance, PointNet can classify objects such as cars, pedestrians, or furniture directly from raw 3D data. This approach avoids the information loss caused by voxelization, making it ideal for tasks requiring fine-grained details.

What is the role of Frustum PointNets in 3D detection?

Frustum PointNets bridge 2D image detection and 3D point cloud processing. Here’s how:

- A 2D detector identifies objects in an image (e.g., a car in a photo).

- The 2D bounding box is extended into 3D space as a frustum (a pyramid-shaped region).

- PointNet processes the points inside the frustum to predict the object’s precise 3D bounding box.

For example, in autonomous driving, Frustum PointNets help accurately locate vehicles and pedestrians by combining camera images and LIDAR data.

What advancements are improving real-time 3D detection?

Real-time 3D detection requires both accuracy and speed. Recent advancements include:

- Sparse convolutional networks: Skip processing empty regions in point clouds to reduce computation.

- Lightweight architectures: Models like PointPillars and SECOND streamline voxelization and feature extraction.

- Hardware acceleration: GPUs and specialized chips enhance processing speeds for real-time detection.

For instance, PointPillars processes point clouds in under 50 milliseconds, enabling real-time perception in autonomous driving systems.

How do models like VoxelNet process LIDAR point clouds?

VoxelNet converts sparse point clouds into structured 3D voxel grids to make them compatible with convolutional neural networks (CNNs). Here’s how it works:

- Voxelization divides the point cloud into small 3D voxels (like cubes in a grid).

- Each voxel’s internal points are encoded with a Voxel Feature Encoding (VFE) layer to extract meaningful features.

- These voxel features are passed through a 3D CNN to detect objects and predict their 3D bounding boxes.

For instance, in autonomous driving, VoxelNet helps detect vehicles at varying distances with high accuracy by effectively combining sparse point cloud features with CNN-based processing.

What are the limitations of LIDAR in 3D object detection?

While LIDAR sensors are excellent at capturing depth and spatial information, they have a few limitations:

- High cost: LIDAR sensors can be expensive, making them less accessible for certain applications.

- Sparsity at a distance: LIDAR points become sparse as objects get farther from the sensor, reducing detection accuracy.

- Weather sensitivity: LIDAR performance can degrade in fog, heavy rain, or snow.

For example, detecting small objects like traffic cones at long distances becomes challenging due to sparsity. To overcome this, multi-sensor fusion (combining LIDAR with cameras or radar) is often used.

How does PointPillars make 3D detection faster?

PointPillars is designed for fast, real-time 3D object detection. It avoids costly 3D convolutions over a voxel grid by:

- Dividing the point cloud into vertical pillars instead of cubic voxels.

- Learning features directly from the points within each pillar using a PointNet-style encoder.

- Processing these pillar features with a 2D CNN, simplifying computation.

This approach reduces processing time dramatically. For instance, PointPillars can process LIDAR point clouds in under 50 milliseconds, making it ideal for real-time applications like self-driving cars.
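The pillar idea can be sketched in NumPy in three steps:

1. Assign each point to an (x, y) cell.
2. Encode each pillar with a shared per-point transform followed by a max over its points.
3. Scatter the pillar features into a dense 2D "pseudo-image" that an ordinary 2D CNN can consume.

The grid range, cell size, and random, untrained weights are assumptions. The real PointPillars encoder also augments each point with offsets from the pillar's mean and center, caps the number of pillars and points, and uses batch normalization.

```python
import numpy as np

def pillar_pseudo_image(points, weight, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.5):
    """Scatter max-pooled pillar features onto an (nx, ny, C) BEV canvas.

    points: (N, 4) array of x, y, z, intensity.
    weight: (4, C) shared per-point linear layer (untrained here).
    """
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    ix = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / cell).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    ix, iy, pts = ix[keep], iy[keep], points[keep]

    # Shared per-point transform (a one-layer "PointNet") with ReLU.
    point_feats = np.maximum(pts @ weight, 0.0)

    # Max-pool each pillar directly into the canvas; empty pillars stay zero.
    canvas = np.zeros((nx, ny, weight.shape[1]))
    np.maximum.at(canvas, (ix, iy), point_feats)
    return canvas

rng = np.random.default_rng(0)
pts = rng.uniform([0, -20, -2, 0], [40, 20, 1, 1], size=(2000, 4))
weight = rng.normal(size=(4, 8))
pseudo_image = pillar_pseudo_image(pts, weight)  # (80, 80, 8), ready for a 2D CNN
```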

What role does Bird’s-Eye View (BEV) play in 3D detection?

Bird’s-Eye View (BEV) is a top-down projection of the LIDAR point cloud onto a 2D plane. It simplifies the processing of 3D data by converting it into a 2D grid, where each cell contains aggregated features like point density or intensity.

BEV is widely used in 3D detection models like MV3D and SECOND because:

- It simplifies computation, allowing 2D CNNs to process spatial data efficiently.

- It provides a clear view of object locations and distances in a driving scenario.

For example, in autonomous driving, BEV maps help systems detect and track vehicles, lanes, and pedestrians with high accuracy while maintaining spatial relationships.
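A hand-crafted BEV map can be computed with a few NumPy scatter operations. The sketch below builds three channels per cell: maximum height, a log-normalized point count as density, and maximum intensity.

It loosely follows the kinds of BEV features MV3D describes. The ranges, resolution, normalization constant, and exact channel choice are assumptions for illustration.

```python
import numpy as np

def bev_map(points, x_range=(0.0, 40.0), y_range=(-20.0, 20.0), cell=0.1):
    """Return an (nx, ny, 3) BEV image: max height, density, max intensity."""
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    ix = np.floor((points[:, 0] - x_range[0]) / cell).astype(int)
    iy = np.floor((points[:, 1] - y_range[0]) / cell).astype(int)
    keep = (ix >= 0) & (ix < nx) & (iy >= 0) & (iy < ny)
    ix, iy, pts = ix[keep], iy[keep], points[keep]

    height = np.full((nx, ny), -np.inf)
    np.maximum.at(height, (ix, iy), pts[:, 2])     # highest point per cell
    count = np.zeros((nx, ny))
    np.add.at(count, (ix, iy), 1)                  # points per cell
    intensity = np.zeros((nx, ny))
    np.maximum.at(intensity, (ix, iy), pts[:, 3])  # assumes intensity >= 0

    height[count == 0] = 0.0                       # neutral value for empty cells
    density = np.minimum(1.0, np.log1p(count) / np.log(64))
    return np.stack([height, density, intensity], axis=-1)

# Two returns in one cell and one return in another (x, y, z, intensity).
pts = np.array([[1.05, 0.05, -1.5, 0.2],
                [1.07, 0.02, 0.3, 0.9],
                [10.05, -4.95, -1.6, 0.4]])
bev = bev_map(pts)  # (400, 400, 3), a 2D "image" for a standard CNN
```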

How does multi-modal fusion improve detection accuracy?

Multi-modal fusion combines data from different sensors—typically LIDAR for depth and cameras for visual details—resulting in more accurate 3D object detection.

For example:

- LIDAR provides precise 3D spatial coordinates but lacks color and texture details.

- Cameras capture visual features (e.g., a red stop sign or a pedestrian’s clothing) but cannot measure depth.

Fusion strategies like early fusion (combining data before processing) or late fusion (combining outputs of separate models) allow systems to leverage the best of both worlds. A self-driving car, for instance, might use LIDAR to detect a car’s location while using the camera to classify its make or model.

What makes PointNet ideal for raw point cloud processing?

PointNet directly processes raw point clouds without requiring voxelization. Its unique design handles the unordered nature of point clouds using:

- Symmetry functions like max pooling, ensuring the network remains invariant to the order of points.

- Global and local feature extraction to capture fine-grained object details.

For example, PointNet can classify objects like chairs, tables, or vehicles directly from raw point cloud data, avoiding information loss caused by converting points into voxels.

How do Frustum PointNets bridge 2D and 3D detection?

Frustum PointNets combine the strengths of 2D and 3D detection models. Here’s how it works:

- A 2D detector first identifies objects in a camera image and generates 2D bounding boxes.

- These boxes are extended into 3D frustums: pyramid-shaped regions projected into 3D space.

- PointNet processes the points inside the frustum to predict 3D bounding boxes.

For instance, if a 2D detector identifies a pedestrian in an image, Frustum PointNet can estimate the pedestrian's 3D position and orientation from the LIDAR points inside the frustum.

What challenges remain for real-time 3D object detection?

Despite significant advancements, real-time 3D object detection still faces challenges, including:

- Computational efficiency: Processing dense point clouds or large volumes of data in real time remains resource-intensive.

- Sparse data: Handling incomplete or noisy LIDAR data, particularly at longer ranges, is difficult.

- Sensor fusion complexities: Aligning data from LIDAR, cameras, and radar requires precise calibration and synchronization.

For example, detecting small, fast-moving objects (like cyclists or debris) at highway speeds demands both accuracy and low latency—a challenge that researchers continue to address with efficient architectures like Sparse CNNs and PointPillars.

Resources for R-CNN and 3D Object Detection

Research Papers and Publications

- “Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation” – The original R-CNN paper by Ross Girshick et al. A foundational read for understanding the evolution of region-based detection.

- “VoxelNet: End-to-End Learning for Point Cloud Based 3D Object Detection” – The VoxelNet paper, which introduced learned Voxel Feature Encoding for end-to-end point cloud detection.

- “PointNet: Deep Learning on Point Sets for 3D Classification and Segmentation” – A breakthrough paper on processing raw point clouds without voxelization.

- “Frustum PointNets for 3D Object Detection from RGB-D Data” – Combines 2D image detection with 3D point clouds using a frustum-based approach.

- “SECOND: Sparsely Embedded Convolutional Detection” – Introduces efficient sparse convolution networks for real-time point cloud detection.

- “PointPillars: Fast Encoders for Object Detection from Point Clouds” – A key resource for understanding lightweight architectures for real-time 3D detection.

Datasets for 3D Object Detection

- KITTI Dataset: The KITTI Vision Benchmark Suite is the gold standard for autonomous driving research. It includes LIDAR point clouds, RGB images, and ground-truth 3D annotations for cars, pedestrians, and cyclists.

- Waymo Open Dataset: A large-scale autonomous driving dataset with high-quality LIDAR, camera data, and 3D bounding box annotations for objects in complex driving scenarios.

- nuScenes Dataset: A multi-modal dataset for autonomous vehicles with LIDAR, cameras, radar, and detailed 3D annotations across challenging environments.

- Argoverse 3D Tracking Dataset: Includes LIDAR and stereo images for tracking 3D objects and predicting their motion in driving scenarios.

Open-Source Tools and Frameworks

- Open3D: An open-source library for 3D data processing, including point cloud visualization, voxelization, and segmentation.

- MMDetection3D: A PyTorch-based toolbox for 3D object detection, supporting models like SECOND, PointPillars, and VoxelNet.

- Detectron2: Facebook AI's library for object detection and segmentation, useful for implementing and experimenting with R-CNN-based models.

- ROS (Robot Operating System): A widely used robotics framework that integrates sensor data (LIDAR, cameras) and is commonly used to build real-time 3D object detection and navigation pipelines.

- TensorFlow and PyTorch: Leading deep learning frameworks in which 3D detection models such as PointNet, Frustum PointNets, and voxel-based architectures are commonly implemented.

Tutorials and Learning Materials

- “An Introduction to Point Clouds and LIDAR Data Processing” – A step-by-step guide for understanding LIDAR data and point clouds.

- “3D Object Detection with Point Clouds: A Practical Guide” – A hands-on tutorial for implementing 3D object detection models like PointPillars and VoxelNet.

- “Getting Started with Open3D for 3D Point Cloud Visualization” – Learn how to process and visualize point clouds using Open3D.

- “KITTI 3D Object Detection – A Deep Dive” – A detailed breakdown of the KITTI dataset and how to train models for 3D detection.

- “How to Implement PointNet in PyTorch” – A step-by-step implementation of the PointNet architecture for processing point clouds.

Industry Use Cases

NVIDIA DriveWorks

NVIDIA’s end-to-end platform for autonomous vehicles includes tools for 3D object detection, sensor fusion, and path planning.


Waymo’s Self-Driving Car Perception System

Learn how Waymo combines LIDAR, cameras, and machine learning to enable precise 3D object detection and obstacle avoidance.

Tesla Autopilot: Vision and 3D Perception

Explore how Tesla’s vision-based approach replaces LIDAR with cameras and deep learning for autonomous driving.