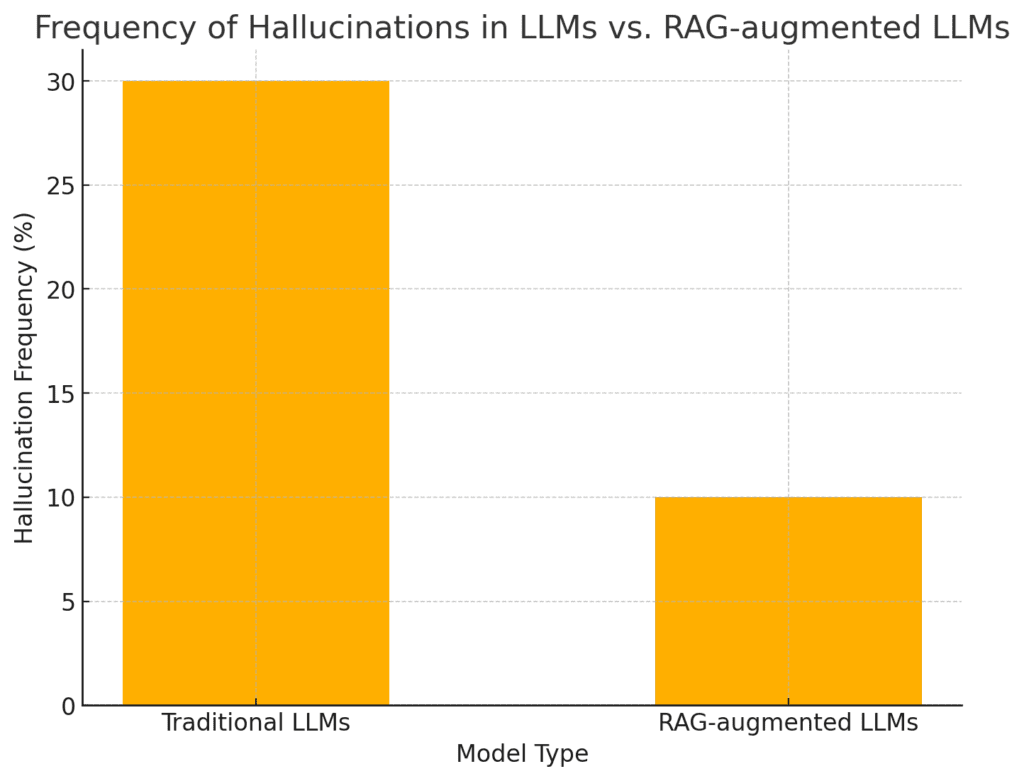

AI-generated text can be impressive, but there’s a significant issue: hallucinations.

These are the moments when the AI confidently provides information that’s entirely fabricated or misleading. Whether it’s a wrongly attributed quote, incorrect data, or just an entirely made-up fact, AI hallucinations can lead to trust issues.

Why does this happen? It often boils down to the fact that most large language models (LLMs) generate text based on patterns in vast datasets, not by verifying facts. The AI might sound credible, but that doesn’t guarantee accuracy. That’s where Retrieval-Augmented Generation (RAG) comes into play.

RAG: A Hybrid Approach to Accuracy

So what is RAG? Retrieval-Augmented Generation is a technique that combines the generative power of an LLM with retrieval from trusted external sources at query time. Instead of relying solely on what the model learned during training, a RAG system first searches specific databases or knowledge bases for passages relevant to the question, then hands those passages to the model as context. This combination helps mitigate hallucinations and improves factual reliability.

When RAG is used, the AI consults external documents, so the text it generates draws not only on prior training but also on current sources that the system’s builders have chosen to trust. This tends to produce more grounded, factually accurate output.
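To make the retrieve-then-generate loop concrete, here is a minimal Python sketch. It assumes a tiny in-memory knowledge base and uses naive word overlap for retrieval; `fake_llm` is a hypothetical stand-in for a real model call, so the example runs without any external service.

```python
from typing import Callable

# Hypothetical trusted knowledge base; in practice this would be a curated document store.
KNOWLEDGE_BASE = [
    "The Eiffel Tower was completed in 1889.",
    "Python was first released in 1991.",
    "Water boils at 100 °C at sea level.",
]

def tokenize(text: str) -> set[str]:
    """Lowercase, split on whitespace, and strip basic punctuation."""
    return {w.strip(".,?!").lower() for w in text.split()}

def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents that share the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def fake_llm(prompt: str) -> str:
    """Hypothetical LLM stand-in: echoes the first line of the supplied context."""
    context = prompt.split("Context:\n", 1)[1].split("\n\nQuestion:", 1)[0]
    return context.splitlines()[0]

def rag_answer(question: str, docs: list[str], llm: Callable[[str], str]) -> str:
    # 1. Retrieval: fetch supporting text at query time.
    context = retrieve(question, docs)
    # 2. Augmentation: place the retrieved text in the prompt.
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
    # 3. Generation: the model answers conditioned on that context.
    return llm(prompt)

print(rag_answer("When was the Eiffel Tower completed?", KNOWLEDGE_BASE, fake_llm))
# → The Eiffel Tower was completed in 1889.
```

Passing the model in as a callable keeps the retrieval logic independent of any particular LLM provider.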

How Does RAG Help AI Stay Grounded?

Unlike a standalone LLM, a RAG system reduces hallucinations by retrieving data from trusted sources at the moment of text generation. Imagine an AI answering a history question: rather than generating a response purely from its training, it searches a database, fetches relevant records, and integrates them into its response.

This dynamic retrieval lets the AI draw on up-to-date, vetted facts, which can substantially reduce the risk of misinformation. The more reliable the source, the more trustworthy the AI’s response. Think of it as the difference between a student who answers from what they remember of a textbook and one who checks a reference book right before answering.
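How well "checking the reference book" works depends on how the search step ranks documents. One common baseline, assumed here for illustration (production systems often use BM25 or dense embeddings instead), is TF-IDF weighting with cosine similarity. The sketch uses only the standard library, and the history snippets are made-up examples.

```python
import math
from collections import Counter

def tokens(text: str) -> list[str]:
    return [w.strip(".,?!").lower() for w in text.split()]

def tfidf_vectors(docs: list[str]) -> tuple[list[dict[str, float]], dict[str, float]]:
    """Build a TF-IDF vector per document; also return the IDF table for queries."""
    tokenized = [tokens(d) for d in docs]
    n = len(docs)
    df = Counter(term for toks in tokenized for term in set(toks))
    # Smoothed IDF so terms that appear in every document still get a weight above 0.
    idf = {t: math.log((1 + n) / (1 + c)) + 1 for t, c in df.items()}
    vecs = [{t: tf * idf[t] for t, tf in Counter(toks).items()} for toks in tokenized]
    return vecs, idf

def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm = math.sqrt(sum(w * w for w in a.values())) * math.sqrt(sum(w * w for w in b.values()))
    return dot / norm if norm else 0.0

def search(query: str, docs: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    vecs, idf = tfidf_vectors(docs)
    # Query terms never seen in the corpus carry no signal, so they are dropped.
    q = {t: tf * idf[t] for t, tf in Counter(tokens(query)).items() if t in idf}
    scored = [(cosine(q, v), d) for v, d in zip(vecs, docs)]
    return sorted(scored, reverse=True)[:top_k]

history_docs = [
    "The Battle of Hastings was fought in 1066.",
    "The Magna Carta was sealed in 1215.",
    "The printing press was developed by Johannes Gutenberg around 1440.",
]
for score, doc in search("When was the Magna Carta sealed?", history_docs, top_k=1):
    print(f"{score:.2f}  {doc}")
```

Rare terms such as "magna" and "carta" get higher IDF weights than words like "the" or "was", which is what pushes the relevant record to the top.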

Hallucinations in Context: Why They Happen

Even the best AI models hallucinate. Why? One major reason is that most LLMs are trained on vast amounts of unstructured text from the internet, which mixes credible and non-credible information. The training data doesn’t always differentiate between fact and fiction.

LLMs don’t “know” the truth; they predict text from statistical patterns, so they can produce inaccurate information with full confidence. This lack of factual grounding is what makes hallucinations so tricky.

With RAG, however, we see a shift from pattern-based generation to evidence-based generation. Because the model conditions its answer on passages pulled from up-to-date sources, it is less likely to hallucinate, although it can still misread or ignore the evidence it is given.
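In practice, much of "evidence-based generation" comes down to how the retrieved passages are presented to the model. Below is a minimal sketch, assuming a plain-text prompt with numbered sources: the instructions ask the model to answer only from the supplied passages, cite them, and abstain when they don’t contain the answer. The wording and the `Passage` type are illustrative assumptions, not a standard, and instructions like these reduce hallucinations rather than eliminate them.

```python
from dataclasses import dataclass

@dataclass
class Passage:
    source: str  # e.g. a document title or URL (illustrative)
    text: str

def build_grounded_prompt(question: str, passages: list[Passage]) -> str:
    """Number each passage so the model can cite it as [1], [2], ..."""
    sources = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer the question using ONLY the sources below. "
        "Cite sources as [n]. If the sources do not contain the answer, "
        "reply exactly: \"I don't know based on the provided sources.\"\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )

prompt = build_grounded_prompt(
    "When was the Magna Carta sealed?",
    [Passage("history-notes.txt", "The Magna Carta was sealed in 1215.")],
)
print(prompt)
```

Numbered citations also give reviewers a direct way to trace each claim in the answer back to the passage it came from.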

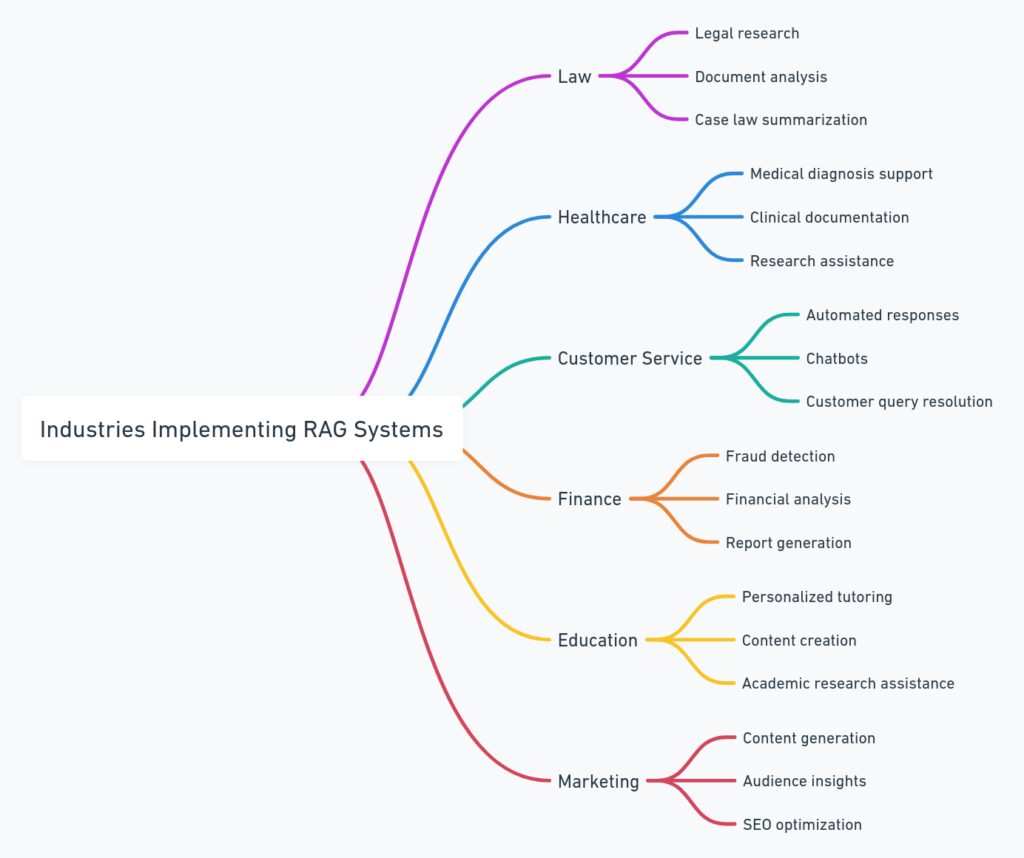

Real-World Applications of RAG in AI Systems

In today’s world, where information accuracy is paramount, industries are quickly adopting RAG systems to tackle the issue of hallucinations. From customer service bots to research assistants, AI models are being designed with RAG to ensure the information they provide is trustworthy.

For example, when a chatbot is tasked with answering complex legal questions, it can use RAG to pull in data from legal databases, helping ground its responses in current laws and regulations, provided those databases are kept up to date.

Similarly, in medical AI applications, RAG can fetch peer-reviewed studies or clinical guidelines, helping keep the information it provides aligned with current evidence.
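For the legal example above, grounding answers in current law is partly a retrieval policy. One way to sketch it, assuming each retrieved record carries a hypothetical provision identifier and effective date, is to keep only the newest version of each provision already in force before anything reaches the model. The records below are invented for illustration.

```python
from dataclasses import dataclass
from datetime import date

@dataclass(frozen=True)
class Provision:
    statute_id: str  # hypothetical identifier for a legal provision
    effective: date  # date this version took effect
    text: str

def latest_versions(hits: list[Provision], as_of: date) -> list[Provision]:
    """Keep the newest version of each provision that is in force on `as_of`."""
    newest: dict[str, Provision] = {}
    for p in hits:
        if p.effective > as_of:
            continue  # not yet in force on the query date
        current = newest.get(p.statute_id)
        if current is None or p.effective > current.effective:
            newest[p.statute_id] = p
    return list(newest.values())

# Invented example records: three versions of the same provision.
hits = [
    Provision("SEC-12", date(2019, 1, 1), "Filing deadline is 30 days."),
    Provision("SEC-12", date(2023, 7, 1), "Filing deadline is 45 days."),
    Provision("SEC-12", date(2030, 1, 1), "Filing deadline is 60 days."),
]
for p in latest_versions(hits, as_of=date(2024, 1, 1)):
    print(p.effective, p.text)
```

Filtering before generation means superseded text never appears in the prompt, so the model cannot accidentally cite it.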

RAG’s ability to retrieve real-time, credible information is a game changer in industries where accuracy and precision are vital.

The Technical Backbone of RAG

The magic behind RAG lies in its two-component design. First, there’s the retrieval module, which searches specific data sources, knowledge bases, or APIs; these sources are typically curated for accuracy. Second, there’s the generative model, which takes the retrieved information and uses it to compose a response.

This combination of retrieval and generation means that the model isn’t limited to what it learned during pre-training, which goes stale after the training cutoff. Instead, it can pull in current information from the most relevant sources, which improves factual accuracy and makes hallucinations less frequent.
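The two-module split maps naturally onto two small interfaces. The sketch below uses hypothetical names (`Retriever`, `Generator`, `StaticKB`, `MultiSourceRetriever`, `TemplateGenerator`, `RAGPipeline`) and shows a retrieval module that fans out over several sources and de-duplicates their results, plus a generation module that only sees what retrieval returned. `TemplateGenerator` is a placeholder where a real system would call an LLM.

```python
from typing import Protocol

class Retriever(Protocol):
    def retrieve(self, query: str) -> list[str]: ...

class Generator(Protocol):
    def generate(self, query: str, context: list[str]) -> str: ...

class StaticKB:
    """Hypothetical curated knowledge base, matched by simple keyword lookup."""
    def __init__(self, entries: dict[str, str]) -> None:
        self.entries = entries

    def retrieve(self, query: str) -> list[str]:
        q = query.lower()
        return [text for key, text in self.entries.items() if key in q]

class MultiSourceRetriever:
    """Retrieval module: queries every source and removes duplicate passages."""
    def __init__(self, sources: list[Retriever]) -> None:
        self.sources = sources

    def retrieve(self, query: str) -> list[str]:
        merged: list[str] = []
        for source in self.sources:
            for passage in source.retrieve(query):
                if passage not in merged:
                    merged.append(passage)
        return merged

class TemplateGenerator:
    """Placeholder for the generative model; a real system would call an LLM here."""
    def generate(self, query: str, context: list[str]) -> str:
        if not context:
            return "No supporting documents found."
        return f"Based on {len(context)} source(s): " + " ".join(context)

class RAGPipeline:
    def __init__(self, retriever: Retriever, generator: Generator) -> None:
        self.retriever = retriever
        self.generator = generator

    def answer(self, query: str) -> str:
        return self.generator.generate(query, self.retriever.retrieve(query))

archive = StaticKB({"gutenberg": "Gutenberg's press dates to around 1440."})
encyclopedia = StaticKB({
    "gutenberg": "Gutenberg's press dates to around 1440.",
    "press": "The press used movable metal type.",
})
pipeline = RAGPipeline(MultiSourceRetriever([archive, encyclopedia]), TemplateGenerator())
print(pipeline.answer("Tell me about the Gutenberg press."))
```

Because both modules sit behind interfaces, replacing a knowledge base with an API-backed source (or swapping the model) does not require touching the other half of the pipeline.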

Curating Reliable Sources: The Key to RAG’s Success

One critical aspect of making RAG effective is source selection. After all, if the AI is retrieving information from unreliable sources, it could end up generating misleading content despite its retrieval capabilities. Developers need to carefully curate and maintain the databases and knowledge repositories that feed into the retrieval module.

In a research setting, for example, a RAG system might pull from vetted databases such as PubMed (for biomedical literature) or from industry-specific repositories, so that the model draws on credible sources. This curation step is crucial for ensuring factual integrity.
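Curation can also be enforced in code at retrieval time. Here is a minimal sketch, assuming each retrieved hit carries a source URL: only hits whose host is on an allowlist reach the generator. The allowlist holds PubMed’s host purely as an illustration; in a real deployment its contents would come from the team curating the sources, and the example URLs are placeholders.

```python
from urllib.parse import urlparse

# Illustrative allowlist; a real one would be maintained by the curation team.
TRUSTED_HOSTS = {"pubmed.ncbi.nlm.nih.gov"}

def is_trusted(url: str) -> bool:
    """Accept exact matches and subdomains of an allowlisted host."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == h or host.endswith("." + h) for h in TRUSTED_HOSTS)

def filter_hits(hits: list[dict[str, str]]) -> list[dict[str, str]]:
    """Drop retrieved passages whose source is outside the allowlist."""
    return [hit for hit in hits if is_trusted(hit["url"])]

# Placeholder hits: one from an allowlisted host, one from an arbitrary site.
hits = [
    {"url": "https://pubmed.ncbi.nlm.nih.gov/placeholder/", "text": "Study abstract..."},
    {"url": "https://example.com/miracle-cure", "text": "Unverified claim..."},
]
print([hit["url"] for hit in filter_hits(hits)])
```

Matching on the parsed hostname rather than a substring of the URL prevents look-alike hosts such as `pubmed.ncbi.nlm.nih.gov.example.com` from slipping through.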

Overcoming Hallucinations: A Collaborative Effort

It’s essential to recognize that solving the problem of AI hallucinations isn’t just the responsibility of engineers and data scientists. Content creators, fact-checkers, and subject-matter experts all play a role in ensuring that the databases and knowledge sources used by AI are accurate and up-to-date.

Additionally, organizations must continually refine the retrieval sources, making sure the AI has access to the most relevant and factual data. It’s this collaborative effort that ensures AI-generated content remains trustworthy.

The Future of RAG in Ensuring Factual Integrity

As RAG systems continue to evolve, we’re likely to see even more advanced methods of verifying information. There’s a growing interest in developing real-time fact-checking systems that work alongside RAG models to ensure that every piece of generated content can be cross-checked for accuracy.
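Production fact-checking layers typically rely on trained models (for example, entailment classifiers) to judge whether each claim is supported. As a deliberately naive stand-in, the sketch below flags any generated sentence whose content words are mostly absent from the retrieved passages. The stopword list and the 0.6 threshold are arbitrary assumptions for illustration.

```python
import re

STOPWORDS = {"the", "a", "an", "in", "of", "was", "is", "and", "to", "it"}

def content_words(text: str) -> set[str]:
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}

def unsupported_sentences(answer: str, passages: list[str], threshold: float = 0.6) -> list[str]:
    """Return answer sentences whose content-word overlap with the evidence is below threshold."""
    evidence = set().union(*(content_words(p) for p in passages))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = content_words(sentence)
        if not words:
            continue  # nothing checkable in this sentence
        support = len(words & evidence) / len(words)
        if support < threshold:
            flagged.append(sentence)
    return flagged

passages = ["The Magna Carta was sealed in 1215 at Runnymede."]
answer = "The Magna Carta was sealed in 1215. It was written by Shakespeare."
print(unsupported_sentences(answer, passages))
# → ['It was written by Shakespeare.']
```

Word overlap misses paraphrases and cannot catch a sentence that reuses the evidence’s words while reversing its meaning, which is why this is only a sketch of the idea rather than a usable checker.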

We may also see the integration of expert oversight in specific fields like healthcare or law, where humans work hand-in-hand with AI to oversee the accuracy of retrieved information. This collaboration between AI and humans will likely define the next stage in AI-driven knowledge systems.

Conclusion: RAG as the Solution to Hallucinations in AI

The future of AI is promising, but overcoming hallucinations is crucial to building trust. RAG technology offers a practical solution by ensuring that AI systems pull in verifiable, up-to-date information.

As the adoption of AI expands across various industries, RAG stands out as a vital tool for ensuring that AI models deliver not just fluent but factually grounded responses.
