What is Real-Time Topic Modeling?

Imagine trying to make sense of thousands of documents in mere moments, identifying patterns that help drive decisions on the fly.

That’s where real-time topic modeling comes into play. It’s a way to surface the topics hidden in large streams of data as the data arrives. From social media trends to breaking news analysis, it lets businesses and researchers interpret incoming text quickly enough to respond to changes as they happen.

The catch is that real-time topic modeling is not as straightforward as regular batch processing. It requires fast, adaptive, and scalable approaches that can keep up with constantly changing data.

Introduction to Latent Dirichlet Allocation (LDA)

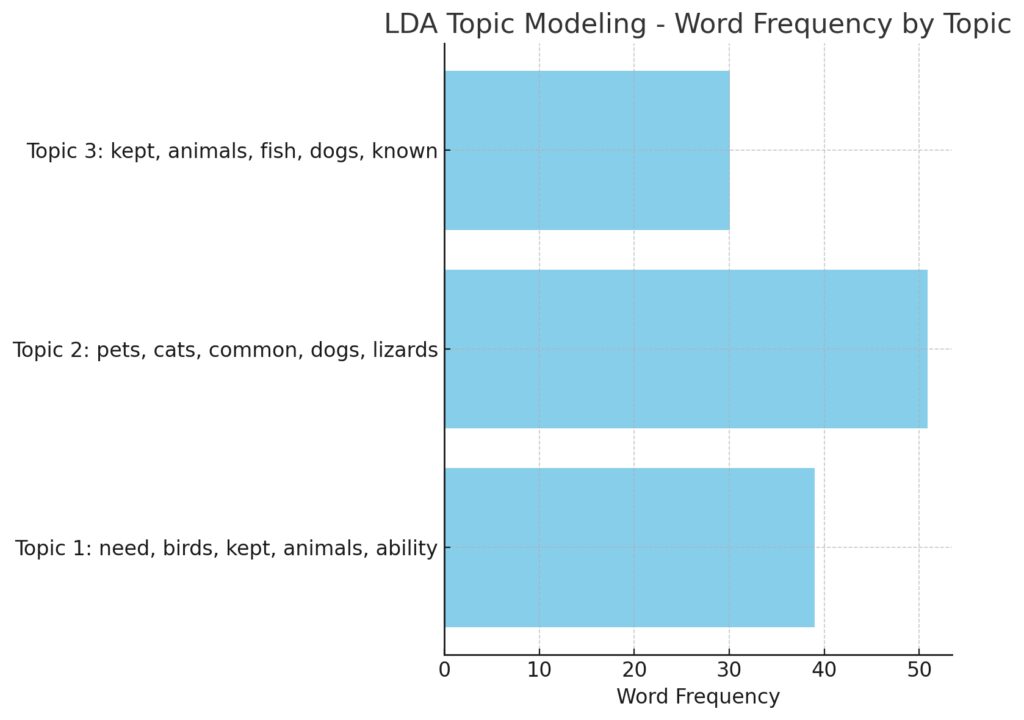

Latent Dirichlet Allocation (LDA) is one of the most popular methods for topic modeling. It assumes that each document is a mixture of topics and that each topic is a probability distribution over words. Essentially, LDA looks at which words tend to occur together across the documents in a dataset and, from those co-occurrence patterns, infers latent (hidden) topics.
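To make the mixture idea concrete, here is a minimal sketch of the generative story LDA assumes, written with NumPy. The two toy topics, the six-word vocabulary, and the names sample_document, topic_word, and alpha are illustrative assumptions, not part of any library.

```python
import numpy as np

def sample_document(topic_word, alpha, doc_length, rng):
    """Draw one document from the LDA generative process.

    topic_word: array of shape (num_topics, vocab_size); each row is a
                probability distribution over word ids for one topic.
    alpha:      concentration of a symmetric Dirichlet prior over topics.
    """
    num_topics, vocab_size = topic_word.shape
    # 1. Draw this document's mixture over topics.
    theta = rng.dirichlet(np.full(num_topics, alpha))
    words = []
    for _ in range(doc_length):
        # 2. Pick a topic for this word position from the document's mixture.
        z = rng.choice(num_topics, p=theta)
        # 3. Pick a word id from that topic's word distribution.
        words.append(int(rng.choice(vocab_size, p=topic_word[z])))
    return theta, words

rng = np.random.default_rng(0)
# Two toy topics over a six-word vocabulary (values are made up).
topic_word = np.array([
    [0.4, 0.4, 0.2, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.3, 0.3, 0.4],
])
theta, doc = sample_document(topic_word, alpha=0.5, doc_length=10, rng=rng)
```

Fitting LDA amounts to running this story in reverse: starting from the observed words and inferring the topic mixtures and word distributions that most plausibly produced them.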

While highly effective in batch settings, LDA wasn’t originally designed for real-time applications. Applying it to streaming data brings a host of new challenges. But first, why should we even bother with real-time insights?

The Importance of Real-Time Insights

In today’s fast-paced world, waiting to analyze data can be costly. For instance, in the world of social media marketing, trends shift quickly. A viral post today might be irrelevant tomorrow. Brands that can capture these shifts in real-time have a competitive edge.

Real-time topic modeling helps in customer feedback analysis, detecting emerging trends, and even in more serious fields like fraud detection or cybersecurity, where delays in spotting trends could lead to catastrophic outcomes.

Now, let’s dive into the specific challenges we face when using LDA in real-time settings.

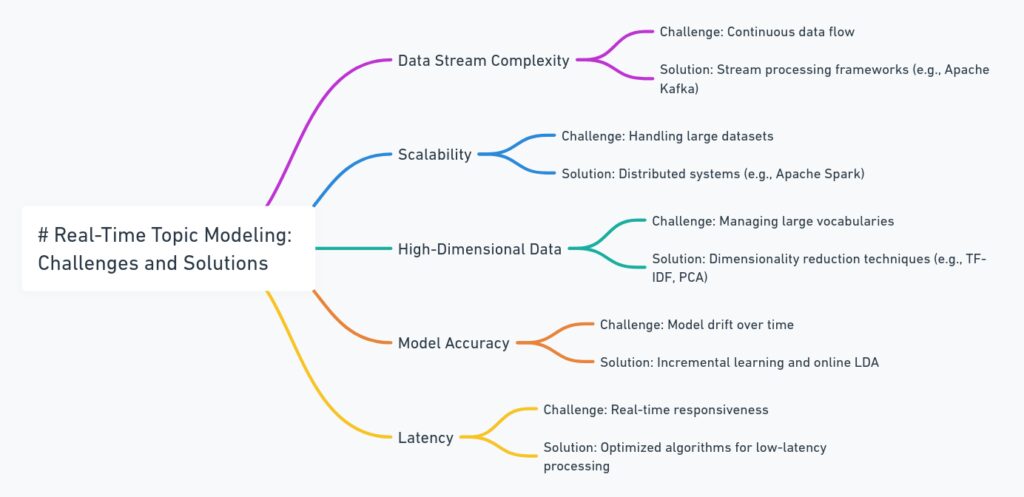

Challenges of Real-Time Topic Modeling with LDA

Applying LDA to real-time data streams is tricky for several reasons. First, the nature of data streams means that the data is constantly evolving—new topics may emerge, and old ones may disappear.

Second, real-time analysis demands quick results, and traditional LDA can take a long time to train. Finally, there’s the issue of scalability, where processing huge volumes of data quickly can strain even the most powerful systems.

But don’t worry—there are solutions to these problems, and we’ll cover those soon.

Data Stream Complexity and Dynamic Nature

One major challenge is the dynamic nature of data streams. In a traditional LDA model, you feed in a fixed set of documents, run the algorithm, and wait for the topics to emerge. But in real-time settings, the data keeps coming in continuously, which means you have to constantly update your model. What’s more, the topics in real-time data might shift drastically from one moment to the next.

For instance, in news analysis, the topic distribution changes as new stories break. Your LDA model needs to be adaptive enough to capture these shifts without lagging behind.
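One way to notice such shifts is to compare the average topic mix of one time window with the next. The sketch below uses Jensen-Shannon divergence for that comparison. The function names, the toy distributions, and the 0.1 threshold are assumptions chosen for illustration, and a real threshold would need tuning on your own stream.

```python
import numpy as np

def js_divergence(p, q, eps=1e-12):
    """Jensen-Shannon divergence (base 2) between two distributions."""
    p = np.asarray(p, dtype=float) + eps  # eps avoids log(0)
    q = np.asarray(q, dtype=float) + eps
    p, q = p / p.sum(), q / q.sum()
    m = 0.5 * (p + q)

    def kl(a, b):
        return float(np.sum(a * np.log2(a / b)))

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)

def has_drifted(previous_window, current_window, threshold=0.1):
    """Flag drift when the topic mix moves by more than `threshold`."""
    return js_divergence(previous_window, current_window) > threshold

# Average topic proportions in two consecutive windows (made-up numbers).
morning = [0.70, 0.20, 0.10]
breaking_news = [0.10, 0.20, 0.70]
drift_flag = has_drifted(morning, breaking_news)
```

A drift flag like this could be used to trigger a faster learning rate, a model refresh, or simply an alert for a human to look at.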

Scalability Issues in LDA for Real-Time Applications

When processing large data streams, scalability is a big concern. Traditional LDA works well with small to moderate-sized datasets, but real-time applications often involve huge streams of data. Processing this data efficiently, while maintaining high performance, can be tough.

The more data you have, the more computational resources you’ll need. Scaling LDA to handle terabytes of data in a short timeframe is where many businesses struggle. Without effective solutions, bottlenecks in performance can occur, making real-time topic modeling impractical.

Handling High-Dimensional Data in Real-Time

Another difficulty is dealing with high-dimensional data. Text data, by nature, involves thousands of unique terms or features, which makes the data high-dimensional. In a real-time scenario, the challenge lies in continuously handling this high-dimensional space while keeping the computations fast and accurate.

To complicate matters, as new data comes in, the dimensionality may grow even higher, making it harder for LDA to identify topics without introducing significant delays. Without efficient techniques for reducing dimensionality, real-time processing can quickly slow to a crawl.
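One common workaround, offered here as an illustration rather than something LDA itself requires, is the hashing trick: map every token to one of a fixed number of buckets so the feature space never grows, even when new words appear. The bucket count and the function name below are assumptions.

```python
import zlib
from collections import Counter

NUM_BUCKETS = 2 ** 18  # fixed feature dimension; an arbitrary choice

def hashed_bag_of_words(tokens, num_buckets=NUM_BUCKETS):
    """Map tokens to counts over a fixed number of hash buckets."""
    counts = Counter()
    for token in tokens:
        # crc32 is stable across runs, unlike Python's built-in hash().
        bucket = zlib.crc32(token.encode("utf-8")) % num_buckets
        counts[bucket] += 1
    return dict(counts)

# A never-before-seen word still lands in one of the existing buckets.
vector = hashed_bag_of_words(["storm", "warning", "storm", "neologism2025"])
```

The trade-off is that different words can collide in the same bucket, and bucket ids are not human-readable, so the resulting topics are harder to interpret than with an explicit vocabulary.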

Delays in Model Training and Prediction

LDA can be slow. It typically requires several iterations to converge, especially when the data is large and complex. In a real-time setting, you don’t have the luxury of waiting for a model to train for hours. You need insights now.

Prediction latency is another concern. Even after training, assigning topics to a new document is not a simple lookup: the model has to run an iterative inference step for each document, and that takes time. If it takes too long, a gap opens between data ingestion and actionable insight, which defeats the whole purpose of real-time topic modeling.
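Before optimizing anything, it helps to measure where the time actually goes. The sketch below times each call to an inference function using only the standard library. Here fake_infer is a hypothetical stand-in for whatever model call you use, and the batches are made up.

```python
import statistics
import time

def timed_calls(func, batches):
    """Run func on each batch and return (results, latencies in seconds)."""
    results, latencies = [], []
    for batch in batches:
        start = time.perf_counter()
        results.append(func(batch))
        latencies.append(time.perf_counter() - start)
    return results, latencies

def fake_infer(batch):
    # Hypothetical stand-in for a real topic-inference call.
    return [len(doc.split()) for doc in batch]

batches = [["a b c", "d e"], ["f"], ["g h", "i j k l"]]
results, latencies = timed_calls(fake_infer, batches)
median_latency = statistics.median(latencies)
worst_latency = max(latencies)
```

Tracking the worst case alongside the median matters in streaming settings, since a single slow batch can back up everything queued behind it.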

Real-Time Data Preprocessing Hurdles

Before you even run LDA, there’s the issue of data preprocessing. In real-time applications, you’re dealing with raw, unstructured text that needs to be cleaned, tokenized, and transformed into a format suitable for LDA.

This preprocessing must be done on the fly, adding extra layers of complexity. If your preprocessing pipeline isn’t efficient, it will introduce delays that could make your real-time analysis feel anything but real-time. Individual steps such as stopword removal are cheap per document, but heavier steps like stemming or lemmatization add up at scale and can slow down your pipeline.
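A minimal sketch of on-the-fly preprocessing might look like the following. It uses a generator so each document is cleaned as it arrives instead of waiting for the whole stream. The tiny stopword list and the function names are assumptions for illustration, and stemming is left out to keep the example dependency-free.

```python
import re

# A tiny illustrative stopword list; real pipelines use a fuller one.
STOPWORDS = frozenset({"a", "an", "and", "the", "is", "in", "of", "to", "it"})
TOKEN_PATTERN = re.compile(r"[a-z]+")  # compiled once, reused per document

def preprocess(text):
    """Lowercase, tokenize, and drop stopwords and one-letter tokens."""
    tokens = TOKEN_PATTERN.findall(text.lower())
    return [t for t in tokens if t not in STOPWORDS and len(t) > 1]

def preprocess_stream(texts):
    """Lazily preprocess an iterable of raw texts, one document at a time."""
    for text in texts:
        tokens = preprocess(text)
        if tokens:  # skip documents that end up empty
            yield tokens

incoming = ["The storm is moving to the coast!", "...", "Markets rally in Asia"]
cleaned = list(preprocess_stream(incoming))
```

Compiling the pattern once and using a frozenset for stopword lookups keeps the per-document cost low, which is the main thing that matters when this function sits in the hot path.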

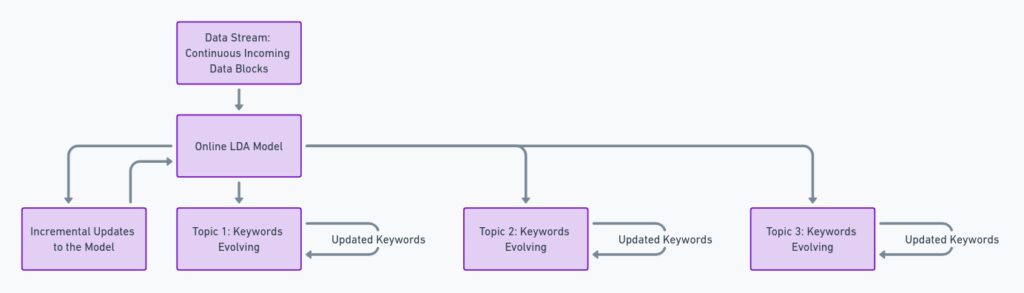

Adaptive Algorithms: Evolving LDA Models

To address the challenges posed by dynamic data streams, adaptive algorithms offer a significant advantage. Traditional LDA operates under the assumption that the dataset is static, but real-time environments are anything but. Online LDA and streaming LDA models are specifically designed to adapt as new data flows in. These models update themselves incrementally rather than requiring a full re-training every time fresh data appears.

For example, online LDA, introduced by Matthew Hoffman, David Blei, and Francis Bach, works by breaking the data into mini-batches and updating the model’s topic estimates as each new batch is processed. This way, you avoid the time-consuming process of retraining the model from scratch every time new documents come in. With such adaptability, real-time models stay relevant even as the data evolves.
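As a rough sketch of the mini-batch idea, scikit-learn’s LatentDirichletAllocation exposes a partial_fit method that updates the model one batch at a time. The example pairs it with HashingVectorizer so the feature space stays fixed between batches. The documents, the two-topic setting, and the feature size are toy assumptions.

```python
from sklearn.decomposition import LatentDirichletAllocation
from sklearn.feature_extraction.text import HashingVectorizer

# Stateless vectorizer with a fixed feature size, so there is no
# vocabulary to refit when new words show up. norm=None and
# alternate_sign=False keep the output as non-negative raw counts.
vectorizer = HashingVectorizer(
    n_features=2 ** 10, alternate_sign=False, norm=None, stop_words="english"
)
lda = LatentDirichletAllocation(
    n_components=2, learning_method="online", random_state=0
)

mini_batches = [
    ["the storm hit the coast", "flood warning issued for the coast"],
    ["markets rally as stocks climb", "investors buy stocks after rally"],
]
for batch in mini_batches:
    counts = vectorizer.transform(batch)  # sparse matrix of token counts
    lda.partial_fit(counts)               # incremental update, no full retrain

# Infer the topic mixture of a new document with the current model.
new_doc = vectorizer.transform(["storm warning for coast"])
topic_mix = lda.transform(new_doc)
```

With so little data the topics themselves are not meaningful. The point is the shape of the loop: transform a batch, update the model, and keep serving predictions from the same object.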

Streamlining LDA with Online Learning

Online learning methods allow LDA to handle new data points continuously and efficiently. The difference between batch learning and online learning is simple: while batch learning waits for all data to be available before training, online learning updates the model with every new data point or batch of data. This approach keeps the topic model fresh, always aligned with the most current trends.

One key technique is variational inference for LDA, which quickly finds an approximate solution for new data instead of computing the exact posterior distribution, which is intractable for LDA. Online LDA builds on it: each mini-batch produces an approximate update that is blended into the current model. By leveraging online learning, organizations can save both time and computational power, making real-time insights more achievable.
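The way online LDA folds a new batch into the model can be sketched in a few lines. In the Hoffman et al. formulation, batch t is weighted by a step size (tau0 + t) ** (-kappa), and the running topic parameters move toward the estimate computed from that batch. The arrays below are toy values and the function names are made up for illustration. scikit-learn exposes the two constants as learning_offset and learning_decay, and Gensim as offset and decay.

```python
import numpy as np

def learning_rate(t, tau0=1.0, kappa=0.7):
    """Step size for mini-batch t; it shrinks as more batches are seen."""
    return (tau0 + t) ** (-kappa)

def blend(topic_params, batch_estimate, t):
    """Move the running topic parameters toward the new batch's estimate."""
    rho = learning_rate(t)
    return (1.0 - rho) * topic_params + rho * batch_estimate

topic_params = np.array([[1.0, 1.0, 1.0]])    # toy running estimate
batch_estimate = np.array([[4.0, 1.0, 1.0]])  # toy estimate from one batch
updated = blend(topic_params, batch_estimate, t=1)
```

A larger kappa makes the model forget old batches more slowly, which gives stabler topics but a slower reaction to change. For fast-moving streams that trade-off is usually the first thing worth tuning.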

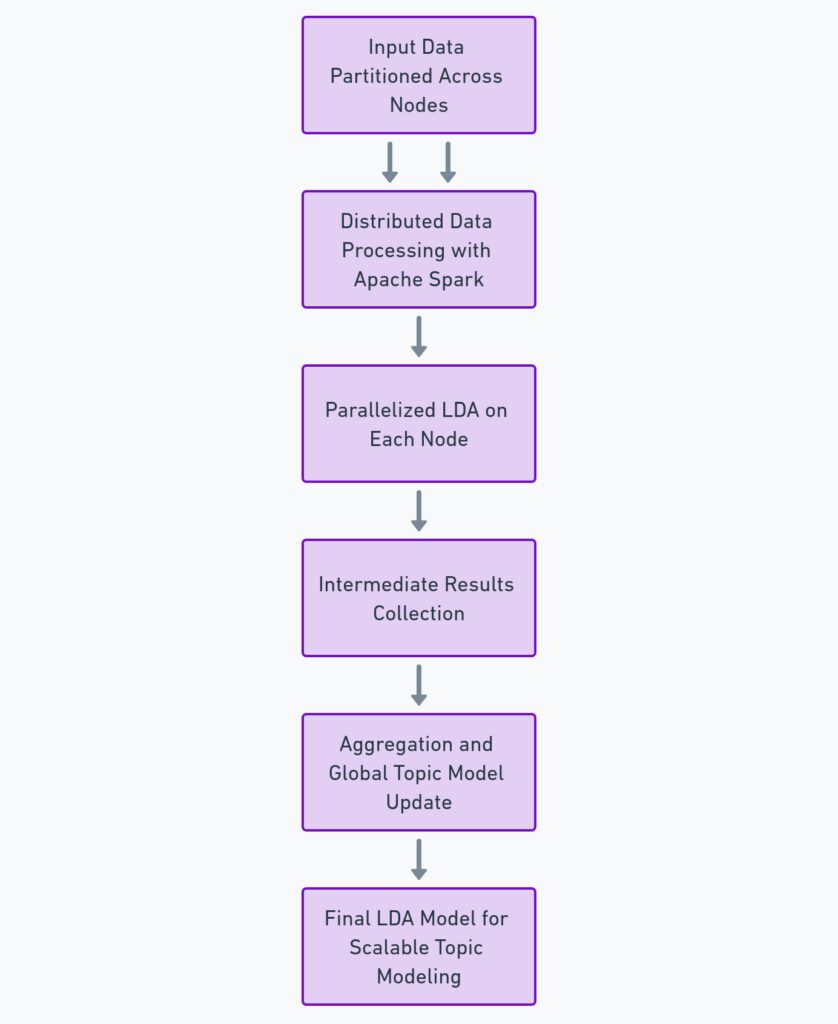

Leveraging Distributed Systems for Scalability

Scalability issues often arise when LDA is applied to large-scale, real-time datasets. The good news is that we can scale LDA models by distributing the computational workload across multiple machines. Distributed systems like Apache Spark or Hadoop offer frameworks that allow for parallel processing, making LDA scalable to vast data streams.

These systems break down the data and computations into smaller tasks that can be handled simultaneously by different machines. This approach drastically reduces the time it takes to process large data sets, making real-time topic modeling more efficient and manageable, even for companies with limited resources.

For instance, Spark’s MLlib provides a distributed LDA implementation with both an EM optimizer and an online variational optimizer, so training on large datasets can be spread across a cluster. This lets the workload scale horizontally as data volumes grow, although throughput and topic quality still depend on cluster size and tuning.

Optimizing Real-Time LDA: Hardware Solutions

Sometimes, the solution to slow LDA performance isn’t just in the algorithm but in the hardware. GPU acceleration is one way to boost the speed of LDA computations. GPUs, or graphics processing units, are designed to run many operations in parallel, which suits the matrix-style arithmetic at the core of LDA better than a traditional CPU does. By offloading those operations to GPUs, you can substantially reduce training and inference time, which helps large-scale topic models keep pace with incoming data.

Additionally, using cloud-based infrastructure provides the flexibility to scale hardware resources as needed. Many companies are now leveraging platforms like Google Cloud or AWS to allocate resources dynamically. This way, as the data stream grows, the infrastructure can automatically scale up, ensuring that the LDA model keeps up with the data flow without hitting a performance wall.

Case Studies: Real-World Applications of LDA in Real-Time

Several industries are already harnessing the power of real-time LDA for various applications. In social media analytics, LDA is used to detect emerging trends and monitor public sentiment. For example, during a major event like a product launch, companies can use real-time LDA to capture trending topics and quickly respond to consumer feedback.

In cybersecurity, real-time LDA models are being applied to detect potential threats by analyzing patterns in network traffic. Sudden spikes in certain topics or clusters could indicate suspicious activity, allowing security teams to take preventive measures before a breach occurs.

Another exciting application is in news and media organizations, where LDA helps identify trending stories in real-time. By continuously processing news articles as they are published, media companies can offer curated content based on the latest hot topics, keeping their audiences engaged and informed.

Tools and Technologies to Overcome LDA Challenges

To successfully implement real-time topic modeling with LDA, having the right tools and technologies is essential. Several platforms and libraries are available to help you manage the complexity, scalability, and processing speed issues that arise when using LDA in real-time.

One of the most popular frameworks is Gensim, an open-source Python library designed for topic modeling and document similarity analysis. Gensim’s online LDA feature allows you to process large amounts of data incrementally, making it well suited to real-time applications. Similarly, scikit-learn’s LatentDirichletAllocation supports an online learning method and a partial_fit method for mini-batch updates, so it can also be used incrementally, as long as the feature space stays fixed across batches.

Apache Kafka also plays a critical role in real-time data processing. By providing a robust platform for managing data streams, Kafka allows for seamless ingestion of real-time data, which can then be fed directly into your LDA pipeline. Kafka is often paired with Apache Spark for distributed processing, ensuring that you can handle vast amounts of data in real-time without performance lags.
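Whatever the message source, the step between ingestion and the model is usually a small batching layer. The sketch below groups any iterable of messages into mini-batches. A plain Python list stands in for a Kafka consumer here, and the function name and batch size are assumptions.

```python
from itertools import islice

def micro_batches(stream, batch_size):
    """Group any iterable of messages into lists of up to batch_size items."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch

# A plain list stands in for a message consumer in this sketch.
messages = [f"doc-{i}" for i in range(7)]
batches = list(micro_batches(messages, batch_size=3))
```

With a truly unbounded stream you would also want a time-based flush, so that a quiet period does not leave a partial batch waiting indefinitely.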

Moreover, Google Cloud’s BigQuery offers real-time data analytics solutions with built-in machine learning capabilities. You can use it to process and analyze data streams, scaling up as necessary to accommodate larger data volumes without impacting performance.

Future Directions for Real-Time Topic Modeling

Looking forward, the field of real-time topic modeling is evolving rapidly. One exciting area of research is the integration of neural networks with LDA. While LDA is a probabilistic model, researchers are exploring how deep learning techniques can enhance topic modeling by capturing more complex patterns in the data. This fusion of deep learning and LDA, sometimes referred to as neural topic models, promises to improve the accuracy and speed of real-time applications.

Another promising development is the rise of AutoML (Automated Machine Learning) frameworks, which could automate the process of selecting the best LDA configuration for a given task. AutoML systems could continuously optimize real-time LDA models, ensuring they stay relevant without the need for constant human intervention.

The future might also bring more advancements in edge computing, where LDA models can be deployed directly on devices closer to the data source. This would reduce the latency caused by sending data to a central server for processing, making real-time topic modeling faster and more efficient.

By addressing the core challenges and adopting the right tools, real-time topic modeling with LDA is becoming increasingly feasible. As technologies evolve, it’s exciting to see where this powerful combination of data science and real-time analytics will take us!

FAQs on Real-Time Topic Modeling with LDA

Why is real-time topic modeling important?

In fast-paced industries like social media, finance, or cybersecurity, real-time insights allow organizations to respond to trends or issues immediately. Whether it’s detecting emerging topics, identifying anomalies, or adjusting marketing strategies, real-time topic modeling helps make data-driven decisions on the fly.

What are the biggest challenges with using LDA in real-time?

Real-time LDA poses challenges like handling the dynamic nature of data streams, scalability issues, managing high-dimensional data, and minimizing delays in model training and predictions. Preprocessing data efficiently and adapting models to changing data are also significant hurdles.

How does online LDA differ from traditional LDA?

Traditional LDA is trained in batch mode, requiring the full dataset to be available beforehand. Online LDA, however, processes data incrementally in mini-batches. It updates the model continuously as new documents arrive, making it suitable for real-time applications where data is constantly being generated.

Can LDA handle large-scale data in real-time?

Yes, but it requires optimization through methods like online learning, distributed systems (e.g., Apache Spark), or cloud-based infrastructure. Scalability issues can be addressed by distributing the computational workload across multiple machines or leveraging GPU acceleration.

What is online learning, and how does it help in real-time LDA?

Online learning is a machine learning approach where the model updates itself as new data points arrive. In the context of LDA, it allows the topic model to evolve incrementally rather than retraining from scratch, saving time and resources while maintaining accuracy.

What tools are available for real-time topic modeling?

Popular tools include Gensim, Apache Spark, and Kafka. Gensim offers online LDA, while Spark and Kafka provide scalable platforms for processing data streams and distributing computational tasks across clusters.

What role do adaptive algorithms play in real-time LDA?

Adaptive algorithms enable the LDA model to evolve as new data comes in. These algorithms ensure the model stays up-to-date, continuously improving its topic predictions as data streams change, without requiring complete retraining.

Can real-time topic modeling be used in industries other than tech?

Absolutely. Fields like healthcare, marketing, and education benefit from real-time topic modeling. In healthcare, it helps in analyzing patient feedback, while marketers use it for understanding consumer trends in real-time. Education platforms can use it to monitor student engagement across learning materials.

What are some real-world applications of real-time LDA?

Real-time LDA is used in social media monitoring, customer feedback analysis, cybersecurity (to detect emerging threats), and news organizations (for identifying trending stories). Businesses can also use it for analyzing large datasets of customer reviews or survey responses as they come in.

What is the difference between batch and streaming LDA?

Batch LDA processes a fixed dataset, meaning it cannot handle new data without retraining the model. Streaming LDA, on the other hand, processes data incrementally, adapting to changes in real-time, making it ideal for continuous data streams like social media posts or news articles.

Can LDA models be deployed on edge devices?

Yes, with the growing capabilities of edge computing, LDA models can be deployed on edge devices to process data closer to the source, minimizing latency and improving the speed of real-time topic modeling.

How can hardware optimizations improve real-time LDA?

Using GPU acceleration or cloud-based infrastructure can significantly speed up the computations involved in LDA. GPUs are highly efficient at parallel processing, while cloud platforms like Google Cloud or AWS can dynamically scale resources as data streams increase.

What is the future of real-time topic modeling with LDA?

The future includes advancements in neural topic models, combining deep learning with LDA to improve accuracy. AutoML is also emerging, offering automated model optimization, and edge computing will play a bigger role in deploying LDA models for faster, decentralized real-time analysis.

How does real-time topic modeling help with customer feedback analysis?

Real-time topic modeling allows companies to analyze customer feedback as it comes in, identifying emerging issues or trends quickly. This helps brands respond faster to complaints, optimize products based on user suggestions, and even capitalize on positive trends. With LDA, companies can continuously refine their understanding of what customers are talking about, enabling them to stay proactive in meeting customer needs.

Can LDA be used for multilingual data streams in real-time?

Yes, LDA can be applied to multilingual datasets, but it requires preprocessing steps like tokenization and language detection. Some multilingual LDA models are designed to handle text in multiple languages simultaneously. By combining language identification tools with LDA, companies can analyze real-time feedback from diverse regions and customer bases, ensuring global reach without losing precision.

Is there a difference between topic modeling and text classification?

Yes, topic modeling and text classification serve different purposes. Topic modeling, as seen with LDA, is unsupervised, meaning it discovers latent topics within a dataset without predefined categories. Text classification, on the other hand, is a supervised learning method that assigns labels or categories to texts based on a training dataset. Real-time LDA focuses on discovering trends and patterns, while classification assigns known topics to incoming data.

How does real-time topic modeling compare with sentiment analysis?

Real-time topic modeling identifies the topics or themes being discussed in a dataset, while sentiment analysis focuses on detecting the tone (positive, negative, neutral) of the data. These two techniques can be used together—real-time topic modeling can surface what people are talking about, and sentiment analysis can reveal how they feel about those topics. Together, they provide a more comprehensive picture of real-time conversations.

What are the key differences between traditional and real-time preprocessing for LDA?

In traditional LDA, preprocessing happens once before running the model, involving tasks like tokenization, stopword removal, and stemming. For real-time LDA, preprocessing must occur continuously as new data streams in. This adds complexity since the system needs to handle noisy, unstructured data quickly without introducing delays. Efficient pipelines, including real-time tokenization, cleaning, and transformation, are critical for maintaining real-time performance.

What are the performance metrics used to evaluate real-time LDA models?

Common performance metrics include perplexity and coherence score. Perplexity measures how well the LDA model predicts a set of unseen documents, where a lower perplexity indicates a better model fit. Coherence score evaluates how consistent and interpretable the topics are. In real-time applications, operational measures matter too: latency (time from ingestion to topic assignment), throughput (documents processed per second), and how well topic quality holds up as the data stream shifts.
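Perplexity is easier to reason about once you see the arithmetic: it is the exponential of the negative average log-likelihood per held-out token. The sketch below computes it from a list of per-token log-probabilities. The function name and the toy inputs are assumptions, and in practice the log-probabilities would come from your model.

```python
import math

def perplexity(token_log_probs):
    """Perplexity from natural-log probabilities of each held-out token."""
    if not token_log_probs:
        raise ValueError("need at least one token")
    average_log_likelihood = sum(token_log_probs) / len(token_log_probs)
    return math.exp(-average_log_likelihood)

# A model that spreads probability evenly over 4 words, versus one that
# assigns probability 0.9 to every token it actually sees.
uniform = [math.log(0.25)] * 8
confident = [math.log(0.9)] * 8
uniform_score = perplexity(uniform)
confident_score = perplexity(confident)
```

Read this way, a perplexity of about 4 means the model is roughly as uncertain as if it were choosing uniformly among 4 words at each position.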

How does distributed computing improve real-time LDA?

Distributed computing splits the computational workload across several machines or processors, which allows large datasets to be processed in parallel. In real-time LDA, distributed systems like Apache Spark enable models to scale horizontally as the data stream increases. This ensures that the model remains responsive, regardless of the volume of incoming data, helping maintain low latency and high throughput for real-time applications.

What role does feature engineering play in real-time LDA?

Feature engineering transforms raw text into a more structured format suitable for LDA. The standard input is a bag-of-words representation of raw word counts, which matches LDA’s generative assumptions. Term frequency-inverse document frequency (TF-IDF) scores are sometimes used as well, for example to filter out uninformative words before counting. In real-time LDA, feature engineering needs to be fast and efficient, as each document or batch of data must be processed on the fly. Done well, it improves the quality and accuracy of the topics produced by the model.

Can neural networks replace LDA for real-time topic modeling?

Neural topic models are an emerging alternative to LDA, leveraging deep learning to capture more complex structures in text. While traditional LDA is simpler and faster, especially with real-time data, neural networks can provide more nuanced topic representations. However, the complexity and computational costs of neural networks make them less ideal for real-time processing unless the model is kept small or inference is accelerated, for example on GPUs with frameworks like TensorFlow or PyTorch.

What are some pitfalls to avoid when using LDA in real-time?

One pitfall is neglecting data drift, where topics and patterns in the data evolve over time. If your LDA model doesn’t adapt to these changes, it can become outdated quickly. Another common mistake is overloading the model with too many topics or not fine-tuning hyperparameters like the number of topics and iterations. Finally, inefficient preprocessing pipelines can cause bottlenecks, defeating the purpose of real-time modeling.

How can businesses improve topic quality in real-time LDA?

Businesses can improve topic quality by ensuring clean, well-processed data before it enters the LDA model. Optimizing hyperparameters also helps, such as the number of topics and alpha, the Dirichlet prior on each document’s topic distribution (smaller values push each document toward fewer topics). Regular evaluation and retraining can refine the model’s accuracy over time, ensuring that it stays relevant to the latest trends and data streams.
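To get a feel for what alpha does, you can sample document-topic mixtures directly from the Dirichlet prior and see how concentrated they are. This sketch uses NumPy only. The five-topic setting, the sample count, and the function name are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
num_topics = 5

def average_top_topic_share(alpha, samples=1000):
    """Mean weight of the largest topic across sampled documents."""
    mixes = rng.dirichlet(np.full(num_topics, alpha), size=samples)
    return float(mixes.max(axis=1).mean())

sparse_share = average_top_topic_share(alpha=0.1)   # one topic dominates
smooth_share = average_top_topic_share(alpha=10.0)  # topics share evenly
```

Short texts such as social media posts usually cover one or two themes, so a small alpha tends to be a sensible starting point there, while long, wide-ranging documents may suit a larger value.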

Can LDA handle non-textual data in real-time?

LDA is typically used for text data, but with modifications, it can be applied to other types of data like images or audio by converting them into suitable representations. For instance, bag-of-visual-words models are used to apply LDA-like techniques to images. In real-time applications, this would require an additional layer of feature extraction to convert non-textual data into a format that LDA can process.

Resources for Learning and Implementing Real-Time Topic Modeling with LDA

- Gensim Documentation

Gensim is one of the most widely used Python libraries for topic modeling. It provides an implementation of online LDA that allows for processing large-scale and real-time data.

  - Gensim Official Documentation

- Apache Spark and MLlib

Spark’s MLlib library includes distributed LDA implementations that allow for handling massive datasets in real-time. Spark is excellent for scaling and distributing your LDA processes.

- Online LDA by Matthew Hoffman

Matthew Hoffman’s paper on Online Learning for Latent Dirichlet Allocation provides the foundation for many online LDA implementations. It’s an essential read for understanding how LDA can be adapted to real-time settings.

  - Hoffman et al. Paper on Online LDA

- Kafka and Real-Time Data Processing

Apache Kafka is a distributed event streaming platform used to build real-time data pipelines and applications. Kafka can handle real-time data ingestion for LDA.

- Introduction to Latent Dirichlet Allocation by Blei, Ng, and Jordan

The original paper introducing LDA by its creators, David Blei, Andrew Ng, and Michael Jordan, is a must-read to understand the theory behind the LDA model.

  - Blei et al. LDA Paper

- Google Cloud BigQuery ML

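BigQuery is Google Cloud’s fully managed data warehouse, and BigQuery ML adds machine learning tools for building and running models on that data at scale. Check the documentation for the currently supported model types before planning a topic modeling workflow around it.

  - BigQuery ML Documentation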

- Real-Time Natural Language Processing with Python

This book covers real-time processing of text data using Python and includes detailed examples on using libraries like Gensim and NLTK.

  - Real-Time Natural Language Processing with Python

- Variational Inference for LDA

Variational inference is a method that speeds up the estimation of topic models, making it suitable for real-time applications. This resource provides insights into how variational inference works with LDA.

- TensorFlow for Neural Topic Modeling

TensorFlow allows you to implement neural topic models, an alternative to traditional LDA, which leverages deep learning for real-time topic modeling.

  - TensorFlow Neural Topic Modeling Guide

- AWS Real-Time Analytics Solutions

Amazon Web Services (AWS) provides cloud-based services that can be used for real-time topic modeling, combining tools like Amazon Kinesis for streaming data and AWS Lambda for serverless computing.