As models become more complex, there’s a growing interest in combining powerful architectures to achieve even greater performance.

One such combination is merging ResNets with Transformers, an exciting trend that opens up a realm of possibilities in machine learning.

In this article, we will explore how this hybrid architecture approach works, its benefits, and its real-world applications.

Why Combine ResNets with Transformers?

ResNets (Residual Networks) and Transformers are both game-changers in their respective fields. But why bring them together?

ResNets excel at processing images by leveraging residual connections, while Transformers revolutionized NLP with self-attention, which lets a model weigh every part of its input against every other part. Combining the two can bring major improvements, especially in computer vision and multimodal tasks.

Leveraging the Power of ResNets in Image Processing

At its core, a ResNet is designed to keep very deep networks trainable. Its shortcut (skip) connections add each block's input to its output, so information and gradients can pass across layers. This eases the vanishing gradient and degradation problems that affect plain deep stacks.
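The shortcut idea can be sketched in a few lines of NumPy. This is a minimal illustration, not a real ResNet block: two small linear layers stand in for the convolutions and normalization, and the names `residual_block`, `w1`, and `w2` are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Compute relu(x + F(x)); F is two linear layers standing in for convolutions."""
    fx = relu(x @ w1) @ w2    # the residual branch F(x)
    return relu(x + fx)       # the shortcut adds the input back before the activation

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))            # 4 samples, 8 features
w1 = rng.normal(size=(8, 8)) * 0.1
w2 = np.zeros((8, 8))                  # residual branch deliberately set to zero

y = residual_block(x, w1, w2)
# With F(x) = 0 the block simply passes relu(x) through,
# so an extra block can start out as a near-identity mapping.
```

Setting the residual branch to zero shows why stacking such blocks is low-risk: a block that has learned nothing yet still forwards its input.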

When used in hybrid architectures, ResNets provide:

- Efficient feature extraction: By focusing on extracting spatial features, ResNets give the Transformer a strong starting point to process data.

- Depth without complexity: By introducing residual layers, ResNets handle deeper architectures better than traditional convolutional networks.

Together, these advantages help a hybrid model scale to large datasets while preserving the spatial detail that later layers depend on.

Transformers: Revolutionizing Attention Mechanisms

Transformers shook the deep learning space with their attention mechanism, a method that allows models to focus on important parts of the input data. While primarily used in natural language processing (NLP), they’re increasingly adapted for vision tasks.

When integrated into hybrid models, Transformers bring:

- Enhanced global context understanding: Unlike convolutional layers, which focus on local features, Transformers capture the relationships across the entire image.

- Multi-head attention: This feature allows the model to pay attention to different parts of the data simultaneously, which boosts accuracy in complex tasks.

By combining this with ResNets’ strong feature extraction, you’re looking at a model that can handle spatial and contextual data with ease.
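To make multi-head attention concrete, here is a minimal NumPy sketch of scaled dot-product self-attention split across several heads. The function name, the weight matrices, and the sizes are assumptions chosen for illustration; a production layer would also include biases, dropout, and masking.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, wq, wk, wv, wo, num_heads):
    """x has shape (tokens, dim). Returns the output and the per-head attention weights."""
    n, d = x.shape
    head_dim = d // num_heads

    def split(t):
        # (tokens, dim) -> (heads, tokens, head_dim)
        return t.reshape(n, num_heads, head_dim).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)   # (heads, tokens, tokens)
    weights = softmax(scores, axis=-1)                        # each row sums to 1
    heads = weights @ v                                       # (heads, tokens, head_dim)
    merged = heads.transpose(1, 0, 2).reshape(n, d)           # concatenate the heads
    return merged @ wo, weights

rng = np.random.default_rng(0)
tokens, dim, num_heads = 6, 16, 4
x = rng.normal(size=(tokens, dim))
wq, wk, wv, wo = (rng.normal(size=(dim, dim)) * 0.1 for _ in range(4))

out, attn = multi_head_self_attention(x, wq, wk, wv, wo, num_heads)
```

Each head produces its own `tokens x tokens` weight matrix, which is what lets different heads attend to different parts of the input at the same time.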

Bridging the Gap: How ResNets and Transformers Work Together

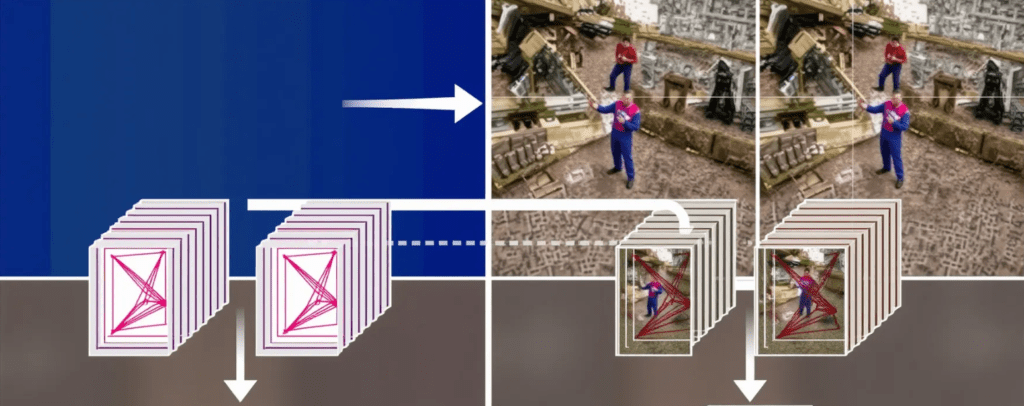

When combining ResNets with Transformers, the idea is simple—each architecture compensates for the other’s limitations.

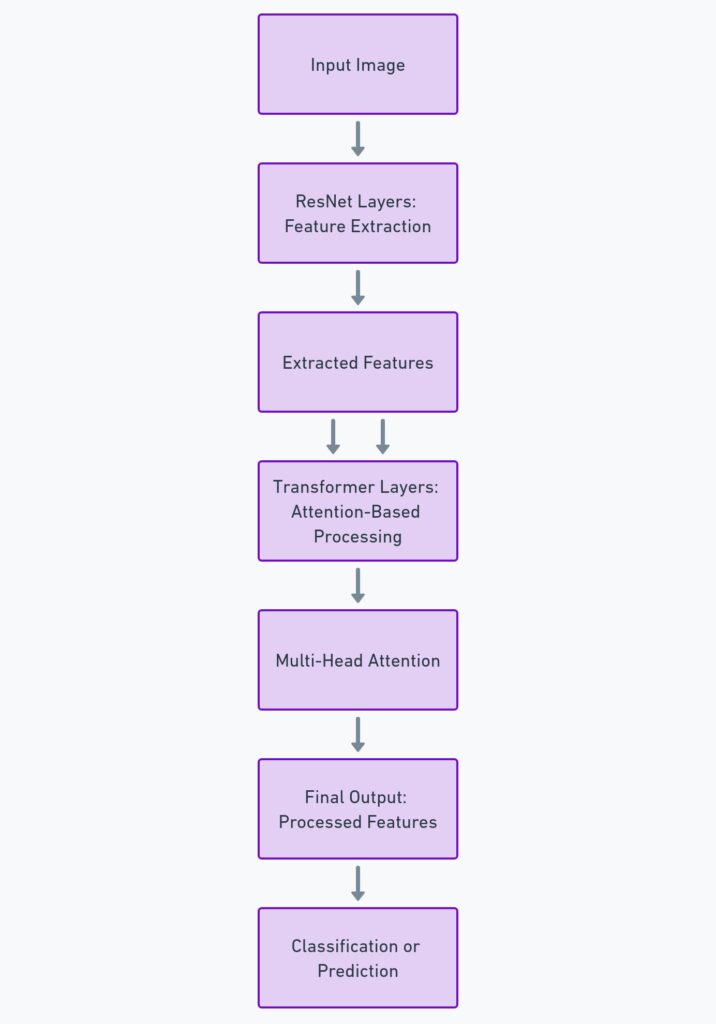

Here’s how it works:

- ResNet layers handle early-stage feature extraction. This is where spatial data is captured and passed along.

- After initial processing, the Transformer blocks step in to manage global attention and refine the model’s understanding of the image.

- Some designs go further and interleave the two, with attention layers refining spatial features while residual connections help retain critical information throughout the process.

In its simplest form, this hybrid model is a two-step process: ResNets build a foundation of spatial features, and Transformers refine it with global context.
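The hand-off between the two stages is mostly a reshaping step: the convolutional feature map is flattened so that each spatial location becomes one token. The sketch below assumes a stand-in backbone (`fake_backbone`, which only average-pools and projects channels) in place of a real ResNet, and random values in place of learned positional embeddings; all names and sizes are hypothetical.

```python
import numpy as np

def fake_backbone(image, stride, out_channels, rng):
    """Stand-in for a ResNet: average-pool by `stride`, then mix channels linearly."""
    c, h, w = image.shape
    pooled = image.reshape(c, h // stride, stride, w // stride, stride).mean(axis=(2, 4))
    proj = rng.normal(size=(out_channels, c)) * 0.1
    return np.einsum("oc,chw->ohw", proj, pooled)   # (out_channels, h/stride, w/stride)

def feature_map_to_tokens(fmap):
    """(C, H, W) -> (H*W, C): one token per spatial location, in row-major order."""
    c, h, w = fmap.shape
    return fmap.reshape(c, h * w).T

rng = np.random.default_rng(0)
image = rng.normal(size=(3, 64, 64))
fmap = fake_backbone(image, stride=16, out_channels=32, rng=rng)   # shape (32, 4, 4)
raw_tokens = feature_map_to_tokens(fmap)                            # shape (16, 32)

# Flattening discards the 2D layout, so position information is added back.
# Random values here; a trained model would learn these embeddings.
pos = rng.normal(size=raw_tokens.shape) * 0.02
tokens = raw_tokens + pos   # this sequence is what the Transformer blocks would consume
```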

Benefits of Hybrid Architectures for Multimodal Learning

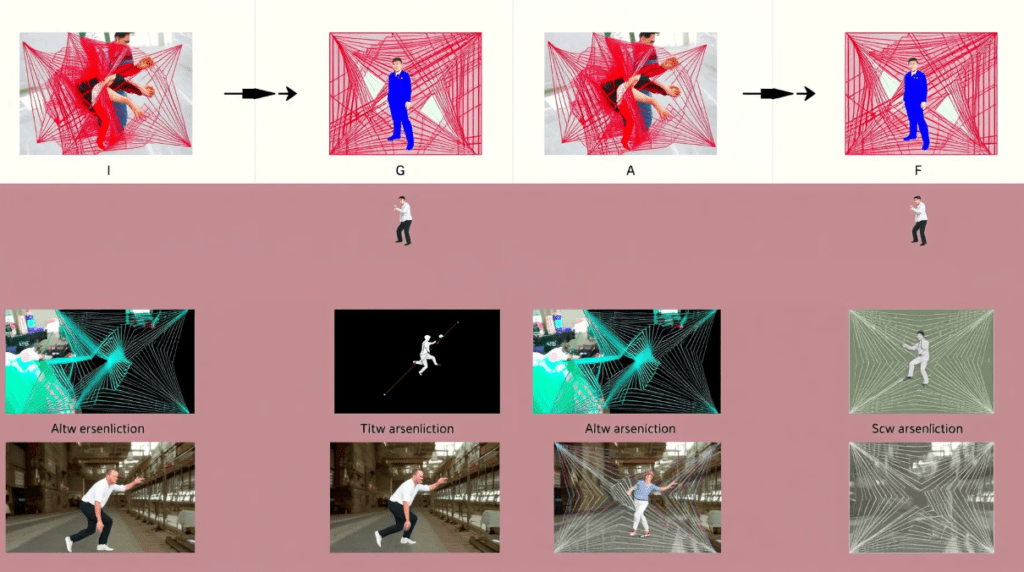

When you blend the strengths of ResNets and Transformers, the potential for multimodal learning skyrockets. Multimodal tasks involve processing text, images, and other data types simultaneously, and this is where hybrid models truly shine.

Key benefits include:

- Increased flexibility: These models handle various data types more naturally, leading to better performance in complex tasks like video understanding or image captioning.

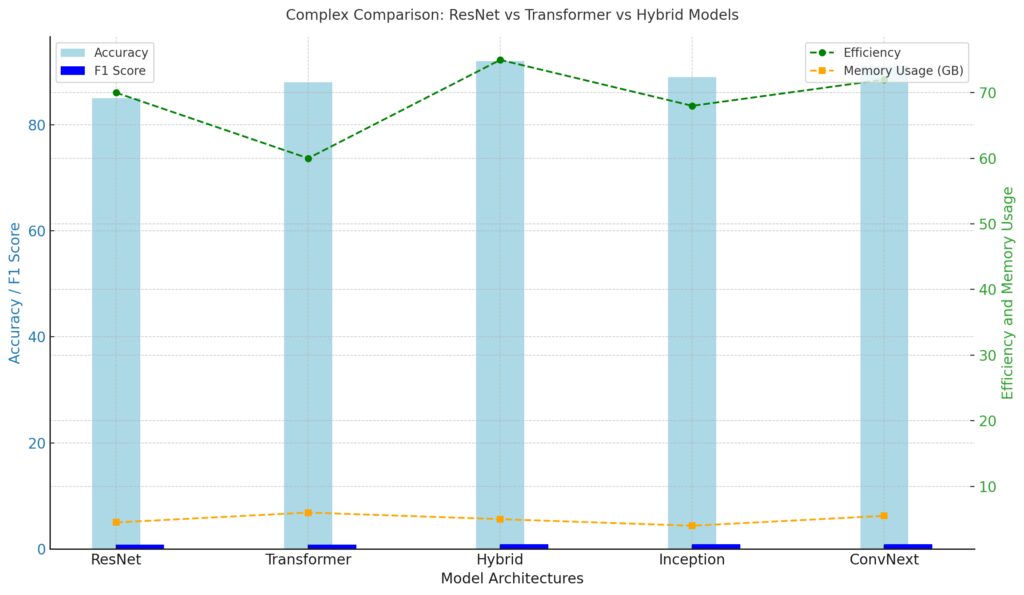

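- Reduced computation costs: With ResNets handling the feature extraction, the Transformer layers can focus on high-level processing. Because the ResNet downsamples the image first, the Transformer attends over far fewer tokens, and since self-attention cost grows quadratically with the number of tokens, this division of labor can keep computation lower than running a standalone Transformer on raw image patches.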

- Better accuracy for large datasets: Hybrid models balance efficiency and complexity, allowing for better results in larger, more complex datasets.

Real-World Applications of ResNet-Transformer Hybrids

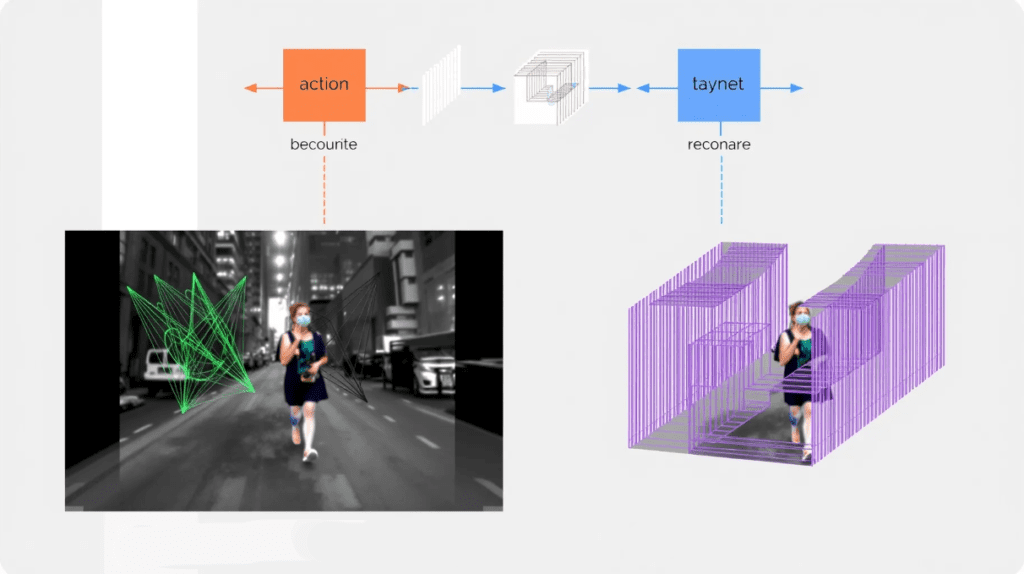

From image recognition to autonomous driving, hybrid architectures are making their way into a wide variety of applications:

- Medical Imaging: These architectures can detect anomalies in medical scans, leveraging ResNet’s ability to spot fine details while Transformers focus on the broader picture.

- Video Analysis: With hybrid models, it’s possible to both analyze individual frames (via ResNets) and grasp overall scene dynamics (via Transformers).

- Natural Language Understanding: By combining visual data with text (e.g., image captioning tasks), hybrid models open the door for multimodal AI solutions.

The Future of Deep Learning: Hybrid Models Leading the Way

It’s clear that combining ResNets and Transformers is more than just a passing trend. With their ability to tackle complex datasets and process multiple data types, hybrid models are on track to drive the next phase of deep learning advancements. As more developers adopt this approach, we can expect breakthroughs in areas like self-driving cars, virtual assistants, and predictive healthcare.

Challenges of Combining ResNets with Transformers

However, no breakthrough comes without challenges. When combining two distinct architectures, researchers face several obstacles:

- Training complexity: Hybrid models are naturally more complex to train due to their multi-component nature. Striking the right balance between feature extraction and attention mechanisms is crucial.

- Resource demands: While hybrid architectures can save on computation in some cases, they often require significant computational power during training, especially for large-scale tasks.

- Fine-tuning: Getting these models to work effectively requires careful hyperparameter tuning, which can be time-consuming and resource-intensive.

Despite these hurdles, the potential upside is enormous.

Hybrid Architectures in NLP and Beyond

Interestingly, the ideas behind ResNets and Transformers also meet outside computer vision. Residual connections are already built into the Transformer itself: each attention and feed-forward sub-layer is wrapped in a shortcut connection, which is part of what makes deep language models for tasks like machine translation and sentiment analysis trainable.

This crossover shows the versatility of hybrid models, demonstrating their potential to reshape not just how we process images but how we approach data in general.

Training Hybrid Models: Best Practices

If you’re planning to experiment with hybrid architectures, there are a few best practices you should follow:

- Start with pre-trained models: Pre-trained ResNets or Transformers can speed up training and reduce computational costs.

- Fine-tune in stages: Begin by training ResNet layers before adding Transformers into the mix.

- Optimize hyperparameters: Hybrid models are sensitive to hyperparameters, so careful tuning is essential for getting the most out of your architecture.

By following these steps, you can ease the transition into the complex but rewarding world of hybrid architectures.
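Staged fine-tuning can be written down as a simple schedule before any training code exists. The sketch below is framework-agnostic and every value in it is hypothetical: the stage names, epoch counts, learning rates, and the parameter-group labels `resnet`, `transformer`, and `head` are assumptions for illustration, not recommended settings.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Stage:
    name: str
    epochs: int
    trainable: frozenset   # parameter groups that receive updates in this stage
    lr: float

# Hypothetical three-stage plan: backbone first, then the Transformer, then everything.
SCHEDULE = [
    Stage("backbone_only", epochs=5, trainable=frozenset({"resnet", "head"}), lr=1e-3),
    Stage("add_transformer", epochs=5, trainable=frozenset({"transformer", "head"}), lr=1e-4),
    Stage("joint", epochs=10, trainable=frozenset({"resnet", "transformer", "head"}), lr=1e-5),
]

def stage_for_epoch(epoch, schedule=SCHEDULE):
    """Return the stage that covers a 0-indexed epoch."""
    start = 0
    for stage in schedule:
        if epoch < start + stage.epochs:
            return stage
        start += stage.epochs
    raise ValueError(f"epoch {epoch} is past the end of the schedule")

for epoch in (0, 5, 12):
    stage = stage_for_epoch(epoch)
    print(epoch, stage.name, sorted(stage.trainable), stage.lr)
```

In a real training loop, the `trainable` set would decide which parameters are frozen for the epoch, and `lr` would be passed to the optimizer.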

Why Hybrid Architectures Are Revolutionizing AI

In deep learning, innovation often comes from the combination of already-powerful tools. Hybrid architectures, which merge ResNets and Transformers, are at the forefront of this evolution. These two architectures individually excel in different areas, but together, they create models that can handle increasingly complex tasks with precision.

Merging ResNets’ deep feature extraction capabilities with Transformers’ global attention mechanism forms a system that is both powerful and flexible. The potential to improve performance in fields like computer vision, natural language processing, and multimodal tasks makes this hybrid approach a game changer.

ResNets: Mastering Feature Extraction

Residual Networks (ResNets) have become the go-to solution for tasks involving image classification and recognition. Their main strength lies in their shortcut connections, which allow them to effectively combat the vanishing gradient problem. This makes it possible for networks to go deeper without losing accuracy or becoming untrainable.

Here’s why ResNets remain indispensable:

- Residual learning: By adding shortcut connections, ResNets allow information to skip layers. This ensures that important features aren’t lost as the network deepens.

- Stacking without degradation: Traditional deep neural networks face degradation in performance as layers are stacked. ResNets bypass this issue, making deeper, more complex networks feasible.

- Fast and efficient: Because each block only has to learn a correction (a residual) to its input rather than rebuild the whole representation, ResNets tend to be easier to optimize and converge faster, even on vast datasets.

These strengths make ResNets a robust starting point for building more complex hybrid architectures.
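A short derivation shows why shortcut connections help gradients reach early layers. It assumes an idealized block with no activation after the addition; the symbols $x_l$ (input to block $l$), $F$ (the residual branch), $W_l$ (its weights), and $\mathcal{L}$ (the loss) are notation introduced here for illustration.

$$
x_{l+1} = x_l + F(x_l, W_l)
$$

Unrolling this from block $l$ up to a deeper block $L$ gives

$$
x_L = x_l + \sum_{i=l}^{L-1} F(x_i, W_i)
$$

and the chain rule then yields

$$
\frac{\partial \mathcal{L}}{\partial x_l} = \frac{\partial \mathcal{L}}{\partial x_L}\left(I + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1} F(x_i, W_i)\right)
$$

The identity term $I$ carries the gradient from block $L$ straight back to block $l$, so the signal does not have to pass through every intermediate weight matrix to survive.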

Transformers: Capturing Context and Meaning

While ResNets shine in the domain of feature extraction, Transformers dominate when it comes to capturing context. Originally developed for natural language tasks, the self-attention mechanism of Transformers allows the network to focus on different parts of input data. This makes it uniquely suited for tasks that require a broad global understanding.

In vision tasks, Transformers bring:

- Attention to detail: Instead of weighting every image region equally, Transformers assign higher attention weights to the most relevant patches or feature-map locations, which makes them well suited to object detection and segmentation.

- Contextual relationships: Transformers’ ability to track relationships between different parts of an image or sequence helps them excel in tasks that require understanding spatial and semantic hierarchies.

- Scalability: Transformers can be scaled up to larger datasets and more complex tasks, thanks to their architecture that allows for parallelization.

This focus on context complements ResNets’ feature extraction, allowing hybrid architectures to not only identify individual elements in an image but understand how they relate to each other.

Synergy: The Power of Combining ResNets and Transformers

Hybrid architectures that blend ResNets and Transformers offer a holistic approach to complex problems. While ResNets handle low-level feature extraction, Transformers manage high-level processing like attention and contextual understanding.

The combination provides:

- Deep insights from shallow data: ResNets extract rich features from image data, while Transformers ensure that the model doesn’t lose track of the big picture.

- Improved generalization: Hybrid models are better equipped to handle both small details and overarching patterns, leading to better generalization on unseen data.

- Balanced complexity: With ResNets working on reducing the input size and providing feature maps, the Transformers have less to process, which optimizes resource usage.

Together, these benefits create a streamlined workflow that handles everything from object detection to language-vision tasks with grace.

Training Challenges and Strategies for Hybrid Models

Despite the advantages, training hybrid models is no small feat. The increased complexity means they are more computationally expensive and require meticulous hyperparameter tuning to achieve optimal performance. However, the rewards are well worth the effort, especially for cutting-edge applications.

Some strategies to tackle these challenges include:

- Layer-wise training: Train ResNets and Transformers in stages rather than simultaneously to avoid overwhelming your hardware.

- Model fine-tuning: Starting with pre-trained models, especially in the case of Transformers, can significantly reduce the required training time and resources.

- Balanced resource allocation: While Transformers are resource-heavy, ensuring that ResNet layers are optimized can reduce the overall computational load.

By managing these challenges effectively, hybrid architectures can remain practical even on more modest hardware.

Use Cases: From Autonomous Vehicles to Healthcare

The combination of ResNets and Transformers is proving to be impactful across multiple industries, offering groundbreaking improvements in everything from healthcare to autonomous systems.

- Autonomous Driving: In autonomous systems, identifying objects accurately and understanding their context within a scene is crucial. Hybrid models help vehicles understand not only what’s on the road, but how those objects interact, improving decision-making and safety.

- Medical Imaging: By combining ResNet’s detail-oriented feature extraction with Transformer’s contextual understanding, hybrid models can assist in diagnosing diseases like cancer from imaging scans. This dual focus ensures that both fine-grained details and overall patterns are analyzed.

- Robotics: Hybrid architectures can guide robots in recognizing and interacting with objects, especially in dynamic environments where both object recognition and spatial awareness are vital.

- Natural Language Processing: In NLP, ResNet-Transformer hybrids can improve tasks like speech-to-text, machine translation, and document analysis, where both sequence processing and feature recognition are needed.

The versatility of these models is quickly making them indispensable for a wide variety of applications.

Overcoming Limitations: How Hybrid Architectures Excel

One of the primary motivations for combining ResNets with Transformers is the need to overcome each architecture’s limitations. By leveraging the strengths of both, hybrid models can mitigate some of the performance bottlenecks found in traditional deep learning architectures.

For example, ResNets struggle with tasks that require understanding relationships over long sequences or areas, whereas Transformers excel at capturing contextual dependencies but can be computationally expensive in image-based tasks. Together, these architectures can fill each other’s gaps and provide more robust, generalizable models.
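The long-range limitation of convolutions can be quantified with the standard receptive-field recurrence. The helper below is a small sketch with a hypothetical name (`receptive_field`); it computes the theoretical window of input pixels that a single output location depends on.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride) pairs, applied in order.

    Returns the side length, in input pixels, of the window seen by one output unit.
    """
    rf, jump = 1, 1          # jump = distance in input pixels between adjacent units
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf

# Ten stacked 3x3 convolutions with stride 1 see a 21x21 window.
print(receptive_field([(3, 1)] * 10))        # 21

# A 7x7 stride-2 convolution followed by 3x3 stride-2 pooling sees an 11x11 window.
print(receptive_field([(7, 2), (3, 2)]))     # 11
```

A convolutional stack widens its view gradually with depth and stride, whereas a single self-attention layer relates every token to every other token, which is the gap the hybrid design aims to close.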

Reducing Computational Costs with Efficiency in Mind

Although Transformers typically require significant computational resources, pairing them with ResNets can help mitigate these demands. ResNets can handle initial feature extraction, reducing the complexity of the data before passing it to the Transformer for higher-level processing.

This strategic division of labor allows for more efficient resource usage, as ResNets simplify the input, which leads to faster training times and lower memory requirements. Additionally, with fine-tuning techniques, it’s possible to focus computational power on the most critical components of the hybrid architecture.
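A back-of-the-envelope calculation shows how much the input simplification matters. The sketch below counts only the entries of the self-attention score matrix for a 224x224 image at different downsampling strides; it ignores the cost of the backbone itself, and the function names are hypothetical.

```python
def num_tokens(image_size, stride):
    """Tokens on a square grid when each token covers stride x stride pixels."""
    side = image_size // stride
    return side * side

def attention_matrix_entries(n_tokens):
    """Self-attention scores every token against every token: n^2 entries per head."""
    return n_tokens * n_tokens

image_size = 224
for stride in (8, 16, 32):
    n = num_tokens(image_size, stride)
    print(f"stride {stride:2d}: {n:4d} tokens, {attention_matrix_entries(n):7d} scores per head")

# stride  8:  784 tokens,  614656 scores per head
# stride 16:  196 tokens,   38416 scores per head
# stride 32:   49 tokens,    2401 scores per head
```

Going from 16x16 patches to a stride-32 feature map (the overall stride of a standard ResNet-50) cuts the tokens by 4x and the attention scores by 16x, which is where much of the memory saving would come from.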

Multimodal Learning: Enhancing Data Understanding

One of the most exciting applications of ResNet-Transformer hybrids is in multimodal learning. This refers to tasks that require the integration of different types of data, such as combining text, images, and sound. Examples include image captioning, visual question answering, and autonomous systems that need to understand both visual and auditory inputs.

By using ResNets to process visual data and Transformers to analyze text or sequence-based data, hybrid architectures are able to merge these inputs into a coherent understanding. This combination is especially valuable in applications like human-computer interaction, where understanding both spoken language and visual cues is crucial.
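One common way to merge the two inputs is to project both into a shared embedding width and concatenate them into a single sequence. The NumPy sketch below assumes this concatenation approach; the function `fuse_tokens`, the modality-type embedding, and all sizes are hypothetical, and random arrays stand in for real ResNet features and word embeddings.

```python
import numpy as np

def fuse_tokens(image_tokens, text_tokens, w_img, w_txt, type_emb):
    """Project both modalities to a shared width and join them into one sequence."""
    img = image_tokens @ w_img + type_emb[0]    # (n_img, d_model), tagged as "image"
    txt = text_tokens @ w_txt + type_emb[1]     # (n_txt, d_model), tagged as "text"
    return np.concatenate([img, txt], axis=0)   # (n_img + n_txt, d_model)

rng = np.random.default_rng(0)
d_model = 32
image_tokens = rng.normal(size=(49, 64))   # e.g. a 7x7 feature map with 64 channels
text_tokens = rng.normal(size=(12, 24))    # e.g. 12 word embeddings of width 24
w_img = rng.normal(size=(64, d_model)) * 0.1
w_txt = rng.normal(size=(24, d_model)) * 0.1
type_emb = rng.normal(size=(2, d_model)) * 0.02   # one vector per modality

sequence = fuse_tokens(image_tokens, text_tokens, w_img, w_txt, type_emb)
# A Transformer applied to `sequence` can attend across image and text tokens alike.
```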

Potential for Future Research and Development

As the field of deep learning evolves, hybrid models combining ResNets and Transformers are poised to be a key area of innovation. Researchers are exploring more advanced methods of integrating these architectures to further enhance their synergy and efficiency.

Some potential future developments include:

- Advanced attention mechanisms: Introducing more sophisticated attention layers into ResNets to allow for even finer control over feature extraction.

- Self-supervised learning: Combining the strengths of ResNets and Transformers in unsupervised or self-supervised learning frameworks, which can reduce the need for large labeled datasets.

- Transfer learning optimization: Using hybrid models to transfer learning between modalities, improving the ability to apply knowledge from one domain (such as image recognition) to another (like language processing).

Final Thoughts on Hybrid Architectures

Hybrid architectures are a strong candidate for the next stage of deep learning. By combining the efficient feature extraction of ResNets with the powerful attention mechanisms of Transformers, these models unlock new possibilities in handling complex, multimodal tasks with improved accuracy and efficiency.

As these technologies continue to develop, their potential applications will only grow, offering smarter, more capable AI systems across various industries. The combination of ResNets and Transformers is already showing incredible promise in areas like healthcare, robotics, and natural language processing, and their impact will only deepen in the coming years.

Resources

- The Original ResNet Paper

  - Title: Deep Residual Learning for Image Recognition

  - Authors: Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun

  - Link: ResNet Paper on arXiv

  This foundational paper introduced Residual Networks (ResNets), showcasing how deep networks could be trained efficiently using residual connections.

- Attention Is All You Need (The Transformer Paper)

  - Authors: Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, et al.

  - Link: Transformer Paper on arXiv

  This paper introduces Transformers and their revolutionary attention mechanisms, which have since reshaped the landscape of natural language processing and computer vision.

- A Comprehensive Guide to Transformers

  - Platform: Hugging Face

  - Link: Transformers Explained

  Hugging Face offers accessible guides and tools to understand how Transformer architectures work, especially in NLP. Their library is widely used for research and applications.

- A Tutorial on Hybrid Architectures (ResNet + Transformer)

  - Platform: Papers with Code

  - Link: ResNet-Transformer Hybrid Models

  This resource provides code, papers, and information on recent developments in hybrid architectures combining ResNets and Transformers.

- Stanford CS231n: Convolutional Neural Networks for Visual Recognition

  - Link: CS231n Course

  Stanford’s CS231n course provides an in-depth introduction to Convolutional Neural Networks (CNNs) like ResNets, and includes relevant sections on hybrid models.
