The evolution of deep learning models has dramatically transformed computer vision capabilities. Two architectures that stand out in this journey are ResNet (Residual Networks) and ResNeXt.

Both have made significant contributions, each bringing unique features that address challenges in depth, computational efficiency, and feature representation. In this article, we’ll dive into the advancements these architectures have brought to computer vision and explore their individual strengths.

The Breakthrough of ResNet: Solving the Depth Limitation

Understanding the Challenges of Deep Networks

In deep learning, adding more layers to a network should theoretically improve its ability to capture complex patterns. In practice, deep networks run into the vanishing gradient problem and rising computational demands. As networks grow deeper, the gradients (the derivatives of the loss with respect to the weights, which determine each weight update) tend to shrink as they are propagated back through many layers, so the early layers learn very slowly. The ResNet authors also observed a related degradation problem: beyond a certain depth, plain networks showed higher training error than their shallower counterparts, so very deep architectures ended up less accurate.
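As a rough illustration of why gradients shrink with depth, the sketch below treats each layer as a scalar function and multiplies the local derivatives together, as the chain rule does during backpropagation. The function name `gradient_through_plain_stack` and the value 0.5 are illustrative assumptions, not measurements from a real network.

```python
def gradient_through_plain_stack(local_derivative: float, depth: int) -> float:
    """Gradient reaching the first layer of a toy scalar network.

    Assumes every layer has the same local derivative (a hypothetical
    simplification); the chain rule multiplies them together.
    """
    gradient = 1.0  # gradient of the loss with respect to the network output
    for _ in range(depth):
        gradient *= local_derivative
    return gradient


# With a local derivative below 1, the product decays exponentially with depth.
for depth in (5, 20, 50):
    print(depth, gradient_through_plain_stack(0.5, depth))
```

In this toy setting the gradient at depth 50 is many orders of magnitude smaller than at depth 5, which is the behavior the vanishing gradient problem describes.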

These issues created a need for new architectures that could manage depth more effectively. Before ResNet, networks could hardly exceed 20 or 30 layers without running into these problems.

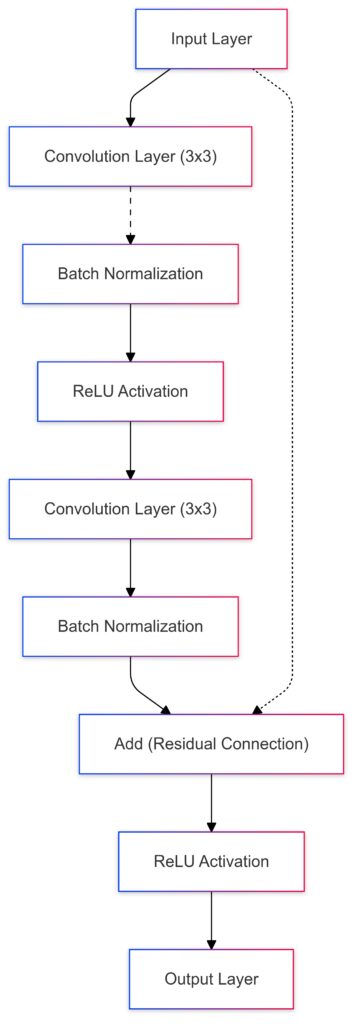

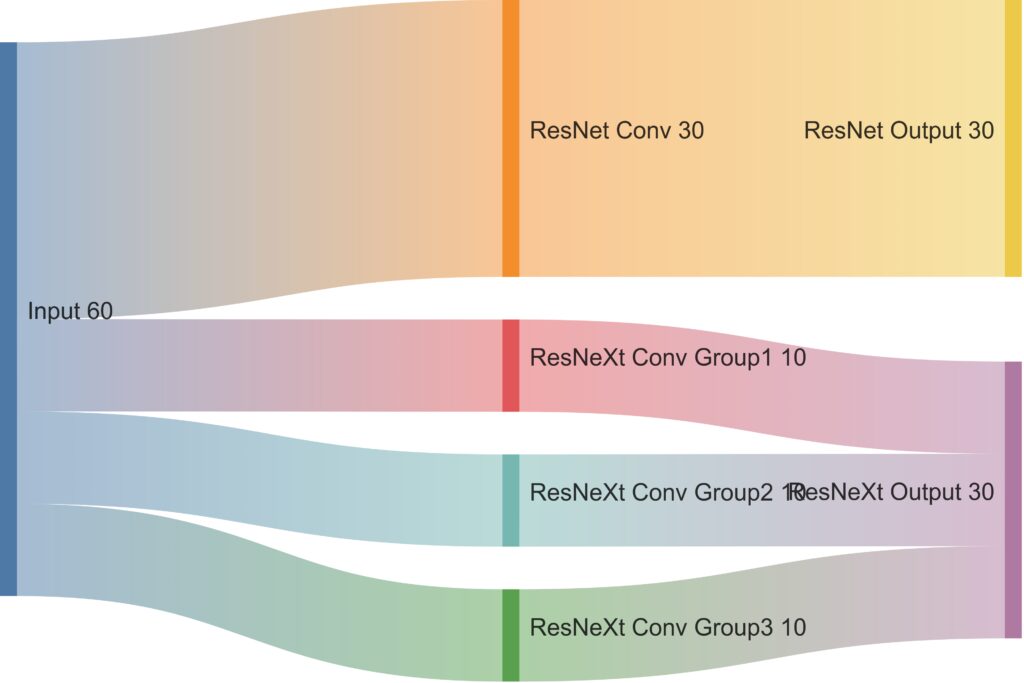

Input Layer: Starts with input data.

Convolution Layers: Two 3×3 convolution layers with batch normalization and ReLU activation.

Add Layer (Residual Connection): Combines the bypassed input with the output of intermediate layers.

Output Layer: Resulting output after the addition operation.

The Innovation of Residual Connections

ResNet’s introduction of residual connections offered an elegant solution. Instead of learning a direct mapping H(x) from input to output in each block, the model learns a “residual” function F(x) = H(x) - x, the difference between the desired output and the input. The block then adds this residual back to the original input through a shortcut connection, so the input itself skips the intermediate layers unmodified.
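The idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the ResNet implementation: small dense matrices stand in for the 3×3 convolutions, batch normalization is omitted, and the names `residual_block`, `matvec`, and `relu` are chosen here for the example.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    return [max(0.0, value) for value in v]


def matvec(m: Matrix, v: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Compute relu(F(x) + x), with F(x) = W2 . relu(W1 . x)."""
    residual = matvec(w2, relu(matvec(w1, x)))  # F(x): the learned residual
    shortcut = x                                # identity shortcut, unmodified
    return relu([r + s for r, s in zip(residual, shortcut)])


x = [1.0, 2.0, 3.0]
zeros = [[0.0] * 3 for _ in range(3)]
print(residual_block(x, zeros, zeros))  # → [1.0, 2.0, 3.0]
```

With all weights at zero the residual F(x) vanishes and the block passes its (non-negative) input straight through, which is why adding such a block should not make a network worse than its shallower version.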

These shortcut connections ease the vanishing gradient problem by giving gradients a direct path backward, even in very deep networks. As a result, ResNet could effectively train networks with 50, 101, or even 152 layers, substantially increasing the model’s capacity to capture complex patterns without the optimization difficulties that held back plain deep networks.
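The effect on gradient flow can be made explicit with a short derivation. This is a sketch under the simplifying assumptions that the shortcut is an identity mapping and that no activation follows the addition; $x_l$ denotes the input of block $l$, $F$ its residual function, and $\mathcal{L}$ the loss, symbols introduced here for illustration.

$$
x_{l+1} = x_l + F(x_l)
\quad\Longrightarrow\quad
\frac{\partial \mathcal{L}}{\partial x_l}
= \frac{\partial \mathcal{L}}{\partial x_{l+1}}
\left( I + \frac{\partial F(x_l)}{\partial x_l} \right)
$$

The identity term $I$ passes the upstream gradient through unchanged, so even when $\partial F / \partial x_l$ is small, the gradient reaching earlier blocks is not forced toward zero the way a pure product of small terms would be.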

Variants of ResNet: Tailoring Depth and Efficiency

ResNet’s design became the foundation for several variations, each optimized for different tasks and constraints:

- ResNet-18 and ResNet-34: Lighter models built from two-layer basic blocks, suitable for real-time applications and edge devices.

- ResNet-50, ResNet-101, and ResNet-152: Deeper models built from three-layer bottleneck blocks, offering higher accuracy on large, complex datasets at a higher computational cost.

These variants showcase ResNet’s flexibility across a range of applications and computing environments.
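The numbers in the variant names follow from how many residual blocks each of the four stages contains. The sketch below recomputes them from the per-stage block counts given in the ResNet paper; the names `STAGE_BLOCKS`, `LAYERS_PER_BLOCK`, and `count_weighted_layers` are illustrative, and the count follows the usual convention of ignoring the convolutions on projection shortcuts.

```python
# Residual blocks per stage (four stages) for each standard variant.
STAGE_BLOCKS = {
    "ResNet-18": ("basic", [2, 2, 2, 2]),
    "ResNet-34": ("basic", [3, 4, 6, 3]),
    "ResNet-50": ("bottleneck", [3, 4, 6, 3]),
    "ResNet-101": ("bottleneck", [3, 4, 23, 3]),
    "ResNet-152": ("bottleneck", [3, 8, 36, 3]),
}

# Weighted layers in one block: two 3x3 convs (basic) or 1x1, 3x3, 1x1 (bottleneck).
LAYERS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def count_weighted_layers(name: str) -> int:
    """Stem convolution + convolutions inside the blocks + final classifier layer."""
    block_type, blocks = STAGE_BLOCKS[name]
    return 1 + LAYERS_PER_BLOCK[block_type] * sum(blocks) + 1


for name in STAGE_BLOCKS:
    print(name, count_weighted_layers(name))
```

Note that ResNet-34 and ResNet-50 share the same block counts per stage; the extra depth of ResNet-50 comes from swapping basic blocks for bottleneck blocks.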

ResNeXt: A New Approach with Enhanced Cardinality

What Sets ResNeXt Apart: The Power of Cardinality

After ResNet, researchers aimed to build architectures that could further improve performance while maintaining efficiency. ResNeXt addressed this challenge by introducing the concept of cardinality: the number of independent paths or transformations within each block. Instead of increasing the depth (layers) or width (channels), ResNeXt increases the number of paths through which data can travel in each block.

In the ResNeXt design, higher cardinality is paired with narrower paths, so a block can capture richer, more diverse feature representations at roughly the same parameter and compute budget as its ResNet counterpart. This makes it possible to build highly expressive models that retain strong predictive power while remaining efficient.
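A quick parameter count shows how cardinality can rise without inflating the block. The sketch below compares a ResNet bottleneck block (256 channels, width 64) with the ResNeXt “32x4d” equivalent (32 paths of width 4, so a total width of 128), ignoring batch normalization and biases. The helper names `conv_params` and `bottleneck_params` are assumptions made for this example.

```python
def conv_params(kernel: int, in_channels: int, out_channels: int, groups: int = 1) -> int:
    """Weight count of a 2D convolution without bias.

    Each output channel connects only to in_channels / groups inputs.
    """
    assert in_channels % groups == 0 and out_channels % groups == 0
    return kernel * kernel * (in_channels // groups) * out_channels


def bottleneck_params(channels: int, width: int, cardinality: int) -> int:
    """1x1 reduce -> 3x3 (grouped) -> 1x1 expand."""
    return (
        conv_params(1, channels, width)
        + conv_params(3, width, width, groups=cardinality)
        + conv_params(1, width, channels)
    )


resnet_block = bottleneck_params(channels=256, width=64, cardinality=1)
resnext_block = bottleneck_params(channels=256, width=128, cardinality=32)  # "32x4d"
print(resnet_block, resnext_block)  # → 69632 70144
```

Both blocks land at roughly 70 thousand weights, yet the ResNeXt block runs 32 parallel transformations where the ResNet block runs one.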

Grouped Convolutions: A Key Feature of ResNeXt

In ResNeXt, grouped convolutions play a central role. Each block splits the channels of its 3×3 convolution into groups, and each group processes only its own slice of the input. Grouped convolutions allow ResNeXt to capture multiple feature representations in parallel without drastically increasing the parameter count or computational burden.
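The split, transform, and merge steps of a grouped convolution can be sketched for a single spatial position. This is a minimal pure-Python illustration using a 1×1 kernel for brevity (ResNeXt groups its 3×3 convolution); the function name `grouped_pointwise_conv` and the weight layout are assumptions for the example, not a framework API.

```python
from typing import List


def grouped_pointwise_conv(x: List[float], weights: List[List[List[float]]]) -> List[float]:
    """Apply a grouped 1x1 convolution to one spatial position.

    x holds the channel values at that position; weights[g] is the
    (out_per_group x in_per_group) matrix of group g.
    """
    groups = len(weights)
    in_per_group = len(x) // groups
    output: List[float] = []
    for g, group_weights in enumerate(weights):
        # Split: each group sees only its own slice of the input channels.
        chunk = x[g * in_per_group:(g + 1) * in_per_group]
        # Transform: an ordinary dense mapping inside the group.
        for row in group_weights:
            output.append(sum(w * value for w, value in zip(row, chunk)))
    # Merge: group outputs are concatenated along the channel axis.
    return output


x = [1.0, 2.0, 3.0, 4.0]                   # 4 input channels
weights = [[[1.0, 1.0]], [[1.0, -1.0]]]    # 2 groups, each mapping 2 -> 1 channels
print(grouped_pointwise_conv(x, weights))  # → [3.0, -1.0]
```

Here the grouped layer uses 4 weights where an ungrouped 4-to-2 mapping would use 8, and the first output channel never sees input channels 3 and 4.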

This architecture enables ResNeXt to achieve better accuracy than ResNet models of comparable size (ResNeXt-50 32x4d and ResNet-50 both have roughly 25 million parameters), providing a more efficient balance of model size and accuracy. As a result, ResNeXt is a strong candidate for applications where computational resources are limited, such as mobile devices or real-time video processing, although the actual speed of grouped convolutions depends on hardware and library support.

Practical Differences Between ResNet and ResNeXt

While both ResNet and ResNeXt excel in visual tasks, ResNeXt has an edge in certain practical scenarios. For instance:

- Computational Efficiency: ResNeXt achieves similar or better accuracy than deeper ResNet variants with fewer resources, making it ideal for on-device AI.

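- Training Time: The parallel paths in each block map onto a single grouped convolution, which can reduce training time where the hardware and framework implement grouped convolutions efficiently, allowing for faster deployment on large-scale datasets.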

These advantages make ResNeXt a go-to choice in real-time applications where efficiency and speed are critical.

ResNet processes the input through a single convolution path.

ResNeXt uses grouped convolutions, splitting the input into parallel groups (three in this simplified comparison; the standard ResNeXt-50 configuration uses 32). The number of groups is the block’s cardinality. Distributing the computation across multiple branches allows ResNeXt to gain representational power without a matching increase in computational cost.

Core Comparisons: ResNet vs. ResNeXt

Model Efficiency and Computational Cost

A major challenge in deep learning is balancing accuracy with computational efficiency. ResNeXt’s use of grouped convolutions reduces the cost of each convolution, which can allow faster training and inference without sacrificing model performance. ResNet, while powerful, typically requires more layers and parameters for similar accuracy levels.

In summary:

- ResNet: Known for robustness with higher depth, but often computationally demanding.

- ResNeXt: Achieves competitive accuracy with a more balanced parameter count, making it computationally more efficient.

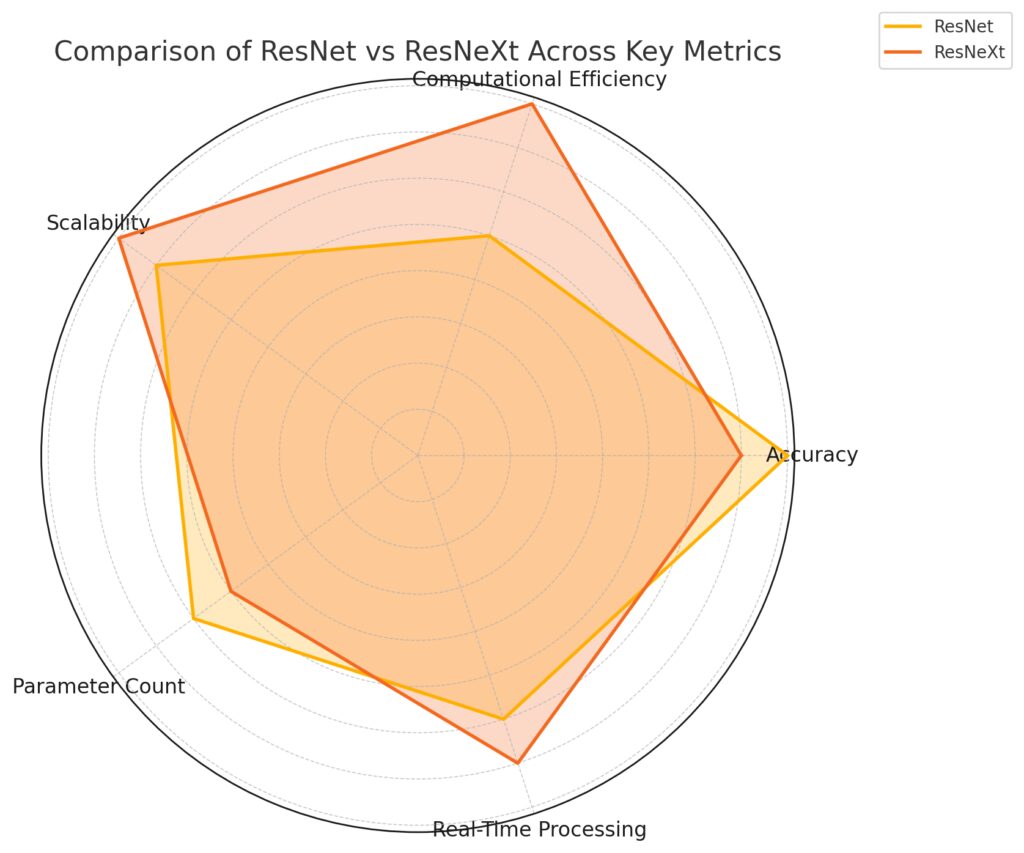

Accuracy: ResNet scores higher in this chart, reflecting its robustness across deep architectures, although at matched depth ResNeXt typically reaches similar or better accuracy on benchmarks such as ImageNet.

Computational Efficiency: ResNeXt excels due to grouped convolutions, enhancing efficiency.

Scalability: Both models score well, with ResNeXt slightly advantaged by its modular approach.

Parameter Count: ResNet has a higher parameter count, while ResNeXt optimizes this with fewer parameters.

Real-Time Processing: ResNeXt’s efficiency provides an edge, making it more suitable for real-time applications.

Performance on Benchmarks

When it comes to benchmark performance, both architectures have been widely adopted and tested on ImageNet and COCO datasets, among others. ResNeXt models often achieve similar or better accuracy on image classification tasks compared to ResNet of the same depth, due to their higher capacity for learning representations.

- Accuracy: ResNeXt typically performs on par or better with fewer parameters.

- Efficiency: ResNeXt is faster to train for similar performance levels, making it suitable for applications with resource constraints.

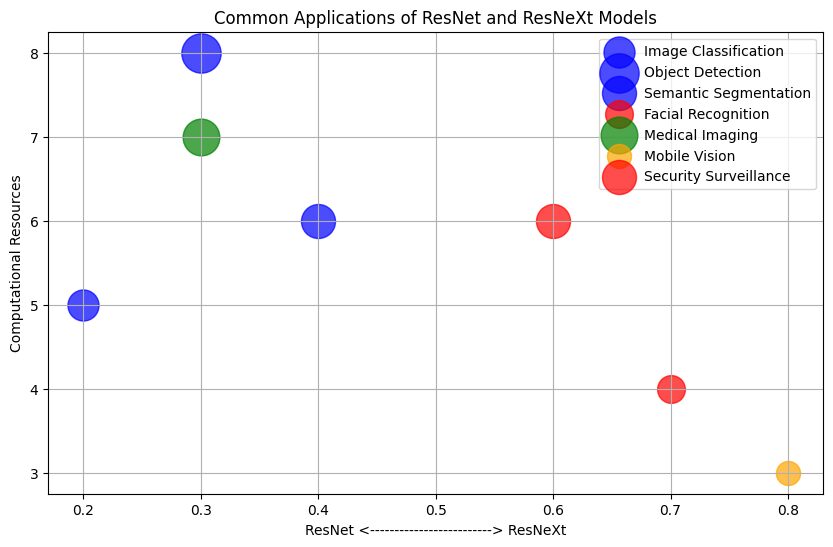

Unique Applications of ResNet and ResNeXt

When to Use ResNet

Due to its simplicity and depth, ResNet is often preferred for applications where interpretability and robustness are key. It’s widely used in medical imaging, autonomous driving, and industrial inspection, where reliable image recognition is critical and computational resources are less constrained.

When ResNeXt Shines

ResNeXt’s efficient architecture is ideal for scenarios where computational resources or power efficiency is a consideration, such as mobile devices or edge computing. By offering similar accuracy to ResNet with a lower computational footprint, ResNeXt has become popular in real-time applications and high-volume image processing tasks.

Practical Considerations for Deployment

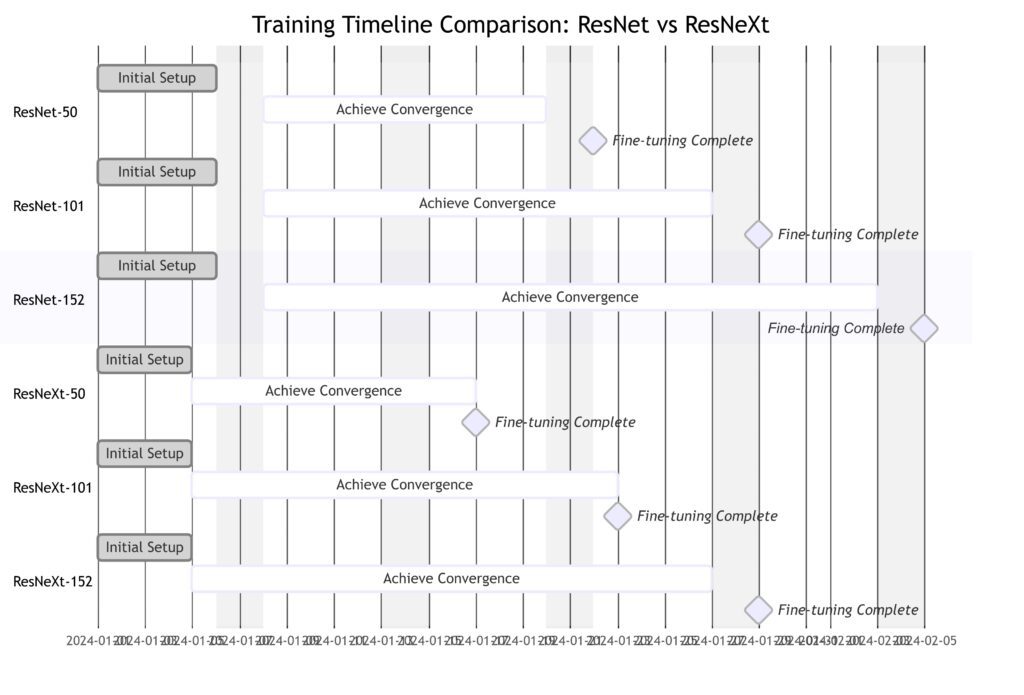

Training Complexity

ResNet models are straightforward to implement and train due to their clear architecture, making them well-suited for researchers and practitioners just getting started with deep learning. ResNeXt, while more complex, offers advantages when computational power or memory is limited, especially for deployment on cloud platforms or IoT devices.

Initial Setup: Preparatory steps common across models.

Achieve Convergence: Training period required to reach initial model convergence.

Fine-tuning Complete: Final milestone where each model completes fine-tuning.

This breakdown highlights the relative efficiency of ResNeXt, especially in shallower models.

Model Fine-Tuning

ResNeXt models can often achieve better fine-tuning performance with fewer iterations, which is particularly beneficial when working with large datasets or complex domains. Fine-tuning is also efficient for ResNet but generally requires slightly more computational resources for similar results.

Compatibility and Integration

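Both ResNet and ResNeXt are well supported in major deep learning frameworks, with extensive documentation. PyTorch’s torchvision library ships pre-trained weights for both families, and TensorFlow’s Keras API provides pre-trained ResNet variants, while ResNeXt on TensorFlow typically comes from third-party implementations. For developers looking to integrate these models, both options are accessible and versatile, allowing customization based on application needs.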

Future of Residual Architectures in Computer Vision

The future of computer vision will likely continue to be influenced by residual architectures like ResNet and ResNeXt. Emerging research is exploring hybrid models that combine residual blocks with attention mechanisms to capture spatial and temporal information more efficiently. With applications expanding from autonomous vehicles to medical diagnostics, these architectures provide a critical foundation for the next generation of AI-driven image analysis tools.

ResNet vs ResNeXt: Key Advancements in Computer Vision

In this article, we’ve explored how ResNet and ResNeXt have shaped the field of computer vision, each offering unique benefits and applications. From ResNet’s pioneering residual blocks to ResNeXt’s innovative grouped convolutions, these architectures have become foundational for image recognition and deep learning advancements. Let’s conclude by summarizing where each model shines and how they continue to impact AI research and real-world applications.

Summary: Choosing Between ResNet and ResNeXt

Strengths of ResNet

ResNet’s innovation in residual connections paved the way for training very deep neural networks with ease. It remains a top choice for projects that can benefit from deep layers and require robust performance, especially when computational power is not a limiting factor. Industries like healthcare, manufacturing, and transportation still leverage ResNet due to its proven reliability in high-stakes environments.

- Best For: Applications needing interpretability and robust performance with ample computational resources.

- Typical Use Cases: Medical imaging, industrial inspection, autonomous driving.

ResNeXt’s Flexibility and Efficiency

ResNeXt stands out for its ability to balance accuracy with computational efficiency. The model’s use of grouped convolutions allows it to achieve high accuracy with a reduced parameter count, making it ideal for real-time applications and edge computing. With more industries deploying AI at the edge, ResNeXt’s efficient structure is gaining traction in resource-constrained settings like mobile and IoT devices.

- Best For: Applications that require high accuracy on resource-limited platforms.

- Typical Use Cases: Mobile image processing, edge computing, high-volume image classification.

Future Directions in Computer Vision with Residual Architectures

Hybrid Models with Attention Mechanisms

As computer vision advances, researchers are experimenting with architectures that blend residual networks with attention mechanisms. These new models aim to improve upon traditional architectures by capturing contextual dependencies and temporal information more effectively. For instance, combining residual blocks with self-attention layers helps the network focus on the most relevant parts of an image, improving accuracy on complex tasks.

Enhanced Efficiency for Real-World Applications

With the rise of edge computing and real-time applications, there is an ongoing push to make neural networks more compact and efficient. Architectures inspired by ResNet and ResNeXt are often used as the starting point for model pruning and quantization, which shrink a network so it fits on smaller devices. This trend indicates a future where residual networks continue to be relevant, though optimized for speed and low power consumption.

Final Thoughts on ResNet vs. ResNeXt

Both ResNet and ResNeXt have cemented their place in the landscape of computer vision architectures, each contributing unique strengths. ResNet’s depth-first approach is ideal for complex tasks requiring deep feature extraction, while ResNeXt’s focus on flexibility makes it a winner in resource-constrained environments. As technology progresses, hybrid models and efficiency-driven architectures inspired by these networks are expected to play a crucial role in advancing AI applications across industries.

FAQs

Which model is better for mobile and edge computing applications?

ResNeXt is often the preferred choice for mobile and edge computing because it provides high accuracy with a lower computational footprint. The grouped convolutions allow ResNeXt to run faster on limited hardware, making it ideal for real-time applications, such as mobile image recognition and IoT devices.

Is ResNet or ResNeXt more suitable for transfer learning?

Both ResNet and ResNeXt are effective for transfer learning; however, ResNeXt may offer advantages due to its efficient architecture, allowing for faster fine-tuning with fewer parameters. If computational resources are limited, ResNeXt can be more efficient for transfer learning on large datasets, though ResNet remains a reliable and powerful option, especially for tasks requiring deep feature extraction.

Can ResNeXt achieve better performance than ResNet on ImageNet?

Yes, ResNeXt has demonstrated similar or better performance on ImageNet compared to ResNet, particularly when comparing models with similar parameter counts. The grouped convolution approach in ResNeXt allows for richer feature learning, which can lead to improved accuracy on large-scale image classification datasets.

Are both ResNet and ResNeXt compatible with major deep learning frameworks?

Yes, both ResNet and ResNeXt are widely supported in major deep learning frameworks. PyTorch’s torchvision library offers pre-trained weights for both, and TensorFlow’s Keras API offers pre-trained ResNet variants, with ResNeXt typically available through third-party implementations. Support for fine-tuning makes it easy to integrate either model into existing workflows for various computer vision tasks.

What problem does the ResNet architecture solve in deep learning?

ResNet addresses the vanishing gradient problem, which occurs when training deep networks. As layers are added, gradients diminish, causing the network to struggle with capturing complex features. ResNet solves this with skip connections in its residual blocks, allowing information to bypass certain layers and enabling very deep networks to be trained effectively without a loss in accuracy.

Why is ResNeXt called an “enhancement” of ResNet?

ResNeXt is built on the foundation of ResNet but introduces cardinality, or the idea of having multiple parallel paths within each residual block. By using grouped convolutions, ResNeXt achieves better accuracy than a ResNet of the same depth at a similar parameter count, making it more efficient per parameter. This structural adjustment is what enhances ResNeXt’s feature extraction capabilities while keeping model size manageable.

How do grouped convolutions work in ResNeXt?

In ResNeXt, grouped convolutions divide the input channels into separate groups that are each processed independently before their outputs are merged. This technique allows multiple, parallel “transformations” within each block, enabling the model to capture more detailed and diverse features without increasing the number of parameters substantially. Grouped convolutions make ResNeXt computationally efficient and better suited for applications that need high accuracy with minimal resources.

Can ResNet and ResNeXt be used for video analysis?

Yes, both ResNet and ResNeXt can be adapted for video analysis, often by adding temporal or 3D convolutional layers to handle the additional time dimension in videos. However, ResNeXt’s efficiency can be advantageous for video applications that require real-time processing, such as security surveillance or augmented reality, where computational efficiency is crucial.

Are there any limitations to using ResNeXt?

While ResNeXt is efficient and powerful, the architecture’s complexity (with grouped convolutions) can make it harder to understand and implement for beginners. Additionally, although it has fewer parameters, it may still require substantial memory and resources when implemented with high cardinality for large tasks. However, these limitations are generally outweighed by its accuracy and efficiency benefits in practical applications.

How well do ResNet and ResNeXt perform on small datasets?

Both ResNet and ResNeXt perform well on large datasets but can also be adapted for smaller datasets by using pre-trained models and fine-tuning. ResNet is more commonly used for smaller datasets due to its straightforward architecture, which is easy to adjust for different input sizes. ResNeXt, though slightly more complex, can still offer strong performance on small datasets when transfer learning and fine-tuning techniques are applied.

Is one architecture better suited for real-time applications?

ResNeXt tends to be better suited for real-time applications because of its efficient use of parameters through grouped convolutions. This allows it to process images faster without sacrificing accuracy, making it ideal for tasks where speed and accuracy are essential, such as mobile vision apps, AR applications, and real-time object detection on edge devices. ResNet can also be used but may require more resources to reach similar speeds and performance.

Are there advanced versions of ResNet and ResNeXt?

Yes, several advanced versions of ResNet and ResNeXt have been developed. For example, ResNet-50, ResNet-101, and ResNet-152 are increasingly deeper variants of ResNet, and Wide ResNet is a variant that emphasizes network width over depth. ResNeXt-50 and ResNeXt-101 are common advanced versions of ResNeXt, with various adaptations to optimize performance on different tasks. Some models also incorporate attention mechanisms for improved focus on relevant features in the image, enhancing the models’ performance on complex tasks.

How do ResNet and ResNeXt perform in transfer learning for non-image tasks?

Both ResNet and ResNeXt have proven effective in transfer learning for image tasks, and they can carry over to non-image domains when the data is represented in an image-like form, such as spectrograms for sound classification or time-series data arranged as 2D arrays. Their hierarchical feature extraction can capture complex patterns useful across such domains. Direct transfer to NLP is less common, although the residual connection itself is widely used in language models. ResNeXt’s grouped convolutions help streamline the model size, making it efficient for resource-constrained tasks in non-image domains where pattern recognition is critical, like sound classification and medical diagnosis from non-visual data.

What is cardinality, and why is it important in ResNeXt?

In ResNeXt, cardinality refers to the number of independent paths (or transformations) in each residual block. By increasing cardinality rather than depth or width, ResNeXt can capture more diverse patterns at a similar parameter count, making the network both accurate and efficient. This approach helps ResNeXt achieve high performance without the memory and processing requirements that typically come with increasing depth or width in traditional architectures.

Can ResNet and ResNeXt handle higher-resolution images?

Yes, both ResNet and ResNeXt can be configured to process higher-resolution images, although this requires more computational resources. When dealing with high-resolution inputs, ResNeXt’s grouped convolutions can help balance the need for accuracy with computational efficiency, making it more scalable. ResNet, while also effective, may require more modifications or resources for similar efficiency at high resolutions, as it doesn’t inherently optimize for parameter efficiency in the same way.

Are ResNet and ResNeXt suitable for object detection and segmentation tasks?

Absolutely, both ResNet and ResNeXt are frequently used as backbone models for object detection and image segmentation frameworks, such as Mask R-CNN and Faster R-CNN. ResNet’s robust feature extraction capability makes it a common choice for complex segmentation, while ResNeXt backbones often improve accuracy at a similar model size, which helps in detection tasks with tight compute budgets. Both are suitable for tasks like autonomous driving, medical imaging, and security.

What is the impact of depth in ResNet, and does it affect model performance?

In ResNet, increasing depth (e.g., using ResNet-101 or ResNet-152) typically improves performance because deeper layers capture more complex features. However, adding depth also increases the model’s computational requirements and may lead to diminishing returns in performance, especially when the architecture becomes too deep. This is why some tasks favor ResNet-50 over deeper versions to balance accuracy and speed effectively.

How do skip connections in ResNet benefit training?

The skip connections in ResNet allow gradients to bypass certain layers during backpropagation, which mitigates the vanishing gradient problem that can occur with deep architectures. This results in smoother training and allows for extremely deep networks to learn effectively without becoming “stuck” in early layers. These connections also help the network learn residual mappings, which speeds up training and often results in higher overall accuracy.

How does ResNeXt handle feature extraction differently than ResNet?

While ResNet focuses on depth through residual blocks, ResNeXt improves feature extraction with multi-branch transformations via grouped convolutions. This structure allows ResNeXt to extract multiple perspectives of an image feature simultaneously, enhancing its ability to capture fine-grained details and diverse patterns. This gives ResNeXt an edge in scenarios where recognizing subtle variations (such as texture details or background elements) is critical.

Is there an optimal depth for ResNet and ResNeXt models?

The optimal depth for ResNet or ResNeXt depends on the task, dataset size, and available resources. For most applications, ResNet-50 or ResNeXt-50 offer a good balance between accuracy and computational efficiency. However, for very large datasets or complex tasks, deeper variants like ResNet-101 or ResNeXt-101 may provide better results. In practice, the choice of depth should consider the trade-off between accuracy and training time, as deeper models tend to be slower and more resource-intensive.

Are ResNet and ResNeXt still relevant with the rise of transformer-based models?

Yes, despite the popularity of transformer-based models like ViTs (Vision Transformers) in computer vision, ResNet and ResNeXt remain relevant due to their simplicity, efficiency, and robustness. Transformer models often require vast amounts of data and computational resources to outperform convolutional architectures. As a result, ResNet and ResNeXt are still widely used, particularly in resource-limited settings, or when pre-trained transformer models are impractical. Additionally, hybrid models that combine CNNs with transformers are being developed, using the strengths of both architectures.

Resources

Tutorials and Courses

- Fast.ai’s Practical Deep Learning for Coders: This course offers hands-on training for various deep learning tasks, including image recognition with ResNet. Suitable for beginners and intermediate learners.

- Coursera – Convolutional Neural Networks by Andrew Ng: This course, part of the Deep Learning Specialization, covers CNN basics and advanced techniques, including residual networks.

Framework Documentation

- PyTorch Documentation: PyTorch offers pre-trained models and tools for implementing ResNet and ResNeXt with flexibility for custom layers and modifications.

- TensorFlow and Keras Documentation: TensorFlow’s Keras API provides implementations and pre-trained weights for ResNet variants, making it easy to integrate into existing projects.