AI workloads demand powerful infrastructure that scales efficiently. Azure Kubernetes Service (AKS) is a robust platform for deploying, managing, and scaling AI and machine learning (ML) applications.

Here’s how AKS enables scalable, reliable, and cost-effective AI operations.

What is Azure Kubernetes Service (AKS)?

A Managed Kubernetes Solution

AKS is Microsoft Azure’s managed Kubernetes offering that simplifies container orchestration. Kubernetes, an open-source platform, automates the deployment and management of containerized applications, while AKS removes the complexity of managing Kubernetes clusters.

- Pre-configured clusters: Easily deploy AI models without worrying about infrastructure setup.

- Seamless Azure integration: Connect directly to Azure-native services such as Azure Machine Learning.

Ideal for Scaling AI Applications

AI applications often require dynamic scaling to handle workloads, especially during training or inference stages. AKS excels here:

- Elastic scaling: Adjusts to workload spikes automatically.

- Resource efficiency: The Kubernetes scheduler places workloads across the nodes in a cluster based on their resource requests.

Key Benefits of Using AKS for AI Workloads

Effortless Scalability

AI models often process massive datasets and require flexible scaling. AKS supports horizontal scaling, enabling you to add or remove pod replicas on demand.

- Example: An AI recommendation engine during Black Friday sales scales automatically to handle traffic spikes.

- Node Pools: Separate workloads (e.g., AI training vs. real-time inference) for optimized scaling.

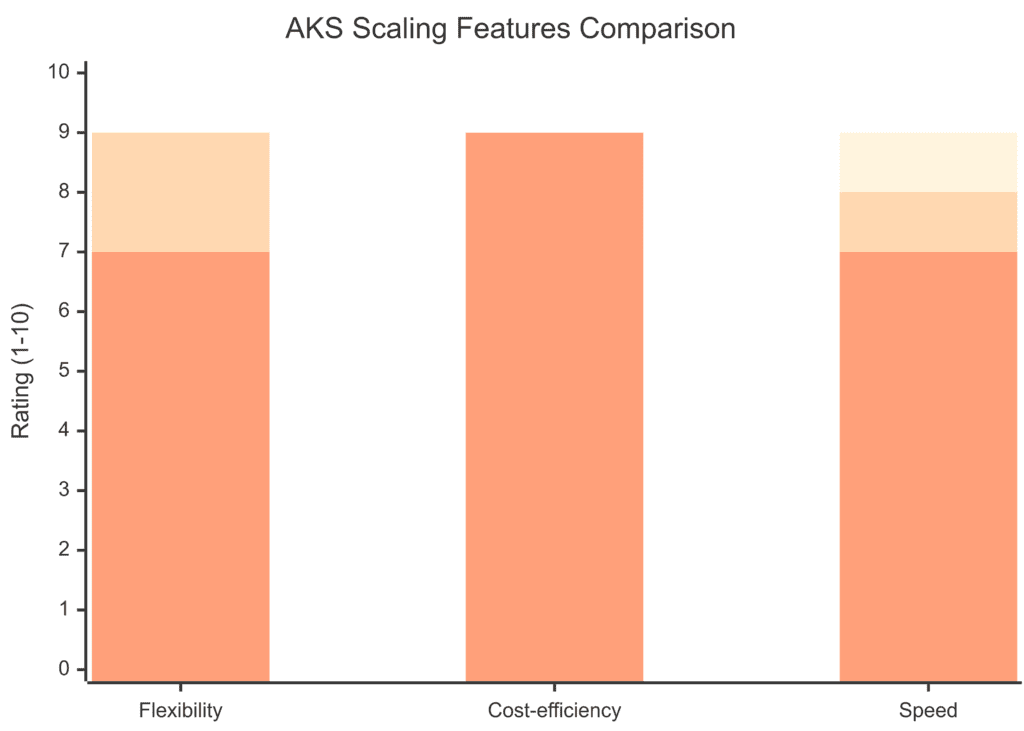

The diagram shows the relationship between key AKS scaling features, such as horizontal pod autoscaling (HPA), virtual nodes, and node pools.

Accelerated Development with Azure ML Integration

AKS integrates seamlessly with Azure Machine Learning for:

- Model training: Distribute training across GPU-accelerated nodes.

- Inference deployment: Quickly deploy models in scalable containers.

- Experimentation: Run multiple AI experiments simultaneously without resource conflicts.

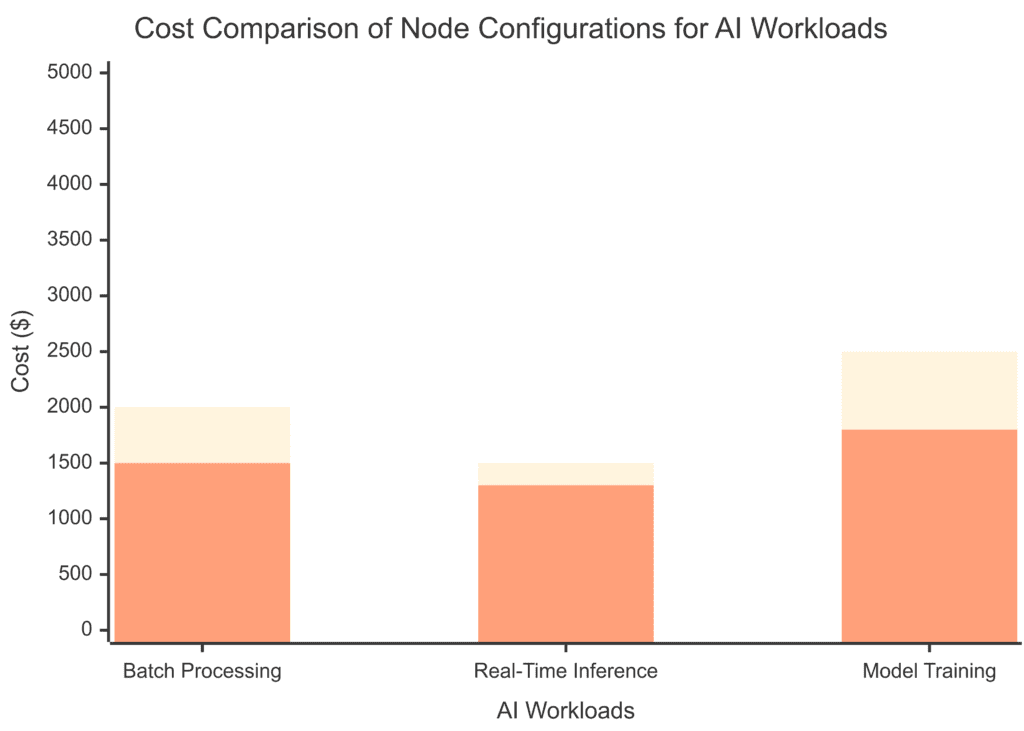

Cost Optimization

With AKS, you pay for the nodes your cluster runs, so scaling down idle capacity directly lowers cost. Spot node pools let you run interruptible, non-critical AI tasks at a lower price, which makes them well suited to experimentation.

- Example: Training large language models on spot instances during off-peak hours, with regular checkpoints so that an eviction does not lose progress.

Enhanced Reliability and Security

AKS offers enterprise-grade security and reliability for AI operations.

- High availability: Redundancy ensures your AI workloads stay online during node failures.

- Role-based access control (RBAC): Secure sensitive AI data and configurations.

- Microsoft Entra ID (formerly Azure Active Directory): Integrates with existing security policies for seamless identity management.

How to Optimize AI Operations on AKS

Using GPU-Enabled Nodes

AI workloads often involve heavy computations, like deep learning. AKS supports NVIDIA GPU nodes, accelerating performance for tasks such as:

- Image recognition.

- Natural Language Processing (NLP).

- Complex model training.
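As a rough illustration of how a workload lands on a GPU node, the sketch below builds a Pod manifest that asks for one GPU through the `nvidia.com/gpu` resource. It assumes the NVIDIA device plugin is running in the cluster and that the GPU node pool was created with a `sku=gpu:NoSchedule` taint. The image name and pod name are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that requests NVIDIA GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "trainer",
                    "image": image,
                    # GPUs are requested as whole units under limits.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
            # Assumption: the GPU node pool carries the taint sku=gpu:NoSchedule.
            "tolerations": [
                {
                    "key": "sku",
                    "operator": "Equal",
                    "value": "gpu",
                    "effect": "NoSchedule",
                }
            ],
        },
    }


# Hypothetical image and pod names, for illustration only.
manifest = gpu_pod_manifest("train-job", "example.azurecr.io/trainer:latest")
print(json.dumps(manifest, indent=2))
```

`kubectl apply -f` accepts JSON as well as YAML, so the printed output can be saved to a file and applied as-is.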

Implementing Autoscaling

AKS supports autoscaling at both the node level and the pod level:

- Cluster Autoscaler: Adds or removes nodes automatically based on workload requirements.

- Vertical Pod Autoscaler (VPA): Adjusts CPU and memory requests for individual containers.

- Horizontal Pod Autoscaler (HPA): Scales the number of pods for high-demand AI inference tasks.
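To make the HPA behavior concrete, here is a minimal sketch of the replica calculation described in the Kubernetes documentation: the desired count is the current count scaled by the ratio of the observed metric to the target, rounded up. The function name, the default bounds, and the sample numbers are illustrative assumptions. The real controller also accounts for pod readiness and stabilization windows, which are left out here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the HPA rule: ceil(current * currentMetric / targetMetric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# Four inference pods averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90, target_metric=60))
```

With four pods at 90% against a 60% target, the ratio is 1.5, so the sketch asks for six pods. The `max_replicas` bound is what keeps a traffic spike from scaling without limit.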

Practical Use Cases for AI with AKS

Real-Time AI Applications

Deploy AI-powered chatbots, recommendation systems, or fraud detection algorithms using AKS for:

- Quick response times with load-balanced containers.

- Dynamic scaling during high-traffic events.

AI Model Training Pipelines

Use AKS to orchestrate multi-node pipelines for model training and hyperparameter tuning. For instance:

- Scenario: Training a sentiment analysis model on thousands of tweets, with the training job distributed across GPUs in AKS.

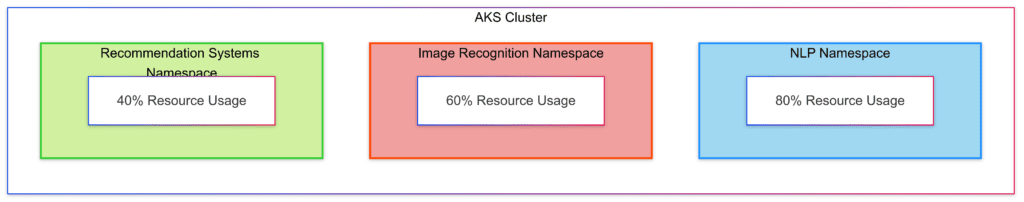

Multi-Tenancy AI Environments

Support multiple teams or AI projects in isolated environments. AKS ensures:

- Resource segregation for experiments.

- Cost tracking by workload or team.
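One common way to get this kind of isolation is a namespace per team with a ResourceQuota attached. The sketch below generates both manifests. The team name, the quota values, and the `team` label used for cost attribution are hypothetical choices, not AKS defaults.

```python
import json


def tenant_manifests(team: str, cpu: str, memory: str, gpus: int) -> list:
    """Return a Namespace and a ResourceQuota manifest for one team."""
    namespace = {
        "apiVersion": "v1",
        "kind": "Namespace",
        # The label is an assumed convention for grouping costs by team.
        "metadata": {"name": team, "labels": {"team": team}},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{team}-quota", "namespace": team},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                # Extended resources such as GPUs are limited via requests.<name>.
                "requests.nvidia.com/gpu": str(gpus),
            }
        },
    }
    return [namespace, quota]


# Hypothetical team and limits.
for doc in tenant_manifests("pricing-team", cpu="16", memory="64Gi", gpus=2):
    print(json.dumps(doc, indent=2))
```

Pods in the namespace that would push total requests past the quota are rejected at admission, so one team's experiments cannot starve another's.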

Tools and Services to Enhance AI Workflows with AKS

Azure DevOps and CI/CD

Integrate AKS with Azure DevOps to automate deployment pipelines for AI models.

- Continuous Integration (CI): Validate changes to AI models.

- Continuous Deployment (CD): Push updates to production containers seamlessly.

Azure Monitor and Insights

Use Azure Monitor to track the performance of AI workloads running on AKS.

- Monitor GPU and CPU usage for optimization.

- Receive alerts for performance bottlenecks or errors.

Azure Kubernetes Extension for VS Code

Simplify the management of AKS clusters using Visual Studio Code with the Kubernetes extension:

- Debug AI container deployments directly.

- Manage pods, services, and logs without switching tools.

Advanced Features of AKS for AI Scaling

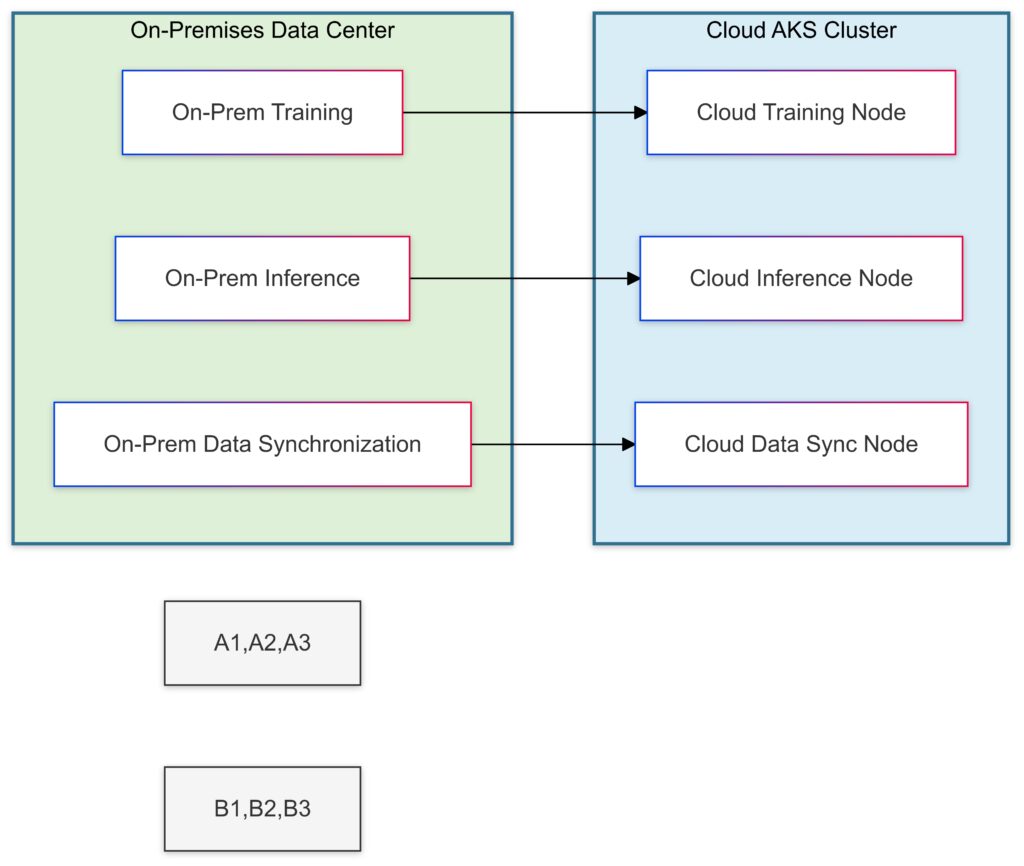

Hybrid and Multi-Cloud Deployments

AKS enables hybrid AI operations through Azure Arc, extending AI workloads to on-premises and other cloud platforms.

- Example: Run sensitive AI workloads on-premises while offloading non-critical tasks to the cloud.

Hybrid AI deployment model combining on-premises and cloud resources using AKS and Azure Arc.

Serverless Kubernetes

With virtual nodes, which are backed by Azure Container Instances, AKS offers serverless capacity: AI containers run on demand without you provisioning or managing additional VMs.

- Use Case: Deploying short-lived AI tasks like batch data processing or real-time analytics.

Edge AI Deployment

Leverage AKS with Azure IoT Edge to deploy AI models at the edge for low-latency applications.

- Example: AI-powered video analytics on cameras in retail stores.

Advanced Scaling Strategies for AI on AKS

Leveraging Multi-Node Pools for Optimized Workloads

Node pools in AKS allow you to separate workloads based on requirements like hardware, operating systems, or performance needs.

- GPU node pools: Ideal for resource-heavy training tasks like deep learning or image recognition.

- CPU node pools: Optimized for lightweight AI inference tasks such as chatbot operations.

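- Spot VM pools: A good fit for interruptible, non-critical workloads like data preprocessing or experimentation. Spot capacity can be evicted at any time, but it can reduce compute costs by up to 90% compared with pay-as-you-go pricing.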

By strategically assigning workloads to different pools, you maximize resource utilization while minimizing costs.
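The assignment itself usually comes down to node selectors and tolerations in the pod spec. The sketch below maps three workload classes to scheduling fields. It relies on the `kubernetes.azure.com/scalesetpriority=spot` label and taint that AKS applies to spot node pools and on the `kubernetes.azure.com/agentpool` node label. The pool names `gpupool` and `cpupool` and the `sku=gpu` taint are assumptions about how the cluster was set up.

```python
import json

# Label and taint key that AKS applies to spot node pools.
SPOT_KEY = "kubernetes.azure.com/scalesetpriority"
# Node label that carries the node pool name.
POOL_KEY = "kubernetes.azure.com/agentpool"


def scheduling_for(workload: str) -> dict:
    """Return pod-spec scheduling fields for a workload class."""
    if workload == "training":
        return {
            "nodeSelector": {POOL_KEY: "gpupool"},  # hypothetical pool name
            "tolerations": [
                # Assumption: the GPU pool was created with this taint.
                {"key": "sku", "operator": "Equal", "value": "gpu", "effect": "NoSchedule"}
            ],
        }
    if workload == "inference":
        return {"nodeSelector": {POOL_KEY: "cpupool"}, "tolerations": []}
    if workload == "preprocessing":
        return {
            "nodeSelector": {SPOT_KEY: "spot"},
            "tolerations": [
                {"key": SPOT_KEY, "operator": "Equal", "value": "spot", "effect": "NoSchedule"}
            ],
        }
    raise ValueError(f"unknown workload class: {workload}")


print(json.dumps(scheduling_for("preprocessing"), indent=2))
```

The returned fields can be merged into the `spec` of any pod template. Without the spot toleration, pods never land on spot nodes, which is what keeps critical services off evictable capacity by default.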

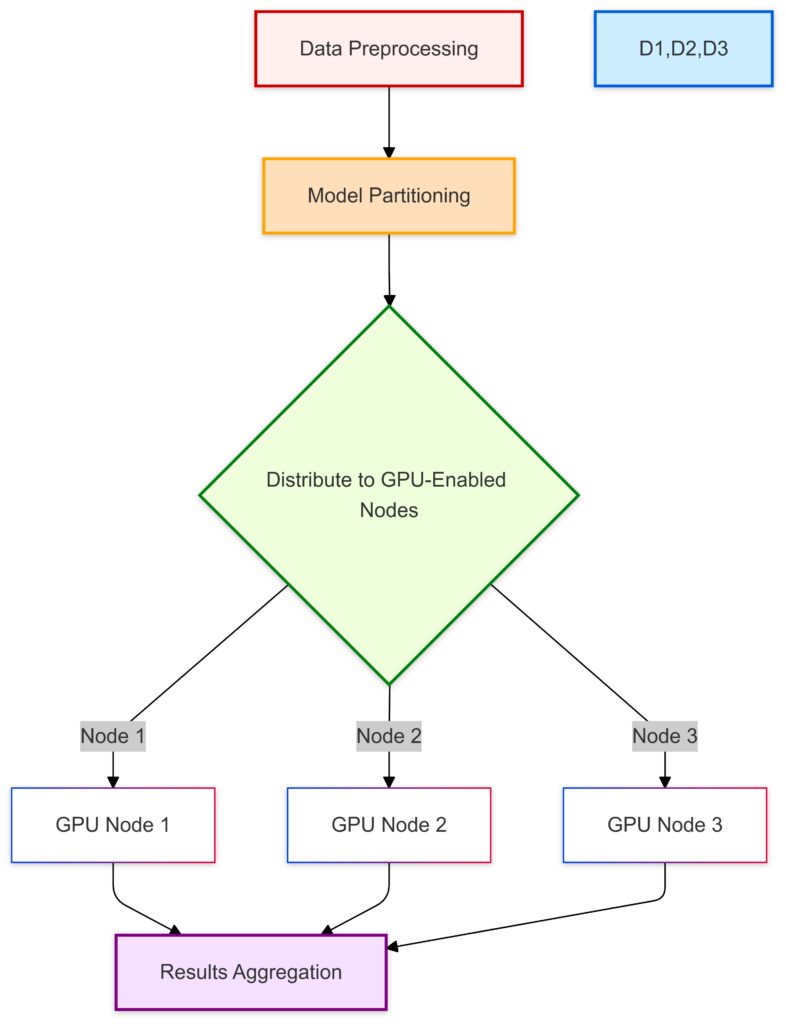

Distributed AI Model Training

AKS supports distributed model training across multiple nodes using frameworks like TensorFlow, PyTorch, or Horovod.

- Parallel training: Splits large datasets across multiple nodes for faster processing.

- Elastic training: Dynamically adjusts the number of nodes based on training demands.

Example: Training a language model like GPT on terabytes of text data, where AKS automatically scales to handle the workload efficiently.

Workflow of distributed AI model training on AKS, leveraging GPUs for efficient parallel computations.
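The core idea behind parallel training is that each worker processes a disjoint slice of the data. The sketch below is a simplified, framework-free illustration of a strided split by worker rank, similar in spirit to what data-parallel samplers in PyTorch or Horovod setups do. The function name and sample sizes are illustrative, and real samplers also handle shuffling and padding.

```python
def shard_indices(num_samples: int, world_size: int, rank: int) -> list:
    """Return the sample indices handled by worker `rank` (strided split)."""
    if world_size < 1:
        raise ValueError("world_size must be at least 1")
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in [0, world_size)")
    return list(range(rank, num_samples, world_size))


# Ten samples split across four workers, for example one worker per GPU node.
shards = [shard_indices(10, world_size=4, rank=r) for r in range(4)]
for rank, shard in enumerate(shards):
    print(f"worker {rank}: {shard}")
```

Every sample appears in exactly one shard, so adding workers shortens each worker's pass over the data. Elastic training changes `world_size` between epochs and recomputes the shards.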

Handling Large-Scale Inference with Serverless AKS

For AI inference, AKS virtual nodes and KEDA (Kubernetes Event-driven Autoscaling) enable serverless-style scaling.

- Virtual Nodes: Burst inference pods into Azure Container Instances within seconds, without adding VM nodes to the cluster.

- KEDA: Scales pods based on external events like message queues or HTTP requests, ensuring rapid response times.

Use Case: Scaling a real-time fraud detection API that processes thousands of transactions per second during peak hours.
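For a queue-driven service like this, a KEDA `ScaledObject` ties the replica count of a Deployment to queue depth. The sketch below builds one for an Azure Service Bus queue. The Deployment name, queue name, thresholds, and the `servicebus-auth` TriggerAuthentication are hypothetical, and the trigger field names reflect the KEDA `azure-servicebus` scaler as commonly documented, so check them against the KEDA version you run.

```python
import json


def keda_scaled_object(
    deployment: str,
    queue_name: str,
    messages_per_replica: int = 20,
    min_replicas: int = 0,
    max_replicas: int = 50,
) -> dict:
    """Build a KEDA ScaledObject that scales a Deployment on queue length."""
    if min_replicas > max_replicas:
        raise ValueError("min_replicas cannot exceed max_replicas")
    return {
        "apiVersion": "keda.sh/v1alpha1",
        "kind": "ScaledObject",
        "metadata": {"name": f"{deployment}-scaler"},
        "spec": {
            "scaleTargetRef": {"name": deployment},
            "minReplicaCount": min_replicas,
            "maxReplicaCount": max_replicas,
            "triggers": [
                {
                    "type": "azure-servicebus",
                    "metadata": {
                        "queueName": queue_name,
                        # Target number of queued messages per replica.
                        "messageCount": str(messages_per_replica),
                    },
                    # Assumption: a TriggerAuthentication with this name exists.
                    "authenticationRef": {"name": "servicebus-auth"},
                }
            ],
        },
    }


# Hypothetical Deployment and queue names.
scaler = keda_scaled_object("fraud-api", "transactions")
print(json.dumps(scaler, indent=2))
```

Setting `minReplicaCount` to zero lets the service scale to nothing when the queue is empty, which is the main cost advantage over CPU-based scaling for bursty inference.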

Industry Use Cases Showcasing AKS for AI

Healthcare: AI-Driven Diagnostics

Hospitals deploy AI models for diagnosing diseases like pneumonia or cancer using AKS.

- Model Training: Distributed training of diagnostic algorithms on MRI scans.

- Inference Deployment: Real-time patient scan analysis using AI inference clusters.

- Example Tools: Integration with Azure Machine Learning and DICOM data processing tools.

Retail: Personalized Recommendations

Retailers use AKS to run recommendation engines that analyze customer behavior.

- Scenario: During holiday sales, AKS dynamically scales up to process billions of interactions in real time.

- Hybrid Deployment: Sensitive customer data is processed on-premises, while product recommendations are generated in the cloud.

Autonomous Vehicles: Real-Time Sensor Data Processing

AKS enables scalable AI pipelines for processing sensor data from autonomous vehicles.

- Edge AI: Vehicles deploy AI models trained in AKS clusters to perform low-latency tasks like object detection.

- Centralized Training: High-resolution video data from multiple vehicles is processed in the cloud using AKS GPU clusters.

Best Practices for Scaling AI with AKS

Implementing CI/CD Pipelines for AI Models

Use AKS with Azure DevOps or GitHub Actions to ensure seamless updates to AI models.

- Automate training, testing, and deployment of new AI versions.

- Use Blue-Green Deployments to ensure zero downtime when updating inference models.
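A blue-green switch on Kubernetes is often just a change to a Service selector: both model versions run side by side, and the Service points at one of them. The sketch below shows that flip. The `app` and `version` labels, the Service name, and the port numbers are assumed conventions for illustration.

```python
import copy
import json

COLORS = ("blue", "green")


def inference_service(active: str) -> dict:
    """Build a Service that routes traffic to the active color."""
    if active not in COLORS:
        raise ValueError(f"active must be one of {COLORS}")
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "inference"},
        "spec": {
            # Only pods carrying both labels receive traffic.
            "selector": {"app": "inference", "version": active},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


def switch_traffic(service: dict) -> dict:
    """Return a copy of the Service pointing at the other color."""
    updated = copy.deepcopy(service)
    current = updated["spec"]["selector"]["version"]
    updated["spec"]["selector"]["version"] = "green" if current == "blue" else "blue"
    return updated


live = inference_service("blue")
cutover = switch_traffic(live)
print(json.dumps(cutover["spec"]["selector"]))
```

Because the old Deployment keeps running after the cutover, rolling back is the same selector change in reverse.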

Optimizing Cluster Costs

- Autoscaling with Limits: Set upper limits on scaling to prevent runaway costs.

- Monitor Idle Resources: Use Azure Monitor to identify and shut down underutilized nodes.

- Spot Instances: Assign non-critical tasks to spot nodes to reduce expenses.
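A quick way to reason about autoscaling limits is to compute the worst-case bill if a pool sits at its maximum size all month. The sketch below does that arithmetic. The prices and discount in the example are made-up placeholders, not Azure rates, so substitute figures from the Azure pricing calculator.

```python
def worst_case_monthly_cost(
    max_nodes: int,
    hourly_price: float,
    hours: float = 730,
    spot_discount: float = 0.0,
) -> float:
    """Upper bound on monthly node cost for a pool capped at max_nodes."""
    if max_nodes < 0 or hourly_price < 0:
        raise ValueError("max_nodes and hourly_price must be non-negative")
    if not 0.0 <= spot_discount < 1.0:
        raise ValueError("spot_discount must be in [0, 1)")
    return max_nodes * hourly_price * hours * (1.0 - spot_discount)


# Placeholder numbers: 10 nodes at a hypothetical 2.00 per hour.
on_demand = worst_case_monthly_cost(10, 2.0)
spot = worst_case_monthly_cost(10, 2.0, spot_discount=0.5)
print(on_demand, spot)
```

Running the same calculation for each node pool gives a ceiling to set budget alerts against before enabling the autoscaler.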

Integrating AI Pipelines with AKS

Combine AKS with Azure’s data and AI tools to build end-to-end AI pipelines.

- Data Ingestion: Azure Data Factory processes raw data before sending it to AKS.

- Model Training: Train models using AKS GPU clusters.

- Inference Deployment: Deploy real-time inference services in AKS with autoscaling enabled.

Challenges and How AKS Solves Them

Challenge: Managing Complex AI Pipelines

Solution: Use Azure Kubernetes Fleet Manager to simplify managing multiple AKS clusters across regions or environments.

Challenge: Security for Sensitive AI Workloads

Solution: AKS integrates with Azure Policy to enforce cluster guardrails and supports confidential computing node pools that protect data while it is in use, which helps teams work toward compliance requirements such as HIPAA.

Challenge: Debugging and Monitoring Distributed AI Applications

Solution: Tools like Azure Monitor, Log Analytics, and Prometheus/Grafana provide real-time insights into node and pod performance, making it easier to diagnose issues.

Emerging Trends in AI Scaling with AKS

Federated Learning with AKS

AKS enables federated learning, where AI models are trained on decentralized data while ensuring privacy.

- Use Case: Training healthcare models on patient data across multiple hospitals without sharing sensitive information.

AI for Sustainable Scaling

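Emissions from AKS clusters can be reviewed alongside other Azure usage in the Emissions Impact Dashboard (formerly the Microsoft Sustainability Calculator), helping businesses analyze the environmental impact of scaling AI workloads and optimize resource usage for energy efficiency.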

Multi-Cloud and Hybrid AI Operations

Leverage AKS with Azure Arc to deploy AI workloads across multiple clouds, ensuring redundancy and global reach.

- Example: Running AI applications in Azure, AWS, and on-premises for disaster recovery.

Resources for Getting Started

Official Documentation

Learning Platforms

Community Support

- AKS GitHub Repository

- Kubernetes Forum

With its flexibility, scalability, and integration capabilities, Azure Kubernetes Service is a cornerstone for modern AI operations. Whether you’re training massive models, running real-time inference, or deploying AI at the edge, AKS offers the tools and support to make your AI initiatives succeed.

FAQs

How does AKS optimize costs for AI operations?

AKS enables cost optimization through features like autoscaling and spot nodes. Autoscaling adjusts resources based on workload demands, while spot nodes provide cheaper alternatives for non-critical tasks. For example, a startup could use spot nodes to preprocess large datasets overnight, saving costs compared to running standard virtual machines.

Is AKS suitable for small AI projects?

Absolutely. AKS scales down to fit small projects while retaining its robust feature set. For instance, a developer building a chatbot prototype can deploy it on AKS with minimal initial resources. As the project grows, AKS can handle increased traffic or model complexity without requiring a full system overhaul.

How does AKS integrate with other Azure AI services?

AKS integrates with Azure Machine Learning and with Azure AI services (formerly Cognitive Services). For example, you can train a natural language processing model in Azure Machine Learning and deploy it to AKS for real-time chatbot inference. Similarly, applications running on AKS can call Azure AI services for features like sentiment analysis or speech recognition.

Can AKS support AI pipelines with heavy computational needs?

Yes, AKS is ideal for computationally intensive AI tasks. With GPU-enabled node pools, AKS accelerates training for complex models like convolutional neural networks. For example, a healthcare company could use AKS to train a diagnostic model on thousands of medical images, leveraging distributed GPU nodes for faster results.

How does AKS ensure high availability for AI applications?

AKS provides node redundancy and automatic pod rescheduling to keep applications available. For example, in an AI-driven recommendation engine, if one node fails, Kubernetes reschedules its pods onto healthy nodes in the cluster and the Service routes traffic to the remaining replicas in the meantime.

Is AKS secure enough for AI workloads involving sensitive data?

AKS offers enterprise-grade security through features like Microsoft Entra ID (formerly Azure Active Directory) integration, role-based access control (RBAC), and data encryption. For example, a financial institution deploying fraud detection models on AKS can use Microsoft Entra ID to control who can access the models and RBAC to restrict cluster permissions.

Can AKS support real-time AI applications?

Yes, AKS is designed to handle real-time workloads. For example, an autonomous drone system could use AKS to process sensor data in real time, leveraging event-driven scaling to adjust resources during high-demand scenarios, such as disaster response.

How does AKS facilitate hybrid or multi-cloud AI deployments?

Using Azure Arc, AKS can extend its functionality to on-premises or other cloud platforms. For instance, a company could train its AI models in Azure’s cloud but deploy inference services on-premises to comply with data residency regulations. Azure Arc ensures seamless management of these distributed resources.

How does AKS handle varying workloads for AI applications?

AKS supports horizontal pod autoscaling (HPA) to manage workload fluctuations dynamically. For instance, if an AI-powered e-commerce recommendation engine experiences a surge during a flash sale, AKS automatically scales up the number of pods to handle the increased traffic. After the sale, it scales down to save costs.

Can AKS support distributed training for machine learning models?

Yes, AKS facilitates distributed training by orchestrating multiple nodes to work on the same model. For example, a natural language processing (NLP) model like GPT-3 can be trained on massive datasets by distributing computations across GPU-enabled nodes, significantly reducing training time.

Is AKS suitable for deploying AI applications at the edge?

AKS integrates seamlessly with Azure IoT Edge, making it an excellent choice for deploying AI models at the edge. For example, a manufacturing plant could use AKS to manage models that detect defects in real-time using camera feeds, with inference happening directly on IoT devices for minimal latency.

How does AKS ensure scalability for AI inference tasks?

AKS uses features like virtual nodes and event-driven scaling to handle large-scale inference tasks. For example, a video streaming platform using AI to recommend content can rely on AKS to scale rapidly when traffic spikes, ensuring low-latency recommendations for millions of users.

Can AKS manage multi-team AI development environments?

Yes, AKS supports multi-tenancy, enabling teams to work in isolated namespaces within the same cluster. For example, a retail company could host separate namespaces for teams working on price prediction, supply chain optimization, and customer segmentation models, ensuring resource isolation and cost efficiency.

How does AKS support hybrid deployments for AI applications?

AKS, when paired with Azure Arc, enables hybrid AI deployments. For instance, a financial institution could deploy sensitive workloads, such as fraud detection models, on on-premises AKS clusters, while using Azure cloud resources for less sensitive applications like customer sentiment analysis.

What tools can be used with AKS for monitoring AI applications?

AKS integrates with tools like Azure Monitor, Prometheus, and Grafana to monitor AI workloads. For example, a data science team deploying a computer vision application can use Azure Monitor to track GPU usage, ensuring optimal resource allocation during model inference.

Does AKS support containerization of pre-trained AI models?

Yes, AKS is ideal for deploying pre-trained models in containers. For example, a healthcare organization can containerize a TensorFlow-based diagnostic model for X-ray image analysis and deploy it on AKS for real-time inference in clinics.

How does AKS support model versioning and updates?

AKS simplifies rolling out new model versions through canary and blue-green deployment strategies. For example, a gaming company updating its AI matchmaking algorithm can roll out the new version to a small subset of users first, monitor performance, and then gradually expand it to the entire user base.

Can AKS handle AI workloads across different regions?

Yes, AKS supports multi-region deployments for global scalability. For example, a logistics company using AI for route optimization can deploy inference services in clusters located in different geographic regions, ensuring low latency for local users while maintaining centralized training in a specific region.