Advancing Natural Language Understanding (NLU) for low-resource languages is a critical step in making AI accessible globally. Let’s explore the challenges, strategies, and ongoing efforts to ensure inclusivity.

Why Low-Resource Languages Are Critical

Overlooked Languages Reflect Global Diversity

Millions of people rely on low-resource languages like Amharic or Khmer. Ignoring them creates a digital divide.

Most AI models prioritize widely spoken tongues like English, leaving smaller communities underserved. This bias can reinforce inequities.

Building inclusive AI ensures these communities have equal access to information and opportunities. It’s not just a challenge—it’s a necessity.

Challenges of Limited Resources

Low-resource languages often lack digitized corpora, making training NLU models difficult.

Even common tools like spell-checkers or tokenizers may be unavailable, limiting progress in understanding syntax and semantics.

Compounding the problem is a shortage of trained linguists and annotators, which slows model improvement.

Strategies for Scaling NLU

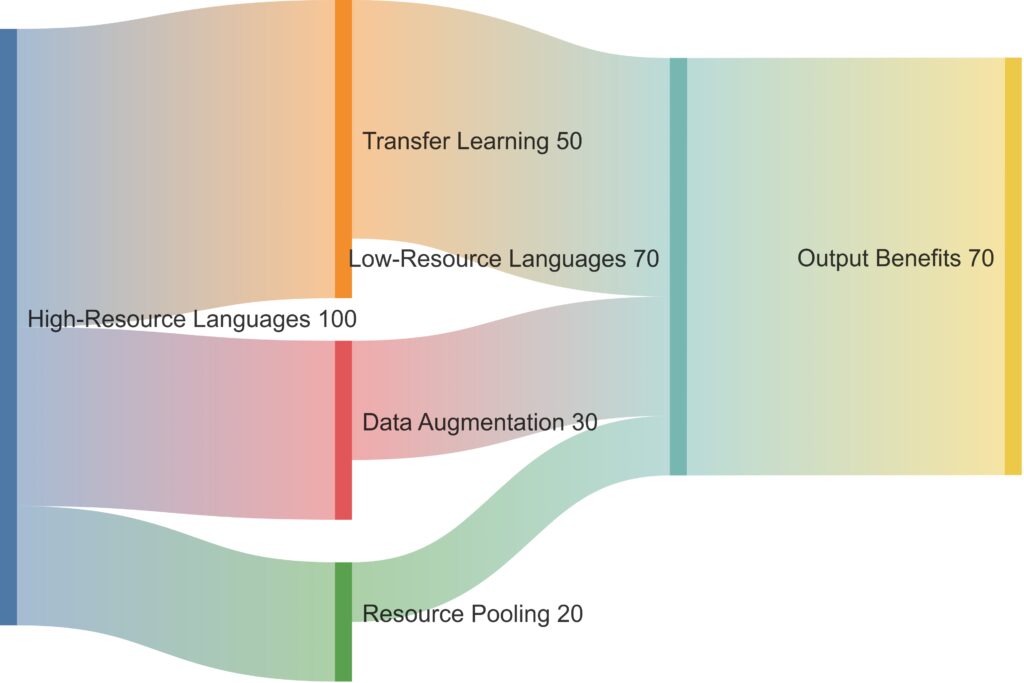

Leveraging Transfer Learning

Transfer learning lets a model reuse knowledge learned from high-resource languages. Multilingual pretrained models like mBERT can be adapted to a new language with relatively little labeled data.

Fine-tuning on data from closely related languages can substantially improve performance. For instance, Hindi models can help bootstrap Urdu systems, since the two languages share much of their grammar and core vocabulary despite using different scripts.

This approach reduces costs while improving performance, especially for syntactically similar languages.

Data Augmentation Techniques

Generating synthetic data through back-translation or paraphrasing increases training examples.
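A minimal sketch of round-trip back-translation, assuming you already have two translation functions (source to pivot, and pivot back to source). The word-for-word `make_toy_translator` dictionaries are hypothetical placeholders for real machine-translation models, for example Somali-to-English and English-to-Somali systems. Round-trip outputs identical to existing sentences are dropped, since they add no new training signal.

```python
from typing import Callable, Dict, List

Translator = Callable[[str], str]


def make_toy_translator(lexicon: Dict[str, str]) -> Translator:
    """Hypothetical word-for-word 'translator'; a stand-in for a real MT model."""
    def translate(sentence: str) -> str:
        # Unknown words pass through unchanged.
        return " ".join(lexicon.get(word, word) for word in sentence.split())
    return translate


def back_translate(sentences: List[str], to_pivot: Translator,
                   from_pivot: Translator) -> List[str]:
    """Round-trip each sentence through a pivot language; keep only new paraphrases."""
    seen = set(sentences)
    augmented: List[str] = []
    for sentence in sentences:
        paraphrase = from_pivot(to_pivot(sentence))
        # Skip empty results and exact duplicates of existing or already-generated text.
        if paraphrase and paraphrase not in seen:
            seen.add(paraphrase)
            augmented.append(paraphrase)
    return augmented


if __name__ == "__main__":
    to_pivot = make_toy_translator({"big": "grand", "large": "grand", "house": "maison"})
    from_pivot = make_toy_translator({"grand": "large", "maison": "house"})
    print(back_translate(["big house", "the house"], to_pivot, from_pivot))
```

Running the script prints `['large house']`: "big house" comes back as a new paraphrase, while "the house" round-trips unchanged and is discarded.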

Crowdsourcing labeled datasets is another way to bridge gaps, empowering communities to co-create resources for their language.
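When several volunteers label the same item, their answers have to be reconciled. One simple option, assumed here rather than prescribed by any particular project, is majority voting with an agreement threshold, sending low-agreement items to an expert reviewer. The `aggregate_labels` helper, the item IDs, the labels, and the 0.6 threshold are all hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def aggregate_labels(annotations: Dict[str, List[str]],
                     min_agreement: float = 0.6) -> Tuple[Dict[str, str], List[str]]:
    """Majority-vote crowd labels; items below the agreement threshold go to review."""
    accepted: Dict[str, str] = {}
    needs_review: List[str] = []
    for item_id, labels in annotations.items():
        if not labels:  # nobody has labeled this item yet
            needs_review.append(item_id)
            continue
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= min_agreement:
            accepted[item_id] = label
        else:
            needs_review.append(item_id)
    return accepted, needs_review


if __name__ == "__main__":
    crowd = {
        "s1": ["positive", "positive", "negative"],  # 2 of 3 agree
        "s2": ["positive", "negative"],              # tie, so it goes to review
        "s3": [],
    }
    print(aggregate_labels(crowd))
```

The example prints `({'s1': 'positive'}, ['s2', 's3'])`. Raising the threshold trades fewer accepted labels for higher confidence in the ones that remain.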

These strategies enrich corpora, offering better NLU accuracy with fewer initial inputs.

The Role of Multilingual Models

Bridging Language Barriers

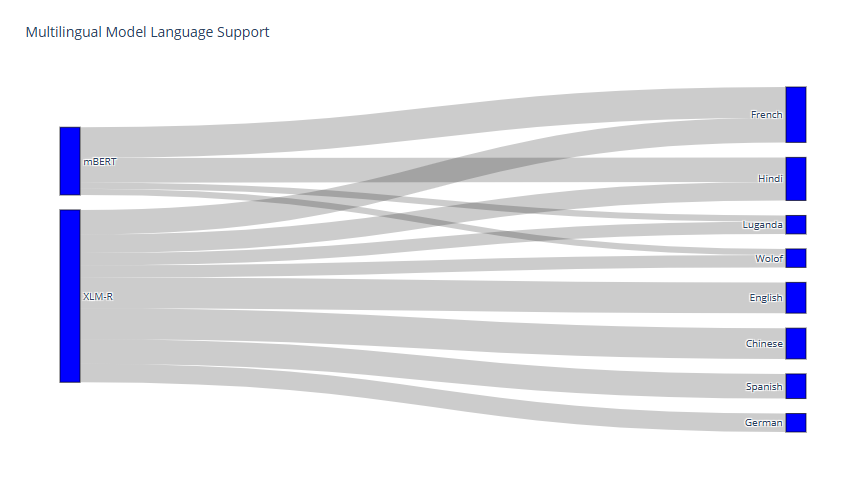

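Multilingual models like mBERT (pretrained on Wikipedia text in 104 languages) and XLM-R (pretrained on CommonCrawl data in 100 languages) cover many languages with a single set of parameters, letting them share learned patterns across languages.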

By pooling data, these models can even aid languages with virtually no resources.

Although not perfect, multilingual models are a huge leap forward in scalability and accessibility.

Limitations of Current Models

Despite progress, multilingual systems often favor dominant languages during training.

Addressing this bias requires dedicated data and compute for smaller languages, along with explicit fairness checks in development pipelines.

Ongoing refinement is critical to ensure inclusivity without sacrificing performance.
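One concrete way pretraining pipelines counter this skew is exponentially smoothed ("temperature") sampling: if language i holds a fraction p_i of the training data, it is sampled with probability q_i proportional to p_i^α, where α < 1 boosts smaller languages. The XLM-R paper reports using α = 0.3. The sketch below uses hypothetical corpus sizes and illustrates the general technique, not any specific model's training code.

```python
from typing import Dict


def smoothed_sampling_probs(counts: Dict[str, int], alpha: float = 0.3) -> Dict[str, float]:
    """Exponentially smoothed language-sampling probabilities: q_i proportional to p_i ** alpha.

    counts: number of training examples (or tokens) per language.
    alpha = 1 keeps the raw proportions; smaller values upsample small languages.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("counts must not all be zero")
    weights = {lang: (n / total) ** alpha for lang, n in counts.items()}
    norm = sum(weights.values())
    return {lang: w / norm for lang, w in weights.items()}


if __name__ == "__main__":
    counts = {"en": 1_000_000, "sw": 10_000}  # hypothetical corpus sizes
    for alpha in (1.0, 0.3):
        probs = smoothed_sampling_probs(counts, alpha)
        print(alpha, {lang: round(p, 3) for lang, p in probs.items()})
```

With α = 1 Swahili gets about 1% of training batches; with α = 0.3 its share rises to about 20%. The cost is that high-resource languages are seen less often, so α is a trade-off rather than a free win.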

Community-Led Approaches

Building Local Capacity

Empowering linguists and researchers in underserved regions helps create sustainable solutions.

Community-driven annotation tools and open datasets promote ownership and long-term growth for low-resource NLU.

Collaborative AI Development

Nonprofits and tech giants alike are forming alliances. Initiatives like AI4D Africa or Masakhane demonstrate the power of collective effort.

Such collaborations build not only better tools but also trust and inclusion in the global AI community.

Policy and Advocacy for Inclusivity

Setting Global Standards

Advocacy groups are calling for language equity in AI policy, arguing that NLU standards should require inclusive practices.

For instance, UNESCO's Recommendation on the Ethics of Artificial Intelligence (2021) emphasizes respect for cultural and linguistic diversity.

Funding for Low-Resource Research

Dedicated funding can catalyze progress. Grants and competitions can incentivize innovative methods for low-resource language scaling.

Without financial backing, promising ideas often remain unrealized.

Real-World Applications of Inclusive NLU

Bridging the Information Gap

Inclusive NLU ensures access to vital resources in health, education, and governance for underserved communities.

For example, AI-powered health chatbots tailored for low-resource languages provide lifesaving advice in remote regions.

Such applications empower individuals, enabling them to engage in the digital economy and public discourse in their native language.

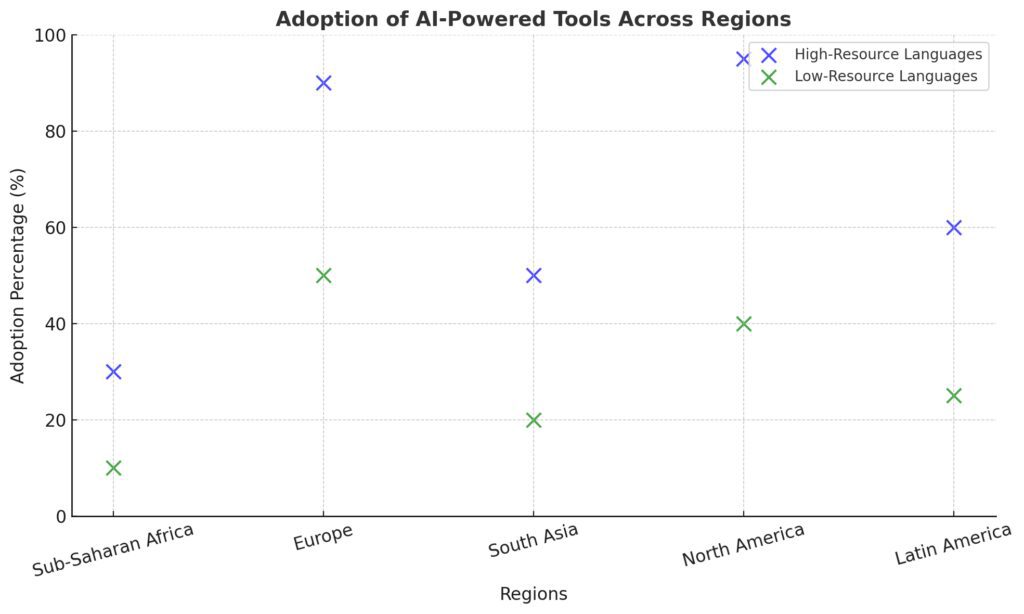

Low-resource languages (e.g., Swahili, Luganda) have lower adoption, with noticeable gaps in regions like Sub-Saharan Africa and South Asia.

Regions like Latin America show moderate adoption for both high- and low-resource languages, suggesting mixed accessibility.

Enhancing User Experiences

Personalized voice assistants and chatbots often exclude users speaking non-dominant languages.

Inclusive NLU expands functionality, making tools like virtual assistants and customer support systems accessible to more users.

These improvements go beyond convenience—they foster a sense of belonging in the digital world.

The Role of Open-Source Initiatives

Democratizing Access to Technology

Open-source platforms and communities like Hugging Face and Masakhane are instrumental in scaling NLU.

They let researchers build and freely share models, datasets, and tools for underserved languages.

In doing so, they lower entry barriers for small organizations and individual researchers.

The Power of Shared Datasets

Projects like Common Voice by Mozilla crowdsource speech and text datasets from native speakers.

This approach accelerates NLU for languages with little pre-existing data, fostering inclusivity on a global scale.

Future Innovations in Low-Resource NLU

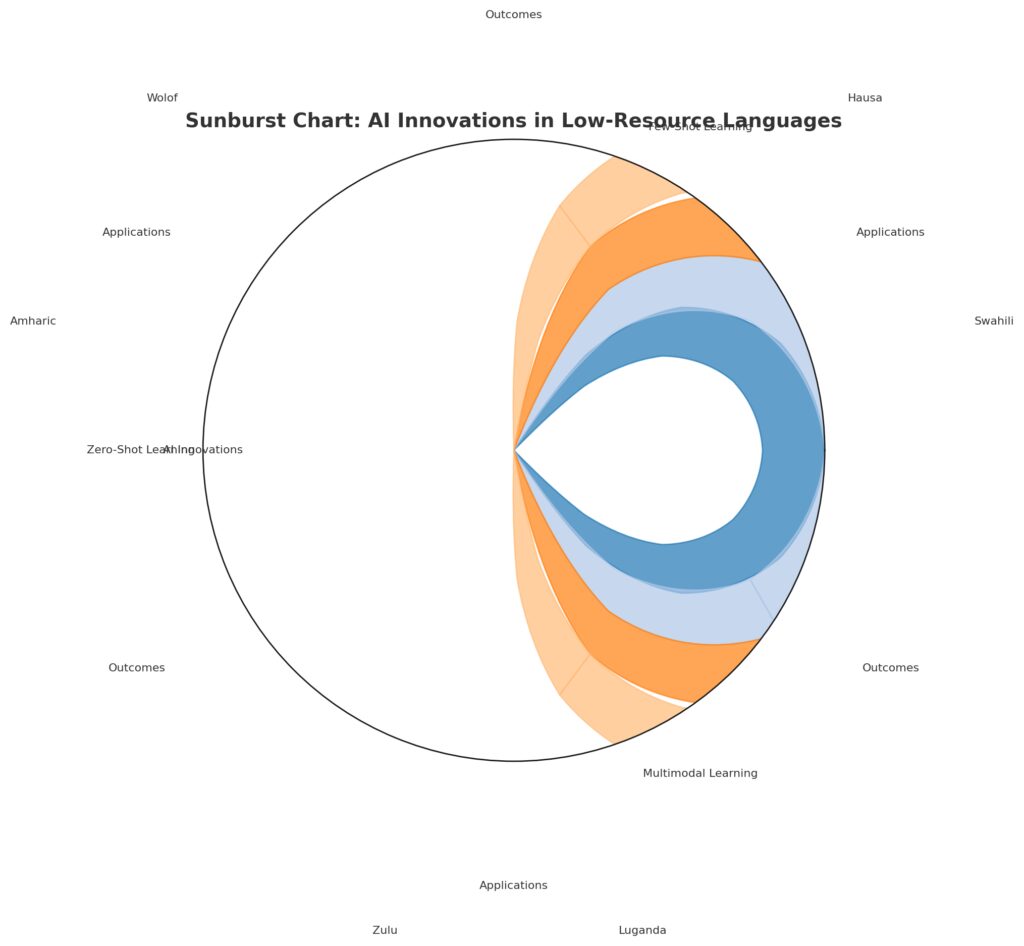

Few-Shot and Zero-Shot Learning

Advanced methods like few-shot learning allow models to learn from just a handful of examples.

Similarly, zero-shot learning enables predictions in languages the model hasn’t explicitly trained on.

These breakthroughs could revolutionize low-resource NLU by reducing the dependency on large datasets.
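To make "learning from a handful of examples" concrete, here is a minimal nearest-prototype classifier in the spirit of prototypical networks: each label's few examples are averaged into a prototype, and a new sentence gets the label of the most similar prototype. Character trigram counts stand in for the pretrained multilingual embeddings a real system would use; the labels and example sentences are hypothetical.

```python
import math
from collections import Counter
from typing import Dict, List


def featurize(text: str, n: int = 3) -> Counter:
    """Character n-gram counts; a crude stand-in for pretrained sentence embeddings."""
    padded = f" {text.lower()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def centroid(vectors: List[Counter]) -> Dict[str, float]:
    """Average several sparse vectors into one class prototype."""
    total: Counter = Counter()
    for vector in vectors:
        total.update(vector)
    return {key: count / len(vectors) for key, count in total.items()}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    dot = sum(a[key] * b[key] for key in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class FewShotClassifier:
    """Nearest-prototype classifier built from a few labeled examples per class."""

    def __init__(self, support: Dict[str, List[str]]):
        if any(not examples for examples in support.values()):
            raise ValueError("every label needs at least one example")
        self.prototypes = {
            label: centroid([featurize(text) for text in examples])
            for label, examples in support.items()
        }

    def predict(self, text: str) -> str:
        query = featurize(text)
        return max(self.prototypes, key=lambda label: cosine(query, self.prototypes[label]))


if __name__ == "__main__":
    clf = FewShotClassifier({
        "greeting": ["hello there", "hello friend"],
        "weather": ["heavy rain today", "rain and wind"],
    })
    print(clf.predict("hello everyone"), clf.predict("rain tomorrow"))
```

The script prints `greeting weather`. Surface n-grams only work when test sentences share spelling with the examples; swapping `featurize` for a multilingual encoder is what lets the same prototype idea generalize across phrasings and languages.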

Example applications include zero-shot learning for Wolof (text classification) and Amharic (sentiment analysis), and multimodal learning for Zulu (speech-to-text) and Luganda (image captioning).

Multimodal Learning for Context

Incorporating visual, audio, and textual data can help models understand languages more comprehensively.

For instance, pairing images with captions builds contextual understanding for languages with limited textual data.

This holistic approach enhances NLU accuracy while opening doors for underrepresented dialects.

Measuring Success: Metrics for Inclusivity

Accuracy Beyond the Numbers

Evaluating NLU success in low-resource languages requires a nuanced approach. Metrics must account for cultural and contextual understanding, not just raw accuracy.

For example, correctly interpreting idiomatic expressions or local metaphors is crucial for real-world relevance.

Tracking Language Representation

Transparent reporting on language inclusivity in AI systems ensures accountability.

Regular audits of datasets and models can highlight progress—or gaps—in representing diverse languages effectively.
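An audit like this can start very simply: count how much of an evaluation set each language accounts for, compute per-language accuracy, and report the gap between the best- and worst-served languages. The `audit_by_language` function, its record format of `(language_code, was_correct)` pairs, and the 5% threshold are hypothetical choices for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def audit_by_language(records: Iterable[Tuple[str, bool]],
                      min_share: float = 0.05) -> Dict[str, object]:
    """Summarize representation and accuracy per language from evaluation records."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for lang, ok in records:
        totals[lang] += 1
        correct[lang] += int(ok)
    n = sum(totals.values())
    if n == 0:
        raise ValueError("no records to audit")
    share = {lang: totals[lang] / n for lang in totals}
    accuracy = {lang: correct[lang] / totals[lang] for lang in totals}
    return {
        "share": share,
        "accuracy": accuracy,
        # Languages making up less than min_share of the data.
        "underrepresented": sorted(lang for lang, s in share.items() if s < min_share),
        # Best minus worst per-language accuracy; 0 means every language is served equally.
        "accuracy_gap": max(accuracy.values()) - min(accuracy.values()),
    }


if __name__ == "__main__":
    # Hypothetical results: 20 English items, 2 Amharic items.
    records = [("en", True)] * 18 + [("en", False)] * 2 + [("am", True), ("am", False)]
    print(audit_by_language(records, min_share=0.1))
```

Reporting the worst-language score and the gap alongside the overall average keeps a strong dominant-language result from masking weak performance elsewhere.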

Linguistic Nuances Demand a Paradigm Shift

Many low-resource languages contain unique structures and cultural contexts that don’t map directly to dominant languages.

For instance, polysynthetic languages like Inuktitut combine multiple ideas into a single word, challenging standard tokenization techniques.

Future NLU systems must move beyond one-size-fits-all approaches to truly understand linguistic diversity.
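To see why tokenization matters here, consider byte-pair encoding (BPE), one common subword approach: it starts from characters and repeatedly merges the most frequent adjacent pair. The minimal sketch below learns merges from a tiny, hypothetical English word list (a stand-in for a real training corpus) and applies them to unseen words.

```python
from collections import Counter
from typing import Dict, List, Tuple

Pair = Tuple[str, str]


def merge_pair(symbols: Tuple[str, ...], pair: Pair) -> Tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i < len(symbols) - 1 and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(word_freqs: Dict[str, int], num_merges: int) -> List[Pair]:
    """Learn up to `num_merges` BPE merges from word frequencies."""
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges: List[Pair] = []
    for _ in range(num_merges):
        pair_counts: Counter = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break
        best = max(pair_counts, key=pair_counts.get)  # ties go to the first pair seen
        merges.append(best)
        vocab = {merge_pair(symbols, best): freq for symbols, freq in vocab.items()}
    return merges


def segment(word: str, merges: List[Pair]) -> List[str]:
    """Apply learned merges, in the order they were learned, to a new word."""
    symbols = tuple(word)
    for pair in merges:
        symbols = merge_pair(symbols, pair)
    return list(symbols)


if __name__ == "__main__":
    corpus = {"jumped": 4, "jumping": 4, "played": 4, "playing": 4, "talked": 4, "talking": 4}
    merges = learn_bpe(corpus, 3)
    print(merges)
    print(segment("walking", merges), segment("walked", merges))
```

Here the frequent suffixes "ed" and "ing" emerge as units, so "walking" splits into `['w', 'a', 'l', 'k', 'ing']`. Because merges are purely frequency-driven, they line up with morphemes only when those morphemes are frequent in the training data. In polysynthetic languages, where a single word may chain many rarer morphemes, BPE segments may cut across morpheme boundaries, which is one motivation for morphology-aware segmentation.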

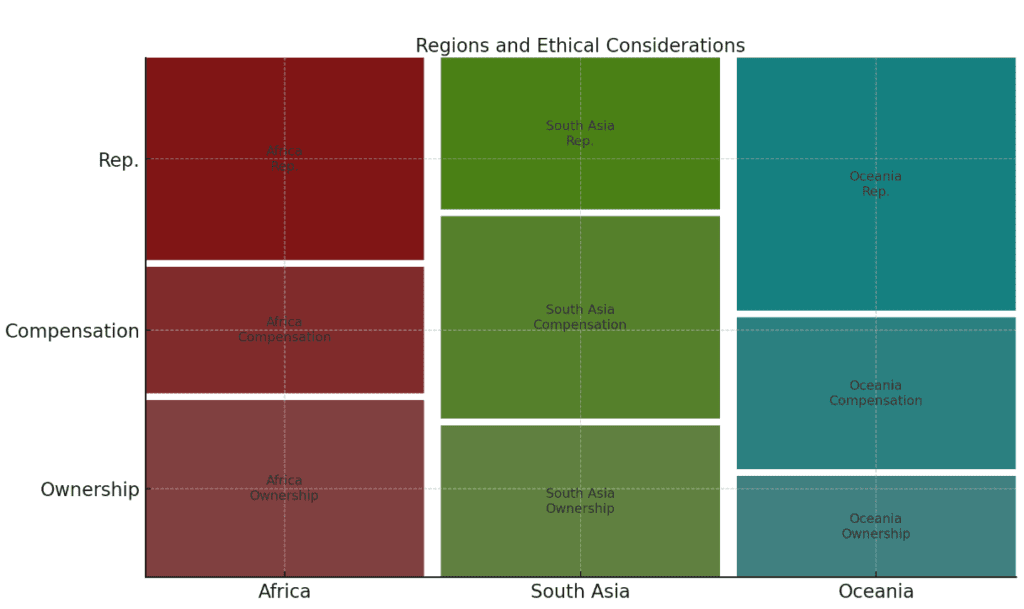

Ethics of Data Collection in Vulnerable Communities

Building datasets for low-resource languages often involves engaging with indigenous or marginalized communities.

It’s not just about quantity—ethical considerations like data ownership, consent, and fair compensation are critical.

Adopting frameworks like the CARE Principles for Indigenous Data Governance, which complement the FAIR data principles, helps ensure that these communities benefit directly from AI advancements.

The Risk of Digital Extinction

Languages face extinction risks not only in the physical world but also in the digital space.

Without intervention, underserved languages may lose their relevance in the tech ecosystem, further marginalizing their speakers.

Proactive scaling of NLU for these languages can act as a form of digital preservation.

Cross-Language Collaboration: A Hidden Superpower

Neighboring languages often share grammatical features or vocabulary.

Leveraging cross-linguistic similarities can accelerate development. For example, models trained on Swahili can aid other Bantu languages, and resources for Quechua may help Aymara, with which it shares many structural features.

Focusing on regional ecosystems, rather than isolated efforts, maximizes scalability.
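As a rough first pass at finding helpful neighbors, one could rank candidate source languages by how many character trigrams a sample of their text shares with a sample of the target language. This heuristic is an assumption for illustration, not an established selection method from the article; `rank_transfer_sources` is a hypothetical helper, and the placeholder strings stand in for comparable text (ideally the same sentences) in each language.

```python
from typing import Dict, List, Set, Tuple


def char_ngrams(text: str, n: int = 3) -> Set[str]:
    """Set of character n-grams, padded so word edges count too."""
    padded = f" {text.lower()} "
    return {padded[i:i + n] for i in range(len(padded) - n + 1)}


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def rank_transfer_sources(target_sample: str, candidates: Dict[str, str],
                          n: int = 3) -> List[Tuple[str, float]]:
    """Rank candidate source languages by n-gram overlap with a target-language sample."""
    target = char_ngrams(target_sample, n)
    scores = [(name, jaccard(target, char_ngrams(sample, n)))
              for name, sample in candidates.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder samples; replace with parallel text from real candidate languages.
    print(rank_transfer_sources("banana bandana", {"near": "banana band", "far": "xyz qrs"}))
```

Character overlap only captures surface similarity within a shared script. Hindi (Devanagari) and Urdu (Perso-Arabic script) would score near zero despite being closely related, so transliteration or typological features would be needed in such cases.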

The Role of AI in Empowering Local Innovation

Inclusive NLU doesn’t just serve existing needs—it can spark local innovation.

When people have tools in their own language, they can build apps, services, and solutions that reflect their culture.

This flips the script from external aid to community-led technological empowerment, creating sustainable growth.

These insights push beyond surface-level discussions and show how scaling NLU for low-resource languages has cultural, ethical, and transformative implications.

Conclusion: A Roadmap to Inclusivity

Inclusive NLU isn’t just a technological challenge—it’s a moral imperative.

By blending innovation, collaboration, and advocacy, the AI community can bridge the gap for low-resource languages, paving the way for a truly global digital future.

FAQs

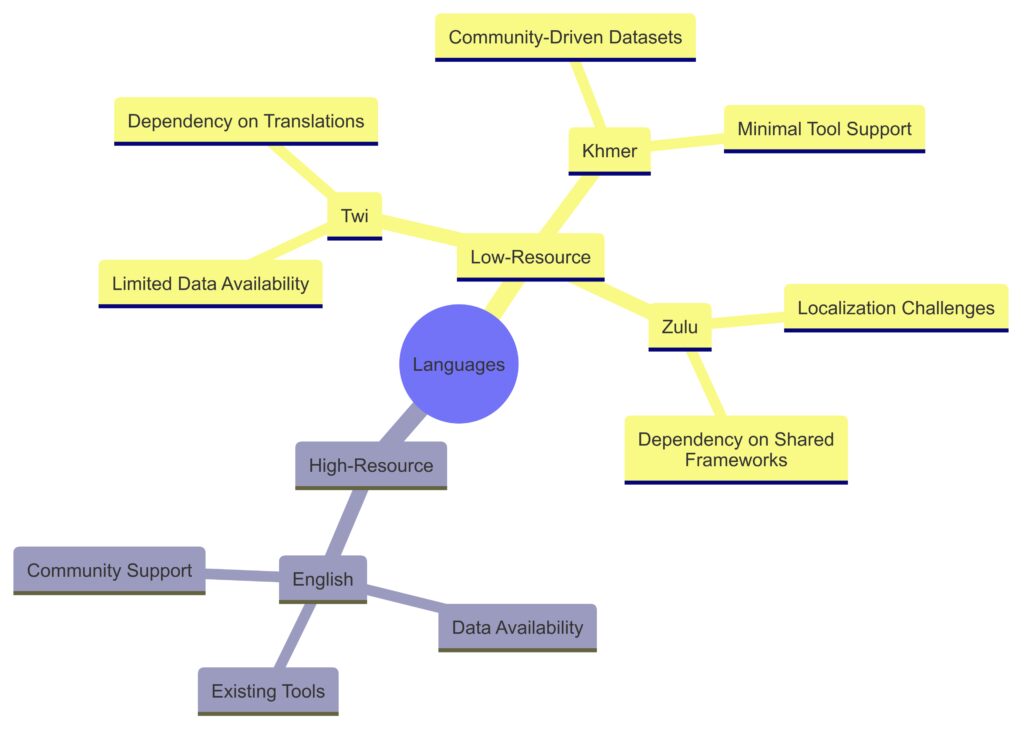

How do low-resource languages differ from high-resource ones?

Low-resource languages lack extensive datasets, tools, and research attention compared to high-resource languages like English or Mandarin.

For example, Twi (spoken in Ghana) has limited digitized text, whereas Spanish has abundant corpora and pre-trained models. This scarcity creates significant challenges for training AI systems.

Why is it important to include low-resource languages in AI?

Excluding low-resource languages widens the digital divide, limiting access to education, health, and technology for millions.

For instance, creating AI tools in Tagalog or Zulu ensures speakers can engage with global information in their own tongue, preserving cultural identity and fostering economic growth.

What are some key techniques used to improve NLU for these languages?

Transfer learning and data augmentation are two of the most widely used techniques.

For example, fine-tuning mBERT with data from Portuguese can enhance understanding of Galician, given their linguistic overlap. Back-translation for Somali can create new training examples by translating sentences into English and back into Somali.

What role does the community play in developing NLU for low-resource languages?

Communities are invaluable for annotating data, validating models, and offering cultural insights.

For instance, Masakhane, a grassroots African NLP research community, relies on volunteers to build datasets for languages like Yoruba and Shona. This participatory approach empowers communities to shape their digital futures.

Are there real-world examples of successful low-resource NLU systems?

Yes, several! In Rwanda, a chatbot built in Kinyarwanda provides agricultural advice to farmers. Similarly, healthcare apps in Hausa deliver maternal care information to Nigerian mothers in their native language. These initiatives showcase the transformative potential of inclusive AI.

How can individuals or organizations contribute to low-resource language AI?

Anyone can contribute by supporting open-source projects like Common Voice or donating to initiatives like Masakhane.

For example, a linguist might annotate text for underrepresented dialects, while tech companies can provide compute resources for training models.

What’s the future of NLU for low-resource languages?

The future lies in zero-shot learning, multimodal AI, and scalable models that adapt quickly to new languages.

Imagine a system that understands indigenous Mayan languages without direct training, enabling entire communities to access modern tools in their mother tongue.

How do multilingual models support low-resource languages?

Multilingual models like mBERT or XLM-R share knowledge across languages, benefiting those with limited data.

For example, patterns learned from Hindi can enhance performance in Bhojpuri, as both languages share grammatical structures. These models act as a bridge, amplifying the reach of smaller languages.

What ethical concerns arise in scaling NLU for low-resource languages?

Ethical concerns include data privacy, ownership, and fair representation.

For instance, collecting conversational data from indigenous communities must respect their cultural rights and provide tangible benefits in return. Missteps can lead to exploitation or loss of trust.

Can AI help preserve endangered languages?

Absolutely! AI can document and digitize endangered languages, making them more accessible and usable.

For instance, researchers are using speech recognition tools to archive spoken Ojibwe, preserving it for future generations while enabling applications like translation or education tools.

What’s the role of governments in supporting low-resource languages?

Governments can invest in language digitization, promote local research, and mandate inclusivity in AI policies.

For example, India’s government supports projects for regional languages like Tamil and Marathi, enabling broader adoption of AI in education and public services.

Are there challenges in ensuring fair representation in multilingual AI systems?

Yes. Multilingual models are often skewed toward dominant languages, which overshadow smaller ones.

For example, when a model is trained on both English and Māori, it may perform poorly in Māori if the dataset is heavily skewed toward English. Balanced representation during training and evaluation is essential to prevent this bias.


How does the lack of a writing system impact low-resource NLU?

Languages without a standardized writing system, like some indigenous tongues, pose unique challenges for NLU.

Speech-based approaches, such as creating ASR (Automatic Speech Recognition) tools, become crucial. For example, researchers working with the Maasai language use oral data to train models.

Can local dialects within low-resource languages also benefit from AI?

Yes, dialects can gain visibility and functionality through inclusive NLU efforts.

For instance, tools built for Arabic often focus on Modern Standard Arabic, but dialectal variations like Egyptian or Levantine Arabic also need specific attention. AI can help bridge the gap between dialects and their formal counterparts.

How can businesses benefit from investing in low-resource language NLU?

Businesses can tap into underserved markets and foster brand loyalty by supporting low-resource languages.

For instance, a tech company offering customer service in Tigrinya could gain trust in Ethiopia’s growing digital economy. This investment creates social impact and business opportunities.

Are there success stories of collaboration for low-resource NLU?

Yes, collaborative projects like AI4D Africa have developed tools for languages such as Luganda and Wolof.

Similarly, Mozilla’s Common Voice gathers voice samples globally, enabling speech recognition for smaller languages like Basque. These stories underline the power of partnerships in driving change.

Resources

Datasets for Low-Resource Languages

- ELRC-SHARE Repository: Provides multilingual datasets for European and low-resource languages.
- Linguistic Data Consortium (LDC): Hosts various text and speech datasets, including low-resource languages.
- AI4D Africa Dataset Repository: Specializes in African language resources for NLP research.

Multilingual Model Solutions

- mBERT and XLM-R: Pretrained models supporting multiple languages, often useful for low-resource cases.
- IndicNLP Library: Tools for Indian languages, including tokenization and transliteration.