What is Transfer Learning and Why Does It Matter?

Definition and Basic Concept

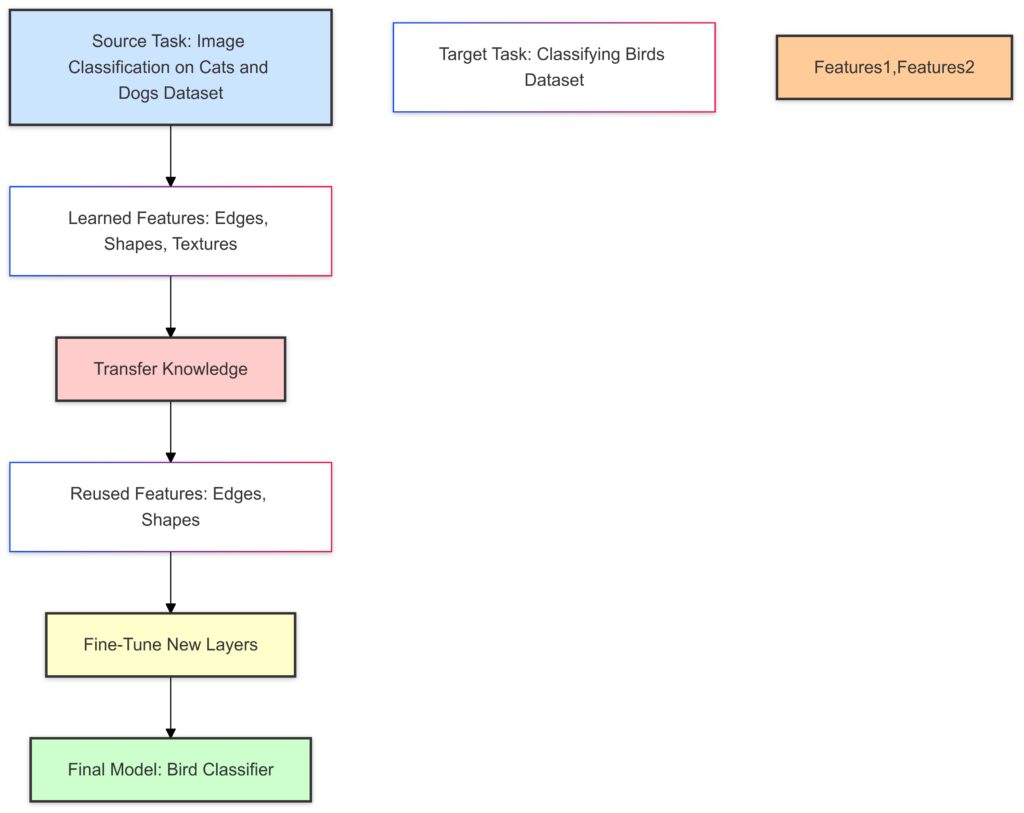

Transfer learning means taking a model pre-trained on one task and adapting it to a new one. Instead of training from scratch, you reuse the knowledge the model learned on a different, often much larger dataset. Think of a skilled chef moving to a new kitchen: the expertise is already there, and only the tools take some getting used to.

This approach is particularly useful when you lack the data or resources to build a model from the ground up.

Historical Evolution of Transfer Learning

Transfer learning isn’t new but has gained momentum with the rise of deep learning. Early forms existed in traditional machine learning, like feature extraction. Today, advanced architectures like convolutional neural networks (CNNs) and transformers have transformed how transfer learning is applied.

Common Scenarios for Applying Transfer Learning

Transfer learning shines in areas where labeled data is scarce. For example:

- Healthcare: Training a medical imaging model with limited MRI scans.

- Natural Language Processing (NLP): Adapting language models for domain-specific tasks.

- Retail: Enhancing product recommendation systems with sparse user data.

How Transfer Learning Overcomes Small Dataset Challenges

Feature Reuse and Knowledge Transfer

At its core, transfer learning reuses features learned by a model on one dataset and applies them to another. These features can include patterns, edges, and embeddings—depending on the task. This reuse ensures small datasets can leverage patterns from larger, diverse datasets.
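A minimal sketch of this reuse in plain Python, with no ML framework assumed. `PRETRAINED_W` is a made-up stand-in for weights learned on a large source dataset, and the perceptron-style head is just one simple choice of classifier, picked because it is easy to follow by hand. Only the head is trained on the four target examples; the feature extractor is never updated.

```python
# Hypothetical "pre-trained" weights. In practice these would come from a model
# trained on a large source dataset. They stay frozen throughout.
PRETRAINED_W = [[1.0, 1.0], [1.0, -1.0]]

def extract_features(x):
    """Frozen feature extractor: a fixed linear map followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]

def train_head(samples, labels, epochs=10):
    """Train only a perceptron head on top of the frozen features."""
    feats = [extract_features(x) for x in samples]
    w, b = [0.0] * len(feats[0]), 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            pred = 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0
            step = y - pred  # 0 when correct, +1 or -1 when wrong
            w = [wi + step * fi for wi, fi in zip(w, f)]
            b += step
    return w, b

def predict(x, w, b):
    """Classify a raw input by passing it through the frozen features and the head."""
    f = extract_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0

# A tiny target dataset: four labeled examples (values invented for illustration).
X = [[2.0, 1.0], [3.0, 0.5], [0.5, 2.0], [1.0, 3.0]]
y = [1, 1, 0, 0]
w, b = train_head(X, y)
```

After training, `[predict(x, w, b) for x in X]` reproduces the labels in `y`, while `PRETRAINED_W` is exactly what it was before.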

Reducing Computational and Time Costs

Instead of training a deep model for hours or days, you can save time by fine-tuning pre-trained weights. This makes transfer learning a practical choice for projects with tight deadlines or limited resources.

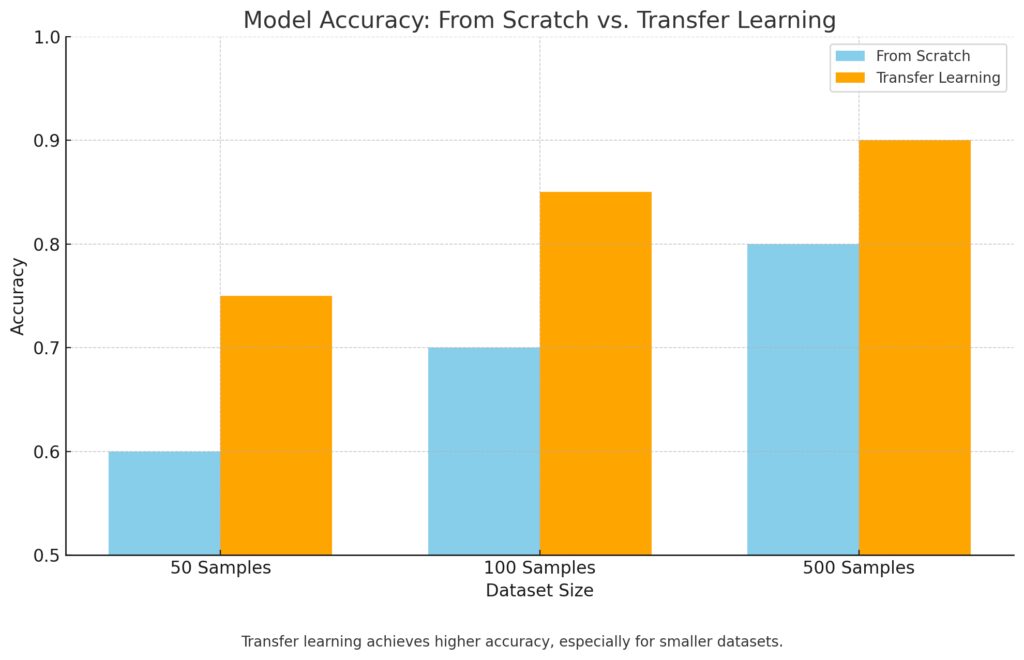

From Scratch: Accuracy improves as the dataset size increases but is consistently lower than transfer learning.

Transfer Learning: Achieves higher accuracy, particularly with smaller datasets, showcasing its effectiveness in leveraging pre-trained knowledge.

Addressing Overfitting Issues

Small datasets often lead to overfitting, where models perform well on training data but fail to generalize. Transfer learning combats this by using generalized features already refined during pre-training.

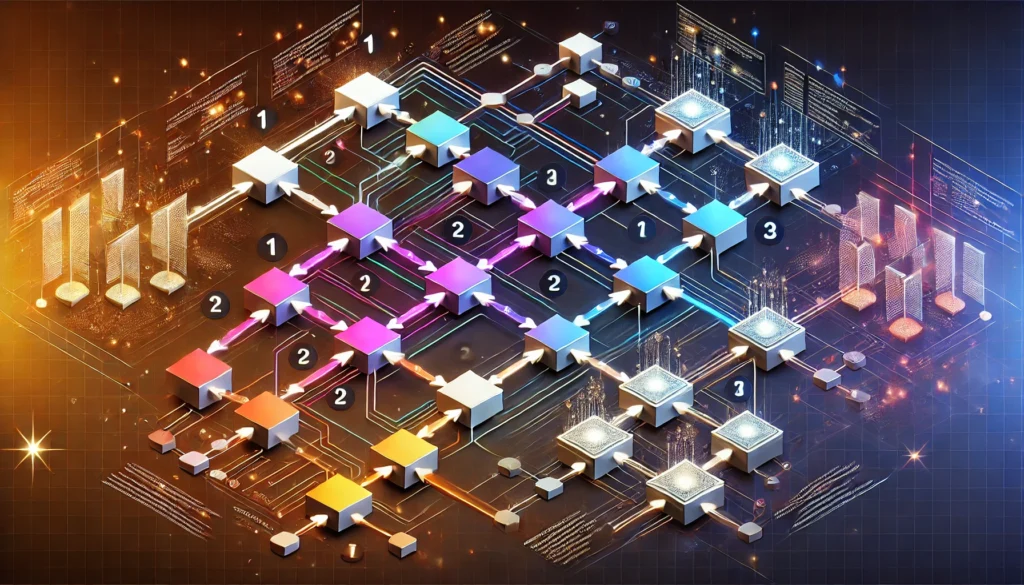

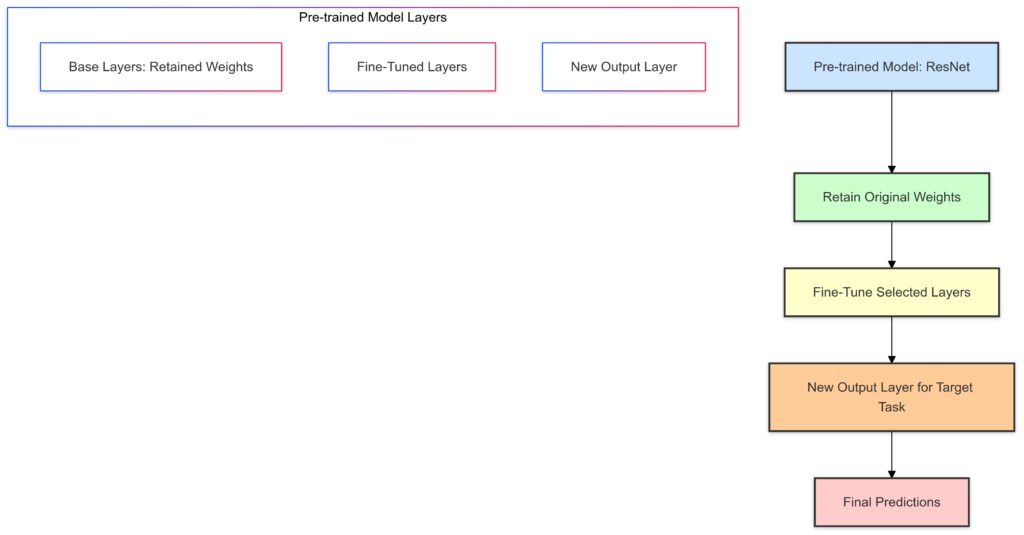

Pre-trained Models: The Core of Transfer Learning

Retained Weights: Base layers like edge and texture detectors remain unchanged.

Fine-Tuned Layers: Select higher layers are adjusted for the new task.

New Output Layer: Customized for the specific target task (e.g., classification for new categories).

Final Predictions: The output layer generates predictions for the new task.

Popular Pre-trained Models

Some widely used pre-trained models include:

- ResNet: Great for image recognition tasks.

- BERT: A powerhouse for NLP.

- GPT: A top choice for text generation and language understanding.

Each model excels in specific domains, so selecting the right one depends on your use case.

How to Select the Right Model for Your Task

When choosing a pre-trained model, consider:

- Domain compatibility: Does the pre-trained model’s data match your target problem?

- Model size: Smaller models work better on limited resources.

- Ease of fine-tuning: Some models are more beginner-friendly than others.

Advantages of Pre-trained Architectures

Pre-trained models offer:

- Robust feature sets

- Higher starting accuracy

- Reduced development effort

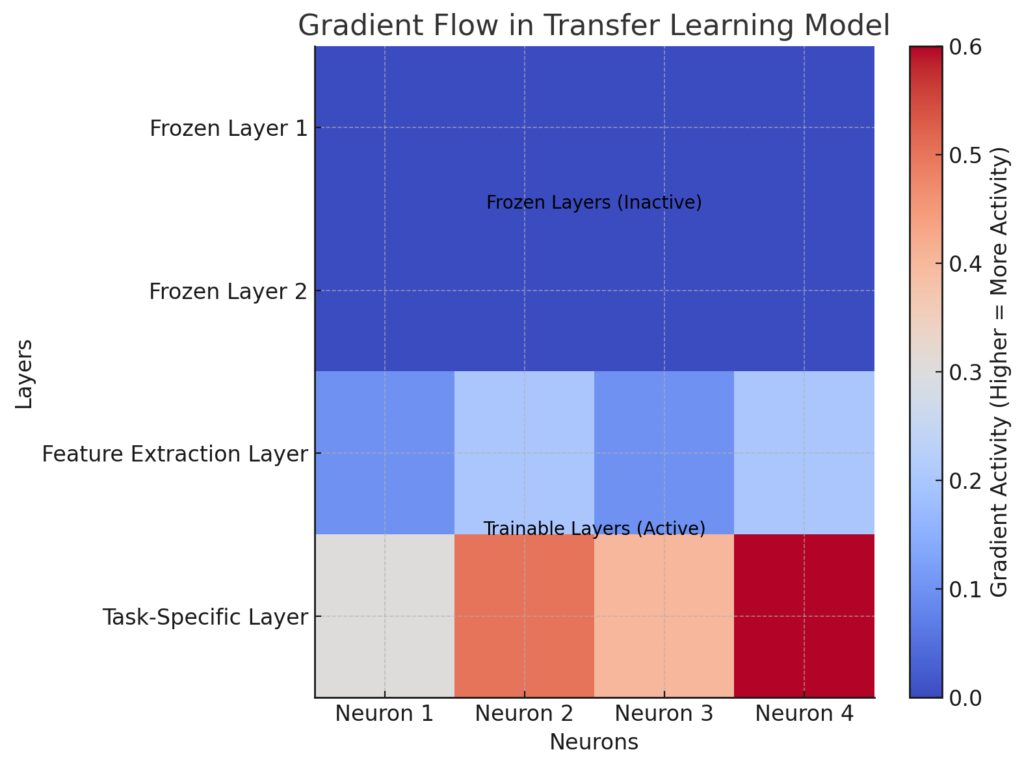

Fine-tuning: Unlocking the Power of Pre-trained Models

Frozen Layers:

- Represented with no gradient activity (dark blue).

- These layers are not updated and retain pre-trained weights, functioning as feature extractors.

Trainable Layers:

- Show higher gradient activity (shades of red).

- These layers are fine-tuned for the target task, with the task-specific layer having the highest activity.

How Fine-tuning Works on Small Datasets

Fine-tuning involves updating parts of a pre-trained model to adapt it to your dataset. For example, you might:

- Freeze the early layers to keep general features intact.

- Train the last layers for task-specific learning.
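The two steps above can be sketched without any framework. The `Layer` class, the layer names, and the gradient values below are hypothetical and chosen only for illustration; real libraries expose their own mechanisms for marking layers as non-trainable.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    name: str
    weights: list
    trainable: bool = True

def freeze_all_but_last(layers, n_trainable):
    """Mark every layer frozen except the last `n_trainable` ones."""
    cutoff = len(layers) - n_trainable
    for i, layer in enumerate(layers):
        layer.trainable = i >= cutoff

def sgd_step(layers, grads, lr=0.01):
    """Apply one gradient step, skipping frozen layers."""
    for layer, grad in zip(layers, grads):
        if layer.trainable:
            layer.weights = [w - lr * g for w, g in zip(layer.weights, grad)]

# A toy three-layer model: two "pre-trained" layers and a fresh classifier.
model = [
    Layer("conv1", [0.2, -0.1]),
    Layer("conv2", [0.5, 0.3]),
    Layer("classifier", [0.0, 0.0]),
]
freeze_all_but_last(model, n_trainable=1)
sgd_step(model, grads=[[1.0, 1.0]] * 3, lr=0.1)
```

Only `classifier` moves after the step; `conv1` and `conv2` keep their original weights because they are frozen.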

Techniques to Avoid Overfitting

To ensure fine-tuning works effectively:

- Use data augmentation to artificially expand your dataset.

- Regularize the model with dropout layers or weight decay.

- Use early stopping to avoid overtraining.
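As one illustration of the last point, early stopping can be expressed as a small rule over validation losses. This is a sketch under assumptions: the `patience` and `min_delta` parameter names are chosen here for clarity, and the loss values are made up.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the index of the epoch at which training would stop.

    Stops once the validation loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs. If that never happens,
    the last epoch index is returned.
    """
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            bad_epochs = 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch
    return len(val_losses) - 1

# Illustrative validation losses: improvement stalls after epoch 2.
losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.70]
stop_at = early_stopping_epoch(losses, patience=2)
```

Here training would stop at epoch 4, two epochs after the best loss at epoch 2. In practice you would also restore the weights saved at the best epoch.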

Balancing Frozen and Trainable Layers

The trick lies in balancing what you freeze and what you train. Too many frozen layers, and the model won’t adapt well. Too few, and you risk overfitting to your small dataset.

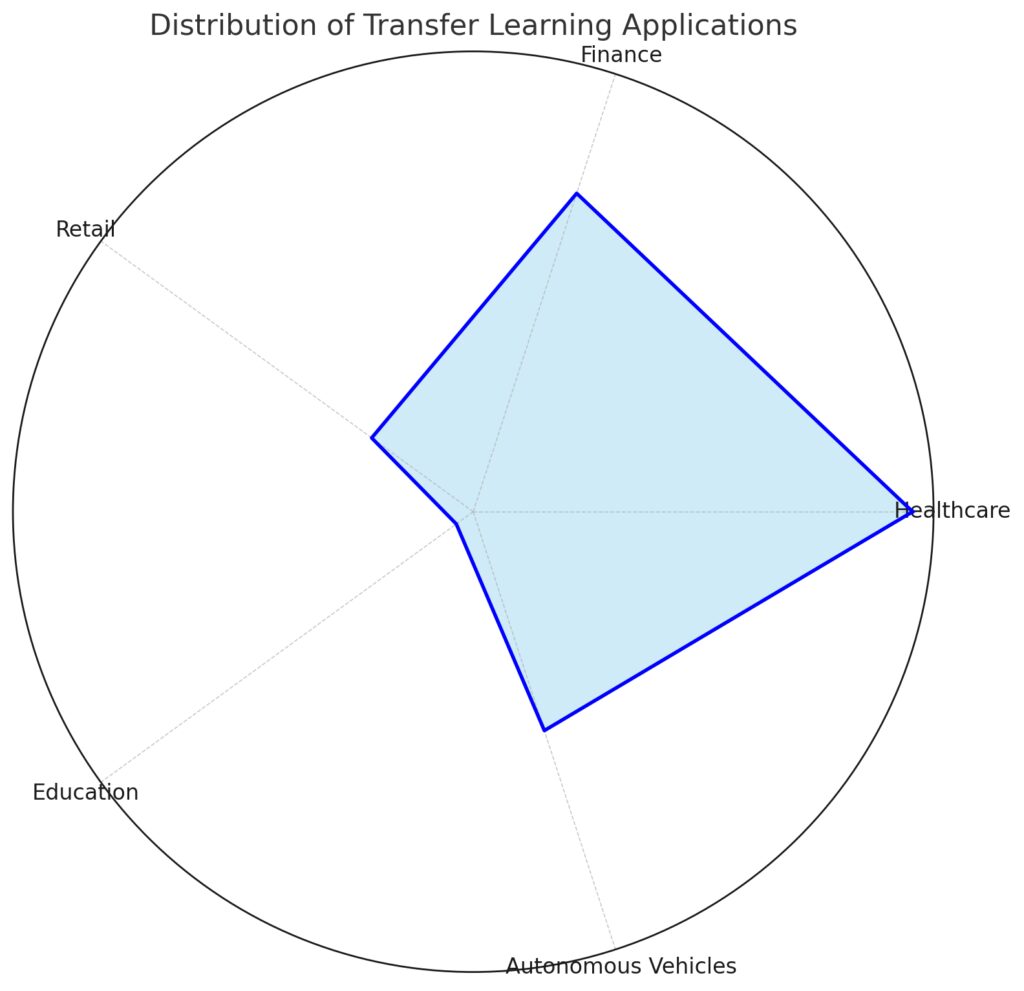

Applications of Transfer Learning for Small Datasets

Popular domains leveraging transfer learning for solving industry-specific challenges.

Real-world Examples Across Domains

Transfer learning is already changing the game in various fields:

- Medical Imaging: Detecting anomalies in CT scans.

- Customer Support: Chatbots trained for industry-specific queries.

- Environmental Monitoring: Classifying satellite images for deforestation tracking.

Image Recognition, NLP, and More

Specific tasks benefiting from transfer learning include:

- Classifying images with tools like ResNet or Inception.

- Fine-tuning BERT for sentiment analysis or question answering.

Use Cases for Startups and Researchers

Startups can use transfer learning to innovate without a large data budget, while researchers can explore niche problems like rare disease detection or low-resource languages.

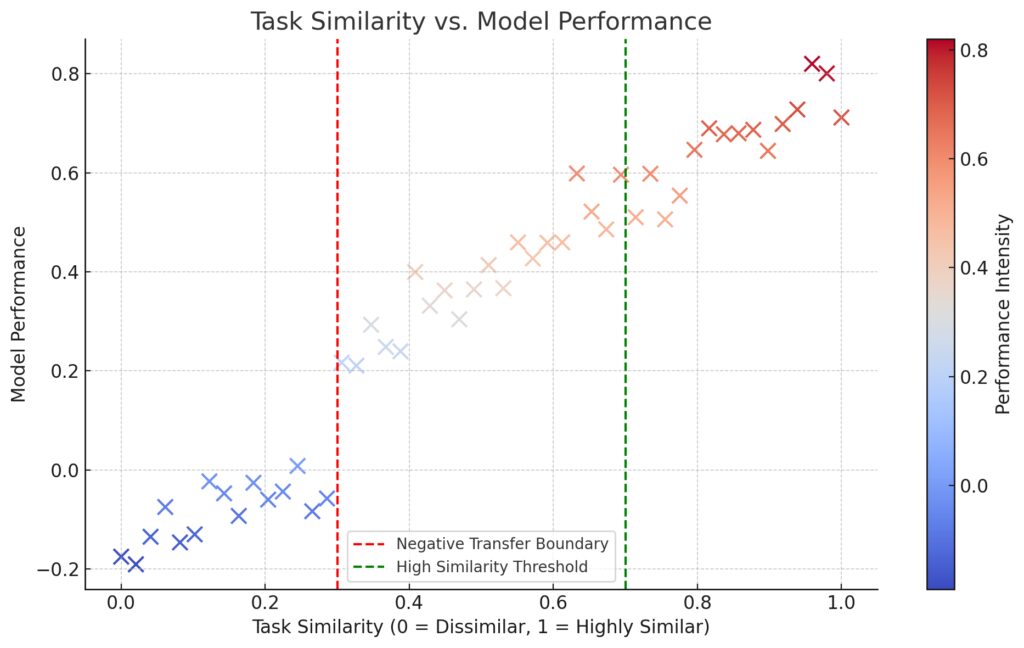

Challenges and Limitations of Transfer Learning

High Similarity: On the right side (similarity > 0.7), performance improves significantly due to effective knowledge transfer.

Negative Transfer: On the left side (similarity < 0.3), performance drops due to dissimilar tasks causing negative transfer.

Key Annotations:

Red dashed line at similarity = 0.3 marks the threshold for negative transfer.

Green dashed line at similarity = 0.7 indicates the threshold for high task similarity.

Dependency on Pre-trained Model Quality

The success of transfer learning hinges on the quality of the pre-trained model. If the source model is poorly trained or irrelevant to the target task, it may fail to deliver meaningful improvements.

For instance, using a model trained on general image data might not be suitable for medical imaging unless the datasets share common characteristics.

Risk of Negative Transfer

Sometimes, knowledge from the source domain can hinder rather than help. This phenomenon, called negative transfer, occurs when the source and target tasks are too dissimilar. A model trained on street photography may struggle to adapt to aerial images.

Computational Overhead

Fine-tuning large models like BERT or GPT can be resource-intensive. Even with pre-trained weights, hardware limitations and memory requirements might challenge small teams. Cloud platforms like Google Colab or Amazon SageMaker can help mitigate this issue.

Tools and Frameworks for Transfer Learning

Popular Frameworks

Transfer learning has become more accessible thanks to user-friendly libraries, including:

- TensorFlow and Keras: Easy APIs for building transfer learning pipelines.

- PyTorch: A favorite for research and custom implementations.

- Hugging Face Transformers: Ideal for NLP tasks with models like BERT and GPT.

Integrating Pre-trained Models

Most frameworks allow you to:

- Import pre-trained weights directly.

- Modify architectures to suit your needs.

- Fine-tune selectively to balance speed and accuracy.

Tools for Deployment

Once trained, models can be deployed using tools like TensorFlow Lite or ONNX Runtime for mobile and edge devices, enabling practical applications in low-power environments.

Optimizing Transfer Learning for Your Dataset

Curate and Preprocess Data

The quality of your target dataset plays a significant role in transfer learning success. Even with a small dataset:

- Remove noise: Ensure clean, labeled data.

- Augment wisely: Use techniques like cropping, flipping, or rotating images to expand your dataset artificially.
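A minimal sketch of the augmentations named above, treating an image as a nested list of pixel values. This representation and the helper names are assumptions for illustration; an image library would normally handle this.

```python
def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def crop(img, top, left, height, width):
    """Cut out a height x width window starting at (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]

def augment(img):
    """Return the original image plus simple label-preserving variants."""
    return [img, hflip(img), rotate90(img), hflip(rotate90(img))]

image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
variants = augment(image)
```

Each original image yields four training examples here. Whether a flip or rotation preserves the label depends on the task, so choose transformations that make sense for your data.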

Hyperparameter Tuning

Adjust key hyperparameters like:

- Learning rate: Use a lower learning rate for pre-trained layers than for newly added ones, so fine-tuning does not overwrite useful pre-trained features.

- Batch size: Experiment with smaller sizes for memory-constrained systems.
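One way to picture the learning-rate advice is to give each layer its own rate, shrinking it the further the layer sits from the output. The function below is a sketch; the layer names, `head_lr`, and `decay` values are assumptions rather than recommended settings.

```python
def layerwise_learning_rates(layer_names, head_lr=1e-3, decay=0.5):
    """Give the last layer `head_lr` and multiply by `decay` for each layer further back."""
    n = len(layer_names)
    return {name: head_lr * decay ** (n - 1 - i) for i, name in enumerate(layer_names)}

rates = layerwise_learning_rates(
    ["block1", "block2", "block3", "head"], head_lr=0.01, decay=0.1
)
for name, lr in rates.items():
    print(f"{name}: {lr:.0e}")
```

The new head learns fastest, while the earliest pre-trained block changes only slightly. In PyTorch, for example, per-layer rates like these would typically be passed to the optimizer as parameter groups.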

Experiment with Transfer Strategies

Transfer learning isn’t one-size-fits-all. Test multiple approaches, such as:

- Training only the classifier head.

- Fine-tuning the entire model in stages.
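Staged fine-tuning can be planned as a simple schedule: start with the head only, then unfreeze one more layer per stage, working backwards from the output. The sketch below assumes hypothetical layer names and is one possible schedule among many.

```python
def unfreezing_schedule(layer_names, stages):
    """Return, for each stage, the names of the layers that are trainable.

    Stage 0 trains only the last layer (the head). Each later stage unfreezes
    one more layer, moving from the output towards the input.
    """
    plan = []
    for stage in range(stages):
        n_trainable = min(stage + 1, len(layer_names))
        plan.append(layer_names[len(layer_names) - n_trainable:])
    return plan

plan = unfreezing_schedule(["block1", "block2", "block3", "head"], stages=3)
for stage, trainable in enumerate(plan):
    print(f"stage {stage}: train {trainable}")
```

Comparing validation accuracy after each stage shows whether unfreezing more layers actually helps on your dataset.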

Comparing Transfer Learning with Traditional Training

Efficiency and Speed

Training from scratch demands extensive time, data, and computational resources. Transfer learning dramatically reduces these needs by starting with a pre-trained foundation.

Performance on Small Datasets

Models trained from scratch often perform poorly on limited data, whereas transfer learning achieves higher accuracy by leveraging generalized features.

Resource Accessibility

For startups or independent researchers, pre-trained models lower barriers to entry. Traditional methods, on the other hand, often remain out of reach without substantial funding.

The Future of Transfer Learning

Key trends shaping the future of transfer learning across industries.

New Model Architectures

Emerging architectures, like Vision Transformers (ViT), are extending the capabilities of transfer learning. When pre-trained on large image collections, these models can transfer well to visual tasks with smaller datasets.

Multimodal Transfer Learning

Future models may combine multiple data types (e.g., text and images) to deliver richer insights. Imagine using a single model to analyze product descriptions and images simultaneously.

Democratization of AI

With cloud-based platforms and open-source tools, transfer learning is empowering more people to solve complex problems. It’s no longer exclusive to big tech companies or well-funded labs.

Customizing Transfer Learning for Unique Domains

Domain-Specific Challenges

Every domain comes with its quirks:

- Healthcare: Data is sensitive and hard to share.

- Finance: Models need to handle fast-changing trends.

- Retail: Seasonal and location-specific variations must be factored in.

Transfer learning can help tackle these challenges by reusing models trained on similar domains or tasks, adapting them to specific contexts.

Leveraging Domain Knowledge

Incorporating domain-specific insights can enhance transfer learning outcomes. For instance, tailoring the preprocessing pipeline to account for domain idiosyncrasies—like prioritizing high-contrast images in radiology—can make a significant difference.

Case Study: NLP in Legal Tech

A legal tech company might fine-tune BERT to understand contracts, adapting it with carefully curated examples from legal jargon and templates.

Ethical Considerations in Transfer Learning

Bias in Pre-trained Models

Transfer learning inherits biases from its pre-trained models. A language model trained on biased internet data can perpetuate stereotypes. Detecting and mitigating such biases is crucial.

Data Privacy

When working in sensitive fields like healthcare, consider how the data used for pre-training and fine-tuning was collected and whether it may be used for your purpose. Ensuring compliance with regulations like HIPAA or GDPR is essential.

Accountability and Transparency

Models adapted through transfer learning should remain interpretable. Explainable AI (XAI) tools can provide insights into how decisions are made, building trust in applications like loan approvals or diagnostic tools.

Cost-Effective Strategies for Transfer Learning

Cloud-Based Solutions

Platforms like Google Cloud AI and Amazon SageMaker provide affordable compute power for fine-tuning large models. These pay-as-you-go services make high-quality transfer learning accessible to smaller teams.

Open-Source Alternatives

Open-source initiatives like Hugging Face, PyTorch Hub, and FastAI provide robust pre-trained models and tools, minimizing costs for both training and experimentation.

Reusing Industry Models

Many domains have pre-trained models tailored to their use cases, such as BioBERT for biomedical text or FinBERT for financial NLP, reducing the need to start from scratch.

When NOT to Use Transfer Learning

Lack of Similarity Between Domains

Transfer learning struggles when the source and target datasets share little overlap. In such cases, training from scratch or using simpler techniques may be more effective.

Minimal Dataset Size

Even with transfer learning, some tasks may fail if the target dataset is too small to provide meaningful fine-tuning. Data augmentation or synthetic data generation may offer better alternatives.

Resource Constraints

Fine-tuning large models can still be costly in terms of compute power and storage. If resources are limited, lightweight architectures like MobileNet might be a better fit.

Key Trends in Transfer Learning Research

Zero-Shot and Few-Shot Learning

These techniques push transfer learning further, enabling models to generalize to new tasks with no task-specific examples (zero-shot) or only a handful (few-shot). OpenAI’s CLIP is an example of zero-shot image classification.

Federated Transfer Learning

Combining transfer learning with federated learning lets models improve without organizations directly sharing sensitive data, a win for privacy-focused industries.

Expansion Across Modalities

The integration of text, images, and audio into unified models opens doors for multimodal learning, where a single system can tackle diverse inputs simultaneously.

Conclusion: Harnessing Transfer Learning for Small Datasets

Transfer learning has revolutionized the way we approach machine learning, especially when faced with small datasets. By leveraging the knowledge embedded in pre-trained models, you can bypass the challenges of limited data, reduce training time, and achieve impressive accuracy.

From image recognition to NLP and even specialized domains like healthcare or finance, transfer learning empowers individuals and organizations to innovate without requiring massive data resources. However, as with any technology, understanding its limitations—such as the risk of negative transfer or the potential for inherited biases—is key to success.

As the field evolves with advancements in few-shot learning, federated transfer learning, and multimodal AI, the accessibility and versatility of transfer learning will only grow. By staying informed and adopting best practices, you can unlock its full potential and stay ahead in the ever-changing AI landscape.

FAQs

Can transfer learning work with extremely small datasets?

Often, yes. Transfer learning is well suited to small datasets: by leveraging pre-trained models, you reuse previously learned features, which reduces the data needed for training. For instance, in NLP, fine-tuning a BERT model on just a few hundred legal documents can yield useful results for contract analysis.

How do I choose the right pre-trained model for my task?

The right model depends on your specific use case and domain. For image-based tasks, models like ResNet or EfficientNet are popular. For text-based tasks, models like BERT or GPT work well. If you’re working with medical imaging, domain-specific models like CheXNet (for chest X-rays) may be ideal.

What is the difference between feature extraction and fine-tuning in transfer learning?

- Feature extraction involves freezing the pre-trained model’s layers and using them to extract features from your dataset. Only the final layer (classifier) is trained.

- Fine-tuning updates the weights of some or all layers in the pre-trained model to adapt it more closely to your specific task.

For instance, using ResNet for facial recognition in a small dataset could involve freezing the lower layers (edges and patterns) while fine-tuning the higher ones (face-specific features).

Are there risks associated with transfer learning?

Yes, transfer learning can lead to negative transfer if the source and target tasks are too dissimilar. For example, using a model trained on animal images for satellite image analysis might reduce performance. Additionally, pre-trained models might inherit biases present in their original datasets, requiring careful evaluation.

Can transfer learning be applied in real-time applications?

Absolutely! Many real-time systems rely on transfer learning. For example, smartphone AI cameras use pre-trained models to detect faces or optimize scenes on-the-fly. Lightweight models like MobileNet are often deployed for such tasks due to their efficiency.

How does transfer learning compare to training a model from scratch?

Training from scratch requires vast amounts of data, time, and computational resources. Transfer learning starts with a strong foundation, cutting training time dramatically and achieving higher accuracy with smaller datasets. For example, training an NLP chatbot from scratch might take weeks, but fine-tuning GPT could take just hours.

What industries benefit most from transfer learning?

Transfer learning has a wide range of applications:

- Healthcare: Diagnosing diseases using medical scans with limited training data.

- Finance: Fraud detection using sparse transaction data.

- Education: Language models tailored for low-resource languages.

A great example is how startups use transfer learning to build personalized recommendation engines without requiring Netflix-scale datasets.

What tools should I use for transfer learning?

Popular tools include:

- TensorFlow/Keras: Great for beginners with simple APIs.

- PyTorch: Preferred for research and flexibility.

- Hugging Face Transformers: Ideal for NLP with pre-trained models like BERT or RoBERTa.

If you’re working on vision tasks, libraries like torchvision or FastAI provide user-friendly access to pre-trained models and transfer learning techniques.

Can transfer learning handle multi-task learning?

Yes! Transfer learning can be adapted for multi-task learning, where a single model is trained to perform multiple tasks simultaneously. For example, a language model like GPT can handle translation, summarization, and text classification within one framework. This is achieved by fine-tuning the model with datasets that include all target tasks.

How can I prevent overfitting in transfer learning?

Overfitting can be managed through:

- Data augmentation: Adding diversity by flipping, rotating, or cropping images.

- Dropout layers: Randomly disabling some neurons during training to improve generalization.

- Regularization: Techniques like weight decay to prevent overly complex models.

For example, if you’re using ResNet to classify flowers from 100 images, you could augment the dataset by adding synthetic variations.
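To make the dropout item concrete, here is a sketch of "inverted" dropout on a list of activations. The function signature and the `rng` parameter are assumptions for illustration; frameworks provide dropout as a built-in layer.

```python
import random

def dropout(activations, p=0.5, training=True, rng=random):
    """Inverted dropout: zero each value with probability p and rescale the rest.

    Rescaling by 1 / (1 - p) keeps the expected activation unchanged, so
    nothing special is needed at inference time (training=False).
    """
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # seeded so the run is repeatable
out = dropout([1.0, 2.0, 3.0, 4.0], p=0.5, rng=rng)
```

Each output is either zero or the input scaled by two, and calling `dropout(..., training=False)` returns the activations untouched.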

Is transfer learning suitable for time-series data?

Yes, transfer learning can work with time-series data, although it’s less common than in NLP or computer vision. Architectures like InceptionTime or Transformer-based models can be pre-trained on one collection of series and then adapted for tasks like forecasting or anomaly detection.

For example, you might adapt a weather prediction model trained on global data to forecast microclimates in a specific region.

What’s the difference between zero-shot learning and transfer learning?

- Transfer learning requires some labeled target data for fine-tuning or training specific layers.

- Zero-shot learning doesn’t need labeled target data—it generalizes to new tasks or domains based solely on its existing knowledge.

An example of zero-shot learning is CLIP, which can classify images into categories it was never explicitly trained on by matching each image against text descriptions of the candidate labels.
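The matching step behind this kind of zero-shot classification can be sketched as a nearest-neighbor search by cosine similarity. The three-dimensional embeddings below are made up; a real model would produce the image and text embeddings, and nothing here calls CLIP itself.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_embedding, label_embeddings):
    """Pick the label whose text embedding is closest to the image embedding."""
    return max(
        label_embeddings,
        key=lambda label: cosine(image_embedding, label_embeddings[label]),
    )

# Hypothetical embeddings for three candidate label prompts and one image.
label_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.2, 0.8, 0.1]
prediction = zero_shot_classify(image_embedding, label_embeddings)
```

No labeled target examples are involved: adding a new category only requires adding a new text prompt and its embedding.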

Are there any tools for explainable AI in transfer learning?

Yes, several tools help interpret and explain models adapted through transfer learning:

- SHAP (SHapley Additive exPlanations): Identifies which features influenced predictions.

- LIME (Local Interpretable Model-agnostic Explanations): Provides insights into individual predictions.

- Grad-CAM: Visualizes which parts of an image the model focused on, especially useful for computer vision tasks.

For example, Grad-CAM can highlight tumor regions in medical imaging tasks adapted via transfer learning.

Can transfer learning be used with reinforcement learning?

Yes, transfer learning can enhance reinforcement learning (RL) by initializing an RL agent with knowledge from a pre-trained model. For instance, a game-playing AI trained on Atari games might be fine-tuned to play a new, similar game with fewer training episodes.

How is transfer learning different from meta-learning?

- Transfer learning: Focuses on reusing knowledge from one task for another related task.

- Meta-learning: “Learning to learn,” where the goal is to train a model to adapt quickly to new tasks with minimal data.

For example, a meta-learning model might learn how to optimize itself for unseen datasets, while transfer learning adapts an existing model like ResNet for specific tasks.

How much data is enough for fine-tuning in transfer learning?

The amount of data required depends on the similarity between the pre-trained model’s source task and your target task:

- Very similar tasks: A few hundred examples might suffice.

- Moderately similar tasks: A few thousand labeled examples may be necessary.

- Very different tasks: Consider a larger dataset or using techniques like synthetic data generation.

For instance, fine-tuning a model like BERT for sentiment analysis might only require 500 labeled reviews, while training for a new language entirely would need more data.

Can transfer learning work with imbalanced datasets?

Yes, but it requires care. Techniques like class weighting, oversampling, or undersampling can help address imbalances. For example, if you’re training a medical diagnosis model on imbalanced data (e.g., 90% healthy, 10% sick), you could oversample the minority class to improve predictions.
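A sketch of two of these techniques on the 90/10 example, using only the standard library. The weighting formula, `n_samples / (n_classes * class_count)`, is one common heuristic, and the sample IDs are placeholders rather than real data.

```python
import random
from collections import Counter

def class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in counts.items()}

def oversample(samples, labels, seed=0):
    """Duplicate random minority-class examples until every class matches the largest."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_x, out_y = list(samples), list(labels)
    for cls, c in counts.items():
        pool = [x for x, y in zip(samples, labels) if y == cls]
        for _ in range(target - c):
            out_x.append(rng.choice(pool))
            out_y.append(cls)
    return out_x, out_y

labels = ["healthy"] * 90 + ["sick"] * 10
samples = list(range(100))  # placeholder IDs standing in for real examples
weights = class_weights(labels)
balanced_x, balanced_y = oversample(samples, labels)
```

With these numbers the minority class gets a weight of 5.0 and the oversampled set holds 90 examples of each class. Oversampling should be applied to the training split only, so that duplicated examples never leak into validation or test data.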

What are some practical examples of transfer learning in startups?

- Healthcare startups: Detecting early-stage diseases with limited labeled medical data.

- Retail startups: Creating personalized recommendations using models pre-trained on large e-commerce datasets.

- EdTech startups: Developing language-learning tools by fine-tuning NLP models on regional dialects.

For example, a healthcare startup might use a pre-trained model like CheXNet and adapt it to detect tuberculosis from X-rays.

Resources

Tutorials and Documentation

- TensorFlow Transfer Learning Guide

- Comprehensive tutorials on building transfer learning models for vision and NLP tasks.

- Official resource: TensorFlow Transfer Learning

- PyTorch Transfer Learning Recipes

- Step-by-step guides to implement transfer learning for tasks like image classification and NLP.

- Explore: PyTorch Official Tutorials

- Hugging Face Transformers

- Detailed guides for NLP tasks using state-of-the-art models like BERT, RoBERTa, and GPT.

- Visit: Hugging Face Docs

- FastAI Course

- A beginner-friendly course covering transfer learning in vision and text, with practical examples.

- Enroll here: FastAI Course

Books

- “Deep Learning for Natural Language Processing” by Palash Goyal

- A beginner-friendly resource with a focus on NLP transfer learning techniques like BERT.

- Available on Amazon

- “Deep Learning with Python” by François Chollet

- Explains transfer learning in the context of Keras and TensorFlow. Ideal for hands-on learners.

- Check out Deep Learning with Python

- “Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow” by Aurélien Géron

- Covers transfer learning with examples using TensorFlow and Keras.

- Available on O’Reilly

Online Courses

- Coursera: Deep Learning Specialization (by Andrew Ng)

- Offers a solid foundation in transfer learning as part of its deep learning modules.

- Start here: Deep Learning Specialization

- Udemy: Transfer Learning with TensorFlow 2.0

- Focuses on applying transfer learning to real-world problems.

- Enroll at: Udemy Transfer Learning

- Kaggle Courses: Intro to Deep Learning

- Practical tutorials on transfer learning with datasets and challenges.

- Learn at: Kaggle Courses

Research Papers

- “A Survey on Transfer Learning” by Pan and Yang

- A foundational paper covering the theory and applications of transfer learning.

- Read here: Survey on Transfer Learning (arXiv)

- “ImageNet Classification with Deep Convolutional Neural Networks” by Alex Krizhevsky et al.

- Introduced AlexNet, whose ImageNet-trained weights became an early, widely reused starting point for transfer learning.

- Available at: ImageNet Classification

- “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding”

- Key paper explaining BERT’s architecture and its transfer learning applications.

- Access at: BERT Paper (arXiv)

Tools and Libraries

- Hugging Face

- Offers pre-trained models for NLP tasks and an easy-to-use library for transfer learning.

- Visit: Hugging Face

- TensorFlow Hub

- A repository of pre-trained models for vision, text, and audio tasks.

- Explore: TensorFlow Hub

- PyTorch Hub

- Contains a library of pre-trained models ready for transfer learning applications.

- Check out: PyTorch Hub

- Model Zoo by OpenCV

- Pre-trained models optimized for deployment on edge devices.

- Visit: OpenCV Zoo

Communities and Forums

- Reddit: Machine Learning Subreddit

- Engage with practitioners and get practical advice on transfer learning.

- Join here: r/MachineLearning

- Kaggle Community

- Forums and datasets for transfer learning projects with collaborative support.

- Visit: Kaggle

- Stack Overflow

- Ask questions and get solutions for transfer learning challenges in TensorFlow, PyTorch, and more.

- Explore: Stack Overflow