Spiking Neural Networks (SNNs) are creating a buzz in the world of artificial intelligence and neuromorphic engineering.

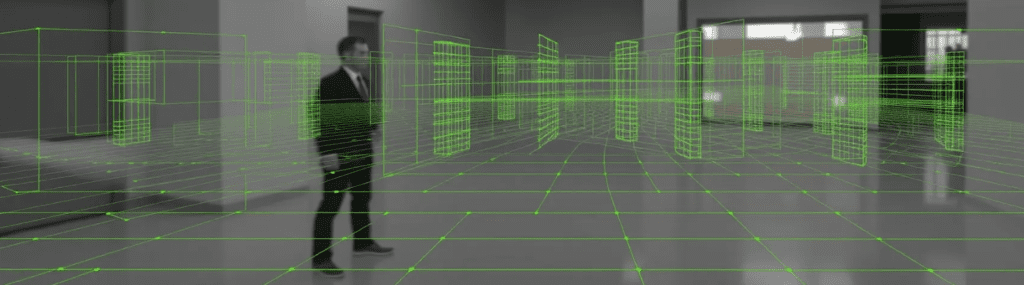

For real-time computing in many applications, especially vision systems, SNNs offer a new way to process information that mimics the brain’s ability to handle dynamic sensory inputs. By enabling event-based processing, SNNs are changing how machines perceive and react to their environments, allowing for faster and more efficient real-time perception.

In this article, we’ll explore how SNNs in vision systems are driving advances in event-based processing, the benefits they offer, and why they are a game-changer for real-time perception.

What are Spiking Neural Networks (SNNs)?

Understanding the Basics of SNNs

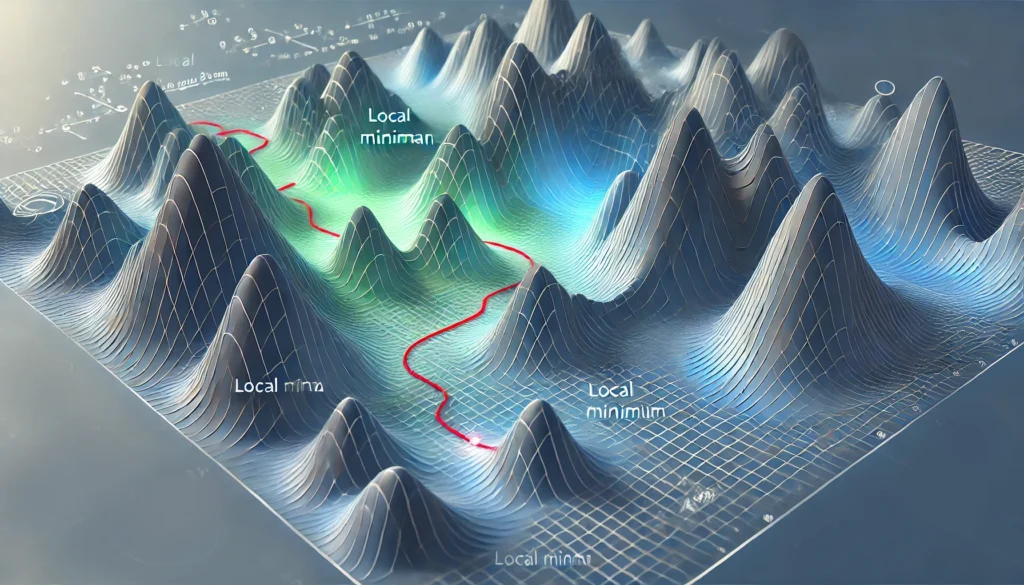

Spiking Neural Networks are inspired by the way biological neurons communicate. Unlike traditional neural networks, which pass continuous values between layers, SNNs represent information as spikes: short, discrete pulses similar to the electrical impulses of neurons in the brain. A neuron fires a spike only when its internal state (its membrane potential) crosses a threshold, so computation happens sparsely. This sparsity can make SNNs considerably more energy-efficient than conventional artificial neural networks (ANNs), particularly on hardware designed for spikes.

This neuron-like behavior gives SNNs the ability to perform event-based processing, meaning they can detect and respond to changes or “events” in the input data as they occur, rather than processing data in static frames like traditional methods.
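The threshold behavior described above can be sketched with a leaky integrate-and-fire (LIF) neuron, one of the simplest spiking neuron models. This is a minimal illustration, not a reference implementation: the function name `simulate_lif` and the parameter values (`threshold`, `leak`, `v_reset`) are assumptions chosen for the example.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, v_reset=0.0):
    """Simulate a discrete-time leaky integrate-and-fire neuron.

    input_current: one input value per time step.
    Returns a list of 0/1 spikes, one per time step.
    """
    v = v_reset  # membrane potential
    spikes = []
    for current in input_current:
        # Old potential leaks away a little, new input is integrated.
        v = leak * v + current
        if v >= threshold:
            spikes.append(1)  # threshold crossed: emit a spike
            v = v_reset       # and reset the potential
        else:
            spikes.append(0)  # below threshold: stay silent
    return spikes


if __name__ == "__main__":
    # Weak input never reaches the threshold, so the neuron stays silent.
    print(simulate_lif([0.05] * 10))  # [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]
    # Stronger input accumulates and produces a spike every third step.
    print(simulate_lif([0.4] * 10))   # [0, 0, 1, 0, 0, 1, 0, 0, 1, 0]
```

With weak input the potential settles below the threshold and nothing is emitted; only input strong enough to accumulate produces output, which is the sparsity that SNNs exploit.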

How SNNs Differ from Traditional Neural Networks

Traditional neural networks use dense layers of neurons that process every input in full at every step, frame by frame, whether or not anything has changed. This approach can be both energy-intensive and slow, especially in real-time, dynamic settings such as self-driving cars, drones, or robotics.

In contrast, SNNs only compute when there is something to compute, which makes them much more efficient in scenarios that require immediate reactions based on changes in the environment.
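To make "only compute when there is something to compute" concrete, here is a small sketch that counts the operations performed by a dense layer and by an event-driven layer on the same input. The function names and the toy weights are hypothetical, and counting operations is only a rough stand-in for energy use.

```python
def dense_layer(inputs, weights):
    """Frame-style update: every input contributes, active or not."""
    ops = 0
    outputs = [0.0] * len(weights[0])
    for i, x in enumerate(inputs):
        for j, w in enumerate(weights[i]):
            outputs[j] += x * w  # multiply-accumulate, even when x is 0
            ops += 1
    return outputs, ops


def event_driven_layer(spikes, weights):
    """Spike-style update: only inputs that spiked add their weights."""
    ops = 0
    outputs = [0.0] * len(weights[0])
    for i, spiked in enumerate(spikes):
        if not spiked:
            continue  # silent input: no work at all
        for j, w in enumerate(weights[i]):
            outputs[j] += w  # a spike is binary, so a plain add suffices
            ops += 1
    return outputs, ops


if __name__ == "__main__":
    weights = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
    spikes = [0, 1, 0, 0]  # only one of four inputs is active
    print(dense_layer(spikes, weights))         # ([0.3, 0.4], 8)
    print(event_driven_layer(spikes, weights))  # ([0.3, 0.4], 2)
```

Both versions produce the same output, but the event-driven one does work proportional to the number of spikes rather than the number of inputs.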

Event-Based Processing: The Power Behind SNNs

What is Event-Based Processing?

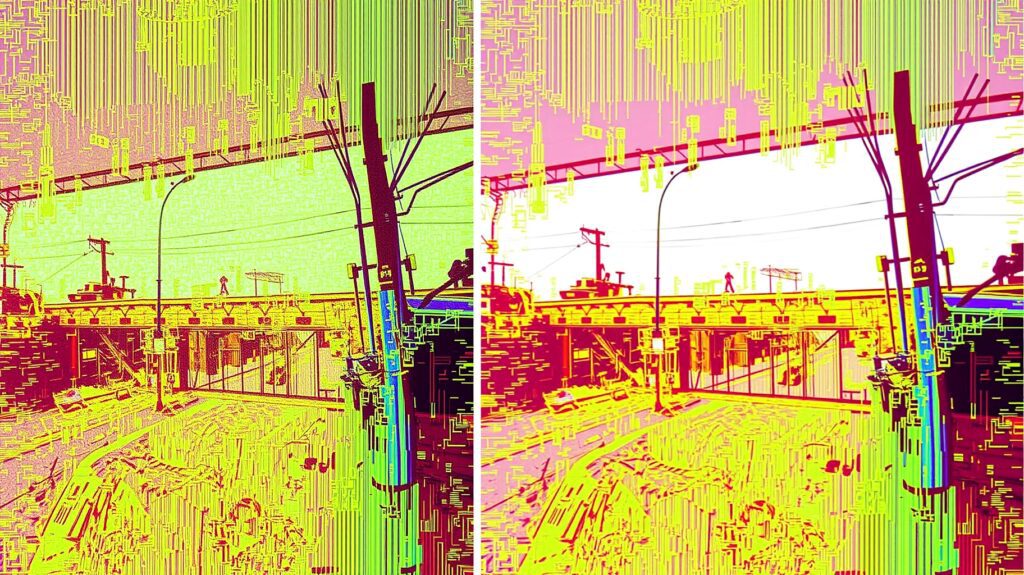

Event-based processing refers to systems that only process new information when an event occurs. In the context of vision systems, an event can be a change in light, motion, or contrast within the visual field. Instead of analyzing every frame, as conventional vision systems do, event-based cameras and SNNs process only changes, so the system reacts to what’s happening right now.

This is in stark contrast to frame-based processing, which requires large amounts of redundant data to be processed every second. Event-based processing ensures that only the relevant information is processed, allowing for much faster response times and greater energy efficiency.
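The idea of reporting only changes can be approximated in a few lines. The sketch below compares two small grayscale frames and emits an event for each pixel whose log-brightness changed by at least a threshold. It is a simplification under stated assumptions: real event cameras detect changes asynchronously at each pixel rather than by differencing frames, and the function name, threshold value, and `(x, y, t, polarity)` tuple layout are choices made for this example.

```python
import math


def frames_to_events(prev_frame, next_frame, threshold=0.2, timestamp=0):
    """Emit (x, y, t, polarity) for each pixel whose brightness changed enough.

    Frames are lists of rows of non-negative brightness values.
    Polarity is +1 for brighter, -1 for darker.
    """
    events = []
    for y, (prev_row, next_row) in enumerate(zip(prev_frame, next_frame)):
        for x, (p, n) in enumerate(zip(prev_row, next_row)):
            # Compare log-brightness; the +1.0 avoids log(0).
            delta = math.log(n + 1.0) - math.log(p + 1.0)
            if abs(delta) >= threshold:
                events.append((x, y, timestamp, 1 if delta > 0 else -1))
    return events


if __name__ == "__main__":
    prev_frame = [[10, 10, 10],
                  [10, 10, 10]]
    next_frame = [[10, 50, 10],
                  [10, 10, 2]]
    # Only the two changed pixels produce events; the other four are ignored.
    print(frames_to_events(prev_frame, next_frame, timestamp=5))
    # [(1, 0, 5, 1), (2, 1, 5, -1)]
```

A static scene produces no events at all, which is where the savings over frame-based processing come from.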

Why Event-Based Processing is Crucial for Real-Time Perception

In real-world applications, especially those requiring real-time response, being able to quickly detect changes is vital. For example, autonomous vehicles need to perceive changes in the environment, such as obstacles or other vehicles, as soon as they happen to avoid accidents. Event-based vision systems enable this kind of instant reaction, reducing latency significantly compared to traditional frame-based systems.

Furthermore, event-based processing mimics how biological systems like human vision work, allowing machines to perceive their surroundings in a more natural and dynamic way.

Advantages of SNNs in Vision Systems

Low Power Consumption

One of the biggest advantages of using SNNs in vision systems is their low power consumption. Since SNNs only process data when spikes occur, they can operate with minimal energy usage. This is particularly beneficial for mobile and battery-operated devices like drones, wearables, or edge computing platforms that require prolonged use in real-time environments.

Ultra-Fast Response Times

Because SNNs operate on an event-based model, they allow vision systems to react faster to environmental changes. This real-time processing is critical in applications where millisecond reactions can make a significant difference, such as in autonomous navigation, robotics, or surveillance systems.

Improved Accuracy and Precision

By focusing only on relevant data, SNN-powered vision systems can reduce the noise and irrelevant information that often slow down traditional systems. This can result in higher precision, particularly in scenarios where constant changes are happening, such as monitoring traffic patterns or managing industrial processes in real time.

Scalability and Flexibility

SNNs are highly scalable and can be adapted to a variety of tasks and environments. Whether it’s gesture recognition, motion tracking, or even object detection, SNN-based systems can be customized to suit the specific needs of different real-time applications. This makes them ideal for industries ranging from healthcare to smart cities and logistics.

Applications of SNNs in Real-Time Vision Systems

Autonomous Vehicles and Drones

In the world of self-driving cars and drones, event-based vision systems powered by SNNs provide a significant advantage. By processing only the critical changes in the environment, these systems can detect objects, pedestrians, and hazards in real time with reduced energy consumption, leading to safer and more efficient navigation.

Robotics and Automation

SNNs are also revolutionizing robotics, where quick and accurate decision-making is essential. For example, in robotic surgery, SNN-based vision systems allow for the precise control and fast responses that minimally invasive procedures demand.

Surveillance and Security Systems

Surveillance systems need to monitor vast areas constantly, making power efficiency and quick responses key priorities. SNNs allow these systems to focus only on changes or suspicious activities, cutting down on both processing time and energy costs. This is especially useful in large-scale public security networks, where vast amounts of visual data need to be processed continuously.

Industrial IoT and Smart Manufacturing

In smart factories, real-time event-based processing is vital to monitor equipment, track production quality, and ensure safety. SNN-powered vision systems allow machines to react instantly to changes in the environment, improving both efficiency and safety in industrial settings.

Challenges and Future Prospects of SNNs

Overcoming Computational Complexity

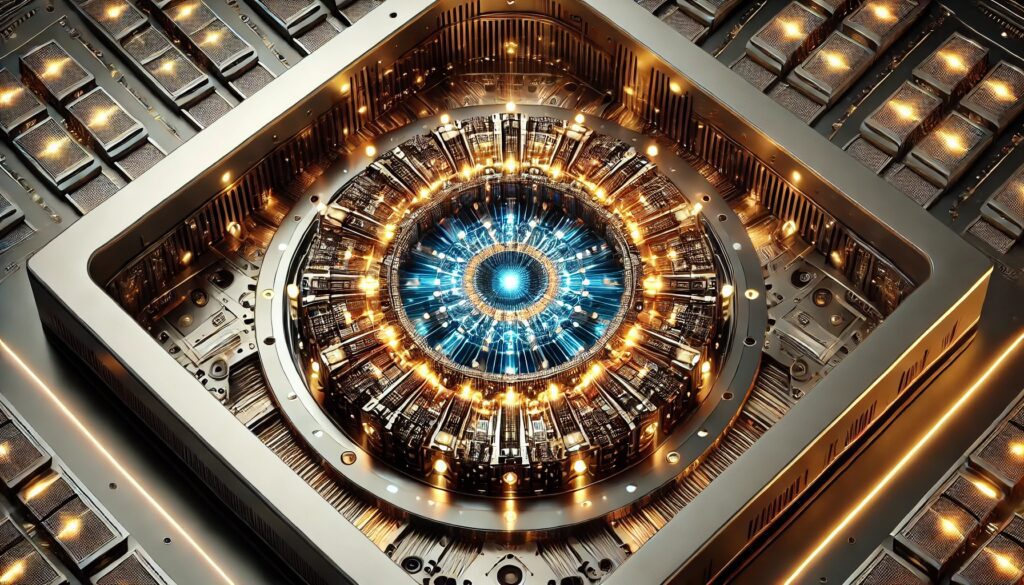

Despite their promise, SNNs still face challenges when it comes to scaling up for larger, more complex vision tasks. Simulating spiking dynamics on conventional processors can be computationally intensive, so specialized hardware such as neuromorphic chips is often needed to fully harness their potential.

However, with ongoing advances in neuromorphic computing, it’s likely that these challenges will be overcome in the near future, making SNNs even more widely applicable.

Integration with Existing Systems

Integrating SNN-based vision systems with existing technologies also remains a challenge. While SNNs offer clear advantages for event-based processing, many current systems rely on frame-based methods that will need to be adapted for event-based integration.

Final Thoughts

Spiking Neural Networks are unlocking a new frontier in vision systems by enabling event-based processing that mimics the brain’s ability to process dynamic inputs in real time. By offering faster response times, lower power consumption, and improved accuracy, SNNs are set to transform applications ranging from autonomous vehicles to smart factories.

As the technology continues to evolve, SNNs will likely play a crucial role in the future of real-time perception, bringing us closer to machines that think and react as quickly as humans do.

FAQs

Why are SNNs more energy-efficient?

SNNs are more energy-efficient because they only compute when a spike is triggered by an event. This reduces unnecessary processing and lowers power consumption, especially when compared to traditional neural networks that require continuous data processing.

Where are SNNs applied in real-time systems?

SNNs are applied in real-time systems like autonomous vehicles, drones, robotics, and surveillance systems. They excel in tasks that require quick responses, such as object detection, motion tracking, and decision-making in dynamic environments.

What challenges do SNNs face?

SNNs face challenges such as computational complexity and the need for specialized hardware like neuromorphic chips. Additionally, integrating SNNs with existing technologies can be difficult because many current systems rely on frame-based, rather than event-based, processing.

How do SNNs improve real-time perception?

SNNs improve real-time perception by responding only to relevant events, such as motion or changes in the environment, rather than processing all incoming data continuously. This allows for faster decision-making and reactions, making them ideal for applications where milliseconds matter, such as in autonomous driving or robotics.

What is the difference between SNNs and traditional neural networks?

The main difference between SNNs and traditional neural networks lies in how they process information. Traditional neural networks use continuous values and process data in static frames, whereas SNNs rely on spikes and event-driven processing. This makes SNNs more efficient in scenarios where changes are rapid and only certain events are important to the task at hand.

What makes event-based processing ideal for vision systems?

Event-based processing is ideal for vision systems because it reduces the need to process every frame of data, focusing only on changes that matter. This method mimics how the human eye works, detecting movement and shifts in the environment in real time. As a result, event-based systems are faster, more efficient, and require less computational power than traditional frame-based systems.

Can SNNs be used in consumer electronics?

Yes, SNNs can be used in consumer electronics, especially in areas requiring efficient, low-power solutions for real-time perception. For instance, event-based cameras and wearable devices benefit from the low energy consumption and quick response times offered by SNN-based processing, making them suitable for mobile applications like smartphones, AR/VR, and smart home devices.

How do SNNs contribute to the development of neuromorphic hardware?

SNNs are closely linked to the development of neuromorphic hardware, which is designed to mimic the brain’s structure and function. Neuromorphic chips, specifically built to support the spike-based nature of SNNs, allow for highly efficient processing, making them perfect for real-time tasks in robotics, AI, and sensor systems. These chips are instrumental in scaling up SNN applications for larger, more complex tasks.

How do SNNs handle noisy environments?

SNNs are particularly good at handling noisy environments because they are event-driven, meaning they focus only on significant changes in data and ignore irrelevant or redundant information. This ability to filter out noise makes SNNs highly efficient in complex, dynamic environments such as traffic monitoring or industrial settings, where distinguishing relevant signals from background noise is crucial.
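One simple way to picture this kind of noise rejection is a filter that keeps an event only if a neighbouring pixel also fired recently, on the assumption that real motion produces clusters of events while sensor noise tends to produce isolated ones. This is an illustrative heuristic, not a description of how any particular SNN works; the function name, `window`, and `radius` are hypothetical.

```python
def filter_isolated_events(events, window=5, radius=1):
    """Keep events that have a recent neighbouring event; drop isolated ones.

    events: list of (x, y, t) tuples sorted by time t.
    window: maximum age of a neighbouring event, in the same units as t.
    radius: how many pixels away a neighbour may be.
    """
    last_seen = {}  # (x, y) -> time of the most recent event at that pixel
    kept = []
    for x, y, t in events:
        supported = False
        for dx in range(-radius, radius + 1):
            for dy in range(-radius, radius + 1):
                if dx == 0 and dy == 0:
                    continue  # a pixel does not support itself
                prev_t = last_seen.get((x + dx, y + dy))
                if prev_t is not None and t - prev_t <= window:
                    supported = True
        if supported:
            kept.append((x, y, t))
        last_seen[(x, y)] = t
    return kept


if __name__ == "__main__":
    events = [
        (5, 5, 0),    # start of a moving edge (no earlier neighbour yet)
        (6, 5, 2),    # edge moves right
        (7, 5, 4),
        (20, 20, 5),  # isolated noise event far from the edge
        (8, 5, 6),
    ]
    print(filter_isolated_events(events))
    # [(6, 5, 2), (7, 5, 4), (8, 5, 6)]
```

Note the trade-off: the very first event of the moving edge is also dropped, because nothing nearby had fired before it.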

What role do SNNs play in robotics?

In robotics, SNNs provide real-time processing capabilities that enable robots to adapt and respond quickly to environmental changes. For example, in robotic arms used for surgery or manufacturing, SNNs help the system react instantly to external stimuli like unexpected movements or obstacles, improving both accuracy and safety.

Are SNNs suitable for large-scale data processing?

While SNNs excel at handling real-time, event-based data, scaling them for large-scale data processing can be challenging. The computational complexity of SNNs requires specialized hardware, like neuromorphic chips, which are not yet as widely available as traditional processors. However, as neuromorphic technology advances, we’re likely to see more scalable SNN systems that can handle larger datasets more efficiently.

How are SNNs contributing to advancements in AI?

SNNs are pushing the boundaries of AI by introducing a brain-inspired approach to data processing. Unlike traditional AI models that process dense data continuously, SNNs work with sparse, event-driven data and can respond in real time, making them a good fit for applications requiring immediate, intelligent decision-making. Their integration with neuromorphic hardware is expected to further enhance the efficiency and adaptability of AI systems in the future.

Can SNNs be integrated with traditional machine learning models?

Yes, SNNs can be integrated with traditional machine learning models through hybrid systems. These systems leverage the strengths of both approaches: the high accuracy of traditional models and the real-time, low-energy benefits of SNNs. For instance, an autonomous vehicle might use traditional machine learning for long-term decision-making and SNNs for real-time obstacle avoidance, creating a more efficient and responsive overall system.

How do event-based cameras complement SNNs?

Event-based cameras and SNNs are a perfect match because both operate on event-driven principles. Event-based cameras capture changes in the scene (such as motion or light variations) rather than recording frames. This complements SNNs’ ability to process data only when significant events occur, leading to ultra-fast and efficient visual perception systems ideal for real-time applications like surveillance, robotics, and autonomous vehicles.
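As a rough sketch of how an event stream might drive a spiking neuron without a fixed frame clock, the example below updates a leaky neuron only when an event arrives, applying the decay for the elapsed time in a single step. The class name `EventDrivenNeuron` and its parameters (`threshold`, `tau`, `weight`) are assumptions made for illustration, not part of any specific camera or SNN library.

```python
import math


class EventDrivenNeuron:
    """Leaky integrate-and-fire neuron updated only when an event arrives."""

    def __init__(self, threshold=1.0, tau=10.0, weight=0.4):
        self.threshold = threshold
        self.tau = tau        # decay time constant, same units as timestamps
        self.weight = weight  # contribution of each incoming event
        self.v = 0.0          # membrane potential
        self.last_t = None    # time of the previous event

    def receive(self, t):
        """Process one event at time t; return True if the neuron spikes."""
        if self.last_t is not None:
            # Apply all the leak since the last event in one step.
            self.v *= math.exp(-(t - self.last_t) / self.tau)
        self.last_t = t
        self.v += self.weight
        if self.v >= self.threshold:
            self.v = 0.0
            return True
        return False


if __name__ == "__main__":
    neuron = EventDrivenNeuron()
    # Three events close together, then two far apart.
    timestamps = [0, 1, 2, 50, 100]
    print([neuron.receive(t) for t in timestamps])
    # [False, False, True, False, False]
```

A burst of closely spaced events pushes the neuron over its threshold, while widely spaced events decay away before they can add up. No computation happens between events, however long the gap.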
