What is Synthetic Data, and Why is It Gaining Popularity?

Defining Synthetic Data

Synthetic data refers to datasets generated by algorithms, simulations, or generative models rather than collected from real-world events. It is designed to mimic the statistical properties and features of real data without being drawn directly from real records.

For example, a retail company can simulate customer purchasing behaviors instead of relying solely on actual transaction records. These synthetic datasets can closely resemble real purchasing patterns while containing no personal identifiers.

Popularity Among Data Scientists

Synthetic data is changing how AI models are trained, for several reasons:

- It can be generated at scale, enabling experiments on larger datasets.

- It reduces privacy risk, because it does not have to contain real individuals' records.

- It’s versatile, simulating scenarios where real-world data may be scarce.

A Real Game-Changer for AI Training

Industries such as healthcare, finance, and self-driving cars have increasingly turned to synthetic data. Its ability to simulate high-risk or rare events is invaluable.

Benefits of Synthetic Data for Model Training

Cost over time is one way to compare the two approaches:

- Real-world data: costs can grow exponentially over time because of resource constraints, labor, and data acquisition challenges.

- Synthetic data: costs tend to grow roughly linearly, because generation scales efficiently and avoids many real-world limitations.

Scalability Without Cost Constraints

One of the key advantages is scalability. Real-world data collection is costly and time-consuming, especially in sensitive or niche domains. Once a generator is in place, synthetic data can be produced in large volumes, allowing teams to scale datasets quickly.

For instance, a computer vision model might need millions of annotated images. Generating synthetic images programmatically saves resources while still covering diverse scenarios.
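Programmatic generation also produces labels for free, because the generator knows exactly where it placed each object. The sketch below is a minimal illustration in Python with NumPy, assuming simple bright squares on a dark background stand in for real objects; `make_synthetic_shapes` and its parameters are hypothetical, not part of any library.

```python
import numpy as np

def make_synthetic_shapes(n_images, size=32, seed=0):
    """Render filled squares at random positions; return images and bounding boxes."""
    rng = np.random.default_rng(seed)
    images = np.zeros((n_images, size, size), dtype=np.float32)
    # Boxes as (row0, col0, row1, col1) with exclusive end coordinates.
    boxes = np.zeros((n_images, 4), dtype=np.int64)
    for i in range(n_images):
        side = rng.integers(4, size // 2 + 1)        # random object size
        r0 = rng.integers(0, size - side + 1)         # random top-left corner
        c0 = rng.integers(0, size - side + 1)
        brightness = rng.uniform(0.5, 1.0)            # random "lighting"
        images[i, r0:r0 + side, c0:c0 + side] = brightness
        images[i] += rng.normal(0.0, 0.05, (size, size))  # sensor-like noise
        boxes[i] = (r0, c0, r0 + side, c0 + side)     # the label is known exactly
    return np.clip(images, 0.0, 1.0), boxes
```

Calling `images, boxes = make_synthetic_shapes(10_000)` yields 10,000 labeled 32x32 images. A real pipeline would replace the squares with rendered 3D assets or game-engine scenes, but the principle of emitting annotations alongside pixels is the same.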

Addressing Data Scarcity

Rare or unusual events are often underrepresented in real datasets. With synthetic data, such gaps are filled by generating rare edge-case scenarios. Self-driving car developers, for instance, simulate dangerous conditions (like icy roads or sudden pedestrian crossings) to improve safety features.

Enhanced Data Privacy

Traditional datasets pose privacy risks, especially in healthcare or customer analytics. Synthetic data can exclude direct identifiers, which can ease compliance with regulations like GDPR or HIPAA.

Additionally, organizations can share synthetic datasets with less risk of exposing sensitive information.

Methods to Generate Synthetic Data

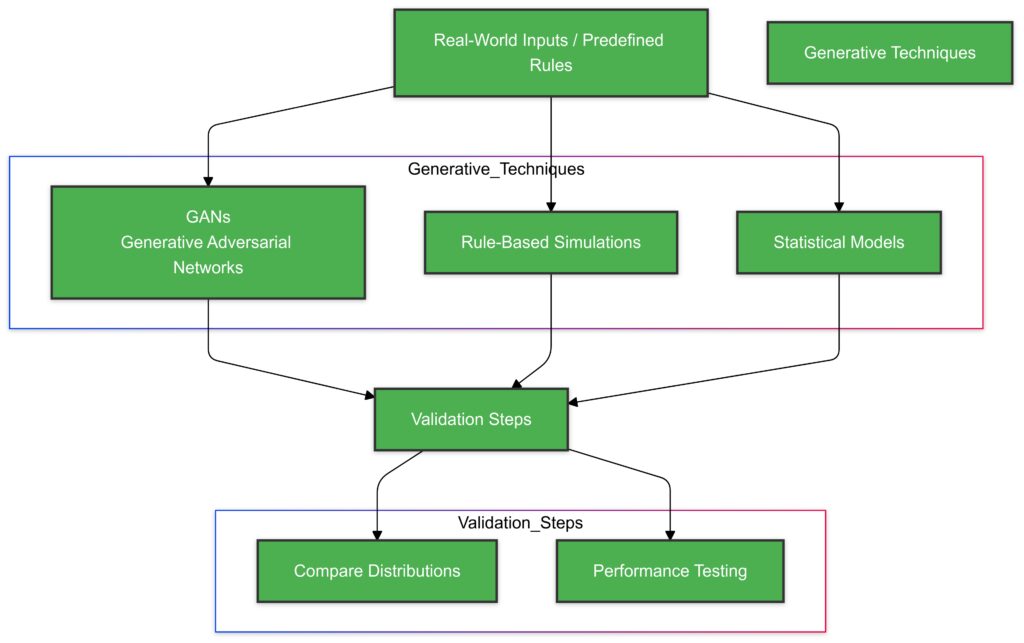

Inputs: Start with real-world data or predefined rules to initiate synthetic data generation.

Generative techniques:

- GANs: Generate data using adversarial learning.

- Rule-based simulations: Follow predefined logical or mathematical rules.

- Statistical models: Create data based on probability distributions.

Validation steps:

- Compare distributions: Ensure the synthetic data aligns with the real-world distribution.

- Performance testing: Evaluate how well the synthetic data performs in the intended application.

Generative Adversarial Networks (GANs)

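GANs are a powerful technique for generating synthetic data. They pit two neural networks, the generator and the discriminator, against each other: the generator produces candidate samples, and the discriminator tries to tell them apart from real ones, pushing the generator toward highly realistic outputs.

- In image generation, GANs create high-quality, realistic visuals for training purposes.

- In text processing, GAN variants have been used to simulate conversational data for chatbots or NLP models, although discrete text is harder for GANs than images.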

Rule-Based Simulations

Rule-based systems simulate real-world scenarios using predefined logical rules. This approach works well for domains like physics-based simulations or structured data such as finance records.

For example, creating stock price datasets based on historical trends involves rule-based simulations to mimic market conditions.
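As one possible rule, the sketch below assumes daily log returns are normally distributed with the mean and volatility observed in historical prices, which is the discrete form of geometric Brownian motion. This is a deliberately simple stand-in chosen for illustration; it ignores volatility clustering, jumps, and other features of real markets. The function name `simulate_prices` is hypothetical.

```python
import numpy as np

def simulate_prices(historical_prices, n_days, n_paths, seed=0):
    """Simulate price paths with geometric Brownian motion.

    Drift and volatility are estimated from the historical daily log returns,
    so the synthetic paths follow the same average trend and variability.
    """
    prices = np.asarray(historical_prices, dtype=float)
    log_returns = np.diff(np.log(prices))
    mu, sigma = log_returns.mean(), log_returns.std(ddof=1)
    rng = np.random.default_rng(seed)
    # Each day's log return is drawn from N(mu, sigma^2).
    shocks = rng.normal(mu, sigma, size=(n_paths, n_days))
    # Compound the returns starting from the last observed price.
    return prices[-1] * np.exp(np.cumsum(shocks, axis=1))
```

Each row of the result is one synthetic price path starting from the last historical price; generating many paths gives a distribution of plausible futures for stress tests.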

Statistical Modeling

By replicating statistical distributions observed in real-world data, statistical models generate synthetic datasets. These datasets often work well for numerical or tabular data, such as demographic trends or credit scores.
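A minimal sketch of this idea, assuming all columns are numeric and roughly bell-shaped: estimate the mean vector and covariance matrix from the real table, then sample new rows from a multivariate normal distribution. Because the covariance is preserved, correlations such as age versus income carry over. The function name and column examples are illustrative.

```python
import numpy as np

def fit_and_sample_gaussian(real, n_samples, seed=0):
    """Fit a multivariate normal to real numeric columns and draw synthetic rows."""
    real = np.asarray(real, dtype=float)
    mean = real.mean(axis=0)            # per-column means
    cov = np.cov(real, rowvar=False)    # captures correlations between columns
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n_samples)
```

Skewed, bounded, or categorical columns such as credit scores or zip codes usually need transformations or more flexible models, such as copula-based approaches, before this works well.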

Challenges in Using Synthetic Data

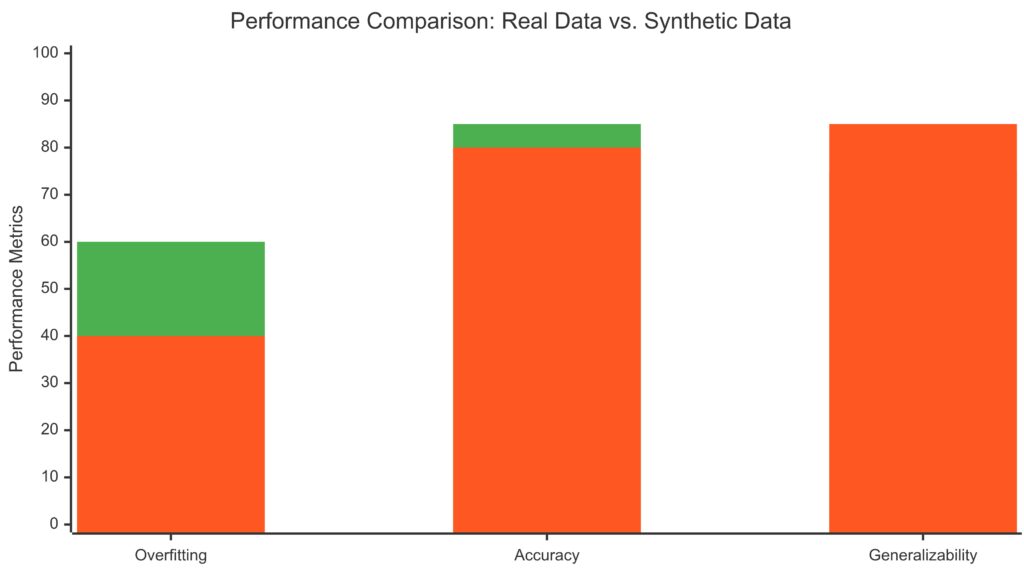

The chart compares models trained on real data and on synthetic data across three scores:

- Overfitting: real data 60, synthetic data 40. The chart attributes the lower score to the controlled diversity of synthetic data.

- Accuracy: real data 85, synthetic data 80. Real data may better reflect true distributions.

- Generalizability: synthetic data 85, real data 75. Synthetic data can create broader training scenarios for unseen conditions.

Risk of Overfitting to Synthetic Bias

Synthetic data may introduce biases if the generative model doesn’t align perfectly with the real-world distribution. This mismatch can lead to overfitting, where the AI model performs poorly on real data.

- Solution: Regularly validate synthetic datasets against real-world counterparts to ensure accuracy.

Computational Resource Requirements

Generating high-quality synthetic data can be computationally intensive, particularly with complex GANs or large simulations.

- Solution: Cloud-based services like AWS or Google Cloud streamline synthetic data generation at scale.

Validation and Quality Assurance

Ensuring that synthetic data maintains real-world relevance is critical. Poor validation can lead to unrealistic models, rendering synthetic data ineffective.

- Solution: Adopt rigorous QA processes, cross-referencing synthetic data with real-world insights.

Synthetic Data in Key Industries

Healthcare

Synthetic patient records let teams build healthcare AI while protecting patient privacy. AI-driven tools like disease detection models can be trained on simulated datasets that mimic medical imaging data or electronic health records (EHRs).

Automotive

In autonomous vehicle development, synthetic road scenarios simulate weather conditions, accidents, and traffic flows. This allows companies to refine algorithms for edge cases without endangering lives.

Retail and Marketing

Marketers use synthetic datasets to forecast consumer trends, test campaigns, or even segment audiences. This reduces reliance on costly customer data acquisition.

Practical Applications of Synthetic Data Across Industries

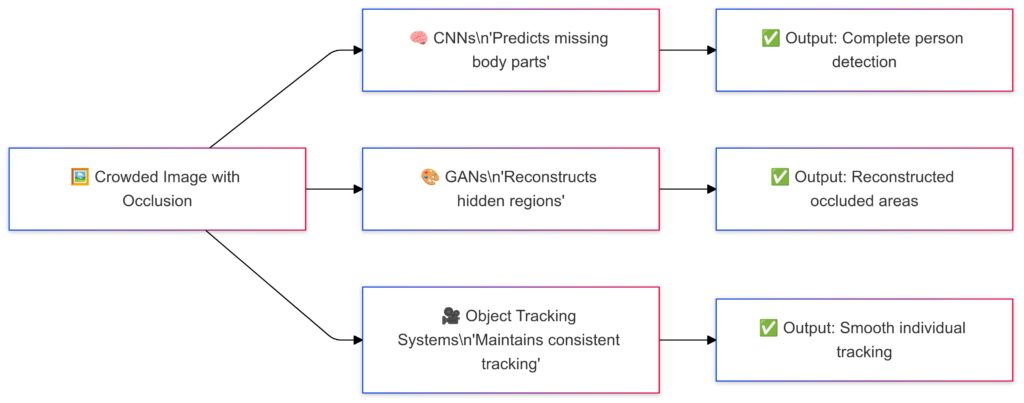

Enhancing Machine Learning Model Performance

Synthetic data can help train high-performing machine learning models. By supplementing real-world datasets, it helps provide diverse and balanced training inputs.

- In image recognition, synthetic images diversify the dataset, improving the model’s ability to recognize edge cases like damaged objects or obscured faces.

- Chatbots and NLP models leverage synthetic conversational data to simulate user interactions, refining responses to uncommon queries.

Testing Complex Systems Safely

Synthetic data allows businesses to simulate intricate scenarios without real-world risks. For example:

- Self-driving car algorithms are tested on synthetic road conditions like severe weather or heavy traffic, reducing the need for risky real-world testing.

- Financial systems simulate fraud attempts, so detection algorithms can be developed without exposing real customer data.

Such tests provide realistic environments for refining AI systems.

Democratizing Data Access

For startups or small businesses with limited resources, acquiring proprietary datasets is a challenge. Synthetic data offers a cost-effective alternative.

- By creating simulated customer demographics or product usage trends, startups can develop targeted marketing strategies without expensive data sources.

- Small AI firms can generate data for model prototyping, bypassing costly data acquisition processes.

Synthetic Data for Startups and Small Businesses

Cost-Effective Prototyping

Building robust models from scratch often requires enormous datasets. Synthetic data can substantially reduce costs by reducing the need for extensive collection efforts.

For example:

- A startup building a recommendation system could simulate purchase behavior data instead of relying on costly partnerships.

- In e-commerce, businesses use synthetic user interaction data to refine search algorithms.

Enhancing Scalability

Startups often outgrow their initial datasets quickly. Synthetic data helps scale models efficiently, accommodating rapid business growth. By generating diverse datasets, companies can expand their reach to new demographics or product categories.

Improving Collaboration and Data Sharing

Many businesses hesitate to share data due to privacy concerns. Synthetic datasets ease this hurdle because they contain generated records rather than real customer details.

- Collaborations between small firms or academic researchers benefit from more easily shareable synthetic data.

- Open-source communities increasingly rely on synthetic datasets for project development.

Advanced Industry Use Cases

Cybersecurity Training

Synthetic data is pivotal for creating realistic cybersecurity attack scenarios, enabling organizations to develop defense mechanisms against evolving threats.

- Security teams simulate phishing attempts or malware patterns, training algorithms to detect them proactively.

- Threat detection systems can be trained on logs generated from synthetic network activity to identify unusual behaviors.

Financial Risk Analysis

Banks and financial firms use synthetic data to simulate market volatility, credit risk, or fraud. These datasets are crucial for stress-testing systems:

- Simulated stock market fluctuations train AI models to predict and respond to crashes.

- Synthetic customer profiles help refine credit scoring algorithms.

Advanced Robotics

Robotics development leverages synthetic data to train robots in diverse environments.

- Factory automation systems simulate various assembly-line conditions to enhance robotic precision.

- Humanoid robots train on synthetic interactions to adapt better to the people they work with.

Synthetic Data Generation Tools for Businesses

Open-Source Platforms

Several open-source tools simplify synthetic data creation for businesses:

- SDV (Synthetic Data Vault) is popular for generating structured data.

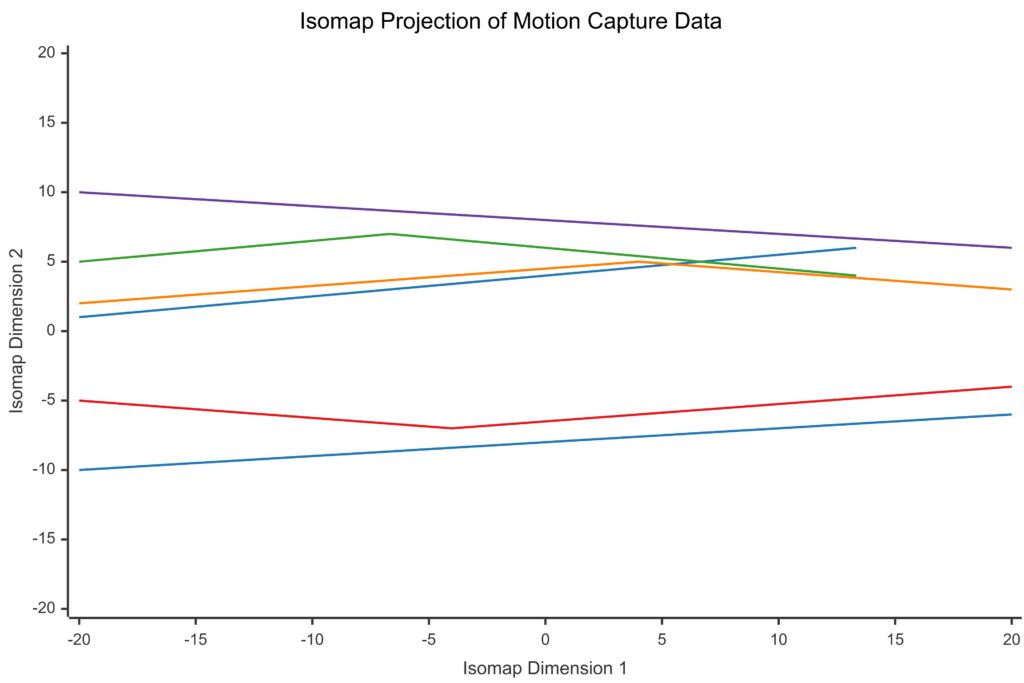

- DeepLabCut supports computer vision tasks like motion tracking through markerless pose estimation.

These tools provide startups with accessible, cost-effective solutions.

Commercial Solutions

For larger-scale projects, commercial synthetic data platforms like Synthetaic or Datagen offer pre-built datasets tailored to industries such as healthcare or automotive.

Cloud-Based Services

Cloud services like Amazon SageMaker or Google Cloud's Vertex AI (the successor to AI Platform) streamline synthetic data workflows, offering scalability for small and large businesses alike.

Ethical Concerns Surrounding Synthetic Data

Maintaining Fairness and Avoiding Bias

While synthetic data solves many issues, it risks perpetuating or amplifying biases if not carefully designed. For instance:

- If the underlying algorithm mirrors biases in real-world data, synthetic datasets will carry those flaws.

- Synthetic credit scoring data may inadvertently disadvantage minority groups if not balanced properly.

Solution: Implement fairness auditing and diverse input data to avoid biased synthetic outputs.

Misuse of Synthetic Data

Synthetic data can be exploited for unethical purposes, such as creating deepfakes or fabricating results in research. Misuse may undermine public trust in technology and AI.

Solution: Clear guidelines and regulatory frameworks can ensure synthetic data is used responsibly. Organizations like the Partnership on AI advocate for transparency in synthetic data applications.

Privacy Concerns Despite Anonymization

Though synthetic data often removes identifiable information, it isn’t foolproof. In some cases, generated datasets can still reveal patterns traceable to real individuals.

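Solution: Differential privacy adds calibrated noise during generation (for example, to the statistics or model training that produce the synthetic data), which mathematically limits how much any single person's record can influence the output. Regular evaluations are critical for compliance with GDPR, HIPAA, and similar regulations.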
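The sketch below illustrates differential privacy with the Laplace mechanism on a single categorical column, assuming each person contributes exactly one record: noise with scale 1/epsilon is added to the category counts, and synthetic values are then sampled from the noisy histogram. Everything after the noise step is post-processing, which does not weaken the guarantee. It is a teaching example only; naive floating-point noise has known pitfalls, so production systems should rely on vetted libraries such as OpenDP. The function name is hypothetical.

```python
import numpy as np

def dp_synthetic_categories(values, categories, epsilon, n_samples, seed=0):
    """Sample synthetic categorical values from a differentially private histogram.

    Adding or removing one person changes one count by 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon gives epsilon-DP counts.
    """
    rng = np.random.default_rng(seed)
    values = list(values)
    counts = np.array([values.count(c) for c in categories], dtype=float)
    noisy = counts + rng.laplace(0.0, 1.0 / epsilon, size=len(categories))
    # Post-processing (clipping, normalizing, sampling) keeps the DP guarantee.
    noisy = np.clip(noisy, 0.0, None)
    if noisy.sum() == 0:
        probs = np.full(len(categories), 1.0 / len(categories))
    else:
        probs = noisy / noisy.sum()
    return rng.choice(categories, size=n_samples, p=probs)
```

Smaller `epsilon` values add more noise, trading accuracy of the synthetic proportions for stronger privacy.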

Ensuring Realistic Data Quality

Validation Against Real Data

Synthetic datasets must closely mimic real-world distributions to remain effective. If quality checks are skipped, models trained on poor-quality synthetic data may fail.

- Example: A predictive model trained on faulty synthetic weather data might provide inaccurate forecasts.

Approach: Validate synthetic datasets against real-world data benchmarks, ensuring alignment with actual trends and patterns.

Robust Generative Techniques

Modern techniques like variational autoencoders (VAEs) and diffusion models have improved synthetic data realism. Combining multiple generation methods often yields the best results.

Example: Blending GANs with rule-based models can produce highly accurate synthetic image datasets.

Simulation-Specific QA Tools

Industries like automotive or finance use domain-specific tools to ensure synthetic data matches expected scenarios.

- Self-driving car firms leverage driving simulators to refine data realism.

- Financial firms use market simulators to align synthetic trends with real-world economic models.

Future Trends in Synthetic Data

Integration with Federated Learning

Synthetic data and federated learning are emerging as a powerful duo. Federated learning trains models across decentralized datasets while keeping data localized. Synthetic data supplements this by filling gaps, enhancing privacy, and ensuring diverse coverage.

Example: Healthcare AI tools can use federated learning with synthetic EHRs to improve diagnostic accuracy without exposing sensitive patient records.

Scaling Virtual Environments

The rise of metaverse platforms and advanced simulations will create many new opportunities for generating synthetic data in virtual environments. These datasets can train AI for real-world applications, such as human interaction modeling or environmental monitoring.

Ethical AI Certification

As synthetic data usage grows, organizations may adopt certifications for ethical AI practices. These will verify that data creation aligns with fairness, transparency, and privacy standards.

Real-Time Synthetic Data Generation

Future advancements could enable real-time generation of synthetic data. Applications include:

- Autonomous systems adapting to live traffic conditions.

- Retail AI adjusting promotions based on simulated customer activity.

Tools and Technologies Leading the Way

Generative AI Platforms

Platforms such as Hazy (enterprise synthetic tabular data) or Databricks MosaicML (training and fine-tuning generative models) support creating scalable, high-quality synthetic datasets and can be integrated into existing AI workflows.

Real-World AI Simulators

Simulation environments like CARLA (for autonomous vehicles) and Unity ML-Agents (for robotics) lead synthetic data innovation, especially for training dynamic systems.

Data Validation Frameworks

Great Expectations runs rule-based validations on datasets, and dashboards built with tools like Apache Superset help teams inspect data visually. Both kinds of checks are crucial for synthetic datasets used in critical systems.

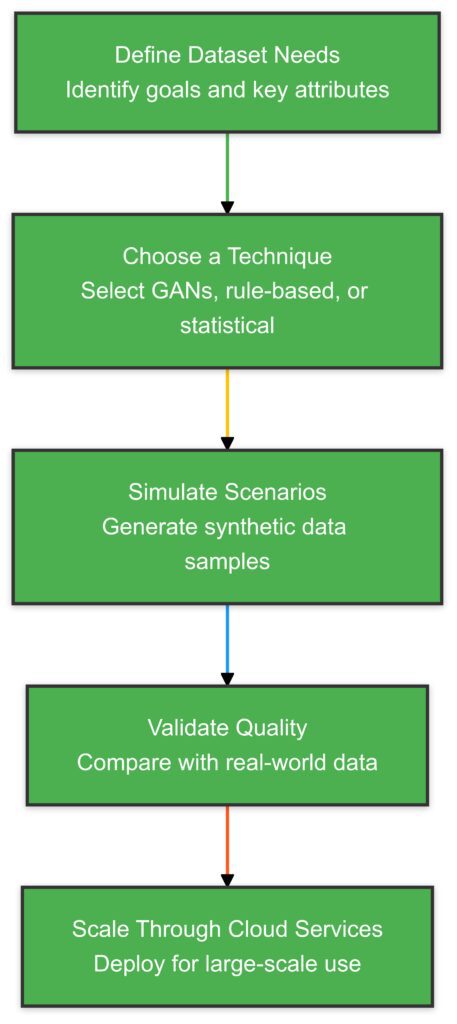

How to Generate Synthetic Data: A Step-by-Step Guide

Step 1: Define Your Dataset Needs

Start by identifying the type of data you need and its purpose. Consider these factors:

- Format: Are you working with images, text, tabular data, or audio?

- Domain-specific requirements: Are there unique scenarios (e.g., rare events) you need to model?

- Scale: How much data is necessary to train or test your model effectively?

Example: A healthcare project might require synthetic patient records, while a retail application may need simulated purchase histories.

Step 2: Choose a Data Generation Technique

Select the most suitable technique based on your project requirements. Common approaches include:

Generative Adversarial Networks (GANs)

Ideal for generating high-quality synthetic images, videos, or audio.

- How to use: Train the GAN model on real-world examples. Over time, the generator produces realistic outputs that the discriminator can no longer differentiate.

- Tools to try: PyTorch and TensorFlow frameworks.

Rule-Based Simulations

Effective for structured or numerical data. Use logical rules or formulas to create datasets.

- How to use: Define the relationships and rules that govern the dataset. For example, simulate customer transactions with random spending patterns based on predefined ranges.

- Tools to try: NumPy and Pandas in Python.
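A minimal rule-based sketch with NumPy and pandas, where the segments, spend ranges, purchase probability, and weekend multiplier are all hypothetical rules chosen for illustration rather than values from real data:

```python
import numpy as np
import pandas as pd

# Hypothetical rules: spend range per customer segment and a weekend uplift.
SEGMENT_SPEND = {"budget": (5, 40), "regular": (20, 120), "premium": (80, 400)}
SEGMENT_WEIGHTS = [0.5, 0.35, 0.15]
PURCHASE_PROBABILITY = 0.2
WEEKEND_MULTIPLIER = 1.3

def simulate_transactions(n_customers, n_days, seed=0):
    rng = np.random.default_rng(seed)
    segments = rng.choice(list(SEGMENT_SPEND), size=n_customers, p=SEGMENT_WEIGHTS)
    dates = pd.date_range("2024-01-01", periods=n_days, freq="D")
    rows = []
    for customer_id, segment in enumerate(segments):
        low, high = SEGMENT_SPEND[segment]
        for date in dates:
            # Rule: each customer buys on roughly 20% of days.
            if rng.random() < PURCHASE_PROBABILITY:
                amount = rng.uniform(low, high)
                if date.dayofweek >= 5:  # Saturday or Sunday
                    amount *= WEEKEND_MULTIPLIER
                rows.append((customer_id, segment, date, round(amount, 2)))
    return pd.DataFrame(rows, columns=["customer_id", "segment", "date", "amount"])
```

Because every rule is explicit, changing one assumption (for example, raising the weekend multiplier) and regenerating the table is a quick way to test how downstream models react.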

Synthetic APIs and Platforms

Platforms like SDV (Synthetic Data Vault) or Datagen offer pre-built tools for generating domain-specific datasets.

- How to use: Upload a sample dataset (if available) or configure the platform to generate synthetic data based on your specs.

- When to use: Ideal for businesses without in-house AI expertise.

Step 3: Simulate Scenarios (For Complex Cases)

For specialized applications, simulate the environment where the data will be used.

- Autonomous Driving: Use tools like CARLA or LGSVL to create synthetic driving scenarios. Adjust for different weather, road conditions, and obstacles.

- Robotics: Train robots in virtual environments using Unity ML-Agents or Gazebo.

These simulation platforms allow iterative testing and data refinement.

Step 4: Add Variability and Diversity

To avoid overfitting or bias, ensure that the synthetic data includes sufficient diversity:

- Randomize key features like lighting in images, accents in audio, or outliers in tabular data.

- Simulate rare edge cases to make the data robust.

Tip: Use augmentation tools like Albumentations for image variability or create synthetic anomalies using statistical models.
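Both kinds of randomization from the list above can be sketched in a few lines of NumPy. The brightness range and the outlier rule (values placed several standard deviations from the column mean) are illustrative assumptions, and the function names are hypothetical.

```python
import numpy as np

def randomize_lighting(images, rng, low=0.6, high=1.4):
    """Scale each image's brightness by its own random factor (images in [0, 1])."""
    factors = rng.uniform(low, high, size=(len(images),) + (1,) * (images.ndim - 1))
    return np.clip(images * factors, 0.0, 1.0)

def inject_outliers(table, rng, fraction=0.01, scale=5.0):
    """Replace a random fraction of cells with values far from the column mean."""
    table = np.array(table, dtype=float)  # copy so the input stays untouched
    mask = rng.random(table.shape) < fraction
    std = table.std(axis=0)
    signs = rng.choice([-1.0, 1.0], size=table.shape)
    outliers = table.mean(axis=0) + signs * scale * std
    table[mask] = outliers[mask]
    return table, mask  # the mask doubles as an "is anomaly" label
```

Returning the mask means the injected anomalies are labeled, which is useful when the goal is to train or evaluate an outlier detector.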

Step 5: Validate the Data Quality

Synthetic datasets must closely align with the statistical properties of real-world data. Key validation steps include:

- Compare distributions: Ensure generated data matches the statistical profile of the original dataset (if available).

- Test on smaller models: Validate that synthetic data improves performance without introducing overfitting.

- Iterate and refine: Adjust the data generation process based on test results.

Tools: Great Expectations or DataPrep to verify dataset quality.
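For the "compare distributions" step, one common check is the two-sample Kolmogorov-Smirnov (KS) statistic, the largest vertical gap between the empirical cumulative distribution functions of a real column and its synthetic counterpart. The NumPy sketch below computes it per column; the 0.1 tolerance is a hypothetical threshold to tune per project, and `scipy.stats.ks_2samp` offers the same statistic with a p-value if SciPy is available.

```python
import numpy as np

def ks_statistic(real, synthetic):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between empirical CDFs."""
    real = np.sort(np.asarray(real, dtype=float))
    synthetic = np.sort(np.asarray(synthetic, dtype=float))
    # The supremum is reached at one of the observed values, so evaluate there.
    grid = np.concatenate([real, synthetic])
    cdf_real = np.searchsorted(real, grid, side="right") / len(real)
    cdf_synth = np.searchsorted(synthetic, grid, side="right") / len(synthetic)
    return float(np.max(np.abs(cdf_real - cdf_synth)))

def compare_columns(real_table, synthetic_table, threshold=0.1):
    """Return {column index: (KS statistic, passes tolerance)} for each column."""
    report = {}
    for j in range(real_table.shape[1]):
        stat = ks_statistic(real_table[:, j], synthetic_table[:, j])
        report[j] = (stat, stat <= threshold)
    return report
```

A per-column report like this catches shifted or distorted marginals, but not broken relationships between columns, so it complements rather than replaces model-based performance testing.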

Step 6: Scale Up with Cloud Solutions

For large-scale projects, leverage cloud platforms to generate, manage, and store synthetic datasets:

- AWS SageMaker: Ideal for generating and training on synthetic data in the cloud.

- Google Cloud AI: Provides pre-trained generative models and scalable computing resources.

Tip: Many cloud providers offer templates or pre-configured pipelines to speed up the process.

Step 7: Use Pre-Trained Models for Quick Results

For tasks requiring image generation, text synthesis, or language understanding, pre-trained models provide a fast, effective solution.

- Image Generation: Use text-to-image models like DALL-E to create realistic synthetic visuals.

- Text Data: Generative language models like GPT-4 simulate dialogues, reviews, or rare linguistic scenarios, while masked language models like BERT can propose word substitutions for augmentation.

- Audio Data: Leverage tools like Tacotron 2 or WaveNet to generate high-quality synthetic voice data.

How to Implement

- Fine-tune the pre-trained model using small amounts of real data relevant to your domain.

- Generate large volumes of synthetic data aligned with your target use case.

Example: Fine-tune a GPT model to generate synthetic customer support conversations based on sample logs.

Step 8: Leverage Data Augmentation for Enrichment

Data augmentation is particularly useful when combining small real-world datasets with synthetic ones.

- Image Data: Apply transformations such as cropping, flipping, rotation, or color adjustments to create diverse versions of the same image. Tools like Albumentations or TensorFlow ImageDataGenerator are ideal for this.

- Text Data: Use paraphrasing, synonym substitution, or sentence shuffling to expand text datasets. Libraries like NLTK (for example, its WordNet interface for synonyms) or spaCy can help automate text augmentation.

- Audio Data: Introduce background noise, pitch alterations, or speed adjustments using tools like Librosa to enrich audio datasets.

Example: An e-commerce company could augment product images by simulating different lighting conditions, ensuring models perform well in real-world scenarios.
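Synonym substitution, one of the text techniques listed above, can be sketched in plain Python. The synonym table here is a small hypothetical example; in practice it might come from a thesaurus such as WordNet. Each known word is replaced with probability `p`, and `augment` collects a few distinct variants.

```python
import random

# Hypothetical hand-made synonym table; a real project might build it from a thesaurus.
SYNONYMS = {
    "quick": ["fast", "speedy"],
    "broken": ["damaged", "faulty"],
    "order": ["purchase"],
    "help": ["assist", "support"],
}

def synonym_substitute(sentence, p=0.5, seed=None):
    """Replace each word that has known synonyms with probability p."""
    rng = random.Random(seed)
    out = []
    for word in sentence.split():
        key = word.lower()
        if key in SYNONYMS and rng.random() < p:
            out.append(rng.choice(SYNONYMS[key]))
        else:
            out.append(word)
    return " ".join(out)

def augment(sentence, n_variants=3, p=0.5, seed=0):
    """Produce up to n_variants distinct variants that differ from the original."""
    variants = {synonym_substitute(sentence, p, seed + i) for i in range(n_variants * 5)}
    variants.discard(sentence)
    return sorted(variants)[:n_variants]
```

For example, `augment("my order arrived broken please help")` returns up to three variants in which words like "order" and "broken" are swapped for listed synonyms. This simple version ignores punctuation and capitalization.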

Step 9: Combine Real and Synthetic Data

Blending real-world data with synthetic data often results in better-performing models.

- Use real data to ground the model in reality while filling gaps with synthetic datasets.

- In imbalanced datasets (e.g., fraud detection), synthetic examples ensure rare cases are adequately represented.

Best Practices for Integration

- Preprocess both datasets: Ensure compatibility in terms of format, scale, and feature distributions.

- Balance the dataset: Avoid oversampling synthetic data, as it can overshadow real-world examples.

- Validate performance: Continuously test the model on real-world data to confirm its generalizability.
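For the imbalanced case, a widely used technique is SMOTE (Synthetic Minority Over-sampling Technique), which creates new minority examples by interpolating between a minority row and one of its nearest minority neighbors. The NumPy sketch below is a simplified version, not the imbalanced-learn implementation, combined with a cap that keeps synthetic rows from exceeding a chosen share of the minority class, in line with the balancing advice above. It assumes numeric features and at least two minority rows; `smote_like`, `blend`, and `max_synthetic_share` are hypothetical names.

```python
import numpy as np

def smote_like(minority, n_new, k=5, seed=0):
    """Create synthetic minority rows by interpolating between nearby minority rows."""
    rng = np.random.default_rng(seed)
    minority = np.asarray(minority, dtype=float)
    # Pairwise distances within the minority class (fine for small classes).
    dists = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a row is never its own neighbor
    k = min(k, len(minority) - 1)
    neighbors = np.argsort(dists, axis=1)[:, :k]
    base = rng.integers(0, len(minority), size=n_new)
    partner = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))  # position along the segment, in [0, 1)
    return minority[base] + gap * (minority[partner] - minority[base])

def blend(X_real, y_real, minority_label=1, max_synthetic_share=0.5, seed=0):
    """Top up the minority class; max_synthetic_share must be in [0, 1)."""
    minority = X_real[y_real == minority_label]
    n_majority = int((y_real != minority_label).sum())
    wanted = max(n_majority - len(minority), 0)
    # Cap so synthetic rows never exceed max_synthetic_share of the minority class.
    cap = int(max_synthetic_share / (1 - max_synthetic_share) * len(minority))
    n_new = min(wanted, cap)
    X_syn = smote_like(minority, n_new, seed=seed)
    X = np.vstack([X_real, X_syn])
    y = np.concatenate([y_real, np.full(n_new, minority_label)])
    is_synthetic = np.concatenate([np.zeros(len(y_real), bool), np.ones(n_new, bool)])
    return X, y, is_synthetic
```

The returned `is_synthetic` mask makes it easy to evaluate on real rows only, so reported performance reflects real-world data.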

Step 10: Automate the Workflow

Automation streamlines synthetic data generation for projects requiring continuous updates or large-scale outputs.

- Synthetic Data Pipelines: Create end-to-end workflows using tools like Apache Airflow or Prefect. These can automate tasks such as data generation, transformation, and validation.

- Cloud Automation: Platforms like AWS Step Functions and Google Cloud Dataflow simplify the management of synthetic data at scale.

Tip: Regularly update synthetic datasets to reflect changes in real-world conditions, such as evolving customer behaviors or environmental trends.

Step 11: Test Models in Simulated Environments

Testing trained models in synthetic environments validates how well they perform in edge cases or unseen scenarios.

- Virtual Environments: Use 3D simulations for robotics or autonomous systems. Tools like Unity3D and Gazebo are widely used in this space.

- Synthetic Market Simulations: In finance, tools like QuantLib create synthetic market conditions for stress-testing algorithms.

- Game Engines: Platforms such as Unreal Engine simulate lifelike interactions for AI models, particularly in gaming or training humanoid robots.

Example: A robotics company may train its model to navigate factory floors by simulating potential obstructions or failures.

Step 12: Use Synthetic Data to Accelerate AI Research

For researchers, synthetic data is invaluable for rapid experimentation. It eliminates bottlenecks caused by limited datasets and enables testing in extreme or hypothetical scenarios.

- Proof-of-Concept Testing: Quickly test ideas without waiting for real-world data collection.

- Benchmark Creation: Simulate data to benchmark algorithms in niche domains, such as rare disease prediction.

Tools: SDV (Python) or the synthpop package for R make it easy to generate reproducible synthetic datasets for research purposes.

Step 13: Ensure Regulatory Compliance

When generating synthetic data for regulated industries (e.g., healthcare, finance), adhere to industry standards and privacy laws:

- Apply differential privacy techniques to limit the risk that individual data points can be traced back to real-world records.

- Work with frameworks like OpenDP (from Harvard) or ARX Data Anonymization Tool for added privacy guarantees.

Certification Opportunities

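Security attestations such as ISO/IEC 27001 certification or a SOC 2 report cover an organization's overall information security controls. They can help demonstrate that synthetic data pipelines are run securely and in line with compliance requirements.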

Step 14: Monitor and Refine Your Data Generation Process

Synthetic data workflows require continuous monitoring to ensure relevance and quality:

- Periodically compare synthetic datasets against new real-world data to check for drifts in distributions.

- Adjust the data generation model as new edge cases or patterns emerge.

- Maintain version control for synthetic datasets to track changes over time.

Tools: Use MLOps platforms like Weights & Biases or Neptune.ai to integrate monitoring into your synthetic data pipelines.
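Drift checks like the first bullet above can be automated with the population stability index (PSI), which compares how a reference sample and a newer sample spread across the same bins. The sketch below bins by quantiles of the reference sample (here, the synthetic data) and assumes numeric columns; a frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as significant drift, but thresholds should be set per use case. The function name is hypothetical.

```python
import numpy as np

def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between a reference sample (e.g., synthetic data) and a newer sample.

    Bin edges are quantiles of the reference sample; larger PSI means more drift.
    """
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Interior quantile edges; values beyond the reference range land in the end bins.
    inner_edges = np.quantile(reference, np.linspace(0, 1, n_bins + 1)[1:-1])
    ref_bins = np.searchsorted(inner_edges, reference, side="right")
    cur_bins = np.searchsorted(inner_edges, current, side="right")
    ref_frac = np.bincount(ref_bins, minlength=n_bins) / len(reference)
    cur_frac = np.bincount(cur_bins, minlength=n_bins) / len(current)
    # Avoid log(0) for empty bins.
    ref_frac = np.clip(ref_frac, eps, None)
    cur_frac = np.clip(cur_frac, eps, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))
```

Running this per column on a schedule, and logging the values to an MLOps tool, turns drift detection into a routine alert rather than a manual review.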

By following these steps and leveraging the right tools, generating synthetic data becomes an efficient, scalable, and impactful strategy for modern AI development.

Final Summary: Synthetic data is reshaping model training across industries by addressing privacy, scalability, and accessibility challenges. However, ethical safeguards and quality assurance are essential to avoid pitfalls. With evolving tools and trends, synthetic data is likely to remain a cornerstone of AI innovation.

FAQs

Are There Any Limitations to Synthetic Data?

Yes, limitations include:

- Quality Control: Poorly generated synthetic data can introduce noise or errors.

- Limited Realism: It may not fully capture the complexity of real-world scenarios (e.g., edge cases in medical diagnostics).

- Validation Required: Synthetic data still needs validation against real-world data to ensure effectiveness.

How Does Synthetic Data Help Reduce Bias?

Synthetic data enables the creation of balanced datasets by generating samples of underrepresented groups or scenarios. For example, a hiring AI system with bias towards male candidates can be trained using synthetic resumes equally distributed across genders to promote fairness.

Can Synthetic Data Be Used in Sensitive Domains?

Yes, synthetic data is particularly beneficial in sensitive domains like healthcare and finance. For example, it allows researchers to use anonymized yet realistic patient data for disease prediction models without risking privacy breaches.

How is synthetic data used to handle data imbalance?

Synthetic data is often used to address data imbalance by generating examples of underrepresented classes.

- Example: In fraud detection, most transactions are legitimate. Synthetic data can create numerous realistic fraudulent transactions to ensure balanced model training.

This approach can improve the model’s ability to detect minority classes, although heavy oversampling can itself cause overfitting, so performance should be checked on real data.

Can synthetic data simulate rare events?

Yes, synthetic data excels at generating rare or high-risk scenarios that are hard to observe naturally.

- Example: In insurance, synthetic datasets simulate scenarios like natural disasters or rare claims, allowing companies to stress-test models.

By generating rare events, synthetic data helps prepare models for edge cases.

What is differential privacy, and why is it important for synthetic data?

Differential privacy is a formal guarantee that limits how much an output, such as a synthetic dataset, can reveal about any single individual in the source data.

- It adds calibrated statistical noise to the generation process; the privacy budget (epsilon) controls the trade-off, with smaller values giving stronger protection and noisier data.

- Example: In healthcare, differential privacy allows researchers to study synthetic patient data with a quantifiable, reduced privacy risk, supporting compliance with laws like HIPAA or GDPR.

How does synthetic data impact AI explainability?

Synthetic data can make AI models more explainable by providing controlled, transparent datasets for training.

- Example: In fraud detection, synthetic data allows developers to label and control features influencing fraud risk, making model outputs more interpretable.

However, if synthetic data doesn’t accurately reflect real-world conditions, it can reduce trust in the model’s predictions.

What are the risks of overfitting with synthetic data?

Overfitting occurs when a model becomes too tailored to the synthetic dataset, reducing its performance on real-world data.

- This often happens if synthetic data lacks diversity or realistic patterns.

- Solution: Regularly validate models on real-world data and ensure synthetic datasets incorporate variability.

Example: A facial recognition model trained on overly uniform synthetic faces may struggle with diverse real-world appearances.

Can synthetic data be used for time-series analysis?

Yes, synthetic data is highly effective for time-series applications, such as forecasting or anomaly detection.

- Example: Simulate synthetic stock price data to train models for financial predictions.

- Tools like SDV or TSGAN (Time-Series GANs) are specifically designed for generating synthetic time-series data.
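For anomaly detection, a simple hand-built generator can combine a trend, a seasonal cycle, noise, and injected spikes whose positions are recorded as labels. All components below are illustrative assumptions (linear trend, sine seasonality with a 24-step period, spikes of 3 to 6 units), and the sketch is not how SDV or time-series GANs work internally; the function name is hypothetical.

```python
import numpy as np

def synthetic_series(n_steps, period=24, anomaly_rate=0.01, seed=0):
    """Trend + seasonality + noise, with labeled spike anomalies."""
    rng = np.random.default_rng(seed)
    t = np.arange(n_steps)
    trend = 0.01 * t
    seasonal = 2.0 * np.sin(2 * np.pi * t / period)
    noise = rng.normal(0.0, 0.3, n_steps)
    series = 10.0 + trend + seasonal + noise
    labels = rng.random(n_steps) < anomaly_rate  # where to inject anomalies
    # Spikes of random sign with magnitude between 3 and 6 units.
    spikes = rng.choice([-1.0, 1.0], n_steps) * rng.uniform(3.0, 6.0, n_steps)
    series[labels] += spikes[labels]
    return series, labels
```

Because the labels mark exactly where spikes were injected, the output can be used to measure an anomaly detector's precision and recall.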

How do startups benefit from synthetic data?

Synthetic data provides startups with a cost-effective way to develop and test AI models without requiring expensive data collection.

- Example: A startup developing a chatbot could use synthetic conversation logs to train its NLP system without accessing private customer data.

It also allows rapid prototyping, helping startups bring products to market faster.

Is synthetic data generation expensive?

The cost of generating synthetic data varies but is generally more affordable than collecting and labeling real-world data at scale.

- Open-source tools like SDV or Synthea offer cost-effective solutions.

- Cloud-based platforms like AWS or Google Cloud AI provide scalable options for businesses needing large datasets.

Example: Generating millions of annotated synthetic images for a computer vision model is far cheaper than hiring a team to manually annotate photos.

How does synthetic data ensure diversity in datasets?

Synthetic data systems are programmed to incorporate variability, ensuring diverse scenarios, features, and patterns.

- Example: For a voice recognition system, synthetic data can generate accents, dialects, or speech impediments, ensuring inclusivity in model performance.

This diversity enhances the robustness of AI models in real-world applications.

Are there regulations governing synthetic data usage?

While synthetic data sidesteps many traditional privacy concerns, it is still subject to general data protection laws like GDPR or HIPAA.

- Compliance depends on how the source data is handled and whether the synthetic data can be reverse-engineered.

- Example: A healthcare company using synthetic patient data may need to demonstrate that the synthetic records cannot be traced back to real patients in the source data.

Adhering to ethical guidelines and industry best practices is crucial for legal and ethical use.

Synthetic data continues to expand its utility across industries, solving critical challenges in AI model training while offering unparalleled scalability and privacy benefits.
