In the face of climate change, traditional decision-making frameworks often fall short. That’s where non-stationary Markov decision processes (NSMDPs) step in, offering flexible tools to tackle resource management challenges in an unpredictable world.

This article delves into the role of NSMDPs in addressing climate-related resource allocation, conservation strategies, and uncertainty in environmental dynamics.

What Are Non-Stationary Markov Decision Processes?

Key Characteristics of NSMDPs

Unlike standard Markov decision processes (MDPs), NSMDPs allow for dynamic environments where transition probabilities or rewards evolve over time.

- Standard MDPs assume that the environment’s dynamics stay constant, an assumption that often fails in climate-sensitive scenarios.

- NSMDPs account for these shifts, enabling adaptive decision-making.

For example, consider managing a water reservoir. Rainfall patterns and seasonal demands change, making a static model inadequate. NSMDPs shine in these cases.

Why the “Non-Stationary” Element Matters

Climate systems are inherently non-stationary. Shifting baselines, extreme weather events, and long-term warming trends defy simple forecasting.

- NSMDPs help decision-makers adjust policies as conditions change.

- They are particularly useful in long-term planning where trends like glacial melt or deforestation evolve gradually.

This adaptability is critical for ensuring sustainable solutions in areas such as agriculture, fisheries, and renewable energy.

Applications in Climate-Related Resource Management

Water Resource Optimization

Water scarcity is a mounting issue in many regions. With changing precipitation patterns, traditional static policies can fail to distribute resources efficiently.

- NSMDPs can dynamically prioritize water allocations based on current and predicted availability.

- These processes incorporate seasonal shifts, ensuring real-time adjustments to reservoir operations.

For instance, a city relying on a dam for water supply could use NSMDPs to balance agricultural, industrial, and household needs even as droughts intensify.

Adaptive Wildlife Conservation

Habitat degradation, migration shifts, and poaching risks make wildlife management a complex puzzle.

- NSMDPs help conservationists dynamically adjust efforts such as patrol routes or reforestation based on real-time data.

- They account for uncertainty, such as sudden natural disasters, helping keep strategies robust.

Take coral reef conservation: NSMDPs could allocate restoration efforts depending on bleaching patterns, seasonal changes, and human activity.

Challenges of Implementing NSMDPs

Data Requirements

NSMDPs thrive on accurate, timely data to predict transitions effectively. But in climate scenarios, reliable datasets can be sparse or expensive to obtain.

- Satellite data and remote sensing have improved matters, but gaps remain in underrepresented regions.

Computational Complexity

Tracking evolving probabilities and optimizing policies require significant computational power.

- Cloud computing and advancements in AI are easing this burden, yet they remain a barrier for resource-limited organizations.

These challenges make collaboration between researchers, policymakers, and technologists essential.

Innovative Models and Frameworks in Use

Deep Reinforcement Learning Meets NSMDPs

Integrating deep learning into NSMDPs is opening new doors. Neural networks can process complex patterns and improve forecasting accuracy.

- For example, reinforcement learning can optimize renewable energy grids as wind or solar output fluctuates.

Multi-Agent NSMDPs for Shared Resources

Many climate issues involve multiple stakeholders, from governments to local communities. Multi-agent NSMDPs allow for cooperative or competitive resource management.

- Fisheries are a prime example, where international waters demand shared governance frameworks.

Climate Change Mitigation Strategies with NSMDPs

Renewable Energy Integration

Renewable energy sources like wind and solar are crucial for decarbonization, but their variability poses challenges for grid stability.

- NSMDPs excel in managing this variability by adapting to changing energy outputs and demand patterns.

- For instance, they can help decide when to store surplus energy, release it during peak times, or prioritize specific energy sources.

As an example, a smart grid could use NSMDPs to balance inputs from multiple solar farms across a region, ensuring stable power supplies even during storms or seasonal dips in sunlight.

Carbon Capture and Storage (CCS)

Implementing CCS at scale requires balancing cost, location, and environmental impact. NSMDPs can guide decision-making in this complex area.

- Dynamic models evaluate where and when to prioritize carbon capture based on emissions data, storage capacity, and economic considerations.

- These tools can also help governments phase in CCS while responding to policy or market fluctuations.

This adaptability ensures that carbon reduction targets remain achievable, even as industrial patterns evolve.

Disaster Risk Management

Climate change has heightened the frequency and severity of disasters such as floods, wildfires, and hurricanes.

- NSMDPs are well suited to allocating resources like emergency personnel, supplies, or evacuation routes in response to shifting risk profiles.

- They enable cities to preemptively adapt policies based on predicted changes in vulnerability or hazard frequency.

For example, flood-prone regions can optimize dike construction, emergency response, and zoning laws dynamically as rainfall intensifies.

NSMDPs in Agricultural Resilience

Precision Farming Strategies

Agriculture faces heightened risks from changing rainfall patterns, pests, and extreme heat. NSMDPs empower farmers with data-driven, adaptable strategies.

- Farmers can adjust planting schedules, irrigation levels, and pest control methods as conditions evolve.

- These systems consider factors like long-term soil health, climate projections, and immediate weather forecasts.

By integrating IoT devices and satellite data, NSMDPs make agriculture more resilient and sustainable, minimizing yield losses while conserving resources.

Tackling Food Supply Chain Disruptions

Climate events like droughts or hurricanes often disrupt food supply chains. NSMDPs can:

- Reconfigure distribution networks in real time, ensuring minimal waste and faster recovery.

- Prioritize perishable goods, focusing on high-impact regions to minimize food insecurity.

This adaptability is especially critical in regions dependent on climate-sensitive crops like rice or coffee.

Case Studies: Success Stories with NSMDPs

Managing Deforestation in the Amazon

Researchers have deployed NSMDPs to monitor and curb illegal logging in the Amazon.

- By incorporating satellite imagery and changing risk probabilities, these models help prioritize patrols in high-risk areas.

- Over time, they dynamically adapt to new trends, such as shifting logging activities.

The result? Improved resource allocation for enforcement efforts, reducing deforestation rates significantly.

Urban Heat Management in Tokyo

Tokyo faces increasing heatwaves due to urbanization and climate change. NSMDPs have been used to:

- Optimize tree planting and reflective infrastructure projects.

- Dynamically adjust cooling strategies for vulnerable areas during peak heat periods.

This targeted approach has mitigated heat risks while ensuring cost-efficient resource use.

The Future of NSMDPs in Climate Policy

Bridging Science and Policy

NSMDPs can serve as decision-support tools for policymakers, helping them design adaptable, evidence-based climate policies.

- From emission reduction plans to renewable subsidies, these tools make policies resilient to uncertainty.

Global Collaboration Opportunities

Climate challenges often require international cooperation, especially for shared ecosystems like oceans or forests.

- NSMDPs provide a framework for coordinating cross-border strategies, ensuring equitable solutions to shared problems.

Expanding Technological Integration

With advancements in AI, IoT, and predictive modeling, NSMDPs will become even more effective.

- Future models might integrate real-time climate sensors and AI-driven insights for even faster adaptation.

Technical Structure of Non-Stationary Markov Decision Processes

Core Components of NSMDPs

At their heart, non-stationary Markov decision processes (NSMDPs) are built upon the same foundation as standard MDPs but introduce time-dependent elements. Here’s a breakdown:

- States (S): Represent the possible conditions of the system, such as reservoir levels, energy demands, or wildlife populations.

- Actions (A): The set of decisions available to a decision-maker at each state, like allocating water, dispatching resources, or investing in infrastructure.

- Transition Probabilities (P): Define how the system moves from one state to another after an action. These probabilities are time-dependent in NSMDPs, reflecting evolving environmental conditions.

- Rewards (R): Represent the benefits or costs of taking an action in a state. Rewards in NSMDPs also vary with time, capturing trends like rising costs or diminishing returns.

- Time Horizon (T): NSMDPs often work on finite or episodic time horizons, where strategies adapt as conditions change over time.

Time-Varying Transition and Reward Functions

The primary feature distinguishing NSMDPs is their time-varying nature:

- P(s’|s, a, t): Transition probabilities depend explicitly on time t.

- R(s, a, t): Rewards also change dynamically, influenced by external factors like economic trends or climate shifts.

This flexibility enables NSMDPs to model environments where uncertainty evolves unpredictably.
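As a minimal sketch of a time-varying transition function, the snippet below blends two stationary transition matrices for a three-level reservoir: one for an early climate regime and one for an assumed drier late regime. Each row of the blend is a convex combination of two probability distributions, so it remains a valid distribution at every t. The matrices, the linear drift schedule, and the names `P_EARLY`, `P_LATE`, `transition_matrix`, and `prob` are hypothetical, and the action is held fixed to keep the example short.

```python
# Hypothetical three-level reservoir: 0 = low, 1 = medium, 2 = high.
# Each row is P(s' | s) for one current state s under a single fixed action.
P_EARLY = [
    [0.5, 0.4, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.3, 0.6],
]
P_LATE = [  # assumed drier regime: more probability mass flows toward "low"
    [0.7, 0.25, 0.05],
    [0.4, 0.45, 0.15],
    [0.2, 0.4, 0.4],
]

def transition_matrix(t, horizon):
    """P_t = (1 - w_t) * P_EARLY + w_t * P_LATE, with w_t rising linearly from 0 to 1."""
    w = t / (horizon - 1) if horizon > 1 else 0.0
    return [
        [(1 - w) * early + w * late for early, late in zip(row_e, row_l)]
        for row_e, row_l in zip(P_EARLY, P_LATE)
    ]

def prob(s_next, s, t, horizon):
    """P(s' | s, t) for the fixed action."""
    return transition_matrix(t, horizon)[s][s_next]

T = 11
for t in (0, 5, 10):
    # Chance that a medium reservoir drops to low at step t.
    print(t, round(prob(0, 1, t, T), 3))
```

With T = 11, the chance that a medium reservoir falls to low rises from 0.2 at t = 0 to 0.3 at t = 5 and 0.4 at t = 10. A real model would replace the linear schedule with trends estimated from climate projections.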

Algorithms for Solving NSMDPs

Solving NSMDPs involves finding a policy (π) that specifies the best action for each state over time. Several algorithms exist:

1. Dynamic Programming Extensions

Dynamic programming techniques like value iteration and policy iteration are adapted to account for time dependence.

- Backward Induction: This method calculates the optimal policy by starting at the final time step and working backward.

- Computationally feasible for problems with small state spaces and short time horizons.
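Backward induction can be sketched in a few lines of Python. This is a minimal illustration rather than a reference implementation: it assumes small tabular models stored as nested lists, with `P[t][a][s][s2]` giving P(s’|s, a, t) and `R[t][s][a]` giving R(s, a, t), no discounting, and a terminal value of zero. The function name and the two-state toy model are hypothetical.

```python
def backward_induction(P, R, n_states, n_actions, horizon):
    """Optimal finite-horizon values and policy for a non-stationary MDP.

    P[t][a][s][s2] = P(s2 | s, a, t) and R[t][s][a] = R(s, a, t); terminal value is 0.
    Returns (V, policy): V[t][s] is the optimal value-to-go, policy[t][s] the best action.
    """
    V = [[0.0] * n_states for _ in range(horizon + 1)]  # V[horizon] stays 0
    policy = [[0] * n_states for _ in range(horizon)]
    for t in reversed(range(horizon)):  # start at the final step, work backward
        for s in range(n_states):
            best_a, best_q = 0, float("-inf")
            for a in range(n_actions):
                # Bellman backup with the time-t model and the step-(t + 1) values.
                q = R[t][s][a] + sum(
                    P[t][a][s][s2] * V[t + 1][s2] for s2 in range(n_states)
                )
                if q > best_q:
                    best_a, best_q = a, q
            V[t][s], policy[t][s] = best_q, best_a
    return V, policy

# Hypothetical toy model: states 0 = dry, 1 = wet; actions 0 = release, 1 = conserve.
RELEASE = [[1.0, 0.0], [1.0, 0.0]]   # releasing leaves the reservoir dry
CONSERVE = [[0.0, 1.0], [0.0, 1.0]]  # conserving leaves it wet
P = [[RELEASE, CONSERVE] for _ in range(2)]
R = [
    [[1.0, 0.0], [2.0, 0.0]],  # t = 0, indexed R[t][s][a]
    [[1.0, 0.0], [5.0, 0.0]],  # t = 1: releasing from a wet reservoir is worth more later
]
V, policy = backward_induction(P, R, n_states=2, n_actions=2, horizon=2)
print(policy)  # [[1, 1], [0, 0]]
```

Because the toy reward for releasing rises at the last step, the optimal policy conserves at t = 0 and releases at t = 1, a switch that a single stationary policy could not express. Each step costs on the order of |S|²|A| operations, so the total grows linearly with the horizon, which is why the method suits small problems.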

2. Reinforcement Learning (RL) Approaches

When transition probabilities or rewards are not explicitly known, RL algorithms are used:

- Q-Learning with Time Dependence: Adapts traditional Q-learning to incorporate time as an additional state variable.

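- Temporal Difference (TD) Learning: Updates value estimates after each observed transition by bootstrapping from the current estimate of future value, which lets learning track evolving rewards without waiting for complete episodes or running exhaustive simulations.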
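The sketch below shows tabular Q-learning with time folded into the state. Q-learning is itself a temporal-difference method, so the update line also illustrates the TD idea of bootstrapping from the next step’s estimate. It assumes a simulator `step(t, s, a, rng)` that returns a sampled reward and next state; the function names, hyperparameters, and toy reward schedule are hypothetical.

```python
import random

def q_learning_time_indexed(step, n_states, n_actions, horizon,
                            episodes=5000, alpha=0.1, epsilon=0.1, seed=0):
    """Tabular Q-learning where the time step t is part of the state.

    `step(t, s, a, rng)` samples (reward, next_state); no explicit P or R is needed.
    """
    rng = random.Random(seed)
    # Q[t][s][a]: one table per time step, so values can differ as conditions drift.
    Q = [[[0.0] * n_actions for _ in range(n_states)] for _ in range(horizon)]
    for _ in range(episodes):
        s = rng.randrange(n_states)
        for t in range(horizon):
            # Epsilon-greedy exploration.
            if rng.random() < epsilon:
                a = rng.randrange(n_actions)
            else:
                a = max(range(n_actions), key=lambda x: Q[t][s][x])
            r, s_next = step(t, s, a, rng)
            # TD target: bootstrap from step t + 1, or 0 after the final step.
            future = max(Q[t + 1][s_next]) if t + 1 < horizon else 0.0
            Q[t][s][a] += alpha * (r + future - Q[t][s][a])
            s = s_next
    return Q

def toy_step(t, s, a, rng):
    """Hypothetical dynamics: action 1 pays off in the first half, action 0 afterwards."""
    best = 1 if t < 2 else 0
    return (1.0 if a == best else 0.0), rng.randrange(2)

Q = q_learning_time_indexed(toy_step, n_states=2, n_actions=2, horizon=4)
greedy = [[max(range(2), key=lambda a: Q[t][s][a]) for s in range(2)] for t in range(4)]
print(greedy)
```

The learned greedy policy switches actions halfway through the horizon, something a single Q table without t could not represent.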

3. Approximate Methods for Large State Spaces

NSMDPs often face scalability issues in real-world applications. To address this, practitioners turn to approximation techniques such as:

- Monte Carlo Methods: Simulate random scenarios to estimate policies.

- Neural Network Function Approximations: Learn compact representations of value functions or policies in high-dimensional state spaces.

These approaches leverage modern AI tools, enabling NSMDPs to operate in real-time settings.
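The Monte Carlo idea from the list above can be sketched as averaging total reward over many simulated rollouts. This minimal version assumes a simulator `step(t, s, a, rng)` that samples a reward and next state, plus a time-indexed `policy(t, s)`; the function names and the declining-rainfall toy model are hypothetical.

```python
import random
import statistics

def monte_carlo_value(step, policy, start_state, horizon, n_rollouts=2000, seed=0):
    """Estimate the expected total reward of `policy` from `start_state` by simulation.

    Returns (mean, standard_error) over `n_rollouts` independent rollouts.
    """
    rng = random.Random(seed)
    returns = []
    for _ in range(n_rollouts):
        s, total = start_state, 0.0
        for t in range(horizon):
            r, s = step(t, s, policy(t, s), rng)
            total += r
        returns.append(total)
    mean = statistics.fmean(returns)
    stderr = statistics.stdev(returns) / len(returns) ** 0.5
    return mean, stderr

def declining_rain_step(t, s, a, rng):
    """Hypothetical: a wet period (reward 1) becomes 10 percentage points less likely each step."""
    wet = rng.random() < 0.9 - 0.1 * t
    return (1.0 if wet else 0.0), int(wet)

def always_hold(t, s):
    return 0

mean, stderr = monte_carlo_value(declining_rain_step, always_hold, start_state=1, horizon=5)
print(f"estimated value {mean:.2f} +/- {stderr:.2f}")
```

Here the exact answer is 0.9 + 0.8 + 0.7 + 0.6 + 0.5 = 3.5, so the estimate should land within a few standard errors of it. The standard error shrinks with the square root of the number of rollouts, which is the main lever for trading accuracy against compute.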

Practical Implementation of NSMDPs

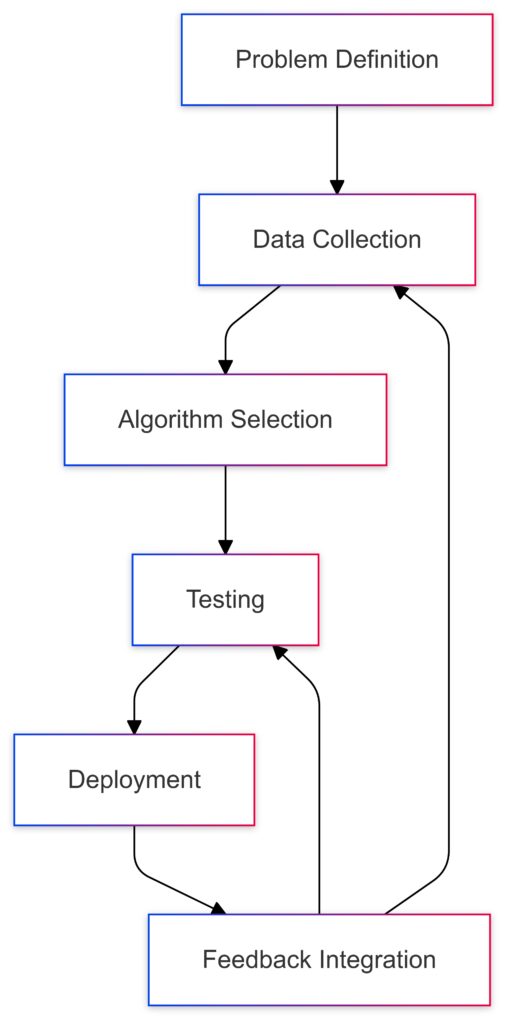

Step 1: Define the Problem and Model

The first step in applying NSMDPs is identifying the states, actions, and time-varying factors:

- States: Enumerate measurable variables (e.g., soil moisture, reservoir level).

- Actions: Define controllable interventions (e.g., irrigation rates, patrol schedules).

- Temporal Dynamics: Quantify how climate-related changes affect transitions and rewards.

For example, managing a drought-stricken region might involve:

- States: Water availability categories (low, medium, high).

- Actions: Increase water restrictions, import supplies, or reallocate resources.

- Transition Dynamics: Probability of rainfall changing supply levels based on seasonal forecasts.
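The drought example above can be written down as a small model. This is one possible encoding, not a prescribed one: the monthly rain probabilities stand in for a seasonal forecast and are invented, and the rule that restrictions or imports hold the supply level in a dry month (while reallocation lets it fall) is an assumption made for illustration. Rain raises supply one level; a dry month lowers it one level unless the action holds it.

```python
from enum import IntEnum

class Supply(IntEnum):
    LOW = 0
    MEDIUM = 1
    HIGH = 2

class Action(IntEnum):
    RESTRICT = 0    # increase water restrictions
    IMPORT = 1      # import supplies
    REALLOCATE = 2  # reallocate resources between users

# Hypothetical seasonal forecast: probability of meaningful rainfall in each month (Jan..Dec).
RAIN_PROB = [0.60, 0.55, 0.45, 0.30, 0.15, 0.05, 0.02, 0.02, 0.08, 0.20, 0.40, 0.55]

def transitions(s, a, t):
    """Return {next_state: probability}, i.e. P(s' | s, a, t), with t counted in months."""
    p_rain = RAIN_PROB[t % 12]
    wet = Supply(min(s + 1, Supply.HIGH))  # rain raises supply one level
    if a in (Action.RESTRICT, Action.IMPORT):
        dry = Supply(s)  # assumed: these actions hold the level in a dry month
    else:
        dry = Supply(max(s - 1, Supply.LOW))
    probs = {}
    for nxt, p in ((wet, p_rain), (dry, 1.0 - p_rain)):
        probs[nxt] = probs.get(nxt, 0.0) + p  # merge if wet and dry coincide
    return probs

print(transitions(Supply.MEDIUM, Action.REALLOCATE, 6))
```

Under these assumed numbers, reallocating from medium supply in July gives about a 2% chance of rising to high and a 98% chance of falling to low. Because `t % 12` selects the month, the same function covers a multi-year horizon.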

Step 2: Collect and Process Data

Reliable time-series data is crucial for calibrating the model:

- Historical trends (e.g., rainfall, temperature, migration patterns).

- Real-time sensor data (e.g., IoT-enabled weather stations, remote monitoring systems).

- Predictive climate models for future projections.

Data preprocessing, including normalization and noise filtering, ensures that the system captures accurate, actionable insights.
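As a minimal sketch of that preprocessing, the snippet below smooths a series with a trailing moving average (a simple stand-in for more sophisticated noise filters) and then z-score normalizes it. The readings and function names are hypothetical.

```python
import statistics

def moving_average(series, window=3):
    """Trailing moving average to damp sensor noise; the first points use a shorter window."""
    out = []
    for i in range(len(series)):
        chunk = series[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out

def zscore(series, mu=None, sigma=None):
    """Scale to zero mean and unit standard deviation.

    Pass mu/sigma from the calibration period to scale new data consistently.
    """
    mu = statistics.fmean(series) if mu is None else mu
    sigma = statistics.stdev(series) if sigma is None else sigma
    return [(x - mu) / sigma for x in series]

# Hypothetical daily reservoir readings (percent of capacity) with one noisy spike.
readings = [52.0, 51.5, 51.0, 58.0, 50.0, 49.5, 49.0]
smoothed = moving_average(readings, window=3)
normalized = zscore(smoothed)
print([round(x, 2) for x in normalized])
```

In practice the normalization statistics should come from the calibration period and be reused on live data (via the `mu` and `sigma` arguments), so that new inputs are scaled the same way the model was trained.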

Step 3: Select or Develop an Algorithm

Based on problem complexity, choose a suitable solution approach:

- For small-scale problems: Use dynamic programming.

- For complex, large-scale systems: Implement RL or neural-based approximations.

- Integrate open-source libraries like TensorFlow or PyTorch for advanced models.

Customizing the algorithm to incorporate domain-specific constraints (e.g., legal limits on emissions) ensures practicality.

Step 4: Test and Validate the Model

Testing involves running the model on simulated or historical data:

- Validation Metrics: Evaluate performance using metrics like cumulative rewards, policy robustness, or resource utilization efficiency.

- Stress Testing: Analyze how well the model performs under extreme conditions, such as severe droughts or sudden wildlife migrations.
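One way to pair a validation metric with a stress test is to compute a policy’s expected cumulative reward exactly under the nominal model, then again under a drought-stressed model, and compare. The sketch below does this by backward recursion over a fixed policy; the two-state shortage/adequate model, its probabilities, and the function names are hypothetical.

```python
def evaluate_policy(P, R, policy, horizon, n_states):
    """Expected total reward of a time-indexed policy, for every starting state at t = 0.

    P(t, s, a) -> {s_next: prob}, R(t, s, a) -> float, policy(t, s) -> action.
    """
    V = [0.0] * n_states  # value after the final step
    for t in reversed(range(horizon)):
        # The right-hand side uses the previous V (the values from step t + 1).
        V = [
            R(t, s, policy(t, s))
            + sum(p * V[s2] for s2, p in P(t, s, policy(t, s)).items())
            for s in range(n_states)
        ]
    return V

def make_P(stay_adequate, recover):
    """Two states: 0 = shortage, 1 = adequate supply."""
    def P(t, s, a):
        if s == 1:
            return {1: stay_adequate, 0: 1.0 - stay_adequate}
        return {1: recover, 0: 1.0 - recover}
    return P

def reward(t, s, a):
    return 1.0 if s == 1 else 0.0  # one point per month of adequate supply

def policy(t, s):
    return 0  # a single, fixed operating rule

nominal = evaluate_policy(make_P(0.9, 0.5), reward, policy, horizon=12, n_states=2)
drought = evaluate_policy(make_P(0.6, 0.2), reward, policy, horizon=12, n_states=2)
print(f"start adequate: nominal {nominal[1]:.2f}, drought {drought[1]:.2f}")
```

The gap between the two values is a simple robustness measure: a policy whose value collapses under the stressed model may need revisiting even if it scores well nominally.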

Step 5: Deploy and Monitor

Implement the model in real-world decision-making systems:

- Integrate it into decision-support tools for resource managers.

- Use adaptive feedback loops to update policies as new data becomes available.

Monitoring ensures that the NSMDP evolves with changing environmental and socio-economic conditions.
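A simple adaptive feedback loop re-estimates transition probabilities from recent observations and re-solves for the policy on a schedule. The estimator below is a hypothetical helper, not a library class: it keeps only the last `window` transitions, so older behavior is forgotten as the system drifts. The window length trades responsiveness against noise.

```python
from collections import Counter, deque

class SlidingWindowEstimator:
    """Estimate P(s' | s, a) from the most recent `window` observed transitions only."""

    def __init__(self, window):
        # Oldest transitions drop out automatically once the deque is full.
        self.history = deque(maxlen=window)

    def observe(self, s, a, s_next):
        self.history.append((s, a, s_next))

    def probs(self, s, a):
        """Empirical next-state distribution for (s, a); empty if (s, a) is unseen."""
        counts = Counter(nxt for (s0, a0, nxt) in self.history if (s0, a0) == (s, a))
        total = sum(counts.values())
        return {nxt: c / total for nxt, c in counts.items()} if total else {}

# Hypothetical stream: from state 0 under action 0, outcomes shift from 1 to 0 over time.
est = SlidingWindowEstimator(window=5)
for s_next in [1, 1, 1, 0, 0, 0, 0]:
    est.observe(0, 0, s_next)
print(est.probs(0, 0))
```

With a window of five, the estimate reflects the recent shift (20% to state 1, 80% to state 0), whereas counting the full history would still report 3/7 to state 1. At each planning cycle, the updated probabilities would be fed back into the solver.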

Tools and Technologies for NSMDPs

Software Frameworks

Several frameworks exist for building and solving NSMDPs:

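- OpenAI Gym (now maintained as Gymnasium): For prototyping RL-based NSMDPs.

- MDPtoolbox (R and Python): Implementations of classic MDP algorithms such as value iteration, policy iteration, and finite-horizon backward induction.

- DeepMind’s RL frameworks (e.g., Acme): For integrating deep learning into dynamic decision models.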

Computational Resources

Handling large-scale NSMDPs requires:

- High-Performance Computing (HPC): For simulations of complex systems.

- Cloud Platforms (AWS, Google Cloud): Enable distributed processing and real-time implementation.

Data Sources

Leverage public and private datasets:

- NASA Earth Science Data: Climate observations and projections.

- FAO Databases: Agricultural and water resources data.

- IoT Devices: Collect local, real-time environmental data.

Advanced Techniques in NSMDPs: Addressing Complexity and Uncertainty

Dealing with High Dimensionality

In real-world applications, the state and action spaces of NSMDPs can grow exponentially with the number of variables being tracked. Addressing this complexity requires innovative techniques:

State Aggregation

Group similar states into clusters to reduce the size of the state space.

- For instance, in water management, aggregate states by ranges of reservoir levels instead of using precise measurements.

- This reduces computational load while retaining sufficient accuracy.
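State aggregation for the reservoir example can be as simple as binning a continuous reading. The cut-offs below (33% and 66% of capacity) and the function name are assumptions for illustration.

```python
from bisect import bisect_right

LEVEL_EDGES = (33.0, 66.0)  # assumed bin edges, in percent of capacity
LABELS = ("low", "moderate", "high")

def aggregate_level(level_pct, edges=LEVEL_EDGES):
    """Map a precise reservoir level to a coarse state index (0 = low, 1 = moderate, 2 = high)."""
    return bisect_right(edges, level_pct)

for reading in (12.4, 33.0, 58.9, 71.2):
    print(reading, LABELS[aggregate_level(reading)])
```

A planner over T time steps then needs 3 × T value entries instead of one per distinct sensor reading. The price is that levels within a bin are treated as interchangeable, so bin edges should sit near thresholds that actually change decisions.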

Function Approximation

Leverage machine learning models to approximate value functions or policies:

- Linear Approximators: Suitable for simpler problems where the value function is roughly linear in well-chosen state features.

- Deep Neural Networks: Handle highly nonlinear, high-dimensional problems.

Deep Q-learning, for example, can learn to approximate optimal policies without explicitly modeling all state transitions.
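Deep Q-learning replaces a lookup table with a neural network, which is too large to show compactly here. As a smaller stand-in for the same idea, the sketch below uses a linear value function over hand-picked features and updates it with semi-gradient TD(0). The features (a bias, the normalized reservoir level, and the fraction of the horizon elapsed) and the function names are hypothetical. Including elapsed time as a feature is one simple way to let the approximation vary across a non-stationary horizon.

```python
def features(level, t, horizon):
    """Hypothetical features: bias, reservoir level in [0, 1], fraction of horizon elapsed."""
    return [1.0, level, t / horizon]

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def td0_update(w, phi, reward, phi_next, alpha=0.05, gamma=1.0, terminal=False):
    """One semi-gradient TD(0) step for the linear estimate V(x) = w . phi(x)."""
    v_next = 0.0 if terminal else dot(w, phi_next)
    delta = reward + gamma * v_next - dot(w, phi)  # TD error
    # Semi-gradient: differentiate only the current estimate, not the bootstrapped target.
    return [wi + alpha * delta * xi for wi, xi in zip(w, phi)]

w = [0.0, 0.0, 0.0]
phi = features(level=0.5, t=0, horizon=10)
phi_next = features(level=0.4, t=1, horizon=10)
w = td0_update(w, phi, reward=1.0, phi_next=phi_next, alpha=0.1)
print(w)
```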

Tackling Uncertainty in Transition Dynamics

Climate systems often involve unknown or partially known dynamics, complicating transition modeling. Strategies to address this include:

Robust Optimization

Robust NSMDPs optimize policies against the worst case within a set of plausible transition and reward models.

- This approach ensures that policies are resilient to variability, making it ideal for high-stakes decisions.
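A minimal sketch of robust backward induction, assuming the uncertainty is represented by a small finite set of candidate transition models (for example, optimistic and pessimistic climate scenarios). At each step the policy maximizes the worst-case value, and nature may pick a different model for each state, action, and step (the common rectangularity assumption that keeps the problem tractable). The models, rewards, and names are hypothetical.

```python
def robust_backward_induction(models, R, n_states, n_actions, horizon):
    """Max-min backward induction over a finite set of transition models.

    models: list of functions P(t, s, a) -> {s_next: prob}; R(t, s, a) -> float.
    Returns (V0, policy) with policy[t][s] the robust action at step t.
    """
    V = [0.0] * n_states  # terminal values
    policy = [None] * horizon

    def worst_case_q(t, s, a, V_next):
        # Nature picks the model with the lowest expected continuation value.
        return R(t, s, a) + min(
            sum(p * V_next[s2] for s2, p in P(t, s, a).items()) for P in models
        )

    for t in reversed(range(horizon)):
        policy[t] = [
            max(range(n_actions), key=lambda a: worst_case_q(t, s, a, V))
            for s in range(n_states)
        ]
        V = [worst_case_q(t, s, policy[t][s], V) for s in range(n_states)]
    return V, policy

# States: 0 = dry, 1 = wet. Actions: 0 = heavy use, 1 = conserve.
def optimistic(t, s, a):
    return {1: 1.0} if a == 0 else {s: 1.0}  # heavy use keeps the system wet

def pessimistic(t, s, a):
    return {0: 1.0} if a == 0 else {s: 1.0}  # heavy use tips the system into drought

def reward(t, s, a):
    return (2.0 if a == 0 else 1.0) if s == 1 else 0.0

V, policy = robust_backward_induction([optimistic, pessimistic], reward, 2, 2, horizon=2)
print(V, policy)
```

Under the optimistic model alone, heavy use is optimal at both steps. Once the pessimistic scenario is included, the robust policy conserves at t = 0 in the wet state and accepts a lower guaranteed value (3 instead of 4) in exchange for protection against the dry outcome.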

Bayesian Approaches

Incorporate Bayesian methods to update models as new data becomes available.

- Transition probabilities are treated as distributions instead of fixed values, allowing for more adaptive decision-making.

- Example: Predicting migration routes for wildlife, where new tracking data can refine movement probabilities over time.
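The Dirichlet distribution is the conjugate prior for categorical outcomes, so a Bayesian update of transition probabilities reduces to adding counts. The sketch below keeps an independent Dirichlet prior for each (state, action) pair and reports the posterior mean; the class name, the uniform prior of one pseudo-count, and the corridor example are hypothetical.

```python
class DirichletTransitionModel:
    """Posterior over P(s' | s, a) with an independent Dirichlet prior for each (s, a) pair."""

    def __init__(self, n_states, prior=1.0):
        self.n_states = n_states
        self.prior = prior  # pseudo-count added to every next state before any data
        self.counts = {}    # (s, a) -> list of Dirichlet parameters

    def update(self, s, a, s_next):
        """Conjugate update: one observed transition adds one count."""
        row = self.counts.setdefault((s, a), [self.prior] * self.n_states)
        row[s_next] += 1

    def posterior_mean(self, s, a):
        """Expected transition probabilities under the current posterior."""
        row = self.counts.get((s, a), [self.prior] * self.n_states)
        total = sum(row)
        return [c / total for c in row]

# Hypothetical: an animal in zone 0 can move into corridor 0, 1, or 2.
model = DirichletTransitionModel(n_states=3)
for corridor in [2, 2, 2, 2]:  # four new tracking fixes, all in corridor 2
    model.update(0, 0, corridor)
print(model.posterior_mean(0, 0))
```

After four fixes in corridor 2, the estimate moves from uniform (1/3 each) to 1/7, 1/7, and 5/7, and it keeps sharpening as more tracking data arrive. Down-weighting older counts is one way to let the same idea follow non-stationary movement patterns.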

Simulation-Based Models

For highly uncertain systems, simulate a wide range of possible scenarios.

- Use tools like Monte Carlo Simulations to explore potential outcomes.

- Evaluate policies across multiple simulated trajectories to identify the most robust strategies.
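To pick the most robust strategy rather than the one with the best average, one option is to rank policies by a low percentile of simulated total reward. The sketch below uses the 10th percentile; the 12-month scenario generator, the two candidate policies, and their payoffs are hypothetical.

```python
import random
import statistics

def simulate(policy, rng, months=12, p_dry=0.3):
    """Hypothetical scenario: total reward over a year in which each month is dry with prob. p_dry."""
    return sum(policy(rng.random() < p_dry) for _ in range(months))

def aggressive(dry):
    return -3.0 if dry else 4.0  # large gains in wet months, losses in dry ones

def cautious(dry):
    return 1.5  # modest payoff whatever the weather

def summarize(policy, n_runs=1000, q=0.10, seed=0):
    """Mean and a simple index-based q-quantile of total reward over simulated trajectories."""
    rng = random.Random(seed)
    totals = sorted(simulate(policy, rng) for _ in range(n_runs))
    return statistics.fmean(totals), totals[int(q * (n_runs - 1))]

candidates = {"aggressive": aggressive, "cautious": cautious}
for name, pol in candidates.items():
    mean, low = summarize(pol)
    print(f"{name}: mean {mean:.1f}, 10th percentile {low:.1f}")

# Rank by the bad-case outcome rather than the average.
most_robust = max(candidates, key=lambda name: summarize(candidates[name])[1])
```

The aggressive policy wins on average but has a much worse bad-case year, so ranking by the 10th percentile selects the cautious one.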

Multi-Agent NSMDPs: Collaboration in Shared Resource Management

Understanding Multi-Agent Dynamics

Many climate-related challenges involve multiple stakeholders with conflicting objectives, such as:

- Countries managing transboundary rivers.

- Communities sharing grazing lands or fisheries.

- Organizations coordinating renewable energy grids.

Multi-agent NSMDPs extend traditional models to include cooperative or competitive interactions among agents.

Cooperative Models

In cooperative scenarios, agents work together to maximize a shared reward function.

- Example: Regional water-sharing agreements where upstream and downstream users optimize for joint benefit.

Competitive Models

In competitive settings, each agent seeks to maximize its own reward, leading to potential conflicts.

- Example: Overfishing in international waters, where countries compete for fish stocks.

Solving Multi-Agent NSMDPs

Game Theory Integration

Incorporate concepts from game theory to resolve conflicts:

- Nash Equilibria: Identify stable policies where no agent can unilaterally improve its outcome.

- Pareto Efficiency: Seek outcomes in which no agent can be made better off without making another agent worse off.
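For a two-country version of the fisheries example, both concepts can be checked by brute force over a payoff table. The payoffs below are hypothetical, chosen to have the classic commons-dilemma structure, and the function names are illustrative.

```python
from itertools import product

ACTIONS = ("restrain", "overfish")

# Hypothetical payoffs (country A, country B) for each pair of harvest choices.
PAYOFF = {
    ("restrain", "restrain"): (3, 3),
    ("restrain", "overfish"): (0, 4),
    ("overfish", "restrain"): (4, 0),
    ("overfish", "overfish"): (1, 1),
}

def pure_nash_equilibria(payoff):
    """Profiles where neither player gains by unilaterally switching action."""
    result = []
    for a, b in product(ACTIONS, repeat=2):
        ua, ub = payoff[(a, b)]
        a_ok = all(payoff[(alt, b)][0] <= ua for alt in ACTIONS)
        b_ok = all(payoff[(a, alt)][1] <= ub for alt in ACTIONS)
        if a_ok and b_ok:
            result.append((a, b))
    return result

def pareto_efficient(payoff):
    """Profiles not dominated by another that is at least as good for both and better for one."""
    def dominates(u, v):
        return all(x >= y for x, y in zip(u, v)) and any(x > y for x, y in zip(u, v))
    return [p for p, u in payoff.items()
            if not any(dominates(v, u) for v in payoff.values())]

print(pure_nash_equilibria(PAYOFF))
print(pareto_efficient(PAYOFF))
```

The only pure Nash equilibrium is mutual overfishing, yet it is not Pareto efficient, since mutual restraint leaves both countries better off. In a multi-agent NSMDP the payoffs would also shift over time as fish stocks respond to past harvests.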

Decentralized Solutions

For large-scale problems, decentralized approaches let agents make decisions independently while considering shared dynamics.

- Example: Local communities adjusting water use autonomously based on shared seasonal forecasts.

Real-World Examples of NSMDPs in Action

Optimizing Renewable Energy Grids in Germany

Germany’s transition to renewable energy involves balancing solar, wind, and traditional power sources.

- Challenge: Managing grid stability as weather patterns shift dynamically.

- Solution: NSMDPs use time-varying wind and solar output data to optimize when and where energy is stored or distributed.

- Outcome: Reduced reliance on fossil fuels while ensuring reliable electricity access.

Protecting African Elephant Migration Corridors

NSMDPs have been applied to safeguard migration routes for African elephants amid increasing human-wildlife conflict.

- Challenge: Habitat encroachment and unpredictable migration patterns.

- Solution: Dynamically allocate patrols and resources based on updated migration and poaching risk data.

- Outcome: Improved resource efficiency and enhanced corridor protection.

The Future of NSMDPs in Resource Management

Integration with Advanced Technologies

IoT and Real-Time Data Streams

The rise of Internet of Things (IoT) devices enables continuous data collection. For example:

- Soil sensors feeding real-time data into irrigation models.

- Wildlife tracking collars updating migration patterns in NSMDPs.

Quantum Computing

Quantum algorithms are being explored as a way to speed up large-scale optimization, including NSMDPs with vast state spaces, though practical advantages have yet to be demonstrated.

Expansion into Cross-Sectoral Applications

NSMDPs are poised to revolutionize decision-making in:

- Urban Planning: Dynamically adapt infrastructure investments to shifting population and climate patterns.

- Health Systems: Optimize responses to climate-driven disease outbreaks by managing resources like vaccines or medical personnel.

Case Study: Dynamic Water Resource Management in California Using NSMDPs

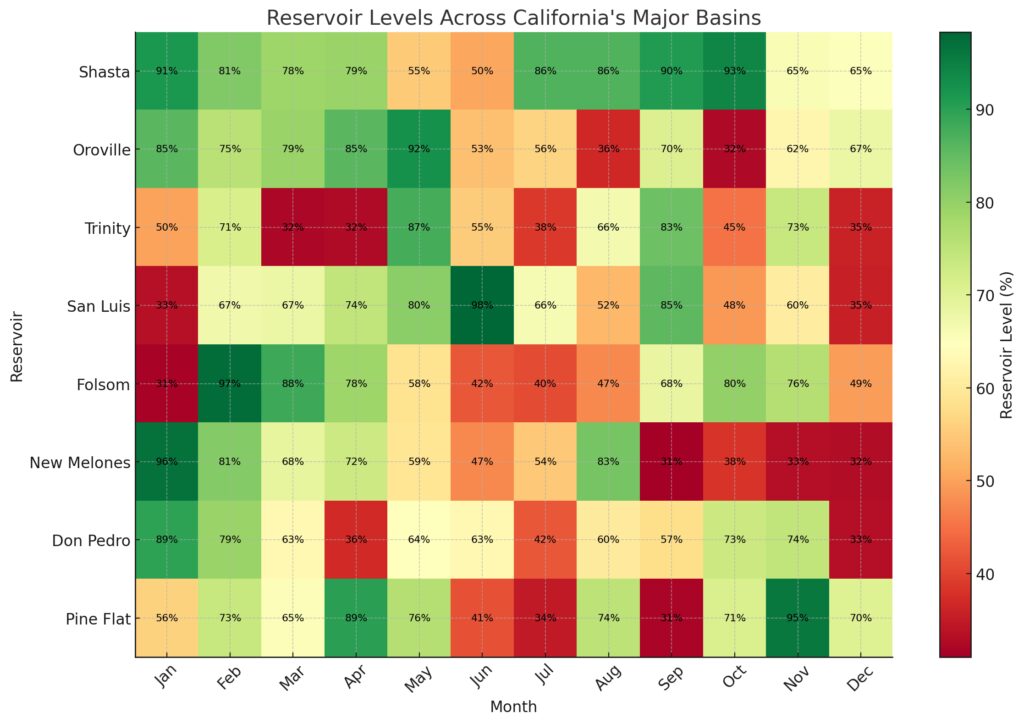

California faces recurring water crises due to droughts, increasing demand, and climate variability. Managing these challenges requires adaptive policies that consider long-term trends and real-time conditions.

Problem Overview

Challenges

- Variable Supply: Climate change has altered precipitation patterns, resulting in shorter, more intense rainy seasons.

- Growing Demand: Population growth and agricultural expansion strain existing resources.

- Competing Stakeholders: Farmers, cities, and environmental groups often have conflicting water needs.

Why NSMDPs?

Traditional static allocation methods cannot respond to these dynamic, time-sensitive issues. NSMDPs enable adaptive decision-making by incorporating:

- Seasonal changes.

- Evolving stakeholder priorities.

- Long-term environmental projections.

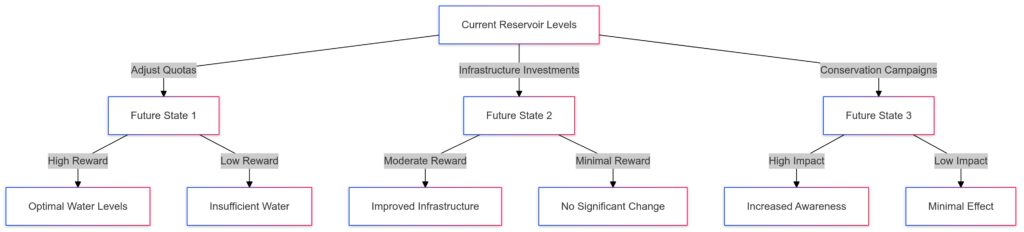

NSMDP Model Design

States (S)

Define states based on water availability in major reservoirs and regional demand levels:

- Reservoir levels: Low, moderate, high.

- Demand levels for each sector: urban, agricultural, and environmental conservation.

Actions (A)

Possible actions include:

- Adjusting allocations: Change water quotas for each sector.

- Investing in infrastructure: Develop desalination plants, improve irrigation efficiency, or expand storage capacity.

- Conservation campaigns: Initiate public water-saving programs during critical periods.

Transition Probabilities (P)

Transition dynamics reflect factors such as:

- Rainfall predictions based on seasonal climate models.

- Urban demand growth rates from population data.

- Agricultural water needs, influenced by crop cycles and temperature forecasts.

Rewards (R)

Rewards capture the benefits or costs associated with each decision:

- Urban supply: High rewards for meeting minimum city water needs.

- Agriculture: Rewards linked to crop yield and economic output.

- Environment: Penalties for failing to maintain river flow rates critical to ecosystems.
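The reward components above are described qualitatively, so the function below is a hypothetical sketch of how they could be combined for one monthly decision. Every constant and name is an assumption. In practice the relative weights would be set with stakeholders, since they encode how urban, agricultural, and environmental needs are traded off.

```python
# All constants are hypothetical placeholders, not values from the case study.
URBAN_BONUS = 100.0      # reward for meeting the minimum city supply
AG_VALUE_PER_UNIT = 2.0  # economic value per unit of water delivered to agriculture
ENV_PENALTY = 150.0      # penalty for breaching the minimum river flow

def monthly_reward(urban_delivered, urban_minimum, ag_delivered, river_flow, min_river_flow):
    """Combine the urban, agricultural, and environmental terms into one scalar reward."""
    reward = URBAN_BONUS if urban_delivered >= urban_minimum else 0.0
    reward += AG_VALUE_PER_UNIT * ag_delivered
    if river_flow < min_river_flow:
        reward -= ENV_PENALTY
    return reward

print(monthly_reward(urban_delivered=10, urban_minimum=8, ag_delivered=5,
                     river_flow=3, min_river_flow=2))
```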

Time Horizon (T)

The model operates over a 10-year planning horizon, with decisions evaluated monthly to account for seasonal variations.

Implementation

Data Sources

- Historical Reservoir Data: Insights from California’s Department of Water Resources.

- Satellite Imagery: Real-time monitoring of snowpack, a major water source.

- Climate Projections: Downscaled models from NOAA for precipitation and temperature trends.

Algorithm

The problem is solved using temporal difference (TD) reinforcement learning with neural network approximations:

- Model-Free Approach: Enables learning optimal policies without requiring exact knowledge of transition probabilities.

- Scalable Framework: Handles the large state-action space efficiently.

Computational Tools

- TensorFlow: For neural network-based policy approximation.

- AWS Cloud: Provides the computational power to simulate various scenarios.

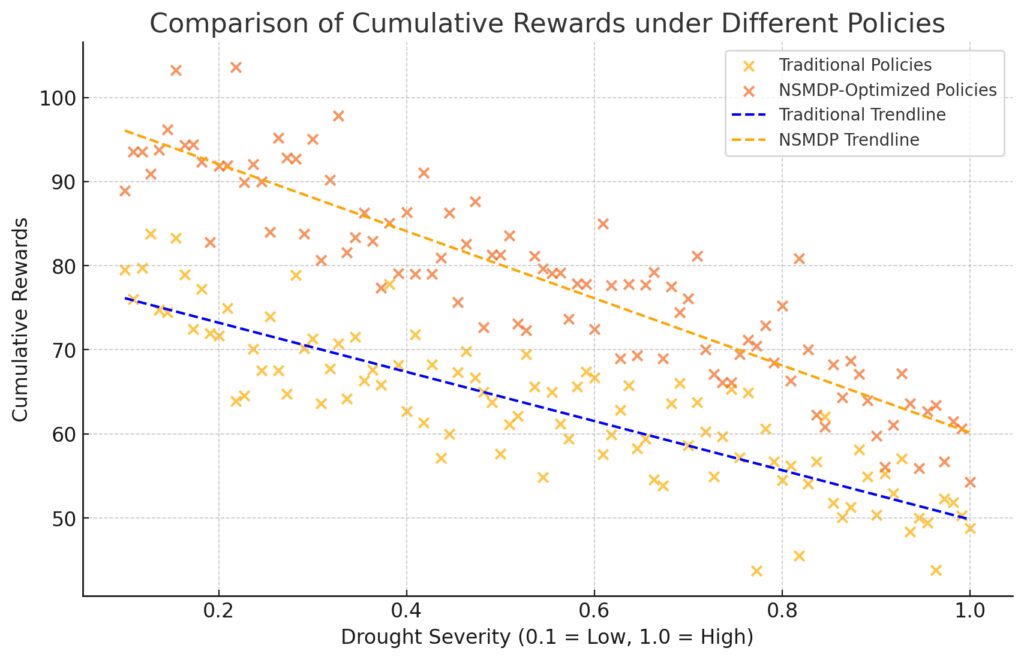

Results

Key Findings

- NSMDPs prioritized urban water needs during peak drought months while gradually increasing agricultural allocations as conditions improved.

- Investments in irrigation efficiency for farmers led to 20% water savings, enabling greater resilience.

- Environmental conservation requirements, like maintaining minimum river flows, were consistently met.

Policy Adjustments

- Conservation campaigns were triggered two months earlier than in prior years based on real-time data, leading to 30% higher participation.

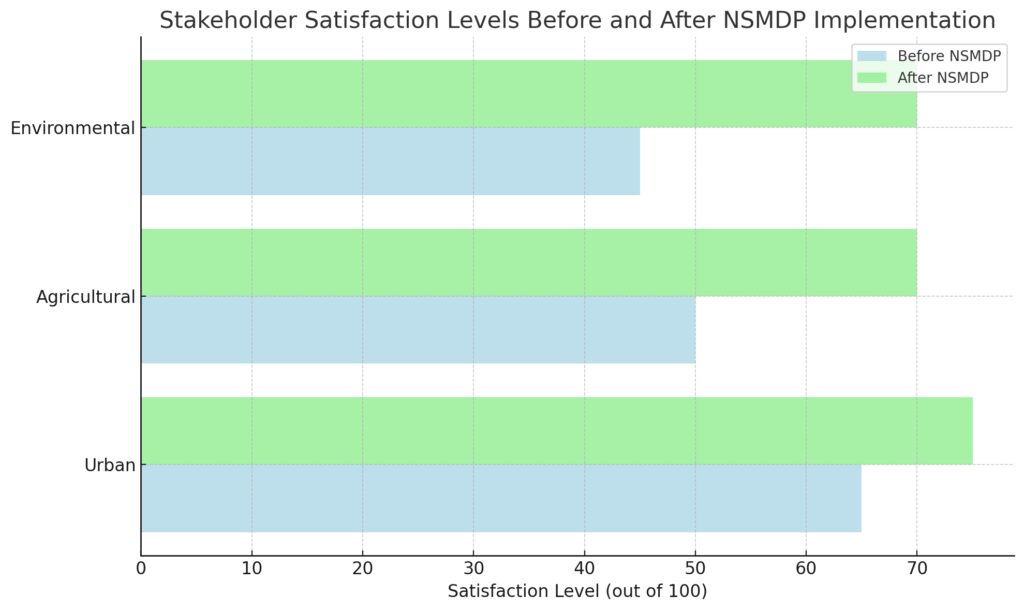

- Dynamic allocation reduced conflict between stakeholders by demonstrating transparency and fairness in water distribution.

Impact

Improved Resource Use

- Water storage levels were maintained at optimal ranges for 8 out of 10 years, even in drought scenarios.

- Urban water shortages dropped by 50%, preventing costly emergency measures.

Stakeholder Engagement

Transparent, data-driven decisions boosted public trust and cooperation among competing users.

Lessons Learned

Success Factors

- Real-Time Data Integration: Timely updates enhanced decision accuracy.

- Stakeholder Collaboration: Including priorities from all sectors ensured buy-in.

Challenges

- Data Gaps: Missing data for remote areas required imputation, reducing accuracy.

- Computational Costs: Scaling models for real-time decisions posed budget constraints.

Future Directions

- Incorporating Climate Shocks: Enhancing the model to account for extreme events like flash floods or heatwaves.

- Expanding Multi-Agent Capabilities: Including federal, state, and municipal stakeholders in a shared framework for better coordination.

- AI Integration: Leveraging AI-driven climate models for more accurate transition probability updates.

FAQs

Can NSMDPs work with incomplete data?

Yes, NSMDPs can incorporate Bayesian approaches or robust optimization to handle uncertainty and incomplete data. Reinforcement learning methods can also learn optimal policies iteratively, reducing reliance on pre-defined transition probabilities.

What tools and libraries are available for implementing NSMDPs?

- OpenAI Gym for prototyping RL-based models.

- MDPtoolbox (Python/R) for implementing dynamic programming and reinforcement learning algorithms.

- TensorFlow and PyTorch for building neural network-based models.

- Cloud platforms like AWS and Google Cloud for computational resources in large-scale implementations.

What data sources are commonly used in NSMDP applications?

Key sources include:

- Climate data from NASA Earthdata or NOAA Climate Data Online.

- Environmental data from satellite imagery and IoT sensors.

- Economic and policy data from World Bank databases or government resource allocation reports.

How scalable are NSMDPs for large-scale applications?

NSMDPs can scale to large applications through approximation methods such as deep reinforcement learning, which handles high-dimensional problems, combined with parallel or cloud-based computing to process large datasets in real time.

What are some real-world examples of NSMDPs in use?

- Water resource management in California for dynamically allocating water across competing needs like agriculture and urban use.

- Renewable energy grids in Germany for optimizing solar and wind energy integration to ensure grid stability.

- Wildlife conservation in Africa for adapting patrol strategies to protect endangered species like elephants from poaching.

What future advancements can improve NSMDPs?

- Quantum computing, which may eventually speed up the solution of large-scale NSMDPs.

- AI integration for creating better predictive models for transition probabilities and rewards.

- IoT expansion to enable real-time data inputs for greater adaptability.

Resources

Open-Source Libraries and Tools

- OpenAI Gym

An environment for developing and testing reinforcement learning algorithms, adaptable to NSMDPs.

Link: OpenAI Gym

- MDPtoolbox (R and Python)

Offers implementations of classic and advanced MDP algorithms.

Link: MDPtoolbox

- TensorFlow and PyTorch

Popular machine learning libraries for building custom NSMDP models with neural network integration.

Link: TensorFlow | PyTorch

Datasets for Climate and Environmental Modeling

- NOAA Climate Data Online (CDO)

Access historical and real-time climate data.

Link: NOAA CDO

- NASA Earthdata

Satellite imagery and climate projections for environmental modeling.

Link: NASA Earthdata

- FAO AquaCrop

FAO’s crop water productivity model, which simulates crop yield response to water and supports agricultural water management.

Link: FAO AquaCrop