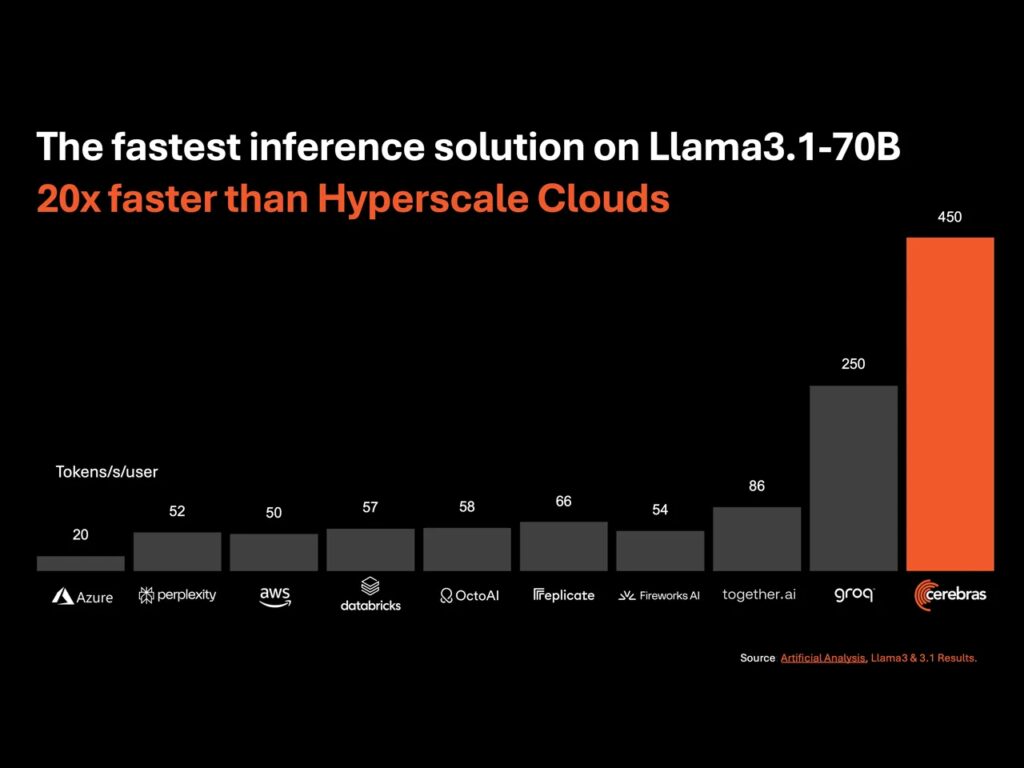

Artificial intelligence (AI) hardware is changing quickly, and Cerebras Systems is one of the companies driving that change. Known for its wafer-scale chips, Cerebras recently made headlines with an AI inference solution that the company bills as the fastest in the world. But what exactly makes this technology so fast? Let’s look at the key innovations behind Cerebras’ latest achievement, covering both the hardware and the software that enable its speed.

The Powerhouse: Cerebras Wafer-Scale Engine (WSE)

At the heart of Cerebras’ AI inference breakthrough lies the Wafer-Scale Engine (WSE). Traditional processors are small dies cut from a silicon wafer; the WSE instead uses nearly the whole wafer as a single chip. This departure from conventional chip design allows the second-generation WSE-2 to house 2.6 trillion transistors and 850,000 AI-optimized cores on one piece of silicon. The third-generation WSE-3, which powers the Cerebras inference service, raises those figures to roughly 4 trillion transistors and 900,000 cores.

But why does size matter here? The vast scale of the WSE means that it can process much more data in parallel than traditional chips. Just as important, a model that would otherwise be split across many separate chips can stay on one piece of silicon, so data does not have to be shuttled back and forth between different parts of a system. Data movement is often the bottleneck in AI computations, and by keeping everything on one massive chip, Cerebras avoids much of the chip-to-chip latency that slows down other systems.
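To make the parallelism argument concrete, here is a minimal sketch in plain Python. It splits a matrix-vector product by rows across a few hypothetical workers, each of which touches only its own slice of the matrix. The function names and the worker count are illustrative assumptions and say nothing about how Cerebras actually schedules work on the WSE.

```python
from concurrent.futures import ThreadPoolExecutor


def matvec_rows(rows, vector):
    """Dot each row of a matrix block with the vector."""
    return [sum(a * b for a, b in zip(row, vector)) for row in rows]


def parallel_matvec(matrix, vector, workers=4):
    """Split the matrix into contiguous row blocks and process them concurrently."""
    block = max(1, -(-len(matrix) // workers))  # ceiling division
    chunks = [matrix[i:i + block] for i in range(0, len(matrix), block)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(lambda chunk: matvec_rows(chunk, vector), chunks))
    # Blocks are independent, so their results simply concatenate in order.
    return [value for part in partials for value in part]


matrix = [[1, 2], [3, 4], [5, 6], [7, 8]]
vector = [10, 1]
result = parallel_matvec(matrix, vector, workers=2)
print(result)
```

Python threads will not speed up this arithmetic in practice; the point is only the structure. No block needs another block's data, which is the property that lets hardware with many cores work on all of them at once.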

Memory at Scale: On-Chip RAM and the SWARM Architecture

Image credit: Cerebras.ai

Cerebras didn’t just stop at creating a massive chip; they also rethought how memory should work within that chip. Traditional processors are often hampered by the “memory wall”: the time it takes to move data between the processor and off-chip memory. To overcome this, the WSE-2 is equipped with 40 GB of on-chip SRAM (44 GB on the WSE-3), distributed across the chip so that each core has memory right next to it. This keeps the data the AI needs close to where it is processed, drastically reducing latency.
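The memory wall can be put into rough numbers. For a dense model serving one request at a time, generating each new token means reading essentially all of the model's weights, so memory bandwidth sets a ceiling on tokens per second. The sketch below computes that ceiling. The model size and both bandwidth figures are illustrative assumptions, not measurements of any particular product.

```python
def max_tokens_per_second(params_billion, bytes_per_param, bandwidth_tb_per_s):
    """Upper bound on single-request decode speed when weight reads dominate."""
    model_bytes = params_billion * 1e9 * bytes_per_param
    bandwidth_bytes_per_s = bandwidth_tb_per_s * 1e12
    return bandwidth_bytes_per_s / model_bytes


# Hypothetical 8-billion-parameter model stored as 16-bit weights (2 bytes each).
off_chip = max_tokens_per_second(8, 2, bandwidth_tb_per_s=3.2)    # assumed off-chip memory
on_chip = max_tokens_per_second(8, 2, bandwidth_tb_per_s=3200.0)  # assumed on-chip memory, 1000x faster

print(f"off-chip ceiling: {off_chip:,.0f} tokens/s")
print(f"on-chip ceiling:  {on_chip:,.0f} tokens/s")
```

With these assumed figures the off-chip ceiling works out to about 200 tokens per second, and the on-chip ceiling is a thousand times higher. Real systems fall below either bound because of compute time, batching, and communication costs.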

In addition to this, Cerebras introduced Swarm, the communication fabric that carries data across the WSE. Swarm links the chip’s 850,000 cores so that work can be distributed across them, keeping each core busy and avoiding data bottlenecks. Managing data flow this way is essential for handling the massive workloads typical of AI inference without slowing down the system.

Software Smarts: Cerebras’ Weight Streaming

While the WSE’s hardware capabilities are impressive, they would be incomplete without equally sophisticated software. Cerebras’ weight streaming technology is one such software innovation that sets it apart. In traditional AI systems, weights (the parameters the model uses to make predictions) are stored in memory and need to be loaded into the processor for each computation. This can be a time-consuming process, especially as models become larger and more complex.

Cerebras has flipped this model on its head by streaming weights from memory to the processing cores only when they are needed, typically one layer at a time. This means the WSE does not have to hold an entire model before starting its computations. Instead, it can continuously receive and process new weights, which lets it handle models larger than its on-chip memory and make more efficient use of resources.
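The streaming idea can be sketched with a Python generator. This is a toy illustration under stated assumptions, not Cerebras' implementation: `stream_layers` is a hypothetical stand-in for an external weight store, and the "model" is a stack of tiny scaled-identity layers with a ReLU after each one. The property worth noticing is that only one layer's weights are in hand at any moment.

```python
def stream_layers(num_layers, width):
    """Yield one layer's weight matrix at a time (stand-in for a remote weight store)."""
    for layer in range(num_layers):
        scale = layer + 1  # deterministic toy weights: a scaled identity per layer
        yield [[scale if r == c else 0 for c in range(width)] for r in range(width)]


def relu(values):
    return [max(0, v) for v in values]


def forward_streaming(activations, weight_stream):
    """Apply each layer as soon as its weights arrive, then let them go."""
    for weights in weight_stream:
        activations = relu(
            [sum(w * a for w, a in zip(row, activations)) for row in weights]
        )
    return activations


output = forward_streaming([1, -2, 3], stream_layers(num_layers=3, width=3))
print(output)
```

Passing a fully preloaded list of layers to `forward_streaming` gives the same result; the difference is only in how much weight data has to be resident at once.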

Data Flow and the Fabric: Communication Without Compromise

Another critical factor in Cerebras’ speed is its data flow architecture, which allows for incredibly fast communication between cores. Traditional processors often struggle with inter-core communication, especially as the number of cores increases. This can create bottlenecks that slow down processing.

Cerebras tackled this issue with a high-bandwidth, low-latency fabric that connects all 850,000 cores on the WSE. Because the fabric lives on the same silicon as the cores, data can move between them without leaving the chip. This removes much of the inter-core communication overhead that commonly bottlenecks AI inference.
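One way to reason about on-chip communication cost is to count hops. The sketch below assumes the cores sit on a two-dimensional grid in which each core talks only to its four nearest neighbours. The grid shape is a made-up example chosen to total 850,000 cores, not the real layout, and `mesh_hops` is a hypothetical helper.

```python
def core_position(core_id, columns):
    """Map a flat core index to (row, column) on the grid."""
    return divmod(core_id, columns)


def mesh_hops(src, dst, columns):
    """Minimum number of neighbour-to-neighbour hops between two cores."""
    (r1, c1), (r2, c2) = core_position(src, columns), core_position(dst, columns)
    return abs(r1 - r2) + abs(c1 - c2)


ROWS, COLUMNS = 850, 1000  # hypothetical grid: 850 x 1000 = 850,000 cores

neighbour = mesh_hops(0, 1, COLUMNS)
corner_to_corner = mesh_hops(0, ROWS * COLUMNS - 1, COLUMNS)
print(neighbour, corner_to_corner)
```

Under this assumption, neighbouring cores are one hop apart while opposite corners are well over a thousand hops apart, which is why placing communicating parts of a model close together matters on a chip this large.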

Real-World Impact: Speed in Practice

All these innovations come together to create a system that is fast in practice, not just on paper. At launch, Cerebras reported generating roughly 1,800 tokens per second on Llama 3.1 8B and 450 tokens per second on Llama 3.1 70B, which it described as about 20 times faster than GPU-based cloud services. This matters in fields like natural language processing, image recognition, and real-time decision-making, where every millisecond counts.

By drastically reducing inference times, Cerebras is enabling AI models to be more responsive in practical applications. This speed can lead to more fluid interactions in AI-driven applications, more timely real-time predictions, and the ability to run larger or more complex models within the same latency budget.
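The link between throughput and responsiveness is simple arithmetic, sketched below. The token counts and throughput values are illustrative assumptions rather than benchmark results, and `response_time_seconds` is a hypothetical helper.

```python
def response_time_seconds(output_tokens, tokens_per_second, time_to_first_token=0.0):
    """Time to deliver a full answer at a steady generation rate."""
    return time_to_first_token + output_tokens / tokens_per_second


# A hypothetical 500-token answer at two assumed generation speeds.
slow = response_time_seconds(500, tokens_per_second=50)
fast = response_time_seconds(500, tokens_per_second=500)
print(f"{slow:.1f} s vs {fast:.1f} s")
```

With these numbers, the same answer takes 10 seconds at 50 tokens per second and 1 second at 500. The gap widens further when an application chains several model calls together.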

Revolutionizing Healthcare: Precision Medicine and Beyond

Faster Diagnostics and Personalized Treatment Plans

In healthcare, time is often the difference between life and death, and this is where fast inference could matter most. The ability to process vast amounts of data in parallel allows for rapid analysis of medical images, genetic data, and patient records. In radiology, for instance, AI models can quickly analyze complex imaging data and flag abnormalities such as tumors or fractures. This can help radiologists reach diagnoses sooner, ultimately leading to faster treatment for patients.

Beyond diagnostics, fast inference could also support the development of personalized medicine. By rapidly processing genetic information, AI can help doctors tailor treatment plans to the individual characteristics of each patient’s genetic makeup. This is particularly important in oncology, where understanding a patient’s unique genetic mutations can inform the most effective treatment strategy and improve outcomes.

Accelerating Drug Discovery

The process of discovering new drugs is notoriously slow and expensive, often taking years and billions of dollars to bring a new treatment to market. Cerebras’ AI inference solution has the potential to dramatically accelerate this process. By enabling the rapid analysis of biological data, such as protein structures and molecular interactions, AI can identify promising drug candidates much faster than traditional methods.

Pharmaceutical companies are already leveraging AI to sift through vast libraries of compounds, predicting which ones are most likely to be effective against specific diseases. With faster inference, this screening could speed up further, allowing researchers to evaluate potential treatments sooner. This speed could be particularly crucial in responding to emerging health threats, such as new viruses, where the rapid development of vaccines or antiviral drugs is critical.

Beyond Healthcare: Transforming Other Industries

Finance: Real-Time Fraud Detection

In the finance sector, the ability to process and analyze data in real time is essential for preventing fraud. Financial institutions are constantly bombarded with vast amounts of transactional data, making it challenging to identify fraudulent activities quickly. Fast inference of the kind Cerebras offers could let these institutions analyze data streams with very low latency, flagging suspicious transactions as they occur.

This real-time capability matters for fraud detection systems, which often rely on analyzing patterns of behavior to identify anomalies. Faster inference lets these systems score each transaction with a larger model inside the same time window, reducing the time it takes to respond to potential fraud.
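As a minimal sketch of pattern-based anomaly detection on a stream, the example below keeps a running mean and variance of transaction amounts (Welford's online algorithm) and flags any amount that sits more than a chosen number of standard deviations from the mean. This is a simple statistical stand-in, not a production fraud model; the class name, threshold, warm-up length, and sample amounts are all illustrative assumptions.

```python
import math


class RunningAnomalyDetector:
    """Flags values far from the running mean, updating statistics one value at a time."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold  # how many standard deviations counts as suspicious
        self.warmup = warmup        # observations required before flagging anything
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def observe(self, amount):
        """Return True if the amount looks anomalous, then fold it into the statistics."""
        suspicious = False
        if self.count >= self.warmup:
            std = math.sqrt(self.m2 / (self.count - 1))
            suspicious = std > 0 and abs(amount - self.mean) / std > self.threshold
        # Welford's update keeps mean and variance without storing the history.
        self.count += 1
        delta = amount - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (amount - self.mean)
        return suspicious


detector = RunningAnomalyDetector()
amounts = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 950.0, 23.0]
flags = [detector.observe(a) for a in amounts]
print(flags)
```

Only the 950.0 transaction is flagged here. Note that the outlier is still folded into the running statistics, which inflates the variance afterwards; a real system would likely handle flagged values separately and would use far richer features than the amount alone.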

Autonomous Vehicles: Real-Time Decision Making

Autonomous vehicles represent another area where fast AI inference matters. For self-driving cars to navigate safely, they must process a continuous stream of data from sensors, cameras, and other inputs. This data must be analyzed in real time to make split-second decisions, such as avoiding obstacles, recognizing traffic signals, and adjusting speed.

Faster inference helps autonomous systems process this data quickly and efficiently, which supports safer and more reliable performance on the road. Technology of this kind could help accelerate the development of fully autonomous vehicles, bringing us closer to a future where self-driving cars are a common sight.

Energy: Optimizing Resource Management

In the energy sector, managing resources efficiently is critical to both operational success and environmental sustainability. AI plays a key role in optimizing energy usage, predicting maintenance needs, and managing the distribution of power across grids. Cerebras’ AI inference solution enhances these capabilities by enabling faster and more accurate analysis of energy consumption patterns, weather data, and other relevant factors.

For example, in renewable energy, where the availability of resources like wind and solar power can fluctuate, AI can help predict when and where these resources will be available, optimizing their use. With Cerebras’ technology, these predictions can be made more quickly, allowing energy providers to respond more dynamically to changes in supply and demand, reducing waste and improving efficiency.

The Future of AI: What Cerebras’ Breakthrough Means for the Industry

Cerebras’ AI inference breakthrough is more than just a technological marvel; it’s a glimpse into the future of AI. As models grow larger and more complex, the need for faster and more efficient processing becomes critical. Cerebras’ innovations in hardware and software are paving the way for a new era of AI, where massive models can be deployed in real time without the limitations of traditional systems.

In conclusion, the key to Cerebras’ speed lies in its holistic approach to both hardware and software design. By creating a chip that can process massive amounts of data in parallel, minimizing latency through on-chip memory, and optimizing data flow with its on-chip fabric and weight streaming, Cerebras has set a new bar for AI inference. This breakthrough not only raises AI performance but also opens up possibilities for future innovations. As the AI landscape continues to evolve, Cerebras’ achievements are likely to be a major influence on what comes next in artificial intelligence.
