Few machine learning algorithms have had as much impact on predictive modeling with tabular data as XGBoost. Whether you’re new to data science or a seasoned pro, understanding how XGBoost works can noticeably improve your predictive models.

What is XGBoost?

XGBoost stands for Extreme Gradient Boosting. It’s an implementation of gradient-boosted decision trees designed for speed and performance. Created by Tianqi Chen, it was first released in 2014 and described in a 2016 paper by Chen and Carlos Guestrin. It quickly became a go-to tool for winning data science competitions and developing powerful predictive models.

Why is XGBoost So Powerful?

At its core, XGBoost is all about accuracy and efficiency. Here’s why it stands out:

- Performance: It’s known for its lightning-fast execution and scalability, handling large datasets and high-dimensional data with ease.

- Regularization: XGBoost incorporates L1 and L2 regularization, which reduces overfitting, making the model more generalizable.

- Handling Missing Data: It handles missing values natively. At each split, it learns a default direction for rows whose value is missing, so a separate imputation step is often unnecessary.

- Flexibility: The algorithm can be used for classification, regression, ranking, and even user-defined prediction problems.
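
To make the regularization point concrete, here is a minimal sketch of how L1 and L2 penalties shrink the value assigned to a single leaf. It follows the leaf-weight formula from the XGBoost paper, with the L1 term applied as a soft threshold. The function name leaf_weight and the toy numbers are assumptions for illustration, and the library's internals may differ in detail.

```python
def leaf_weight(grad_sum, hess_sum, reg_lambda=1.0, reg_alpha=0.0):
    """Leaf value under an L2 penalty (reg_lambda) and an L1 penalty (reg_alpha).

    grad_sum and hess_sum are the sums of first and second derivatives of the
    loss over the rows that fall into the leaf.
    """
    # L1 acts as a soft threshold on the gradient sum: small sums collapse to 0.
    if grad_sum > reg_alpha:
        shrunk = grad_sum - reg_alpha
    elif grad_sum < -reg_alpha:
        shrunk = grad_sum + reg_alpha
    else:
        shrunk = 0.0
    # L2 enlarges the denominator, pulling the leaf value toward 0.
    return -shrunk / (hess_sum + reg_lambda)


# Toy leaf with squared-error loss: gradient = prediction - target, hessian = 1.
grads = [-2.0, -1.0, -3.0]
G, H = sum(grads), float(len(grads))

print(leaf_weight(G, H, reg_lambda=0.0))                 # 2.0 (plain mean residual)
print(leaf_weight(G, H, reg_lambda=1.0))                 # 1.5 (shrunk by L2)
print(leaf_weight(G, H, reg_lambda=1.0, reg_alpha=2.0))  # 1.0 (shrunk by L1 and L2)
```

Larger penalties give smaller leaf values, which is how regularization limits the influence of any single tree.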

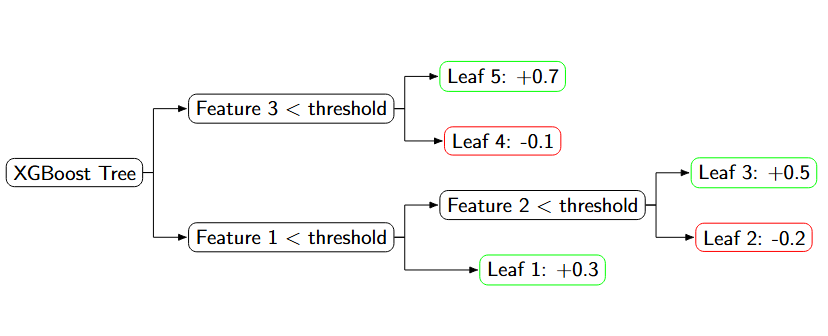

How Does XGBoost Work?

XGBoost builds an ensemble of decision trees in a sequential manner. Each new tree is fit to the errors of the ensemble built so far (formally, to the gradient of the loss), shrinking the residuals, which are the differences between predicted and actual values.

The process involves three key steps:

- Initialization: It starts with a constant initial prediction (the base score), such as the average of the target values in a regression problem.

- Boosting: Subsequent trees are added iteratively, each focusing on the errors of the previous trees.

- Weighted Predictions: The outputs of all trees are summed, with each tree’s contribution scaled by the learning rate, to make the final prediction.
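
The three steps above can be sketched in a few lines of plain Python. This is a simplified illustration of gradient boosting with squared-error loss and one-split trees (stumps), not XGBoost's actual implementation, which also uses second-order gradients and regularization. All function names and the toy data are assumptions for illustration.

```python
def fit_stump(xs, residuals):
    """Find the single threshold on xs that best fits the residuals."""
    best = None
    for threshold in sorted(set(xs))[:-1]:
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_value, right_value)


def predict_stump(stump, x):
    threshold, left_value, right_value = stump
    return left_value if x <= threshold else right_value


def boost(xs, ys, n_trees=50, learning_rate=0.3):
    # Step 1, initialization: start from the mean of the targets.
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    # Step 2, boosting: each stump is fit to the current residuals.
    for _ in range(n_trees):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * predict_stump(stump, x)
                 for p, x in zip(preds, xs)]
    return base, learning_rate, stumps


def predict(model, x):
    # Step 3, weighted prediction: base value plus the scaled sum of all trees.
    base, learning_rate, stumps = model
    return base + learning_rate * sum(predict_stump(s, x) for s in stumps)


xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.1, 2.9, 3.0]
model = boost(xs, ys)

baseline = sum(ys) / len(ys)
baseline_mse = sum((y - baseline) ** 2 for y in ys) / len(ys)
boosted_mse = sum((y - predict(model, x)) ** 2 for x, y in zip(xs, ys)) / len(ys)
print(boosted_mse < baseline_mse)  # True
```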

Key Features of XGBoost

XGBoost offers several unique features that distinguish it from other boosting algorithms:

- Tree Pruning: Trees are grown up to the max_depth limit and then pruned back, removing splits whose loss reduction falls below the gamma (min_split_loss) threshold, so that only worthwhile splits are kept.

- Handling Imbalanced Data: With built-in options like scale_pos_weight, XGBoost efficiently manages datasets where class distribution is skewed.

- Parallel Processing: Leveraging parallel and distributed computing, XGBoost can train models faster by utilizing multiple cores.
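
As a rough illustration of how a pruning decision can be scored, the sketch below computes the split gain given in the XGBoost paper: the improvement from splitting a node, minus a complexity cost gamma (exposed in the library as gamma or min_split_loss). A split with negative gain is a candidate for pruning. The function names and numbers are assumptions for illustration.

```python
def node_score(grad_sum, hess_sum, reg_lambda):
    """Quality score of a node from its gradient and hessian sums."""
    return grad_sum ** 2 / (hess_sum + reg_lambda)


def split_gain(g_left, h_left, g_right, h_right, reg_lambda=1.0, gamma=0.0):
    """Loss reduction from splitting a node into left and right children."""
    parent = node_score(g_left + g_right, h_left + h_right, reg_lambda)
    children = (node_score(g_left, h_left, reg_lambda)
                + node_score(g_right, h_right, reg_lambda))
    return 0.5 * (children - parent) - gamma


# The same candidate split, judged with two different complexity costs.
print(split_gain(-4.0, 2.0, 4.0, 2.0, gamma=0.0) > 0)  # True: split is kept
print(split_gain(-4.0, 2.0, 4.0, 2.0, gamma=6.0) > 0)  # False: split is pruned
```

Raising gamma makes the model more conservative, because each split has to earn a larger improvement to survive.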

When to Use XGBoost?

While XGBoost is versatile, it truly shines in specific scenarios:

- Kaggle Competitions: Many top-performing models in competitions are powered by XGBoost.

- Large Datasets: Its ability to handle vast amounts of data makes it ideal for big data applications.

- Tabular Data: It excels with structured datasets where relationships between features are critical.

Common Pitfalls and How to Avoid Them

Even with its power, XGBoost isn’t foolproof. Here are some common challenges and tips to avoid them:

- Overfitting: Though XGBoost has built-in regularization, it’s still susceptible to overfitting, especially with noisy data. Tuning hyperparameters and using cross-validation can mitigate this.

- Complexity: The model can become very complex and difficult to interpret. Simplify by limiting the depth of trees and using feature importance to reduce features.

- Memory Consumption: XGBoost can be memory-intensive. Monitor your resources and, if memory becomes an issue, consider the histogram-based tree method (tree_method set to hist) or passing the data in a sparse format.
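
Cross-validation, mentioned above as a guard against overfitting, comes down to rotating which slice of the data is held out for evaluation. The sketch below generates the index splits by hand to show the idea. In practice you would more likely rely on a library helper such as xgboost.cv or scikit-learn's KFold. The function name k_fold_indices is an assumption for illustration, and the data is assumed to be shuffled beforehand.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_indices, valid_indices) for k contiguous folds."""
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        valid = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, valid
        start += size


folds = list(k_fold_indices(10, 3))
for train, valid in folds:
    print(len(train), valid)
# 6 [0, 1, 2, 3]
# 7 [4, 5, 6]
# 7 [7, 8, 9]
```

Each row lands in exactly one validation fold, so averaging the validation scores gives a less optimistic estimate than the training error.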

Hyperparameter Tuning for XGBoost

Fine-tuning hyperparameters is crucial to unlocking the full potential of XGBoost. Key parameters include:

- Learning Rate: Controls the step size at each iteration. A smaller rate often generalizes better but requires more trees to reach the same fit.

- Max Depth: Limits the maximum depth of a tree. Deeper trees capture more information but can lead to overfitting.

- n_estimators: Number of trees in the model. More trees generally improve performance up to a point, after which the model can start to overfit, and they always increase computation time.

- Subsample: Fraction of the training data used for each tree. Lower values can reduce overfitting, but values that are too low may cause underfitting.
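
One simple way to explore these parameters is a small grid search. The sketch below only builds the candidate configurations, using the parameter names from XGBoost's scikit-learn style interface. The specific values are illustrative assumptions rather than recommendations, and expand_grid is a hypothetical helper name.

```python
from itertools import product

# Starting point; every value here is an illustrative assumption.
base_params = {
    "learning_rate": 0.1,
    "max_depth": 6,
    "n_estimators": 300,
    "subsample": 0.8,
}

# Values to try for the parameters being tuned.
search_space = {
    "learning_rate": [0.05, 0.1],
    "max_depth": [3, 6],
    "subsample": [0.7, 1.0],
}


def expand_grid(base, space):
    """Return one parameter dict per combination of values in space."""
    names = list(space)
    grid = []
    for values in product(*(space[name] for name in names)):
        candidate = dict(base)  # copy, so base stays untouched
        candidate.update(zip(names, values))
        grid.append(candidate)
    return grid


grid = expand_grid(base_params, search_space)
print(len(grid))  # 8
```

Each dictionary in grid could then be used to train a model and be scored with cross-validation, keeping the configuration with the best validation metric.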

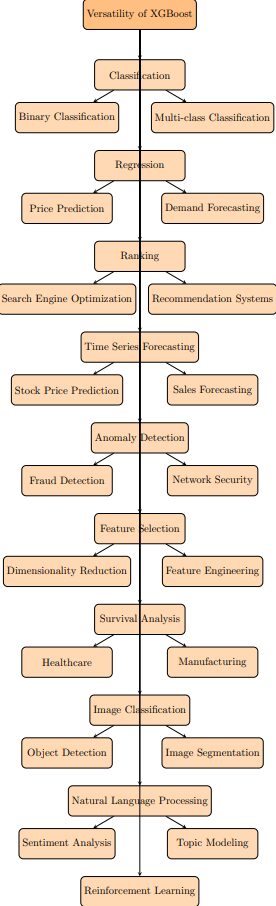

Classification Tasks

One of the most common applications of XGBoost is in classification tasks, where the objective is to categorize instances into predefined classes. This algorithm is particularly effective for both binary and multi-class classification problems. In binary classification, XGBoost can predict outcomes such as whether a customer will churn or remain loyal. In multi-class scenarios, it can classify instances into one of several categories, such as determining the species of a flower based on its characteristics. The algorithm’s ability to handle large datasets with complex feature interactions makes it a robust choice for these tasks.
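
For a binary problem like churn, where one class is usually much rarer than the other, a common starting point for scale_pos_weight is the ratio of negative to positive examples. Here is a minimal sketch, assuming labels are coded as 0 and 1. The helper name and the toy label counts are assumptions for illustration.

```python
def suggested_scale_pos_weight(labels):
    """Ratio of negative to positive examples, a common starting heuristic."""
    positives = sum(1 for y in labels if y == 1)
    negatives = sum(1 for y in labels if y == 0)
    if positives == 0:
        raise ValueError("no positive examples in labels")
    return negatives / positives


# Toy churn labels: 10 churners (1) among 100 customers.
labels = [0] * 90 + [1] * 10

params = {
    "objective": "binary:logistic",
    "scale_pos_weight": suggested_scale_pos_weight(labels),
}
print(params["scale_pos_weight"])  # 9.0
```

Treat the ratio as a first guess and tune it like any other hyperparameter, since the best value depends on the metric you care about.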

Regression Tasks

XGBoost also shines in regression tasks, where the goal is to predict a continuous value rather than a categorical outcome. This is especially useful in scenarios like price prediction, where XGBoost can model the relationship between various features (e.g., location, size, amenities) and the price of a house. Another typical application is in demand forecasting, where businesses rely on XGBoost to predict future sales or inventory needs based on historical data. Its ability to model non-linear relationships, together with the relative insensitivity of tree splits to extreme feature values, helps it deliver accurate predictions in regression tasks.

Ranking Tasks

In the realm of ranking tasks, XGBoost is invaluable, especially in applications like search engines and recommendation systems. Ranking involves predicting the order of items based on their relevance or importance. XGBoost’s ability to optimize for ranking-specific metrics, such as Normalized Discounted Cumulative Gain (NDCG), makes it particularly effective for these applications. For instance, in a search engine, XGBoost can rank web pages according to their relevance to a user’s query, ensuring that the most pertinent results appear at the top. Similarly, in recommendation systems, it can prioritize items like movies or products that a user is most likely to enjoy.
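
To see what a ranking metric like NDCG rewards, here is a small sketch that computes it for one query. It uses the exponential-gain form of DCG, one of several variants in use, and takes the relevance labels in the order the model ranked the items. The function names are assumptions for illustration.

```python
import math


def dcg(relevances):
    """Discounted cumulative gain; items lower in the list count for less."""
    return sum((2 ** rel - 1) / math.log2(position + 2)
               for position, rel in enumerate(relevances))


def ndcg(relevances):
    """DCG divided by the DCG of the ideal (descending) ordering."""
    ideal = dcg(sorted(relevances, reverse=True))
    return dcg(relevances) / ideal if ideal > 0 else 0.0


print(ndcg([3, 2, 1, 0]))        # 1.0 (most relevant items first)
print(ndcg([0, 1, 2, 3]) < 1.0)  # True (reversed order scores lower)
```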

Time Series Forecasting

While not traditionally designed for time series forecasting, XGBoost can be adapted for this task by framing it as a supervised learning problem. By engineering features that capture temporal dependencies, such as lagged variables, XGBoost can predict future values based on past trends. This approach is useful in predicting stock prices, where historical prices serve as inputs to forecast future movements. It is also applicable in sales forecasting, where past sales data is used to predict future demand. The flexibility of XGBoost allows it to handle the complexities of time series data, making it a powerful tool in forecasting.
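
Framing a series as a supervised problem mostly means building lagged features. A minimal sketch, assuming a univariate series stored as a plain list (the function name and sales figures are made up for illustration):

```python
def make_lag_features(series, n_lags):
    """Turn a series into (rows, targets): each row holds the n_lags
    previous values, and the target is the value that follows them."""
    rows, targets = [], []
    for t in range(n_lags, len(series)):
        rows.append(series[t - n_lags:t])
        targets.append(series[t])
    return rows, targets


sales = [10, 12, 13, 15, 18, 21]
X, y = make_lag_features(sales, n_lags=3)
print(X[0], y[0])  # [10, 12, 13] 15
```

When evaluating such a model, split the rows chronologically rather than at random, so that the model is never validated on data that precedes its training data.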

Anomaly Detection

Anomaly detection is another area where XGBoost proves its utility. In tasks like fraud detection or system health monitoring, the goal is to identify outliers or rare events that deviate from the norm. XGBoost can be trained to recognize patterns that are indicative of anomalies, such as unusual transaction patterns that might signal fraudulent activity or irregular network traffic that could indicate a security breach. Its ability to model complex interactions and non-linear relationships makes it well-suited for detecting anomalies in large and complex datasets.

Feature Selection and Engineering

Beyond predictive tasks, XGBoost is also highly effective in feature selection and engineering. By evaluating the importance of each feature in contributing to the model’s predictive power, XGBoost helps identify which features should be retained, which can be combined, and which can be discarded. This is particularly useful in scenarios where datasets have a large number of features, many of which may be redundant or irrelevant. Through feature selection, XGBoost not only improves the efficiency of the model but also enhances its interpretability by focusing on the most significant predictors.

Survival Analysis

In the field of survival analysis, XGBoost can be adapted to predict the time until an event occurs, such as the failure of a machine component or the relapse of a disease. Although survival analysis is traditionally handled by statistical methods, XGBoost can be used to model time-to-event data by predicting the likelihood of an event occurring within a certain time frame. This application is particularly valuable in healthcare, where predicting patient survival times can inform treatment decisions, and in manufacturing, where it can help in predicting the lifespan of machinery parts, enabling proactive maintenance.
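
One simple way to set up the "event within a certain time frame" framing is to turn each record into a binary label for a fixed horizon, taking care with censored observations. The sketch below assumes each record is a (duration, event_observed) pair. The helper name and the data are illustrative assumptions. XGBoost also documents dedicated survival objectives (survival:cox and survival:aft), which are not shown here.

```python
def horizon_labels(records, horizon):
    """Label each (duration, event_observed) record for a fixed horizon.

    1    -> the event happened within the horizon
    0    -> the subject is known to have lasted through the horizon
    None -> censored before the horizon, so the outcome is unknown
    """
    labels = []
    for duration, event_observed in records:
        if event_observed and duration <= horizon:
            labels.append(1)
        elif duration >= horizon:
            labels.append(0)
        else:
            labels.append(None)
    return labels


# Months of operation for four machine parts; False means still running
# when observation stopped.
records = [(5, True), (30, True), (12, False), (40, False)]
print(horizon_labels(records, horizon=24))  # [1, 0, None, 0]
```

Rows labeled None would be left out of training for this horizon, since it is unknown whether the event occurred in time.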

Image Classification and Computer Vision

While deep learning models are often preferred for complex computer vision tasks, XGBoost can still be applied to simpler image classification problems. By using feature extraction techniques to convert image data into numerical representations, XGBoost can be employed in tasks like object detection, where it identifies and classifies objects within an image, and image segmentation, where it categorizes each pixel in an image into different segments. Although not as powerful as deep learning models for these tasks, XGBoost’s efficiency and lower computational requirements make it a viable option in resource-constrained environments.

Natural Language Processing (NLP)

In the domain of Natural Language Processing (NLP), XGBoost can be effectively used for text classification tasks. By transforming text data into numerical features using techniques like TF-IDF (Term Frequency-Inverse Document Frequency) or word embeddings, XGBoost can classify text into categories such as positive or negative sentiment in sentiment analysis, or assign documents to predefined topics in topic classification. Its ability to handle sparse data and high-dimensional feature spaces makes it suitable for many NLP tasks built on bag-of-words style features.
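
As a rough sketch of the feature step, the code below turns a few short documents into TF-IDF vectors using only the standard library. It uses the basic tf × log(N / df) weighting with whitespace tokenization. Real vectorizers typically add smoothing and normalization, so their numbers will differ. The function name and sample texts are assumptions for illustration.

```python
import math
from collections import Counter


def tfidf(documents):
    """Return (vocabulary, rows), with one TF-IDF vector per document."""
    tokenized = [doc.lower().split() for doc in documents]
    vocab = sorted({token for doc in tokenized for token in doc})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each token appears.
    doc_freq = Counter(token for doc in tokenized for token in set(doc))
    rows = []
    for doc in tokenized:
        counts = Counter(doc)
        length = max(len(doc), 1)  # avoid dividing by zero on empty documents
        rows.append([
            (counts[token] / length) * math.log(n_docs / doc_freq[token])
            for token in vocab
        ])
    return vocab, rows


vocab, rows = tfidf(["good film", "bad film", "good good film"])
print(vocab)       # ['bad', 'film', 'good']
print(rows[0][1])  # 0.0 ("film" appears in every document)
```

Most entries in such a matrix are zero, which is why the sparse-data handling mentioned above matters for text features.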

Reinforcement Learning

Although not a typical application, XGBoost can also be integrated into reinforcement learning frameworks as part of the function approximation process. In reinforcement learning, where agents learn to make decisions by maximizing cumulative rewards, XGBoost can be used to approximate value functions or policies, particularly in environments where decision trees provide a better approximation than linear models. This integration expands the versatility of XGBoost, allowing it to contribute to more complex, sequential decision-making tasks.

Explanation:

- Main title: The central node, “Versatility of XGBoost,” represents the overall topic.

- Tasks: Different types of machine learning tasks such as Classification, Regression, Ranking, etc., are represented as main nodes branching out from the title.

- Subtasks: Specific examples of each task, like Binary Classification and Multi-class Classification under Classification, are represented as nodes branching out from the main task nodes.

- Arrows: These indicate the relationship between the tasks and subtasks.

Conclusion

The versatility of XGBoost across a wide array of machine learning tasks is a testament to its robust design and adaptability. Whether it’s classification, regression, ranking, or even time series forecasting, XGBoost offers a powerful and efficient solution. Its capability to handle complex, large-scale data makes it an indispensable tool in the data scientist’s arsenal.

For those looking to harness the full potential of their data, XGBoost provides the tools necessary to tackle challenges across different domains. From predicting prices and detecting fraud to ranking search results and forecasting future trends, XGBoost delivers consistent and reliable performance, solidifying its place as a top choice in machine learning.
