Agentic AI isn’t just smart—it’s autonomous. These systems set goals, execute tasks, and even reflect on their performance. But how do you take one clever agent and scale it into an entire army of problem-solvers?

This guide walks you through exactly how to scale agentic AI, from core architecture to future-proof infrastructure.

Understand the Core Architecture of Agentic AI

What makes AI agentic?

Agentic AI doesn’t just respond; it acts. It perceives, plans, executes, and reflects in a loop loosely modeled on human decision-making.

At the heart of agentic systems lie four key modules:

- Goal setting

- Task decomposition

- Memory architecture

- Feedback loops

If you’re scaling, each of these components must be modular, API-driven, and robust from day one.

Why this architecture matters when scaling

Scaling agentic systems without modularity is a recipe for chaos. Think: performance lag, memory failures, and decision bottlenecks.

Design with composability in mind. That means clearly defined APIs, microservices, and shared state logic—so each module scales on its own.
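Here is a minimal Python (3.9+) sketch of that composability. Planning, execution, memory, and reflection are separate, swappable pieces behind small interfaces, so any one of them can be replaced or scaled without touching the others. The `Planner`, `Executor`, and `Reflector` protocols and the toy implementations are hypothetical names chosen for illustration. They are not part of any framework.

```python
from dataclasses import dataclass, field
from typing import Protocol


class Planner(Protocol):
    def plan(self, goal: str) -> list[str]: ...


class Executor(Protocol):
    def execute(self, step: str) -> str: ...


class Reflector(Protocol):
    def reflect(self, step: str, result: str) -> bool: ...


@dataclass
class Agent:
    """Wires independently replaceable modules into one plan-act-reflect loop."""
    planner: Planner
    executor: Executor
    reflector: Reflector
    memory: list[tuple[str, str]] = field(default_factory=list)  # simplest possible memory module

    def run(self, goal: str) -> list[str]:
        results = []
        for step in self.planner.plan(goal):
            result = self.executor.execute(step)
            self.memory.append((step, result))
            if not self.reflector.reflect(step, result):
                # Feedback loop: retry once when the reflector rejects the result.
                result = self.executor.execute(step)
                self.memory.append((step, result))
            results.append(result)
        return results


# Toy modules standing in for model-backed implementations.
class SplitPlanner:
    def plan(self, goal):
        return [s.strip() for s in goal.split(",")]


class UpperExecutor:
    def execute(self, step):
        return step.upper()


class NonEmptyReflector:
    def reflect(self, step, result):
        return bool(result)


agent = Agent(SplitPlanner(), UpperExecutor(), NonEmptyReflector())
print(agent.run("research, draft"))  # ['RESEARCH', 'DRAFT']
```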

Define Clear Objectives for Scaling

Not every agent needs to scale

Before scaling, ask: Why scale?

Is it to:

- Handle a wider range of tasks?

- Operate across different domains?

- Increase throughput or user reach?

Clear goals shape how you scale—and save you from overengineering.

Goals drive how you scale

If you’re scaling for:

- Performance, consider parallel execution and async processing.

- Complexity, look at memory compression and hierarchical reasoning.

Start small—but architect like you’re going big.

Implement Task Decomposition and Orchestration

The magic of breaking things down

Great agentic AI doesn’t attack tasks head-on—it splits them into subtasks.

Want to launch a product? Break it down into research, ideation, development, and marketing. Each could be handled by different sub-agents.
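You can express the product-launch example as a small dependency graph. The sketch below uses Python's standard-library `graphlib` (3.9+) to group subtasks into waves. Every subtask in a wave already has its prerequisites finished, so you could hand that wave's subtasks to different sub-agents in parallel. The subtask names come from the example above. The dependency edges and agent names are assumptions.

```python
from graphlib import TopologicalSorter

# Assumed dependencies: each subtask maps to the subtasks it waits on.
subtasks = {
    "research": set(),
    "ideation": {"research"},
    "development": {"ideation"},
    "marketing": {"ideation"},
}

# Hypothetical sub-agents responsible for each subtask.
assignees = {
    "research": "research_agent",
    "ideation": "strategy_agent",
    "development": "engineering_agent",
    "marketing": "marketing_agent",
}


def schedule(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group subtasks into waves whose dependencies are all complete."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything runnable right now
        waves.append(ready)
        sorter.done(*ready)                 # mark the wave finished
    return waves


for wave in schedule(subtasks):
    print([(task, assignees[task]) for task in wave])
```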

Use orchestration frameworks

Tools like LangChain, CrewAI, and AutoGen handle orchestration for you. Let them manage agent roles, memory flow, and inter-agent dialogue.

Optimize Memory and Context Management

Memory is where agents usually fail at scale

As agents take on more tasks, forgetfulness becomes a real issue. You’ll need layers of memory:

- Short-term cache

- Vector stores for semantic recall (e.g., FAISS)

- Episodic memory (event chains)

Each memory type plays a different role. Balance them based on context length, token limits, and retrieval speed.
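The sketch below shows the three layers working together. Recent turns stay in a short-term buffer until it exceeds its token budget. Older turns then move into long-term storage, and events go into an ordered episodic log. It assumes a crude word count as the token measure and uses a plain list where a vector store would normally sit. `LayeredMemory` and `count_tokens` are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass, field


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: assumes one token per word.
    return len(text.split())


@dataclass
class LayeredMemory:
    token_budget: int = 50
    short_term: deque = field(default_factory=deque)  # recent turns, trimmed to budget
    long_term: list = field(default_factory=list)     # evicted turns (vector store in practice)
    episodes: list = field(default_factory=list)      # ordered event chain of (step, outcome)

    def add_turn(self, text: str) -> None:
        self.short_term.append(text)
        # Evict the oldest turns to long-term memory once over budget,
        # always keeping at least the newest turn in the short-term buffer.
        while (sum(count_tokens(t) for t in self.short_term) > self.token_budget
               and len(self.short_term) > 1):
            self.long_term.append(self.short_term.popleft())

    def log_event(self, step: str, outcome: str) -> None:
        self.episodes.append((step, outcome))

    def context(self) -> str:
        # Only the short-term layer goes into the prompt by default.
        return "\n".join(self.short_term)
```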

Use RAG and memory decay

Retrieval-augmented generation (RAG) lets agents pull relevant memories into context on demand. Add memory decay so old, rarely relevant details fade instead of bloating the store; no one needs every detail forever.
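One common way to combine retrieval with decay works in two steps. First, multiply a similarity score by an exponential recency factor. Then drop anything that falls below a floor. The sketch assumes embeddings are already computed; in practice an embedding model produces them and a store such as FAISS runs the search. The 72-hour half-life and the 0.05 cutoff are arbitrary illustrative values.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryItem:
    text: str
    embedding: list[float]   # assumed precomputed by an embedding model
    age_hours: float


def cosine(a, b) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_emb, items, k=3, half_life_hours=72.0, min_score=0.05):
    """Rank memories by similarity times exponential recency decay."""
    decay_rate = math.log(2) / half_life_hours  # score halves every half-life
    scored = []
    for item in items:
        score = cosine(query_emb, item.embedding) * math.exp(-decay_rate * item.age_hours)
        if score >= min_score:  # below the floor, the memory counts as decayed away
            scored.append((score, item.text))
    scored.sort(reverse=True)
    return [text for _, text in scored[:k]]
```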

Enable Autonomous Goal Setting and Self-Correction

Teach your agents to think for themselves

At scale, you can’t micromanage. Agents need to:

- Set goals

- Evaluate results

- Correct errors

This is where internal scoring, reward models, or reflection agents come in.

Use self-review loops

Use a “review agent” or feedback loop where agents critique each other’s output. It’s your built-in QA team—run by AI.
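Below is a minimal sketch of such a loop. It assumes `generate` and `review` wrap two model calls, a worker and a critic; here they are toy functions. The loop keeps the best-scoring draft and stops once the critic's score clears a threshold or the round limit is reached. The round limit bounds cost.

```python
from typing import Callable


def review_loop(
    task: str,
    generate: Callable[[str, str], str],          # (task, feedback) -> draft
    review: Callable[[str], tuple[float, str]],   # draft -> (score, feedback)
    threshold: float = 0.8,
    max_rounds: int = 3,
) -> tuple[str, float]:
    """Draft, critique, and revise until the critic's score clears the threshold."""
    feedback = ""
    best_draft, best_score = "", float("-inf")
    for _ in range(max_rounds):
        draft = generate(task, feedback)
        score, feedback = review(draft)
        if score > best_score:
            best_draft, best_score = draft, score
        if score >= threshold:
            break
    return best_draft, best_score


# Toy worker and critic standing in for model calls.
def toy_writer(task, feedback):
    return task + (" (with sources)" if "cite" in feedback else "")


def toy_reviewer(draft):
    return (0.9, "") if "sources" in draft else (0.4, "cite your sources")


print(review_loop("Summarize Q1 results", toy_writer, toy_reviewer))
# ('Summarize Q1 results (with sources)', 0.9)
```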

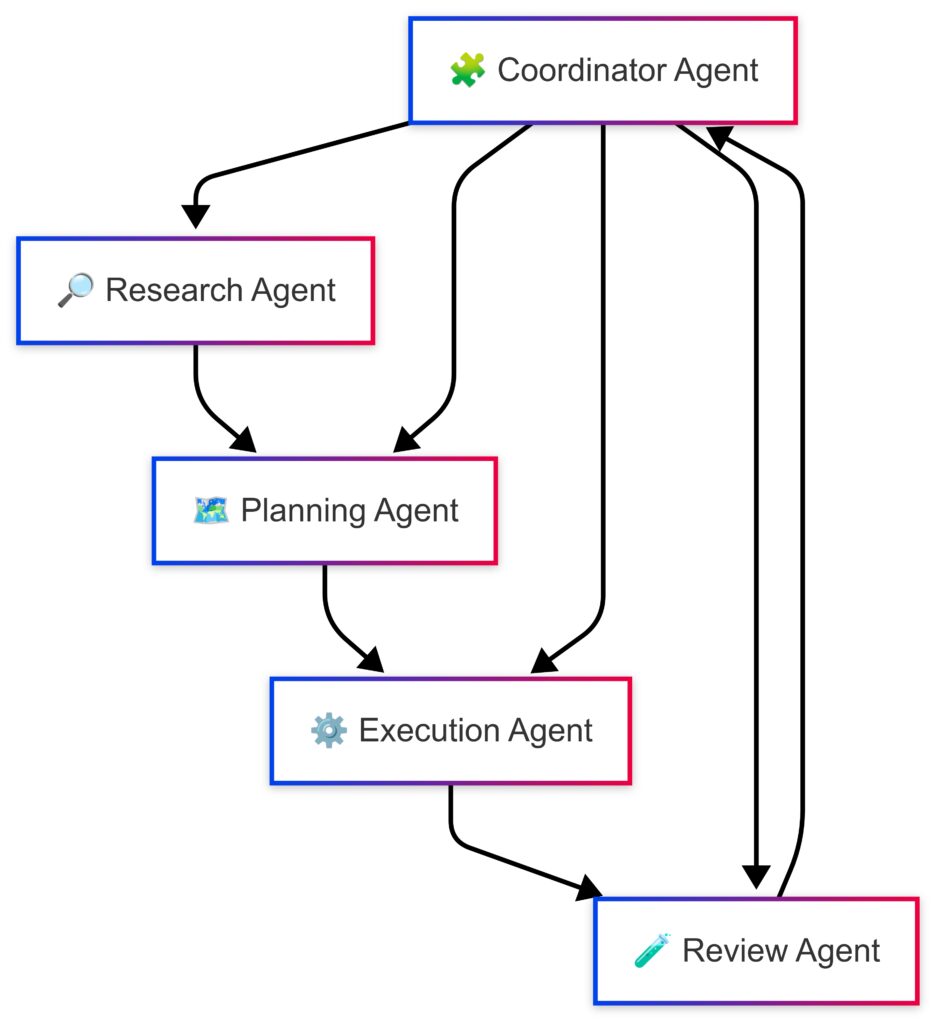

Design Multi-Agent Collaboration Frameworks

Why go multi-agent?

Scaling = specialization. A multi-agent system lets each agent do what it does best.

Example: One agent researches, another writes, another edits.

Coordination is everything

Define:

- Clear roles

- Task triggers

- Output expectations

And appoint a coordinator agent to manage flow and decisions.
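One way to encode roles, triggers, and output expectations is a registry that the coordinator checks before and after dispatching work. In this sketch the agents are stub lambdas standing in for model calls, and `Role` and `Coordinator` are hypothetical names. The research, write, and edit chain mirrors the example above.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Role:
    name: str
    trigger: str                   # task type this role responds to
    run: Callable[[str], str]      # the agent itself (stubbed here)
    check: Callable[[str], bool]   # output expectation the coordinator enforces


class Coordinator:
    def __init__(self, roles: list[Role]):
        self.by_trigger = {role.trigger: role for role in roles}

    def dispatch(self, task_type: str, payload: str) -> str:
        role = self.by_trigger.get(task_type)
        if role is None:
            raise ValueError(f"No agent registered for task type {task_type!r}")
        output = role.run(payload)
        if not role.check(output):
            raise RuntimeError(f"{role.name} output failed its expectation check")
        return output

    def pipeline(self, steps: list[str], payload: str) -> str:
        # Hand each agent's output to the next role in sequence.
        for task_type in steps:
            payload = self.dispatch(task_type, payload)
        return payload


coordinator = Coordinator([
    Role("researcher", "research", lambda t: f"notes on {t}", lambda o: o.startswith("notes")),
    Role("writer", "write", lambda notes: f"draft from {notes}", lambda o: len(o) > 0),
    Role("editor", "edit", str.capitalize, lambda o: o[:1].isupper()),
])
print(coordinator.pipeline(["research", "write", "edit"], "agent memory"))
# Draft from notes on agent memory
```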

Use Communication Protocols and Shared Knowledge Bases

Let agents talk

Structured communication (via JSON, message queues, or APIs) keeps your agent team in sync.

Use platforms like LangGraph or AutoGen for built-in messaging pipelines.
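Whatever framework you choose, the core idea stays the same: a fixed message schema, serialized to JSON and passed through a queue. This sketch uses `asyncio.Queue` as an in-process stand-in for a real message broker. The `AgentMessage` fields are an assumed schema, not a standard.

```python
import asyncio
import json
from dataclasses import asdict, dataclass


@dataclass
class AgentMessage:
    sender: str
    recipient: str
    intent: str    # e.g. "request" or "result" (assumed vocabulary)
    content: str

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, raw: str) -> "AgentMessage":
        return cls(**json.loads(raw))


async def researcher(outbox: asyncio.Queue) -> None:
    msg = AgentMessage("researcher", "writer", "result", "3 competitor pricing pages found")
    await outbox.put(msg.to_json())  # serialize so any consumer can parse it


async def writer(inbox: asyncio.Queue) -> AgentMessage:
    msg = AgentMessage.from_json(await inbox.get())
    if msg.recipient != "writer":
        raise ValueError(f"misrouted message for {msg.recipient}")
    return msg


async def main() -> AgentMessage:
    queue: asyncio.Queue = asyncio.Queue()
    _, received = await asyncio.gather(researcher(queue), writer(queue))
    return received


print(asyncio.run(main()).content)  # 3 competitor pricing pages found
```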

Share what agents learn

Let agents deposit knowledge into a shared vector store or knowledge graph. That way, insights don’t get lost between tasks.

Introduce Environment Abstraction Layers

Real-world interfaces need translating

Agents interact with APIs, apps, and users. Abstraction layers shield them from messy implementation details.

Frameworks like Semantic Kernel let you define tools (plugins), data fetchers, and transformation layers that agents can call cleanly.
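The abstraction idea can be sketched in plain Python. This is not Semantic Kernel's API. Agents call tools only by name through one entry point, and every adapter returns the same small result shape, including on errors. The `get_weather` adapter and its data are hypothetical placeholders for a real API call.

```python
from typing import Any, Callable

TOOLS: dict[str, Callable[..., Any]] = {}


def tool(name: str):
    """Register a function under a stable name that agents can call."""
    def decorator(fn):
        TOOLS[name] = fn
        return fn
    return decorator


@tool("get_weather")
def get_weather(city: str) -> dict:
    # Hypothetical adapter: a real one would call a weather API and
    # translate its response into this small, stable shape.
    return {"city": city, "temp_c": 21}


def call_tool(name: str, **kwargs) -> dict:
    """Single entry point: agents never touch raw APIs, only named tools."""
    if name not in TOOLS:
        return {"error": f"unknown tool {name}"}
    try:
        return {"ok": TOOLS[name](**kwargs)}
    except Exception as exc:  # normalize failures into data the agent can reason about
        return {"error": str(exc)}
```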

Implement Parallelization and Distributed Execution

Speed through scale

Run agents in parallel to speed up workflows. Split tasks across containers, threads, or serverless functions.

Kubernetes, Ray, and Python’s asyncio can help manage distributed and concurrent runs.

Don’t forget guardrails

Add deduplication logic so agents don’t repeat the same work. Use monitoring dashboards to track performance, and add fail-safes such as timeouts and retries.
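Here is a minimal single-process sketch with `asyncio`, assuming `run_agent` stands in for a real model or tool call. Duplicate tasks are dropped before dispatch, and a semaphore caps concurrency. A timeout acts as the fail-safe, so one stuck agent doesn't stall the batch. Distributing work across machines (with Ray or Kubernetes) is out of scope here.

```python
import asyncio


async def run_agent(task: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for a model or tool call
    return f"done: {task}"


async def run_all(tasks: list[str], max_concurrency: int = 4,
                  timeout_s: float = 5.0) -> dict[str, str]:
    unique = list(dict.fromkeys(tasks))           # dedupe while preserving order
    limit = asyncio.Semaphore(max_concurrency)    # cap simultaneous agent runs

    async def guarded(task: str) -> str:
        async with limit:
            try:
                return await asyncio.wait_for(run_agent(task), timeout=timeout_s)
            except asyncio.TimeoutError:
                return f"timeout: {task}"         # fail-safe instead of crashing the batch

    results = await asyncio.gather(*(guarded(t) for t in unique))
    return dict(zip(unique, results))


print(asyncio.run(run_all(["summarize", "tag", "summarize"])))
# {'summarize': 'done: summarize', 'tag': 'done: tag'}
```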

🔑 Key Takeaways

- Multi-agent systems allow role specialization

- Shared memory & protocols keep agents aligned

- Environment abstraction simplifies real-world interactions

- Parallel execution cuts wall-clock time for independent tasks

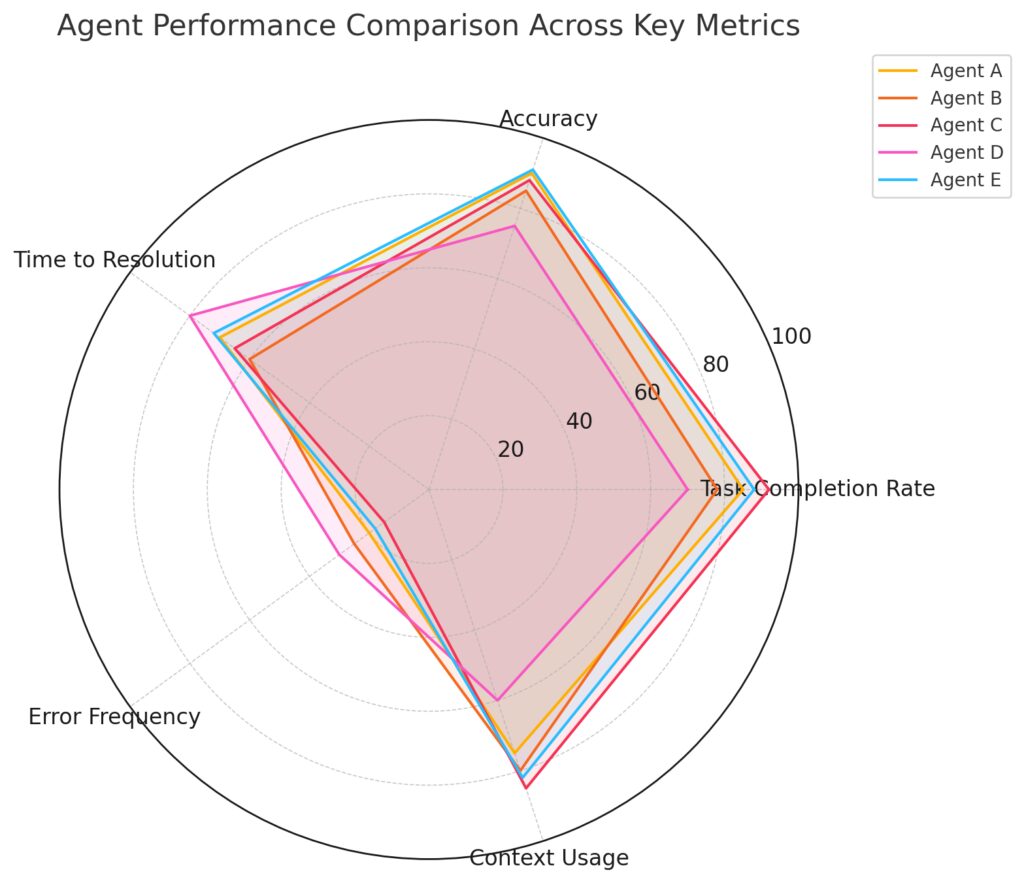

Monitor Agent Performance and Behavior Metrics

Comparative performance analysis of multiple agents using key behavior and efficiency metrics.

What gets measured gets better

Track:

- Success rate

- Memory accuracy

- Error trends

- Time to resolution

Use Prometheus to collect these metrics and Grafana to visualize them.
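Before metrics reach a monitoring system, something has to compute them from raw runs. This sketch rolls hypothetical per-run records up into three per-agent figures: success rate, mean time to resolution, and the most common errors. The `RunRecord` fields are assumptions, and the sketch leaves out exporting to Prometheus.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class RunRecord:
    agent: str
    success: bool
    seconds: float                # time to resolution
    error: Optional[str] = None   # error category, if the run failed


def summarize(records: list[RunRecord]) -> dict[str, dict]:
    """Roll per-run records up into per-agent metrics."""
    by_agent: dict[str, list[RunRecord]] = {}
    for r in records:
        by_agent.setdefault(r.agent, []).append(r)
    return {
        agent: {
            "success_rate": sum(r.success for r in runs) / len(runs),
            "mean_seconds": mean(r.seconds for r in runs),
            "top_errors": Counter(r.error for r in runs if r.error).most_common(2),
        }
        for agent, runs in by_agent.items()
    }
```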

Watch for behavior drift

Agents can “learn wrong.” Set alerts for strange behavior or degraded outputs. Do regular audits.
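A simple drift alarm compares a rolling success rate against a baseline you measured while the agent was known to be healthy. The window size and tolerance below are illustrative assumptions. In practice you might track output-quality scores instead of a plain success flag.

```python
from collections import deque


class DriftMonitor:
    """Flags drift when the rolling success rate falls well below a baseline."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.1):
        self.baseline = baseline
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # only the last `window` outcomes count

    def record(self, success: bool) -> bool:
        """Log one outcome; return True if an alert should fire."""
        self.recent.append(success)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet
        rate = sum(self.recent) / len(self.recent)
        return rate < self.baseline - self.tolerance
```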

Build Safety, Control, and Ethical Guardrails

Autonomy with accountability

Agents need permission systems, rate limits, and review triggers before executing real-world actions.

Make safety part of your orchestration.

Ethics matter more at scale

Add checks for bias, fairness, and explainability. Create fallback paths when confidence is low.
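Permissions, review triggers, and low-confidence fallbacks can all live in one gate. Every proposed action passes through it before execution. The policy tables, action names, and the 0.7 threshold are hypothetical. The sketch leaves open how the confidence score is produced.

```python
from dataclasses import dataclass


@dataclass
class ProposedAction:
    agent: str
    action: str        # e.g. "send_email", "read_crm"
    confidence: float  # self-reported or scorer-assigned confidence


# Hypothetical policy: which agents may do what, and which actions
# always need human sign-off regardless of confidence.
PERMISSIONS = {"sales_agent": {"read_crm", "draft_email", "send_email"}}
NEEDS_REVIEW = {"send_email", "issue_refund"}
MIN_CONFIDENCE = 0.7


def gate(p: ProposedAction) -> str:
    if p.action not in PERMISSIONS.get(p.agent, set()):
        return "deny"
    if p.action in NEEDS_REVIEW or p.confidence < MIN_CONFIDENCE:
        return "escalate_to_human"  # fallback path for high stakes or low confidence
    return "allow"
```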

Create a Continuous Learning and Adaptation Pipeline

Static agents = obsolete agents

Set up pipelines for:

- Fine-tuning on fresh data

- Incorporating human feedback

- Self-updating prompts

Use RLHF or custom feedback loops.

Self-adaptation = next-level autonomy

Allow agents to revise their strategies live based on outcomes. Keep them sharp without human babysitting.

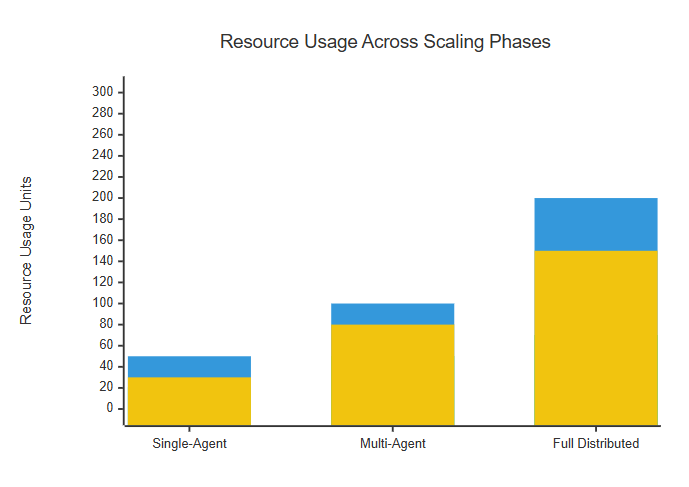

Plan for Infrastructure Scaling and Cost Control

Resource and cost comparison across agentic AI system growth stages with optimization checkpoints.

Don’t let scaling break your budget

Tips for staying lean:

- Use model cascading (route simple requests to smaller, cheaper models and escalate only when needed)

- Cache aggressively

- Compress memory indexes

Run agents on serverless platforms, or isolate compute with Docker and Kubernetes (K8s).
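You can sketch model cascading as a simple loop: try models from cheapest to most capable, and stop at the first answer whose confidence clears a threshold. The two models here are stand-in lambdas with made-up confidence values, and the model names are hypothetical. In practice, producing a trustworthy confidence signal is the hard part.

```python
from typing import Callable

Model = Callable[[str], tuple[str, float]]  # prompt -> (answer, confidence)


def cascade(prompt: str, models: list[tuple[str, Model]],
            min_confidence: float = 0.8) -> tuple[str, str]:
    """Try models in cost order; return (model_name, answer) for the first confident one."""
    name, answer = "", ""
    for name, model in models:
        answer, confidence = model(prompt)
        if confidence >= min_confidence:
            break  # cheap model was good enough; skip the expensive tiers
    return name, answer  # falls back to the last (most capable) model's answer


small = lambda p: ("short answer", 0.6 if "contract" in p else 0.95)
large = lambda p: ("careful answer", 0.9)
tiers = [("small-model", small), ("large-model", large)]

print(cascade("What is 2 + 2?", tiers))        # ('small-model', 'short answer')
print(cascade("Review this contract", tiers))  # ('large-model', 'careful answer')
```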

Scale smart, not fast

Monitor usage patterns. Only scale compute when you’re hitting limits—not just because you can.

What the Pros Know About Scaling Agentic AI

Start With Narrow, High-ROI Use Cases

Insider Tip: Begin with a domain where the agent can clearly outperform or drastically speed up a manual workflow—like summarizing meeting notes, processing reports, or handling lead qualification.

🔍 Example: A startup saved 40+ hours/month by deploying an agent to auto-tag customer tickets with urgency levels and route them to the right team.

Use Lightweight Agents First, Then Specialize

Don’t over-engineer. Instead, use general-purpose agents initially, then specialize based on bottlenecks or performance feedback.

✅ Pro Tip: Clone lightweight agents into variants—like “Research Agent,” “Scraper Agent,” “Formatter Agent”—as your workflows mature.

Separate Reasoning from Execution

Keep agents that plan or make decisions separate from those that execute actions (API calls, file writes, deployments).

This modular split makes debugging easier, prevents runaway agents, and adds flexibility for human-in-the-loop control.

🚫 Avoid: Agents that make irreversible decisions without review checkpoints.
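Here is a minimal sketch of the split, with a hard-coded plan in place of an LLM planner. The planner only returns data, and the executor is the only code with side effects. Any step marked irreversible needs an explicit approval callback, a human-in-the-loop hook, before it runs. `Step`, `plan`, and `execute` are hypothetical names.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    tool: str
    args: dict
    irreversible: bool = False


def plan(goal: str) -> list[Step]:
    # Reasoning side: returns data only and never performs side effects.
    # A real planner would be a model call; this one is hard-coded.
    return [
        Step("read_file", {"path": "report.md"}),
        Step("deploy", {"env": "prod"}, irreversible=True),
    ]


def execute(steps: list[Step], tools: dict[str, Callable[..., str]],
            approve: Callable[[Step], bool]) -> list[str]:
    # Execution side: the only place where tools actually run.
    log = []
    for step in steps:
        if step.irreversible and not approve(step):
            log.append(f"skipped {step.tool}: not approved")  # review checkpoint
            continue
        log.append(tools[step.tool](**step.args))
    return log


tools = {
    "read_file": lambda path: f"read {path}",
    "deploy": lambda env: f"deployed to {env}",
}
print(execute(plan("ship report"), tools, approve=lambda step: False))
# ['read report.md', 'skipped deploy: not approved']
```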

Always Implement a Reflection Loop

Agents without self-assessment routines tend to degrade in quality quickly, because their errors go uncaught and compound.

✨ Insider Move: Add a second “review agent” or reflection step where the agent scores or critiques its own output—or gets critiqued by a peer agent.

This adds a built-in quality check that can catch hallucinations or logic errors before they spread.

Use Task Caching to Save Costs

Agents often repeat similar tasks. Cache intermediate results (especially expensive API calls or model responses) and reuse them where appropriate.

💸 Pro Tip: Save results of common tasks like “generate FAQ” or “summarize PDF” in a vector store or database.
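An exact-match cache is the simplest version: hash the task name plus its inputs and reuse the stored result when the same task comes up again. This sketch keeps results in an in-memory dict. It does not cover matching similar but non-identical tasks, which is what a vector store would add. `TaskCache` is a hypothetical name.

```python
import hashlib
import json
from typing import Any, Callable


class TaskCache:
    """Exact-match cache for expensive agent tasks, keyed by task name plus inputs."""

    def __init__(self):
        self._store: dict[str, Any] = {}
        self.hits = 0

    @staticmethod
    def key(task: str, inputs: dict) -> str:
        # sort_keys makes the key independent of argument order.
        payload = json.dumps({"task": task, "inputs": inputs}, sort_keys=True)
        return hashlib.sha256(payload.encode()).hexdigest()

    def run(self, task: str, inputs: dict, fn: Callable[..., Any]) -> Any:
        k = self.key(task, inputs)
        if k in self._store:
            self.hits += 1
            return self._store[k]  # skip the expensive call entirely
        result = fn(**inputs)
        self._store[k] = result
        return result
```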

Inject Personas to Sharpen Behavior

Agents become sharper when you assign role-based personas—like “Senior Product Manager,” “SEO Strategist,” or “Startup Founder.” It guides tone, decisions, and priorities.

🧠 Example Prompt: “Act as a data analyst at a fintech company reviewing Q1 growth metrics. Be critical and detailed.”

Build Agent Memory Gradually

Don’t dump all memory in from the start. Feed agents context in small doses and test retrieval accuracy. Overloading context windows = lower performance.

⚙️ Pro Tip: Use conversation-based memory first, then scale to structured memory (vector DBs, logs, ontologies).

Monitor Logs Like an Ops Team

Think of agents as software workers. Their logs = their thought process. Monitor them closely, set alerts for weird outputs, and regularly review decision traces.

📈 Insider Tool: Use tools like LangSmith, Weights & Biases, or custom dashboards for agent observability.

🌟 Future Outlook: The Rise of Autonomic Agents

Agentic AI is evolving fast. What’s coming next?

Expect:

- Self-healing, self-optimizing agents

- Ecosystems of thousands of live task-based agents

- Emotionally intelligent interfaces

- Multi-modal task planning (text, vision, sound)

The future is autonomous—and deeply collaborative.

💬 What’s Your Agentic Vision?

Building your own agentic workflows? Wrestling with scaling pain points?

Drop a comment or share your experience below—let’s shape the next wave of intelligent, scalable AI together!

Expert Opinions, Debates & Controversies

Is Autonomous AI Truly Ready for the Real World?

While the buzz around agentic AI is undeniable, experts are split on its real-world readiness.

Proponents argue that autonomous agents are already demonstrating massive productivity gains—automating workflows, writing reports, and even managing teams of other agents.

Skeptics, however, raise concerns about hallucinations, lack of guardrails, and unpredictable behavior in high-stakes environments.

Dr. Ethan Mollick (Wharton) praises their transformative potential:

“Agentic systems are already outperforming junior staff in many knowledge work scenarios.”

Meanwhile, Dr. Gary Marcus, a vocal AI critic, warns:

“Without interpretability and reasoning checks, we’re deploying black-box agents that might spiral into failure without anyone noticing.”

Do Agentic Systems Threaten Human Jobs—or Just Change Them?

There’s fierce debate over whether agents will replace or augment human labor.

Supporters of augmentation say agents will take over routine tasks, freeing humans for creativity and judgment. Critics argue that even creative roles—like writing, design, and strategy—are increasingly vulnerable.

Example: Marketing teams are already using agents to:

- Research competitors

- Write ad copy

- A/B test campaigns

…and all without human involvement.

Where do we draw the line?

Centralized Control vs. Decentralized Intelligence

Another philosophical divide: Should agentic AI be centralized under a master controller, or should agents act independently and form ad hoc coalitions?

Some advocate for tight control, especially in enterprise or government use cases. Others argue decentralized swarms of agents (think DAOs or open networks) could unlock far more innovation.

This debate mirrors early internet vs. intranet battles—and it’s still unfolding.

Should Agents Be Allowed to Set Their Own Goals?

As agents become more autonomous, a core controversy is emerging:

Should they set and prioritize their own goals?

Letting agents self-direct could enable powerful emergent behaviors—but it also introduces ethical, legal, and control concerns.

Critics argue we need clear intent boundaries and goal alignment frameworks, especially as agents touch sensitive domains like finance, healthcare, or national security.

Open Source vs. Closed Agent Ecosystems

The explosion of open-source agents (AutoGPT, BabyAGI, CrewAI) has been a democratizing force. But some fear that open systems accelerate risk—giving powerful tools to bad actors or spawning unpredictable behaviors.

On the flip side, closed systems limit innovation and concentrate power in a few corporate hands.

The debate mirrors larger tech industry tensions between open development and secure deployment.

⚙️ Agentic AI Toolkits: 2024–2025 Comparison

| Toolkit | Purpose & Philosophy | Key Features | Scaling Potential | Use Case Fit | Downsides / Limitations |
|---|---|---|---|---|---|
| AutoGPT | Open-ended autonomy via task decomposition | Goal setting, recursive planning, tool usage, memory, file system access | ⚪ Experimental | R&D, prototypes, hobby bots | Unstable, high resource usage |
| BabyAGI | Minimal, lightweight autonomous task executor | Task queue, prioritization, feedback loop | ⚪ Low (demo-level) | Concept demos, simple tasks | Limited scalability, no teamwork |
| LangChain Agents | Framework for agent orchestration with LLMs | Agent types (reactive, plan-and-act), toolchains, memory, tracing tools | ✅ High | Production-ready workflows | Complex config, evolving APIs |
| CrewAI | Role-based multi-agent collaboration | Define agents by role, assign tools, team structure, task delegation | ✅ High | Enterprise, collab agents | Less flexible for solo agents |
| MetaGPT | Simulates multi-role software teams | Multi-agent team (PM, engineer, QA), role memory, task assignment | ⚪ Medium | Code generation, PM tooling | Focused mostly on dev workflows |
| OpenAgents (OpenAI) | Personal agents with persistent memory, tools | File, web, code, image tools, multi-step plans, persistent workspace | ✅ High | Knowledge workers, co-pilots | Closed source, tied to OpenAI API |
| AutoGen (Microsoft) | Multi-agent framework for LLM interaction | Conversable agents, turn-based planning, memory integration | ✅ High | Chat-centric use cases | Complex for non-dialog agents |
| Camel | Role-playing agents for co-working and debate | Dual-agent dialogues, idea generation, collaborative reasoning | ⚪ Medium | Ideation, brainstorming | Less suitable for task execution |
| SuperAgent | Plug-and-play agent framework with GUI support | UI builder, plugin manager, scheduling, OpenAI integration | ⚪ Medium | Custom agents for end-users | GUI-centric, less developer-first |
| AI Engineer / Devin (Cognition) | OS-level autonomous developer agents | DevOps, terminal control, IDE integration, debugging loops | ✅ Very High (soon) | Software engineering agents | Not publicly available yet |

🔍 Key Comparison Dimensions

1. 🧠 Agent Intelligence Models

| Toolkit | Planning | Memory | Tool Use | Reflection | Collaboration |
|---|---|---|---|---|---|
| AutoGPT | ✅ Basic | ✅ | ✅ | ⚪ Minimal | ⚪ No |
| LangChain | ✅ Modular | ✅ | ✅ | ✅ | ⚪ Limited |
| CrewAI | ✅ Role-based | ✅ | ✅ | ✅ | ✅ Yes |
| AutoGen | ✅ Turn-based | ✅ | ✅ | ✅ | ✅ Strong |
| MetaGPT | ✅ Scripted | ✅ | ✅ | ⚪ No | ✅ Predefined |

🧭 Summary: Strategic Roadmap for Scaling Agentic AI

| Layer | Focus Area | Key Metric |
|---|---|---|
| Cognitive Architecture | Reasoning, Planning, Reflection | Task completion over time |
| Infrastructure | Compute, Memory, Runtime | Agents per second, cost |
| Coordination | Multi-agent Collaboration | Team throughput, conflict rate |
| Alignment & Safety | Ethics, Oversight, Controls | Intervention frequency |
| Memory & Identity | Persistence of Self + Goals | Continuity score, memory recall |
| Human-AI Feedback | Interpretable UIs, HITL tuning | Human satisfaction, retrain ROI |
| Governance | Deployment Policy, Legal Risk | Auditability, compliance rate |

FAQs

How do I manage memory without running into token limits?

Use retrieval-augmented generation (RAG) and vector databases to store memory outside the model. Then retrieve only what’s relevant during each task.

For example, instead of feeding an agent 100 past conversations, store them in a vector DB and retrieve only the 3 most relevant examples based on context.

Can agentic AI operate across different domains?

Absolutely. With the right toolset and environment abstraction, an agent can switch from writing code to handling customer support or even running business ops.

Imagine an agent that:

- Reads new product specs (engineering)

- Writes press releases (marketing)

- Tracks launch metrics (analytics)

It’s all doable with structured tools and flexible planning logic.

How do I keep agents from overloading APIs or hitting rate limits?

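Use rate limiters and circuit breakers in your orchestration logic. Rate limiters cap how often agents can call a given API and throttle requests once the cap is reached; circuit breakers temporarily stop calls to an API that keeps failing, so agents don’t pile on retries.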

For example, if five agents are pulling data from the same analytics dashboard, use a centralized fetcher agent that caches responses and shares them—instead of bombarding the API five times.
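Both patterns fit in a few lines of standard-library Python. This is an illustrative sketch, not any particular library's API. The token bucket allows short bursts while capping the average request rate. The circuit breaker stops calling an API after repeated failures and waits for a cooldown to pass. A shared fetcher agent could wrap its API calls with both.

```python
import time


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate` requests per second."""

    def __init__(self, rate: float, capacity: int):
        self.rate, self.capacity = rate, capacity
        self.tokens = float(capacity)
        self.updated = time.monotonic()

    def try_acquire(self) -> bool:
        now = time.monotonic()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should wait or queue the request


class CircuitBreaker:
    """Blocks calls for `cooldown` seconds after `max_failures` consecutive errors."""

    def __init__(self, max_failures: int = 3, cooldown: float = 30.0):
        self.max_failures, self.cooldown = max_failures, cooldown
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if time.monotonic() - self.opened_at < self.cooldown:
                raise RuntimeError("circuit open: skipping call")
            self.opened_at = None  # cooldown over: allow a trial call
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = time.monotonic()
            raise
        self.failures = 0  # a success closes the circuit fully
        return result
```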

Can I plug agentic AI into existing software workflows?

Yes—this is one of its biggest strengths. You can embed agents into tools like Slack, Notion, Airtable, or internal CRMs using APIs or RPA (robotic process automation).

Example: A sales agent can auto-log interactions in HubSpot, trigger follow-ups, and summarize meeting transcripts—all while syncing across tools in real time.

What happens if an agent “hallucinates” or makes up data?

This is a known risk with LLM-based agents. Minimize it by:

- Using tools for factual queries (e.g., a database lookup instead of generation)

- Adding a validation agent that checks for hallucination-prone outputs

- Implementing trust thresholds for when to escalate tasks to humans

For example, if an agent generates financial insights, verify numbers via a data-fetching plugin before they get published.
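Here is one narrow but useful validation check. It extracts every number from the draft and flags any that don't match a value fetched from the source of truth. The regex, the relative tolerance, and the sample figures are assumptions. Real outputs also need checks on units, dates, and which number refers to which metric.

```python
import re


def unverified_numbers(text: str, source: dict[str, float],
                       tolerance: float = 0.005) -> list[str]:
    """Return numbers in the draft that match no value from the trusted source."""
    # Skip digits glued to letters (e.g. the "1" in "Q1"); drop thousands separators.
    found = re.findall(r"(?<![\w.])-?\d+(?:\.\d+)?", text.replace(",", ""))
    trusted = list(source.values())
    bad = []
    for num in found:
        value = float(num)
        # Relative tolerance, with a floor of 1.0 so small values aren't over-strict.
        if not any(abs(value - t) <= tolerance * max(abs(t), 1.0) for t in trusted):
            bad.append(num)
    return bad
```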

How can agents access real-time or frequently changing information?

Use tool integrations or API plugins to fetch real-time data. This allows agents to pull updated stock prices, traffic conditions, weather, or internal metrics on demand.

Example: A travel-planning agent can check flight prices live from a service like Skyscanner or Kayak, rather than relying on stale training data.

Are there any frameworks that handle all of this?

There’s no one-size-fits-all, but platforms like:

- LangChain – modular pipelines and agent orchestration

- CrewAI – team-based agent collaboration

- AutoGen – conversational multi-agent workflows

- Semantic Kernel – tool integration, memory, and planning

…are great starting points.

Most teams customize these based on the problem domain, tech stack, and how much autonomy they want to give the agents.

How do I debug agent failures or trace what went wrong?

You need agent observability. Track every decision, tool call, memory access, and message using:

- Structured logs

- Agent step visualizations

- Trace dashboards

Example: If your agent books the wrong meeting slot, you should be able to trace exactly when it misunderstood the time zone, which memory it accessed, and what function it called.
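Observability starts with one structured event per step. This sketch emits JSON lines through Python's `logging` module and tags each one with a run ID, so you can replay a whole run. The event kinds and fields mirror the time-zone example above, but they are an assumed schema, not a standard.

```python
import json
import logging
import time
import uuid

logger = logging.getLogger("agent.trace")


def trace(run_id: str, step: int, kind: str, **details) -> dict:
    """Emit one JSON line per decision, tool call, or memory access."""
    event = {"run_id": run_id, "step": step, "kind": kind, "ts": time.time(), **details}
    logger.info(json.dumps(event))
    return event


logging.basicConfig(level=logging.INFO, format="%(message)s")
run_id = uuid.uuid4().hex
events = [
    trace(run_id, 1, "memory_read", key="user_timezone", value="UTC"),
    trace(run_id, 2, "decision", summary="user wants 3pm; assuming UTC"),
    trace(run_id, 3, "tool_call", tool="book_meeting", args={"time": "15:00", "tz": "UTC"}),
]
# To debug a run, filter the log by run_id and read the steps in order:
# the wrong booking traces back to the memory_read at step 1.
```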

Can agents be reused or cloned across different projects?

Definitely. Think of agents as modular workers. You can reuse a data-cleaning agent, a report-writing agent, or a PDF-extracting agent across multiple projects with minor tweaks.

Just make sure they’re built with clean APIs and configurable parameters, so they slot into new workflows without rework.

Key Resources for Scaling Agentic AI

Frameworks & Libraries

- LangChain – Modular framework for building AI-powered agents with memory, tools, and reasoning capabilities.

- CrewAI – Lightweight multi-agent orchestration engine focused on teamwork and specialized roles.

- AutoGen by Microsoft – Python framework for building multi-agent conversation and task systems with memory and feedback loops.

- Semantic Kernel – SDK for integrating AI with real-world tools, enabling tool-use, memory, and planning.

Tools for Memory and Context Management

- FAISS (Facebook AI Similarity Search) – Popular library for fast, efficient similarity search over dense vectors.

- Weaviate – Open-source vector database with semantic search and hybrid search capabilities.

- Chroma – Simple and developer-friendly vector store designed for LLMs and RAG.

Agent Deployment & Scaling Infrastructure

- Ray – Scalable framework for parallel and distributed execution of AI workloads.

- FastAPI – Python web framework perfect for serving lightweight AI agents via APIs.

- Docker + Kubernetes – Industry-standard tools for containerizing and scaling agent environments.

Monitoring, Metrics & Logging

- Prometheus – Monitoring system with a built-in time-series database.

- Grafana – Visualization tool for building live dashboards and agent performance tracking.

- OpenTelemetry – Observability framework for tracing, logging, and metrics collection.

Research & Industry Papers

- ReAct: Synergizing Reasoning and Acting in Language Models – Foundational paper on combining reasoning traces with actions in LLMs.

- Toolformer: Language Models Can Teach Themselves to Use Tools – Insights into autonomous tool-use and reasoning.

- AutoGPT & BabyAGI repos on GitHub – Experimental, open-source implementations of early autonomous agents.