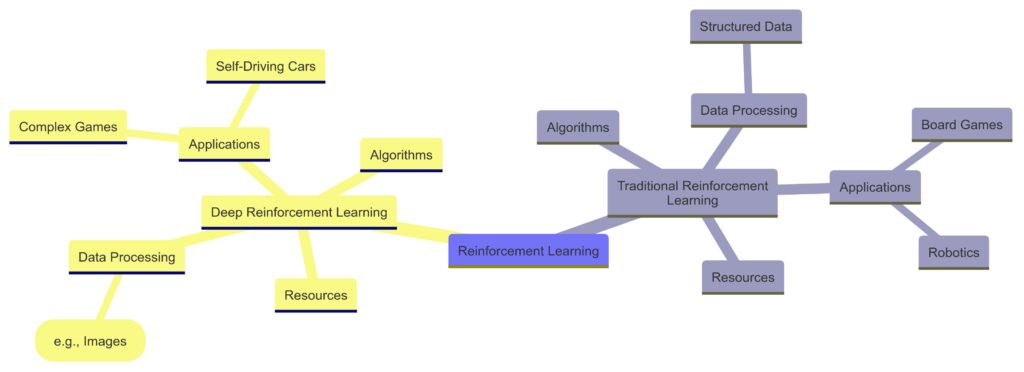

As reinforcement learning (RL) gains momentum in artificial intelligence, one branch of it has moved to the forefront: deep reinforcement learning (DRL).

Understanding what sets deep reinforcement learning apart from traditional approaches helps clarify why it’s so influential in complex decision-making tasks. Here, we’ll dive into the distinctions between these two frameworks, exploring how they’re reshaping the way AI learns from its environment.

The Basics of Reinforcement Learning

How Traditional Reinforcement Learning Works

In traditional reinforcement learning, an agent learns by interacting with an environment, making decisions that lead to rewards or penalties. Over time, it aims to maximize the rewards by finding an optimal strategy, or policy, that dictates which actions yield the best outcomes in different situations.
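The goal of maximizing rewards over time is usually written as a discounted return. The notation here follows common textbook convention rather than anything defined in this article: $r_t$ is the reward at step $t$, $\gamma \in [0, 1)$ is an assumed discount factor that weights near-term rewards more heavily, and $\pi$ is the policy.

$$
G_t = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1}, \qquad \pi^{*} = \arg\max_{\pi}\; \mathbb{E}_{\pi}\left[ G_t \right]
$$

As a small worked case, with $\gamma = 0.9$ and three rewards $1, 0, 2$, the return is $1 + 0.9 \cdot 0 + 0.81 \cdot 2 = 2.62$.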

Key components include:

- State: The environment’s current conditions.

- Action: The agent’s possible choices.

- Reward: Feedback on whether an action was beneficial.

- Policy: Strategy guiding the agent’s actions.
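The four components above can be seen together in a single interaction loop. The sketch below uses a made-up one-dimensional corridor as the environment; the names `step`, `policy`, and `run_episode` and the reward values are illustrative assumptions, not part of any library.

```python
# Hypothetical 1-D corridor: positions 0..4, the goal is position 4.
GOAL = 4
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    """Environment dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), GOAL)  # stay inside the corridor
    done = next_state == GOAL
    reward = 1.0 if done else -0.1  # goal bonus, small penalty per move
    return next_state, reward, done


def policy(state):
    """A fixed, hand-written policy: always move right."""
    return +1


def run_episode(policy_fn, max_steps=20):
    """Roll out one episode and return the total reward collected."""
    state, total_reward = 0, 0.0
    for _ in range(max_steps):
        action = policy_fn(state)                  # policy maps state to action
        state, reward, done = step(state, action)  # environment responds
        total_reward += reward                     # reward is the feedback
        if done:
            break
    return total_reward


episode_reward = run_episode(policy)
print(episode_reward)
```

Here the policy is fixed by hand. A learning algorithm would instead adjust the policy based on the rewards it observes.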

Core Algorithms in Traditional RL

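Traditional reinforcement learning relies on algorithms like Q-learning and simple policy gradient methods. In their classic form, these algorithms store a value or action preference for every state in a lookup table. That works well for simpler tasks, but it struggles with large-scale problems: the table needs one entry per state-action pair, so memory and computation grow with the size of the state space. The deep RL algorithms covered later in this article replace the table with a neural network; the table below summarizes three of them for comparison.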
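To make the lookup-table idea concrete, here is a minimal sketch of tabular Q-learning on a made-up five-state corridor. The environment, the hyperparameter values (`alpha`, `gamma`, `epsilon`), and the function names are assumptions chosen for illustration.

```python
import random

N_STATES, GOAL = 5, 4
ACTIONS = (0, 1)  # 0 = left, 1 = right


def step(state, action):
    """Hypothetical corridor dynamics: reward 1.0 only when the goal is reached."""
    move = 1 if action == 1 else -1
    next_state = min(max(state + move, 0), GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # the lookup table: q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                best = max(q[state])          # exploit, breaking ties at random
                action = rng.choice([a for a in ACTIONS if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # Q-learning update: move the estimate toward the bootstrapped target.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train()
greedy_policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(GOAL)]
print(greedy_policy)
```

In this toy setting the greedy policy should settle on moving right in every non-goal state. The table has only 5 x 2 entries here, which is exactly what stops being practical as the number of states grows.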

| Algorithm | Description | Best For | Limitations | Examples |
|---|---|---|---|---|
| DQN | Deep Q-Network (DQN) uses a Q-learning approach combined with deep neural networks to handle large state spaces. | Discrete action spaces, especially in environments with a limited number of actions. | Cannot handle continuous action spaces directly and scales poorly to very large action sets; requires extensive tuning for stability. | Video game AI (e.g., Atari games), Robotics (for simple tasks) |
| DDPG | Deep Deterministic Policy Gradient (DDPG) is an actor-critic model for continuous action spaces. It utilizes both policy and value networks. | Continuous control tasks and environments requiring precise actions. | Sensitive to hyperparameters; prone to instability in training and divergence with long horizons. | Robotics (fine motor control), Simulated physics tasks |
| PPO | Proximal Policy Optimization (PPO) is a policy gradient method that uses a clipped objective to limit destructive updates. | Policy-based control, complex and continuous action spaces with long episode lengths. | Balancing exploration and exploitation is challenging; may underperform in highly stochastic environments. | Self-driving cars, Healthcare (treatment planning), Financial trading simulations |

Breakdown of Information:

- DQN (Deep Q-Network):
  - Description: Combines Q-learning with deep neural networks to handle large state spaces with discrete actions.
  - Best For: Discrete action environments, such as those in video games.
  - Limitations: Limited to discrete actions, often unstable without careful tuning.
  - Examples: Commonly used in video game AI and simpler robotics tasks.

- DDPG (Deep Deterministic Policy Gradient):
  - Description: An actor-critic model with policy and value networks, tailored for continuous action spaces.
  - Best For: Precise, continuous control tasks.
  - Limitations: Prone to instability and sensitive to tuning.
  - Examples: Robotics for fine motor control, physics-based simulations.

- PPO (Proximal Policy Optimization):
  - Description: A policy gradient method with a clipped objective for stable learning.
  - Best For: Complex, continuous action tasks with long episodes.
  - Limitations: Balancing exploration and exploitation can be tricky; may underperform in highly stochastic environments.
  - Examples: Self-driving cars, healthcare planning, and financial trading.
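The “clipped objective” mentioned for PPO is easier to see with numbers. Below is a minimal per-sample sketch, assuming the usual formulation in which `ratio` is the new policy’s probability of an action divided by the old policy’s, and `advantage` says how much better than expected the action was. The function name and the `clip_eps=0.2` default are illustrative choices.

```python
def clipped_surrogate(ratio, advantage, clip_eps=0.2):
    """PPO clipped objective for a single sample (a quantity to be maximized)."""
    clipped_ratio = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    # Taking the minimum keeps the more pessimistic of the two estimates.
    return min(ratio * advantage, clipped_ratio * advantage)


# Good action (positive advantage): the ratio is capped at 1.2,
# so the objective is about 1.2 * 2.0 instead of 1.5 * 2.0.
capped_gain = clipped_surrogate(1.5, 2.0)

# Bad action (negative advantage): once the ratio falls below 0.8,
# the clipped term is used, so pushing the ratio lower earns nothing more.
capped_loss = clipped_surrogate(0.5, -1.0)

print(capped_gain, capped_loss)
```

In a full implementation this value would be averaged over a batch and differentiated with respect to the policy parameters; the sketch only shows how clipping removes the incentive for very large policy changes.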

Deep Reinforcement Learning: A New Approach

Why “Deep” Matters in DRL

The “deep” in deep reinforcement learning comes from using deep neural networks to process the data within the RL framework. Rather than relying on tables, DRL leverages the power of neural networks to approximate values or policies, allowing it to tackle high-dimensional data, like images or real-world simulations.

For example, in a video game setting, a DRL agent can analyze pixels directly, while a traditional RL agent would struggle to interpret such raw data. This shift enables DRL to address far more complex tasks that traditional methods couldn’t handle effectively.
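As a rough sketch of what “approximating values with a network” means, the snippet below passes a tiny fake frame through a two-layer network to get one Q-value per action. It is untrained and written in plain Python for readability; the layer sizes, weight range, and helper names are assumptions, and a real DRL agent would use a deep learning library and a much larger (often convolutional) network.

```python
import random


def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one fully connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(x, layer, relu=False):
    """Apply one fully connected layer, optionally followed by ReLU."""
    weights, biases = layer
    out = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
    return [max(0.0, v) for v in out] if relu else out


def q_values(pixels, hidden_layer, output_layer):
    """Map a flat pixel vector to one estimated Q-value per action."""
    return dense(dense(pixels, hidden_layer, relu=True), output_layer)


rng = random.Random(0)
N_PIXELS, N_HIDDEN, N_ACTIONS = 16, 8, 3  # a 4x4 "frame"; sizes are illustrative
hidden_layer = make_layer(N_PIXELS, N_HIDDEN, rng)
output_layer = make_layer(N_HIDDEN, N_ACTIONS, rng)

frame = [rng.random() for _ in range(N_PIXELS)]  # stand-in for raw pixel intensities
q = q_values(frame, hidden_layer, output_layer)
best_action = max(range(N_ACTIONS), key=lambda a: q[a])
print(q, best_action)
```

The point is that no entry is stored per frame: the same fixed set of weights produces Q-values for any input, including frames the agent has never seen.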

Popular DRL Algorithms

Algorithms like Deep Q-Networks (DQN), Deep Deterministic Policy Gradient (DDPG), and Proximal Policy Optimization (PPO) have revolutionized AI by enabling DRL to solve tasks whose state spaces are far too large to enumerate. By training on large amounts of interaction experience, these algorithms learn to estimate which actions will yield the highest long-term reward, even in unpredictable environments.
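To illustrate how such an algorithm turns experience into a training signal, here is a sketch of the temporal-difference target used in DQN-style training. It assumes the next-state Q-values have already been computed (typically by a separate, slowly updated target network) and that each transition carries a `done` flag; the function name and argument layout are hypothetical.

```python
def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """TD targets: reward plus discounted best next-state value, or just reward at episode end."""
    targets = []
    for reward, next_q, done in zip(rewards, next_q_values, dones):
        bootstrap = 0.0 if done else gamma * max(next_q)
        targets.append(reward + bootstrap)
    return targets


batch_targets = dqn_targets(
    rewards=[1.0, 0.0],
    next_q_values=[[0.5, 2.0], [3.0, 1.0]],  # one list of per-action values per transition
    dones=[False, True],
    gamma=0.5,
)
print(batch_targets)  # [2.0, 0.0]
```

The network is then trained so that its prediction for the action actually taken moves toward these targets, which is the same update rule as tabular Q-learning with the table replaced by a function approximator.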

Key Differences Between Traditional and Deep Reinforcement Learning

Scalability and Complexity

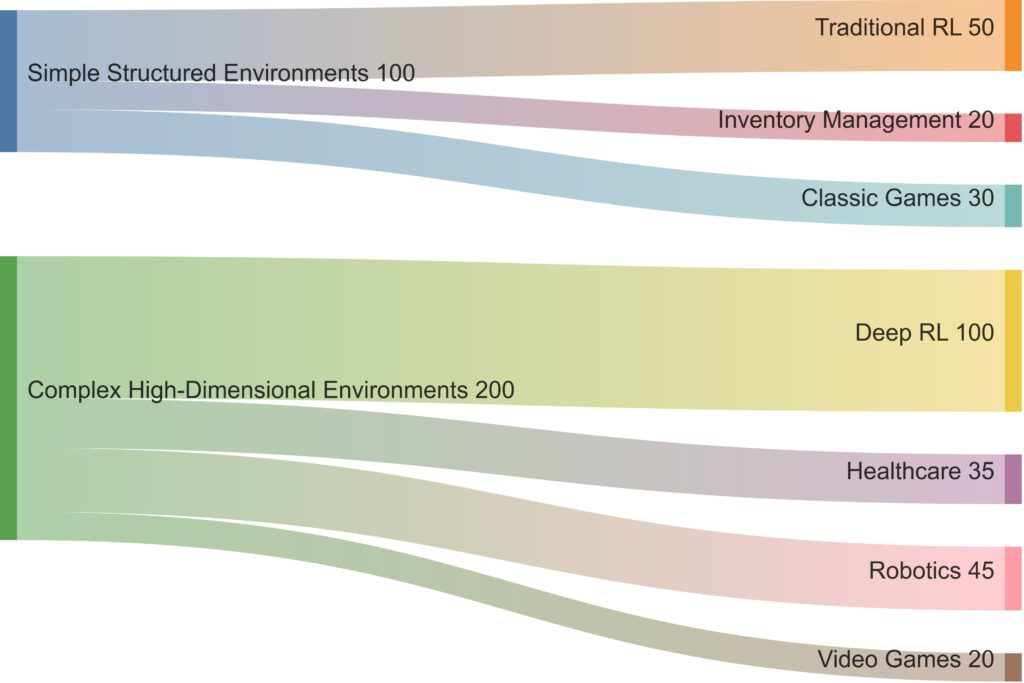

Traditional RL is well-suited for smaller problems with a limited number of states and actions. It works efficiently in structured environments, where it’s possible to maintain a table of all potential states. However, deep reinforcement learning thrives in large, unstructured environments where mapping every possible state would be unmanageable.

This capability is why DRL is used in complex applications, from self-driving cars to robotics and sophisticated games like Go or StarCraft, which involve an immense number of possibilities.
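A quick back-of-the-envelope calculation shows why a table stops being an option. The sketch below compares an upper bound on Tic-Tac-Toe board fillings with the number of possible images in a single small grayscale frame; the 84x84, 8-bit frame size is an assumption picked as a typical preprocessed game frame.

```python
import math


def log10_states(n_cells, values_per_cell):
    """log10 of the number of distinct configurations, values_per_cell ** n_cells."""
    return n_cells * math.log10(values_per_cell)


# Tic-Tac-Toe: 9 cells, each empty, X, or O (an upper bound that includes illegal boards).
tic_tac_toe = log10_states(9, 3)

# One 84x84 grayscale frame with 256 intensity levels per pixel.
single_frame = log10_states(84 * 84, 256)

print(f"Tic-Tac-Toe: about 10^{tic_tac_toe:.1f} boards")
print(f"One frame:   about 10^{single_frame:.0f} possible images")
```

The first number (under 20,000 boards) fits in a table easily. The second has an exponent in the tens of thousands, so no table could hold it, which is why DRL approximates values with a network instead.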

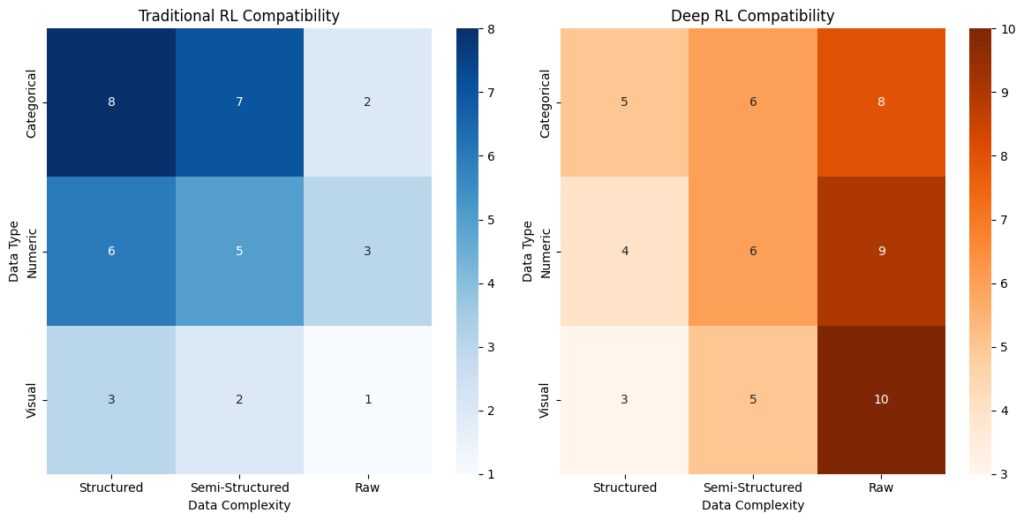

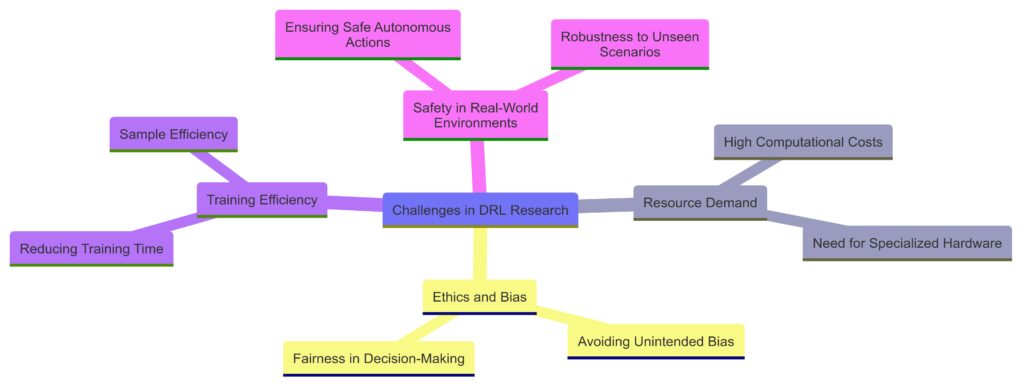

Computation and Resource Demand

Deep reinforcement learning is more resource-intensive, requiring significant computational power and memory for training. The neural networks in DRL necessitate extensive data processing, which often involves GPUs or TPUs to accelerate learning. Traditional RL, on the other hand, can typically function on more modest computational setups, as it doesn’t rely on deep neural networks.

For companies and researchers with access to large-scale compute, DRL can reach levels of performance on complex tasks that tabular methods cannot. However, traditional RL remains valuable in simpler applications, especially when resources are limited.

Learning from Raw Data

A standout feature of DRL is its ability to learn directly from raw, unstructured data, such as images or complex sensor inputs, thanks to its neural network’s pattern-recognition abilities. In contrast, traditional RL needs a more structured form of data to function optimally, often requiring predefined state representations.

In environments where direct data interpretation is needed—like interpreting visual information in self-driving cars or in real-time strategy games—DRL’s capacity to handle raw inputs proves invaluable.

Choosing Between DRL and Traditional RL

When to Use Traditional Reinforcement Learning

Traditional reinforcement learning is a great choice when:

- The problem space is small, and states are easy to track.

- Limited computational resources are available.

- The environment is structured and doesn’t change frequently.

Applications might include basic robotics, inventory management, and small board games like Tic-Tac-Toe, where every state can realistically be stored in a table.

When Deep Reinforcement Learning is Better

Deep reinforcement learning is ideal when:

- The environment is complex or dynamic.

- Raw, high-dimensional data (like images or sensor inputs) must be interpreted.

- Sufficient computational resources (e.g., GPUs) are available for training.

DRL is typically used in more advanced applications, such as autonomous driving, healthcare diagnostics, and complex video game environments.

The Future of Reinforcement Learning

As advancements in computing power continue, deep reinforcement learning is likely to become more accessible and efficient, expanding its applications even further. However, traditional RL remains an important tool, especially for simpler and more resource-limited tasks. Together, they offer a versatile toolkit for solving problems, ranging from the straightforward to the extraordinarily complex.

Understanding the distinctions between these two types of reinforcement learning allows developers and researchers to choose the best tool for the task, leveraging each approach’s strengths in the rapidly evolving field of AI.

FAQs

Why is deep reinforcement learning more resource-intensive?

Deep reinforcement learning requires significant computational power and memory because it uses neural networks to approximate values and policies. Training these networks requires extensive data and usually benefits from high-performance hardware, such as GPUs or TPUs, to process and learn from vast datasets efficiently.

When is it better to use traditional reinforcement learning?

Traditional reinforcement learning works best in smaller, structured environments with limited states and actions. It’s ideal when computational resources are limited or when the task at hand doesn’t require processing complex data, such as in simple robotics, classic board games, or certain inventory management systems.

How does DRL handle complex environments better than traditional RL?

By using neural networks, DRL can learn from high-dimensional or raw data like images, allowing it to interpret and make decisions within large, dynamic environments. This capability enables DRL to tackle applications like autonomous driving and real-time strategy games, where the number of possible states or outcomes would overwhelm traditional RL.

Can deep reinforcement learning learn directly from raw data?

Yes, one of DRL’s standout features is its ability to process raw data directly, such as visual information from sensors or images. Traditional RL, in contrast, typically requires a structured representation of data, as it lacks the neural network’s capacity to interpret unprocessed data.

What are some applications of deep reinforcement learning?

DRL is used in advanced applications that involve large, complex environments and high-dimensional data, such as autonomous vehicles, healthcare diagnostics, financial modeling, and even video game AI. Its capacity to learn from unstructured data makes it a powerful tool in areas requiring intricate decision-making.

Is traditional reinforcement learning still relevant?

Absolutely. Traditional reinforcement learning remains a practical choice for simpler or well-defined tasks, where the complexity of DRL isn’t necessary. It’s highly effective in controlled environments with limited states and where computational resources are limited, proving useful for many everyday applications in robotics, gaming, and process optimization.

Why has deep reinforcement learning gained popularity in recent years?

The rise of deep reinforcement learning stems from advances in computing power and access to larger datasets. The combination of deep neural networks and reinforcement learning opened doors to tackling more sophisticated tasks, such as real-time strategy games and robotics, that traditional RL couldn’t manage. This capability to handle high-dimensional and unstructured data has made DRL a popular choice for complex AI applications.

What are some key algorithms in deep reinforcement learning?

Popular algorithms include Deep Q-Networks (DQN), which extend Q-learning by using neural networks to approximate the Q-value, and Proximal Policy Optimization (PPO), which efficiently improves policy-based learning. Other DRL algorithms like Deep Deterministic Policy Gradient (DDPG) work well in continuous action spaces, making them suitable for applications in robotics and other control tasks.

How do neural networks enhance the performance of deep reinforcement learning?

Neural networks give DRL the ability to recognize patterns and extract features from high-dimensional data, allowing it to handle complex inputs like images or real-time sensor data. Instead of using explicit tables or rules, DRL learns indirectly through neural networks, enabling more generalization and adaptability across diverse, high-variability environments.

What are the challenges of using deep reinforcement learning?

Despite its advantages, DRL faces challenges, including long training times, high resource demands, and potential instability in learning. DRL can be prone to overfitting, where the model performs well in training but struggles in real-world applications. Additionally, ensuring DRL agents behave safely and predictably in uncertain environments remains a focus of ongoing research.

How does reward function design impact reinforcement learning?

In both traditional and deep reinforcement learning, the reward function is crucial because it drives the agent’s learning. An improperly defined reward can lead to undesirable or inefficient behaviors, as the agent optimizes for the reward regardless of the outcome’s quality. Thoughtful reward design ensures that the agent learns behaviors aligned with the desired goals.

What industries benefit the most from deep reinforcement learning?

Deep reinforcement learning has shown great promise in autonomous vehicles, healthcare, finance, robotics, and gaming. For instance, DRL helps self-driving cars interpret sensor data in real-time, while in healthcare, it supports diagnostics and personalized medicine. Financial institutions use DRL for high-stakes trading strategies, and robotics applications rely on it for precise control and task automation.

Can deep reinforcement learning be combined with other AI approaches?

Yes, DRL is often combined with other AI techniques, such as supervised learning or unsupervised learning methods, to create more robust systems. For example, DRL might be paired with supervised learning to pre-train models on labeled data, speeding up the learning process. This integration of techniques can improve performance and make the DRL agent more versatile.

Are there alternatives to deep reinforcement learning for complex tasks?

Alternatives to DRL for complex tasks include model-based reinforcement learning, hierarchical reinforcement learning, and evolutionary algorithms. Model-based RL seeks to create a predictive model of the environment, while hierarchical RL breaks tasks into sub-tasks, simplifying learning. Evolutionary algorithms explore solutions through population-based searches, offering unique approaches for complex, high-dimensional problems.

How does exploration differ between traditional and deep reinforcement learning?

In both traditional RL and DRL, exploration is essential for discovering rewarding actions, and both commonly use simple strategies such as epsilon-greedy, which picks a random action with a small probability and the best-known action otherwise. The difference is scale. In small environments, simple random exploration eventually visits every state; in the vast, high-dimensional spaces DRL targets, it can be far too slow on its own. DRL relies on neural networks to generalize from visited states to similar unvisited ones, and techniques like experience replay (storing past experiences for reuse during training) help it get more out of the data that exploration gathers.
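Epsilon-greedy is simple enough to show in a few lines. This is a minimal sketch; the function name, the example Q-values, and the choice of `epsilon=0.2` are illustrative assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon pick a random action, otherwise the highest-valued one."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))                       # explore
    return max(range(len(q_values)), key=lambda a: q_values[a])   # exploit


rng = random.Random(0)
q = [0.1, 0.9, 0.3]  # estimated values for three actions; action 1 looks best
choices = [epsilon_greedy(q, epsilon=0.2, rng=rng) for _ in range(1000)]
greedy_share = choices.count(1) / len(choices)
print(greedy_share)
```

With these settings the best-looking action should be chosen roughly 87% of the time (80% from exploiting plus a third of the 20% random picks). The same function works whether `q_values` comes from a table row or from a neural network’s output.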

What role does experience replay play in deep reinforcement learning?

Experience replay is a key feature in DRL that enhances learning stability. By storing past experiences (state, action, reward, next state) in a memory buffer and sampling them randomly during training, DRL agents can break the temporal correlation of consecutive experiences, improving the neural network’s performance. This technique enables DRL to learn more efficiently and avoid overfitting to recent events, which is particularly useful for complex, sequential tasks.
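A replay buffer along these lines can be sketched with the standard library alone. The class and method names are hypothetical, and the extra `done` flag stored with each transition is an assumption commonly added in practice.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-size memory of transitions; the oldest entries are dropped when full."""

    def __init__(self, capacity, seed=None):
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks up consecutive transitions.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


memory = ReplayBuffer(capacity=5, seed=0)
for t in range(10):  # ten consecutive steps of a made-up trajectory
    memory.add(state=t, action=0, reward=1.0, next_state=t + 1, done=(t == 9))

batch = memory.sample(batch_size=3)
print(len(memory), batch)
```

Only the five most recent transitions remain after ten insertions, and each sampled batch mixes them in random order rather than in the order they occurred.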

How does deep reinforcement learning handle continuous action spaces?

While traditional RL typically excels in discrete action spaces, DRL algorithms like Deep Deterministic Policy Gradient (DDPG) and Soft Actor-Critic (SAC) are specifically designed to handle continuous action spaces. This capability is essential for applications in robotics or control systems, where actions (e.g., motor angles or throttle control) must be precise and can vary across a continuous range.
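For a sense of what acting in a continuous space looks like, the sketch below takes a deterministic action (as a DDPG-style actor network would output), adds exploration noise, and clips the result to the valid range. Plain Gaussian noise is used here as a simplifying assumption; the function name, the throttle range, and the noise scale are all illustrative.

```python
import random


def noisy_action(actor_output, low, high, noise_std, rng):
    """Add Gaussian exploration noise to a deterministic action, then clip to [low, high]."""
    noisy = actor_output + rng.gauss(0.0, noise_std)
    return min(max(noisy, low), high)


rng = random.Random(0)
throttle_from_actor = 0.95  # hypothetical actor output for a throttle in [0.0, 1.0]
samples = [noisy_action(throttle_from_actor, 0.0, 1.0, noise_std=0.1, rng=rng)
           for _ in range(5)]
print(samples)
```

Unlike the discrete case, there is no list of actions to take a maximum over: the action is a real number produced directly by the policy, and exploration happens by perturbing it.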

Is deep reinforcement learning prone to ethical concerns?

Yes, ethical considerations are critical in DRL applications, especially where safety and bias are concerns. For instance, if a DRL agent is trained in a way that rewards aggressive driving behaviors in self-driving cars, it could lead to unsafe actions in real-world scenarios. Similarly, biases in training data can cause DRL agents to act unfairly, emphasizing the need for careful reward design, safety protocols, and monitoring to mitigate risks.

What are the limitations of traditional reinforcement learning?

Traditional RL’s main limitations are its scalability and dependence on predefined states. As tasks grow in complexity or environments become less structured, traditional RL struggles because it relies on tabular representations or fixed policies. These methods aren’t designed for handling high-dimensional, dynamic data, making them less suited for tasks requiring flexibility and adaptability, such as visual processing or continuous action control.

How can deep reinforcement learning improve over time?

DRL is evolving with techniques to stabilize learning, such as Rainbow DQN, which combines multiple improvements (e.g., prioritized replay, dueling networks) to increase performance. Additionally, transfer learning allows DRL models to apply knowledge from one task to another, reducing the need for extensive retraining. Researchers are also working on model-based DRL, which aims to speed up learning by simulating parts of the environment, making DRL more efficient and accessible.

Are there examples of deep reinforcement learning outperforming traditional methods?

Yes, DRL has surpassed traditional methods in several domains, most notably in games and robotics. For example, DeepMind’s AlphaGo and AlphaStar leveraged DRL to outperform human champions in Go and StarCraft, which are highly complex games. In robotics, DRL enables robotic arms to learn precise tasks, such as object manipulation, without requiring detailed pre-programming, outperforming traditional RL methods that lack adaptability for such nuanced tasks.

What are some current challenges in deep reinforcement learning research?

Current challenges include improving training efficiency, handling real-world noise and uncertainty, and ensuring ethical decision-making. Researchers are also focused on creating DRL agents that can generalize better across tasks, as well as increasing stability during training. Solving these challenges will help DRL become more practical for real-world applications that require reliability and safety, like healthcare and autonomous driving.

Can reinforcement learning be used in unsupervised settings?

Reinforcement learning is usually treated as its own paradigm, separate from supervised and unsupervised learning, but it shares one property with unsupervised methods: neither traditional RL nor DRL needs explicitly labeled examples. The agent learns directly from interactions, and the reward signal it tries to maximize takes the place of labels. This approach is particularly useful for problems where obtaining labeled data is difficult or costly, as in dynamic, real-world applications.

Resources

Online Courses

- “Deep Reinforcement Learning Nanodegree” by Udacity – A practical, hands-on course.

- “CS50’s Introduction to Artificial Intelligence with Python” by Harvard (edX) – Harvard’s free online AI course, including reinforcement learning.

- “Reinforcement Learning Specialization” by University of Alberta (Coursera) – Covers core and advanced topics in reinforcement learning.

Research Papers

- “Playing Atari with Deep Reinforcement Learning” by Mnih et al. – Introduces DQN, a cornerstone paper in DRL.

- “Proximal Policy Optimization Algorithms” by Schulman et al. – A paper describing the PPO algorithm, widely used in DRL applications.

- “Mastering the Game of Go without Human Knowledge” by Silver et al. – Describes the DRL approach behind AlphaGo Zero.

Tools and Libraries

- OpenAI Gym – Provides a variety of environments for reinforcement learning (now maintained as Gymnasium).

- Stable Baselines3 – PyTorch implementations of DRL algorithms, with documentation and tutorials.

- RLlib – A scalable reinforcement learning library built on Ray.