Instance segmentation has advanced rapidly, driven by architectures such as Mask R-CNN and transformer-based models. Let’s dive into how they work, how they compare, and what lies ahead for instance segmentation.

Understanding Instance Segmentation: The Basics

What is Instance Segmentation?

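Instance segmentation is a computer vision task in which a model detects each individual object in an image and labels the pixels that belong to it. Object detection stops at a bounding box, while instance segmentation also delineates each object’s precise shape. Unlike semantic segmentation, it keeps two objects of the same class (say, two adjacent cars) separate.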
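To make the distinction concrete, the following minimal sketch in plain Python (no vision library assumed) stores each object as its own binary mask with a class label and then collapses the instances into a semantic map. The toy 4×6 grid, the dictionary layout, and the `to_semantic` helper are illustrative assumptions, not part of any particular framework.

```python
from typing import Dict, List

Mask = List[List[int]]  # 1 = pixel belongs to the object, 0 = background


def to_semantic(instances: List[dict], height: int, width: int,
                class_ids: Dict[str, int]) -> Mask:
    """Collapse per-instance masks into one semantic map (0 = background)."""
    semantic = [[0] * width for _ in range(height)]
    for inst in instances:
        cid = class_ids[inst["label"]]
        for y in range(height):
            for x in range(width):
                if inst["mask"][y][x]:
                    semantic[y][x] = cid
    return semantic


# Two adjacent cars: distinct instances, same class.
car_a = [[1, 1, 0, 0, 0, 0],
         [1, 1, 0, 0, 0, 0],
         [0, 0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0, 0]]
car_b = [[0, 0, 1, 1, 0, 0],
         [0, 0, 1, 1, 0, 0],
         [0, 0, 0, 0, 0, 0],
         [0, 0, 0, 0, 0, 0]]
instances = [{"label": "car", "mask": car_a},
             {"label": "car", "mask": car_b}]

semantic = to_semantic(instances, height=4, width=6, class_ids={"car": 1})
print(len(instances))  # 2 separate objects in the instance output
print(semantic[0])     # [1, 1, 1, 1, 0, 0]: one merged "car" region
```

The instance output keeps two cars, while the semantic map only records that those eight pixels are "car".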

Why is Instance Segmentation Crucial?

- Real-world applications: Healthcare imaging, autonomous vehicles, and AR/VR rely on it.

- Accuracy-driven: Pixel-level precision matters when interpreting critical visual data.

- Challenge of diversity: It must handle objects of varying scales, occlusions, and cluttered environments.
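Pixel-level precision is usually scored with mask intersection-over-union (IoU): the number of pixels two masks share, divided by the number of pixels covered by either. COCO-style evaluation, for example, counts a predicted mask as a match when its IoU with a ground-truth mask clears a threshold such as 0.5, and averages results over thresholds from 0.5 to 0.95. The sketch below is a plain-Python illustration on toy masks (the `mask_iou` name and the masks are hypothetical), not a benchmark implementation.

```python
from typing import List

Mask = List[List[int]]  # same-sized binary masks


def mask_iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two same-sized binary masks."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            if pa and pb:
                inter += 1  # pixel covered by both masks
            if pa or pb:
                union += 1  # pixel covered by at least one mask
    return inter / union if union else 0.0


ground_truth = [[1, 1, 0],
                [1, 1, 0],
                [0, 0, 0]]
prediction = [[1, 1, 1],   # one extra pixel spills past the object
              [1, 1, 0],
              [0, 0, 0]]
print(mask_iou(prediction, ground_truth))  # 0.8 (4 shared / 5 covered)
```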

Traditional Approaches to Instance Segmentation

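Before Mask R-CNN, instance segmentation typically relied on multi-stage pipelines built on region-based methods such as R-CNN: candidate regions or segments were proposed first and then classified in separate steps, which made these systems slow and hard to scale. These approaches, while foundational, lacked the robustness needed for modern applications.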

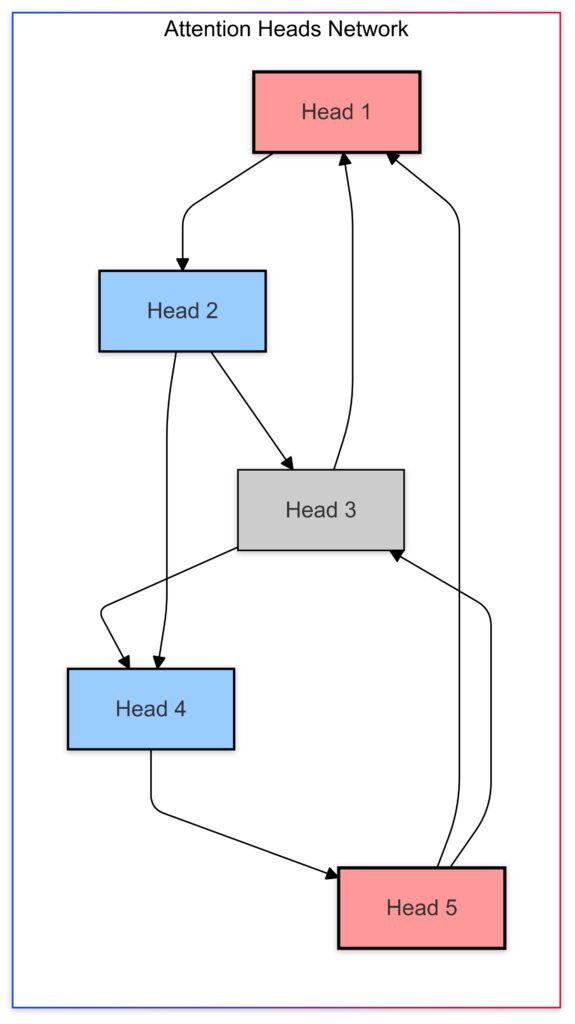

Complexity of Interpreting Attention Heads

Understanding the contribution of a single head requires analyzing the entire network.

Nodes (Attention Heads): Represented as circles whose size indicates importance.

- Red (Head 1, Head 5): High importance.

- Blue (Head 2, Head 4): Moderate importance.

- Gray (Head 3): Low importance.

Connections: Lines indicate dependencies or interactions between attention heads. This complex web of interactions highlights the challenge of isolating individual head behaviors.

Challenges: Dependencies between heads make interpretation complex.

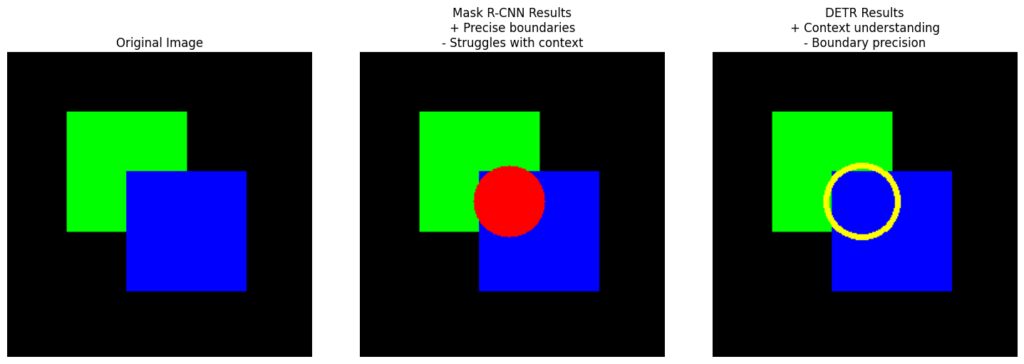

Mask R-CNN: A Gold Standard

How Does Mask R-CNN Work?

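Mask R-CNN extends Faster R-CNN by adding a branch that predicts a mask for each region of interest, in parallel with the existing classification and box branches. Its design covers three jobs:

- Object detection: Identifies bounding boxes and classifies objects.

- Mask prediction: Generates a pixel-level segmentation mask for each detected object.

- Feature alignment: RoIAlign replaces Faster R-CNN’s RoIPool, sampling features with bilinear interpolation instead of rounding region coordinates, so mask predictions stay aligned with the bounding boxes.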
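The core of RoIAlign is bilinear interpolation: instead of rounding a region’s boundaries to the feature-map grid (as RoIPool does), it samples the feature map at fractional coordinates and averages the samples in each output bin. The sketch below shows that idea under simplifying assumptions (one channel stored as nested lists, a box already in feature-map coordinates where `feat[y][x]` sits at position (y, x), and a fixed 2×2 grid of samples per bin). It is an illustration, not the torchvision or Detectron2 implementation.

```python
from typing import List, Tuple

Feature = List[List[float]]


def bilinear(feat: Feature, y: float, x: float) -> float:
    """Sample feat at a fractional (y, x) by blending the 4 nearest cells."""
    h, w = len(feat), len(feat[0])
    # Clamp into the valid range so samples near the edge stay defined.
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    dy, dx = y - y0, x - x0
    top = feat[y0][x0] * (1 - dx) + feat[y0][x1] * dx
    bottom = feat[y1][x0] * (1 - dx) + feat[y1][x1] * dx
    return top * (1 - dy) + bottom * dy


def roi_align(feat: Feature, box: Tuple[float, float, float, float],
              out_size: int = 2, samples: int = 2) -> Feature:
    """Pool box=(x1, y1, x2, y2) into an out_size x out_size grid.

    Box edges are never rounded; each bin averages samples x samples
    bilinearly interpolated points spaced evenly inside it.
    """
    x1, y1, x2, y2 = box
    bin_h = (y2 - y1) / out_size
    bin_w = (x2 - x1) / out_size
    out = []
    for i in range(out_size):
        row = []
        for j in range(out_size):
            total = 0.0
            for sy in range(samples):
                for sx in range(samples):
                    y = y1 + i * bin_h + (sy + 0.5) * bin_h / samples
                    x = x1 + j * bin_w + (sx + 0.5) * bin_w / samples
                    total += bilinear(feat, y, x)
            row.append(total / (samples * samples))
        out.append(row)
    return out


# A 5x5 feature map whose value at (y, x) is x + 10 * y.
feat = [[x + 10 * y for x in range(5)] for y in range(5)]
print(roi_align(feat, (0.5, 0.5, 2.5, 2.5)))  # [[11.0, 12.0], [21.0, 22.0]]
```

Because the box edges at 0.5 are kept rather than rounded to 0 or 1, each output value reflects exactly the area inside the box, which is the property that lets Mask R-CNN produce pixel-accurate masks.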

Key Strengths of Mask R-CNN

- Simplicity in design: Easy integration with existing object detection frameworks.

- High accuracy: Excels in segmenting small or overlapping objects.

- Broad adoption: Widely used in industry, helped by open-source implementations such as Detectron2 and torchvision.

Challenges of Mask R-CNN

- Resource-heavy: Requires significant computational power for training.

- Scaling issues: Training becomes expensive on very large datasets, and accuracy suffers under extreme variations in object size.

- Limited adaptability: Its two-stage pipeline adds latency, which makes dynamic, real-time use difficult.

Emerging Transformers in Instance Segmentation

Why Are Transformers Disrupting Computer Vision?

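Transformers, initially popularized in NLP, have reshaped vision tasks by using self-attention to relate every part of an image to every other part, capturing global context rather than only local features. Vision Transformers (ViT) showed that a pure transformer operating on image patches can rival CNNs at image classification, and DETR (DEtection TRansformer) recast object detection as direct set prediction; both ideas now underpin transformer-based instance segmentation.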
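ViT’s starting point is to treat an image as a sequence of tokens: it cuts the image into fixed-size patches and flattens each patch into a vector before the transformer sees it (the original paper uses 16×16 patches). The sketch below covers only that tokenization step, in plain Python for a single-channel image. The learned linear projection and position embeddings that follow in a real ViT are omitted, and the `patchify` name is illustrative.

```python
from typing import List


def patchify(image: List[List[float]], patch: int) -> List[List[float]]:
    """Split an H x W image into non-overlapping patch x patch tiles.

    Tiles are read row by row (raster order) and each is flattened to a
    vector of length patch * patch. H and W must be divisible by patch.
    """
    h, w = len(image), len(image[0])
    if h % patch or w % patch:
        raise ValueError("image size must be divisible by patch size")
    tokens = []
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            tokens.append([image[y][x]
                           for y in range(top, top + patch)
                           for x in range(left, left + patch)])
    return tokens


image = [[4 * y + x for x in range(4)] for y in range(4)]  # values 0..15
tokens = patchify(image, patch=2)
print(len(tokens))  # 4 tokens
print(tokens[0])    # [0, 1, 4, 5]: the top-left 2x2 tile
```

At ViT’s standard 224×224 input with 16×16 patches, the same procedure yields (224/16)² = 196 tokens, each holding 16·16·3 = 768 values for an RGB image.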

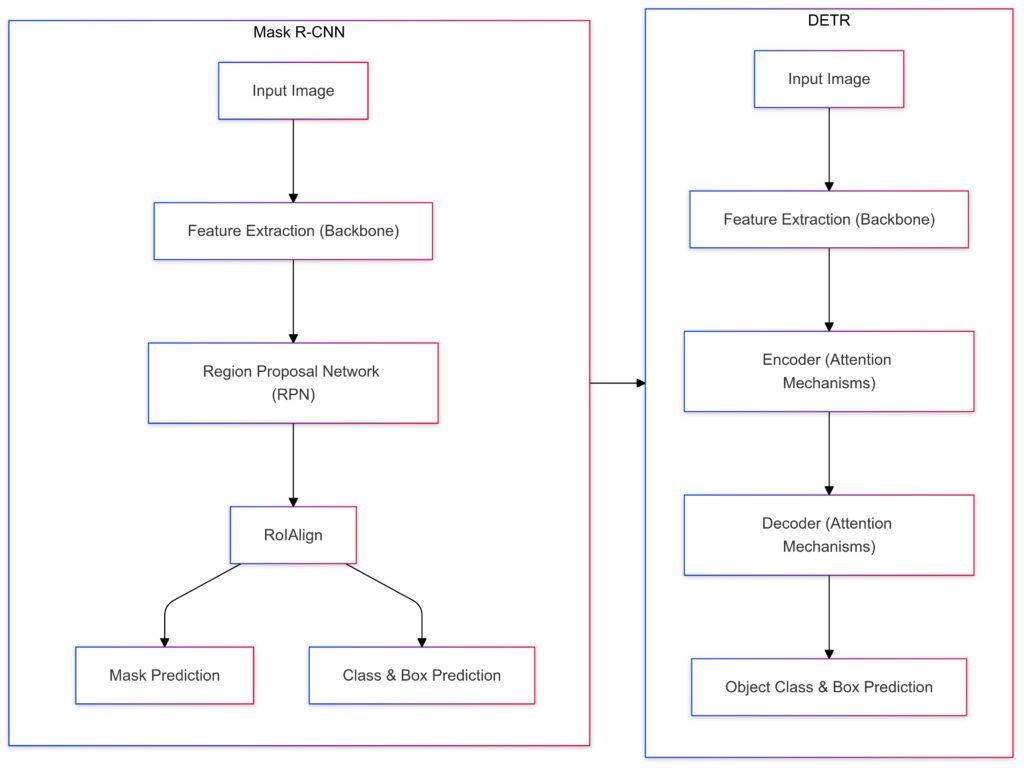

Mask R-CNN

Key Components:

- RPN (Region Proposal Network): Identifies regions of interest.

- RoIAlign: Refines spatial alignment for predictions.

- Mask Prediction Branch: Outputs instance segmentation masks.

- Class & Box Prediction Branch: Classifies objects and refines bounding boxes.

Workflow:

Input image → Feature Extraction → RPN → RoIAlign → Branches (Mask and Classification).

DETR

Key Components:

- Encoder: Applies self-attention over the backbone’s feature map so each position can draw on global context.

- Decoder: A fixed set of learned object queries attends to the encoded features, with each query responsible for at most one object.

- Prediction: Outputs object classes and bounding boxes directly, without anchors or non-maximum suppression.

Workflow:

Input image → Feature Extraction → Encoder → Decoder → Prediction.

Highlights:

- Mask R-CNN: Focuses on region-based proposals with separate prediction branches.

- DETR: Operates end-to-end with transformer-based attention mechanisms.
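Because DETR predicts a fixed-size set of objects in one pass, training first has to decide which prediction answers for which ground-truth object. DETR does this with bipartite matching: it picks the one-to-one assignment with the lowest total cost, and any unmatched query is trained to predict "no object". The sketch below uses a simplified cost (negative class probability plus L1 box distance; the paper’s cost also includes a generalized-IoU term) and brute-forces the assignment with `itertools.permutations`, which is only practical for a handful of queries. Real implementations use a Hungarian solver such as SciPy’s `linear_sum_assignment`. The dictionary layout and function names are hypothetical.

```python
from itertools import permutations
from typing import Dict, List, Sequence, Tuple


def match_cost(pred: Dict, gt: Dict, box_weight: float = 1.0) -> float:
    """Simplified DETR-style cost: low when the query is confident in the
    right class and its box (cx, cy, w, h) is close to the target box."""
    class_term = -pred["probs"][gt["label"]]
    l1 = sum(abs(p - g) for p, g in zip(pred["box"], gt["box"]))
    return class_term + box_weight * l1


def match_brute_force(preds: Sequence[Dict],
                      gts: Sequence[Dict]) -> List[Tuple[int, int]]:
    """Return (query_index, gt_index) pairs with minimum total cost.

    Tries every one-to-one assignment of ground truths to queries, so it
    scales factorially and is only meant for tiny examples.
    """
    best_cost, best_pairs = float("inf"), []
    for chosen in permutations(range(len(preds)), len(gts)):
        # chosen[g] is the query assigned to ground-truth object g.
        cost = sum(match_cost(preds[q], gts[g]) for g, q in enumerate(chosen))
        if cost < best_cost:
            best_cost = cost
            best_pairs = [(q, g) for g, q in enumerate(chosen)]
    return best_pairs


preds = [
    {"probs": {"cat": 0.1, "dog": 0.8}, "box": (0.7, 0.5, 0.2, 0.3)},
    {"probs": {"cat": 0.2, "dog": 0.1}, "box": (0.5, 0.5, 0.9, 0.9)},
    {"probs": {"cat": 0.9, "dog": 0.05}, "box": (0.3, 0.4, 0.2, 0.2)},
]
gts = [
    {"label": "cat", "box": (0.3, 0.4, 0.2, 0.25)},
    {"label": "dog", "box": (0.7, 0.5, 0.2, 0.3)},
]
print(match_brute_force(preds, gts))  # [(2, 0), (0, 1)]; query 1 -> "no object"
```

Since every ground-truth object is claimed by exactly one query, duplicate predictions are penalized during training, which is why DETR needs no non-maximum suppression at inference.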

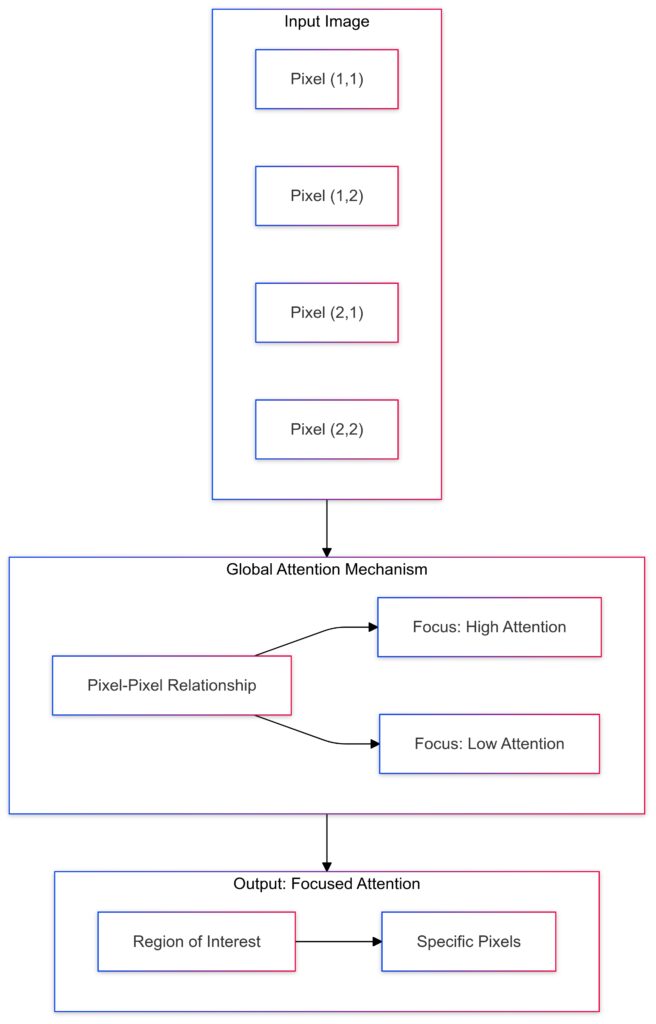

How Transformers Are Used for Segmentation

- Attention mechanisms: These allow the model to understand relationships between pixels across the entire image.

- End-to-end learning: Models like DETR eliminate the need for region proposals and non-maximum suppression, simplifying the pipeline.

- Scalability: Transformers tend to keep improving as training data grows, whereas CNNs’ built-in assumptions about locality give them the edge on smaller datasets.
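The attention mechanism in the first bullet comes down to one formula, softmax(QKᵀ/√d)·V: each position scores its similarity to every other position, turns the scores into weights that sum to 1, and takes a weighted average of the values. Below is a minimal single-head sketch in plain Python; a real model would add learned projections for Q, K, and V, multiple heads, and batching, and its tokens would be patches or feature-map positions rather than raw pixels.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(row: List[float]) -> List[float]:
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Single-head scaled dot-product attention over a list of tokens."""
    d = len(q[0])
    out = []
    for qi in q:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(attention(tokens, tokens, tokens))  # one mixed vector per token

# With identical keys every weight is equal, so the output is the plain mean of V.
print(attention([[0.5, 0.2]], [[1.0, 0.0], [1.0, 0.0]],
                [[2.0, 4.0], [4.0, 8.0]]))  # [[3.0, 6.0]]
```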

Advantages Over Mask R-CNN

- Improved performance: Especially in complex or crowded scenes.

- Fewer hand-designed components: No anchor boxes or non-maximum suppression to tune, which reduces manual engineering during development.

- Future-ready: Designed for compatibility with multimodal AI tasks that blend images and text.

The Limitations of Transformers

Despite their promise, transformers are not without flaws:

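- High computational cost: Training transformers is even more resource-intensive than Mask R-CNN; the original DETR, for example, needed a far longer training schedule than Faster R-CNN-style detectors to converge.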

- Data hunger: They require massive labeled datasets to achieve optimal performance.

- Early-stage challenges: While promising, transformer-based models are still maturing for segmentation tasks.
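A quick calculation shows why the cost climbs so fast. Global self-attention compares every token with every other token, so the work grows with the square of the token count. Assuming a DETR-style encoder that attends over a stride-32 backbone feature map (the image size is illustrative, and `attention_pairs` is a hypothetical helper), the sketch counts query-key pairs for an 800×1200 image, then repeats the count at stride 16:

```python
import math


def attention_pairs(height: int, width: int, stride: int) -> int:
    """Query-key pairs for global self-attention over a stride-s feature map."""
    tokens = math.ceil(height / stride) * math.ceil(width / stride)
    return tokens * tokens


coarse = attention_pairs(800, 1200, 32)  # 25 x 38 = 950 tokens
fine = attention_pairs(800, 1200, 16)    # 50 x 75 = 3,750 tokens
print(coarse, fine)             # 902500 14062500
print(round(fine / coarse, 1))  # 15.6
```

Halving the stride roughly quadruples the tokens but multiplies the pairs by about 16; this quadratic growth is what windowed and sparse attention schemes try to avoid.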

Input Image:

- Pixels are represented as nodes (e.g., Pixel (1,1), Pixel (2,2)) and form the foundation of attention processing.

Global Attention Mechanism:

- Establishes pixel-to-pixel relationships across the entire image.

- Attention weights (e.g., high or low) determine the importance of specific relationships.

Output Focus:

- Highlights regions of interest based on attention weights.

- Focuses on the critical pixels that contribute to the final output.

Hybrid Approaches: The Best of Both Worlds?

Combining CNNs with Transformers

Hybrid models aim to combine the feature extraction capabilities of CNNs with the contextual power of transformers. These approaches:

- Reduce computational demand by integrating CNN backbones with attention modules.

- Boost performance in challenging tasks where object localization and global context are equally critical.

Examples of Hybrid Models

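- Swin Transformer: Builds a CNN-like hierarchy of feature maps out of transformer blocks, computing self-attention inside shifted local windows so cost grows linearly with image size.

- MaskFormer: Recasts segmentation as mask classification, predicting a set of masks each paired with a class label, so one model can handle both semantic and panoptic segmentation.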
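Swin’s windowed attention is easiest to appreciate through arithmetic. Restricting attention to non-overlapping M×M windows means each token is compared with M² others instead of the whole image, so cost grows linearly with image size. The sketch below is a simplified count that ignores the window shift and the later patch-merging stages; it uses Swin’s first-stage setting as described in its paper (a 224×224 input split into 4×4 patches gives a 56×56 token grid, with 7×7 windows). The helper names are illustrative.

```python
from typing import List, Tuple


def window_partition(h: int, w: int, m: int) -> List[List[Tuple[int, int]]]:
    """Group the (row, col) positions of an h x w token grid into m x m windows."""
    if h % m or w % m:
        raise ValueError("grid must divide evenly into windows")
    return [
        [(r, c) for r in range(top, top + m) for c in range(left, left + m)]
        for top in range(0, h, m)
        for left in range(0, w, m)
    ]


def global_pairs(h: int, w: int) -> int:
    """Query-key pairs when every token attends to every other token."""
    return (h * w) ** 2


def windowed_pairs(h: int, w: int, m: int) -> int:
    """Query-key pairs when tokens attend only within their own window."""
    return sum(len(win) ** 2 for win in window_partition(h, w, m))


windows = window_partition(56, 56, 7)
print(len(windows))               # 64 windows of 49 tokens each
print(global_pairs(56, 56))       # 9834496
print(windowed_pairs(56, 56, 7))  # 153664
```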

The Future of Instance Segmentation: Predictions and Possibilities

What’s Driving Innovation in Instance Segmentation?

The demand for more efficient, scalable, and versatile models is propelling advancements. Key factors include:

- Real-time applications: From autonomous driving to live AR/VR integration.

- Complex environments: Models must handle occlusions, dense crowds, and variable lighting.

- Tighter hardware constraints: Widespread adoption hinges on models running on edge devices with limited computational power.

Emerging Trends to Watch

- Self-supervised learning: Reducing dependency on labeled datasets by leveraging unlabeled data.

- Edge-friendly transformers: Optimized transformer models for deployment on lightweight hardware.

- 3D segmentation: Enhanced models for AR/VR and autonomous driving, capable of processing spatial data in real time.

Real-World Applications: Mask R-CNN vs. Transformers

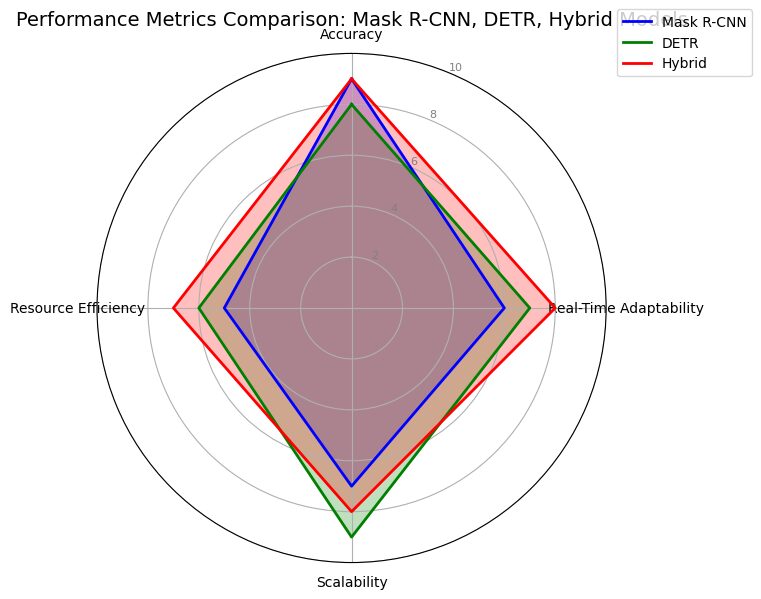

Comparing Mask R-CNN, DETR, and hybrid models across key performance metrics.

Healthcare

- Mask R-CNN:

  - Excels at identifying tumors or anomalies in medical images.

  - Proven in detecting small, distinct regions like polyps in colonoscopy images.

- Transformers:

  - Outperform CNN-based methods in analyzing large-scale datasets, like whole-slide pathology images.

  - Provide better global context in segmentation of complex vascular structures or multi-class tissue identification.

Autonomous Vehicles

- Mask R-CNN:

  - Effective in scenarios requiring detailed identification of road signs, vehicles, or pedestrians.

  - Reliable but struggles with real-time processing due to computational overhead.

- Transformers:

  - Superior in dynamic environments with multiple overlapping objects, like dense traffic.

  - Their scalability and adaptability make them attractive for in-vehicle processing, provided they are optimized for edge hardware.

E-commerce and Retail

- Mask R-CNN:

  - Ideal for applications like fashion segmentation or product cataloging.

  - Performs well when clear object boundaries are needed for augmented reality features.

- Transformers:

  - Better suited for cluttered scenes, such as product segmentation in warehouse imagery.

  - Offer improved contextual understanding for applications like visual search and recommendation systems.

AR/VR and Gaming

- Mask R-CNN:

  - Reliable for static scenes or predefined object sets, such as gaming avatars or furniture.

  - Limited when adapting to dynamic real-world input.

- Transformers:

  - Seamlessly handle dynamic inputs, offering superior segmentation in real-time AR/VR setups.

  - Their flexibility supports evolving environments in gaming worlds and immersive experiences.

Performance and Scalability: A Closer Look

Mask R-CNN Strengths in Controlled Environments

- When datasets are limited or well-curated, Mask R-CNN delivers consistently high accuracy.

- Ideal for use cases with predefined object categories.

Transformers for Large-Scale Challenges

- Shine in open-world tasks where object categories and contexts vary widely.

- Can handle multi-task learning, such as integrating segmentation with image captioning.

Cost-Benefit Analysis

- Mask R-CNN: Lower training costs but limited scalability.

- Transformers: High initial costs offset by better generalization and fewer handcrafted features.

Hybrid Models: Merging CNNs and Transformers

What Are Hybrid Models?

Hybrid models blend the strengths of CNNs (localized feature extraction) and transformers (global context understanding). These models aim to achieve high accuracy while addressing challenges like resource efficiency and real-time performance.

Examples of Hybrid Models

- Swin Transformer: Combines hierarchical processing with transformer-based attention mechanisms, scaling well across tasks.

- Mask2Former: Unifies panoptic, instance, and semantic segmentation in a single mask-classification architecture, using masked attention in its transformer decoder to reduce pipeline complexity.
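Mask2Former’s key change to the transformer decoder is masked attention: each object query attends only to the locations inside the mask it predicted at the previous decoder layer, rather than to the whole feature map. In practice this amounts to adding −∞ to the attention scores of background locations before the softmax. The plain-Python sketch below shows that step for one query; the scores and mask probabilities are made-up numbers, and the fallback for an all-background mask is an assumed safeguard so the query always attends somewhere.

```python
import math
from typing import List


def masked_softmax(scores: List[float], keep: List[bool]) -> List[float]:
    """Softmax where positions with keep=False receive zero weight.

    Equivalent to adding -inf to the masked scores. If nothing is kept,
    fall back to an ordinary softmax over all positions.
    """
    if not any(keep):
        keep = [True] * len(scores)
    m = max(s for s, k in zip(scores, keep) if k)
    exps = [math.exp(s - m) if k else 0.0 for s, k in zip(scores, keep)]
    total = sum(exps)
    return [e / total for e in exps]


# One query's attention scores over 4 locations.
scores = [2.0, 0.5, 3.0, 1.0]
# Mask probabilities this query predicted at the previous layer;
# locations above 0.5 count as foreground.
previous_mask = [0.9, 0.7, 0.2, 0.1]
keep = [p > 0.5 for p in previous_mask]

weights = masked_softmax(scores, keep)
print([round(w, 3) for w in weights])  # [0.818, 0.182, 0.0, 0.0]
```

Location 2 has the highest raw score but receives no weight because it lies outside the query’s predicted mask, which keeps each query focused on its own object.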

Key Benefits of Hybrid Approaches

- Efficiency: Use CNN backbones for feature extraction to reduce computational costs while leveraging transformers for contextual insights.

- Flexibility: Handle both structured tasks (e.g., bounding boxes) and unstructured tasks (e.g., pixel-level masks).

- Real-time applications: Balances precision with speed for dynamic environments like gaming and autonomous driving.

Sustainability and Ethical AI: Impact of Mask R-CNN and Transformers

Mask R-CNN: Resource-Conscious Applications

- Lower energy demands: Compared to transformers, Mask R-CNN models require less training power, making them greener in controlled tasks.

- Narrow focus reduces waste: Works well with curated datasets, reducing overtraining and unnecessary computation.

Computational costs of training Mask R-CNN, DETR, and hybrid models on different dataset sizes.

Transformers: Challenges and Innovations

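- Energy-intensive training: Training transformers like DETR demands substantial energy, both because global attention cost grows quadratically with the number of image tokens and because the original DETR needed a long training schedule to converge.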

- Advances in sustainability: Innovations such as pruned attention layers and knowledge distillation aim to mitigate environmental impact.

- Ethical concerns: Transformers risk bias due to reliance on large-scale, unlabeled data, potentially introducing skewed predictions.
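Knowledge distillation trains a small "student" network to match the softened output distribution of a large "teacher". A common formulation, from Hinton et al., divides both models’ logits by a temperature T > 1 before the softmax and minimizes the KL divergence between the two distributions, scaled by T². The sketch below computes that loss for a single prediction in plain Python; the logits are made-up numbers, the default temperature of 4.0 is an illustrative choice, and in practice this term is usually mixed with the ordinary supervised loss.

```python
import math
from typing import List


def softmax_t(logits: List[float], temperature: float) -> List[float]:
    """Softmax of logits divided by a temperature (higher = softer)."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 4.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, times T^2."""
    p_teacher = softmax_t(teacher_logits, temperature)
    p_student = softmax_t(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps)
             for pt, ps in zip(p_teacher, p_student) if pt > 0)
    return kl * temperature ** 2


teacher = [6.0, 2.0, -1.0]  # class scores from a large model
student = [3.0, 1.5, 0.0]   # a smaller model being trained
print(round(distillation_loss(student, teacher), 4))
print(distillation_loss(teacher, teacher))  # 0.0: identical outputs cost nothing
```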

Hybrid Models and Sustainability

- Combine the energy efficiency of CNNs with a small number of transformer layers for scalability.

- Examples include using pre-trained CNN backbones to reduce training times.

Ecosystem Compatibility: CNNs vs. Transformers

Mask R-CNN in Existing Systems

- Plug-and-play design: Compatible with frameworks like TensorFlow and PyTorch.

- Wide adoption: Easily integrates into industries with robust pipelines, from healthcare to retail.

- Pretrained models: Offers a wealth of pretrained weights for specific tasks, reducing deployment time.

Transformers: Shaping the Future

- Unified frameworks: Transformer models support multitasking (e.g., combining segmentation with image recognition or captioning).

- Multimodal adaptability: Can process text, audio, and images simultaneously, aligning with the rise of AI-powered assistants.

- Customizable pipelines: Allow modular architecture for highly specific applications, such as 3D modeling or AR applications.

Hybrid Models for Diverse Use Cases

- Designed to meet the diverse demands of industries that require scalability and precision.

- For example, in autonomous driving, they provide real-time predictions while maintaining a low computational footprint.

Mask R-CNN vs. Transformers: Comparing Real-World Ecosystems

| Aspect | Mask R-CNN | Transformers | Hybrid Models |
|---|---|---|---|
| Performance | High in structured environments | Excels in complex, dynamic settings | Balanced for various applications |
| Scalability | Limited for large datasets | Designed for open-world tasks | Modular for diverse datasets |
| Computational Needs | Moderate, edge-friendly | High, requires advanced hardware | Optimized balance |
| Integration | Well-suited for existing pipelines | Emerging support, tailored applications | Adaptable to both legacy and new systems |

Adoption Strategies for Mask R-CNN, Transformers, and Hybrid Models

Mask R-CNN: Practical and Proven

Mask R-CNN remains a go-to solution for industries that need robust, ready-to-use systems for structured environments.

- Adoption tips: Leverage pretrained models and frameworks like TensorFlow for quicker deployment.

- Best use cases: Tasks requiring pixel-level precision but limited to smaller datasets, such as medical imaging or e-commerce applications.

- Challenges: Upgrading to more scalable systems for handling large datasets or open-world scenarios.

Transformers: Future-Ready and Adaptable

Transformers like DETR and Vision Transformers (ViT) are reshaping the landscape of dynamic and complex tasks.

- Adoption tips: Start with fine-tuned pretrained models to minimize data requirements. Optimize for specific tasks to reduce computational costs.

- Best use cases: Autonomous vehicles, AR/VR, and large-scale datasets where context matters.

- Challenges: High hardware demands and steep learning curves for development teams.

Hybrid Models: The Middle Ground

Hybrid models address the gaps between CNNs and transformers by combining their strengths.

- Adoption tips: Consider hybrid architectures like Swin Transformers for tasks that require both precision and scalability.

- Best use cases: Industries balancing performance and resource constraints, such as retail, logistics, and smart cities.

- Challenges: Balancing the added complexity of hybrid setups with performance gains.

Broader Implications for AI-Driven Segmentation

Advancing Accessibility

- Mask R-CNN: Democratizes instance segmentation with accessible tools for small-scale industries.

- Transformers and hybrids: Push AI boundaries by enabling cutting-edge applications in global enterprises and innovative sectors.

Bridging the AI Divide

- Hybrid models make high-end segmentation accessible by optimizing resource demands, leveling the playing field for smaller players.

Ethical AI Practices

- Addressing bias in transformer-based systems is crucial as they scale across diverse use cases.

- Transparency and fairness in dataset creation will shape the future of segmentation.

Conclusion: A Dynamic Landscape

Instance segmentation is at a turning point, with Mask R-CNN remaining a trusted standard and transformers redefining the possibilities. Hybrid models are bridging the gap, promising scalable, efficient, and versatile solutions. The choice of approach depends on your use case, resources, and long-term goals.

From healthcare to autonomous vehicles, the future is bright for instance segmentation as AI tools evolve to meet growing demands with precision and adaptability.

FAQs

Are pretrained models available for both approaches?

Yes! Mask R-CNN has a wide range of pretrained weights available in frameworks like TensorFlow and PyTorch, suitable for applications like autonomous drones or security systems.

For transformers, models like DETR and Swin Transformer also come with pretrained checkpoints that can be fine-tuned for complex tasks like satellite image segmentation or climate modeling.

How do these models impact sustainability in AI?

Mask R-CNN is generally more energy-efficient due to its simpler architecture, making it suitable for lightweight systems like edge devices.

Transformers, while powerful, demand significant energy resources during training. Efforts like sparse transformers and hybrid models aim to reduce this impact, making them viable for sustainable AI deployments in industries like renewable energy monitoring.

Can Mask R-CNN or transformers work for 3D instance segmentation?

Yes, but with variations.

- Mask R-CNN: Originally designed for 2D images, but its design has been extended toward 3D. Mesh R-CNN, for example, adds a branch that predicts a 3D mesh for each detected object, and 3D variants built on volumetric convolutions have been explored for 3D medical imaging.

- Transformers: Models like Voxel Transformer Networks (VTNs) specialize in 3D data, handling applications like segmenting 3D urban landscapes or volumetric biomedical images. Transformers generally offer a better grasp of spatial relationships in 3D data.

Are these models suitable for small-scale applications?

- Mask R-CNN: Absolutely. Its simpler architecture makes it more suitable for smaller datasets or projects with limited computational power, like personal drones or mobile-based AR apps.

- Transformers: While resource-heavy, scaled-down versions like MobileViT or hybrid models are emerging, making them feasible for smaller tasks, such as real-time wildlife monitoring on portable devices.

What industries benefit the most from these models?

- Healthcare: Mask R-CNN is excellent for precise segmentation of tumors or cell structures. Transformers, on the other hand, shine in analyzing complex datasets like histopathological slides.

- Retail: Mask R-CNN performs well for product recognition and augmented reality features in e-commerce. Transformers are superior for analyzing cluttered warehouse images or inventory management.

- Autonomous vehicles: Both approaches are used, but transformers excel in processing real-time traffic data and crowded environments.

- Agriculture: Hybrid approaches like Swin Transformer help identify crops and pests in large-scale satellite imagery with high accuracy.

How can these models handle noisy or cluttered images?

- Mask R-CNN: Relies on region proposals followed by non-maximum suppression, so it struggles with dense, overlapping objects. For example, identifying animals in a crowded forest might be challenging.

- Transformers: Excel in cluttered or noisy scenes due to their global attention mechanisms, enabling better segmentation of overlapping objects in complex industrial settings or aerial surveillance.
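Part of the difficulty in crowded scenes comes from the post-processing step used by region-based detectors: greedy non-maximum suppression (NMS) keeps the highest-scoring box and discards any other box that overlaps it beyond an IoU threshold. That removes duplicate detections, but it can also discard a second, genuinely different object standing right behind the first. The sketch below is a generic plain-Python NMS with a 0.5 threshold chosen for illustration (the boxes and helper names are hypothetical); DETR-style set prediction avoids this step entirely.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(box_iou(boxes[i], boxes[k]) <= iou_threshold for k in kept):
            kept.append(i)
    return kept


# Two real people, one partly in front of the other, plus a distant third.
boxes = [(10, 10, 50, 90), (18, 12, 58, 92), (100, 10, 140, 90)]
scores = [0.95, 0.90, 0.85]
print(nms(boxes, scores))  # [0, 2]: the second person is suppressed
```

Raising the threshold lets the second person through, but also lets more true duplicates survive; that trade-off is hard to tune for dense scenes.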

Do these models support unsupervised or semi-supervised learning?

- Mask R-CNN: Generally depends on supervised learning but can be adapted for semi-supervised tasks with additional training strategies.

- Transformers: Show more promise in unsupervised learning, thanks to their ability to learn contextual relationships through self-supervision. For example, self-supervised ViTs such as DINO produce attention maps that separate objects from the background without labeled data, making them attractive for environments with scarce annotations like deep-sea exploration.

How are Mask R-CNN and transformers used in environmental monitoring?

- Mask R-CNN: Used for tracking changes in satellite imagery, such as deforestation or glacial melting. It segments regions with high precision, making it effective for localized studies.

- Transformers: Provide a broader context, enabling analysis of large-scale environmental changes over time, such as mapping urban sprawl or predicting wildfire risks.

Are hybrid models the future of instance segmentation?

Hybrid models represent a compelling middle ground, combining the strengths of both approaches. For instance:

- Swin Transformer-based hybrids provide global context while being computationally efficient, making them suitable for next-gen applications like smart cities.

- In sectors like robotics, hybrids enable precise object handling with minimal latency, ideal for manufacturing lines or warehouse automation.

What role does hardware play in choosing between these models?

- Mask R-CNN: Compatible with standard GPUs and even some high-end CPUs, making it cost-effective for startups or edge devices like drones.

- Transformers: Demand more powerful GPUs or TPUs, especially for training large models. However, frameworks like NVIDIA’s TensorRT are optimizing transformer inference for deployment on edge devices.

- Hybrid models: Strike a balance, running efficiently on mid-tier GPUs for tasks like smart surveillance systems.

Resources

Official Documentation and Tutorials

- Mask R-CNN:

  - TensorFlow Mask R-CNN Tutorial: Step-by-step guide to implement Mask R-CNN using TensorFlow’s built-in libraries.

  - PyTorch Mask R-CNN Implementation: Access PyTorch’s pre-trained Mask R-CNN models with detailed documentation.

- Transformers:

  - DETR (DEtection TRansformer) GitHub Repository: Official repository from Facebook AI Research with code and instructions for using DETR.

  - Vision Transformer (ViT) Repository: Google Research’s ViT model with resources for training and evaluation.

Research Papers and Studies

- Mask R-CNN:

  - Mask R-CNN by He et al. (2017): A seminal work outlining the design, performance, and applications of Mask R-CNN.

  - PointRend: Image Segmentation as Rendering (2020): Enhances Mask R-CNN for higher-quality mask predictions, particularly for edges.

- Transformers:

  - DETR: End-to-End Object Detection with Transformers (2020): Introduction to DETR, explaining its architecture and advantages over traditional CNN-based approaches.

  - An Image is Worth 16×16 Words: Transformers for Image Recognition (2020): The foundational paper on Vision Transformers (ViT), demonstrating their power in computer vision tasks.

Open-Source Projects and Datasets

- Projects:

  - Detectron2 by Facebook: A robust framework for instance segmentation and object detection, with support for Mask R-CNN and hybrids.

  - Hugging Face Transformers: Hugging Face offers easy-to-use tools for working with transformer models, including DETR.

- Datasets:

  - COCO (Common Objects in Context): Standard dataset for object detection and instance segmentation tasks.

  - Cityscapes Dataset: Focused on semantic and instance segmentation in urban street scenes, widely used in autonomous driving.

  - ADE20K Dataset: Supports segmentation tasks for a wide range of environments and object classes.

Learning Platforms and Tutorials

- Courses:

  - Deep Learning Specialization by Andrew Ng (Coursera): Includes modules on CNNs, object detection, and segmentation.

  - Advanced Computer Vision with TensorFlow (Udemy): Covers instance segmentation and object detection using Mask R-CNN.

Community and Forums

- Reddit – r/MachineLearning: Active discussions on new models, tools, and best practices.

- Stack Overflow: Get troubleshooting help for implementation challenges.

- Kaggle Discussion Boards: Learn from competitions focused on segmentation, like Kaggle’s semantic segmentation challenges.